Trying out DBNet for text detection:

Official code: (link)

I will upload all the datasets and model files the code needs later!

First, build the image:

```shell
docker build -t dbnet:v1 .
```

Dockerfile:

```dockerfile
FROM zergmk2/chineseocr:pytorch1.0-gpu-py3.6

USER root

RUN mkdir /DBNet
COPY . /DBNet

# RUN apt update
# RUN pip install --upgrade -i https://pypi.douban.com/simple pip
RUN pip install -r /DBNet/requirement1.txt -i https://pypi.douban.com/simple
# RUN apt install cuda-drivers -y

WORKDIR /DBNet/
```

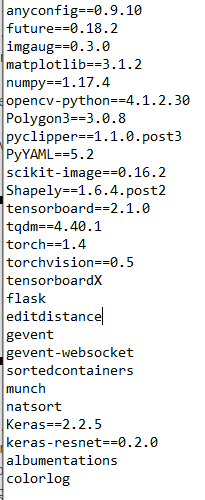

Requirements file:

Run train.py:

```shell
docker run --gpus all -v /....../.... -w /... dbnet:v1 python train.py experiments/seg_detector/totaltext_resnet50_deform_thre.yaml --num_gpus 2
```

For the dataset, generate the txt list files for training and testing in our framework:

```python
import os


def get_images(img_path):
    '''
    Find image files in data path.
    :return: sorted list of files found
    '''
    img_path = os.path.abspath(img_path)
    files = []
    # Case-insensitive extension match (covers jpg/JPG, png/PNG, jpeg)
    exts = ('.jpg', '.png', '.jpeg')
    for parent, dirnames, filenames in os.walk(img_path):
        for filename in filenames:
            if filename.lower().endswith(exts):
                files.append(os.path.join(parent, filename))
    print('Find {} images'.format(len(files)))
    return sorted(files)


def get_txts(txt_path):
    '''
    Find gt files in data path.
    :return: sorted list of files found
    '''
    txt_path = os.path.abspath(txt_path)
    files = []
    for parent, dirnames, filenames in os.walk(txt_path):
        for filename in filenames:
            if filename.lower().endswith('.txt'):
                files.append(os.path.join(parent, filename))
    print('Find {} txts'.format(len(files)))
    return sorted(files)


if __name__ == '__main__':
    # Swap in the commented paths to build the training list instead
    # img_path = './data/ch4_training_images'
    img_path = './data/ch4_test_images'
    files = get_images(img_path)

    # txt_path = './data/ch4_training_localization_transcription_gt'
    txt_path = './data/Challenge4_Test_Task4_GT'
    txts = get_txts(txt_path)

    # Images and GT files are paired by sorted order, so the counts must match
    assert len(files) == len(txts)

    with open('dataset_ic13_test.txt', 'w') as f:
        for img, txt in zip(files, txts):
            # One sample per line: <image path>\t<gt path>
            f.write(img + '\t' + txt + '\n')

    print('dataset generated ^_^ ')
```
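The script above pairs images with ground-truth files purely by sorted order, which only works if both folders sort the same way (for ICDAR 2015, `img_1.jpg` goes with `gt_img_1.txt`). Below is a minimal sketch, not part of the original code, that checks each line of the generated list by file stem and parses one ICDAR 2015-style GT line (eight corner coordinates followed by a transcription, with `###` marking text to ignore). The helper names `check_pairs` and `parse_gt_line`, and the `gt_` prefix convention, are assumptions for illustration.

```python
import os
from typing import Iterable, List, Tuple


def check_pairs(lines: Iterable[str], gt_prefix: str = "gt_") -> List[Tuple[str, str]]:
    """Return (image, gt) pairs whose file stems do not correspond.

    Assumes (hypothetically) that each GT file is named gt_<image stem>.txt.
    """
    mismatches = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        img_path, gt_path = line.split("\t")
        img_stem = os.path.splitext(os.path.basename(img_path))[0]
        gt_stem = os.path.splitext(os.path.basename(gt_path))[0]
        if gt_stem != gt_prefix + img_stem:
            mismatches.append((img_path, gt_path))
    return mismatches


def parse_gt_line(line: str):
    """Parse 'x1,y1,...,x4,y4,text' into (polygon, text, ignore)."""
    # GT files may start with a UTF-8 BOM, which str.strip() does not remove
    parts = line.strip().lstrip("\ufeff").split(",")
    coords = [int(float(v)) for v in parts[:8]]
    polygon = list(zip(coords[0::2], coords[1::2]))
    # The transcription itself may contain commas, so rejoin the remainder
    text = ",".join(parts[8:])
    return polygon, text, text == "###"
```

For example, `with open('dataset_ic13_test.txt') as f: print(check_pairs(f))` should print an empty list when every image lines up with its GT file.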

config.json:

```json
{
  "name": "DB",
  "data_loader": {
    "type": "ImageDataset",
    "args": {
      "dataset": {
        "train_data_path": [
          [
            "./dataset_ic13and15_train.txt"
          ]
        ],
        "train_data_ratio": [
          1.0
        ],
        "val_data_path": "./dataset_ic13_test.txt",
        "input_size": 640,
        "img_channel": 3,
        "shrink_ratio": 0.4
      },
      "loader": {
        "validation_split": 0.1,
        "train_batch_size": 10,
        "shuffle": false,
        "pin_memory": false,
        "num_workers": 0
      }
    }
  },
  "arch": {
    "type": "DBModel",
    "args": {
      "backbone": "shufflenetv2",
      "fpem_repeat": 2,
      "pretrained": true,
      "segmentation_head": "FPN"
    }
  },
  "loss": {
    "type": "DBLoss",
    "args": {
      "alpha": 1.0,
      "beta": 10.0,
      "ohem_ratio": 3
    }
  },
  "optimizer": {
    "type": "Adam",
    "args": {
      "lr": 0.001,
      "weight_decay": 0,
      "amsgrad": true
    }
  },
  "lr_scheduler": {
    "type": "StepLR",
    "args": {
      "step_size": 300,
      "gamma": 0.1
    }
  },
  "trainer": {
    "seed": 2,
    "gpus": [
      5
    ],
    "epochs": 1000,
    "display_interval": 10,
    "show_images_interval": 100,
    "resume_checkpoint": "",
    "finetune_checkpoint": "",
    "output_dir": "output",
    "tensorboard": false,
    "metrics": "loss"
  }
}
```
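Two values in this config are easier to read with a worked example. `shrink_ratio` presumably sets how far each text polygon is shrunk to build the probability-map label; the DBNet paper gives the shrink offset as D = A(1 − r²)/L, where A is the polygon area, L its perimeter and r the shrink ratio. `StepLR` with `step_size` 300 and `gamma` 0.1 multiplies the learning rate by 0.1 every 300 scheduler steps. The sketch below assumes the scheduler is stepped once per epoch (not confirmed from this training code); `shrink_offset` and `step_lr` are hypothetical helper names.

```python
import math


def shrink_offset(polygon, r=0.4):
    """Shrink offset D = A * (1 - r**2) / L, as given in the DBNet paper."""
    n = len(polygon)
    area2 = 0.0
    perimeter = 0.0
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        area2 += x1 * y2 - x2 * y1  # shoelace term (sum is twice the signed area)
        perimeter += math.hypot(x2 - x1, y2 - y1)
    area = abs(area2) / 2.0
    return area * (1 - r ** 2) / perimeter


def step_lr(epoch, base_lr=0.001, step_size=300, gamma=0.1):
    """Learning rate after `epoch` steps, assuming one scheduler step per epoch."""
    return base_lr * gamma ** (epoch // step_size)
```

For a 100 × 20 box, A = 2000 and L = 240, so D ≈ 7 pixels and the shrunk box is roughly 86 × 6. Under the one-step-per-epoch assumption, the learning rate goes 1e-3 → 1e-4 → 1e-5 → 1e-6 at epochs 300, 600 and 900, so the last 100 of the 1000 epochs would train at 1e-6.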
