Caching for High-Performance Website Design

Prerequisite: Cache Memory and Its Levels

Cache Performance

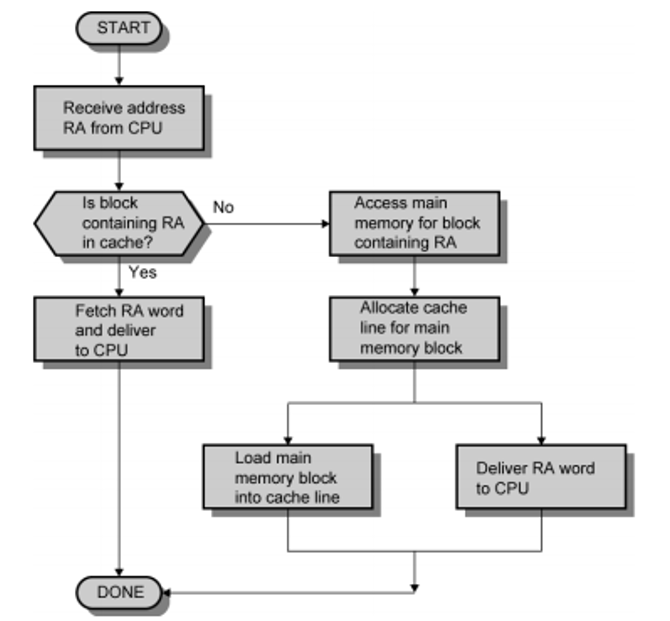

When the CPU needs to read or write a location in main memory (that is, when a process requires some data), it first checks for a corresponding entry in the cache.

If the processor finds that the memory location is in the cache and the data is available there, this is called a cache hit, and the data is read from the cache.

If the processor does not find the memory location in the cache, this is called a cache miss, and the data must be read from main memory. On a miss, the cache allocates a new entry and copies the data in from main memory, on the assumption that the data will be needed again.
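The hit/miss read path described above can be sketched as a toy model. This is not how hardware is built: main memory and the cache are both modeled as Python dicts, the class name `SimpleCache` is hypothetical, and the cache is assumed to have unlimited capacity so that nothing is ever evicted.

```python
class SimpleCache:
    """Toy read path: check the cache first, fall back to main memory on a miss.

    Assumption: the cache has no capacity limit, so nothing is ever evicted.
    """

    def __init__(self, memory):
        self.memory = memory   # main memory, modeled as a dict: address -> value
        self.entries = {}      # cache entries, also address -> value
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.entries:          # cache hit: serve the data from the cache
            self.hits += 1
            return self.entries[address]
        self.misses += 1                     # cache miss: read from main memory
        value = self.memory[address]
        self.entries[address] = value        # allocate a new entry, expecting reuse
        return value


memory = {addr: addr * 10 for addr in range(8)}
cache = SimpleCache(memory)
for addr in [0, 1, 0, 2, 1, 0]:
    cache.read(addr)
print(cache.hits, cache.misses)  # 3 3
```

The first access to each address misses and fills the cache. Later accesses to the same address hit.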

The performance of cache memory is measured by a quantity called the hit ratio.

Hit ratio = Cache hits / (Cache hits + Cache misses) = Number of cache hits / Total accesses
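The formula translates directly into a small helper. The function name `hit_ratio` and the counts 90 and 10 are illustrative only and do not come from the article.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Hit ratio = hits / (hits + misses) = hits / total accesses."""
    total = hits + misses
    if total == 0:
        raise ValueError("no accesses recorded")
    return hits / total


print(hit_ratio(hits=90, misses=10))  # 0.9
```

The miss rate is the complement, 1 - hit ratio.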

Cache performance can be improved by using a larger cache block size or higher associativity, and more generally by reducing the miss rate, the miss penalty, and the hit time (the time needed to access the cache on a hit).
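The three levers above (miss rate, miss penalty, hit time) can be related through the standard textbook formula for average memory access time: hit time + miss rate × miss penalty. That formula is not stated in the original article. The timings below are made-up values, chosen only to show that cutting either the miss rate or the miss penalty lowers the average.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average memory access time = hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Illustrative numbers only (not from the article), all times in nanoseconds.
baseline = average_access_time(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0)
fewer_misses = average_access_time(hit_time=1.0, miss_rate=0.02, miss_penalty=100.0)
cheaper_misses = average_access_time(hit_time=1.0, miss_rate=0.05, miss_penalty=50.0)
print(baseline, fewer_misses, cheaper_misses)  # 6.0 3.0 3.5
```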

Cache Memory Design

Cache memory design involves the following elements: block size, cache size, mapping function, replacement algorithm, and write policy. Each is described below.

(Figure: Cache Read Operation)

1) Block Size

Block size is the unit of information exchanged between the cache and main memory. In a storage system where all volumes share the same cache space, the volumes can have only one cache block size.

As the block size increases from small to larger sizes, the hit ratio at first increases because of the principle of locality: each transfer brings more useful data into the cache.

As the block becomes even larger, however, the hit ratio begins to decrease, because fewer blocks fit in the cache and the extra data in each block is less likely to be needed soon.

2) Cache Size

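A small cache keeps cost and access time (hit time) low, which helps performance. A cache that is too small, however, holds few blocks and suffers more misses, so cache size is chosen as a trade-off between hit time and cost on one side and hit ratio on the other.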

3) Mapping Function

Cache lines (or cache blocks) are the fixed-size units in which data is transferred between memory and cache. When a cache line is copied from memory into the cache, a cache entry is created.

Because there are fewer cache lines than main-memory blocks, an algorithm is needed to map memory blocks onto cache lines.

The mapping function determines which memory block is held in which cache line: whenever a cache entry is created, it decides which cache location the block will occupy.
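The article does not name a specific mapping function. As one assumed example, the sketch below uses direct mapping, in which each memory block can occupy exactly one line (block number modulo the number of lines). The function name `direct_map` and the small sizes (4-byte blocks, 8 lines) are hypothetical.

```python
def direct_map(address: int, block_size: int, num_lines: int) -> tuple[int, int, int]:
    """Split a byte address into (tag, line, offset) for a direct-mapped cache."""
    block_number = address // block_size   # which memory block holds this byte
    offset = address % block_size          # byte position inside the block
    line = block_number % num_lines        # the single cache line this block may use
    tag = block_number // num_lines        # tells apart blocks that share a line
    return tag, line, offset


print(direct_map(100, block_size=4, num_lines=8))  # (3, 1, 0)
print(direct_map(36, block_size=4, num_lines=8))   # (1, 1, 0)
```

Addresses 100 and 36 fall in different memory blocks (25 and 9), but both map to line 1. Bringing one of them in therefore displaces the other.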

Two things happen when a block is placed in the cache: one block of data is read in, and another block may have to be replaced to make room for it.

For example, a 64 KB cache with 4-byte blocks has 64 × 1024 / 4 = 16,384 lines, i.e., 16K cache lines.
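The arithmetic in the example can be checked directly. The offset and index bit counts are an extra assumption beyond the article: they are what a direct-mapped cache of this size would use to pick a byte within a block and a line within the cache.

```python
CACHE_BYTES = 64 * 1024   # 64 KB cache
BLOCK_BYTES = 4           # 4-byte blocks (lines)

lines = CACHE_BYTES // BLOCK_BYTES
# For power-of-two sizes, (n - 1).bit_length() equals log2(n).
offset_bits = (BLOCK_BYTES - 1).bit_length()   # bits that pick a byte inside a block
index_bits = (lines - 1).bit_length()          # bits that pick a line (direct mapping)

print(lines, offset_bits, index_bits)  # 16384 2 14
```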

4) Replacement Algorithm

If all slots in the cache are already full and a new line must be read from memory, the new line has to replace a line that is already in the cache. Whenever a new block is to be loaded into a full cache, the replacement algorithm chooses which existing block to evict. Ideally, it replaces a block that will not be needed in the near future.

A common replacement policy is the least recently used (LRU) algorithm. It evicts the block that has gone unused for the longest time, on the principle that recently used blocks are likely to be used again. Other replacement algorithms include FIFO (first in, first out) and least frequently used (LFU).
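A minimal LRU sketch, assuming a fully associative cache with a fixed number of block slots. The class name `LRUCache` and the `load` callback (standing in for a main-memory read) are hypothetical. `collections.OrderedDict` keeps blocks ordered from least to most recently used.

```python
from collections import OrderedDict
from typing import Callable


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used block."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.blocks = OrderedDict()   # block number -> data, least recent first

    def access(self, block: int, load: Callable[[int], object]) -> object:
        """Return the block's data, calling load(block) on a miss."""
        if block in self.blocks:
            self.blocks.move_to_end(block)     # hit: mark as most recently used
            return self.blocks[block]
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)    # full: evict the least recently used
        data = load(block)
        self.blocks[block] = data              # newly loaded block is most recent
        return data


cache = LRUCache(capacity=2)
for b in [1, 2, 1, 3]:
    cache.access(b, load=lambda n: f"data for block {n}")
print(list(cache.blocks))  # [1, 3]
```

Block 1 was touched again before block 3 arrived, so block 2 was the least recently used block and was evicted. A FIFO policy would instead have evicted block 1, because it was loaded first.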

5) Write Policy

When data is written to the cache, it must at some point also be written to main memory. The timing of this write is called the write policy.

In a write-through operation, every write to the cache is also performed on main memory at the same time.
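A toy write-through model, assuming dict-based memory and cache and assuming that every write also creates or updates a cache entry. The class name `WriteThroughCache` is hypothetical. Main memory is never stale, but it is written on every store.

```python
class WriteThroughCache:
    """Write-through sketch: each write updates the cache and main memory together.

    Assumption: every write allocates (or updates) a cache entry.
    """

    def __init__(self, memory):
        self.memory = memory     # main memory: address -> value
        self.entries = {}        # cached copies: address -> value
        self.memory_writes = 0   # how many times main memory was written

    def write(self, address, value):
        self.entries[address] = value   # update the cached copy
        self.memory[address] = value    # and main memory immediately
        self.memory_writes += 1


memory = {0: 0}
cache = WriteThroughCache(memory)
for v in (1, 2, 3):
    cache.write(0, v)
print(memory[0], cache.memory_writes)  # 3 3
```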

In a write-back (or copy-back) operation, writes are not immediately reflected in main memory. Writes go only to the cache, and the cache tracks which locations have been written over by marking them as dirty.

The data in these dirty locations is written back to main memory only when it is evicted from the cache.
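A toy write-back model under the same dict-based assumptions. The class name `WriteBackCache` and the explicit `evict` method are hypothetical simplifications: a real cache evicts lines through its replacement algorithm, not by direct calls. A dirty set records which entries differ from main memory, and only those entries are written back.

```python
class WriteBackCache:
    """Write-back sketch: writes stay in the cache until the line is evicted."""

    def __init__(self, memory):
        self.memory = memory     # main memory: address -> value
        self.entries = {}        # cached copies: address -> value
        self.dirty = set()       # addresses written since they entered the cache
        self.memory_writes = 0

    def read(self, address):
        if address not in self.entries:
            self.entries[address] = self.memory[address]   # load a clean copy
        return self.entries[address]

    def write(self, address, value):
        self.entries[address] = value
        self.dirty.add(address)          # main memory is now stale for this address

    def evict(self, address):
        value = self.entries.pop(address)
        if address in self.dirty:        # only modified data is written back
            self.memory[address] = value
            self.memory_writes += 1
            self.dirty.remove(address)


memory = {0: 0, 1: 10}
cache = WriteBackCache(memory)
for v in (1, 2, 3):
    cache.write(0, v)
print(memory[0], cache.memory_writes)  # 0 0
cache.evict(0)
print(memory[0], cache.memory_writes)  # 3 1
cache.read(1)
cache.evict(1)                          # clean line: no write-back
print(cache.memory_writes)              # 1
```

Compared with write-through, the three writes to address 0 cost a single main-memory write. The trade-off is that main memory holds stale data until the line is evicted.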

References:

Translated from: https://www.includehelp.com/operating-systems/cache-memory-performance-and-its-design.aspx
