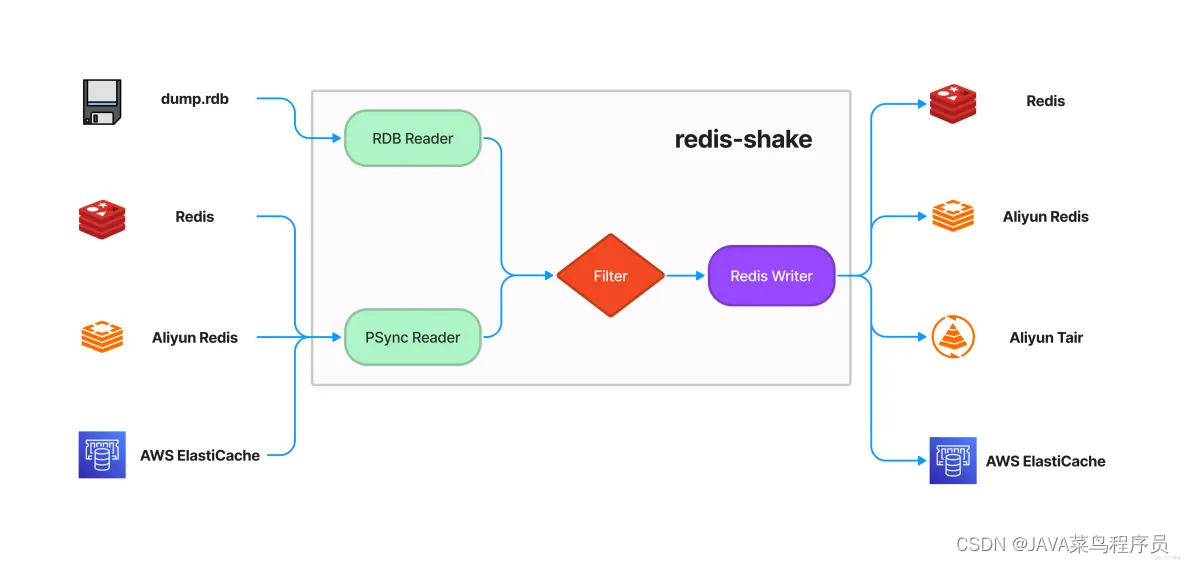

redis-shake is an open-source tool from the Alibaba Cloud Redis team for migrating and filtering Redis data.

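I. Basic Features

redis-shake supports four kinds of operations: decode, restore, dump (backup), and sync. Sync comes in two modes, sync and rump.

Restore (restore): restores an RDB file into the target Redis database.

Dump (dump): backs up the full data set of the source Redis into an RDB file.

Decode (decode): reads an RDB file and parses its contents into JSON for storage.

Sync (sync): synchronizes data from the source Redis to the target Redis. It handles both full and incremental migration, between any combination of standalone, master-replica, and cluster deployments.

Rump (rump): also synchronizes data from the source Redis to the target Redis, but supports full migration only. It migrates data with the SCAN and RESTORE commands, which makes it suitable for migrating across cloud vendors and across Redis versions.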
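The rump mode above can be pictured as a per-key copy loop. SCAN enumerates the source keys. For each key, the serialized value and the remaining TTL are read; this sketch assumes they come from DUMP and PTTL on the source. A RESTORE command is then issued on the target. The minimal Python sketch below only builds the RESTORE argument lists from already-fetched `(key, payload, pttl)` tuples. `restore_ttl_from_pttl` and `build_restore_commands` are hypothetical helpers, not redis-shake's implementation, and no network I/O is performed.

```python
from typing import Iterable, List, Optional, Tuple


def restore_ttl_from_pttl(pttl: int) -> Optional[int]:
    """Map a PTTL reply to the ttl argument of RESTORE (hypothetical helper).

    PTTL returns -1 when the key has no expiry and -2 when it no longer exists.
    RESTORE uses 0 to mean "no expiry".
    """
    if pttl == -1:
        return 0  # persistent key on the source
    if pttl <= 0:
        return None  # -2: key vanished after SCAN; 0: about to expire, so skip it
    return pttl


def build_restore_commands(
    scanned: Iterable[Tuple[bytes, bytes, int]], replace: bool = True
) -> List[List[bytes]]:
    """Turn (key, dump_payload, pttl) tuples into RESTORE commands for the target."""
    commands: List[List[bytes]] = []
    for key, payload, pttl in scanned:
        ttl = restore_ttl_from_pttl(pttl)
        if ttl is None:
            continue
        cmd = [b"RESTORE", key, str(ttl).encode(), payload]
        if replace:
            cmd.append(b"REPLACE")  # overwrite the key if it already exists on the target
        commands.append(cmd)
    return commands
```

The design keeps the TTL mapping separate so the edge cases of PTTL (-1, -2) are handled in one place. A real tool would also batch these commands into pipelines.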

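II. Basic Principles

III. How RedisShake Sync Works

1. The source Redis instance plays the role of the master and Redis-shake plays the role of a replica: Redis-shake sends the PSYNC command to the source instance.

2. The source instance first transfers an RDB file to Redis-shake, which parses it and writes the keys into the target instance (using the RESTORE command).

3. The source instance then streams incremental write commands to Redis-shake, which replays them on the target instance.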
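To make step 1 concrete, here is a minimal Python sketch of the first PSYNC exchange on the wire. It assumes a brand-new replica with no replication history, which therefore asks for `PSYNC ? -1`. The sketch encodes that command as a RESP array of bulk strings and parses a `+FULLRESYNC <replid> <offset>` reply.

A real replica, redis-shake included, also sends commands such as `AUTH` and `REPLCONF` before `PSYNC`. It then reads the RDB payload and the incremental command stream. All of that is omitted here. `encode_command` and `parse_fullresync` are illustrative names, not redis-shake code.

```python
from typing import Tuple


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


def parse_fullresync(reply: bytes) -> Tuple[str, int]:
    """Parse a '+FULLRESYNC <replid> <offset>' status line into (replid, offset)."""
    line = reply.rstrip(b"\r\n").decode()
    if not line.startswith("+FULLRESYNC "):
        raise ValueError(f"unexpected PSYNC reply: {line!r}")
    _, replid, offset = line.split(" ")
    return replid, int(offset)


# A fresh replica knows no replication ID or offset, so it asks with "? -1",
# which requests a full resynchronization (RDB first, then incremental commands).
handshake = encode_command("PSYNC", "?", "-1")
```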

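IV. Installing RedisShake

Make sure a Golang environment is set up on your local machine if you plan to build RedisShake from source; the prebuilt release package below ships a ready-to-run binary.

4.1 Download the release package

Download the release package from the Releases page of tair-opensource/RedisShake on GitHub.

4.2 Extract the package

```shell
tar -zxvf redis-shake-linux-amd64.tar.gz -C /home/redisshake/
```

After extraction there are two files: the `redis-shake` binary and the `shake.toml` configuration file.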

4.3 Edit the shake.toml configuration file

```toml
function = ""

[sync_reader]
cluster = false # set to true if source is a redis cluster
address = "127.0.0.1:6379" # when cluster is true, set address to one of the cluster nodes
username = "" # keep empty if not using ACL
password = "" # keep empty if no authentication is required
tls = false
sync_rdb = true # set to false if you don't want to sync rdb
sync_aof = true # set to false if you don't want to sync aof
prefer_replica = true # set to true if you want to sync from replica node

#[scan_reader]
#cluster = false # set to true if source is a redis cluster
#address = "127.0.0.1:6379" # when cluster is true, set address to one of the cluster nodes
#username = "" # keep empty if not using ACL
#password = "" # keep empty if no authentication is required
#tls = false
#dbs = [] # set the dbs you want to scan, e.g. [1,5,7], if you don't want to scan all
#scan = true # set to false if you don't want to scan keys
#ksn = false # set to true to enable Redis keyspace notifications (KSN) subscription
#count = 1 # number of keys to scan per iteration

# [rdb_reader]
# filepath = "/tmp/dump.rdb"

# [aof_reader]
# filepath = "/tmp/.aof"
# timestamp = 0 # subsecond

[redis_writer]
cluster = false # set to true if target is a redis cluster
sentinel = false # set to true if target is a redis sentinel
master = "" # set to master name if target is a redis sentinel
address = "192.168.72.129:6379" # when cluster is true, set address to one of the cluster nodes
username = "" # keep empty if not using ACL
password = "" # keep empty if no authentication is required
tls = false
off_reply = false # turn off the server reply

[advanced]
dir = "data"
ncpu = 0 # runtime.GOMAXPROCS, 0 means use runtime.NumCPU() cpu cores
pprof_port = 0 # pprof port, 0 means disable
status_port = 0 # status port, 0 means disable

# log
log_file = "shake.log"
log_level = "info" # debug, info or warn
log_interval = 5 # in seconds

# redis-shake gets key and value from rdb file, and uses RESTORE command to
# create the key in target redis. Redis RESTORE will return a "Target key name
# is busy" error when key already exists. You can use this configuration item
# to change the default behavior of restore:
# panic: redis-shake will stop when it meets a "Target key name is busy" error.
# rewrite: redis-shake will replace the key with the new value.
# skip: redis-shake will skip restoring the key when it meets a "Target key name is busy" error.
rdb_restore_command_behavior = "panic" # panic, rewrite or skip

# redis-shake uses pipeline to improve sending performance.
# This item limits the maximum number of commands in a pipeline.
pipeline_count_limit = 1024

# Client query buffers accumulate new commands. They are limited to a fixed
# amount by default. This amount is normally 1gb.
target_redis_client_max_querybuf_len = 1024_000_000

# In the Redis protocol, bulk requests, that are, elements representing single
# strings, are normally limited to 512 mb.
target_redis_proto_max_bulk_len = 512_000_000

# If the source is Elasticache or MemoryDB, you can set this item.
aws_psync = "" # example: aws_psync = "10.0.0.1:6379@nmfu2sl5osync,10.0.0.1:6379@xhma21xfkssync"

# the destination will delete its entire database before fetching files
# from source during full synchronization.
# This option is similar to the redis replicas' RDB diskless load option:
# repl-diskless-load on-empty-db
empty_db_before_sync = false

[module]
# The data format for BF.LOADCHUNK is not compatible in different versions. v2.6.3 <=> 20603
target_mbbloom_version = 20603
```

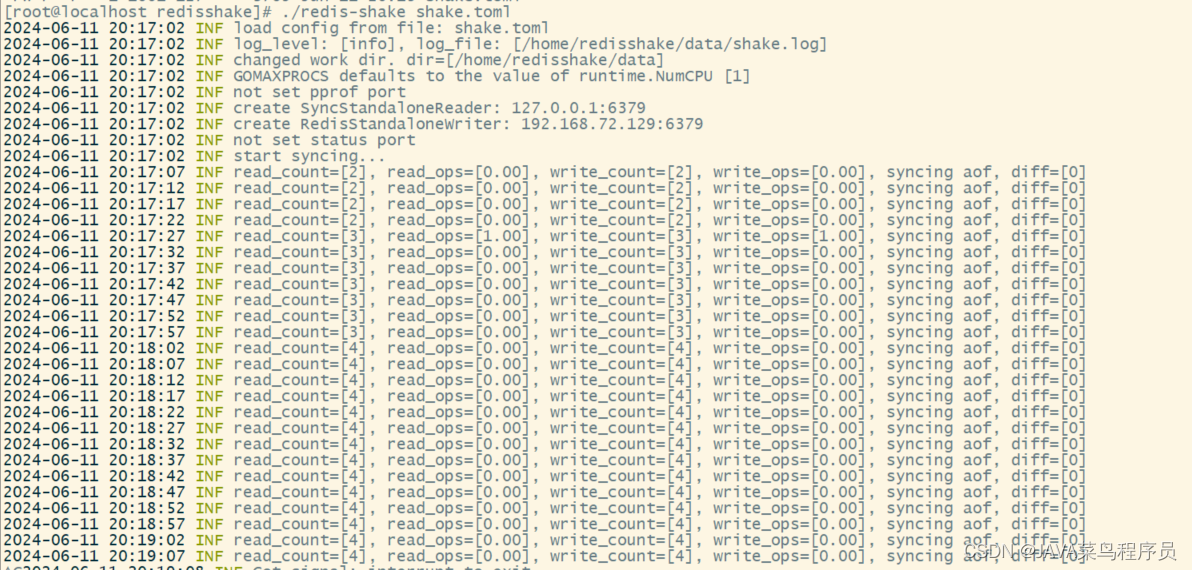

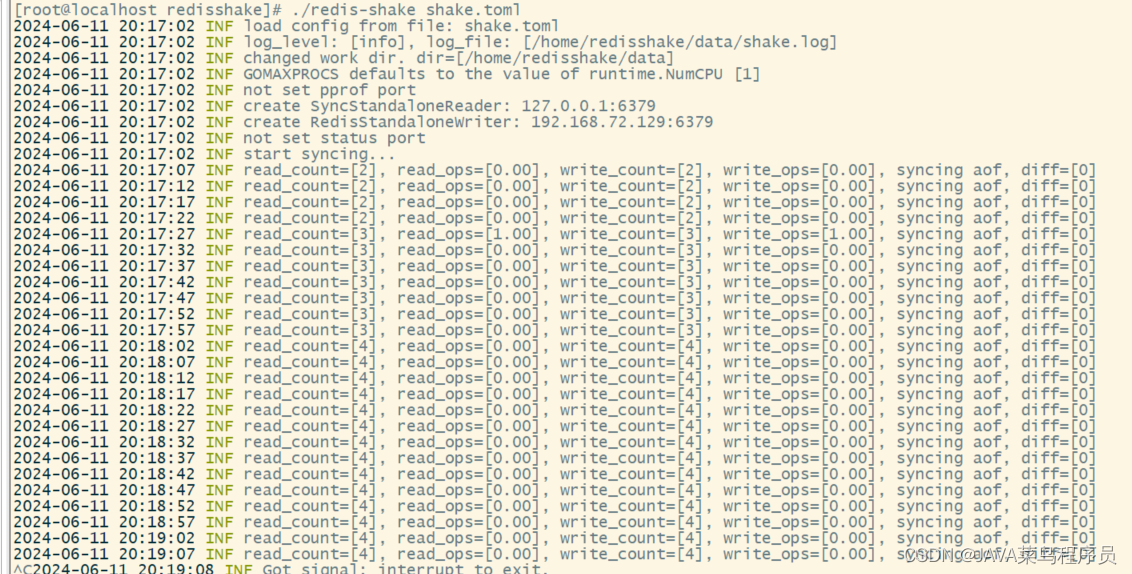
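The `rdb_restore_command_behavior` comment describes how redis-shake reacts when RESTORE hits a key that already exists on the target. The value comment lists three options: panic, rewrite, and skip. The toy Python model below uses a dict as the target to show the three outcomes side by side. `apply_restore` and `TargetKeyBusyError` are hypothetical names for illustration, not redis-shake internals.

```python
from typing import Dict


class TargetKeyBusyError(Exception):
    """Stands in for Redis's "Target key name is busy" error."""


def apply_restore(target: Dict[str, bytes], key: str, value: bytes, behavior: str) -> None:
    """Model how each rdb_restore_command_behavior value handles an existing key."""
    if behavior not in ("panic", "rewrite", "skip"):
        raise ValueError(f"unknown behavior: {behavior}")
    if key in target:
        if behavior == "panic":
            raise TargetKeyBusyError(key)  # redis-shake stops the migration
        if behavior == "skip":
            return  # keep the value already on the target
        # "rewrite": fall through and overwrite, like RESTORE ... REPLACE
    target[key] = value
```

With the default `panic`, a target that already holds overlapping keys stops the migration. This is why `empty_db_before_sync` or `rewrite` may be worth considering when re-running a migration.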

4.4 Start RedisShake

```shell
./redis-shake shake.toml
```

4.5 Test the data migration

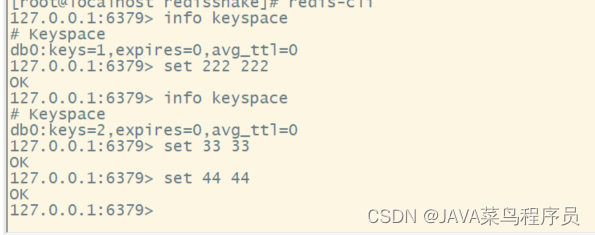

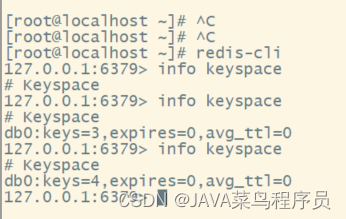

在192.168.72.128这台机器上插入几个key

可以看到RedisShake工具在实施监听key,有新增的就会把新增的key迁移到另外一机器的redis中;看打印的日志是写了4条数据

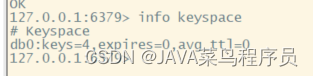

在192.168.72.129这台机器上查看迁移的数据

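4.6 Data verification

Run the `INFO keyspace` command on both the source and the target to see the total number of keys and the number of keys with an expiry in each database, and compare the results.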
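Comparing the two `INFO keyspace` outputs by eye gets tedious with many databases, so here is a minimal Python sketch that parses lines such as `db0:keys=4,expires=0,avg_ttl=0` and reports databases whose `keys` or `expires` counts differ. It assumes you have already captured both outputs as strings, and `parse_keyspace`/`compare_keyspaces` are hypothetical helper names. `avg_ttl` is deliberately ignored because it drifts over time even when the data matches.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int]  # (keys, expires)


def parse_keyspace(info: str) -> Dict[str, Dict[str, int]]:
    """Parse INFO keyspace output into {"db0": {"keys": 4, "expires": 0, ...}}."""
    result: Dict[str, Dict[str, int]] = {}
    for line in info.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip the "# Keyspace" header and blank lines
        db, _, fields = line.partition(":")
        result[db] = {k: int(v) for k, v in (f.split("=", 1) for f in fields.split(","))}
    return result


def compare_keyspaces(source: str, target: str) -> Dict[str, Tuple[Counts, Counts]]:
    """Return {db: (source_counts, target_counts)} for dbs whose counts differ."""
    src, dst = parse_keyspace(source), parse_keyspace(target)
    diffs: Dict[str, Tuple[Counts, Counts]] = {}
    for db in sorted(set(src) | set(dst)):
        s, d = src.get(db, {}), dst.get(db, {})
        pair_s = (s.get("keys", 0), s.get("expires", 0))
        pair_d = (d.get("keys", 0), d.get("expires", 0))
        if pair_s != pair_d:
            diffs[db] = (pair_s, pair_d)
    return diffs
```

While incremental sync is still running, or while keys are expiring, small transient differences are expected. Compare after writes to the source have stopped.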
