K-Nearest Neighbors for Classification and Regression

# -*- coding: utf-8 -*-
import numpy as np
from sklearn import datasets, neighbors
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt

def load_data():
    '''Load the digits dataset and return a stratified train/test split.'''
    digits = datasets.load_digits()
    X = digits.data
    y = digits.target
    return train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)

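def creat_regression_data(n):
    '''
    Generate sin(x) samples and add noise to every fifth target value.
    '''
    X = 5 * np.random.rand(n, 1)
    y = np.sin(X).ravel()
    # Size the noise from the slice itself so n need not be a multiple of 5
    y[::5] += 0.5 * np.random.rand(y[::5].size)
    # Targets are continuous, so the split is not stratified
    return train_test_split(X, y, test_size=0.25, random_state=0)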

def test_KNeighborsClassifier(*data):
    '''Fit a KNN classifier with default parameters and print its accuracy.'''
    X_train, X_test, y_train, y_test = data
    cls = neighbors.KNeighborsClassifier()
    cls.fit(X_train, y_train)
    print('KNeighborsClassifier Train score :%.2f' % cls.score(X_train, y_train))
    print('KNeighborsClassifier Test score :%.2f' % cls.score(X_test, y_test))

def test_KNeighborsRegressor(*data):
    '''Fit a KNN regressor with default parameters and print its R^2 score.'''
    X_train, X_test, y_train, y_test = data
    cls = neighbors.KNeighborsRegressor()
    cls.fit(X_train, y_train)
    print('KNeighborsRegressor Train score :%.2f' % cls.score(X_train, y_train))
    print('KNeighborsRegressor Test score :%.2f' % cls.score(X_test, y_test))

if __name__ == '__main__':
    X_train, X_test, y_train, y_test = load_data()
    test_KNeighborsClassifier(X_train, X_test, y_train, y_test)
    X_train, X_test, y_train, y_test = creat_regression_data(1000)
    test_KNeighborsRegressor(X_train, X_test, y_train, y_test)

PS E:\p> python test.py

KNeighborsClassifier Train score :0.99

KNeighborsClassifier Test score :0.98

KNeighborsRegressor Train score :0.97

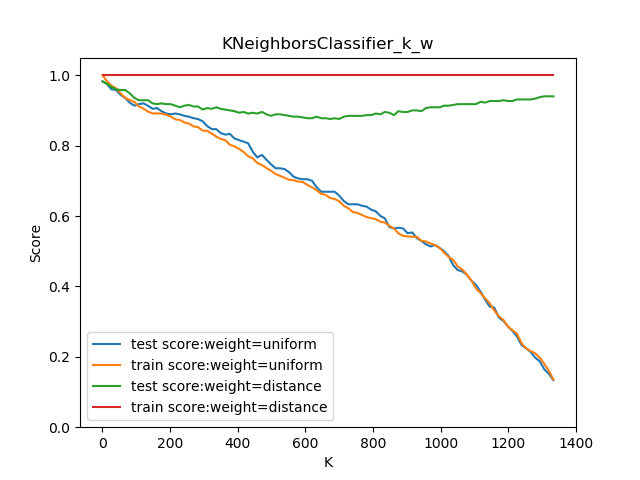

KNeighborsRegressor Test score :0.94看看KNN分类K和权重对评分的影响:

# -*- coding: utf-8 -*-
import numpy as np
from sklearn import datasets, neighbors
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt

def load_data():
    '''Load the digits dataset and return a stratified train/test split.'''
    digits = datasets.load_digits()
    X = digits.data
    y = digits.target
    return train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)

def test_KNeighborsClassifier_k_w(*data):
    '''Plot train and test scores against K for each weighting scheme.'''
    X_train, X_test, y_train, y_test = data
    # np.linspace (modelled on MATLAB's linspace) builds an evenly spaced sequence.
    # Here: 100 integer values of K from 1 up to, but not including, y_train.size.
    # endpoint=False excludes the stop value; the default (True) would include it.
    Ks = np.linspace(1, y_train.size, num=100, endpoint=False, dtype='int')
    weights = ['uniform', 'distance']
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    for weight in weights:
        Train_scores = []
        Test_scores = []
        for K in Ks:
            clf = neighbors.KNeighborsClassifier(weights=weight, n_neighbors=K)
            clf.fit(X_train, y_train)
            Train_scores.append(clf.score(X_train, y_train))
            Test_scores.append(clf.score(X_test, y_test))
        # One pair of curves per weighting scheme
        ax.plot(Ks, Test_scores, label="test score:weight=%s" % weight)
        ax.plot(Ks, Train_scores, label="train score:weight=%s" % weight)
    ax.legend(loc='best')
    ax.set_xlabel("K")
    ax.set_ylabel("Score")
    ax.set_ylim(0, 1.05)
    ax.set_title("KNeighborsClassifier_k_w")
    plt.show()

if __name__ == '__main__':
    X_train, X_test, y_train, y_test = load_data()
    test_KNeighborsClassifier_k_w(X_train, X_test, y_train, y_test)

#-*- coding=utf-8 -*-

import numpy as np

from sklearn import datasets,neighbors

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

def load_data():

digits=datasets.load_digits()

X_train=digits.data

y_train=digits.target

return train_test_split(X_train,y_train, test_size=0.25,random_state=0,stratify=y_train)

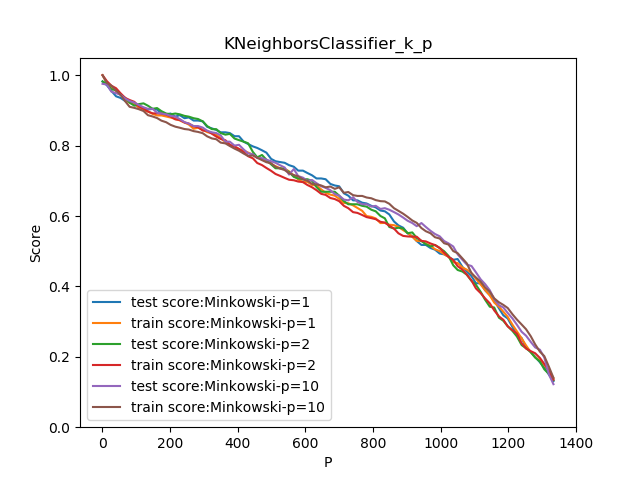

def test_KNeighborsClassifier_k_p(*data):
    '''Plot train and test scores against K for several Minkowski exponents p.'''
    X_train, X_test, y_train, y_test = data
    # np.linspace (modelled on MATLAB's linspace) builds an evenly spaced sequence.
    # Here: 100 integer values of K from 1 up to, but not including, y_train.size.
    # endpoint=False excludes the stop value; the default (True) would include it.
    Ks = np.linspace(1, y_train.size, num=100, endpoint=False, dtype='int')
    # p=1 is Manhattan distance, p=2 is Euclidean distance
    ps = [1, 2, 10]
    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    for p in ps:
        Train_scores = []
        Test_scores = []
        for K in Ks:
            clf = neighbors.KNeighborsClassifier(p=p, n_neighbors=K)
            clf.fit(X_train, y_train)
            Train_scores.append(clf.score(X_train, y_train))
            Test_scores.append(clf.score(X_test, y_test))
        # One pair of curves per Minkowski exponent
        ax.plot(Ks, Test_scores, label="test score:Minkowski-p=%s" % p)
        ax.plot(Ks, Train_scores, label="train score:Minkowski-p=%s" % p)
    ax.legend(loc='best')
    # The x-axis is K; p only selects the curve
    ax.set_xlabel("K")
    ax.set_ylabel("Score")
    ax.set_ylim(0, 1.05)
    ax.set_title("KNeighborsClassifier_k_p")
    plt.show()

if __name__ == '__main__':
    X_train, X_test, y_train, y_test = load_data()
    test_KNeighborsClassifier_k_p(X_train, X_test, y_train, y_test)

I have to say this plot is painfully slow to draw; my computer just isn't up to it.
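Part of the slowness is structural: the script above fits and scores 100 values of K for each of 3 values of p, which is 300 models, each scored on both the training and the test set. Below is a minimal sketch of one way to cut that down, assuming a coarser K grid is acceptable. `knn_scores_over_k` is a hypothetical helper, not part of the original script; `n_jobs=-1` is scikit-learn's option to run the neighbor search on all CPU cores, and how much it helps depends on the machine.

```python
import numpy as np
from sklearn import datasets, neighbors
from sklearn.model_selection import train_test_split


def knn_scores_over_k(X_train, X_test, y_train, y_test, ks, p=2, n_jobs=-1):
    """Return (train_scores, test_scores), one entry per K in ks.

    Hypothetical helper: same fit/score loop as the scripts above,
    but it returns the scores instead of plotting them.
    """
    train_scores, test_scores = [], []
    for k in ks:
        clf = neighbors.KNeighborsClassifier(
            n_neighbors=int(k), p=p, n_jobs=n_jobs)
        clf.fit(X_train, y_train)
        train_scores.append(clf.score(X_train, y_train))
        test_scores.append(clf.score(X_test, y_test))
    return train_scores, test_scores


if __name__ == '__main__':
    digits = datasets.load_digits()
    X_train, X_test, y_train, y_test = train_test_split(
        digits.data, digits.target, test_size=0.25, random_state=0,
        stratify=digits.target)
    # Assumption: 10 values of K are enough to see the trend
    # (the original script uses num=100).
    ks = np.linspace(1, y_train.size, num=10, endpoint=False, dtype='int')
    train_scores, test_scores = knn_scores_over_k(
        X_train, X_test, y_train, y_test, ks)
    for k, tr, te in zip(ks, train_scores, test_scores):
        print('K=%4d  train=%.2f  test=%.2f' % (k, tr, te))
```

The returned lists can be passed to `ax.plot(ks, test_scores, ...)` exactly as in the plotting functions above, so the figure code does not need to change.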

The regression plots look much the same, only the curves are smoother.
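The post does not show the regression version, so here is a rough sketch of what it could look like, assuming it follows the same loop as the classifier tests. `create_regression_data` and `knn_regressor_scores` are hypothetical names, and the `seed` argument is an addition made only so the example is repeatable. Note that `KNeighborsRegressor.score` returns R², which, unlike accuracy, can drop below 0 for very large K.

```python
import numpy as np
from sklearn import neighbors
from sklearn.model_selection import train_test_split


def create_regression_data(n, seed=0):
    """sin(x) with noise added to every fifth target (seed is an assumption)."""
    rng = np.random.RandomState(seed)
    X = 5 * rng.rand(n, 1)
    y = np.sin(X).ravel()
    y[::5] += 0.5 * rng.rand(y[::5].size)
    # Continuous targets, so no stratification
    return train_test_split(X, y, test_size=0.25, random_state=0)


def knn_regressor_scores(X_train, X_test, y_train, y_test, ks,
                         weights='uniform'):
    """Return (train_scores, test_scores) of R^2 values, one per K in ks."""
    train_scores, test_scores = [], []
    for k in ks:
        reg = neighbors.KNeighborsRegressor(n_neighbors=int(k),
                                            weights=weights)
        reg.fit(X_train, y_train)
        train_scores.append(reg.score(X_train, y_train))
        test_scores.append(reg.score(X_test, y_test))
    return train_scores, test_scores


if __name__ == '__main__':
    X_train, X_test, y_train, y_test = create_regression_data(1000)
    ks = np.linspace(1, y_train.size, num=10, endpoint=False, dtype='int')
    for weight in ['uniform', 'distance']:
        train_scores, test_scores = knn_regressor_scores(
            X_train, X_test, y_train, y_test, ks, weights=weight)
        for k, tr, te in zip(ks, train_scores, test_scores):
            print('weights=%-8s K=%4d  train=%.2f  test=%.2f'
                  % (weight, k, tr, te))
```

To get the actual figure, the two score lists can be drawn with the same `ax.plot` calls as in the classifier functions above.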
