Unless a step names the server it runs on, perform it on all three servers. For convenience, everything is done as the root user.

1. Preparation

1.1 Three CentOS 7 servers

master 192.168.174.132

node1 192.168.174.133

node2 192.168.174.134

1.2 Software packages

hadoop-2.7.3.tar.gz

jdk-7u79-linux-x64.tar.gz

Upload both packages to the /soft directory on all three servers.

1.3 Disable the firewall

Check whether firewalld is running:

[root@localhost ~]# systemctl status firewalld

● firewalld.service

Loaded: not-found (Reason: No such file or directory)

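Active: inactive (dead)

Here "Loaded: not-found" means firewalld is not installed, so there is nothing to stop. Where it is installed and running, stop it and keep it from starting at boot:

systemctl stop firewalld.service #stop firewalld

systemctl disable firewalld.service #keep firewalld from starting at boot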

1.4 Disable SELinux

Check the SELinux status:

[root@localhost ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: enforcing

Mode from config file: enforcing

Policy MLS status: enabled

Policy deny_unknown status: allowed

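Max kernel policy version: 28

Switch SELinux to permissive mode for the current session (takes effect immediately, but is lost at reboot):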

[root@localhost ~]# setenforce 0
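To make the change permanent without opening an editor on each server, the active SELINUX= line in /etc/selinux/config can be rewritten with sed. A minimal sketch; the function name disable_selinux_config is hypothetical, and it assumes the file has an uncommented line starting with SELINUX= (as the stock CentOS 7 file does):

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to try it on a copy.
disable_selinux_config() {
    cfg="${1:-/etc/selinux/config}"
    # Only the active "SELINUX=..." line matches: commented lines start with
    # "#", and "SELINUXTYPE=" does not have "=" right after "SELINUX".
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run it as root, then grep '^SELINUX=' /etc/selinux/config should print SELINUX=disabled. As with the manual edit, the setting takes effect after a reboot.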

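To disable SELinux permanently, comment out SELINUX=enforcing and add SELINUX=disabled in /etc/selinux/config; this takes effect after the next reboot:

[root@localhost ~]# vi /etc/selinux/config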

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

#SELINUX=enforcing

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

1.5 Install NTP

NTP keeps the servers' clocks in sync. Check whether it is installed:

[root@localhost ~]# rpm -q ntp

package ntp is not installed

[root@localhost ~]# yum -y install ntp

[root@localhost ~]# systemctl enable ntpd

[root@localhost ~]# systemctl start ntpd

1.6 Install the JDK

Extract the JDK into /soft/java:

[root@localhost ~]# mkdir /soft/java

[root@localhost ~]# cd /soft

[root@localhost soft]# tar -zxvf jdk-7u79-linux-x64.tar.gz -C /soft/java/

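Append the Java environment variables to /etc/profile. The single quotes keep $PATH and $JAVA_HOME from being expanded when the lines are written, so they are expanded at login instead:

[root@localhost soft]# echo 'export JAVA_HOME=/soft/java/jdk1.7.0_79' >> /etc/profile

[root@localhost soft]# echo 'export PATH=$PATH:$JAVA_HOME/bin' >> /etc/profile

[root@localhost soft]# echo 'export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar' >> /etc/profile

[root@localhost soft]# source /etc/profile

1.7 Configure the hostnames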
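Because the three servers differ only in name, the per-server steps in this section can also be done with one script that runs unchanged on every server. A sketch under these assumptions: the IP addresses are the ones from 1.1, hostname -I is available (it is on CentOS 7), and hostnamectl set-hostname is used in place of the hostname command plus editing /etc/hostname. Both function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: map this cluster's IP addresses to hostnames.
hostname_for_ip() {
    case "$1" in
        192.168.174.132) echo master ;;
        192.168.174.133) echo node1 ;;
        192.168.174.134) echo node2 ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper: find this machine's cluster IP and set the matching
# hostname. hostnamectl set-hostname updates both the running hostname and
# /etc/hostname.
set_cluster_hostname() {
    # hostname -I prints all addresses of this host, separated by spaces.
    for ip in $(hostname -I); do
        if name=$(hostname_for_ip "$ip"); then
            hostnamectl set-hostname "$name"
            return 0
        fi
    done
    echo "no cluster IP address found on this host" >&2
    return 1
}
```

Source the script as root on each server and call set_cluster_hostname; the new name shows up in the prompt after logging in again.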

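1) On master (192.168.174.132): set the hostname for the current session, then write it to /etc/hostname so it survives a reboot. The file should contain only the name:

[root@localhost soft]# hostname master

[root@master ~]# vi /etc/hostname

master

2) On node1 (192.168.174.133):

[root@localhost soft]# hostname node1

[root@node1 ~]# vi /etc/hostname

node1

3) On node2 (192.168.174.134):

[root@localhost soft]# hostname node2

[root@node2 ~]# vi /etc/hostname

node2

1.8 Configure /etc/hosts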

Run on all three servers:

[root@master ~]# echo '192.168.174.132 master' >> /etc/hosts

[root@master ~]# echo '192.168.174.133 node1' >> /etc/hosts

[root@master ~]# echo '192.168.174.134 node2' >> /etc/hosts
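Running the three echo commands a second time appends duplicate lines. A minimal sketch of a variant that can be rerun safely; add_host_entry and add_cluster_hosts are hypothetical names, and a name counts as already present if it appears after the address on any non-comment line:

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is
# already listed there.
add_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    # Skip comment lines; compare every hostname field (2..NF) exactly.
    if awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }' "$hosts_file"; then
        return 0
    fi
    echo "$ip $name" >> "$hosts_file"
}

# Add the three cluster entries (defaults to /etc/hosts).
add_cluster_hosts() {
    f="${1:-/etc/hosts}"
    add_host_entry "$f" 192.168.174.132 master
    add_host_entry "$f" 192.168.174.133 node1
    add_host_entry "$f" 192.168.174.134 node2
}
```

Calling add_cluster_hosts twice leaves exactly one line per server in the file.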

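1.9 Passwordless SSH login

On master, generate an RSA key pair; press Enter at every prompt to accept the default file and an empty passphrase: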

[root@master home]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

1d:33:50:ac:03:2f:d8:10:8f:3d:48:95:d3:f8:7a:05 root@master

The key's randomart image is:

+--[ RSA 2048]----+

| oo.+.o. |

| ..== E.. |

| o++= o+ |

| . o.=..+ |

| oSo. |

| . . |

| . |

| |

| |

+-----------------+

[root@master home]#
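The manual key distribution that follows (append to authorized_keys, create .ssh on the nodes, copy with scp) can also be done with ssh-copy-id from OpenSSH, which creates ~/.ssh and authorized_keys on the target if they are missing. A sketch, assuming the hostnames from 1.8 resolve and root can still log in with a password (each host asks for it once); the function name and the RUN dry-run variable are hypothetical. Use it instead of the manual steps, not in addition to them:

```shell
#!/bin/sh
# Copy master's public key to every server, including master itself.
# Set RUN=echo to print the commands instead of running them (dry run).
CLUSTER_HOSTS="master node1 node2"

copy_keys_to_all() {
    for h in $CLUSTER_HOSTS; do
        # When RUN is empty, ${RUN:-} expands to nothing and
        # ssh-copy-id runs directly.
        ${RUN:-} ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$h" || return 1
    done
}
```

Afterwards ssh node1 should log in without a password, exactly as in the verification step.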

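[root@master ~]# cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

On node1 and node2, check whether /root contains a .ssh directory and create it if it is missing. Use ll -a, because .ssh is a hidden directory and plain ll does not show it: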

[root@localhost ~]# ll -a /root/

total 36

dr-xr-x---. 2 root root 4096 Nov 16 17:31 .

dr-xr-xr-x. 18 root root 4096 Nov 17 16:49 ..

-rw-------. 1 root root 953 Nov 16 17:27 anaconda-ks.cfg

-rw-------. 1 root root 369 Nov 17 18:12 .bash_history

-rw-r--r--. 1 root root 18 Dec 29 2013 .bash_logout

-rw-r--r--. 1 root root 176 Dec 29 2013 .bash_profile

-rw-r--r--. 1 root root 176 Dec 29 2013 .bashrc

-rw-r--r--. 1 root root 100 Dec 29 2013 .cshrc

-rw-r--r--. 1 root root 129 Dec 29 2013 .tcshrc

[root@localhost ~]# mkdir /root/.ssh

Back on master, copy authorized_keys to both nodes:

[root@master ~]# scp /root/.ssh/authorized_keys root@192.168.174.133:/root/.ssh/

[root@master ~]# scp /root/.ssh/authorized_keys root@192.168.174.134:/root/.ssh/

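[root@master ~]# chmod 700 /root/.ssh

Verification: on master, run ssh master, ssh node1 and ssh node2 in turn. None of them should ask for a password; the first connection to a host may ask you to confirm its host key: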

[root@master .ssh]# ssh node1

Last failed login: Fri Nov 18 16:52:28 CST 2016 from master on ssh:notty

There were 2 failed login attempts since the last successful login.

Last login: Fri Nov 18 16:22:23 2016 from 192.168.174.1

[root@node1 ~]# logout

Connection to node1 closed.

[root@master .ssh]# ssh node2

The authenticity of host 'node2 (192.168.174.134)' can't be established.

ECDSA key fingerprint is 95:76:9a:bc:ef:5e:f2:b3:cf:35:67:7a:3e:da:0e:e2.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2' (ECDSA) to the list of known hosts.

Last failed login: Fri Nov 18 16:57:12 CST 2016 from master on ssh:notty

There was 1 failed login attempt since the last successful login.

Last login: Fri Nov 18 16:22:40 2016 from 192.168.174.1

[root@node2 ~]# logout

Connection to node2 closed.

[root@master .ssh]# ssh master

Last failed login: Fri Nov 18 16:51:45 CST 2016 from master on ssh:notty

There was 1 failed login attempt since the last successful login.

Last login: Fri Nov 18 15:33:56 2016 from 192.168.174.1

[root@master ~]#

2. Configure the Hadoop cluster

Unless stated otherwise, perform the following steps on all three servers.

2.1 Extract Hadoop

[root@master soft]# mkdir -p /soft/hadoop/

[root@master soft]# tar -zxvf hadoop-2.7.3.tar.gz -C /soft/hadoop/

2.2 Configure the environment variables

[root@master hadoop-2.7.3]# echo 'export HADOOP_HOME=/soft/hadoop/hadoop-2.7.3' >> /etc/profile

[root@master hadoop-2.7.3]# echo 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' >> /etc/profile

[root@master hadoop-2.7.3]# source /etc/profile

Check that the hadoop command is found:

[root@master hadoop-2.7.3]# hadoop version

Hadoop 2.7.3

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff

Compiled by root on 2016-08-18T01:41Z

Compiled with protoc 2.5.0

From source with checksum 2e4ce5f957ea4db193bce3734ff29ff4

This command was run using /soft/hadoop/hadoop-2.7.3/share/hadoop/common/hadoop-common-2.7.3.jar
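Sections 1.6 and 2.2 append to /etc/profile with echo >>, so rerunning either step adds duplicate lines. One alternative, sketched here with the paths used in this guide, is to keep all of these variables in a single file under /etc/profile.d, which /etc/profile on CentOS 7 sources at login, and to rewrite that file on every run. The function name write_cluster_env and the file name hadoop.sh are hypothetical; use it instead of the echo lines, not in addition to them:

```shell
#!/bin/sh
# Hypothetical helper: write the Java and Hadoop variables into one file
# that is overwritten on each run, so rerunning never creates duplicates.
write_cluster_env() {
    dest="${1:-/etc/profile.d/hadoop.sh}"
    # The quoted 'EOF' keeps $PATH and $JAVA_HOME literal in the file;
    # they are expanded only when the file is sourced.
    cat > "$dest" <<'EOF'
export JAVA_HOME=/soft/java/jdk1.7.0_79
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export HADOOP_HOME=/soft/hadoop/hadoop-2.7.3
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
EOF
}
```

After write_cluster_env, run source /etc/profile.d/hadoop.sh (or log in again) and check with hadoop version as above.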

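[root@master hadoop-2.7.3]#

Edit the Hadoop configuration files

Add JAVA_HOME to hadoop-env.sh and yarn-env.sh. The start scripts launch the daemons on the nodes through non-interactive SSH sessions, which do not read /etc/profile, so without this setting the daemons cannot find Java: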

[root@node2 soft]# echo -e "export JAVA_HOME=/soft/java/jdk1.7.0_79" >> /soft/hadoop/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

[root@node2 soft]# echo -e "export JAVA_HOME=/soft/java/jdk1.7.0_79" >> /soft/hadoop/hadoop-2.7.3/etc/hadoop/yarn-env.sh

Create the directories /hadoop/tmp, /hadoop/hdfs/data and /hadoop/hdfs/name (mkdir -p creates /hadoop along the way):

[root@master hadoop]# mkdir -p /hadoop/tmp

[root@master hadoop]# mkdir -p /hadoop/hdfs/data

[root@master hadoop]# mkdir -p /hadoop/hdfs/name

Edit core-site.xml:

vi /soft/hadoop/hadoop-2.7.3/etc/hadoop/core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

</configuration>

Edit hdfs-site.xml:

vi /soft/hadoop/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

Create mapred-site.xml from the bundled template and edit it:

[root@master hadoop]# cd /soft/hadoop/hadoop-2.7.3/etc/hadoop/

[root@master hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@master hadoop]# vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<final>true</final>

</property>

<property>

<name>mapreduce.jobtracker.http.address</name>

<value>master:50030</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>http://master:9001</value>

</property>

</configuration>

mapreduce.jobtracker.http.address and mapred.job.tracker are settings for the MRv1 JobTracker. With mapreduce.framework.name set to yarn no JobTracker is started, so these two properties are not used and can be left out.

Edit yarn-site.xml:

vi /soft/hadoop/hadoop-2.7.3/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>
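The configuration files are identical on all three servers, so instead of editing them by hand on each one, they can be edited on master and copied to the nodes once passwordless SSH from 1.9 works. A sketch, assuming the installation path used throughout; push_hadoop_conf and the RUN dry-run variable are hypothetical. Note that it copies the whole etc/hadoop directory, so hadoop-env.sh, yarn-env.sh and any other files there are overwritten on the nodes as well:

```shell
#!/bin/sh
# Copy Hadoop's configuration directory from master to the worker nodes.
# Run on master as root. Set RUN=echo to only print the scp commands.
CONF_SRC=/soft/hadoop/hadoop-2.7.3/etc/hadoop
WORKERS="node1 node2"

push_hadoop_conf() {
    for h in $WORKERS; do
        # Copies .../etc/hadoop into .../etc/ on the target, replacing
        # files with the same names there.
        ${RUN:-} scp -r "$CONF_SRC" "root@$h:/soft/hadoop/hadoop-2.7.3/etc/" || return 1
    done
}
```

Running RUN=echo first shows exactly which copies would be made.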

List the worker nodes in the slaves file:

[root@master hadoop]# echo -e "node1\nnode2" > /soft/hadoop/hadoop-2.7.3/etc/hadoop/slaves

2.3 Start the cluster

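Format HDFS, on master only. Do this once: formatting again later gives the NameNode a new cluster ID, and DataNodes that already hold data will no longer be able to join it.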

[root@master hadoop]# cd /soft/hadoop/hadoop-2.7.3/bin/

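[root@master bin]# ./hadoop namenode -format

(In Hadoop 2.x the preferred form is ./hdfs namenode -format; hadoop namenode still works but prints a deprecation warning.)

Start the cluster, also on master only: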

[root@master bin]# cd /soft/hadoop/hadoop-2.7.3/sbin/

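[root@master sbin]# ./start-all.sh

In Hadoop 2.x, start-all.sh is deprecated and simply runs start-dfs.sh followed by start-yarn.sh; those two scripts can also be called directly.

2.4 Verify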

On master, jps should list NameNode, SecondaryNameNode and ResourceManager:

[root@master sbin]# jps

3337 Jps

2915 SecondaryNameNode

3060 ResourceManager

2737 NameNode

[root@master sbin]#

On node1, jps should list DataNode and NodeManager:

[root@node1 hadoop]# jps

2608 DataNode

2806 Jps

2706 NodeManager

[root@node1 hadoop]#

On node2:

[root@node2 hadoop]# jps

2614 DataNode

2712 NodeManager

2812 Jps

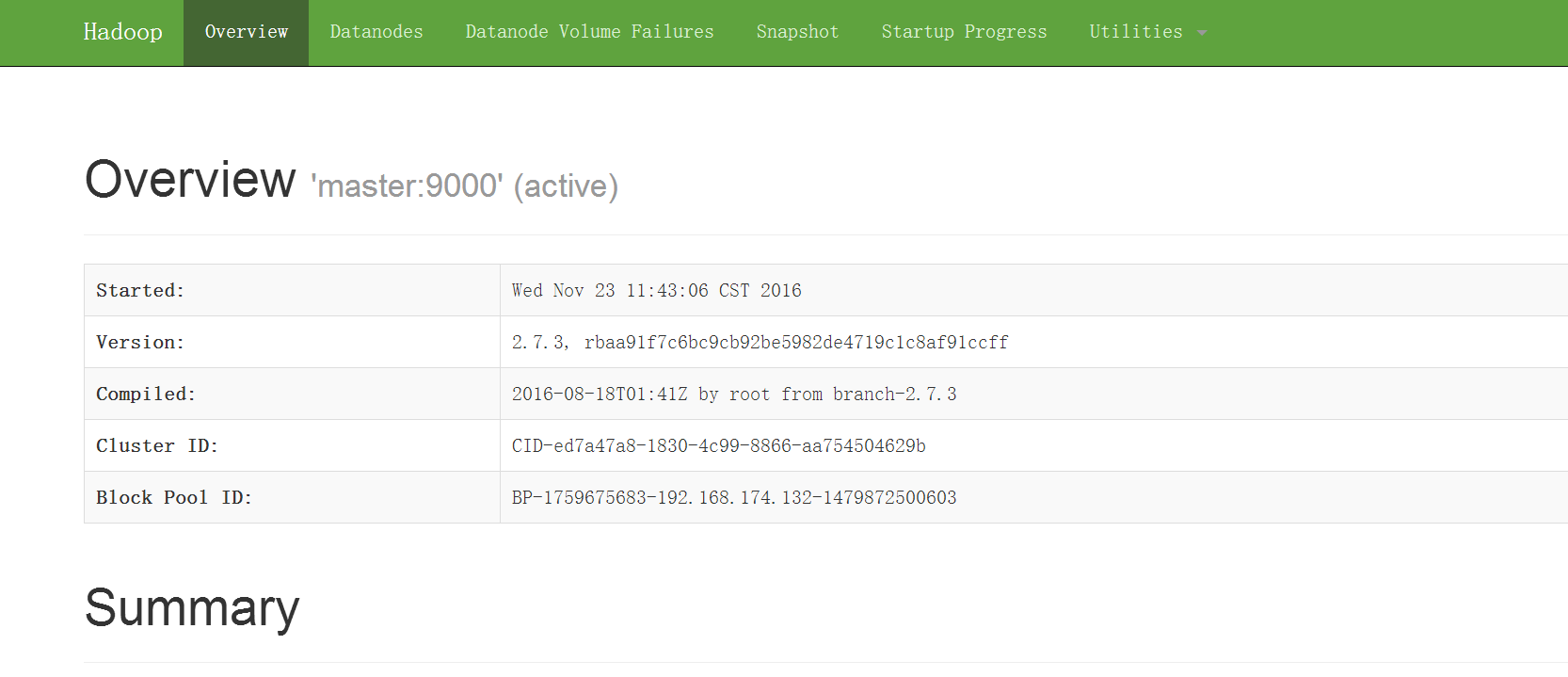
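Instead of reading the jps listings by eye, they can be checked with a small filter. A minimal sketch; missing_daemons is a hypothetical name, and the expected daemon names are the ones listed above for master and the nodes:

```shell
#!/bin/sh
# Read jps output on stdin and print every expected daemon name that is
# missing from it. Returns 1 if anything is missing, 0 otherwise.
missing_daemons() {
    jps_out=$(cat)
    missing=0
    for d in "$@"; do
        # jps prints "<pid> <MainClass>"; compare the second field exactly,
        # so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$jps_out" |
            awk -v d="$d" '$2 == d { f = 1 } END { exit (f ? 0 : 1) }'; then
            echo "$d"
            missing=1
        fi
    done
    return "$missing"
}
```

After sourcing it on master, jps | missing_daemons NameNode SecondaryNameNode ResourceManager prints nothing when all three are up. For a node, call jps by its full path over SSH, because a non-interactive SSH session does not read /etc/profile: ssh node1 /soft/java/jdk1.7.0_79/bin/jps | missing_daemons DataNode NodeManager.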

[root@node2 hadoop]#

In a browser, open the HDFS NameNode web UI on port 50070 of master, for example http://192.168.174.132:50070

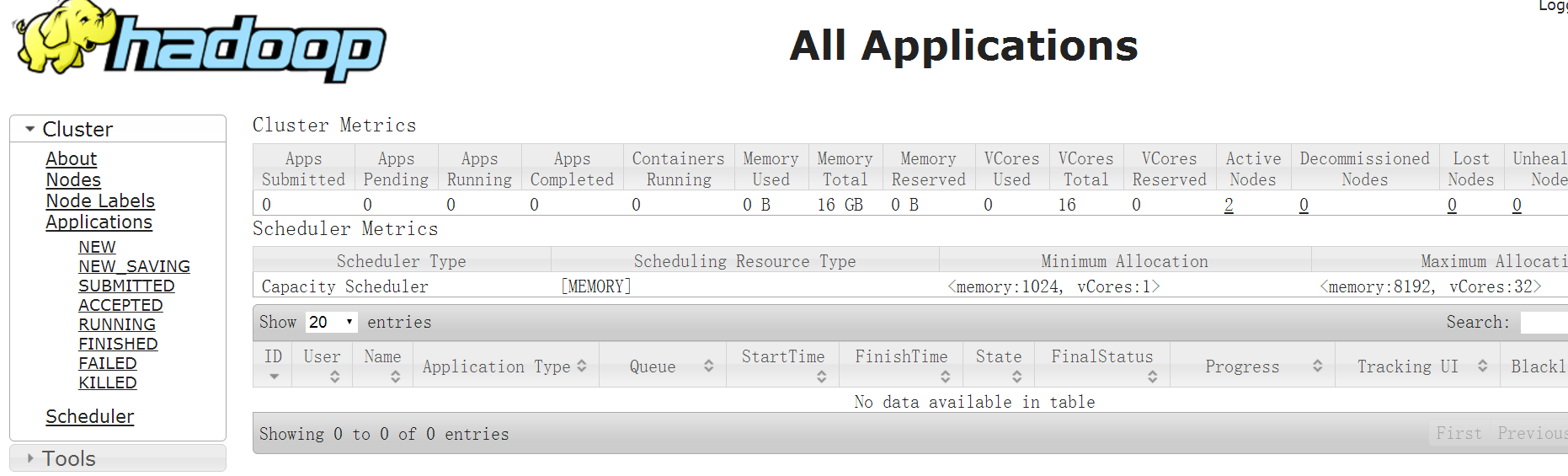

The YARN ResourceManager web UI is at http://192.168.174.132:8088/

If both pages load, the Hadoop cluster is up and running.

2.5 Stop the cluster

[root@master bin]# cd /soft/hadoop/hadoop-2.7.3/sbin/

[root@master sbin]# ./stop-all.sh
