Introduction

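Docker is a virtualization technology whose goal is to hide differences between environments and use system resources more efficiently. Unlike traditional virtualization, which works at the hardware level, Docker virtualizes at the operating-system level, which makes it lighter-weight and more efficient.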

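At a low level, a Docker container is essentially a Linux kernel plus the application software running on top of it; containers share the host's kernel instead of booting their own.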

www.docker.com

hub.docker.com

Installation (Mac)

- Download docker.dmg from www.docker.com;

- Install it;

- Register an account;

- Verify the installation with docker --version;

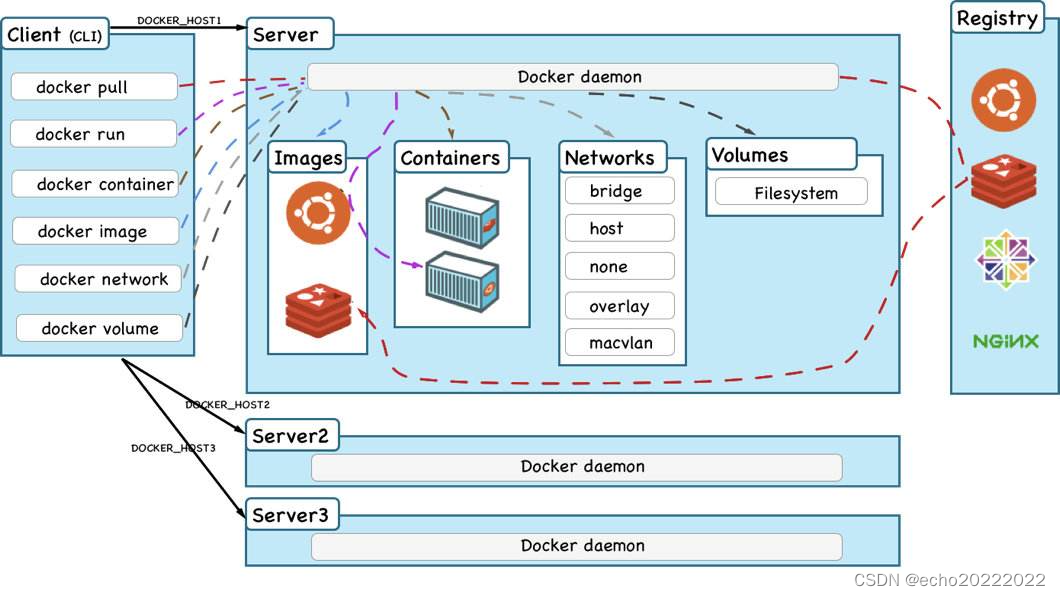

Architecture

Docker revolves around three core components: images, containers, and repositories.

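| Image | The files that make up a runnable program; images are built on a layered union file system |

| Container | A running instance of an image |

| Repository | Stores image files, e.g. Docker Hub, Aliyun Docker Hub, or a private registry |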

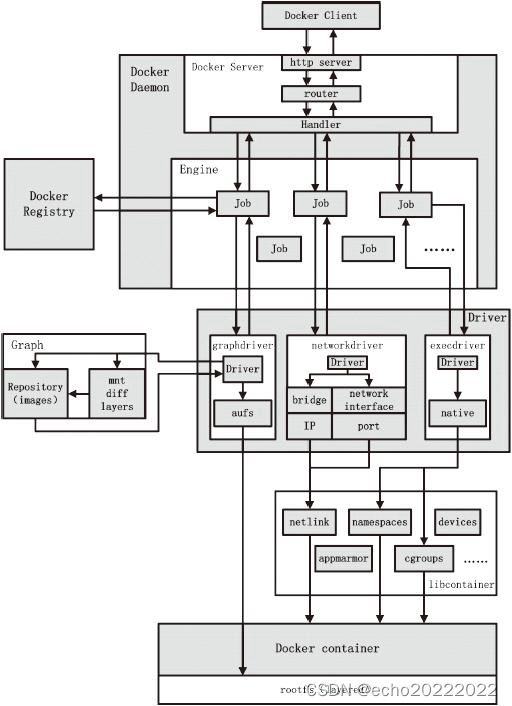

In practice, Docker's internal structure is considerably more complex; below is a more detailed view of its internal architecture.


Common commands

Images

docker search centos --limit 5

docker pull centos

docker system df //show the disk space used by images, containers, and volumes

docker rmi [-f] 46331d942d63

docker tag oldtag newtag

docker push echo20222022/redis:1.0.0

Containers

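docker run -it --name mycentos e6a0117ec169 /bin/bash //run a container and enter it interactively

docker ps //list running containers

docker start mycentos //start a stopped container

docker stop mycentos //stop a running container

docker kill mycentos //forcibly stop a running container

docker rm [-f] mycentos //remove a container

docker run -d d4deb73856a2 //run a container in detached (background) mode

docker logs 85cd9ab85d0e //show a container's logs

docker top 85cd9ab85d0e //show the processes running inside a container

docker inspect 85cd9ab85d0e //show a container's configuration details

docker exec -it 85cd9ab85d0e /bin/bash //open a bash shell inside a running container

docker cp 9bd88d355846:/root/a.txt /tmp //copy a file from the container to the host

docker export 85cd9ab85d0e > docker-redis-6.0.8.tar //export a container's file system as a tar archive

docker import docker-redis-6.0.8.tar echo/redis:6.0.8 //create an image from an exported tar archive

docker commit -m="add file" -a="echo" d4916ee6944f echo/redis:1.0.0 //create a new image from a container

Other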

docker info

docker --help

docker command --help

docker login //log in to Docker Hub

docker logout

Images

Image layers

docker pull redis:6.0.8

6.0.8: Pulling from library/redis

4e6164a63b7b: Pull complete

66a6505e9bfd: Pull complete

fb4766a9fc53: Pull complete

d8c852e17f8c: Pull complete

c32d3bc72329: Pull complete

ecf4c6322a59: Pull complete

Digest: sha256:21db12e5ab3cc343e9376d655e8eabbdbe5516801373e95a8a9e66010c5b8819

Status: Downloaded newer image for redis:6.0.8

docker.io/library/redis:6.0.8

Union file system

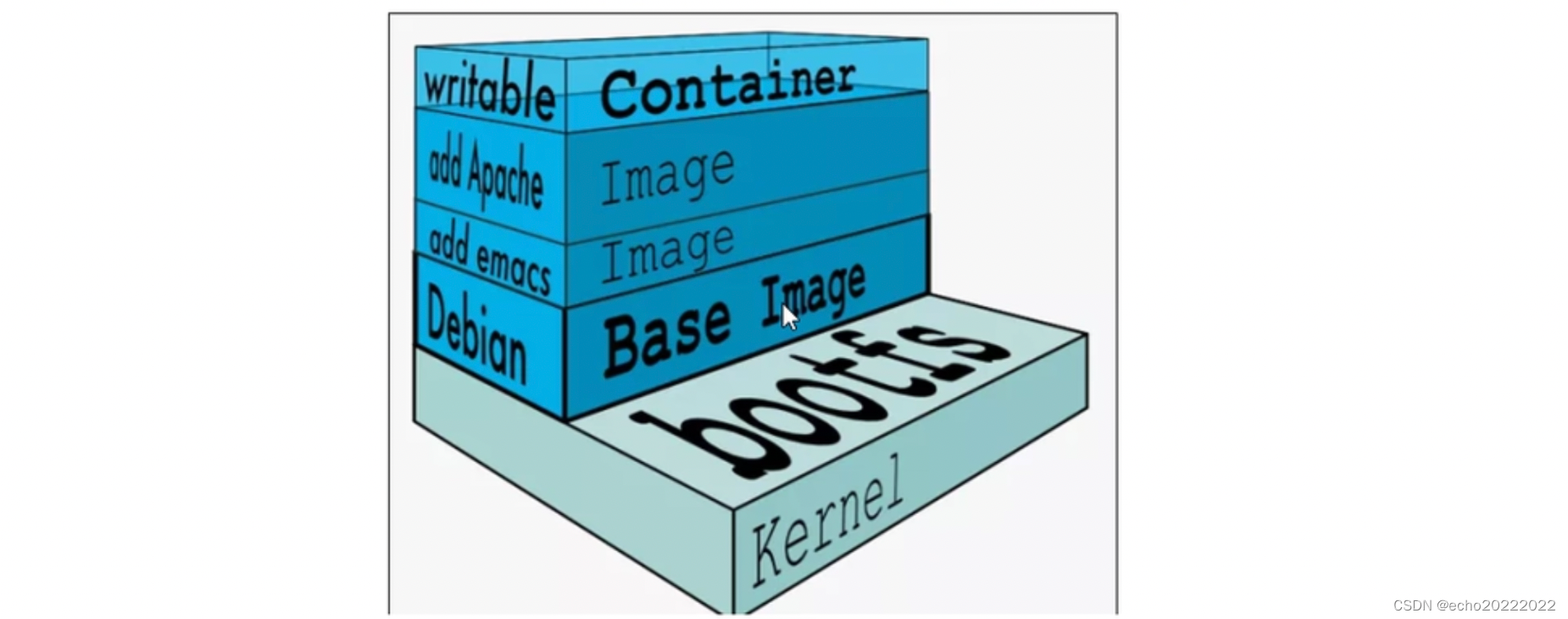

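A union file system is a layered, lightweight, high-performance file system: each modification is stacked on top as a commit, and different directories can be mounted into a single virtual file system. The union file system is the foundation of Docker images. Images inherit from one another through layers, so starting from a base image you can build all kinds of application-specific images.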

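The biggest benefit of layering is resource sharing, which also makes images easy to copy and migrate. For example, if several images are built from the same base image, the Docker host only needs to keep one copy of that base image on disk, and only one copy needs to be loaded into memory to serve multiple containers. Every layer of an image can be shared in this way.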

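Docker image layers are read-only, while the container layer is writable. When a container starts, a new writable layer is added on top of the image; this is called the container layer, and everything beneath it is an image layer.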
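The layering rules above can be illustrated with a toy Python model. This is a sketch under simplifying assumptions, not how Docker's storage drivers (such as overlay2) are implemented; `LayeredFS` and its methods are hypothetical names. Reads search from the container layer down to the base layer, while writes and deletions only touch the container layer (a deletion is recorded as a "whiteout" marker), so two containers can share the same read-only image layers without affecting each other.

```python
from typing import Dict, List

# Sentinel marking a file as deleted in an upper layer (a "whiteout").
WHITEOUT = object()


class LayeredFS:
    """Toy model of one container's view of a union file system."""

    def __init__(self, image_layers: List[Dict[str, object]]):
        # Image layers, base layer first. They are shared and never modified here.
        self.image_layers = image_layers
        # Private writable layer, created when the container starts.
        self.container_layer: Dict[str, object] = {}

    def read(self, path: str) -> object:
        # Search from the top (container layer) down to the base image layer.
        for layer in [self.container_layer, *reversed(self.image_layers)]:
            if path in layer:
                if layer[path] is WHITEOUT:
                    raise FileNotFoundError(path)
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: new content lands only in the container layer.
        self.container_layer[path] = content

    def delete(self, path: str) -> None:
        self.read(path)  # raises FileNotFoundError if the file is not visible
        # Lower layers are read-only, so record a whiteout instead of deleting.
        self.container_layer[path] = WHITEOUT

    def commit(self) -> List[Dict[str, object]]:
        # Roughly the idea behind `docker commit`: the container layer becomes a new top image layer.
        return self.image_layers + [dict(self.container_layer)]


base = {"/etc/os-release": "CentOS"}
app = {"/app/run.sh": "v1"}
c1, c2 = LayeredFS([base, app]), LayeredFS([base, app])  # two containers sharing layers
c1.write("/app/run.sh", "v2")
c1.delete("/etc/os-release")
print(c1.read("/app/run.sh"), c2.read("/app/run.sh"))  # v2 v1
print(len(c1.commit()))  # 3
```

Because `c2` never sees `c1`'s changes and the shared `base` and `app` dictionaries are untouched, the model mirrors why one base image on disk can back many containers.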

//create a new image from an existing container

>docker commit -m="add file" -a="echo" d4916ee6944f echo/redis:1.0.0

sha256:25ad315b0ee85fd448f0eb6a139b8df2f6755fdaade70ba81aa012cd9c6c6569

DockerHub

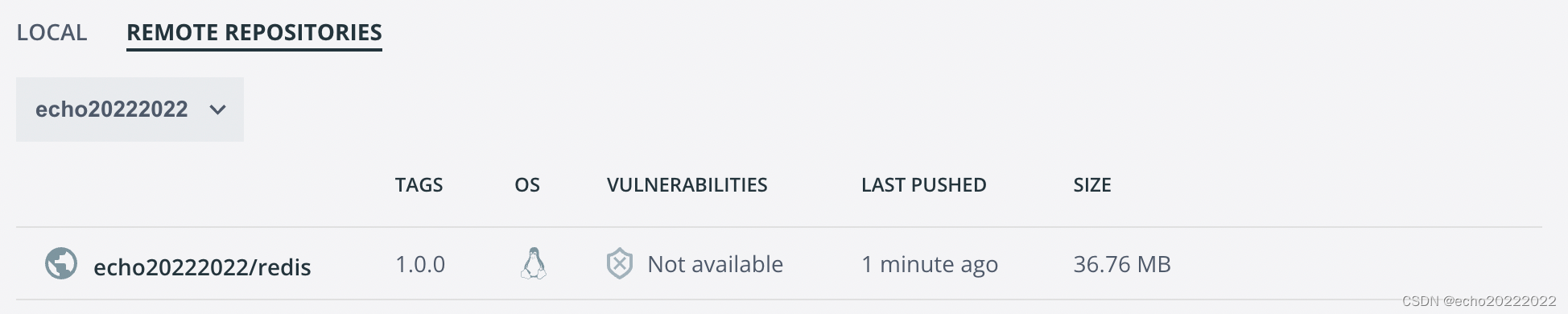

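Docker Hub is a service for storing Docker images. The official one is Docker Hub (hub.docker.com), but it can be slow to reach from mainland China, so cloud providers such as Alibaba Cloud and Huawei Cloud also run their own registries. If you can reach it reliably, the official Docker Hub is still recommended.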

- Create a Docker Hub account (the same account works for Docker Desktop);

- Log in;

- Create a repository;

- Use docker push to upload the local image to the remote Docker Hub;

//upload an image to Docker Hub

docker login //enter your username and password

//commit an image whose name uses your Docker Hub namespace, echo20222022

docker commit -m="add file" -a="echo" d4916ee6944f echo20222022/redis:1.0.0

sha256:188a32229dccde7354732ca76bec3fa8db6dc21b0d14f89686be0dc5d5013c2d

//push the image to Docker Hub

docker push echo20222022/redis:1.0.0

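Volumes

Put simply, a volume maps a directory inside the container to a directory on the host, similar to mounting a disk in Linux.

docker run -v host-dir:container-dir mounts a directory inside the container onto a directory on the host, for example: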

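docker run -d -p 6000:5000 -v /Users/dongzhang/myregistry:/tmp/registry --privileged=true registry

The -v option in this command configures the volume: the container's /tmp/registry directory is mounted to /Users/dongzhang/myregistry on the host. By default both the host and the container can read and write it, but you can also make it read-only inside the container: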

-v /Users/dongzhang/myregistry:/tmp/registry:ro   (ro = read only)

In addition, a container can inherit another container's volume configuration with --volumes-from:

>docker run -it --privileged=true --volumes-from u1 --name u2 ubuntu

Dockerfile

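A Dockerfile defines an image: starting from a base image, it adds custom functionality layer by layer on top of the union file system. Building the Dockerfile produces an image, and a container started from that image runs the services defined in the Dockerfile.

Instructions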

Dockerfile reference | Docker Documentation

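| FROM | The base image: an existing image used as the template for the new image. The first instruction must be FROM |

| MAINTAINER | The name and email address of the image maintainer |

| RUN | A command executed while building the image; supports shell and exec forms and runs during docker build |

| EXPOSE | The port(s) the container exposes |

| WORKDIR | The working directory for subsequent instructions, which is also where a terminal lands by default after the container is created |

| USER | The user the image runs as; defaults to root if not specified |

| ENV | Sets environment variables during the image build |

| VOLUME | Declares a data volume for persisting data |

| ADD | Copies files from the host directory into the image, and also handles URLs and automatically extracts tar archives |

| COPY | Similar to ADD: copies files and directories into the image |

| CMD | Specifies what to do after the container starts, usually launching the service inside it; supports shell and exec forms. A Dockerfile may contain several CMD instructions, but only the last one takes effect, and CMD is replaced by any arguments given after the image name in docker run |

| ENTRYPOINT | Also specifies the command to run when the container starts, similar to CMD, but ENTRYPOINT is not replaced by the arguments after docker run (only --entrypoint overrides it); with the exec form, those arguments are passed as parameters to the program ENTRYPOINT names. Example Dockerfile: FROM nginx; ENTRYPOINT ["nginx","-c"] (fixed argument); CMD ["/etc/nginx/nginx.conf"] (default, replaceable argument); EXPOSE 80. Running docker run nginx:test executes nginx -c /etc/nginx/nginx.conf, while docker run nginx:test /etc/nginx/new.conf executes nginx -c /etc/nginx/new.conf |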
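How ENTRYPOINT, CMD, and the arguments after docker run combine can be sketched as a small Python function. It is a simplified model that assumes both instructions use the exec (JSON array) form; the shell form and the --entrypoint override are not modeled, and the function name `final_command` is hypothetical.

```python
from typing import List, Optional


def final_command(entrypoint: Optional[List[str]],
                  cmd: Optional[List[str]],
                  run_args: List[str]) -> List[str]:
    """Approximate the argv a container starts with (exec-form ENTRYPOINT/CMD only).

    entrypoint, cmd: exec-form lists from the Dockerfile, or None if absent
    run_args: anything given after the image name in `docker run IMAGE ...`
    """
    # Arguments after the image name replace CMD entirely.
    args = list(run_args) if run_args else list(cmd or [])
    # ENTRYPOINT is kept, and the (possibly replaced) CMD is appended as its arguments.
    return list(entrypoint or []) + args


ep, cmd = ["nginx", "-c"], ["/etc/nginx/nginx.conf"]
print(final_command(ep, cmd, []))                       # ['nginx', '-c', '/etc/nginx/nginx.conf']
print(final_command(ep, cmd, ["/etc/nginx/new.conf"]))  # ['nginx', '-c', '/etc/nginx/new.conf']
print(final_command(None, ["/bin/bash"], ["ls"]))       # ['ls']
```

The last call shows the CMD-only case: without an ENTRYPOINT, whatever follows the image name simply replaces CMD.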

Examples

Build an image containing CentOS + Java 8 + ifconfig + vim

Put the Dockerfile and jdk-8u341-linux-aarch64.tar.gz in the same directory:

FROM centos

MAINTAINER echogz2021@gmail.com

ENV MYPATH /usr/local

WORKDIR $MYPATH

# A standalone RUN cd has no lasting effect (each RUN starts a new shell), so the sed commands below use absolute paths

RUN sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

RUN sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

RUN yum makecache

RUN yum update -y

# Install vim

RUN yum -y install vim

# Install ifconfig

RUN yum -y install net-tools

# Install Java 8 and its libraries

#RUN yum -y install glibc.i686

RUN mkdir /usr/local/java

ADD jdk-8u341-linux-aarch64.tar.gz /usr/local/java

# Configure environment variables

ENV JAVA_HOME /usr/local/java/jdk1.8.0_341

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib:$CLASSPATH

ENV PATH $JAVA_HOME/bin:$PATH

EXPOSE 80

# Only the last CMD takes effect; the two CMD lines before it are ignored

CMD echo $MYPATH

CMD echo "success"

CMD /bin/bash

Build the image:

docker build -t centosjava8:1.0 .

# Start the custom container (using the tag that was just built)

docker run -it centosjava8:1.0

[root@00eb7124874a local]# java -version

java version "1.8.0_341"

Java(TM) SE Runtime Environment (build 1.8.0_341-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.341-b10, mixed mode)

[root@00eb7124874a local]#

Deploy a Eureka service:

parallels@ubuntu-linux-22-04-desktop:/develops/workspace/dockerfile-demo/cloud-eureka$ ll

total 204972

drwxrwxr-x 2 parallels parallels 4096 Sep 29 16:14 ./

drwxrwxr-x 4 parallels parallels 4096 Sep 29 15:55 ../

-rw-rw-r-- 1 parallels parallels 45030317 Sep 29 14:04 cloud-eureka-1.0-SNAPSHOT.jar

-rw-rw-r-- 1 parallels parallels 671 Sep 29 16:14 Dockerfile

-rw-r--r-- 1 parallels parallels 164845983 Sep 29 14:14 jdk-11.0.16.1_linux-aarch64_bin.tar.gz

Dockerfile:

FROM openjdk:11

MAINTAINER echogz2021@gmail.com

ENV MYPATH=/opt/cloud-eureka

WORKDIR $MYPATH

#JAVA 11

#RUN mkdir /usr/local/java

#ADD jdk-11.0.16.1_linux-aarch64_bin.tar.gz /usr/local/java

#ENV JAVA_HOME /usr/local/java/jdk-11.0.16.1

#ENV JRE_HOME $JAVA_HOME/jre

#ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib:$CLASSPATH

#ENV PATH $JAVA_HOME/bin:$PATH

COPY cloud-eureka-1.0-SNAPSHOT.jar cloud-eureka-1.0-SNAPSHOT.jar

#RUN bash -c 'touch /cloud-eureka-1.0-SNAPSHOT.jar'

#ENTRYPOINT echo $(ls)

ENTRYPOINT java -jar cloud-eureka-1.0-SNAPSHOT.jar

#ENTRYPOINT echo $(java -version)

EXPOSE 8000

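# Note: because the ENTRYPOINT above uses the shell form, this CMD is ignored and the message is never printed

CMD echo "start cloud-eureka success on port 8000"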

Networking

Network modes

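| bridge | Allocates and configures an IP address for each container and connects it to the docker0 virtual bridge; this is the default mode |

| host | The container does not get its own virtual NIC or IP; it uses the host's IP and ports directly, as if it were a process started on the host |

| none | The container has its own network namespace, but no network configuration is applied to it |

| container | The new container does not create its own virtual NIC or IP; it shares the IP and port range of a specified container |

Common commands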

docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

# Inspect network details

docker network inspect host

docker network inspect bridge

Custom networks

docker network create my_network;

docker run --network my_network //attach a container to the given network

root@ubuntu-linux-22-04-desktop:/home/parallels# docker network create my_network

41a34f764730bb6fca429680fa42211b555e5a75dfa8874f5ec8de1774887329

docker run -it --network my_network --name centos1 centos /bin/bash

docker run -it --network my_network --name centos2 centos /bin/bash

[root@77075ab57e23 /]# ping centos1

PING centos1 (172.18.0.2) 56(84) bytes of data.

64 bytes from centos1.my_network (172.18.0.2): icmp_seq=1 ttl=64 time=0.175 ms

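64 bytes from centos1.my_network (172.18.0.2): icmp_seq=2 ttl=64 time=0.252 ms

A user-defined bridge network maintains a mapping between container names and IP addresses (through Docker's embedded DNS), so containers on it can reach each other by name. The default bridge network does not provide this name resolution.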

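Container orchestration

Overview

Container orchestration addresses the problem of managing many service instances and the relationships between them. docker-compose is Docker's official tool for orchestrating services.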

https://docs.docker.com/compose

https://docs.docker.com/compose/install/linux/

Install Compose:

sudo curl -L "https://github.com/docker/compose/releases/download/v2.11.2/docker-compose-linux-aarch64" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

docker-compose --version

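Docker Compose version v2.11.2

Core concepts

- One file: docker-compose.yml;

- Two concepts: service and project;

docker-compose.yml is the file that defines services and projects, and docker-compose manages containers according to it. A service is an individual application container instance, such as an order service, inventory service, mysql service, or redis service. A project is a complete business unit made up of a group of related application containers, defined in docker-compose.yml.

Steps

- Write a Dockerfile for each microservice and build the corresponding image;

- Use docker-compose.yml to define a complete business unit and arrange the container services that make up the application;

- Run docker-compose up to start the whole application, deploying it with a single command;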

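Common commands

docker-compose -h #show help

docker-compose up #start all services defined in the compose file

docker-compose down #stop and remove the containers and networks created by up

docker-compose ps #list the project's containers

docker-compose top service-name #show the processes of a service

docker-compose logs service-name #show the logs of a service

docker-compose config #validate and print the compose configuration

docker-compose restart

docker-compose start

docker-compose stop

Example application

Omitted.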

portainer

Portainer is a graphical tool for monitoring and managing Docker containers.

Docker and Kubernetes Management | Portainer

Install Portainer with Docker on Linux - Portainer Documentation

sudo docker run -d -p 9000:9000 --restart=always -v /var/run/docker.sock:/var/run/docker.sock --name portainer portainer/portainer

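Installing software

- Search for the image

- Pull the image

- List the image

- Start a container from the image

- Stop the container

- Remove the image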

tomcat

>docker pull tomcat

>docker images tomcat

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat latest 51e6b55125ec 6 hours ago 470MB

>docker run -d -p 8080:8080 tomcat

c3639a693e6a227159be36350d303d416c8d38c0185cccdb8733282993a5c8fc

>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c3639a693e6a tomcat "catalina.sh run" 18 seconds ago Up 17 seconds 0.0.0.0:8080->8080/tcp thirsty_ganguly

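>docker exec -it c3639a693e6a /bin/bash

>rm -fr webapps

>mv webapps.dist/ webapps

Recent tomcat images ship an empty webapps directory with the default applications moved to webapps.dist, which is why the two commands above swap them. Then open http://localhost:8080 in a browser.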

mysql


Standalone

>docker pull mysql

//start the MySQL service

>docker run -d --name mysql -p 3306:3306 -e MYSQL_ROOT_PASSWORD=Gz2021.. mysql

//enter the MySQL container

>docker exec -it dbae6db65f7e /bin/bash

>mysql -uroot -p

//improvement: mount the log, data, and config directories on the host

docker run -d -p 3306:3306 --privileged=true -v /Users/dongzhang/mysql/log:/var/log/mysql -v /Users/dongzhang/mysql/data:/var/lib/mysql -v /Users/dongzhang/mysql/conf:/etc/mysql/conf.d -e MYSQL_ROOT_PASSWORD=Gz2021.. mysql

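5fda22bdb0731617e5a395c1002dde6984b566f7b806c17b17affbe53099b0f3

Now, if you create a user database and a users table, the corresponding table files show up in /Users/dongzhang/mysql/data on the host, and the data survives even if the container is deleted.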

One master, one slave

Configuration file for the master node:

/Users/dongzhang/mysql/mysql-cluster/master/conf/my.cnf

[mysqld]

server_id=101

binlog-ignore-db=mysql

log-bin=mall-mysql-bin

binlog_cache_size=1M

binlog_format=mixed

expire_logs_days=7

slave_skip_errors=1062

Start the master node:

docker run -d -p 3307:3306 --name mysqlmaster --privileged=true -v /Users/dongzhang/mysql/mysql-cluster/master/log:/var/log/mysql -v /Users/dongzhang/mysql/mysql-cluster/master/data:/var/lib/mysql -v /Users/dongzhang/mysql/mysql-cluster/master/conf:/etc/mysql/conf.d -e MYSQL_ROOT_PASSWORD=Gz2021.. mysql

5fda22bdb0731617e5a395c1002dde6984b566f7b806c17b17affbe53099b0f3

Create a user for replication:

create user 'slave'@'%' identified by 'Gz2021..';

grant replication slave,replication client on *.* to 'slave'@'%';

Configuration file for the slave node:

[mysqld]

server_id=102

binlog-ignore-db=mysql

log-bin=mall-mysql-slave1-bin

binlog_cache_size=1M

binlog_format=mixed

expire_logs_days=7

slave_skip_errors=1062

relay_log=mall-mysql-relay-bin

log_slave_updates=1

read_only=1

Start the slave node:

docker run -d -p 3308:3306 --name mysqlslave --privileged=true -v /Users/dongzhang/mysql/mysql-cluster/slave/log:/var/log/mysql -v /Users/dongzhang/mysql/mysql-cluster/slave/data:/var/lib/mysql -v /Users/dongzhang/mysql/mysql-cluster/slave/conf:/etc/mysql/conf.d -e MYSQL_ROOT_PASSWORD=Gz2021.. mysql

Check the master's binlog status:

mysql> show master status;

+-----------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-----------------------+----------+--------------+------------------+-------------------+

| mall-mysql-bin.000002 | 704 | | mysql | |

+-----------------------+----------+--------------+------------------+-------------------+

Configure replication on the slave:

mysql>change master to master_host='172.17.0.2', master_user='slave', master_password='Gz2021..', master_port=3306, master_log_file='mall-mysql-bin.000002', master_log_pos=704, master_connect_retry=30;

Check the slave's replication status:

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State:

Master_Host: 10.37.129.2

Master_User: slave

Master_Port: 3307

Connect_Retry: 30

Master_Log_File: mall-mysql-bin.000002

Read_Master_Log_Pos: 704

Relay_Log_File: mall-mysql-relay-bin.000001

Relay_Log_Pos: 4

Relay_Master_Log_File: mall-mysql-bin.000002

Slave_IO_Running: No

Slave_SQL_Running: No

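Slave_IO_Running and Slave_SQL_Running are both No because replication has not been started on the slave yet. Next, write some new data on the master:

create database user_db;  -- database name is illustrative; the original omits it

use user_db;

create table users(

id int primary key auto_increment,

name varchar(20),

age int

);

insert into users (name,age) values ('zhangsan',1),('lisi',2);

Start replication on the slave: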

start slave;

Check the data on the slave:

mysql> select * from users;

+----+----------+------+

| id | name | age |

+----+----------+------+

| 1 | zhangsan | 10 |

| 2 | lisi | 10 |

+----+----------+------+

redis

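Standalone mode

Create the config and data directories:

mkdir -p /Users/dongzhang/redis/conf

mkdir -p /Users/dongzhang/redis/data

Change the following options in the redis config file:

/Users/dongzhang/redis/conf/redis.conf //config file on the host

# bind 127.0.0.1   (comment this out: inside a container it would only accept connections from the container itself)

appendonly yes

protected-mode no

daemonize no

Start the redis container: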

docker run -d -p 6379:6379 --name redis --privileged=true -v /Users/dongzhang/redis/conf/redis.conf:/etc/redis/redis.conf -v /Users/dongzhang/redis/data:/data redis:7.0.4 redis-server /etc/redis/redis.conf

//enter the redis container; it is running normally

docker exec -it 80ad9b8ce68a /bin/bash

Cluster mode

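Consistent hashing

Hashing is one of the most widely used techniques in distributed systems; its main job is to balance traffic or storage load. The simplest scheme is hash(key) % size. For storage load balancing, this scheme causes trouble when the number of nodes changes frequently: lookups for data on some nodes stop working, and the only fix is to rehash. With a large data set, rehashing is very time-consuming and risky.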
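The rehash problem can be quantified with a short Python sketch (the key names and the MD5-based hash are illustrative assumptions). With hash(key) % size, a key keeps its node when going from 3 to 4 nodes only if hash % 3 == hash % 4, which holds for just 3 out of every 12 hash values, so roughly three quarters of the keys have to move.

```python
import hashlib


def h(key: str) -> int:
    # Stable 32-bit hash from MD5 (Python's built-in hash() for str is randomized per process).
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:4], "big")


def moved_fraction(keys, old_size: int, new_size: int) -> float:
    """Fraction of keys whose node index changes under hash(key) % size."""
    moved = sum(1 for k in keys if h(k) % old_size != h(k) % new_size)
    return moved / len(keys)


keys = [f"user:{i}" for i in range(10_000)]
print(f"{moved_fraction(keys, 3, 4):.1%} of keys change nodes when scaling from 3 to 4")
```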

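To minimize the impact of hash(key) % size, the idea is to make size large enough to dampen the effect of local changes. For example, the consistent hash ring used by memcache is a ring of 2^32 positions: storage nodes are placed on the ring, and a key is stored on the first node found clockwise from the key's hash value. The problem with this approach is that the positions of the storage nodes on the ring are not controlled, which easily leads to data skew.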
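A minimal Python sketch of the 2^32 ring described above, assuming MD5 as the hash function and one ring position per node (no virtual nodes); `HashRing` is a hypothetical name. Adding a node only takes over the keys between it and its predecessor on the ring, so every key that moves goes to the new node, and the per-node counts printed at the end are often uneven, which is the skew mentioned above.

```python
import bisect
import hashlib
from collections import Counter

RING_SIZE = 2 ** 32


def ring_pos(name: str) -> int:
    # Map a node name or a key to a position on the ring, 0 .. 2^32 - 1.
    return int.from_bytes(hashlib.md5(name.encode()).digest()[:4], "big") % RING_SIZE


class HashRing:
    """Minimal consistent-hash ring with one position per node (no virtual nodes)."""

    def __init__(self, nodes):
        self._points = []  # sorted node positions on the ring
        self._owner = {}   # position -> node name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        pos = ring_pos(node)
        bisect.insort(self._points, pos)
        self._owner[pos] = node

    def lookup(self, key: str) -> str:
        # Walk clockwise from the key's position to the first node, wrapping past 2^32 - 1 to 0.
        i = bisect.bisect_left(self._points, ring_pos(key))
        if i == len(self._points):
            i = 0
        return self._owner[self._points[i]]


keys = [f"user:{i}" for i in range(10_000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {k: ring.lookup(k) for k in keys}
ring.add("node-d")
after = {k: ring.lookup(k) for k in keys}

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved")
print(Counter(after.values()))  # per-node key counts; often far from equal
```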

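To solve the data skew problem of consistent hashing, Redis uses slot-based hashing: a layer of hash slots sits between the data and the storage nodes. Slots can be assigned dynamically to different nodes, and data only ever maps to a slot, i.e. hash(key) % slots, which avoids data skew.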
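The Redis Cluster specification makes "hash(key) % slots" precise as HASH_SLOT = CRC16(key) mod 16384, where CRC16 is the XMODEM variant, and when a key contains a {hash tag} only the part inside the first braces is hashed. A Python sketch of that rule (function names are illustrative):

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM: polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: bytes) -> int:
    """Slot for a key: CRC16(key) mod 16384, honouring {hash tags}."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            # Only the non-empty part inside the first {...} is hashed,
            # so related keys can be forced into the same slot.
            key = key[start + 1:end]
    return crc16_xmodem(key) % 16384


print(hex(crc16_xmodem(b"123456789")))  # 0x31c3, the standard check value
print(key_slot(b"k1"))                  # 12706
```

The second line reproduces slot 12706, the slot redis-cli reports for k1 when it redirects the request to 127.0.0.1:6383 in the cluster test later in this section.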

A Redis cluster has exactly 16384 hash slots.
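When a cluster is created, the 16384 slots are split into contiguous, nearly equal ranges, one per master. The Python sketch below is modeled on the even split that redis-cli --cluster create appears to perform; the exact rounding rule is an assumption, although it does reproduce the ranges 0-5460, 5461-10922 and 10923-16383 that appear in the cluster output in this section. `split_slots` is a hypothetical name.

```python
import math

CLUSTER_SLOTS = 16384


def split_slots(masters: int):
    """Divide slots 0..16383 into contiguous ranges, one per master, as evenly as possible."""
    per_node = CLUSTER_SLOTS / masters
    ranges, first, cursor = [], 0, 0.0
    for i in range(masters):
        # Round the running boundary half-up; the last master always ends at slot 16383.
        last = math.floor(cursor + per_node - 1 + 0.5)
        if i == masters - 1:
            last = CLUSTER_SLOTS - 1
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


print(split_slots(3))  # [(0, 5460), (5461, 10922), (10923, 16383)]
```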

Setting up the cluster

docker run -d --name redis-node-1 --net host -p 6381:6381 --privileged=true -v /Users/dongzhang/redis/node01:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6381

docker run -d --name redis-node-2 --net host -p 6382:6382 --privileged=true -v /Users/dongzhang/redis/node02:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382

docker run -d --name redis-node-3 --net host -p 6383:6383 --privileged=true -v /Users/dongzhang/redis/node03:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383

docker run -d --name redis-node-4 --net host -p 6384:6384 --privileged=true -v /Users/dongzhang/redis/node04:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384

docker run -d --name redis-node-5 --net host -p 6385:6385 --privileged=true -v /Users/dongzhang/redis/node05:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385

docker run -d --name redis-node-6 --net host -p 6386:6386 --privileged=true -v /Users/dongzhang/redis/node06:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386

Open a bash shell in one of the redis containers and create the cluster with its master/slave relationships:

redis-cli --cluster create 127.0.0.1:6381 127.0.0.1:6382 127.0.0.1:6383 127.0.0.1:6384 127.0.0.1:6385 127.0.0.1:6386 --cluster-replicas 1

>>> Performing Cluster Check (using node 127.0.0.1:6381)

M: f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386

slots: (0 slots) slave

replicates f27d66f080dc8dd1afd49436d2b17f12c1428002

M: b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384

slots: (0 slots) slave

replicates d76bb56be5a4a89b8bc63fa2f5faa50141709d4c

M: d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385

slots: (0 slots) slave

replicates b89c4cfb62fc06f0999f1da422e5cfb21332fd6f

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Check the cluster state:

root@docker-desktop:/data# redis-cli -p 6381

127.0.0.1:6381> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:205

cluster_stats_messages_pong_sent:216

cluster_stats_messages_sent:421

cluster_stats_messages_ping_received:211

cluster_stats_messages_pong_received:205

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:421

master slave

6381 6386

6382 6384

6383 6385

127.0.0.1:6381> cluster nodes

faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386@16386 slave f27d66f080dc8dd1afd49436d2b17f12c1428002 0 1664335199862 1 connected

b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383@16383 master - 0 1664335198852 3 connected 10923-16383

923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384@16384 slave d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 0 1664335197000 2 connected

d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382@16382 master - 0 1664335197000 2 connected 5461-10922

112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385@16385 slave b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 0 1664335197844 3 connected

f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381@16381 myself,master - 0 1664335198000 1 connected 0-5460

Connect to Redis in cluster mode (-c) and access data; the client follows slot redirects automatically:

redis-cli -c -p 6381

127.0.0.1:6381> get k1

-> Redirected to slot [12706] located at 127.0.0.1:6383

"v1"

Testing master/slave failover

master slave

6381 6386

6382 6384

6383 6385

//stop node01; node06 is promoted to master

docker stop redis-node-1

redis-node-1

//6381 has failed and 6386 is now the master

127.0.0.1:6383> cluster nodes

d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382@16382 master - 0 1664336397011 2 connected 5461-10922

f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381@16381 master,fail - 1664336318127 1664336315000 1 disconnected

923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384@16384 slave d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 0 1664336395996 2 connected

b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383@16383 myself,master - 0 1664336397000 3 connected 10923-16383

112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385@16385 slave b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 0 1664336398022 3 connected

faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386@16386 master - 0 1664336396000 7 connected 0-5460

//restart node01; it rejoins as a slave

docker start redis-node-1

redis-node-1

127.0.0.1:6383> cluster nodes

d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382@16382 master - 0 1664336458690 2 connected 5461-10922

f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381@16381 slave faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 0 1664336459699 7 connected

923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384@16384 slave d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 0 1664336457684 2 connected

b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383@16383 myself,master - 0 1664336457000 3 connected 10923-16383

112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385@16385 slave b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 0 1664336457000 3 connected

faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386@16386 master - 0 1664336457000 7 connected 0-5460

Testing scale-out

Create two new nodes, 6387 and 6388:

docker run -d --name redis-node-7 --net host -p 6387:6387 --privileged=true -v /Users/dongzhang/redis/node07:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6387

docker run -d --name redis-node-8 --net host -p 6388:6388 --privileged=true -v /Users/dongzhang/redis/node08:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6388

docker ps | grep redis

a34387cffab2 redis:6.0.8 "docker-entrypoint.s…" 8 seconds ago Up 7 seconds redis-node-8

07ead6e1ac4f redis:6.0.8 "docker-entrypoint.s…" 13 seconds ago Up 13 seconds redis-node-7

16a435490cf3 redis:6.0.8 "docker-entrypoint.s…" 35 minutes ago Up 35 minutes redis-node-6

e58d2f3cb027 redis:6.0.8 "docker-entrypoint.s…" 35 minutes ago Up 35 minutes redis-node-5

22b80ed83d6f redis:6.0.8 "docker-entrypoint.s…" 35 minutes ago Up 35 minutes redis-node-4

9fa4799e2b6d redis:6.0.8 "docker-entrypoint.s…" 35 minutes ago Up 35 minutes redis-node-3

a1820178ba81 redis:6.0.8 "docker-entrypoint.s…" 35 minutes ago Up 35 minutes redis-node-2

9c2f94c3cf9a redis:6.0.8 "docker-entrypoint.s…" 35 minutes ago Up 8 minutes

Add 6387 to the cluster as a new master:

root@docker-desktop:/data# redis-cli --cluster add-node 127.0.0.1:6387 127.0.0.1:6382

>>> Adding node 127.0.0.1:6387 to cluster 127.0.0.1:6382

>>> Performing Cluster Check (using node 127.0.0.1:6382)

M: d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381

slots: (0 slots) slave

replicates faa59d8ed4ef46b7b006f78404d3f3e8d23f5617

S: 923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384

slots: (0 slots) slave

replicates d76bb56be5a4a89b8bc63fa2f5faa50141709d4c

M: b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385

slots: (0 slots) slave

replicates b89c4cfb62fc06f0999f1da422e5cfb21332fd6f

M: faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386

slots:[0-5460] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 127.0.0.1:6387 to make it join the cluster.

[OK] New node added correctly.

//at this point the new master has not been assigned any slots

root@docker-desktop:/data# redis-cli --cluster add-node 127.0.0.1:6387 127.0.0:6382

>>> Adding node 127.0.0.1:6387 to cluster 127.0.0:6382

Could not connect to Redis at 127.0.0:6382: Network is unreachable

root@docker-desktop:/data# redis-cli --cluster check 127.0.0.1:6382

127.0.0.1:6382 (d76bb56b...) -> 1 keys | 5462 slots | 1 slaves.

127.0.0.1:6387 (8dc43529...) -> 0 keys | 0 slots | 0 slaves.

127.0.0.1:6383 (b89c4cfb...) -> 2 keys | 5461 slots | 1 slaves.

127.0.0.1:6386 (faa59d8e...) -> 2 keys | 5461 slots | 1 slaves.

Reassign the slots:


root@docker-desktop:/data# redis-cli --cluster reshared 127.0.0.1:6382

Unknown --cluster subcommand

root@docker-desktop:/data# redis-cli --cluster reshard 127.0.0.1:6382

>>> Performing Cluster Check (using node 127.0.0.1:6382)

M: d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381

slots: (0 slots) slave

replicates faa59d8ed4ef46b7b006f78404d3f3e8d23f5617

M: 8dc43529e6341a369ac01cd5c5b087e344111e29 127.0.0.1:6387

slots: (0 slots) master

S: 923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384

slots: (0 slots) slave

replicates d76bb56be5a4a89b8bc63fa2f5faa50141709d4c

M: b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385

slots: (0 slots) slave

replicates b89c4cfb62fc06f0999f1da422e5cfb21332fd6f

M: faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386

slots:[0-5460] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096

What is the receiving node ID? 8dc43529e6341a369ac01cd5c5b087e344111e29

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

>all

//slot distribution after resharding

root@docker-desktop:/data# redis-cli --cluster check 127.0.0.1:6382

127.0.0.1:6382 (d76bb56b...) -> 1 keys | 4096 slots | 1 slaves.

127.0.0.1:6387 (8dc43529...) -> 1 keys | 4096 slots | 0 slaves.

127.0.0.1:6383 (b89c4cfb...) -> 2 keys | 4096 slots | 1 slaves.

127.0.0.1:6386 (faa59d8e...) -> 1 keys | 4096 slots | 1 slaves.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:6382)

M: d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381

slots: (0 slots) slave

replicates faa59d8ed4ef46b7b006f78404d3f3e8d23f5617

M: 8dc43529e6341a369ac01cd5c5b087e344111e29 127.0.0.1:6387

//each original master handed part of its slots to the new master

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

S: 923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384

slots: (0 slots) slave

replicates d76bb56be5a4a89b8bc63fa2f5faa50141709d4c

M: b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: 112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385

slots: (0 slots) slave

replicates b89c4cfb62fc06f0999f1da422e5cfb21332fd6f

M: faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Add 6388 to the cluster as a slave of 6387:

root@docker-desktop:/data# redis-cli --cluster add-node 127.0.0.1:6388 127.0.0.1:6387 --cluster-slave --cluster-master-id 8dc43529e6341a369ac01cd5c5b087e344111e29

>>> Adding node 127.0.0.1:6388 to cluster 127.0.0.1:6387

>>> Performing Cluster Check (using node 127.0.0.1:6387)

M: 8dc43529e6341a369ac01cd5c5b087e344111e29 127.0.0.1:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: faa59d8ed4ef46b7b006f78404d3f3e8d23f5617 127.0.0.1:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

S: 923a52af8e311f27e658535dbea45b38c7c15c78 127.0.0.1:6384

slots: (0 slots) slave

replicates d76bb56be5a4a89b8bc63fa2f5faa50141709d4c

S: f27d66f080dc8dd1afd49436d2b17f12c1428002 127.0.0.1:6381

slots: (0 slots) slave

replicates faa59d8ed4ef46b7b006f78404d3f3e8d23f5617

S: 112af1eb46cb50653dde60d372c1dd80b002a39e 127.0.0.1:6385

slots: (0 slots) slave

replicates b89c4cfb62fc06f0999f1da422e5cfb21332fd6f

M: d76bb56be5a4a89b8bc63fa2f5faa50141709d4c 127.0.0.1:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

M: b89c4cfb62fc06f0999f1da422e5cfb21332fd6f 127.0.0.1:6383

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 127.0.0.1:6388 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 127.0.0.1:6387.

[OK] New node added correctly.

//6387 now has a slave

root@docker-desktop:/data# redis-cli --cluster check 127.0.0.1:6382

127.0.0.1:6382 (d76bb56b...) -> 1 keys | 4096 slots | 1 slaves.

127.0.0.1:6387 (8dc43529...) -> 1 keys | 4096 slots | 1 slaves.

127.0.0.1:6383 (b89c4cfb...) -> 2 keys | 4096 slots | 1 slaves.

127.0.0.1:6386 (faa59d8e...) -> 1 keys | 4096 slots | 1 slaves.

Testing scale-in

Remove the slave node 6388:

redis-cli --cluster del-node 127.0.0.1:6388 2648131f1c8c9b6eb30471e3f4810d2a6baacc73 //node ID of 6388

>>> Removing node 2648131f1c8c9b6eb30471e3f4810d2a6baacc73 from cluster 127.0.0.1:6388

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

Reassign 6387's slots:

root@docker-desktop:/data# redis-cli --cluster reshard 127.0.0.1:6382

//move all of 6387's slots to 6383

How many slots do you want to move (from 1 to 16384)? 4086

What is the receiving node ID? b89c4cfb62fc06f0999f1da422e5cfb21332fd6f //ID of 6383

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 8dc43529e6341a369ac01cd5c5b087e344111e29 //ID of 6387

Source node #2: done

root@docker-desktop:/data# redis-cli --cluster check 127.0.0.1:6382

127.0.0.1:6382 (d76bb56b...) -> 1 keys | 2730 slots | 1 slaves.

127.0.0.1:6387 (8dc43529...) -> 0 keys | 0 slots | 0 slaves.

127.0.0.1:6383 (b89c4cfb...) -> 0 keys | 5462 slots | 1 slaves.

127.0.0.1:6386 (faa59d8e...) -> 4 keys | 8192 slots | 1 slaves.

Delete node 6387:

//delete 6387

redis-cli --cluster del-node 127.0.0.1:6387 8dc43529e6341a369ac01cd5c5b087e344111e29

//back to 3 masters and 3 slaves

root@docker-desktop:/data# redis-cli --cluster check 127.0.0.1:6382

127.0.0.1:6382 (d76bb56b...) -> 1 keys | 2730 slots | 1 slaves.

127.0.0.1:6383 (b89c4cfb...) -> 0 keys | 5462 slots | 1 slaves.

127.0.0.1:6386 (faa59d8e...) -> 4 keys | 8192 slots | 1 slaves.
