
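I. Enterprise keepalived High-Availability Project in Practice

1. Keepalived and VRRP overview

What is keepalived

keepalived is a service used in cluster management to keep a cluster highly available and to prevent a single point of failure.

How keepalived works

keepalived is built on VRRP, the Virtual Router Redundancy Protocol.

VRRP can be thought of as a protocol for high availability. N routers that provide the same function form one router group with one master and several backups. The master holds a VIP that serves external traffic (other machines on the router's LAN use this VIP as their default gateway) and periodically sends VRRP advertisements by multicast. When the backups stop receiving these advertisements, they consider the master down and elect a new master from among themselves according to VRRP priority. This keeps the router highly available.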
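
The election rule described above can be illustrated with a small shell sketch. This is not keepalived's implementation: the `elect_master` function and the `name priority state` input format are hypothetical, and ties between equal priorities are not handled. It only shows that the highest-priority node that is still advertising becomes master.

```shell
#!/bin/sh
# Hypothetical illustration of VRRP-style election: among the nodes that are
# still sending advertisements ("up"), the one with the highest priority
# becomes master. Input: one "name priority state" triple per line.
elect_master() {
    awk '$3 == "up" && ($2 + 0) > best { best = $2 + 0; name = $1 }
         END { if (name != "") print name }'
}

# All nodes healthy: the priority-100 node wins.
printf '%s\n' 'director1 100 up' 'director2 50 up' | elect_master

# director1 stops advertising: director2 takes over.
printf '%s\n' 'director1 100 down' 'director2 50 up' | elect_master
```

The first call prints `director1` and the second prints `director2`.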

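2. keepalived modules

keepalived has three main modules: core, check, and vrrp. The core module is the heart of keepalived; it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks and supports the common check methods. The vrrp module implements the VRRP protocol.

3. Split-brain

A keepalived BACKUP host switches to master when it stops receiving advertisements from the MASTER host. If the cause is a fault on the link between them, so that neither can receive the other's multicast advertisements while both nodes are in fact working normally, both nodes act as master and bind the virtual IP, with unpredictable consequences. This is split-brain.

Solutions

- Add more detection paths, such as a redundant heartbeat link (two NICs for health monitoring) or pinging the peer, to reduce the chance of split-brain. (This treats the symptom rather than the cause; it only raises the probability that the peer is detected correctly.)

- Monitor and alert on split-brain (email, SMS, or an on-call rota) so that a person can step in and arbitrate as soon as the problem occurs, limiting the damage. For example, Baidu's alert SMS distinguishes uplink from downlink messages: the alert is sent to the administrator's phone, and the administrator can reply with a number or a short string that is returned to the server, which then handles the fault automatically according to that instruction. This shortens the time to resolution.

- Fence the node ("shoot it in the head"): stop the master, then check the firewalls and the network connectivity between the machines.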
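
As one possible form of the monitoring mentioned above, a check could count how many nodes currently hold the VIP. The sketch below assumes that each node's `ip -o -4 addr show` output has already been collected into a file (for example over a separate management network); the helper names `has_vip` and `count_vip_holders` and the sample data are hypothetical.

```shell
#!/bin/sh
# Succeeds if the `ip -o -4 addr show` output in file $2 lists address $1.
has_vip() {
    awk -v vip="$1" '
        { for (i = 1; i < NF; i++)
              if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == vip) found = 1 } }
        END { exit !found }' "$2"
}

# Print how many of the given per-node files contain the VIP.
count_vip_holders() {
    vip=$1; shift
    holders=0
    for f in "$@"; do
        if has_vip "$vip" "$f"; then holders=$((holders + 1)); fi
    done
    echo "$holders"
}

# Canned sample: both directors list 192.168.242.200, i.e. split-brain.
tmp=$(mktemp -d)
printf '%s\n' '2: ens33    inet 192.168.242.147/24 brd 192.168.242.255 scope global ens33' \
              '2: ens33    inet 192.168.242.200/24 scope global secondary ens33' > "$tmp/master.txt"
printf '%s\n' '2: ens33    inet 192.168.242.148/24 brd 192.168.242.255 scope global ens33' \
              '2: ens33    inet 192.168.242.200/24 scope global secondary ens33' > "$tmp/backup.txt"
n=$(count_vip_holders 192.168.242.200 "$tmp/master.txt" "$tmp/backup.txt")
if [ "$n" -gt 1 ]; then
    echo "split-brain suspected: $n nodes hold the VIP"
fi
rm -rf "$tmp"
```

A count above 1 is the condition worth alerting on; what to do next (notify someone, fence a node) is left to the operator.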

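II. Layer-7 load balancing with Nginx + keepalived

Nginx implements load balancing through the upstream module.

Load-balancing algorithms supported by upstream

- Round robin (default): weight sets each server's share; the higher the weight, the more often the server is scheduled.

- ip_hash: provides session persistence by sending requests from the same client IP to the same backend server, which addresses session problems. Older nginx releases (before 1.3.1 and 1.2.2) did not allow weight together with ip_hash.

- fair: schedules according to page size and load time; requires the third-party upstream_fair module.

- url_hash: schedules by a hash of the request URL so that each URL always goes to the same server; requires the third-party url_hash module (nginx 1.7.2 and later also provide a built-in hash directive).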
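
To make the effect of weight concrete, here is a deliberately naive weighted round-robin in shell. It is an illustration only: nginx interleaves servers more smoothly than this, and the `schedule` function and the weights 3 and 1 are assumptions. The proportions, however, come out the same.

```shell
#!/bin/sh
# Naive weighted round-robin: a server with weight W appears W times in each
# scheduling cycle. (nginx itself interleaves more smoothly; this sketch only
# demonstrates the resulting proportions.)
# Usage: schedule REQUESTS < "server weight" lines
schedule() {
    awk -v n="$1" '
        { for (i = 0; i < $2; i++) slot[total++] = $1 }
        END { if (total == 0) exit; for (r = 0; r < n; r++) print slot[r % total] }'
}

# Two backends with assumed weights 3 and 1, eight requests.
printf '%s\n' '192.168.242.145 3' '192.168.242.146 1' |
    schedule 8 | sort | uniq -c
```

Over eight requests, 192.168.242.145 is chosen six times and 192.168.242.146 twice.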

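Lab setup:

Disable the firewall and SELinux on every machine and make sure the network is reachable.

MASTER: 192.168.242.147

BACKUP: 192.168.242.148

WEB-server1: 192.168.242.145

WEB-server2: 192.168.242.146

Client: 192.168.242.144

Approach: the client needs no setup. First install nginx on WEB-server1 and WEB-server2, create a test page on each, and check access from the client. Once that works, configure nginx load balancing on both MASTER and BACKUP and test from the client again. Once that works, deploy keepalived to make the directors highly available (the VIP is written directly in the keepalived configuration file).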

Procedure:

1. Create test pages on the two web servers

WEB-server1:

[root@localhost ~]# yum -y install nginx && systemctl start nginx && echo "rs-1" > /usr/share/nginx/html/index.html

WEB-server2:

[root@localhost ~]# yum -y install nginx && systemctl start nginx && echo "rs-2" > /usr/share/nginx/html/index.html

Test from the client:

[root@localhost ~]# curl 192.168.242.145

rs-1

[root@localhost ~]# curl 192.168.242.146

rs-2

2. Deploy load balancing on MASTER and BACKUP

MASTER:

[root@localhost ~]# yum -y install nginx &&systemctl start nginx

[root@localhost ~]# cd /etc/nginx/

[root@localhost nginx]# rm -rf nginx.conf # these two steps are optional; I prefer to start from the default config

[root@localhost nginx]# cp nginx.conf.default nginx.conf

[root@localhost nginx]# vim nginx.conf

.... inside the http block, add the load-balancing pool; the pool members are our WEB-servers

upstream web-1 {

server 192.168.242.145;

server 192.168.242.146;

}

...

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

# root html; # comment out the original site root

# index index.html index.htm;

proxy_pass http://web-1; # add the proxy rule

}

[root@localhost ~]# nginx -t

[root@localhost ~]# nginx -s reload

BACKUP:

[root@localhost ~]# yum -y install nginx &&systemctl start nginx

[root@localhost ~]# cd /etc/nginx/

[root@localhost nginx]# rm -rf nginx.conf

[root@localhost nginx]# cp nginx.conf.default nginx.conf

[root@localhost nginx]# vim nginx.conf

.... inside the http block, add the load-balancing pool; the pool members are our WEB-servers

upstream web-2 {

server 192.168.242.145;

server 192.168.242.146;

}

...

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

# root html; # comment out the original site root

# index index.html index.htm;

proxy_pass http://web-2; # add the proxy rule

}

[root@localhost ~]# nginx -t

[root@localhost ~]# nginx -s reload

Test from the client:

[root@localhost ~]# curl 192.168.242.147

rs-1

[root@localhost ~]# curl 192.168.242.147

rs-2

[root@localhost ~]# curl 192.168.242.148

rs-1

[root@localhost ~]# curl 192.168.242.148

rs-2

3. Deploy keepalived

MASTER:

[root@localhost ~]# yum -y install keepalived

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.bak

[root@localhost keepalived]# vim keepalived.conf

Delete everything in the configuration file and add the following by hand:

! Configuration File for keepalived

global_defs {

router_id director1 # change to director2 on the backup

}

vrrp_instance VI_1 {

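state MASTER # whether this node is master or backup

interface ens33 # interface the VIP is bound to

virtual_router_id 80 # must be identical on every director in the cluster

priority 100 # change to 50 on the backup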

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.242.200/24

}

}

On the BACKUP machine do the same, changing only part of the configuration file:

[root@localhost keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

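router_id director2

}

vrrp_instance VI_1 { # instance name; must be the same on both nodes

state BACKUP # this node is the backup

interface ens33 # interface used for VRRP advertisements

nopreempt # set on the backup: do not preempt

virtual_router_id 80 # virtual router ID; must match on master and backup

priority 50 # 50 on the backup

advert_int 1 # advertisement interval in seconds

authentication { # password authentication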

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.242.200/24

}

}
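
Since the two files differ in only a few fields, the BACKUP file could be derived from the MASTER file instead of being typed twice. The following is a minimal sketch under that assumption; the `to_backup_conf` helper is hypothetical, the trimmed config in the here-document is a sample, and `nopreempt` would still have to be added by hand.

```shell
#!/bin/sh
# Derive a BACKUP configuration from a MASTER one by rewriting the three
# fields that differ. Reads the master config on stdin, writes to stdout.
# "director2" and priority 50 are the values used for the second node here.
to_backup_conf() {
    awk '
        $1 == "state"     { sub(/MASTER/, "BACKUP") }
        $1 == "priority"  { sub(/[0-9]+/, "50") }
        $1 == "router_id" { sub(/router_id[ \t]+[^ \t]+/, "router_id director2") }
        { print }'
}

# Trimmed sample of the MASTER file; every other line passes through unchanged.
to_backup_conf <<'EOF'
global_defs {
    router_id director1
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 80
    priority 100
}
EOF
```

Matching on the first word of each line keeps `virtual_router_id` untouched even though it contains the string `router_id`.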

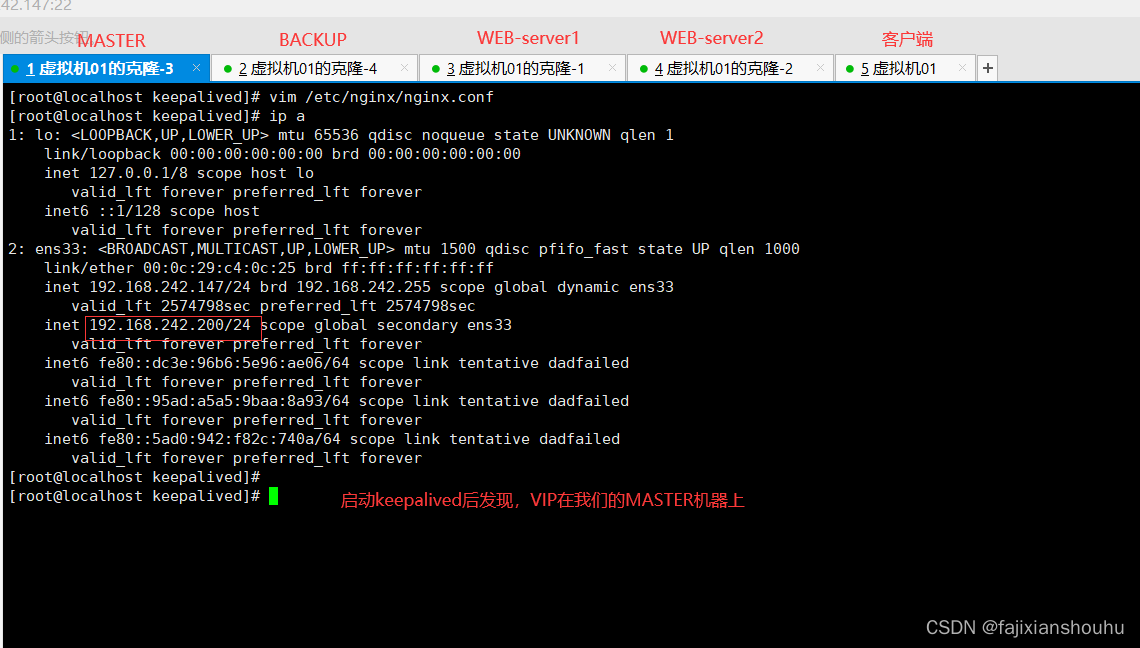

Then start keepalived on both the MASTER and BACKUP servers:

[root@localhost ~]# systemctl start keepalived

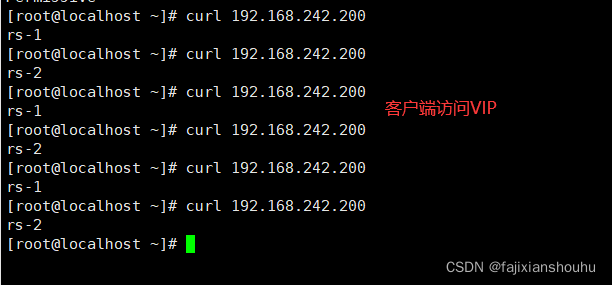

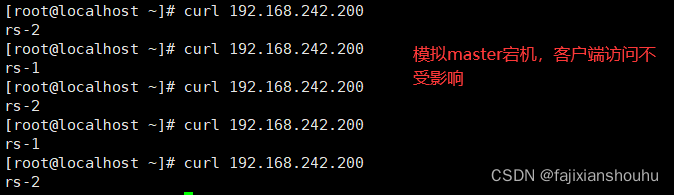

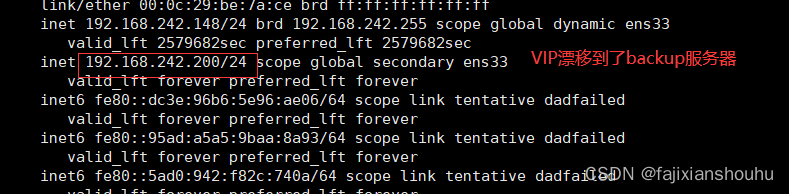

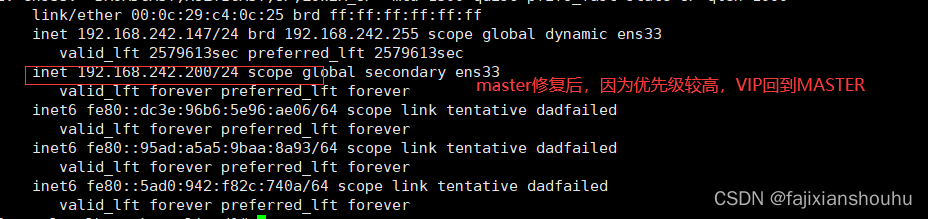

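Stop keepalived on the MASTER machine, then test again from the client.

Restart keepalived on the MASTER machine, then test again from the client.

Summary:

- keepalived handles heartbeat failure, that is, the loss of the master node.

- It does not handle an Nginx service failure: the heartbeat confirms only that the keepalived master node is alive, not that the nginx service is running properly.

Health-checking the Nginx director

Use keepalived's script feature: create a script and have keepalived run it at a fixed interval to health-check nginx.

Approach:

Have keepalived run an external script at a fixed interval; when Nginx has failed, the script stops keepalived on the local machine.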

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# vim a.sh

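#!/bin/bash

# Probe nginx over HTTP; act only if the request fails.

/usr/bin/curl -I http://localhost &>/dev/null

if [ $? -ne 0 ];then

# Count running nginx processes.

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# nginx is not running: try to start it once.

systemctl start nginx

sleep 5

# Re-check nginx; if it is still down, stop keepalived so the VIP moves.

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

systemctl stop keepalived

fi

fi

fi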

[root@localhost keepalived]# chmod a+x a.sh

[root@localhost keepalived]# vim keepalived.conf # make keepalived use the script

! Configuration File for keepalived

global_defs {

router_id director1

}

vrrp_script a { # health-check script definition

script "/etc/keepalived/a.sh" # path to the script

interval 5 # check interval in seconds

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.242.200/24

}

track_script { # reference the script defined above

a

}

}
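
As an alternative to stopping keepalived from inside the check script, keepalived's `vrrp_script` block also accepts a `weight` option: with a negative weight, a failing script lowers the instance priority by that amount. The sketch below only works through the arithmetic. The weight of -60 and the `effective_priority` helper are assumptions for illustration and are not part of the setup above. As far as I understand, this approach relies on preemption, so it would not combine with the `nopreempt` setting used on the BACKUP.

```shell
#!/bin/sh
# Effective VRRP priority when a tracked script with a negative weight fails.
# Arguments: base priority, script weight (negative), script exit status.
effective_priority() {
    base=$1; weight=$2; status=$3
    if [ "$status" -ne 0 ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

master=$(effective_priority 100 -60 1)   # nginx check failed on the master
backup=$(effective_priority 50 -60 0)    # nginx check passed on the backup
if [ "$master" -lt "$backup" ]; then
    echo "backup ($backup) outranks master ($master): VIP should move"
fi
```

With the priorities used in this lab (100 and 50), any weight whose magnitude is greater than 50 makes the failed master rank below the backup.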

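Note: nginx must be started before keepalived.

III. LVS_Director+Keepalived

Client: 192.168.242.144

web-1: 192.168.242.145

web-2: 192.168.242.146

LVS+keepalived_master: 192.168.242.147

LVS+keepalived_backup: 192.168.242.148

Approach:

The client needs no setup and is used only for testing. Use nginx on web-1 and web-2 to create a test page on each and check access from the client. Once that works, deploy LVS on LVS+keepalived_master and LVS+keepalived_backup. On web-1 and web-2, bind the LVS VIP to the lo interface, configure them to ignore ARP requests for the VIP (arp_ignore), and to use the exactly matching IP when replying (arp_announce). Then test the LVS VIP from the client. Once that works, deploy keepalived.

Procedure:

1. Create test pages on web-1 and web-2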

web-1:

[root@localhost ~]# yum -y install nginx &&systemctl start nginx &&echo "web-1" > /usr/share/nginx/html/index.html

web-2:

[root@localhost ~]# yum -y install nginx && systemctl start nginx && echo "web-2" > /usr/share/nginx/html/index.html

Test from the client:

[root@localhost ~]# curl 192.168.242.145

web-1

[root@localhost ~]# curl 192.168.242.146

web-2

2. Deploy LVS

LVS+keepalived_master:

[root@localhost ~]# yum -y install ipvsadm

[root@localhost ~]# ip addr add dev ens33 192.168.242.111/32 # set the VIP

[root@localhost ~]# ipvsadm -S > /etc/sysconfig/ipvsadm # create the rules file that the ipvsadm service expects

[root@localhost ~]# systemctl start ipvsadm

[root@localhost ~]# ipvsadm -A -t 192.168.242.111:80 -s rr

[root@localhost ~]# ipvsadm -a -t 192.168.242.111:80 -r 192.168.242.145 -g

[root@localhost ~]# ipvsadm -a -t 192.168.242.111:80 -r 192.168.242.146 -g

LVS+keepalived_backup: same steps

[root@localhost ~]# yum -y install ipvsadm

[root@localhost ~]# ip addr add dev ens33 192.168.242.111/32 # set the VIP

[root@localhost ~]# ipvsadm -S > /etc/sysconfig/ipvsadm # create the rules file that the ipvsadm service expects

[root@localhost ~]# systemctl start ipvsadm

[root@localhost ~]# ipvsadm -A -t 192.168.242.111:80 -s rr

[root@localhost ~]# ipvsadm -a -t 192.168.242.111:80 -r 192.168.242.145 -g

[root@localhost ~]# ipvsadm -a -t 192.168.242.111:80 -r 192.168.242.146 -g
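
Because the same rules are typed on both directors, they could be generated from a list of real servers. The sketch below only prints the commands (a dry run) so it can be reviewed before anything is executed; the `lvs_dr_rules` helper is hypothetical. It also prints a final save, on the assumption that the ipvsadm service restores `/etc/sysconfig/ipvsadm` when it starts.

```shell
#!/bin/sh
# Print (not execute) the ipvsadm commands for a DR-mode virtual service.
# Usage: lvs_dr_rules VIP PORT REAL_SERVER...
lvs_dr_rules() {
    vip=$1; port=$2; shift 2
    # -A adds the virtual service, -s rr selects round-robin scheduling.
    echo "ipvsadm -A -t ${vip}:${port} -s rr"
    for rs in "$@"; do
        # -a adds a real server, -g selects direct routing (gatewaying).
        echo "ipvsadm -a -t ${vip}:${port} -r ${rs} -g"
    done
    # Saving again once the rules exist keeps them in the rules file.
    echo "ipvsadm -S -n > /etc/sysconfig/ipvsadm"
}

lvs_dr_rules 192.168.242.111 80 192.168.242.145 192.168.242.146
```

Piping the output to `sh` on a director would apply the rules; reviewing it first is the point of the dry run.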

web-1:

[root@localhost ~]# ip addr add dev lo 192.168.242.111/32

[root@localhost ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@localhost ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@localhost ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@localhost ~]# sysctl -p

web-2:

[root@localhost ~]# ip addr add dev lo 192.168.242.111/32

[root@localhost ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@localhost ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@localhost ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@localhost ~]# sysctl -p
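
Values written with `echo` under `/proc` are lost at reboot, and `sysctl -p` only reloads `/etc/sysctl.conf`. One way to keep the two ARP settings is to render them as sysctl lines and store them in a drop-in file. This is a minimal sketch; the `render_rs_sysctl` helper and the file name in the usage note are assumptions.

```shell
#!/bin/sh
# Render the ARP-related sysctl settings for an LVS-DR real server that
# holds the VIP on lo.
# arp_ignore=1: answer ARP only for addresses configured on the receiving NIC.
# arp_announce=2: always use the best local source address in ARP requests.
render_rs_sysctl() {
    echo "net.ipv4.conf.all.arp_ignore = 1"
    echo "net.ipv4.conf.all.arp_announce = 2"
}

render_rs_sysctl
```

On a real server the output could be redirected to a file such as `/etc/sysctl.d/90-lvs-dr.conf` (a hypothetical name) and loaded with `sysctl --system`.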

Test from the client:

[root@localhost ~]# curl 192.168.242.111

web-1

[root@localhost ~]# curl 192.168.242.111

web-2
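
Two requests show the alternation, but a tally over more requests makes the round-robin split easier to confirm. The `tally` helper below is a hypothetical convenience; a canned sample stands in for the curl calls so the snippet runs anywhere.

```shell
#!/bin/sh
# Tally response bodies read from stdin, one per line, as "body count".
tally() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Against the lab VIP this could be fed from curl, for example:
#   for i in 1 2 3 4; do curl -s 192.168.242.111; done | tally
# Here a canned sample stands in for the network calls.
printf '%s\n' web-1 web-2 web-1 web-2 | tally
```

With the sample input this prints `web-1 2` and `web-2 2`, one per line.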

3. Deploy keepalived

LVS+keepalived_master:

[root@localhost ~]# yum -y install keepalived

[root@localhost ~]# cd /etc/keepalived

[root@localhost keepalived]# rm -rf keepalived.conf

[root@localhost keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs-keepalived-master # change to lvs-keepalived-backup on the backup

}

vrrp_instance VI_1 {

state MASTER

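interface ens33 # interface the VIP is bound to

virtual_router_id 80 # VRID; identical on master and backup within one cluster

priority 100 # priority of this node; 50 on the backup

advert_int 1 # advertisement interval; the default is 1s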

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.242.111/24

}

}

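virtual_server 192.168.242.111 80 { # LVS configuration

delay_loop 3 # health-check polling interval in seconds

lb_algo rr # LVS scheduling algorithm

lb_kind DR # LVS forwarding mode (direct routing)

nat_mask 255.255.255.0

protocol TCP # protocol of the virtual service

real_server 192.168.242.145 80 {

weight 1

inhibit_on_failure # on failure, set this server's weight to 0 instead of removing it from IPVS

TCP_CHECK { # health check

connect_port 80 # port to check

connect_timeout 3 # connection timeout in seconds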

}

}

real_server 192.168.242.146 80 {

weight 1

inhibit_on_failure

TCP_CHECK {

connect_timeout 3

connect_port 80

}

}

}

LVS+keepalived_backup:

[root@localhost ~]# yum -y install keepalived

[root@localhost ~]# cd /etc/keepalived

[root@localhost keepalived]# rm -rf keepalived.conf

[root@localhost keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs-keepalived-backup

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

nopreempt # do not preempt

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.242.111/24

}

}

virtual_server 192.168.242.111 80 {

delay_loop 3

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 20 # session persistence in seconds; not set on the master above, so keep both nodes consistent

protocol TCP

real_server 192.168.242.145 80 {

weight 1

inhibit_on_failure

TCP_CHECK {

connect_port 80

connect_timeout 3

}

}

real_server 192.168.242.146 80 {

weight 1

inhibit_on_failure

TCP_CHECK {

connect_timeout 3

connect_port 80

}

}

}
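
With `inhibit_on_failure`, a failed real server stays in the IPVS table with weight 0, so it can be spotted in `ipvsadm -Ln` output. The sketch below filters such rows; the `inhibited_servers` helper is hypothetical and the listing in the here-document is a hand-written sample, not captured output.

```shell
#!/bin/sh
# List real servers whose weight is 0 in `ipvsadm -Ln` output (read on stdin).
# Real-server rows start with "->" followed by ADDRESS:PORT, the forwarding
# method, and the weight; the header row also starts with "->" and is skipped.
inhibited_servers() {
    awk '$1 == "->" && $2 != "RemoteAddress:Port" && $4 == 0 { print $2 }'
}

# Hand-written sample in the shape of `ipvsadm -Ln` output.
inhibited_servers <<'EOF'
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.242.111:80 rr
  -> 192.168.242.145:80           Route   1      0          0
  -> 192.168.242.146:80           Route   0      0          0
EOF
```

For the sample above this prints `192.168.242.146:80`. On a director the function could be fed directly with `ipvsadm -Ln | inhibited_servers`.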

Then start keepalived on both machines and test the keepalived VIP from the client.

Test from the client:

[root@localhost ~]# curl 192.168.242.111

web-1

[root@localhost ~]# curl 192.168.242.111

web-2

LVS+keepalived_master:

[root@localhost ~]# ip a # three IPs are visible on the ens33 interface

....

192.168.242.147/24

...

192.168.242.111/32 # LVS VIP

...

192.168.242.111/24 # keepalived VIP

Then stop keepalived on the keepalived-master server and test again from the client:

[root@localhost ~]# curl 192.168.242.111

web-1

[root@localhost ~]# curl 192.168.242.111

web-2

Client access is unaffected, and the VIP on keepalived-master moves to keepalived-backup. To verify:

LVS+keepalived_master:

[root@localhost ~]# ip a # the keepalived VIP is gone

......

192.168.242.147/24

...

192.168.242.111/32

LVS+keepalived_backup:

[root@localhost ~]# ip a

...

192.168.242.148/24

...

192.168.242.111/32

...

192.168.242.111/24 # the keepalived VIP has moved here
