Deploying Kubernetes from Binaries

Deployment environment

node1:192.168.11.25

node2:192.168.11.26

node3:192.168.11.27

I. Preparation

1. Set the hostname (run the matching command on each of the three machines)

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname node3

2. Edit the hosts file to map each hostname to its IP

vim /etc/hosts

192.168.11.25 node1 node1

192.168.11.26 node2 node2

192.168.11.27 node3 node3

3. Add the k8s and docker accounts

useradd -m k8s

Set a password for the k8s account

sh -c 'echo 123456 | passwd k8s --stdin'

gpasswd -a k8s wheel

Verify: run visudo and make sure the %wheel NOPASSWD line is not commented out, then check with grep '%wheel.*NOPASSWD: ALL' /etc/sudoers

4. On every machine, add a docker account, add the k8s account to the docker group, and configure the dockerd parameters

useradd -m docker

gpasswd -a k8s docker

mkdir -p /etc/docker/

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"],

"max-concurrent-downloads": 20

}

5. Passwordless SSH login to the other nodes

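From this point on, commands are run on node1, which distributes files and runs commands on the other nodes remotely.

Allow node1 to log in to the k8s and root accounts on all nodes without a password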

ssh-keygen -t rsa

ssh-copy-id root@node1

ssh-copy-id root@node2

ssh-copy-id root@node3

ssh-copy-id k8s@node1

ssh-copy-id k8s@node2

ssh-copy-id k8s@node3

6. Add the binary directory /opt/k8s/bin to the PATH variable (run on every node)

sh -c "echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>/root/.bashrc"

echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>~/.bashrc

7. Install the dependency packages (run on every node)

yum install -y epel-release conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

8. Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

iptables -P FORWARD ACCEPT

9. Disable the swap partition

swapoff -a

Keep swap disabled after a reboot

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
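The sed expression above comments out every fstab line that contains the word swap with a space on each side. A minimal sketch for previewing its effect on a scratch copy before touching the real file follows; the two sample entries are made up for illustration and are not taken from a real system.

```shell
# Preview the swap-disabling sed expression on a scratch file.
# The fstab entries below are illustrative only.
fstab_copy=$(mktemp)
cat > "$fstab_copy" <<'EOF'
/dev/mapper/centos-root / xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

# Same expression as above, pointed at the copy instead of /etc/fstab.
sed -i '/ swap / s/^\(.*\)$/#\1/g' "$fstab_copy"
cat "$fstab_copy"
```

Running the expression a second time only prepends another # to the already commented line, so repeating the step is harmless.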

10. Disable SELinux

setenforce 0

Keep SELinux disabled after a reboot

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

11. Stop dnsmasq

service dnsmasq stop

systemctl disable dnsmasq

12. Set the kernel parameters

vim /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

sysctl -p /etc/sysctl.d/kubernetes.conf

mount -t cgroup -o cpu,cpuacct none /sys/fs/cgroup/cpu,cpuacct

13. Load the kernel modules

vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

14. Set the system time zone

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

15. Create the required directories

mkdir -p /opt/k8s/bin

chown -R k8s /opt/k8s

mkdir -p /etc/kubernetes/cert

chown -R k8s /etc/kubernetes

mkdir -p /etc/etcd/cert

chown -R k8s /etc/etcd/cert

mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd

16. Check that the kernel and its modules are suitable for running docker (Linux only)

curl https://raw.githubusercontent.com/docker/docker/master/contrib/check-config.sh > check-config.sh

bash ./check-config.sh

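Note: if raw.githubusercontent.com cannot be resolved from your network, add it to the hosts file before running curl, or go through a proxy. The IP below is the one used when this guide was written and may have changed.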

echo "199.232.68.133 raw.githubusercontent.com" >> /etc/hosts
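Appending to /etc/hosts with a plain echo adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent alternative follows; the function name add_host_entry is hypothetical, and the example runs against a scratch file rather than the real /etc/hosts.

```shell
# add_host_entry <ip> <hostname> [hosts_file]
# Appends "<ip> <hostname>" unless a non-comment line already maps that hostname.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
    echo "${ip} ${name}" >> "$file"
  fi
}

# Try it on a scratch file; the second call must not add a second line.
hosts_copy=$(mktemp)
add_host_entry 199.232.68.133 raw.githubusercontent.com "$hosts_copy"
add_host_entry 199.232.68.133 raw.githubusercontent.com "$hosts_copy"
cat "$hosts_copy"
```

The awk test compares whole fields, so a hostname that merely contains the requested name as a substring does not count as a match.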

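17. Configure the cluster environment variables

These global variables are used throughout the rest of the deployment; adjust them to match your own network.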

vim path.sh

#!/usr/bin/bash

# Encryption key required by the EncryptionConfig
ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# Preferably use currently unused ranges for the service and Pod networks

# Service network: not routable before deployment, routable inside the cluster afterwards (guaranteed by kube-proxy and ipvs)
SERVICE_CIDR="10.254.0.0/16"

# Pod network, a /16 is recommended: not routable before deployment, routable inside the cluster afterwards (guaranteed by flanneld)
CLUSTER_CIDR="172.30.0.0/16"

# Service port range (NodePort range)
export NODE_PORT_RANGE="8400-9000"

# IPs of the cluster machines
export NODE_IPS=(192.168.11.25 192.168.11.26 192.168.11.27)

# Hostnames matching the IPs above, in the same order
export NODE_NAMES=(node1 node2 node3)

# kube-apiserver VIP (the IP published by the HA component keepalived)
export MASTER_VIP=192.168.11.24

# kube-apiserver address behind the VIP (the HA component haproxy listens on port 8443)
export KUBE_APISERVER="https://${MASTER_VIP}:8443"

# Name of the network interface that holds the VIP on the HA nodes
export VIP_IF="eno16777736"

# etcd client endpoints
export ETCD_ENDPOINTS="https://192.168.11.25:2379,https://192.168.11.26:2379,https://192.168.11.27:2379"

# etcd peer IPs and ports
export ETCD_NODES="node1=https://192.168.11.25:2380,node2=https://192.168.11.26:2380,node3=https://192.168.11.27:2380"

# etcd key prefix for the flanneld network configuration
export FLANNEL_ETCD_PREFIX="/kubernetes/network"

# kubernetes service IP (normally the first IP in SERVICE_CIDR)
export CLUSTER_KUBERNETES_SVC_IP="10.254.0.1"

# Cluster DNS service IP (pre-allocated from SERVICE_CIDR)
export CLUSTER_DNS_SVC_IP="10.254.0.2"

# Cluster DNS domain
export CLUSTER_DNS_DOMAIN="cluster.local."

# Add the binary directory /opt/k8s/bin to PATH
export PATH=/opt/k8s/bin:$PATH

Save the file, then load the variables into the current shell:

source path.sh
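ETCD_ENDPOINTS and ETCD_NODES repeat the addresses already listed in NODE_IPS and NODE_NAMES, so the lists can drift apart when a node changes. As a sketch, and assuming bash is the shell that sources the file, both strings can be derived from the arrays instead. The helper arrays endpoints and peers are names introduced here for illustration.

```shell
#!/usr/bin/env bash
# Derive the etcd address lists from the node arrays (sketch, bash only).
NODE_IPS=(192.168.11.25 192.168.11.26 192.168.11.27)
NODE_NAMES=(node1 node2 node3)

endpoints=()   # client URLs, port 2379
peers=()       # name=peer-URL pairs, port 2380
for i in "${!NODE_IPS[@]}"; do
  endpoints+=("https://${NODE_IPS[i]}:2379")
  peers+=("${NODE_NAMES[i]}=https://${NODE_IPS[i]}:2380")
done

# Join the array elements with commas inside a subshell so IFS is not changed globally.
ETCD_ENDPOINTS=$(IFS=,; echo "${endpoints[*]}")
ETCD_NODES=$(IFS=,; echo "${peers[*]}")
export ETCD_ENDPOINTS ETCD_NODES

echo "$ETCD_ENDPOINTS"
echo "$ETCD_NODES"
```

Note that bash does not pass arrays to child processes, so NODE_IPS and NODE_NAMES are only visible in a shell that has sourced the file itself, even though they are written with export.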

Use the following loop to copy the environment script to every node

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp path.sh k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

II. Create the CA certificate and key

All certificates are created with cfssl, CloudFlare's PKI toolkit.

1. Install the cfssl toolkit

mkdir -p /opt/k8s/cert && chown -R k8s /opt/k8s && cd /opt/k8s

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

mv cfssl_linux-amd64 /opt/k8s/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssljson_linux-amd64 /opt/k8s/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /opt/k8s/bin/cfssl-certinfo

chmod +x /opt/k8s/bin/*

export PATH=/opt/k8s/bin:$PATH

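2. Create the root certificate (CA)

The CA certificate is shared by all nodes in the cluster. Only one is needed, and every certificate created later is signed by it.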

cd /opt/k8s/bin

3. Create the configuration file

vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

4. Create the certificate signing request file

vim ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

5. Generate the CA certificate and private key

Run this in the /opt/k8s/bin directory

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

List the generated files: ls ca*

6. Distribute the certificate files

Copy the generated CA certificate, key, and configuration file to /etc/kubernetes/cert on every node

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert && chown -R k8s /etc/kubernetes"

scp ca*.pem ca-config.json k8s@${node_ip}:/etc/kubernetes/cert

done

If the loop above is saved as a script, for example scp2.sh, run it with: source scp2.sh

III. Deploy the kubectl command-line tool

1. Download and distribute the kubectl binary

wget https://dl.k8s.io/v1.10.4/kubernetes-client-linux-amd64.tar.gz

tar -zxf kubernetes-client-linux-amd64.tar.gz

Distribute the kubectl binary

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubernetes/client/bin/kubectl k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

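done

2. Create the admin certificate and private key

kubectl talks to the apiserver over its secure HTTPS port, and the apiserver authenticates and authorizes the certificate that kubectl presents.

As the cluster's management tool, kubectl needs the highest privileges, so an admin certificate with full permissions is created here. The O field system:masters puts the certificate in the group that the built-in cluster-admin ClusterRoleBinding grants full access to.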

vim admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "4Paradigm"

}

]

}

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem -ca-key=/etc/kubernetes/cert/ca-key.pem -config=/etc/kubernetes/cert/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

List the certificate and private key: ls admin*

3. Create the kubeconfig file

# Set the cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/cert/ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=kubectl.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kubectl.kubeconfig

# Set the context parameters

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kubectl.kubeconfig

# Set the default context

kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig

4. Distribute the kubeconfig file

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig k8s@${node_ip}:~/.kube/config

ssh root@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig root@${node_ip}:~/.kube/config

done

IV. Deploy the etcd cluster

1. Download and distribute the etcd binaries

Download the release package (this guide uses v3.3.7)

wget https://github.com/coreos/etcd/releases/download/v3.3.7/etcd-v3.3.7-linux-amd64.tar.gz

tar zxf etcd-v3.3.7-linux-amd64.tar.gz

Distribute the binaries to all nodes in the cluster

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-v3.3.7-linux-amd64/etcd* k8s@${node_ip}:/opt/k8s/bin

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

2. Create the certificate signing request

vim etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.11.25",

"192.168.11.26",

"192.168.11.27"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem -ca-key=/etc/kubernetes/cert/ca-key.pem -config=/etc/kubernetes/cert/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

List the generated certificate and private key: ls etcd*

Distribute the generated certificate and private key

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/etcd/cert && chown -R k8s /etc/etcd/cert"

scp etcd*.pem k8s@${node_ip}:/etc/etcd/cert/

done

3. Create the etcd systemd unit template file

vim etcd.service.template

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

User=k8s

Type=notify

WorkingDirectory=/var/lib/etcd/

ExecStart=/opt/k8s/bin/etcd \

--data-dir=/var/lib/etcd \

--name=##NODE_NAME## \

--cert-file=/etc/etcd/cert/etcd.pem \

--key-file=/etc/etcd/cert/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/cert/ca.pem \

--peer-cert-file=/etc/etcd/cert/etcd.pem \

--peer-key-file=/etc/etcd/cert/etcd-key.pem \

--peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \

--peer-client-cert-auth \

--client-cert-auth \

--listen-peer-urls=https://##NODE_IP##:2380 \

--initial-advertise-peer-urls=https://##NODE_IP##:2380 \

--listen-client-urls=https://##NODE_IP##:2379,https://127.0.0.1:2379 \

--advertise-client-urls=https://##NODE_IP##:2379 \

--initial-cluster-token=etcd-cluster-0 \

--initial-cluster=node1=https://192.168.11.25:2380,node2=https://192.168.11.26:2380,node3=https://192.168.11.27:2380 \

--initial-cluster-state=new

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Create an etcd systemd unit file for each node

for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" etcd.service.template > etcd-${NODE_IPS[i]}.service

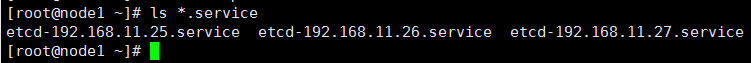

done查看创建的文件:ls *.service
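The rendering loop replaces the ##NODE_NAME## and ##NODE_IP## markers in the template once per node. The sketch below shows the same technique on a shortened stand-in template (two lines, not the real etcd.service.template), iterating over the array indices instead of a hard-coded count and checking afterwards that no marker was left behind. The file names under the temporary directory are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: render one file per node from a template with ##...## markers.
NODE_IPS=(192.168.11.25 192.168.11.26 192.168.11.27)
NODE_NAMES=(node1 node2 node3)

workdir=$(mktemp -d)
# Shortened stand-in for etcd.service.template.
cat > "$workdir/demo.service.template" <<'EOF'
--name=##NODE_NAME## \
--listen-client-urls=https://##NODE_IP##:2379,https://127.0.0.1:2379 \
EOF

# Iterate over the array indices so the loop follows the node list.
for i in "${!NODE_IPS[@]}"; do
  sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/g" \
      -e "s/##NODE_IP##/${NODE_IPS[i]}/g" \
      "$workdir/demo.service.template" > "$workdir/demo-${NODE_IPS[i]}.service"
done

# Report any rendered file that still contains a marker.
if grep -l '##' "$workdir"/demo-*.service; then
  echo "unreplaced placeholders found" >&2
fi
```

The g flag on each sed expression is a defensive choice: it also covers a template line that mentions the same marker more than once.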

Distribute the generated systemd unit files

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd"

scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service

done

4. Start the etcd service remotely over ssh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd &"

done

5. Check that the service started

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status etcd|grep Active"

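done

The service started successfully only if its state is active (running).

If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u etcd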
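Rerunning the start loop by hand can be replaced by polling until the service reports active. A minimal sketch of a generic retry helper follows; the function name retry and the attempt and delay values are assumptions chosen for illustration, not part of the original procedure.

```shell
# retry <attempts> <delay_seconds> <command...>
# Runs the command until it succeeds or the attempts are used up.
retry() {
  local attempts=$1 delay=$2 n=1
  shift 2
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 1
}

# Self-contained demonstration with a command that always succeeds.
retry 3 0 true && echo "service is up"

# Hypothetical use against a node (not run here):
#   retry 10 3 ssh root@192.168.11.25 systemctl is-active --quiet etcd
```

systemctl is-active --quiet returns a zero exit status only while the unit is active, which makes it a convenient condition for the helper.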

6. Verify the health of the cluster

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ETCDCTL_API=3 /opt/k8s/bin/etcdctl \

--endpoints=https://${node_ip}:2379 \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem endpoint health;

done

The cluster is healthy when every endpoint is reported as healthy.

V. Deploy the flannel network

1. Download and distribute the flanneld binaries

mkdir flannel

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

tar -zxf flannel-v0.10.0-linux-amd64.tar.gz -C flannel

Distribute the flanneld files

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flannel/{flanneld,mk-docker-opts.sh} k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

2. Create the flannel certificate and private key

vim flanneld-csr.json

{

"CN": "flanneld",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

List the generated certificate and private key: ls flanneld*pem

Distribute the generated certificate and private key to all nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/flanneld/cert && chown -R k8s /etc/flanneld"

scp flanneld*.pem k8s@${node_ip}:/etc/flanneld/cert

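done

3. Write the cluster Pod network configuration to etcd

flanneld v0.10.0 stores its configuration through the etcd v2 API, so the etcdctl commands in this section use the v2 flags (--ca-file, --cert-file, --key-file) and must not be run with ETCDCTL_API=3.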

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

set ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'

4. Create the flanneld systemd unit file

export IFACE=eno16777736

vim flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/opt/k8s/bin/flanneld \

-etcd-cafile=/etc/kubernetes/cert/ca.pem \

-etcd-certfile=/etc/flanneld/cert/flanneld.pem \

-etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \

-etcd-endpoints=https://192.168.11.25:2379,https://192.168.11.26:2379,https://192.168.11.27:2379 \

-etcd-prefix=/kubernetes/network \

-iface=eno16777736

ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

5. Distribute the flanneld systemd unit file to all nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flanneld.service root@${node_ip}:/etc/systemd/system/

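done

6. Start the flanneld service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld"

done

Check that the service is running

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status flanneld|grep Active"

done

The service started successfully only if its state is active (running).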

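If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u flanneld

7. Check the Pod network configuration that flanneld reads from etcd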

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/config

8. List the Pod subnets (/24) that have been allocated

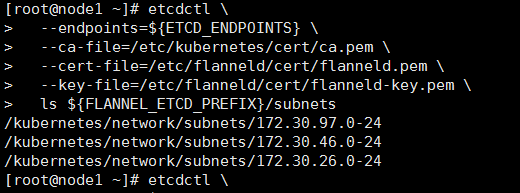

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

ls ${FLANNEL_ETCD_PREFIX}/subnets

The output looks like the figure below

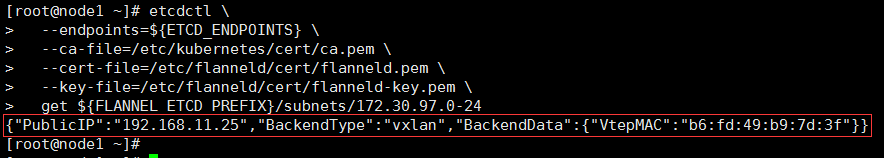

9. Look up the node IP and flannel interface address for a given Pod subnet

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

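get ${FLANNEL_ETCD_PREFIX}/subnets/172.30.81.0-24

Note: replace the value after subnets/ with one of the Pod subnets listed in the previous step. In the key name, the / of the CIDR is written as -.

The result is shown in the figure below

10. Verify that the nodes can reach each other over the Pod network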

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show flannel.1|grep -w inet"

done

The query result is shown in the figure below

11. Ping every flannel interface IP from each node to make sure they are all reachable

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "ping -c 1 172.30.97.0"

ssh ${node_ip} "ping -c 1 172.30.46.0"

ssh ${node_ip} "ping -c 1 172.30.26.0"

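done

Note: replace these addresses with the flannel.1 addresses returned by the previous step

VI. Deploy the master nodes

1. Download the binaries (this guide uses v1.10.4)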

wget https://dl.k8s.io/v1.10.4/kubernetes-server-linux-amd64.tar.gz

tar zxf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

tar zxf kubernetes-src.tar.gz

Copy the binaries to all master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp server/bin/* k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

VII. Deploy haproxy (high-availability components)

1. Install the packages on all three nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "yum install -y keepalived haproxy"

done

2. Create and distribute the haproxy configuration file

vim haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /var/run/haproxy-admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

nbproc 1

defaults

log global

timeout connect 5000

timeout client 10m

timeout server 10m

listen admin_stats

bind 0.0.0.0:10080

mode http

log 127.0.0.1 local0 err

stats refresh 30s

stats uri /status

stats realm welcome login\ Haproxy

stats auth admin:123456

stats hide-version

stats admin if TRUE

listen kube-master

bind 0.0.0.0:8443

mode tcp

option tcplog

balance source

server 192.168.11.25 192.168.11.25:6443 check inter 2000 fall 2 rise 2 weight 1

server 192.168.11.26 192.168.11.26:6443 check inter 2000 fall 2 rise 2 weight 1

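server 192.168.11.27 192.168.11.27:6443 check inter 2000 fall 2 rise 2 weight 1

Distribute haproxy.cfg to all nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp haproxy.cfg root@${node_ip}:/etc/haproxy/

done

3. Start the haproxy service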

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl restart haproxy"

done

4. Check the haproxy service status

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status haproxy|grep Active"

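done

The service started successfully only if its state is active (running).

If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u haproxy

5. Check that haproxy is listening on port 8443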

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "netstat -lnpt|grep haproxy"

done

The result is shown in the figure below

6. Create the keepalived configuration files

Role assignment:

master: 192.168.11.25

backup: 192.168.11.26 and 192.168.11.27

master configuration file:

vim keepalived-master.conf

global_defs {

router_id lb-master-105

}

vrrp_script check-haproxy {

script "killall -0 haproxy"

interval 5

weight -30

}

vrrp_instance VI-kube-master {

state MASTER

priority 120

dont_track_primary

interface eno16777736

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

192.168.11.24

}

}

backup configuration file:

vim keepalived-backup.conf

global_defs {

router_id lb-backup-105

}

vrrp_script check-haproxy {

script "killall -0 haproxy"

interval 5

weight -30

}

vrrp_instance VI-kube-master {

state BACKUP

priority 110

dont_track_primary

interface eno16777736

virtual_router_id 68

advert_int 3

track_script {

check-haproxy

}

virtual_ipaddress {

192.168.11.24

}

}

7. Distribute the keepalived configuration files

master configuration file:

scp keepalived-master.conf root@192.168.11.25:/etc/keepalived/keepalived.conf

backup configuration file:

scp keepalived-backup.conf root@192.168.11.26:/etc/keepalived/keepalived.conf

scp keepalived-backup.conf root@192.168.11.27:/etc/keepalived/keepalived.conf
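The master and backup files differ only in state, priority, and router_id. As a sketch, both can be produced from one shell function so the shared settings stay in a single place; render_keepalived is a name introduced here, and the interface, virtual_router_id, and VIP are the values used above.

```shell
# render_keepalived <state> <priority> <router_id>
# Prints a keepalived.conf with the shared settings used in this guide.
render_keepalived() {
  local state=$1 priority=$2 router_id=$3
  cat <<EOF
global_defs {
    router_id ${router_id}
}
vrrp_script check-haproxy {
    script "killall -0 haproxy"
    interval 5
    weight -30
}
vrrp_instance VI-kube-master {
    state ${state}
    priority ${priority}
    dont_track_primary
    interface eno16777736
    virtual_router_id 68
    advert_int 3
    track_script {
        check-haproxy
    }
    virtual_ipaddress {
        192.168.11.24
    }
}
EOF
}

render_keepalived MASTER 120 lb-master-105 > keepalived-master.conf
render_keepalived BACKUP 110 lb-backup-105 > keepalived-backup.conf
```

The numbers are chosen so that failover works: when the haproxy check fails on the master, the weight of -30 lowers its priority from 120 to 90, which is below the 110 of the backups, so one of them takes over the VIP.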

8. Start the keepalived service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl restart keepalived"

done

9. Check the keepalived service status

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status keepalived|grep Active"

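done

The service started successfully only if its state is active (running).

If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u keepalived

10. Find the node that holds the VIP and make sure the VIP can be pinged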

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show ${VIP_IF}"

ssh ${node_ip} "ping -c 1 ${MASTER_VIP}"

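done

11. Open the haproxy status page on port 10080 of the master's IP address

In this environment the URL is: http://192.168.11.25:10080/status

Note: unless changed in haproxy.cfg, the user name is admin and the password is 123456

The page looks like the figure below

VIII. Deploy the kube-apiserver component

1. Create the kubernetes certificate and private key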

vim kubernetes-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.11.25",

"192.168.11.26",

"192.168.11.27",

"192.168.11.24",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

List the generated certificate and private key: ls kubernetes*pem

Distribute the generated certificate and private key to the master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert/ && sudo chown -R k8s /etc/kubernetes/cert/"

scp kubernetes*.pem k8s@${node_ip}:/etc/kubernetes/cert/

done

2. Create the encryption configuration file

vim encryption-config.yaml

kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: 0Av9Vvddzvr6l4vmpHNAdq2RgMTvdrhdxr9x3H39Jsc=
      - identity: {}
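The secret in the file is a base64-encoded 32-byte key, the same kind of value that path.sh stores in ENCRYPTION_KEY. A minimal sketch that writes the file from a freshly generated key instead of a pasted one follows; it assumes a shell with head, base64, and cat available and writes encryption-config.yaml into the current directory.

```shell
# Generate a 32-byte key and write it into the encryption configuration.
ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# The heredoc delimiter is unquoted so ${ENCRYPTION_KEY} is expanded.
cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF

cat encryption-config.yaml
```

Every master must receive the same file, so generate the key once and distribute the result rather than running the snippet on each node.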

3. Copy the encryption configuration file to /etc/kubernetes/ on each node

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp encryption-config.yaml root@${node_ip}:/etc/kubernetes/

done

4. Create the kube-apiserver systemd unit template file

vim kube-apiserver.service.template

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/opt/k8s/bin/kube-apiserver \

--enable-admission-plugins=Initializers,NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--experimental-encryption-provider-config=/etc/kubernetes/encryption-config.yaml \

--advertise-address=##NODE_IP## \

--bind-address=##NODE_IP## \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=8400-9000 \

--tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/cert/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \

--kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \

--service-account-key-file=/etc/kubernetes/cert/ca-key.pem \

--etcd-cafile=/etc/kubernetes/cert/ca.pem \

--etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \

--etcd-servers=https://192.168.11.25:2379,https://192.168.11.26:2379,https://192.168.11.27:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

Type=notify

User=k8s

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

5. Create and distribute a kube-apiserver systemd unit file for each node

for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${NODE_IPS[i]}.service

done

List the generated unit files: ls kube-apiserver*.service

Distribute the generated systemd unit files

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

scp kube-apiserver-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-apiserver.service

done

6. Start the kube-apiserver service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"

done

7. Check that kube-apiserver is running

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-apiserver |grep 'Active:'"

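done

The service started successfully only if its state is active (running).

If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u kube-apiserver

8. Print the data that kube-apiserver has written to etcd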

ETCDCTL_API=3 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem \

get /registry/ --prefix --keys-only

9. Commands for checking the cluster status

kubectl cluster-info

kubectl get all --all-namespaces

kubectl get componentstatuses

10. Check the ports that kube-apiserver listens on

netstat -lnpt|grep kube

11. Grant the kubernetes certificate access to the kubelet API

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

IX. Deploy a highly available kube-controller-manager cluster

1. Create the kube-controller-manager certificate and private key

vim kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.11.25",

"192.168.11.26",

"192.168.11.27"

],

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "4Paradigm"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

Distribute the generated certificate and private key to all master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager*.pem k8s@${node_ip}:/etc/kubernetes/cert/

done

2. Create and distribute the kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Distribute the kubeconfig to all master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.kubeconfig k8s@${node_ip}:/etc/kubernetes/

done

3. Create and distribute the kube-controller-manager systemd unit file

vim kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/k8s/bin/kube-controller-manager \

--port=0 \

--secure-port=10252 \

--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.254.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \

--experimental-cluster-signing-duration=8760h \

--root-ca-file=/etc/kubernetes/cert/ca.pem \

--service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-use-rest-clients=true \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

User=k8s

[Install]

WantedBy=multi-user.target

Distribute the systemd unit file to all master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.service root@${node_ip}:/etc/systemd/system/

done

4. Start the kube-controller-manager service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"

done

5. Check that the service is running

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-controller-manager|grep Active"

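done

The service started successfully only if its state is active (running).

If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u kube-controller-manager

6. View the exported metrics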

netstat -anpt | grep kube-controll

kube-controller-manager listens on port 10252 and accepts HTTPS requests

curl -s --cacert /etc/kubernetes/cert/ca.pem https://127.0.0.1:10252/metrics | head

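7. Test the high availability of the kube-controller-manager cluster

Stop the kube-controller-manager service on one or two nodes and watch the logs on the remaining nodes to confirm that one of them becomes the leader.

8. View the current leader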

kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

X. Deploy a highly available kube-scheduler cluster

1. Create the kube-scheduler certificate and private key

vim kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.11.25",

"192.168.11.26",

"192.168.11.27"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-scheduler",

"OU": "4Paradigm"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2. Create and distribute the kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Distribute the kubeconfig to all master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.kubeconfig k8s@${node_ip}:/etc/kubernetes/

done

3. Create and distribute the kube-scheduler systemd unit file

vim kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/k8s/bin/kube-scheduler \

--address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

User=k8s

[Install]

WantedBy=multi-user.target

Distribute the systemd unit file to all master nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.service root@${node_ip}:/etc/systemd/system/

done

4. Start the kube-scheduler service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler"

done

5. Check that the service is running

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-scheduler|grep Active"

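done

The service started successfully only if its state is active (running).

If the service is not active, rerunning the start loop may be enough. If it still fails, check the logs: journalctl -u kube-scheduler

6. View the exported metrics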

netstat -anpt | grep kube-scheduler

kube-scheduler listens on port 10251, which serves plain HTTP

curl -s http://127.0.0.1:10251/metrics | head

7. View the current leader

kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

XI. Deploy the worker nodes

1. Install the dependency packages (on all three machines)

yum install -y epel-release conntrack ipvsadm ipset jq iptables curl sysstat libseccomp

/usr/sbin/modprobe ip_vs

XII. Deploy the docker component

1. Download docker

wget https://download.docker.com/linux/static/stable/x86_64/docker-18.03.1-ce.tgz

tar zxf docker-18.03.1-ce.tgz

2. Distribute the binaries to every node

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker/docker* k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

3. Create and distribute the systemd unit file

vim docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.io

[Service]

Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/run/flannel/docker

ExecStart=/opt/k8s/bin/dockerd --log-level=error $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

Distribute the systemd unit file to all worker machines:

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker.service root@${node_ip}:/etc/systemd/system/

done

4. Create and distribute the docker configuration file

vim docker-daemon.json

{

"registry-mirrors": ["https://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"],

"max-concurrent-downloads": 20

}

Distribute the docker configuration file to all worker nodes

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/docker/"

scp docker-daemon.json root@${node_ip}:/etc/docker/daemon.json

done

5. Start the docker service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl stop firewalld && systemctl disable firewalld"

ssh root@${node_ip} "/usr/sbin/iptables -F && /usr/sbin/iptables -X && /usr/sbin/iptables -F -t nat && /usr/sbin/iptables -X -t nat"

ssh root@${node_ip} "/usr/sbin/iptables -P FORWARD ACCEPT"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable docker && systemctl restart docker"

ssh root@${node_ip} 'for intf in /sys/devices/virtual/net/docker0/brif/*; do echo 1 > $intf/hairpin_mode; done'

ssh root@${node_ip} "sudo sysctl -p /etc/sysctl.d/kubernetes.conf"

done

6. Check the docker service status

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status docker|grep Active"

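done

The service has started successfully only if its state is active (running).

If the service is not active, running the start script a second time often brings it up. If it still fails, check the logs: journalctl -u docker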
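Re-running the start script by hand can be replaced with a small retry loop. This is only a sketch: retry is a hypothetical helper, and the attempt count and delay in the usage comment are arbitrary.

```shell
# Run a command until it succeeds, at most <attempts> times, sleeping
# <delay> seconds between tries. Returns 1 if every attempt failed.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while ! "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 0
}

# Usage (not executed here): wait for docker on node1 to become active.
#   retry 5 3 ssh root@192.168.11.25 "systemctl is-active --quiet docker"
```

If the helper still returns a non-zero status after the last attempt, journalctl -u docker remains the place to look.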

7. Check the docker0 bridge

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "/usr/sbin/ip addr show flannel.1 && /usr/sbin/ip addr show docker0"

done

Confirm that on each worker node the docker0 bridge and the flannel.1 interface have IP addresses in the same subnet.
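Comparing the two addresses by eye is error-prone, so the check can be scripted. The sketch below tests whether one address falls inside the network of another. The helper names are hypothetical and the addresses in the usage comment are sample values, not output from this cluster.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if <ip> lies inside the network given as <address>/<prefix>.
in_subnet() {
  net=${2%/*}
  prefix=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Usage: first argument is the flannel.1 address, second is the docker0
# address with its prefix length as shown by `ip addr show docker0`.
#   in_subnet 172.30.81.0 172.30.81.1/24 && echo "same subnet"
```

A failing check usually means dockerd was started before /run/flannel/docker existed, so it did not pick up DOCKER_NETWORK_OPTIONS.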

XIII. Deploy the kubelet component

1. Create the kubelet bootstrap kubeconfig files

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

# Create a token

export BOOTSTRAP_TOKEN=$(kubeadm token create \

--description kubelet-bootstrap-token \

--groups system:bootstrappers:${node_name} \

--kubeconfig ~/.kube/config)

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

done

List the tokens kubeadm created for each node:

kubeadm token list --kubeconfig ~/.kube/config

List the Secrets associated with the tokens:

kubectl get secrets -n kube-system
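Each bootstrap token is stored in kube-system as a Secret named after the token ID, which is the part before the dot. A small sketch that maps a token to its Secret name and rejects malformed tokens (token_secret_name is a hypothetical helper; the token in the usage comment is a made-up sample):

```shell
# Bootstrap tokens have the form <token-id>.<token-secret>, with 6 and 16
# characters from [a-z0-9]. Print the name of the Secret that stores the
# given token, or return 1 if the token is malformed.
token_secret_name() {
  if printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    printf 'bootstrap-token-%s\n' "${1%%.*}"
  else
    echo "malformed bootstrap token" >&2
    return 1
  fi
}

# Usage (not executed here):
#   kubectl -n kube-system get secret "$(token_secret_name zkiem5.0123456789abcdef)"
```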

2. Distribute the bootstrap kubeconfig files to all worker nodes

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kubelet-bootstrap-${node_name}.kubeconfig k8s@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig

done

3. Create and distribute the kubelet configuration file

vim kubelet.config.json.template

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/cert/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "##NODE_IP##",

"port": 10250,

"readOnlyPort": 0,

"cgroupDriver": "cgroupfs",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletClientCertificate": true,

"RotateKubeletServerCertificate": true

},

"clusterDomain": "${CLUSTER_DNS_DOMAIN}",

"clusterDNS": ["${CLUSTER_DNS_SVC_IP}"]

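}

Create and distribute a kubelet configuration file for each node. Because the template is edited by hand rather than generated through a shell heredoc, replace ${CLUSTER_DNS_DOMAIN} and ${CLUSTER_DNS_SVC_IP} with their actual values first; the sed command below only substitutes ##NODE_IP##.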

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

sed -e "s/##NODE_IP##/${node_ip}/" kubelet.config.json.template > kubelet.config-${node_ip}.json

scp kubelet.config-${node_ip}.json root@${node_ip}:/etc/kubernetes/kubelet.config.json

done

4. Create and distribute the kubelet systemd unit file

vim kubelet.service.template

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/opt/k8s/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/cert \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet.config.json \

--hostname-override=##NODE_NAME## \

--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest \

--allow-privileged=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Create and distribute a kubelet systemd unit file for each node:

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service

scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service

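done

5. kubelet startup failure (bootstrap token authentication and authorization)

By default, the bootstrap token user and its group have no permission to create CSRs, so kubelet fails to start with the following error log: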

journalctl -u kubelet -a | grep -A 2 'certificatesigningrequests'

May 06 06:42:36 kube-node1 kubelet[26986]: F0506 06:42:36.314378 26986 server.go:233] failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "system:bootstrap:lemy40" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope

May 06 06:42:36 kube-node1 systemd[1]: kubelet.service: Main process exited, code=exited, status=255/n/a

May 06 06:42:36 kube-node1 systemd[1]: kubelet.service: Failed with result 'exit-code'.

The fix is to create a ClusterRoleBinding that binds the system:bootstrappers group to the system:node-bootstrapper ClusterRole:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

6. Start the kubelet service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/kubelet"

ssh root@${node_ip} "/usr/sbin/swapoff -a"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"

done

7. Approve the kubelet CSR requests

Approve CSR requests manually

List the CSRs: kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-QzuuQiuUfcSdp3j5W4B2UOuvQ_n9aTNHAlrLzVFiqrk 43s system:bootstrap:zkiem5 Pending

node-csr-oVbPmU-ikVknpynwu0Ckz_MvkAO_F1j0hmbcDa__sGA 27s system:bootstrap:mkus5s Pending

node-csr-u0E1-ugxgotO_9FiGXo8DkD6a7-ew8sX2qPE6KPS2IY 13m system:bootstrap:k0s2bj Pending

kubectl certificate approve node-csr-QzuuQiuUfcSdp3j5W4B2UOuvQ_n9aTNHAlrLzVFiqrk

certificatesigningrequest.certificates.k8s.io "node-csr-QzuuQiuUfcSdp3j5W4B2UOuvQ_n9aTNHAlrLzVFiqrk" approved

Check the approval result: kubectl describe csr node-csr-QzuuQiuUfcSdp3j5W4B2UOuvQ_n9aTNHAlrLzVFiqrk
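Approving requests one by one gets tedious with several nodes. As a sketch, the Pending entries can be filtered out of the kubectl get csr listing and approved in one pass (pending_csrs is a hypothetical helper; it assumes the CONDITION column is the last field, as in the listing above):

```shell
# Read `kubectl get csr` output on stdin and print the names of the
# requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on a live cluster (not executed here):
#   kubectl get csr | pending_csrs | xargs -r -n1 kubectl certificate approve
```

Review the list before piping it into the approve command, since approving a CSR issues a client certificate for whoever requested it.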

Approve CSR requests automatically

vim csr-crb.yaml

# Approve all CSRs for the group "system:bootstrappers"

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  name: auto-approve-csrs-for-group

subjects:

- kind: Group

  name: system:bootstrappers

  apiGroup: rbac.authorization.k8s.io

roleRef:

  kind: ClusterRole

  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

  apiGroup: rbac.authorization.k8s.io

---

# To let a node of the group "system:nodes" renew its own credentials

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  name: node-client-cert-renewal

subjects:

- kind: Group

  name: system:nodes

  apiGroup: rbac.authorization.k8s.io

roleRef:

  kind: ClusterRole

  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

  apiGroup: rbac.authorization.k8s.io

---

# A ClusterRole which instructs the CSR approver to approve a node requesting a

# serving cert matching its client cert.

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  name: approve-node-server-renewal-csr

rules:

- apiGroups: ["certificates.k8s.io"]

  resources: ["certificatesigningrequests/selfnodeserver"]

  verbs: ["create"]

---

# To let a node of the group "system:nodes" renew its own server credentials

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  name: node-server-cert-renewal

subjects:

- kind: Group

  name: system:nodes

  apiGroup: rbac.authorization.k8s.io

roleRef:

  kind: ClusterRole

  name: approve-node-server-renewal-csr

  apiGroup: rbac.authorization.k8s.io

Apply the configuration: kubectl apply -f csr-crb.yaml

8. Check the kubelet status

kubectl get csr

kubectl get nodes
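Once the CSRs are approved, every node should appear in the node list with a Ready status. A sketch for spotting stragglers (not_ready_nodes is a hypothetical helper; it assumes STATUS is the second column of the kubectl get nodes output):

```shell
# Read `kubectl get nodes` output on stdin and print the nodes whose
# STATUS column does not start with "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# Usage on a live cluster (not executed here):
#   kubectl get nodes | not_ready_nodes
```

An empty result means all registered nodes are Ready. A node missing from the list altogether usually still has a Pending CSR.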

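9. kubelet API authentication and authorization

kubelet is configured with the following authentication and authorization parameters:

authentication.anonymous.enabled: set to false, so anonymous access to port 10250 is not allowed

authentication.x509.clientCAFile: specifies the CA certificate that signs client certificates, enabling HTTPS client certificate authentication

authentication.webhook.enabled=true: enables HTTPS bearer token authentication

authorization.mode=Webhook: enables RBAC authorization (kubelet asks kube-apiserver whether the user is allowed)

Requests that carry no credentials, or an invalid token, are rejected as Unauthorized: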

curl -s --cacert /etc/kubernetes/cert/ca.pem https://192.168.11.25:10250/metrics

Unauthorized

curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer 123456" https://192.168.11.25:10250/metrics

Unauthorized

Certificate authentication and authorization:

A certificate with insufficient permissions:

curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /etc/kubernetes/cert/kube-controller-manager.pem --key /etc/kubernetes/cert/kube-controller-manager-key.pem https://192.168.11.25:10250/metrics

Forbidden (user=system:kube-controller-manager, verb=get, resource=nodes, subresource=metrics)

The admin certificate with full privileges, created when deploying the kubectl command-line tool:

curl -s --cacert /etc/kubernetes/cert/ca.pem --cert ./admin.pem --key ./admin-key.pem https://192.168.11.25:10250/metrics|head

Bearer token authentication and authorization:

kubectl create sa kubelet-api-test

kubectl create clusterrolebinding kubelet-api-test --clusterrole=system:kubelet-api-admin --serviceaccount=default:kubelet-api-test

SECRET=$(kubectl get secrets | grep kubelet-api-test | awk '{print $1}')

TOKEN=$(kubectl describe secret ${SECRET} | grep -E '^token' | awk '{print $2}')

echo ${TOKEN}

curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" https://192.168.11.25:10250/metrics|head
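The examples in this step fail at two different stages: "Unauthorized" means authentication failed (HTTP 401), while "Forbidden" means the caller was identified but is not allowed to perform the request (HTTP 403). A sketch that makes the distinction explicit from the status code (explain_kubelet_status is a hypothetical helper):

```shell
# Map an HTTP status code returned by the kubelet API to the stage that
# accepted or rejected the request.
explain_kubelet_status() {
  case "$1" in
    200) echo "authenticated and authorized" ;;
    401) echo "authentication failed" ;;
    403) echo "authenticated, but not authorized" ;;
    *)   echo "unexpected status: $1" ;;
  esac
}

# Usage (not executed here): ask curl for the status code only.
#   code=$(curl -s -o /dev/null -w '%{http_code}' \
#     --cacert /etc/kubernetes/cert/ca.pem https://192.168.11.25:10250/metrics)
#   explain_kubelet_status "$code"
```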

10. cAdvisor and metrics

Open http://192.168.11.25:4194/containers/ in a browser to see the cAdvisor monitoring page.

Open https://192.168.11.25:10250/metrics and https://192.168.11.25:10250/metrics/cadvisor in a browser to get the kubelet and cAdvisor metrics, respectively.

11. Get the kubelet configuration

Use the admin certificate with full privileges, created when deploying the kubectl command-line tool:

curl -sSL --cacert /etc/kubernetes/cert/ca.pem --cert ./admin.pem --key ./admin-key.pem ${KUBE_APISERVER}/api/v1/nodes/node1/proxy/configz | jq '.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"'

{

"syncFrequency": "1m0s",

"fileCheckFrequency": "20s",

"httpCheckFrequency": "20s",

"address": "172.27.129.80",

"port": 10250,

"readOnlyPort": 10255,

"authentication": {

"x509": {},

"webhook": {

"enabled": false,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": true

}

},

"authorization": {

"mode": "AlwaysAllow",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"registryPullQPS": 5,

"registryBurst": 10,

"eventRecordQPS": 5,

"eventBurst": 10,

"enableDebuggingHandlers": true,

"healthzPort": 10248,

"healthzBindAddress": "127.0.0.1",

"oomScoreAdj": -999,

"clusterDomain": "cluster.local.",

"clusterDNS": [

"10.254.0.2"

],

"streamingConnectionIdleTimeout": "4h0m0s",

"nodeStatusUpdateFrequency": "10s",

"imageMinimumGCAge": "2m0s",

"imageGCHighThresholdPercent": 85,

"imageGCLowThresholdPercent": 80,

"volumeStatsAggPeriod": "1m0s",

"cgroupsPerQOS": true,

"cgroupDriver": "cgroupfs",

"cpuManagerPolicy": "none",

"cpuManagerReconcilePeriod": "10s",

"runtimeRequestTimeout": "2m0s",

"hairpinMode": "promiscuous-bridge",

"maxPods": 110,

"podPidsLimit": -1,

"resolvConf": "/etc/resolv.conf",

"cpuCFSQuota": true,

"maxOpenFiles": 1000000,

"contentType": "application/vnd.kubernetes.protobuf",

"kubeAPIQPS": 5,

"kubeAPIBurst": 10,

"serializeImagePulls": false,

"evictionHard": {

"imagefs.available": "15%",

"memory.available": "100Mi",

"nodefs.available": "10%",

"nodefs.inodesFree": "5%"

},

"evictionPressureTransitionPeriod": "5m0s",

"enableControllerAttachDetach": true,

"makeIPTablesUtilChains": true,

"iptablesMasqueradeBit": 14,

"iptablesDropBit": 15,

"featureGates": {

"RotateKubeletClientCertificate": true,

"RotateKubeletServerCertificate": true

},

"failSwapOn": true,

"containerLogMaxSize": "10Mi",

"containerLogMaxFiles": 5,

"enforceNodeAllocatable": [

"pods"

],

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1"

}

XIV. Deploy the kube-proxy component

1. Create the kube-proxy certificate

vim kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "4Paradigm"

}

]

}

Generate the certificate and private key:

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \

-ca-key=/etc/kubernetes/cert/ca-key.pem \

-config=/etc/kubernetes/cert/ca-config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2. Create and distribute the kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Distribute the kubeconfig file:

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.kubeconfig k8s@${node_name}:/etc/kubernetes/

done

3. Create the kube-proxy configuration file

vim kube-proxy.config.yaml.template

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: ##NODE_IP##

clientConnection:

  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig

clusterCIDR: ${CLUSTER_CIDR}

healthzBindAddress: ##NODE_IP##:10256

hostnameOverride: ##NODE_NAME##

kind: KubeProxyConfiguration

metricsBindAddress: ##NODE_IP##:10249

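mode: "ipvs"

Create and distribute a kube-proxy configuration file for each node. Because the template is edited by hand rather than generated through a shell heredoc, replace ${CLUSTER_CIDR} with its actual value first; the sed command below only substitutes ##NODE_NAME## and ##NODE_IP##.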

for (( i=0; i < 3; i++ ))

do

echo ">>> ${NODE_NAMES[i]}"

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-proxy.config.yaml.template > kube-proxy-${NODE_NAMES[i]}.config.yaml

scp kube-proxy-${NODE_NAMES[i]}.config.yaml root@${NODE_NAMES[i]}:/etc/kubernetes/kube-proxy.config.yaml

done

4. Create and distribute the kube-proxy systemd unit file

vim kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/opt/k8s/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.config.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Distribute the kube-proxy systemd unit file:

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.service root@${node_name}:/etc/systemd/system/

done

5. Start the kube-proxy service

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/kube-proxy"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"

done

6. Check the startup result

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-proxy|grep Active"

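done

The service has started successfully only if its state is active (running).

If the service is not active, running the start script a second time often brings it up. If it still fails, check the logs: journalctl -u kube-proxy

7. Check the listening ports and metrics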

netstat -anpt | grep kube-prox

10249: HTTP Prometheus metrics port

10256: HTTP healthz port

View the ipvs routing rules:

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "/usr/sbin/ipvsadm -ln"

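done

Finally, verify that the cluster works

Check the node status: kubectl get nodes

Check the pod status: kubectl get pods -o wide

Deploy the dashboard add-on

Modify the configuration files under /opt/k8s/kubernetes/cluster/addons/dashboard. Back up each file first, then use diff to review the changes: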

cp dashboard-controller.yaml{,.orig}

diff dashboard-controller.yaml{,.orig}

cp dashboard-service.yaml{,.orig}

diff dashboard-service.yaml.orig dashboard-service.yaml

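List all the definition files: ls *.yaml

Create them all from the current directory (kubectl only reads the .yaml, .yml and .json files in it, so the .orig backups are skipped):

kubectl create -f .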

kubectl get deployment kubernetes-dashboard -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1 1 1 1 2m

kubectl --namespace kube-system get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

coredns-77c989547b-6l6jr 1/1 Running 0 58m 172.30.39.3 kube-node3

coredns-77c989547b-d9lts 1/1 Running 0 58m 172.30.81.3 kube-node1

kubernetes-dashboard-65f7b4f486-wgc6j 1/1 Running 0 2m 172.30.81.5 kube-node1

kubectl get services kubernetes-dashboard -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.254.96.204 <none> 443:8607/TCP 2m

The dashboard can now be accessed.
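With a NodePort service, the dashboard is reached on any node IP at the port shown after the colon in the PORT(S) column. A sketch that turns that column into a URL, assuming the dashboard serves HTTPS on its service port 443 (node_port and dashboard_url are hypothetical helper names):

```shell
# Extract the node port from a PORT(S) value such as "443:8607/TCP".
node_port() {
  p=${1#*:}          # drop the service port and the colon
  echo "${p%%/*}"    # drop the protocol suffix
}

# Build the HTTPS URL for a node IP and a PORT(S) value.
dashboard_url() {
  echo "https://$1:$(node_port "$2")"
}

# Usage with the values shown above:
#   dashboard_url 192.168.11.25 443:8607/TCP
```

The browser will warn about the certificate unless the dashboard was given one signed by a CA the browser trusts.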
