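Section 1: Reference

I. Build issues

1. The version debugged here was built from the 3.6.0 tag.

2. If you build with ant, change the ufpr.dl.sourceforge.net address to use https.

Only the build.xml file needs to change.

Older versions were built with ant; newer versions all use maven, where the following dependencies need to be added: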

<dependency>

<groupId>com.codahale.metrics</groupId>

<artifactId>metrics-core</artifactId>

<version>3.0.0</version>

</dependency>

<dependency>

<groupId>org.xerial.snappy</groupId>

<artifactId>snappy-java</artifactId>

<version>1.1.7</version>

</dependency>

3. If a class cannot be found at runtime, remove the provided scope from the corresponding dependency in the pom file.

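II. Startup issues

1. Add the program argument ./xxx/conf/zoo.cfg. When debugging a cluster in IDEA with several ZooKeeper instances, point each run configuration at its own zoo.cfg using an absolute path.

2. If port 8080 of the admin server is already in use, set admin.serverPort=8081. You can also copy the startup arguments from another running zk server process into the IDEA debug configuration.

3. Client connection command: ./zkCli.sh -server 127.0.0.1:2181

Command to check whether a node is leader or follower: ./zkServer.sh status /xxx/zoo.cfg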
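For the cluster debugging setup described above, each instance needs its own zoo.cfg, plus a myid file in its data directory. The following is a minimal sketch, not taken from the ZooKeeper source, that renders such a configuration for local servers. The class name LocalClusterConfig, the directory layout, and the tickTime/initLimit/syncLimit values are assumptions chosen for illustration; the client port, admin.serverPort and server.N addresses follow the values used in this article.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper (not part of ZooKeeper) that renders zoo.cfg text for local debugging. */
final class LocalClusterConfig {

    /** Builds the zoo.cfg content for server {@code id} (1-based) in a cluster of {@code size} local servers. */
    static String render(int id, int size, String baseDir) {
        List<String> lines = new ArrayList<>();
        lines.add("tickTime=2000");   // assumed value
        lines.add("initLimit=10");    // assumed value
        lines.add("syncLimit=5");     // assumed value
        // Assumed layout: this directory must also contain a myid file holding `id`.
        lines.add("dataDir=" + baseDir + "/zk" + id + "/data");
        lines.add("clientPort=" + (2180 + id));        // 2181, 2182, 2183
        lines.add("admin.serverPort=" + (8080 + id));  // avoids the 8080 clash between instances
        for (int i = 1; i <= size; i++) {
            // server.N=host:quorumPort:electionPort
            lines.add("server." + i + "=127.0.0.1:" + (2887 + i) + ":" + (3887 + i));
        }
        return String.join("\n", lines) + "\n";
    }
}
```

Writing the result of render(2, 3, ...) to a file and passing that file's absolute path as the program argument of the second run configuration gives each instance its own ports.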

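III. Debugging goals

(1). How does ZooKeeper voting work? Which fields does a vote contain, and how are votes compared?

(2). How are ZooKeeper transactions implemented?

(3). How are the leader's transaction requests (creating a node, deleting a node, modifying data) synchronized to the followers, and when?

(4). What is ZooKeeper's processing flow for client requests? Which processors does it include?

(5). How are zk Proposals related to transactions?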
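As a preview of goal (1), the ordering that FastLeaderElection applies when comparing two votes can be sketched as follows: the higher peer epoch wins, then the higher zxid, then the higher server id. The class VoteOrder and its method name are hypothetical simplifications for illustration; the real totalOrderPredicate method also takes quorum weights into account.

```java
/** Simplified, hypothetical stand-in for the vote comparison used during leader election. */
final class VoteOrder {

    /** Returns true if the received vote (new*) should replace the current vote (cur*). */
    static boolean newVoteWins(long newId, long newZxid, long newEpoch,
                               long curId, long curZxid, long curEpoch) {
        if (newEpoch != curEpoch) {
            return newEpoch > curEpoch;  // 1. the higher peer epoch wins
        }
        if (newZxid != curZxid) {
            return newZxid > curZxid;    // 2. then the higher zxid
        }
        return newId > curId;            // 3. then the higher server id
    }
}
```

This matches what is observed later while debugging: server1 and server3 have the same epoch and zxid, so server3 wins on the larger server id.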

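Section 2: Principles

I. Use cases

1. Data publish/subscribe

2. Load balancing

3. Naming service

4. Distributed coordination/notification

5. Cluster management

6. Master election

7. Distributed locks

8. Distributed queues

II. Paxos and ZAB

Section 3: Source code

The entry point for standalone mode is org.apache.zookeeper.server.ZooKeeperServerMain.main(). The entry point for cluster mode is org.apache.zookeeper.server.quorum.QuorumPeerMain.main().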

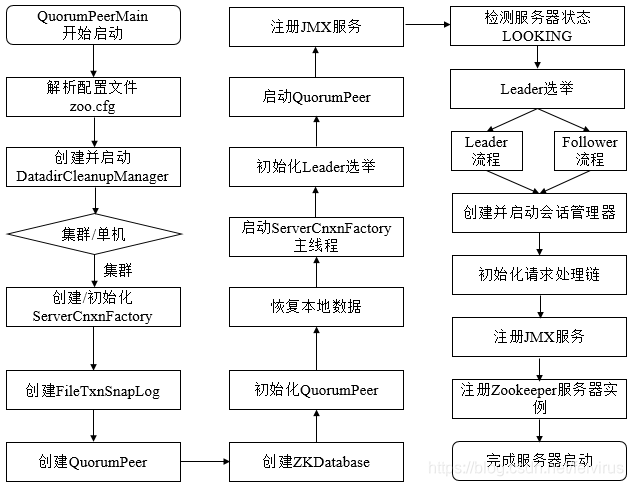

I. Server startup from the main method in cluster mode

The startup flow is as follows.

Execution enters QuorumPeerMain#main(), which calls QuorumPeerMain#initializeAndRun(). The code is as follows:

protected void initializeAndRun(String[] args) throws ConfigException, IOException, AdminServerException {

// Create the ZooKeeper config object; its fields hold the values of the settings in zoo.cfg

QuorumPeerConfig config = new QuorumPeerConfig();

if (args.length == 1) {

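/* Parse the program argument: take the path of zoo.cfg and call QuorumPeerConfig#parseProperties() to load it into the fields of QuorumPeerConfig, e.g. the client port,

the data directory, the dataLog directory, the election algorithm (default 3), and the number of ZooKeeper servers (3 in my debug setup). The code is analyzed in step 1 below. */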

config.parse(args[0]);

}

// Start and schedule the purge task

// Periodically clean up old transaction logs and snapshot files

DatadirCleanupManager purgeMgr = new DatadirCleanupManager(

config.getDataDir(),

config.getDataLogDir(),

config.getSnapRetainCount(),

config.getPurgeInterval());

purgeMgr.start();

if (args.length == 1 && config.isDistributed()) {

// Start ZooKeeper in cluster mode; analyzed in step 2 below

runFromConfig(config);

} else {

LOG.warn("Either no config or no quorum defined in config, running in standalone mode");

// there is only server in the quorum -- run as standalone

ZooKeeperServerMain.main(args);

}

}

1. Parsing the zoo.cfg file

The configuration is read by QuorumPeerConfig#parseProperties().
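The core of the method shown next is a loop over java.util.Properties entries that dispatches on the key name. A much-reduced sketch of that pattern, assuming a hypothetical MiniConfig class with only three known keys, looks like this. Unknown keys are collected in a map here, whereas the real method turns them into "zookeeper."-prefixed system properties.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

/** Hypothetical, much-reduced imitation of the key dispatch in parseProperties(). */
final class MiniConfig {
    String dataDir;
    int clientPort;
    int tickTime;
    /** Unrecognized keys, stored under the "zookeeper." prefix instead of being set as system properties. */
    final Map<String, String> passthrough = new HashMap<>();

    static MiniConfig parse(String cfgText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(cfgText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        MiniConfig cfg = new MiniConfig();
        for (Map.Entry<Object, Object> entry : props.entrySet()) {
            String key = entry.getKey().toString().trim();
            String value = entry.getValue().toString().trim();
            if (key.equals("dataDir")) {
                cfg.dataDir = value;
            } else if (key.equals("clientPort")) {
                cfg.clientPort = Integer.parseInt(value);
            } else if (key.equals("tickTime")) {
                cfg.tickTime = Integer.parseInt(value);
            } else {
                cfg.passthrough.put("zookeeper." + key, value);
            }
        }
        return cfg;
    }
}
```

This also shows why admin.serverPort can simply be written into zoo.cfg: a key the parser does not know ends up as a zookeeper.admin.serverPort setting.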

public void parseProperties(Properties zkProp) throws IOException, ConfigException {

...

VerifyingFileFactory vff = new VerifyingFileFactory.Builder(LOG).warnForRelativePath().build();

// Iterate over the entries of zoo.cfg

for (Entry<Object, Object> entry : zkProp.entrySet()) {

String key = entry.getKey().toString().trim();

String value = entry.getValue().toString().trim();

if (key.equals("dataDir")) {

// Location of the data directory

dataDir = vff.create(value);

} else if (key.equals("dataLogDir")) {

// Location of the transaction log (dataLog) directory

dataLogDir = vff.create(value);

} else if (key.equals("clientPort")) {

// Port that clients connect to

clientPort = Integer.parseInt(value);

} ...

} else if (key.equals("clientPortAddress")) {

clientPortAddress = value.trim();

} ...

} else if (key.equals("tickTime")) {

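// Length of one tick (the basic heartbeat unit) in milliseconds

tickTime = Integer.parseInt(value);

} else if (key.equals("maxClientCnxns")) {

// Maximum number of concurrent connections from a single client IP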

maxClientCnxns = Integer.parseInt(value);

} else if (key.equals("minSessionTimeout")) {

minSessionTimeout = Integer.parseInt(value);

} else if (key.equals("maxSessionTimeout")) {

// Maximum session timeout

maxSessionTimeout = Integer.parseInt(value);

} else if (key.equals("initLimit")) {

initLimit = Integer.parseInt(value);

} else if (key.equals("syncLimit")) {

// Number of ticks a follower is allowed to take to sync with the leader

syncLimit = Integer.parseInt(value);

} ...

} else if (key.equals("electionAlg")) {

// Election algorithm; the field defaults to 3

electionAlg = Integer.parseInt(value);

if (electionAlg != 3) {

throw new ConfigException("Invalid electionAlg value. Only 3 is supported.");

}

} ...

} else if (key.equals("peerType")) {

if (value.toLowerCase().equals("observer")) {

peerType = LearnerType.OBSERVER;

} else if (value.toLowerCase().equals("participant")) {

peerType = LearnerType.PARTICIPANT;

} else {

throw new ConfigException("Unrecognised peertype: " + value);

}

} ...

} else if (key.equals("standaloneEnabled")) {

if (value.toLowerCase().equals("true")) {

setStandaloneEnabled(true);

} else if (value.toLowerCase().equals("false")) {

setStandaloneEnabled(false);

} else {

throw new ConfigException("Invalid option "

+ value

+ " for standalone mode. Choose 'true' or 'false.'");

}

} ...

} else if (key.equals("quorum.cnxn.threads.size")) {

quorumCnxnThreadsSize = Integer.parseInt(value);

} ...

} else {

System.setProperty("zookeeper." + key, value);

}

}

...

...

try {

// Check that the metrics provider class can be loaded

Class.forName(metricsProviderClassName, false, Thread.currentThread().getContextClassLoader());

} catch (ClassNotFoundException error) {

throw new IllegalArgumentException("metrics provider class was not found", error);

}

// backward compatibility - dynamic configuration in the same file as

// static configuration params see writeDynamicConfig()

if (dynamicConfigFileStr == null) {

/* This call reads the myid file and sets serverId. */

setupQuorumPeerConfig(zkProp, true);

if (isDistributed() && isReconfigEnabled()) {

// we don't backup static config for standalone mode.

// we also don't backup if reconfig feature is disabled.

backupOldConfig();

}

}

}

2. Starting ZooKeeper

Enter QuorumPeerMain#runFromConfig().

public void runFromConfig(QuorumPeerConfig config) throws IOException, AdminServerException {

try {

// Register the log4j MBeans for JMX monitoring

ManagedUtil.registerLog4jMBeans();

} catch (JMException e) {

LOG.warn("Unable to register log4j JMX control", e);

}

MetricsProvider metricsProvider;

try {

// Start the metrics provider (DefaultMetricsProvider in this setup) for performance monitoring

metricsProvider = MetricsProviderBootstrap.startMetricsProvider(

config.getMetricsProviderClassName(),

config.getMetricsProviderConfiguration());

} catch (MetricsProviderLifeCycleException error) {

throw new IOException("Cannot boot MetricsProvider " + config.getMetricsProviderClassName(), error);

}

try {

ServerMetrics.metricsProviderInitialized(metricsProvider);

ServerCnxnFactory cnxnFactory = null;

ServerCnxnFactory secureCnxnFactory = null;

if (config.getClientPortAddress() != null) {

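// Create the connection factory (NIO-based NIOServerCnxnFactory here), which handles incoming client connections

cnxnFactory = ServerCnxnFactory.createFactory();

// Configure the client address the factory listens on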

cnxnFactory.configure(config.getClientPortAddress(), config.getMaxClientCnxns(), config.getClientPortListenBacklog(), false);

}

...

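// Create the QuorumPeer object, which drives the election logic

quorumPeer = getQuorumPeer();

// Attach the transaction log/snapshot manager, which handles persisting and restoring the files under the data directories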

quorumPeer.setTxnFactory(new FileTxnSnapLog(config.getDataLogDir(), config.getDataDir()));

...

// Apply the values read from zoo.cfg: election algorithm, myid, timeouts, and so on

quorumPeer.setElectionType(config.getElectionAlg());

quorumPeer.setMyid(config.getServerId());

...

// Create the in-memory database that stores ZooKeeper's tree-shaped file system; the file system code is analyzed in step 3 below.

quorumPeer.setZKDatabase(new ZKDatabase(quorumPeer.getTxnFactory()));

...

/* Write the addresses of the ZooKeeper servers into the /zookeeper/config node. Each server.x entry from zoo.cfg is stored as follows:

get /zookeeper/config

server.1=127.0.0.1:2888:3888:participant

server.2=127.0.0.1:2889:3889:participant

server.3=127.0.0.1:2890:3890:participant

version=0

*/

quorumPeer.initConfigInZKDatabase();

// Set the connection factory

quorumPeer.setCnxnFactory(cnxnFactory);

// The default server role is the enum value LearnerType#PARTICIPANT

quorumPeer.setLearnerType(config.getPeerType());

...

quorumPeer.setQuorumCnxnThreadsSize(config.quorumCnxnThreadsSize);

// Initialize the quorum authentication server; in this setup it is an empty implementation

quorumPeer.initialize();

if (config.jvmPauseMonitorToRun) {

quorumPeer.setJvmPauseMonitor(new JvmPauseMonitor(config));

}

// Enter QuorumPeer#start(), which loads the database and starts the connection factory before the thread itself runs; analyzed in step 4 below

quorumPeer.start();

ZKAuditProvider.addZKStartStopAuditLog();

quorumPeer.join();

} catch (InterruptedException e) {

...

}

}

3. ZooKeeper's in-memory tree-shaped file system

It is defined in the ZKDatabase class. The code is as follows:

public class ZKDatabase {

// The data tree, rooted at the "/" path

protected DataTree dataTree;

protected ConcurrentHashMap<Long, Integer> sessionsWithTimeouts;

// Transaction log and snapshot files

protected FileTxnSnapLog snapLog;

protected long minCommittedLog, maxCommittedLog;

/**

* Default value is to use snapshot if txnlog size exceeds 1/3 the size of snapshot

*/

public static final String SNAPSHOT_SIZE_FACTOR = "zookeeper.snapshotSizeFactor";

public static final double DEFAULT_SNAPSHOT_SIZE_FACTOR = 0.33;

private double snapshotSizeFactor;

public static final String COMMIT_LOG_COUNT = "zookeeper.commitLogCount";

public static final int DEFAULT_COMMIT_LOG_COUNT = 500;

public int commitLogCount;

protected static int commitLogBuffer = 700;

protected Queue<Proposal> committedLog = new ArrayDeque<>();

protected ReentrantReadWriteLock logLock = new ReentrantReadWriteLock();

/**

* Number of txn since last snapshot;

*/

private AtomicInteger txnCount = new AtomicInteger(0);

}

Definition of the data tree:

public class DataTree {

private final RateLogger RATE_LOGGER = new RateLogger(LOG, 15 * 60 * 1000);

/**

* This map provides a fast lookup to the datanodes. The tree is the

* source of truth and is where all the locking occurs

*/

// All nodes of the tree, keyed by full path

private final NodeHashMap nodes;

// Watches on data changes

private IWatchManager dataWatches;

// Watches on child changes

private IWatchManager childWatches;

...

/**

* This hashtable lists the paths of the ephemeral nodes of a session.

*/

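// Paths of the ephemeral nodes, keyed by the owning session id

private final Map<Long, HashSet<String>> ephemerals = new ConcurrentHashMap<Long, HashSet<String>>();

// zxid (and digest) of the last processed transaction

private volatile ZxidDigest lastProcessedZxidDigest;

// The constructor initializes several fixed paths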

DataTree(DigestCalculator digestCalculator) {

this.digestCalculator = digestCalculator;

nodes = new NodeHashMapImpl(digestCalculator);

/* Rather than fight it, let root have an alias */

// Add the "" path (an alias of the root)

nodes.put("", root);

// Add the "/" path

nodes.putWithoutDigest(rootZookeeper, root);

/** add the proc node and quota node */

root.addChild(procChildZookeeper);

// Add the /zookeeper path

nodes.put(procZookeeper, procDataNode);

procDataNode.addChild(quotaChildZookeeper);

// Add the /zookeeper/quota path

nodes.put(quotaZookeeper, quotaDataNode);

// Add the /zookeeper/config path

addConfigNode();

nodeDataSize.set(approximateDataSize());

try {

// Create the watch manager for data changes

dataWatches = WatchManagerFactory.createWatchManager();

// Create the watch manager for child changes

childWatches = WatchManagerFactory.createWatchManager();

} catch (Exception e) {

LOG.error("Unexpected exception when creating WatchManager, exiting abnormally", e);

ServiceUtils.requestSystemExit(ExitCode.UNEXPECTED_ERROR.getValue());

}

}

}

4. QuorumPeer#start(): restore data from the snapshot and transaction log, then start the connection factory

public synchronized void start() {

if (!getView().containsKey(myid)) {

throw new RuntimeException("My id " + myid + " not in the peer list");

}

// Restore from disk and load the database by calling FileTxnSnapLog#restore(); analyzed in step 6 below

loadDataBase();

// Call NIOServerCnxnFactory#start() to start the connection factory, which accepts incoming requests.

startServerCnxnFactory();

try {

adminServer.start();

} catch (AdminServerException e) {

LOG.warn("Problem starting AdminServer", e);

System.out.println(e);

}

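// Initialize the election algorithm: create a Vote from this server's myid, zxid and epoch (both 0 in this fresh debug setup), then enter QuorumPeer#createElectionAlgorithm(); analyzed in step 7 below.

// This creates two threads, one sending and one receiving, that handle election messages exchanged with other servers. Sending is triggered from step 5 below; receiving happens in the new thread described in step 7.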

startLeaderElection();

startJvmPauseMonitor();

// Start the thread, which enters QuorumPeer#run() (step 5 below), the main loop that also sends out this server's vote.

super.start();

}

5. The main ZooKeeper election thread

Enter the QuorumPeer#run() method.

public void run() {

// Rename the thread to the form QuorumPeer[myid=xx]xxx

updateThreadName();

LOG.debug("Starting quorum peer");

// Monitoring-related code omitted

try {

/*

* Main loop

*/

// Loop for as long as the peer is running

while (running) {

// Read the current state of this server

switch (getPeerState()) {

case LOOKING:

// Looking for a leader; a server is in this state right after startup

ServerMetrics.getMetrics().LOOKING_COUNT.add(1);

if (Boolean.getBoolean("readonlymode.enabled")) {

...

} else {

try {

reconfigFlagClear();

if (shuttingDownLE) {

shuttingDownLE = false;

startLeaderElection();

}

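/* makeLEStrategy() returns the current election algorithm, and lookForLeader() runs the election to decide who the leader is.

This enters FastLeaderElection#lookForLeader(), the ZAB election logic, analyzed in step 11 below.

Once this call returns, the election result is known. In my debug run server3 became the leader. */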

setCurrentVote(makeLEStrategy().lookForLeader());

} catch (Exception e) {

LOG.warn("Unexpected exception", e);

setPeerState(ServerState.LOOKING);

}

}

break;

case OBSERVING:

try {

LOG.info("OBSERVING");

setObserver(makeObserver(logFactory));

observer.observeLeader();

} catch (Exception e) {

LOG.warn("Unexpected exception", e);

} finally {

observer.shutdown();

setObserver(null);

updateServerState();

// Add delay jitter before we switch to LOOKING

// state to reduce the load of ObserverMaster

if (isRunning()) {

Observer.waitForObserverElectionDelay();

}

}

break;

case FOLLOWING:

try {

LOG.info("FOLLOWING");

setFollower(makeFollower(logFactory));

follower.followLeader();

} catch (Exception e) {

LOG.warn("Unexpected exception", e);

} finally {

follower.shutdown();

setFollower(null);

updateServerState();

}

break;

case LEADING:

LOG.info("LEADING");

try {

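// After exchanging votes with server1, server3 was elected leader, so the loop enters this branch and sets itself up as leader

setLeader(makeLeader(logFactory));

// server3 starts leading; analyzed in step 10 below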

leader.lead();

setLeader(null);

} catch (Exception e) {

LOG.warn("Unexpected exception", e);

} finally {

if (leader != null) {

leader.shutdown("Forcing shutdown");

setLeader(null);

}

updateServerState();

}

break;

}

}

} finally {

LOG.warn("QuorumPeer main thread exited");

MBeanRegistry instance = MBeanRegistry.getInstance();

instance.unregister(jmxQuorumBean);

instance.unregister(jmxLocalPeerBean);

for (RemotePeerBean remotePeerBean : jmxRemotePeerBean.values()) {

instance.unregister(remotePeerBean);

}

jmxQuorumBean = null;

jmxLocalPeerBean = null;

jmxRemotePeerBean = null;

}

}

6. Restoring ZooKeeper data from the snapshot and transaction log

Enter FileTxnSnapLog#restore().

public long restore(DataTree dt, Map<Long, Integer> sessions, PlayBackListener listener) throws IOException {

long snapLoadingStartTime = Time.currentElapsedTime();

// Restore from the snapshot files under data/version-2; see 6.1 below

long deserializeResult = snapLog.deserialize(dt, sessions);

ServerMetrics.getMetrics().STARTUP_SNAP_LOAD_TIME.add(Time.currentElapsedTime() - snapLoadingStartTime);

FileTxnLog txnLog = new FileTxnLog(dataDir);

boolean trustEmptyDB;

// The "initialize" marker file

File initFile = new File(dataDir.getParent(), "initialize");

if (Files.deleteIfExists(initFile.toPath())) {

LOG.info("Initialize file found, an empty database will not block voting participation");

trustEmptyDB = true;

} else {

trustEmptyDB = autoCreateDB;

}

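// The snapshot may be stale, so the latest data is brought into memory by replaying the transaction log

RestoreFinalizer finalizer = () -> {

// Restore data from the transaction log by reading the files with the log.xxxx prefix, e.g. log.100000001; see 6.2 below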

long highestZxid = fastForwardFromEdits(dt, sessions, listener);

// The snapshotZxidDigest will reset after replaying the txn of the

// zxid in the snapshotZxidDigest, if it's not reset to null after

// restoring, it means either there are not enough txns to cover that

// zxid or that txn is missing

DataTree.ZxidDigest snapshotZxidDigest = dt.getDigestFromLoadedSnapshot();

if (snapshotZxidDigest != null) {

LOG.warn(

"Highest txn zxid 0x{} is not covering the snapshot digest zxid 0x{}, "

+ "which might lead to inconsistent state",

Long.toHexString(highestZxid),

Long.toHexString(snapshotZxidDigest.getZxid()));

}

return highestZxid;

};

// No snapshot was found

if (-1L == deserializeResult) {

/* this means that we couldn't find any snapshot, so we need to

* initialize an empty database (reported in ZOOKEEPER-2325) */

if (txnLog.getLastLoggedZxid() != -1) {

// ZOOKEEPER-3056: provides an escape hatch for users upgrading

// from old versions of zookeeper (3.4.x, pre 3.5.3).

if (!trustEmptySnapshot) {

throw new IOException(EMPTY_SNAPSHOT_WARNING + "Something is broken!");

} else {

LOG.warn("{}This should only be allowed during upgrading.", EMPTY_SNAPSHOT_WARNING);

return finalizer.run();

}

}

if (trustEmptyDB) {

/* TODO: (br33d) we should either put a ConcurrentHashMap on restore()

* or use Map on save() */

// Write a new (empty) database snapshot file

save(dt, (ConcurrentHashMap<Long, Integer>) sessions, false);

/* return a zxid of 0, since we know the database is empty */

return 0L;

} else {

...

dt.lastProcessedZxid = -1L;

return -1L;

}

}

return finalizer.run();

}

6.1 Deserializing the snapshot file into the DataTree. Enter FileSnap#deserialize(); the code is as follows:

public long deserialize(DataTree dt, Map<Long, Integer> sessions) throws IOException {

// we run through 100 snapshots (not all of them)

// if we cannot get it running within 100 snapshots

// we should give up

// Load up to 100 files with the snapshot prefix from the zookeeper-branch-3.6\data\version-2 directory,

// e.g. snapshot.0, where 0 is the transaction id (snapZxid).

List<File> snapList = findNValidSnapshots(100);

if (snapList.size() == 0) {

return -1L;

}

File snap = null;

long snapZxid = -1;

boolean foundValid = false;

for (int i = 0, snapListSize = snapList.size(); i < snapListSize; i++) {

snap = snapList.get(i);

LOG.info("Reading snapshot {}", snap);

// Parse the zxid from the numeric suffix of the file name

snapZxid = Util.getZxidFromName(snap.getName(), SNAPSHOT_FILE_PREFIX);

try (CheckedInputStream snapIS = SnapStream.getInputStream(snap)) {

// Wrap the file's input stream in a binary archive reader

InputArchive ia = BinaryInputArchive.getArchive(snapIS);

deserialize(dt, sessions, ia);

SnapStream.checkSealIntegrity(snapIS, ia);

// Digest feature was added after the CRC to make it backward

// compatible, the older code can still read snapshots which

// includes digest.

//

// To check the intact, after adding digest we added another

// CRC check.

if (dt.deserializeZxidDigest(ia, snapZxid)) {

SnapStream.checkSealIntegrity(snapIS, ia);

}

foundValid = true;

break;

} catch (IOException e) {

LOG.warn("problem reading snap file {}", snap, e);

}

}

if (!foundValid) {

throw new IOException("Not able to find valid snapshots in " + snapDir);

}

dt.lastProcessedZxid = snapZxid;

lastSnapshotInfo = new SnapshotInfo(dt.lastProcessedZxid, snap.lastModified() / 1000);

// compare the digest if this is not a fuzzy snapshot, we want to compare

// and find inconsistent asap.

if (dt.getDigestFromLoadedSnapshot() != null) {

dt.compareSnapshotDigests(dt.lastProcessedZxid);

}

return dt.lastProcessedZxid;

}

Next, DataTree#deserialize parses the file into the DataTree. The code is as follows:

public void deserialize(InputArchive ia, String tag) throws IOException {

aclCache.deserialize(ia);

nodes.clear();

pTrie.clear();

nodeDataSize.set(0);

String path = ia.readString("path");

while (!"/".equals(path)) {

DataNode node = new DataNode();

ia.readRecord(node, "node");

// Put each parsed node into nodes, the DataTree's NodeHashMap

nodes.put(path, node);

synchronized (node) {

aclCache.addUsage(node.acl);

}

int lastSlash = path.lastIndexOf('/');

if (lastSlash == -1) {

root = node;

} else {

String parentPath = path.substring(0, lastSlash);

DataNode parent = nodes.get(parentPath);

if (parent == null) {

throw new IOException("Invalid Datatree, unable to find "

+ "parent "

+ parentPath

+ " of path "

+ path);

}

// Register this node as a child of its parent

parent.addChild(path.substring(lastSlash + 1));

long eowner = node.stat.getEphemeralOwner();

EphemeralType ephemeralType = EphemeralType.get(eowner);

if (ephemeralType == EphemeralType.CONTAINER) {

containers.add(path);

} else if (ephemeralType == EphemeralType.TTL) {

ttls.add(path);

} else if (eowner != 0) {

HashSet<String> list = ephemerals.get(eowner);

if (list == null) {

list = new HashSet<String>();

ephemerals.put(eowner, list);

}

list.add(path);

}

}

path = ia.readString("path");

}

// have counted digest for root node with "", ignore here to avoid

// counting twice for root node

nodes.putWithoutDigest("/", root);

nodeDataSize.set(approximateDataSize());

// we are done with deserializing the

// the datatree

// update the quotas - create path trie

// and also update the stat nodes

setupQuota();

aclCache.purgeUnused();

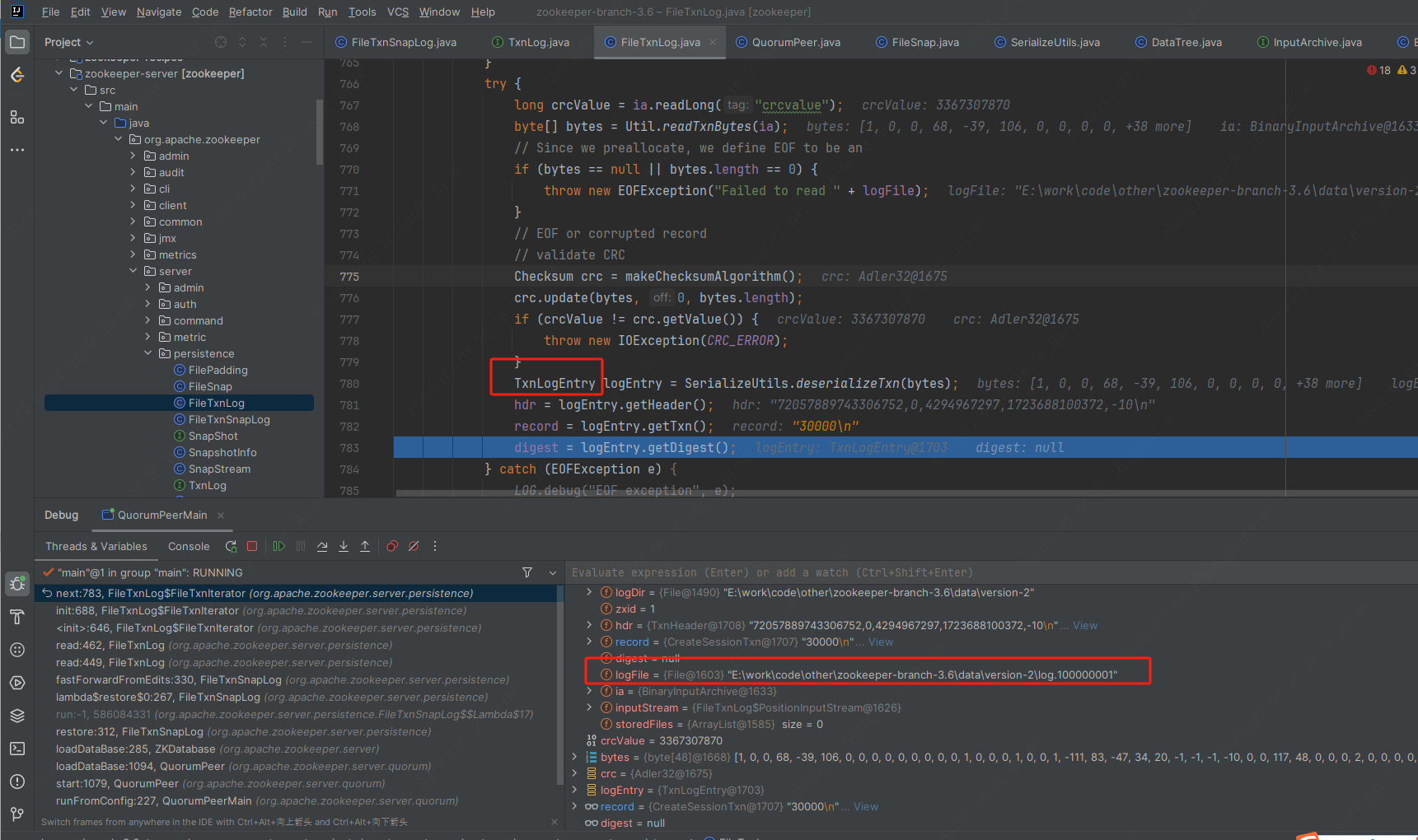

}6.2 从事务日志中恢复数据到内存.代码如下:

public long fastForwardFromEdits(

DataTree dt,

Map<Long, Integer> sessions,

PlayBackListener listener) throws IOException {

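// Open the transaction log file log.100000001 and read it through FileTxnLog.FileTxnIterator#ia. The call stack seen while debugging is shown in the figure below. One TxnLogEntry record is read at a time.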

TxnIterator itr = txnLog.read(dt.lastProcessedZxid + 1);

long highestZxid = dt.lastProcessedZxid;

TxnHeader hdr;

int txnLoaded = 0;

long startTime = Time.currentElapsedTime();

try {

// Iterate over every transaction in the log, applying each one with processTransaction.

while (true) {

// iterator points to

// the first valid txn when initialized

hdr = itr.getHeader();

if (hdr == null) {

//empty logs

return dt.lastProcessedZxid;

}

if (hdr.getZxid() < highestZxid && highestZxid != 0) {

LOG.error("{}(highestZxid) > {}(next log) for type {}", highestZxid, hdr.getZxid(), hdr.getType());

} else {

highestZxid = hdr.getZxid();

}

try {

// Apply one transaction.

processTransaction(hdr, dt, sessions, itr.getTxn());

dt.compareDigest(hdr, itr.getTxn(), itr.getDigest());

txnLoaded++;

} catch (KeeperException.NoNodeException e) {

throw new IOException("Failed to process transaction type: "

+ hdr.getType()

+ " error: "

+ e.getMessage(),

e);

}

listener.onTxnLoaded(hdr, itr.getTxn(), itr.getDigest());

if (!itr.next()) {

break;

}

}

} finally {

if (itr != null) {

itr.close();

}

}

long loadTime = Time.currentElapsedTime() - startTime;

LOG.info("{} txns loaded in {} ms", txnLoaded, loadTime);

ServerMetrics.getMetrics().STARTUP_TXNS_LOADED.add(txnLoaded);

ServerMetrics.getMetrics().STARTUP_TXNS_LOAD_TIME.add(loadTime);

return highestZxid;

}
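The restore path above boils down to two steps: load the newest valid snapshot, then replay only those logged transactions whose zxid is greater than the snapshot's. The following sketch illustrates the second step under heavy simplification. ReplaySketch, CreateTxn and the flat Map used as the tree are hypothetical stand-ins for FileTxnSnapLog, the transaction records and DataTree; only a "create" transaction is modeled.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical miniature of "load snapshot, then replay newer transactions". */
final class ReplaySketch {

    /** A logged transaction that creates one node; real logs carry many transaction types. */
    static final class CreateTxn {
        final long zxid;
        final String path;
        final String data;

        CreateTxn(long zxid, String path, String data) {
            this.zxid = zxid;
            this.path = path;
            this.data = data;
        }
    }

    /**
     * Applies every transaction with a zxid above the snapshot's zxid, in log order,
     * and returns the highest zxid seen (the snapshot zxid if the log has nothing newer).
     */
    static long fastForward(Map<String, String> tree, long snapshotZxid, List<CreateTxn> log) {
        long highestZxid = snapshotZxid;
        for (CreateTxn txn : log) {
            if (txn.zxid <= snapshotZxid) {
                continue; // already reflected in the snapshot
            }
            tree.put(txn.path, txn.data);
            highestZxid = Math.max(highestZxid, txn.zxid);
        }
        return highestZxid;
    }
}
```

The returned value plays the role of highestZxid in fastForwardFromEdits(): it is the zxid the server reports as its latest state when it later takes part in the election.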

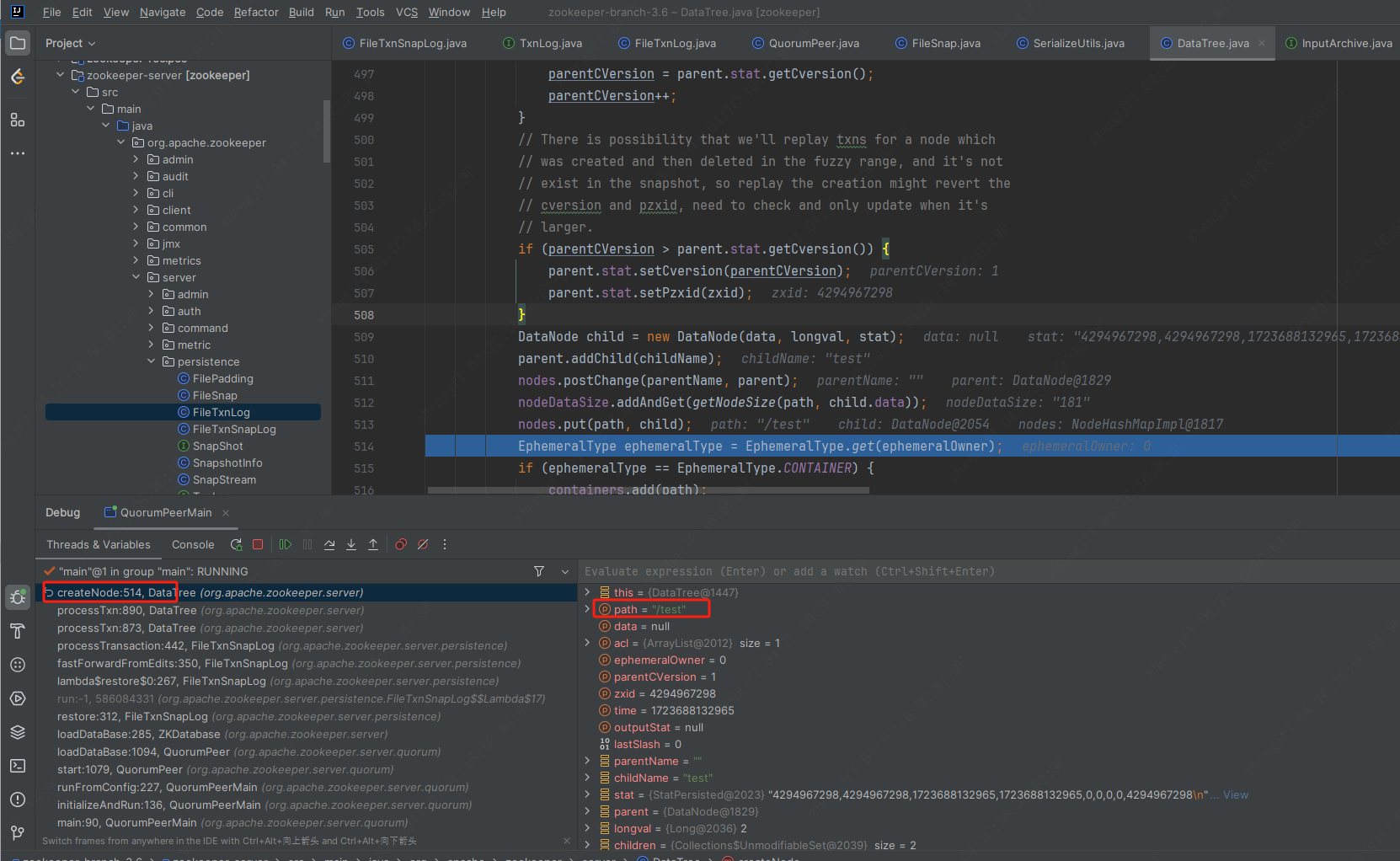
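The file names snapshot.0 and log.100000001 used above carry a zxid in hexadecimal. A zxid is a 64-bit value whose high 32 bits hold the epoch and whose low 32 bits hold a per-epoch counter, which is also why the leader code later calls ZxidUtils.makeZxid(epoch, 0) and masks with 0xffffffffL. The helper below is a hypothetical sketch of that layout, written for illustration rather than copied from ZxidUtils or Util.

```java
/** Hypothetical helper illustrating the zxid layout: epoch in the high 32 bits, counter in the low 32 bits. */
final class ZxidSketch {

    static long makeZxid(long epoch, long counter) {
        return (epoch << 32L) | (counter & 0xffffffffL);
    }

    static long epochOf(long zxid) {
        return zxid >> 32L;
    }

    static long counterOf(long zxid) {
        return zxid & 0xffffffffL;
    }

    /** Parses the hexadecimal suffix of a name such as "log.100000001"; returns -1 if the prefix does not match. */
    static long zxidFromName(String name, String prefix) {
        String[] parts = name.split("\\.");
        if (parts.length == 2 && parts[0].equals(prefix)) {
            return Long.parseLong(parts[1], 16);
        }
        return -1L;
    }
}
```

Read this way, log.100000001 starts with the first transaction (counter 1) of epoch 1, and snapshot.0 is a snapshot of the empty database.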

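Applying a transaction, for example creating the /test node, produces the call stack shown in the figure below:

7. Initializing the election threads

Enter QuorumPeer#createElectionAlgorithm(). The code is as follows: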

protected Election createElectionAlgorithm(int electionAlgorithm) {

Election le = null;

//TODO: use a factory rather than a switch

switch (electionAlgorithm) {

case 1:

throw new UnsupportedOperationException("Election Algorithm 1 is not supported.");

case 2:

throw new UnsupportedOperationException("Election Algorithm 2 is not supported.");

case 3:

// 3 is FastLeaderElection; the other two are no longer supported. Create the election connection manager.

QuorumCnxManager qcm = createCnxnManager();

QuorumCnxManager oldQcm = qcmRef.getAndSet(qcm);

if (oldQcm != null) {

LOG.warn("Clobbering already-set QuorumCnxManager (restarting leader election?)");

oldQcm.halt();

}

QuorumCnxManager.Listener listener = qcm.listener;

if (listener != null) {

// Start a new thread that listens for election messages from other servers

listener.start();

FastLeaderElection fle = new FastLeaderElection(this, qcm);

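// Start the messenger threads via FastLeaderElection.Messenger#start. Two threads are started. The first, Messenger.WorkerSender#run,

// polls the send queue FastLeaderElection#sendqueue and sends the data. The second, Messenger.WorkerReceiver#run, takes messages from the receive queue

// QuorumCnxManager#recvQueue and handles the election messages sent by other servers. See step 8 below.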

fle.start();

le = fle;

} else {

LOG.error("Null listener when initializing cnx manager");

}

break;

default:

assert false;

}

return le;

}

8. Handling votes from other servers

Enter the FastLeaderElection.Messenger.WorkerReceiver#run() method.
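The method below decodes each incoming message by reading fields from a ByteBuffer in a fixed order: an int state, then the proposed leader, the zxid and the election epoch as longs. A minimal round-trip sketch of just those four leading fields follows. NotificationLayout is a hypothetical class for illustration; the fields that the excerpt elides after electionEpoch are deliberately left out.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch of the leading fields of an election message, in the order run() reads them. */
final class NotificationLayout {
    int state;          // 0 = LOOKING, 1 = FOLLOWING, 2 = LEADING, 3 = OBSERVING
    long leader;        // proposed leader
    long zxid;
    long electionEpoch;

    static ByteBuffer encode(int state, long leader, long zxid, long electionEpoch) {
        ByteBuffer buffer = ByteBuffer.allocate(Integer.BYTES + 3 * Long.BYTES);
        buffer.putInt(state).putLong(leader).putLong(zxid).putLong(electionEpoch);
        buffer.flip(); // switch the buffer from writing to reading
        return buffer;
    }

    static NotificationLayout decode(ByteBuffer buffer) {
        NotificationLayout n = new NotificationLayout();
        n.state = buffer.getInt();
        n.leader = buffer.getLong();
        n.zxid = buffer.getLong();
        n.electionEpoch = buffer.getLong();
        return n;
    }
}
```

Because the fields carry no tags, sender and receiver must agree on this order exactly; reading them in a different order would silently swap, for example, the leader id and the zxid.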

public void run() {

Message response;

while (!stop) {

// Sleeps on receive

try {

// Take a message from the receive queue

response = manager.pollRecvQueue(3000, TimeUnit.MILLISECONDS);

if (response == null) {

continue;

}

...

// Container for the election information

Notification n = new Notification();

int rstate = response.buffer.getInt();

// Leader proposed by the sending server

long rleader = response.buffer.getLong();

// zxid carried in the sending server's vote

long rzxid = response.buffer.getLong();

// Election epoch of the sending server

long relectionEpoch = response.buffer.getLong();

long rpeerepoch;

int version = 0x0;

...

QuorumVerifier rqv = null;

...

/*

* If it is from a non-voting server (such as an observer or

* a non-voting follower), respond right away.

*/

if (!validVoter(response.sid)) {

// The sender is not a voting member (for example an observer), so reply with the current vote right away

Vote current = self.getCurrentVote();

QuorumVerifier qv = self.getQuorumVerifier();

ToSend notmsg = new ToSend(

ToSend.mType.notification,

current.getId(),

current.getZxid(),

logicalclock.get(),

self.getPeerState(),

response.sid,

current.getPeerEpoch(),

qv.toString().getBytes());

sendqueue.offer(notmsg);

} else {

...

QuorumPeer.ServerState ackstate = QuorumPeer.ServerState.LOOKING;

switch (rstate) {

case 0:

// With zk1 already running, starting the debugged server as server 3 reaches this branch: the state is LOOKING

ackstate = QuorumPeer.ServerState.LOOKING;

break;

case 1:

ackstate = QuorumPeer.ServerState.FOLLOWING;

break;

case 2:

ackstate = QuorumPeer.ServerState.LEADING;

break;

case 3:

ackstate = QuorumPeer.ServerState.OBSERVING;

break;

default:

continue;

}

// Fill in the election message; n is a Notification, which acts as a DTO

n.leader = rleader;

n.zxid = rzxid;

n.electionEpoch = relectionEpoch;

n.state = ackstate;

n.sid = response.sid;

n.peerEpoch = rpeerepoch;

n.version = version;

n.qv = rqv;

/*

* If this server is looking, then send proposed leader

*/

if (self.getPeerState() == QuorumPeer.ServerState.LOOKING) {

// Put the vote on the queue; the loop running under QuorumPeer#run() takes votes off it asynchronously and processes them

recvqueue.offer(n);

...

}

} else {

/*

* If this server is not looking, but the one that sent the ack

* is looking, then send back what it believes to be the leader.

*/

Vote current = self.getCurrentVote();

if (ackstate == QuorumPeer.ServerState.LOOKING) {

if (self.leader != null) {

if (leadingVoteSet != null) {

self.leader.setLeadingVoteSet(leadingVoteSet);

leadingVoteSet = null;

}

self.leader.reportLookingSid(response.sid);

}

QuorumVerifier qv = self.getQuorumVerifier();

ToSend notmsg = new ToSend(

ToSend.mType.notification,

current.getId(),

current.getZxid(),

current.getElectionEpoch(),

self.getPeerState(),

response.sid,

current.getPeerEpoch(),

qv.toString().getBytes());

sendqueue.offer(notmsg);

}

}

}

} catch (InterruptedException e) {

...

}

}

...

}

}

9. The Vote class

The code is as follows:

public class Vote {

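// Version

private final int version;

// Server id of the proposed leader

private final long id;

// Transaction id (zxid)

private final long zxid;

// Election epoch of this vote

private final long electionEpoch;

// Epoch of the proposed leader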

private final long peerEpoch;

}

10. Starting the Leader's lead phase

Called from step 5 above, execution enters Leader#lead().
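Several steps in the method below (waitForEpochAck, waitForNewLeaderAck, and the hasAllQuorums check in the tick loop) wait for or verify a quorum of servers. For the plain, unweighted case the rule is a strict majority of the voting members. The sketch below shows only that rule; MajoritySketch is a hypothetical class, and the real QuorumVerifier implementations are more general.

```java
import java.util.Set;

/** Hypothetical majority check for the unweighted case. */
final class MajoritySketch {

    /** True if the acknowledging server ids form a strict majority of the voting members. */
    static boolean containsQuorum(Set<Long> acks, int votingMembers) {
        return acks.size() > votingMembers / 2;
    }
}
```

With three configured servers, acknowledgements from two of them are enough, which is why the debug setup in this article can elect and run a leader with only server1 and server3 started.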

void lead() throws IOException, InterruptedException {

...

try {

// Set the ZAB state to DISCOVERY

self.setZabState(QuorumPeer.ZabState.DISCOVERY);

self.tick.set(0);

// Set the latest zxid and call ZooKeeperServer#takeSnapshot(boolean) to take a clean snapshot; no transactions are arriving at this point

zk.loadData();

leaderStateSummary = new StateSummary(self.getCurrentEpoch(), zk.getLastProcessedZxid());

// Start thread that waits for connection requests from

// new followers.

// Create the thread that waits for connection requests from followers; each connection is then served in LearnerHandler#run(). Every server other than the leader is a Learner.

cnxAcceptor = new LearnerCnxAcceptor();

cnxAcceptor.start();

// Determine the epoch to use for new proposals

long epoch = getEpochToPropose(self.getId(), self.getAcceptedEpoch());

zk.setZxid(ZxidUtils.makeZxid(epoch, 0));

synchronized (this) {

lastProposed = zk.getZxid();

}

newLeaderProposal.packet = new QuorumPacket(NEWLEADER, zk.getZxid(), null, null);

if ((newLeaderProposal.packet.getZxid() & 0xffffffffL) != 0) {

LOG.info("NEWLEADER proposal has Zxid of {}", Long.toHexString(newLeaderProposal.packet.getZxid()));

}

QuorumVerifier lastSeenQV = self.getLastSeenQuorumVerifier();

QuorumVerifier curQV = self.getQuorumVerifier();

if (curQV.getVersion() == 0 && curQV.getVersion() == lastSeenQV.getVersion()) {

try {

QuorumVerifier newQV = self.configFromString(curQV.toString());

newQV.setVersion(zk.getZxid());

self.setLastSeenQuorumVerifier(newQV, true);

} catch (Exception e) {

throw new IOException(e);

}

}

newLeaderProposal.addQuorumVerifier(self.getQuorumVerifier());

if (self.getLastSeenQuorumVerifier().getVersion() > self.getQuorumVerifier().getVersion()) {

newLeaderProposal.addQuorumVerifier(self.getLastSeenQuorumVerifier());

}

// We have to get at least a majority of servers in sync with

// us. We do this by waiting for the NEWLEADER packet to get

// acknowledged

waitForEpochAck(self.getId(), leaderStateSummary);

self.setCurrentEpoch(epoch);

self.setLeaderAddressAndId(self.getQuorumAddress(), self.getId());

self.setZabState(QuorumPeer.ZabState.SYNCHRONIZATION);

try {

waitForNewLeaderAck(self.getId(), zk.getZxid());

} catch (InterruptedException e) {

shutdown("Waiting for a quorum of followers, only synced with sids: [ "

+ newLeaderProposal.ackSetsToString()

+ " ]");

HashSet<Long> followerSet = new HashSet<Long>();

for (LearnerHandler f : getLearners()) {

if (self.getQuorumVerifier().getVotingMembers().containsKey(f.getSid())) {

followerSet.add(f.getSid());

}

}

boolean initTicksShouldBeIncreased = true;

for (Proposal.QuorumVerifierAcksetPair qvAckset : newLeaderProposal.qvAcksetPairs) {

if (!qvAckset.getQuorumVerifier().containsQuorum(followerSet)) {

initTicksShouldBeIncreased = false;

break;

}

}

if (initTicksShouldBeIncreased) {

LOG.warn("Enough followers present. Perhaps the initTicks need to be increased.");

}

return;

}

startZkServer();

String initialZxid = System.getProperty("zookeeper.testingonly.initialZxid");

if (initialZxid != null) {

long zxid = Long.parseLong(initialZxid);

zk.setZxid((zk.getZxid() & 0xffffffff00000000L) | zxid);

}

if (!System.getProperty("zookeeper.leaderServes", "yes").equals("no")) {

self.setZooKeeperServer(zk);

}

self.setZabState(QuorumPeer.ZabState.BROADCAST);

self.adminServer.setZooKeeperServer(zk);

boolean tickSkip = true;

// If not null then shutdown this leader

String shutdownMessage = null;

while (true) {

synchronized (this) {

long start = Time.currentElapsedTime();

long cur = start;

long end = start + self.tickTime / 2;

while (cur < end) {

wait(end - cur);

cur = Time.currentElapsedTime();

}

if (!tickSkip) {

self.tick.incrementAndGet();

}

// We use an instance of SyncedLearnerTracker to

// track synced learners to make sure we still have a

// quorum of current (and potentially next pending) view.

SyncedLearnerTracker syncedAckSet = new SyncedLearnerTracker();

syncedAckSet.addQuorumVerifier(self.getQuorumVerifier());

if (self.getLastSeenQuorumVerifier() != null

&& self.getLastSeenQuorumVerifier().getVersion() > self.getQuorumVerifier().getVersion()) {

syncedAckSet.addQuorumVerifier(self.getLastSeenQuorumVerifier());

}

syncedAckSet.addAck(self.getId());

for (LearnerHandler f : getLearners()) {

if (f.synced()) {

syncedAckSet.addAck(f.getSid());

}

}

// check leader running status

if (!this.isRunning()) {

// set shutdown flag

shutdownMessage = "Unexpected internal error";

break;

}

if (!tickSkip && !syncedAckSet.hasAllQuorums()) {

// Lost quorum of last committed and/or last proposed

// config, set shutdown flag

shutdownMessage = "Not sufficient followers synced, only synced with sids: [ "

+ syncedAckSet.ackSetsToString()

+ " ]";

break;

}

tickSkip = !tickSkip;

}

for (LearnerHandler f : getLearners()) {

f.ping();

}

}

if (shutdownMessage != null) {

shutdown(shutdownMessage);

// leader goes in looking state

}

} finally {

zk.unregisterJMX(this);

}

}

11. The ZAB election process

Called from step 5 above, execution enters the FastLeaderElection#lookForLeader() method.
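One detail of this method that is easy to miss is the poll timeout: each time no notification arrives, notTimeout is doubled and capped at maxNotificationInterval. A minimal sketch of that exponential backoff follows. NotificationBackoff is a hypothetical class, and the 200 ms and 60000 ms bounds are assumptions made for illustration; check FastLeaderElection for the actual constants.

```java
/** Hypothetical sketch of the poll-timeout backoff used while waiting for notifications. */
final class NotificationBackoff {
    // Assumed bounds in milliseconds, not confirmed by the excerpt in this article.
    static final int MIN_INTERVAL_MS = 200;
    static final int MAX_INTERVAL_MS = 60_000;

    /** Doubles the timeout after an empty poll, capped at the maximum. */
    static int next(int currentTimeoutMs) {
        return Math.min(currentTimeoutMs * 2, MAX_INTERVAL_MS);
    }
}
```

The cap keeps a server that is alone in the cluster from waiting ever longer between attempts, while the doubling avoids flooding peers with notifications during a long outage.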

public Vote lookForLeader() throws InterruptedException {

try {

// JMX bean used for monitoring statistics

self.jmxLeaderElectionBean = new LeaderElectionBean();

MBeanRegistry.getInstance().register(self.jmxLeaderElectionBean, self.jmxLocalPeerBean);

} catch (Exception e) {

...

}

self.start_fle = Time.currentElapsedTime();

try {

/*

* The votes from the current leader election are stored in recvset. In other words, a vote v is in recvset

* if v.electionEpoch == logicalclock. The current participant uses recvset to deduce on whether a majority

* of participants has voted for it.

*/

// As the comment above says, this holds the votes of the current election round

Map<Long, Vote> recvset = new HashMap<Long, Vote>();

/*

* The votes from previous leader elections, as well as the votes from the current leader election are

* stored in outofelection. Note that notifications in a LOOKING state are not stored in outofelection.

* Only FOLLOWING or LEADING notifications are stored in outofelection. The current participant could use

* outofelection to learn which participant is the leader if it arrives late (i.e., higher logicalclock than

* the electionEpoch of the received notifications) in a leader election.

*/

// Votes from servers that are already FOLLOWING or LEADING, from previous elections as well as the current one

Map<Long, Vote> outofelection = new HashMap<Long, Vote>();

int notTimeout = minNotificationInterval;

synchronized (this) {

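// Increment the logical clock (the election epoch)

logicalclock.incrementAndGet();

// Update this server's vote; initially it votes for itself: the proposed leader id (its own), the latest zxid, and the latest epoch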

updateProposal(getInitId(), getInitLastLoggedZxid(), getPeerEpoch());

}

LOG.info(

"New election. My id = {}, proposed zxid=0x{}",

self.getId(),

Long.toHexString(proposedZxid));

// Send this vote to the other servers; each server is called a peer

sendNotifications();

SyncedLearnerTracker voteSet;

/*

* Loop in which we exchange notifications until we find a leader

*/

// Loop, receiving votes from other servers and evaluating them, until a leader has been elected

while ((self.getPeerState() == ServerState.LOOKING) && (!stop)) {

/*

* Remove next notification from queue, times out after 2 times

* the termination time

*/

Notification n = recvqueue.poll(notTimeout, TimeUnit.MILLISECONDS);

/*

* Sends more notifications if haven't received enough.

* Otherwise processes new notification.

*/

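// Nothing arrived before the timeout. If our queued messages were delivered, resend our vote; otherwise we may be disconnected, so try to connect to all servers.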

if (n == null) {

if (manager.haveDelivered()) {

sendNotifications();

} else {

manager.connectAll();

}

/*

* Exponential backoff

*/

int tmpTimeOut = notTimeout * 2;

notTimeout = Math.min(tmpTimeOut, maxNotificationInterval);

LOG.info("Notification time out: {}", notTimeout);

} else if (validVoter(n.sid) && validVoter(n.leader)) {

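// The if condition above checks that both the sender's sid and the sid of the leader it proposes are in the server id list configured in zoo.cfg

// During debugging, server 1 was started first and the server under the debugger ran as server 3; server 2 was not started, so the message received here comes from server 1

// The message type (Notification) is shown in section 12 below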

/*

* Only proceed if the vote comes from a replica in the current or next

* voting view for a replica in the current or next voting view.

*/

switch (n.state) {

case LOOKING:

// The sender has just started and is in the election (LOOKING) state

if (getInitLastLoggedZxid() == -1) {

LOG.debug("Ignoring notification as our zxid is -1");

break;

}

if (n.zxid == -1) {

LOG.debug("Ignoring notification from member with -1 zxid {}", n.sid);

break;

}

// If notification > current, replace and send messages out

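// The sender's election epoch is higher than our logical clock, so we are behind: adopt its epoch, clear the votes collected so far, and compare its vote with our initial vote.

// This branch was taken during debugging: server 1 had election epoch 2 and we had 1.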

if (n.electionEpoch > logicalclock.get()) {

logicalclock.set(n.electionEpoch);

recvset.clear();

// Vote comparison logic of the ZAB election: a single boolean expression, analyzed in section 13 below. The first argument of updateProposal is the leader we now propose.

if (totalOrderPredicate(n.leader, n.zxid, n.peerEpoch, getInitId(), getInitLastLoggedZxid(), getPeerEpoch())) {

updateProposal(n.leader, n.zxid, n.peerEpoch);

} else {

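// During debugging, server 1 and server 3 had the same epoch and zxid, but server 3 has the larger sid and should become leader, so this branch is taken.

// Update our own vote. The first argument of updateProposal is the leader we propose.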

updateProposal(getInitId(), getInitLastLoggedZxid(), getPeerEpoch());

}

// Send our updated vote to the other servers.

sendNotifications();

} else if (n.electionEpoch < logicalclock.get()) {

// The sender's election epoch is lower than ours, so we ignore its vote

LOG.debug(

"Notification election epoch is smaller than logicalclock. n.electionEpoch = 0x{}, logicalclock=0x{}",

Long.toHexString(n.electionEpoch),

Long.toHexString(logicalclock.get()));

break;

} else if (totalOrderPredicate(n.leader, n.zxid, n.peerEpoch, proposedLeader, proposedZxid, proposedEpoch)) {

// Same election epoch, and the received vote wins the comparison: adopt it. The first argument of updateProposal is the leader we now propose.

updateProposal(n.leader, n.zxid, n.peerEpoch);

// Send our updated vote to the other servers.

sendNotifications();

}

LOG.debug(

"Adding vote: from={}, proposed leader={}, proposed zxid=0x{}, proposed election epoch=0x{}",

n.sid,

n.leader,

Long.toHexString(n.zxid),

Long.toHexString(n.electionEpoch));

// don't care about the version if it's in LOOKING state

// Record the sender's vote in our ballot box (recvset)

recvset.put(n.sid, new Vote(n.leader, n.zxid, n.electionEpoch, n.peerEpoch));

// Build a tracker of the votes in recvset that agree with our current proposal

voteSet = getVoteTracker(recvset, new Vote(proposedLeader, proposedZxid, logicalclock.get(), proposedEpoch));

// A quorum of voters (a majority by default) agrees with our current proposal

if (voteSet.hasAllQuorums()) {

// Verify if there is any change in the proposed leader

while ((n = recvqueue.poll(finalizeWait, TimeUnit.MILLISECONDS)) != null) {

if (totalOrderPredicate(n.leader, n.zxid, n.peerEpoch, proposedLeader, proposedZxid, proposedEpoch)) {

recvqueue.put(n);

break;

}

}

/*

* This predicate is true once we don't read any new

* relevant message from the reception queue

*/

if (n == null) {

// If proposedLeader is our own sid, switch to the LEADING state; otherwise switch to FOLLOWING (participant) or OBSERVING (observer).

// proposedLeader was set by the earlier updateProposal calls

setPeerState(proposedLeader, voteSet);

Vote endVote = new Vote(proposedLeader, proposedZxid, logicalclock.get(), proposedEpoch);

leaveInstance(endVote);

return endVote;

}

}

break;

case OBSERVING:

LOG.debug("Notification from observer: {}", n.sid);

break;

case FOLLOWING:

case LEADING:

/*

* Consider all notifications from the same epoch

* together.

*/

if (n.electionEpoch == logicalclock.get()) {

recvset.put(n.sid, new Vote(n.leader, n.zxid, n.electionEpoch, n.peerEpoch, n.state));

voteSet = getVoteTracker(recvset, new Vote(n.version, n.leader, n.zxid, n.electionEpoch, n.peerEpoch, n.state));

if (voteSet.hasAllQuorums() && checkLeader(recvset, n.leader, n.electionEpoch)) {

setPeerState(n.leader, voteSet);

Vote endVote = new Vote(n.leader, n.zxid, n.electionEpoch, n.peerEpoch);

leaveInstance(endVote);

return endVote;

}

}

/*

* Before joining an established ensemble, verify that

* a majority are following the same leader.

*

* Note that the outofelection map also stores votes from the current leader election.

* See ZOOKEEPER-1732 for more information.

*/

outofelection.put(n.sid, new Vote(n.version, n.leader, n.zxid, n.electionEpoch, n.peerEpoch, n.state));

voteSet = getVoteTracker(outofelection, new Vote(n.version, n.leader, n.zxid, n.electionEpoch, n.peerEpoch, n.state));

if (voteSet.hasAllQuorums() && checkLeader(outofelection, n.leader, n.electionEpoch)) {

synchronized (this) {

logicalclock.set(n.electionEpoch);

setPeerState(n.leader, voteSet);

}

Vote endVote = new Vote(n.leader, n.zxid, n.electionEpoch, n.peerEpoch);

leaveInstance(endVote);

return endVote;

}

break;

default:

LOG.warn("Notification state unrecoginized: {} (n.state), {}(n.sid)", n.state, n.sid);

break;

}

} else {

...

}

}

return null;

} finally {

...

}

}

12. The vote message type sent by other servers

public static class Notification {

/*

* Format version, introduced in 3.4.6

*/

public static final int CURRENTVERSION = 0x2;

// Fixed value 2 (CURRENTVERSION)

int version;

/*

* Proposed leader

*/

// Right after startup server 1 proposes itself, so this was 1 during debugging

long leader;

/*

* zxid of the proposed leader

*/

// Nodes had been created on server 1, so this holds a transaction id (zxid) value

long zxid;

/*

* Epoch

*/

// Election round number; server 1 sent 2

long electionEpoch;

/*

* current state of sender

*/

// Current state of the sender; server 1 also sent LOOKING (searching for a leader)

QuorumPeer.ServerState state;

/*

* Address of sender

*/

// sid of the sending server; 1 here

long sid;

QuorumVerifier qv;

/*

* epoch of the proposed leader

*/

// Epoch of the proposed leader; server 1 sent 1

long peerEpoch;

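}

13. How the ZAB election compares votes

Called from step 11 above.

Execution enters FastLeaderElection#totalOrderPredicate(), which returns true if the new vote is larger than the current one. During debugging, server 1 and server 3 had equal epochs and zxids, but server 3 (the one under the debugger) has the larger sid, so server 3 is chosen as leader.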

protected boolean totalOrderPredicate(long newId, long newZxid, long newEpoch, long curId, long curZxid, long curEpoch) {

if (self.getQuorumVerifier().getWeight(newId) == 0) {

return false;

}

/*

* We return true if one of the following three cases hold:

* 1- New epoch is higher

* 2- New epoch is the same as current epoch, but new zxid is higher

* 3- New epoch is the same as current epoch, new zxid is the same

* as current zxid, but server id is higher.

*/

/*

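(1) If the new vote's epoch is greater than the current vote's epoch, return true; otherwise go to (2)

(2) If the epochs are equal and the new vote's zxid is greater than the current vote's zxid, return true; otherwise go to (3)

(3) If the zxids are also equal and the new vote's sid is greater than the current vote's sid, return true. The sid is the number N in the server.N entries (server.1, server.2, ...) configured in zoo.cfg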

*/

return ((newEpoch > curEpoch)

|| ((newEpoch == curEpoch)

&& ((newZxid > curZxid)

|| ((newZxid == curZxid)

&& (newId > curId)))));

}
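The comparison above, together with the quorum check from step 11, can be tried out in isolation. The following is a minimal sketch, not ZooKeeper's own code: SketchVote, VoteOrderSketch, beats and hasMajority are hypothetical names, the weight check at the top of totalOrderPredicate is left out, and the majority count is a simplification of getVoteTracker(...).hasAllQuorums() that assumes a plain majority quorum of equally weighted voters.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified stand-in for a vote (hypothetical, not ZooKeeper's Vote class).
final class SketchVote {
    final long leaderId;   // sid of the proposed leader
    final long zxid;       // last transaction id seen by the proposed leader
    final long peerEpoch;  // epoch of the proposed leader

    SketchVote(long leaderId, long zxid, long peerEpoch) {
        this.leaderId = leaderId;
        this.zxid = zxid;
        this.peerEpoch = peerEpoch;
    }
}

final class VoteOrderSketch {
    // Same three-level ordering as totalOrderPredicate: epoch, then zxid, then sid.
    static boolean beats(SketchVote candidate, SketchVote current) {
        if (candidate.peerEpoch != current.peerEpoch) {
            return candidate.peerEpoch > current.peerEpoch;
        }
        if (candidate.zxid != current.zxid) {
            return candidate.zxid > current.zxid;
        }
        return candidate.leaderId > current.leaderId;
    }

    // Assumption: a quorum is a strict majority of ensembleSize equally weighted voters.
    static boolean hasMajority(Map<Long, SketchVote> received, long leaderId, int ensembleSize) {
        long supporters = received.values().stream()
                .filter(v -> v.leaderId == leaderId)
                .count();
        return supporters > ensembleSize / 2;
    }

    public static void main(String[] args) {
        // Values modeled on the debugging session: equal epoch and zxid, sids 1 and 3.
        SketchVote fromServer1 = new SketchVote(1L, 0x100000005L, 1L);
        SketchVote fromServer3 = new SketchVote(3L, 0x100000005L, 1L);
        System.out.println(beats(fromServer3, fromServer1));

        // Ballot box keyed by the sid of the voter, like recvset.
        Map<Long, SketchVote> received = new HashMap<>();
        received.put(1L, fromServer3); // server 1 switched its vote to server 3
        received.put(3L, fromServer3); // server 3 votes for itself
        System.out.println(hasMajority(received, 3L, 3));
    }
}
```

With equal epochs and zxids the sid decides, so the vote for server 3 beats the vote for server 1, and two matching votes out of three configured servers are enough for a majority even though server 2 was never started.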

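II. Standalone mode: server startup from the main method

The main logic is: parse the zoo.cfg file, initialize the data manager and the ServerCnxnFactory network manager, restore local data, and initialize the session manager, the JMX services, and so on.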
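As a rough illustration of the first of these steps, zoo.cfg is a plain key=value file, so java.util.Properties can read it. The sketch below is not QuorumPeerConfig's actual code: ZooCfgSketch and its methods are hypothetical names, and the sample paths and ports are made up for the example. Only the key names (tickTime, dataDir, clientPort, server.N) follow the zoo.cfg format.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

final class ZooCfgSketch {
    // Load zoo.cfg-style text into a Properties object.
    static Properties load(String text) {
        Properties cfg = new Properties();
        try {
            cfg.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return cfg;
    }

    // Collect the server.N entries, keyed by the numeric sid N.
    static Map<Long, String> servers(Properties cfg) {
        Map<Long, String> result = new TreeMap<>();
        String prefix = "server.";
        for (String key : cfg.stringPropertyNames()) {
            if (key.startsWith(prefix)) {
                long sid = Long.parseLong(key.substring(prefix.length()));
                result.put(sid, cfg.getProperty(key));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample values are assumptions for illustration only.
        String text = String.join("\n",
                "tickTime=2000",
                "dataDir=/tmp/zk3/data",
                "clientPort=2183",
                "server.1=127.0.0.1:2888:3888",
                "server.3=127.0.0.1:2890:3890");
        Properties cfg = load(text);
        System.out.println(cfg.getProperty("clientPort"));
        System.out.println(servers(cfg));
    }
}
```

The numeric suffix of each server.N key is the sid that the vote comparison in the election falls back to when epochs and zxids are equal.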

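(I) Call ManagedUtil.registerLog4jMBeans() to set up Log4j (it registers the Log4j MBeans with JMX).

(II) Call ServerConfig.parse() to parse the zoo.cfg configuration file. This enters QuorumPeerConfig.parse(), which loads zoo.cfg as a properties file and stores the configured values in fields of the QuorumPeerConfig class. Internally, parse() calls setupQuorumPeerConfig() to read the serverId (the number configured in the myid file) and to decide whether this machine is a PARTICIPANT or an OBSERVER; the default is PARTICIPANT.

(III) Call ZooKeeperServerMain.runFromConfig(ServerConfig).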

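1. Initialize the FileTxnSnapLog (transaction log and snapshot access) from the dataDir and dataLogDir directories.

2. Initialize the ZooKeeperServer.

3. Initialize and start the AdminServer management service on port 8080, served by a Jetty server. HTTP requests are handled by JettyAdminServer.CommandServlet.

4. Create the ServerCnxnFactory, ZooKeeper's connection pool. The implementation class is NIOServerCnxnFactory. Call NIOServerCnxnFactory.startup() to start the connection pool.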

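(1) Inside the connection pool, create the SelectorThread worker threads, which wait for incoming network connections. When a connection comes in, it is placed in NIOServerCnxnFactory.cnxnExpiryQueue, the queue used to track and expire connections.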

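(2) Create the ZKDatabase in-memory database, that is, the ZNode tree. Inside the ZKDatabase constructor, createDataTree() creates the node tree; each node is a DataNode. WatchManagerFactory.createWatchManager() creates the WatchManager objects that track watches for node changes and data changes, and these are assigned to fields of DataTree. ZKDatabase.loadDataBase() then enters FileTxnSnapLog.restore() to restore the node tree from disk. restore() iterates over the snapshot files in the data/version-2/ directory and reads them with the jute component through a BinaryInputArchive. FileSnap.deserialize() loads the result into the DataTree object, which mostly involves working through file offsets. FileTxnSnapLog.fastForwardFromEdits() then obtains the latest transaction id, and FileTxnSnapLog.save() saves the current snapshot.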

(3) Call ZooKeeperServer.createSessionTracker() to create the session manager.

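(4) Call ZooKeeperServer.setupRequestProcessors() to build the chain of responsibility for request handling. The chain consists of, in order, PrepRequestProcessor (pre-processes the request, for example a node creation), SyncRequestProcessor (persists the transaction log), and FinalRequestProcessor (updates the in-memory database and responds to the client). The chain is managed in a decentralized way: each processor stores a reference to the next one. Processors such as PrepRequestProcessor and SyncRequestProcessor work asynchronously: a request is placed on the processor's internal queue, and a dedicated thread takes requests off the queue one by one in a while loop and processes them.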

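(5) Call ZooKeeperServer.registerJMX() to register the JMX beans.

(6) Call ZooKeeperServer.registerMetrics() to register the metrics. DefaultMetricsProvider.DefaultMetricsContext.registerGauge() registers a function reference for each metric, using the :: method-reference syntax introduced in Java 8.

5. Create the ContainerManager.

6. Call shutdownLatch.await() to wait until ZooKeeper shuts down.

7. When started through QuorumPeerMain, the default role is LearnerType.PARTICIPANT. QuorumPeer.start() enters QuorumPeer.startLeaderElection() to begin the election; see "V. Leader election / ZAB" for the details. Execution then enters QuorumPeer.run(), which loops until the process exits and runs different logic depending on the peer's role.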
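The request-processor chain described in step (4) of item 4 can be sketched as follows. This is a minimal illustration under stated assumptions, not ZooKeeper's implementation: Stage, QueuedStage and ProcessorChainSketch are hypothetical names, requests are plain strings, the two queued stages stand in for the "prep" and "sync" roles, and the last stage runs synchronously on the caller's thread.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// One link of the chain (hypothetical interface, not ZooKeeper's RequestProcessor).
interface Stage {
    void process(String request);
}

// A stage with its own queue and worker thread; it holds a reference to the next stage.
final class QueuedStage extends Thread implements Stage {
    private static final String STOP = new String("stop"); // sentinel, compared by identity
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final String label;
    private final Stage next;

    QueuedStage(String label, Stage next) {
        this.label = label;
        this.next = next;
    }

    @Override
    public void process(String request) {
        queue.add(request); // returns immediately; the worker thread does the work
    }

    void shutdown() {
        queue.add(STOP);
    }

    @Override
    public void run() {
        try {
            while (true) {
                String request = queue.take();
                if (request == STOP) {
                    return;
                }
                next.process(request + " -> " + label);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

final class ProcessorChainSketch {
    // Push the requests through prep -> sync -> final and return what the final stage saw.
    static List<String> runChain(List<String> requests) {
        List<String> finished = Collections.synchronizedList(new ArrayList<>());
        Stage finalStage = finished::add;
        QueuedStage sync = new QueuedStage("sync", finalStage);
        QueuedStage prep = new QueuedStage("prep", sync);
        sync.start();
        prep.start();
        for (String request : requests) {
            prep.process(request);
        }
        try {
            prep.shutdown();
            prep.join();     // every request has now been handed to sync
            sync.shutdown();
            sync.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return finished;
    }

    public static void main(String[] args) {
        System.out.println(runChain(Arrays.asList("create /a", "delete /b")));
    }
}
```

Because each stage only knows its successor, a different chain can be assembled by wiring the same stages in another order, which is the point of the decentralized design described above.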
