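Reposted from: http://blog.csdn.net/hareric/article/details/48582211

This article introduces the naive Bayes classifier, a simple, effective, and widely used classification algorithm.

I. Basic principle of naive Bayes classification

Given the feature attributes of an item to be classified, compute the probability that the item belongs to each class, and take the class with the highest probability as the prediction.

II. Bayes' theorem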

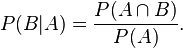

By the definition of conditional probability, the probability of event A given that event B has occurred is:

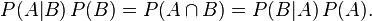

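Likewise, the probability of event B given that event A has occurred is:

$$P(B \mid A) = \frac{P(A \cap B)}{P(A)}$$

Rearranging and combining these two equations gives:

$$P(A \mid B)\,P(B) = P(A \cap B) = P(B \mid A)\,P(A)$$

This lemma is sometimes called the multiplication rule of probability. Dividing both sides by P(A), provided P(A) is nonzero, gives Bayes' theorem:

$$P(B \mid A) = \frac{P(A \mid B)\,P(B)}{P(A)}$$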
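The identities above can be checked numerically. The following is a minimal sketch using made-up event counts (the numbers and variable names are illustrative assumptions, not data from the article); exact fractions are used so the equalities hold without rounding.

```python
from fractions import Fraction

# Hypothetical counts over 100 trials (illustrative only).
n_total = 100
n_a = 40        # trials in which A occurred
n_b = 25        # trials in which B occurred
n_a_and_b = 10  # trials in which both A and B occurred

p_a = Fraction(n_a, n_total)
p_b = Fraction(n_b, n_total)
p_ab = Fraction(n_a_and_b, n_total)

p_a_given_b = p_ab / p_b  # P(A|B) = P(A ∩ B) / P(B)
p_b_given_a = p_ab / p_a  # P(B|A) = P(A ∩ B) / P(A)

# Multiplication rule: both products equal P(A ∩ B).
assert p_a_given_b * p_b == p_ab == p_b_given_a * p_a

# Bayes' theorem: P(B|A) = P(A|B) P(B) / P(A).
bayes_rhs = p_a_given_b * p_b / p_a
print(p_b_given_a, bayes_rhs)
```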

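III. Applying a variant of Bayes' theorem

Suppose an item x to be classified has n features $F_1, F_2, \dots, F_n$. The probability that it belongs to class c can be written as:

$$P(c \mid x) = P(c \mid F_1, F_2, \dots, F_n)$$

Applying Bayes' theorem, this becomes:

$$P(c \mid F_1, F_2, \dots, F_n) = \frac{P(F_1, F_2, \dots, F_n \mid c)\,P(c)}{P(F_1, F_2, \dots, F_n)}$$

The probability for every other class is divided by the same $P(F_1, F_2, \dots, F_n)$, so this denominator does not affect the final prediction and can be omitted, that is:

$$P(c \mid F_1, F_2, \dots, F_n) \propto P(F_1, F_2, \dots, F_n \mid c)\,P(c)$$

Under the "naive" assumption that the features are conditionally independent given the class, this becomes:

$$P(c \mid F_1, F_2, \dots, F_n) \propto P(F_1 \mid c)\,P(F_2 \mid c) \cdots P(F_n \mid c)\,P(c)$$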
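As a rough illustration of the final formula, the sketch below scores each class as the prior times the product of per-feature likelihoods and picks the largest. The function names and the probability values are hypothetical, chosen only to show the mechanics.

```python
from math import prod


def naive_bayes_score(prior, likelihoods):
    """Return P(c) * prod_i P(F_i | c), which is proportional to P(c | F_1..F_n)."""
    return prior * prod(likelihoods)


def predict(priors, likelihoods_by_class):
    """Score every class and return (best_class, all_scores)."""
    scores = {
        c: naive_bayes_score(priors[c], likelihoods_by_class[c])
        for c in priors
    }
    return max(scores, key=scores.get), scores


# Hypothetical inputs: two classes, two observed features.
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {"spam": [0.5, 0.8], "ham": [0.1, 0.5]}

label, scores = predict(priors, likelihoods)
print(label, scores)
```

Because the shared denominator is dropped, the scores do not sum to 1; only their ordering matters for the prediction.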

Example:

Below are the features and known classes of 10 items, used to train the classifier.

| Item \ Feature | F1 | F2 | F3 | F4 | F5 | F6 | Class |
|---|---|---|---|---|---|---|---|
| U1 | 1 | 0 | 1 | 0 | 1 | 0 | a |
| U2 | 1 | 1 | 0 | 1 | 0 | 0 | a |
| U3 | 1 | 0 | 0 | 1 | 1 | 1 | b |
| U4 | 0 | 0 | 1 | 0 | 1 | 1 | a |
| U5 | 1 | 0 | 0 | 1 | 1 | 0 | c |
| U6 | 1 | 1 | 1 | 1 | 1 | 0 | b |
| U7 | 1 | 1 | 1 | 0 | 1 | 1 | a |
| U8 | 1 | 0 | 1 | 0 | 1 | 0 | c |
| U9 | 0 | 1 | 0 | 0 | 1 | 0 | c |
| U10 | 0 | 1 | 1 | 0 | 1 | 0 | a |

From the table above we can compute P(F1|c) = 2/3 ≈ 0.67; P(F5|a) = 0.8; P(F3|b) = 0.5; P(c) = 0.3…
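These estimates are simple frequency counts over the training table. A minimal sketch of how they could be computed is shown below; the data is copied from the table, while the helper names `prior` and `conditional` are assumptions introduced for illustration.

```python
from fractions import Fraction

# Training table from the example: (F1..F6), class
TRAINING = [
    ((1, 0, 1, 0, 1, 0), "a"),  # U1
    ((1, 1, 0, 1, 0, 0), "a"),  # U2
    ((1, 0, 0, 1, 1, 1), "b"),  # U3
    ((0, 0, 1, 0, 1, 1), "a"),  # U4
    ((1, 0, 0, 1, 1, 0), "c"),  # U5
    ((1, 1, 1, 1, 1, 0), "b"),  # U6
    ((1, 1, 1, 0, 1, 1), "a"),  # U7
    ((1, 0, 1, 0, 1, 0), "c"),  # U8
    ((0, 1, 0, 0, 1, 0), "c"),  # U9
    ((0, 1, 1, 0, 1, 0), "a"),  # U10
]


def prior(cls):
    """P(cls): fraction of training items labeled cls."""
    n_cls = sum(1 for _, label in TRAINING if label == cls)
    return Fraction(n_cls, len(TRAINING))


def conditional(feature, cls):
    """P(F_feature | cls), with feature numbered from 1 as in the table."""
    rows = [features for features, label in TRAINING if label == cls]
    return Fraction(sum(row[feature - 1] for row in rows), len(rows))


print(conditional(1, "c"), conditional(5, "a"), conditional(3, "b"), prior("c"))
```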

Now the features of item U11 are given; predict which of the classes a, b, and c it should belong to.

| Item \ Feature | F1 | F2 | F3 | F4 | F5 | F6 | Class |
|---|---|---|---|---|---|---|---|
| U11 | 0 | 0 | 1 | 0 | 1 | 0 | unknown |

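From the table, item U11 has features F3 and F5.

Predicted probability of class a (up to the common denominator):

$$P(a \mid F_3, F_5) \propto P(F_3 \mid a)\,P(F_5 \mid a)\,P(a) = 0.8 \times 0.8 \times 0.5 = 0.32$$

Predicted probability of class b:

$$P(b \mid F_3, F_5) \propto P(F_3 \mid b)\,P(F_5 \mid b)\,P(b) = 0.5 \times 1 \times 0.2 = 0.1$$

Predicted probability of class c:

$$P(c \mid F_3, F_5) \propto P(F_3 \mid c)\,P(F_5 \mid c)\,P(c) = \tfrac{1}{3} \times 1 \times 0.3 = 0.1$$

Therefore the item is predicted to belong to class a.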
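The arithmetic for U11 can be reproduced with exact fractions. In this sketch the probabilities are the frequency estimates read off the training table (for example, 4 of the 5 class-a items have F3); the variable names are illustrative. The last step, dividing by the sum of the scores, is an optional extra that turns the scores into probabilities summing to 1.

```python
from fractions import Fraction as F

# Estimates read off the 10-item training table.
priors = {"a": F(5, 10), "b": F(2, 10), "c": F(3, 10)}
p_f3 = {"a": F(4, 5), "b": F(1, 2), "c": F(1, 3)}  # P(F3 | class)
p_f5 = {"a": F(4, 5), "b": F(1, 1), "c": F(1, 1)}  # P(F5 | class)

# U11 has features F3 and F5, so each score is P(F3|c) * P(F5|c) * P(c).
scores = {c: p_f3[c] * p_f5[c] * priors[c] for c in priors}
best = max(scores, key=scores.get)

# Optional: normalize so the three values sum to 1.
total = sum(scores.values())
posterior = {c: s / total for c, s in scores.items()}

print(best, scores, posterior)
```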

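IV. Applying Bayes classification to text classification

Example: classifying emails as spam or normal mail

Basic approach:

1. Treat every word in the email text as a feature; whether the word is present indicates whether the email has that feature.

2. Start from a set of emails that have already been labeled by hand as the training set, and build a complete vocabulary from them.

3. For each email, build a vector over the vocabulary: 1 if the email contains a word, 0 if it does not.

4. Build a vector table like the one below.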

| Email | ability | ad | after | basket | battle | behind | … | zebu | zest | zoom | Spam? |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Email01 | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | |
| Email02 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | |
| Email03 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 1 | |
| Email04 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | |
| Email05 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | |
| Email06 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |
| Email07 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | |
| Email08 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | |
| Email09 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | |
| Email10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | |
| … | | | | | | | | | | | |
| Email n | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | |

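5. For an email to be classified, use the table above together with Bayes' theorem to compute the probability that it is spam and the probability that it is normal mail, then predict accordingly.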
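Steps 1 to 4 can be sketched on a toy data set. The three tokenized "emails" and their labels below are invented for illustration; the point is only to show how a vocabulary and a 0/1 vector table arise from labeled text.

```python
# Hypothetical, already tokenized emails with labels (1 = spam, 0 = normal).
emails = [
    (["win", "cash", "now"], 1),
    (["meeting", "at", "noon"], 0),
    (["cash", "prize", "now"], 1),
]

# Step 2: the vocabulary is the sorted set of all words seen in training.
vocab = sorted({word for words, _ in emails for word in words})

# Steps 3 and 4: one 0/1 row per email, one column per vocabulary word.
matrix = [
    [1 if word in words else 0 for word in vocab]
    for words, _ in emails
]
labels = [label for _, label in emails]

print(vocab)
for row, label in zip(matrix, labels):
    print(row, label)
```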

Code example

1. First read the data set: the spam emails in the spam folder and the normal emails in the ham folder. Decode, lowercase, and tokenize each email, and finally combine them into a single list.

```python
import chardet
import nltk  # word_tokenize needs NLTK's Punkt tokenizer data to be installed


def loadDataSet():  # load spam and normal emails as the training set
    test_list = []
    for folder in ('spam', 'ham'):  # spam/1.txt ... spam/20.txt, then ham/1.txt ... ham/20.txt
        for i in range(1, 21):
            with open('%s/%s.txt' % (folder, i), 'rb') as file1:
                raw = file1.read()  # raw bytes, so the encoding can be detected
            code = chardet.detect(raw)['encoding'] or 'utf-8'  # fall back if detection fails
            text = raw.decode(code).lower()
            words = nltk.word_tokenize(text)
            test_list.append(words)
    classVec = [1 for i in range(20)]
    classVec.extend([0 for j in range(20)])  # 1 = spam, 0 = normal email
    return test_list, classVec
```

2. Use set operations to remove duplicate words from the lists and merge them into a single vocabulary.

```python
def createVocabList(dataSet):  # build the vocabulary
    vocabSet = set()
    for i in dataSet:
        vocabSet = vocabSet | set(i)  # set union removes duplicate words
    return list(vocabSet)
```

3. Create a vector for a single email.

```python
def createVector(unit, vocabList):
    vector = [0] * len(vocabList)
    for i in unit:
        if i in vocabList:
            vector[vocabList.index(i)] = 1  # mark the word as present
        else:
            print("the word %s is not in my vocabList" % i)
            continue
    return vector
```
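Looking up each word with `in` and `index` scans the whole vocabulary list, which can get slow for large vocabularies. One possible alternative, sketched here under the assumption that the vocabulary contains no duplicate words (as is the case for a list built from a set), is to build a word-to-position dictionary first. The name `createVectorFast` is hypothetical, and unlike the original this version skips unknown words silently instead of printing a message.

```python
def createVectorFast(unit, vocabList):
    """Build a 0/1 presence vector using a dictionary for O(1) word lookups."""
    position = {word: pos for pos, word in enumerate(vocabList)}
    vector = [0] * len(vocabList)
    for word in unit:
        pos = position.get(word)
        if pos is not None:  # words outside the vocabulary are ignored
            vector[pos] = 1
    return vector


example = createVectorFast(["b", "z", "a", "b"], ["a", "b", "c"])
print(example)
```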

4. Train the Bayes classifier on the emails that have already been labeled. After training we obtain the lists of per-word occurrence probabilities in spam and in normal emails (p1Vect, p0Vect), as well as the prior probabilities of spam and normal emails (p1, p0).

```python
def trainNBO(train_matrix, train_bool):
    train_num = len(train_matrix)
    words_num = len(train_matrix[0])
    sum_1 = [0 for i in range(words_num)]
    sum_0 = [0 for i in range(words_num)]
    _1_num = 0  # number of spam emails
    _0_num = 0  # number of non-spam emails
    for i in range(train_num):  # add up the training vectors of each class
        if train_bool[i] == 1:
            for j in range(words_num):
                sum_1[j] += train_matrix[i][j]
            _1_num = _1_num + 1
        if train_bool[i] == 0:
            for j in range(words_num):
                sum_0[j] += train_matrix[i][j]
            _0_num = _0_num + 1
    print("normal emails:", _0_num, " spam emails:", _1_num)
    # fraction of emails in each class that contain each word
    p1Vect = [(float(sum_1[j]) / _1_num) for j in range(words_num)]
    p0Vect = [(float(sum_0[j]) / _0_num) for j in range(words_num)]
    p1 = float(_1_num) / train_num
    p0 = float(_0_num) / train_num
    return p1Vect, p0Vect, p1, p0
```

5. Predict the class of an email that has been converted into a vector.

```python
def classing(p1Vect, p0Vect, P1, P0, unitVector):
    p1 = 1.
    p0 = 1.
    words_num = len(unitVector)
    for i in range(words_num):
        if unitVector[i] == 1:  # only words present in the email contribute
            p1 *= p1Vect[i]
            p0 *= p0Vect[i]
    p1 *= P1  # multiply by the class priors
    p0 *= P0
    if p1 > p0:
        return 1
    else:
        return 0
```
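With plain frequency estimates, a word that never appears in one class has probability 0 for that class, which drives the whole product to 0, and multiplying many small probabilities can underflow. A common variation, which is not part of the original code, is add-one (Laplace) smoothing combined with summing logarithms. The sketch below assumes both classes occur in the training data; the function names and the toy matrix are hypothetical.

```python
import math


def train_smoothed(train_matrix, train_bool):
    """Estimate smoothed per-word probabilities and class priors (1 = spam, 0 = normal)."""
    words_num = len(train_matrix[0])
    counts = {0: [0] * words_num, 1: [0] * words_num}
    totals = {0: 0, 1: 0}
    for row, label in zip(train_matrix, train_bool):
        totals[label] += 1
        for j, value in enumerate(row):
            counts[label][j] += value
    # Add-one smoothing for a present/absent feature: (count + 1) / (emails in class + 2)
    probs = {
        c: [(counts[c][j] + 1) / (totals[c] + 2) for j in range(words_num)]
        for c in (0, 1)
    }
    priors = {c: totals[c] / len(train_matrix) for c in (0, 1)}
    return probs, priors


def classify_log(probs, priors, unit_vector):
    """Compare log-scores; like classing, only words present in the email contribute."""
    scores = {}
    for c in (0, 1):
        score = math.log(priors[c])  # assumes each class has at least one training email
        for j, value in enumerate(unit_vector):
            if value == 1:
                score += math.log(probs[c][j])
        scores[c] = score
    return 1 if scores[1] > scores[0] else 0


# Toy data: columns are three vocabulary words; the first two rows are spam.
toy_matrix = [[1, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 1]]
toy_labels = [1, 1, 0, 0]
probs, priors = train_smoothed(toy_matrix, toy_labels)
print(classify_log(probs, priors, [1, 1, 0]), classify_log(probs, priors, [0, 0, 1]))
```

Taking logarithms does not change which class wins, because the logarithm is monotonically increasing.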

Complete code

```python
# coding=utf-8
__author__ = 'Eric Chan'

import chardet
import nltk  # word_tokenize needs NLTK's Punkt tokenizer data to be installed


def loadDataSet():  # load spam and normal emails as the training set
    test_list = []
    for folder in ('spam', 'ham'):  # spam/1.txt ... spam/20.txt, then ham/1.txt ... ham/20.txt
        for i in range(1, 21):
            with open('%s/%s.txt' % (folder, i), 'rb') as file1:
                raw = file1.read()  # raw bytes, so the encoding can be detected
            code = chardet.detect(raw)['encoding'] or 'utf-8'  # fall back if detection fails
            text = raw.decode(code).lower()
            words = nltk.word_tokenize(text)
            test_list.append(words)
    classVec = [1 for i in range(20)]
    classVec.extend([0 for j in range(20)])  # 1 = spam, 0 = normal email
    return test_list, classVec


def createVocabList(dataSet):  # build the vocabulary
    vocabSet = set()
    for i in dataSet:
        vocabSet = vocabSet | set(i)  # set union removes duplicate words
    return list(vocabSet)


def createVector(unit, vocabList):  # create a vector for each email
    vector = [0] * len(vocabList)
    for i in unit:
        if i in vocabList:
            vector[vocabList.index(i)] = 1
        else:
            print("the word %s is not in my vocabList" % i)
            continue
    return vector


def trainNBO(train_matrix, train_bool):
    train_num = len(train_matrix)
    words_num = len(train_matrix[0])
    sum_1 = [0 for i in range(words_num)]
    sum_0 = [0 for i in range(words_num)]
    _1_num = 0  # number of spam emails
    _0_num = 0  # number of non-spam emails
    for i in range(train_num):  # add up the training vectors of each class
        if train_bool[i] == 1:
            for j in range(words_num):
                sum_1[j] += train_matrix[i][j]
            _1_num = _1_num + 1
        if train_bool[i] == 0:
            for j in range(words_num):
                sum_0[j] += train_matrix[i][j]
            _0_num = _0_num + 1
    print("normal emails:", _0_num, " spam emails:", _1_num)
    p1Vect = [(float(sum_1[j]) / _1_num) for j in range(words_num)]
    p0Vect = [(float(sum_0[j]) / _0_num) for j in range(words_num)]
    p1 = float(_1_num) / train_num
    p0 = float(_0_num) / train_num
    return p1Vect, p0Vect, p1, p0


vocabList = []  # global variable holding the vocabulary


def createClassifier():
    mail_list, spam_bool = loadDataSet()
    global vocabList
    vocabList = createVocabList(mail_list)
    vocabList.sort()
    train_matrix = []  # vector matrix used to train the Bayes classifier
    for i in range(len(mail_list)):
        train_matrix.append(createVector(mail_list[i], vocabList))
    return trainNBO(train_matrix, spam_bool)


def classing(p1Vect, p0Vect, P1, P0, unitVector):
    p1 = 1.
    p0 = 1.
    words_num = len(unitVector)
    for i in range(words_num):
        if unitVector[i] == 1:  # only words present in the email contribute
            p1 *= p1Vect[i]
            p0 *= p0Vect[i]
    p1 *= P1
    p0 *= P0
    if p1 > p0:
        return 1
    else:
        return 0


# Emails 21-25 in each folder are held out as test data (spam first, then ham).
text_data = []
for folder in ('spam', 'ham'):
    for i in [21, 22, 23, 24, 25]:
        with open('%s/%s.txt' % (folder, i), 'rb') as file1:
            raw = file1.read()
        code = chardet.detect(raw)['encoding'] or 'utf-8'
        text = raw.decode(code).lower()
        words = nltk.word_tokenize(text)
        text_data.append(words)

p1_vect, p0_vect, p1, p0 = createClassifier()
print(vocabList)
for i in text_data:
    temp = createVector(i, vocabList)
    print(classing(p1_vect, p0_vect, p1, p0, temp), " ")
```

References:

1. Wikipedia

2. *Machine Learning in Action*
