Reference: http://www.cnblogs.com/daihengchen/p/5754383.html

KNN

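Reference: http://blog.csdn.net/zhyh1435589631/article/details/54236643

Code analysis of the core kNN implementation

2.2.3.1 Overview of the KNearestNeighbor class

- In essence this is a class made up of several member functions. Callers only need to call train and predict to get the predictions they want.

- compute_distances_two_loops, compute_distances_one_loop and compute_distances_no_loops each compute the distances between the data set to be predicted, X, and the stored training set, self.X_train; predict_labels then uses those distances to make the kNN prediction.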

    class KNearestNeighbor(object):
        """ a kNN classifier with L2 distance """

        def __init__(self):
            pass

        def train(self, X, y):
            ...

        def predict(self, X, k=1, num_loops=0):
            ...

        def compute_distances_two_loops(self, X):
            ...

        def compute_distances_one_loop(self, X):
            ...

        def compute_distances_no_loops(self, X):
            ...

        def getNormMatrix(self, x, lines_num):
            ...

        def predict_labels(self, dists, k=1):
            ...
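How the two entry points fit together can be sketched roughly as follows. This is a minimal sketch and not the class analyzed here: the name `TinyKNN` and its single `compute_distances` method are hypothetical, and it assumes that `train` only memorizes the data while `predict` computes distances and then votes. It also leaves out the `num_loops` switch.

```python
import numpy as np

class TinyKNN(object):
    """Hypothetical stand-in for KNearestNeighbor (illustration only)."""

    def train(self, X, y):
        # Assumption: kNN "training" just memorizes the data.
        self.X_train = np.asarray(X, dtype=float)
        self.y_train = np.asarray(y)

    def compute_distances(self, X):
        # Broadcast (num_test, 1, D) against (1, num_train, D).
        diff = X[:, None, :] - self.X_train[None, :, :]
        return np.sqrt(np.sum(diff ** 2, axis=2))

    def predict(self, X, k=1):
        dists = self.compute_distances(np.asarray(X, dtype=float))
        y_pred = np.zeros(dists.shape[0], dtype=self.y_train.dtype)
        for i in range(dists.shape[0]):
            nearest = np.argsort(dists[i])[:k]          # k closest training points
            y_pred[i] = np.argmax(np.bincount(self.y_train[nearest]))
        return y_pred

clf = TinyKNN()
clf.train([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]], [0, 0, 1, 1])
pred = clf.predict([[0.2, 0.1], [5.5, 5.2]], k=3)
print(pred)
```

The caller never touches the distance matrix directly, which is the point of exposing only train and predict.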


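2.2.3.2 compute_distances_two_loops

This function uses two nested for loops to compute the Euclidean (L2) distance between every test point and every training point:

$$d_2(I_1, I_2) = \sqrt{\sum_p \left(I_1^p - I_2^p\right)^2}$$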

    def compute_distances_two_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a nested loop over both the training data and the
        test data.

        Inputs:
        - X: A numpy array of shape (num_test, D) containing test data.

        Returns:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          is the Euclidean distance between the ith test point and the jth training
          point.
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            for j in range(num_train):
                # L2 distance between test point i and training point j.
                dists[i, j] = np.sqrt(np.sum(np.square(self.X_train[j, :] - X[i, :])))
        return dists
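A tiny case that can be checked by hand helps confirm the formula. The sketch below is a standalone version of the same nested loop; the function name `pairwise_l2_two_loops` and the 3-4-5 triangle data are assumptions chosen for illustration.

```python
import numpy as np

def pairwise_l2_two_loops(X_test, X_train):
    # Hypothetical free-function version of the nested-loop distance.
    num_test, num_train = X_test.shape[0], X_train.shape[0]
    dists = np.zeros((num_test, num_train))
    for i in range(num_test):
        for j in range(num_train):
            dists[i, j] = np.sqrt(np.sum((X_test[i] - X_train[j]) ** 2))
    return dists

X_train = np.array([[0.0, 0.0], [3.0, 4.0]])
X_test = np.array([[0.0, 0.0], [6.0, 8.0]])
dists = pairwise_l2_two_loops(X_test, X_train)
print(dists)  # rows are test points, columns are training points
```

The point (6, 8) lies at distance 10 from the origin and at distance 5 from (3, 4), so the second row should read 10 and 5.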


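2.2.3.3 compute_distances_one_loop

The code here is essentially the same as in the previous section. The difference is that each test point is subtracted from the whole training matrix at once (via broadcasting), and axis = 1 tells np.sum to sum along the feature dimension so that one distance is produced per training point.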

    def compute_distances_one_loop(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a single loop over the test data.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            # Broadcast X[i, :] against every row of X_train, then sum over features.
            dists[i, :] = np.sqrt(np.sum(np.square(self.X_train - X[i, :]), axis=1))
        return dists
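What axis = 1 does is easiest to see on a small array. The following is a minimal sketch with made-up values; the variable names are assumptions for illustration only.

```python
import numpy as np

X_train = np.array([[0.0, 0.0], [3.0, 4.0], [1.0, 1.0]])  # shape (3, 2)
x = np.array([1.0, 1.0])                                   # one test point, shape (2,)

diff = X_train - x                        # broadcasting: (3, 2) - (2,) -> (3, 2)
sq_sum_rows = np.sum(diff ** 2, axis=1)   # shape (3,): one value per training point
sq_sum_cols = np.sum(diff ** 2, axis=0)   # shape (2,): one value per feature (not what we want)
row_dists = np.sqrt(sq_sum_rows)

print(diff.shape, sq_sum_rows, sq_sum_cols)
```

Summing over axis 1 collapses the feature dimension, which is why the result can be assigned directly to one row of dists.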


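2.2.3.4 compute_distances_no_loops

- The formula in this part is short, but deriving it takes some linear algebra. Reference: http://blog.csdn.net/geekmanong/article/details/51524402

- Let the test matrix be $P$, of size $M \times D$, and the training matrix be $C$, of size $N \times D$.

- Let $P_i$ be the $i$-th row of $P$ and, likewise, $C_j$ the $j$-th row of $C$ (a prime denotes the transpose):

$$P_i = [P_{i1}\ P_{i2}\ \cdots\ P_{iD}], \qquad C_j = [C_{j1}\ C_{j2}\ \cdots\ C_{jD}]$$

- First compute the distance between $P_i$ and $C_j$:

$$
\begin{aligned}
d(P_i, C_j) &= \sqrt{(P_{i1}-C_{j1})^2 + (P_{i2}-C_{j2})^2 + \cdots + (P_{iD}-C_{jD})^2} \\
&= \sqrt{(P_{i1}^2 + P_{i2}^2 + \cdots + P_{iD}^2) + (C_{j1}^2 + C_{j2}^2 + \cdots + C_{jD}^2) - 2\,(P_{i1}C_{j1} + P_{i2}C_{j2} + \cdots + P_{iD}C_{jD})} \\
&= \sqrt{\|P_i\|^2 + \|C_j\|^2 - 2\,P_i C_j'}
\end{aligned}
$$

- Generalizing, the $i$-th row of the result matrix is (the square root is taken elementwise):

$$
\begin{aligned}
Res(i) &= \sqrt{\left(\|P_i\|^2\ \ \|P_i\|^2\ \cdots\ \|P_i\|^2\right) + \left(\|C_1\|^2\ \ \|C_2\|^2\ \cdots\ \|C_N\|^2\right) - 2\,P_i \left(C_1'\ \ C_2'\ \cdots\ C_N'\right)} \\
&= \sqrt{\left(\|P_i\|^2\ \ \|P_i\|^2\ \cdots\ \|P_i\|^2\right) + \left(\|C_1\|^2\ \ \|C_2\|^2\ \cdots\ \|C_N\|^2\right) - 2\,P_i C'}
\end{aligned}
$$

- The full result matrix is therefore:

$$
\begin{aligned}
Res &= \sqrt{
\begin{pmatrix}
\|P_1\|^2 & \|P_1\|^2 & \cdots & \|P_1\|^2 \\
\|P_2\|^2 & \|P_2\|^2 & \cdots & \|P_2\|^2 \\
\vdots & \vdots & \ddots & \vdots \\
\|P_M\|^2 & \|P_M\|^2 & \cdots & \|P_M\|^2
\end{pmatrix}
+
\begin{pmatrix}
\|C_1\|^2 & \|C_2\|^2 & \cdots & \|C_N\|^2 \\
\|C_1\|^2 & \|C_2\|^2 & \cdots & \|C_N\|^2 \\
\vdots & \vdots & \ddots & \vdots \\
\|C_1\|^2 & \|C_2\|^2 & \cdots & \|C_N\|^2
\end{pmatrix}
- 2\,P C'} \\
&= \sqrt{
\begin{pmatrix} \|P_1\|^2 \\ \|P_2\|^2 \\ \vdots \\ \|P_M\|^2 \end{pmatrix}_{M \times 1}
\begin{pmatrix} 1 & 1 & \cdots & 1 \end{pmatrix}_{1 \times N}
+
\begin{pmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{pmatrix}_{M \times 1}
\begin{pmatrix} \|C_1\|^2 & \|C_2\|^2 & \cdots & \|C_N\|^2 \end{pmatrix}_{1 \times N}
- 2\,P_{M \times D}\, C'_{N \times D}}
\end{aligned}
$$

- Translated into Python, this becomes: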

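    def compute_distances_no_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using no explicit loops.

        Input / Output: Same as compute_distances_two_loops
        """
        # Squared norms of the test points as a column vector, shape (num_test, 1).
        sq1 = np.sum(np.square(X), axis=1, keepdims=True)
        # Squared norms of the training points, shape (num_train,).
        sq2 = np.sum(np.square(self.X_train), axis=1)
        # Cross term P C', shape (num_test, num_train).
        s = np.dot(X, self.X_train.T)
        # Broadcasting (num_test, 1) + (num_train,) gives (num_test, num_train).
        dists = np.sqrt(sq1 + sq2 - 2 * s)
        # Equivalent form using the explicit matrices from the derivation:
        # num_test, num_train = X.shape[0], self.X_train.shape[0]
        # dists = np.sqrt(self.getNormMatrix(X, num_train).T + self.getNormMatrix(self.X_train, num_test) - 2 * np.dot(X, self.X_train.T))
        return dists

    def getNormMatrix(self, x, lines_num):
        """
        Get a lines_num x size(x, 1) matrix
        """
        # Each of the lines_num rows holds the squared row norms of x.
        return np.ones((lines_num, 1)) * np.sum(np.square(x), axis=1)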
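The identity behind the no-loop version can be checked numerically against a direct computation. This is a minimal sketch on random data; the array names follow the derivation ($P$, $C$), and the `np.maximum` clamp is an added assumption to guard against tiny negative values caused by floating-point rounding, which the listing above does not include.

```python
import numpy as np

rng = np.random.RandomState(0)
P = rng.randn(4, 3)   # M = 4 test points, D = 3 features
C = rng.randn(5, 3)   # N = 5 training points

p_sq = np.sum(P ** 2, axis=1, keepdims=True)   # shape (M, 1)
c_sq = np.sum(C ** 2, axis=1)                   # shape (N,)
sq_dists = p_sq + c_sq - 2.0 * P.dot(C.T)       # broadcasts to (M, N)
sq_dists = np.maximum(sq_dists, 0.0)            # assumption: clamp rounding noise
fast = np.sqrt(sq_dists)

# Direct computation for comparison: explicit pairwise differences.
slow = np.sqrt(np.sum((P[:, None, :] - C[None, :, :]) ** 2, axis=2))

max_abs_diff = np.max(np.abs(fast - slow))
print(fast.shape, max_abs_diff)
```

The direct version materializes an (M, N, D) array, while the expanded form only needs (M, N) plus one matrix product, which is why it scales better on large data sets.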


2.2.3.5 predict_labels

Based on the computed distances, pick the k nearest training points as "voters" and hold a majority vote over their labels.

    def predict_labels(self, dists, k=1):
        """
        Given a matrix of distances between test points and training points,
        predict a label for each test point.

        Inputs:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          gives the distance between the ith test point and the jth training point.

        Returns:
        - y: A numpy array of shape (num_test,) containing predicted labels for the
          test data, where y[i] is the predicted label for the test point X[i].
        """
        num_test = dists.shape[0]
        y_pred = np.zeros(num_test)
        for i in range(num_test):
            # Training indices sorted by increasing distance to test point i.
            kids = np.argsort(dists[i])
            # Labels of the k nearest neighbors, flattened to 1-D.
            closest_y = np.ravel(self.y_train[kids[:k]])
            # Majority vote: the most frequent label wins, ties go to the smaller
            # label. Assumes labels are non-negative integers.
            y_pred[i] = np.argmax(np.bincount(closest_y))
        return y_pred
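The voting step can be traced on a small hand-made distance matrix. This is a minimal sketch with made-up distances and labels; it assumes non-negative integer labels, which `np.bincount` requires, and it shows how `np.argmax` resolves ties.

```python
import numpy as np

y_train = np.array([2, 0, 2, 1, 0])            # labels of 5 training points
dists = np.array([[0.9, 0.1, 0.3, 0.8, 0.2],   # distances for test point 0
                  [0.2, 0.9, 0.1, 0.3, 0.8]])  # distances for test point 1
k = 3

y_pred = np.zeros(dists.shape[0], dtype=y_train.dtype)
for i in range(dists.shape[0]):
    nearest = np.argsort(dists[i])[:k]      # indices of the k smallest distances
    votes = np.bincount(y_train[nearest])   # votes[c] = number of neighbors with label c
    y_pred[i] = np.argmax(votes)            # most frequent label

# With one vote each for labels 0 and 1, argmax returns the smaller label.
tie_winner = int(np.argmax(np.bincount(np.array([1, 0]))))
print(y_pred, tie_winner)
```

For test point 0 the three nearest neighbors carry labels 0, 0 and 2, so label 0 wins; for test point 1 they carry 2, 2 and 1, so label 2 wins.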

2.2.4 Cross-validation code analysis

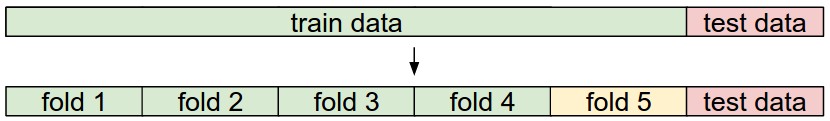

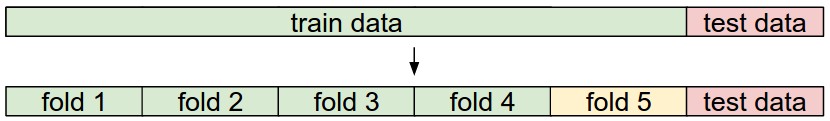

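- Cross-validation splits the training data into several groups (folds) and builds multiple training/validation splits from them, in order to select good hyperparameters.

- As the figure shows, the data can be divided into 5 folds: each of fold 1, 2, ..., 5 is used in turn as the validation set, the remaining folds serve as the training set, and the resulting accuracies are used to choose the hyperparameters.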
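The fold rotation described above can be sketched on toy data. The arrays below are made up for illustration (10 samples, 2 features); only `np.array_split` and `np.concatenate` are used, and the variable names are assumptions.

```python
import numpy as np

X = np.arange(20).reshape(10, 2)   # 10 toy samples with 2 features each
y = np.arange(10) % 2              # toy labels
num_folds = 5

X_folds = np.array_split(X, num_folds)   # list of 5 arrays, each (2, 2)
y_folds = np.array_split(y, num_folds)

splits = []
for i in range(num_folds):
    # Fold i is held out for validation.
    X_val, y_val = X_folds[i], y_folds[i]
    # The remaining folds are stacked back together for training.
    X_tr = np.concatenate(X_folds[:i] + X_folds[i + 1:])
    y_tr = np.concatenate(y_folds[:i] + y_folds[i + 1:])
    splits.append((X_tr.shape, X_val.shape))

print(splits)
```

Every sample is used for validation exactly once and for training in the other four rounds.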

2.2.4.1 Selecting among different values of k

    # Assumes numpy is imported as np, that X_train (already flattened to
    # (num_train, D)) and y_train are loaded, and that classifier is a
    # KNearestNeighbor instance created earlier.
    num_folds = 5
    k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

    X_train_folds = np.array_split(X_train, num_folds)
    y_train_folds = np.array_split(y_train, num_folds)

    k_to_accuracies = {}
    for k in k_choices:
        k_to_accuracies[k] = np.zeros(num_folds)
        for i in range(num_folds):
            # Fold i is the validation set; the other folds form the training set.
            Xtr = np.concatenate(X_train_folds[:i] + X_train_folds[i+1:])
            ytr = np.concatenate(y_train_folds[:i] + y_train_folds[i+1:])
            Xte = X_train_folds[i]
            yte = y_train_folds[i]

            classifier.train(Xtr, ytr)
            yte_pred = classifier.predict(Xte, k)

            # Fraction of validation points whose label was predicted correctly.
            num_correct = np.sum(yte_pred == yte)
            accuracy = float(num_correct) / len(yte)
            k_to_accuracies[k][i] = accuracy

    for k in sorted(k_to_accuracies):
        for accuracy in k_to_accuracies[k]:
            print('k = %d, accuracy = %f' % (k, accuracy))
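Once the per-fold accuracies are collected, one common way to pick k is to compare the mean accuracy across folds. The sketch below assumes that selection rule, and the numbers in it are made up purely for illustration; they are not results from this walkthrough.

```python
import numpy as np

# Made-up per-fold accuracies for illustration only (NOT measured results).
k_to_accuracies = {
    1: np.array([0.26, 0.25, 0.27]),
    5: np.array([0.28, 0.29, 0.27]),
    10: np.array([0.27, 0.28, 0.26]),
}

# Average over folds, then take the k with the highest mean accuracy.
mean_acc = {k: float(np.mean(v)) for k, v in k_to_accuracies.items()}
best_k = max(mean_acc, key=mean_acc.get)

for k in sorted(mean_acc):
    print('k = %d, mean accuracy = %f' % (k, mean_acc[k]))
print('best k = %d' % best_k)
```

Looking at the spread across folds as well as the mean is also reasonable, since a k with a slightly lower mean but much smaller variance may be the safer choice.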
