给自己一个动力去看英语论文,每天翻译一节,纯属自己翻译,小白一只,如果您能提出建议或者翻译修改,将非常感谢,首先谢谢!

How Generative Adversarial Networks and Their Variants Work: An Overview

Yongjun Hong, Uiwon Hwang, Jaeyoon Yoo and Sungroh Yoon Department of Electrical and Computer Engineering Seoul National University, Seoul, Korea {yjhong, uiwon.hwang, yjy765, sryoon}@snu.ac.kr

Abstract GenerativeAdversarialNetworks(GAN)havereceivedwideattentioninthemachinelearning field for their potential to learn high-dimensional, complex real data distribution. Specifically, they do not rely on any assumptions about the distribution and can generate real-like samplesfromlatentspaceinasimplemanner. ThispowerfulpropertyleadsGANtobeapplied to various applications such as image synthesis, image attribute editing, image translation, domain adaptation and other academic fields. In this paper, we aim to discuss the details of GAN for those readers who are familiar with, but do not comprehend GAN deeply or who wish to view GAN from various perspectives. In addition, we explain how GAN operates and the fundamental meaning of various objective functions that have been suggested recently. We then focus on how the GAN can be combined with an autoencoder framework. Finally, we enumerate the GAN variants that are applied to various tasks and other fields for those who are interested in exploiting GAN for their research.

1 Introduction

Recently, in the machine learning field, generative models have become more important and popular because of their applicability in various fields. Their capability to represent complex and high-dimensional data can be utilized in treating images [2, 12, 57, 65, 127, 133, 145], videos [122, 125, 126], music generation [41, 66, 141], natural languages [48, 73] and other academic domains such as medical images [16, 77, 136] and security [109, 124]. Specifically, generative models are highly useful for image to image translation (See Figure 1) [9, 57, 137, 145] which transfers images to another specific domain, image super-resolution [65], changing some features of an object in an image [3, 37, 75, 94, 144] and predicting the next frames of a video [122, 125, 126]. In addition, generative models can be the solution for various problems in the machine learning field such as semisupervised learning [21, 67, 104, 115], which tries to address the lack of labeled data, and domain adaptation [2, 12, 47, 108, 111, 140], which leverages known knowledge for some tasks in other domains where only little information is given. Formally, a generative model learns to model a real data probability distribution pdata(x) where the data x exists in the d-dimensional real space Rd, and most generative models, including autoregressive models [92, 105], are based on the maximum likelihood principle with a model parametrized by parameters θ. With independent and identically distributed (i.i.d.) training samples xi where i ∈ {1,2,···n}, the likelihood is defined as the product of probabilities that the model gives to each training data:Qn i=1 pθ(xi) where pθ(x) is the probability that the model assigns to x. The maximum likelihood principle trains the model to maximize the likelihood that the model follows the real data distribution. From this point of view, we need to assume a certain form of pθ(x) explicitly to estimate the likelihood of the given data and retrieve the samples from the learned model after the training. In this way, some approaches [91, 92, 105] successfully learned the generative model in various fields includingspeechsynthesis. However, whiletheexplicitlydefinedprobabilitydensityfunctionbrings about computational tractability, it may fail to represent the complexity of real data distribution and learn the high-dimensional data distributions [87]. Generative Adversarial Networks (GANs) [36] were proposed to solve the disadvantages of other generativemodels. Insteadofmaximizingthelikelihood,GANintroducestheconceptofadversarial

1

arXiv:1711.05914v9 [cs.LG] 13 Nov 2018

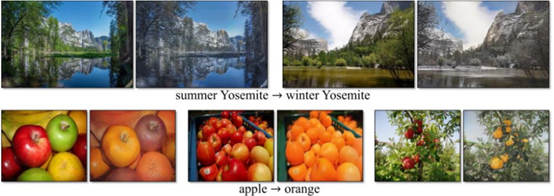

Figure 1: Examples of unpaired image to image translation from CycleGAN [145]. CycleGAN use a GAN concept, converting contents of the input image to the desired output image. There are many creative applications using a GAN, including image to image translation, and these will be introduced in Section 4. Images from CycleGAN [145].

learning between the generator and the discriminator. The generator and the discriminator act as adversaries with respect to each other to produce real-like samples. The generator is a continuous, differentiable transformation function mapping a prior distribution pz from the latent space Z into the data space X that tries to fool the discriminator. The discriminator distinguishes its input whether it comes from the real data distribution or the generator. The basic intuition behind the adversarial learning is that as the generator tries to deceive the discriminator which also evolves against the generator, the generator improves. This adversarial process gives GAN notable advantages over the other generative models. GAN avoids defining pθ(x) explicitly, and instead trains the generator using a binary classification of the discriminator. Thus, the generator does not need to follow a certain form of pθ(x). In addition, since the generator is a simple, usually deterministic feed-forward network from Z to X, GAN can sample the generated data in a simple manner unlike other models using the Markov chain [113] in which the sampling is computationally slow and not accurate. Furthermore, GAN can parallelize the generation, which is not possible for other models such as PixelCNN [105], PixelRNN [92], and WaveNet [91] due to their autoregressive nature. For these advantages, GAN has been gaining considerable attention, and the desire to use GAN in many fields is growing. In this study, we explain GAN [35, 36] in detail which generates sharper and better real-like samples than the other generative models by adopting two components, the generator and the discriminator. We look into how GAN works theoretically and how GAN has been applied to various applications.

1.1 Paper Organization Table 1 shows GAN and GAN variants which will be discussed in Section 2 and 3. In Section 2, we first present a standard objective function of a GAN and describe how its components work. After that, we present various objective functions proposed recently, focusing on their similarities in terms of the feature matching problem. We then explain the architecture of GAN extending the discussion to dominant obstacles caused by optimizing a minimax problem, especially a mode collapse, and how to address those issues. In Section 3, we discuss how GAN can be exploited to learn the latent space where a compressed and low dimensional representation of data lies. In particular, we emphasize how the GAN extracts the latent space from the data space with autoencoder frameworks. Section 4 provides several extensions of the GAN applied to other domains and various topics as shown in Table 2. In Section 5, we observe a macroscopic view of GAN, especially why GAN is advantageous over other generative models. Finally, Section 6 concludes the paper.

生成性对抗网络及其变体如何工作:综述

生成性对抗网络(Generative-departarial Networks,简称GAN)因其具有学习高维、复杂的真实数据分布的潜力而受到机器学习领域的广泛关注。具体来说,它们不依赖于任何关于分布的假设,并且拥有以简单的方式从潜在空间生成真实的样本这种强大的特性使得GAN可以应用于图像合成、图像属性编辑、图像翻译、做主编等学术领域。本文旨为那些熟悉但对GAN理解不深或希望从多个角度来看待甘语的读者研究GAN的细节,另外,本文还解释了GAN的工作原理以及最近提出的各种目标函数的基本含义,注重如何将GAN与自动编码器框架相结合。最后,对那些有兴趣用GAN来进行研究的人,本文列举出GAN应用于各种工作和其他领域。

1 引言

近年来,在机器学习中,生成模型因其在不同领域的适用性而变得越来越重要和流行。它们表示复杂和高维数据的能力可用于处理图像[2、12、57、65、127、133、145]、视频[122、125、126]、音乐生成[41、66、141]、自然语言[48、73]和其他学术领域,例如医学图像[16、77、136]和安全性[109、124]具体来说,生成模型对于图像到图像的转换非常有用(参见图1)[9,57,137,145],它将图像传输到另一个特定的域,图像超分辨率[65],更改图像中对象的某些特征[3,37,75,94,144],并预测视频的下一帧[122,125,126]此外,生成模型可以解决机器学习领域中的各种问题,例如半监督学习[21,67,104,115],它试图解决标记数据的缺乏和域适应[2,12,47,108,111,140]它利用已知的知识来完成其他领域的一些任务,而这些领域只提供很少的信息形式。

生成模型学习建模真实数据概率分布Pdata(x),其中数据X存在于D维实空间R^d中,并且大多数生成模型,包括自回归模型〔92, 105〕,是基于带有参数θ为参数化模型的最大似然原理。具有独立和网络分布(i.i.d)训练样本x^i,其中i∈ {1,2,…n},我们将似然函数定义为是模型对每个训练数据给出的概率的结果:∏_i^n p_Θ (x^i),其中p_Θ (x)是模型分配给x的概率。最大似然函数原则训练模型遵守真实样本分布达到最大似然化。

从这个角度出发,我们需要假设p_Θ (x)的某种形式,明确地估计给定数据的可能性,并在训练后从学习模型中找回样本。通过这种方式,一些方法[91,92,105]在各种领域成功地学习了生成模型,其中包括语音合成。然而,当概率密度函数被定义带来计算的可追踪性时,它可能无法表示真实数据分布的复杂性和学习高维数据分布〔87〕。

生成性对抗网络(GANs)[36]是为了解决其他生成模型的不足而提出的,代替最大化可能性,GAN引入对抗的概念。

图1:CycleGAN的未配对图像到图像转换示例[145]。CycleGAN使用GAN概念,将输入图像的内容转换为所需的输出图像。有许多创新应用程序使用了GAN,包括图像到图像的转换,这些将在第4节中介绍。图像引用CycleGAN [145]。

在生成器和鉴别器之间不断学习。产生器和鉴别器互为羁绊,产生真实的相似样本。生成器是一个连续的、不同的变换函数,它提供先前分配的p_z从潜在空间Z映射到数据空间X,试图欺骗鉴别器的。鉴别器区分其输入是来自真实的数据分配还是来自生成器。对抗性学习背后的基本直觉是当生成器试图欺骗鉴别器时,鉴别器也会演变成与生成器对抗,从而使生成器进化。这种对抗过程使GAN在其他生成模型中具有显著的优势。

GAN避免显式地定义p_Θ (x),而是通过二进制分类来训练生成器。因此,生成器不需要遵循p_Θ (x)确定形式。同样,由于生成器是一个从Z到X的简单的、通常是不可改变的确定性前馈网络,GAN以一种简单的方式对生成的数据进行采样,这不同于使用Markov链的其他模型,在Markov链中,采样在计算上缓慢且不准确。此外,GAN可以并行生成,这对于其他模型是不可能的,例如PixelCNN[105]、PixelRNN[92]和WaveNet[91],因为它们具有自回归性质。

由于这些优点,GAN得到了广泛的关注,并且在许多领域中使用GAN的愿望也越来越强烈。在本研究中,我们详细解释了GAN[35,36]通过采用生成器和鉴别器这两个组件来产生比其他生成模型更锐利和更真实的样本。我们从理论上研究了GAN的工作原理,以及GAN在各种应用中的应用。

1.1论文组织

表1显示了将在第2节和第3节中讨论的GAN和GAN变体。在第二节中,我们首先提出了一个GAN的标准目标函数,并描述了它的组成部分是如何工作的。之后,我们介绍了最近提出的各种目标函数,重点讨论了它们在特征匹配问题上的相似性。然后,我们解释了GAN的体系结构,将讨论扩展到由优化最小化问题引起的主要障碍,特别是模式崩溃,以及如何解决这些问题。

在第3节中,我们讨论如何利用GAN来学习数据的压缩和低维表示所在的潜在空间。特别地,我们强调了GAN如何使用自动编码器框架从数据空间中提取潜在空间。第4节提供了应用于其他领域和各种主题的GAN的几个扩展,如表2所示。在第五节中,我们观察了GAN的宏观观点,特别是为什么GAN优于其他生成模型。最后,第六节对论文进行了总结。

[论文来自](http://scholar.cnki.net/new/Detail/index/WWMERGEJ01/SJCM2287324DA607F1597C137B8CE34D1972)

1167

1167

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?