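When `nova boot` is run to create a VM, neutron allocates the MAC and IP addresses used for the port the VM needs. There are a few articles online about how nova and neutron interact, but they analyze it at the architecture level and rarely touch the code. This article analyzes the interaction at the code level. It focuses on how neutron allocates the MAC and IP for the VM, and on how the tap device and the related bridge are created. Note: the analysis assumes the LinuxBridge Mechanism Driver.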

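We pick up where the earlier article "nova boot code flow analysis (1): the Claim mechanism" left off and continue with the code that follows, which is where nova starts interacting with neutron.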

#/nova/compute/manager.py:ComputeManager

    def _build_and_run_instance(self, context, instance, image, injected_files,
            admin_password, requested_networks, security_groups,
            block_device_mapping, node, limits, filter_properties):

        image_name = image.get('name')
        self._notify_about_instance_usage(context, instance, 'create.start',
                extra_usage_info={'image_name': image_name})
        try:
            rt = self._get_resource_tracker(node)
            with rt.instance_claim(context, instance, limits) as inst_claim:
                # NOTE(russellb) It's important that this validation be done
                # *after* the resource tracker instance claim, as that is where
                # the host is set on the instance.
                self._validate_instance_group_policy(context, instance,
                        filter_properties)
                with self._build_resources(context, instance,
                        requested_networks, security_groups, image,
                        block_device_mapping) as resources:
                    instance.vm_state = vm_states.BUILDING
                    instance.task_state = task_states.SPAWNING
                    instance.numa_topology = inst_claim.claimed_numa_topology
                    # NOTE(JoshNang) This also saves the changes to the
                    # instance from _allocate_network_async, as they aren't
                    # saved in that function to prevent races.
                    instance.save(expected_task_state=
                            task_states.BLOCK_DEVICE_MAPPING)
                    block_device_info = resources['block_device_info']
                    network_info = resources['network_info']
                    self.driver.spawn(context, instance, image,
                                      injected_files, admin_password,
                                      network_info=network_info,
                                      block_device_info=block_device_info)
        except (exception.InstanceNotFound,
                exception.UnexpectedDeletingTaskStateError) as e:
            with excutils.save_and_reraise_exception():
                self._notify_about_instance_usage(context, instance,
                    'create.end', fault=e)
        except exception.ComputeResourcesUnavailable as e:
            LOG.debug(e.format_message(), instance=instance)
            self._notify_about_instance_usage(context, instance,
                    'create.error', fault=e)
            raise exception.RescheduledException(
                    instance_uuid=instance.uuid, reason=e.format_message())
        except exception.BuildAbortException as e:
            with excutils.save_and_reraise_exception():
                LOG.debug(e.format_message(), instance=instance)
                self._notify_about_instance_usage(context, instance,
                    'create.error', fault=e)
        except (exception.FixedIpLimitExceeded,
                exception.NoMoreNetworks, exception.NoMoreFixedIps) as e:
            LOG.warning(_LW('No more network or fixed IP to be allocated'),
                        instance=instance)
            self._notify_about_instance_usage(context, instance,
                    'create.error', fault=e)
            msg = _('Failed to allocate the network(s) with error %s, '
                    'not rescheduling.') % e.format_message()
            raise exception.BuildAbortException(instance_uuid=instance.uuid,
                    reason=msg)
        except (exception.VirtualInterfaceCreateException,
                exception.VirtualInterfaceMacAddressException) as e:
            LOG.exception(_LE('Failed to allocate network(s)'),
                          instance=instance)
            self._notify_about_instance_usage(context, instance,
                    'create.error', fault=e)
            msg = _('Failed to allocate the network(s), not rescheduling.')
            raise exception.BuildAbortException(instance_uuid=instance.uuid,
                    reason=msg)
        except (exception.FlavorDiskTooSmall,
                exception.FlavorMemoryTooSmall,
                exception.ImageNotActive,
                exception.ImageUnacceptable) as e:
            self._notify_about_instance_usage(context, instance,
                    'create.error', fault=e)
            raise exception.BuildAbortException(instance_uuid=instance.uuid,
                    reason=e.format_message())
        except Exception as e:
            self._notify_about_instance_usage(context, instance,
                    'create.error', fault=e)
            raise exception.RescheduledException(
                    instance_uuid=instance.uuid, reason=six.text_type(e))

        # NOTE(alaski): This is only useful during reschedules, remove it now.
        instance.system_metadata.pop('network_allocated', None)

        self._update_instance_after_spawn(context, instance)

        try:
            instance.save(expected_task_state=task_states.SPAWNING)
        except (exception.InstanceNotFound,
                exception.UnexpectedDeletingTaskStateError) as e:
            with excutils.save_and_reraise_exception():
                self._notify_about_instance_usage(context, instance,
                    'create.end', fault=e)

        self._update_scheduler_instance_info(context, instance)
        self._notify_about_instance_usage(context, instance, 'create.end',
                extra_usage_info={'message': _('Success')},
                network_info=network_info)

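Assuming the Claim mechanism confirms that the selected host can accommodate the VM, the host's resource records are updated and execution continues. We look at the _build_resources function first.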

#/nova/compute/manager.py:ComputeManager

    @contextlib.contextmanager
    def _build_resources(self, context, instance, requested_networks,
            security_groups, image, block_device_mapping):
        resources = {}
        network_info = None
        try:
            network_info = self._build_networks_for_instance(context, instance,
                    requested_networks, security_groups)
            resources['network_info'] = network_info
        except (exception.InstanceNotFound,
                exception.UnexpectedDeletingTaskStateError):
            raise
        except exception.UnexpectedTaskStateError as e:
            raise exception.BuildAbortException(instance_uuid=instance.uuid,
                    reason=e.format_message())
        except Exception:
            # Because this allocation is async any failures are likely to occur
            # when the driver accesses network_info during spawn().
            LOG.exception(_LE('Failed to allocate network(s)'),
                          instance=instance)
            msg = _('Failed to allocate the network(s), not rescheduling.')
            raise exception.BuildAbortException(instance_uuid=instance.uuid,
                    reason=msg)

        ... ... ...

        try:
            yield resources
        except Exception as exc:
            with excutils.save_and_reraise_exception() as ctxt:
                if not isinstance(exc, (exception.InstanceNotFound,
                    exception.UnexpectedDeletingTaskStateError)):
                        LOG.exception(_LE('Instance failed to spawn'),
                                instance=instance)
                # Make sure the async call finishes
                if network_info is not None:
                    network_info.wait(do_raise=False)
                # if network_info is empty we're likely here because of
                # network allocation failure. Since nothing can be reused on
                # rescheduling it's better to deallocate network to eliminate
                # the chance of orphaned ports in neutron
                deallocate_networks = False if network_info else True
                try:
                    self._shutdown_instance(context, instance,
                            block_device_mapping, requested_networks,
                            try_deallocate_networks=deallocate_networks)
                except Exception:
                    ctxt.reraise = False
                    msg = _('Could not clean up failed build,'
                            ' not rescheduling')
                    raise exception.BuildAbortException(
                            instance_uuid=instance.uuid, reason=msg)

The _build_networks_for_instance function is the focus of this article: it interacts with neutron to prepare the network for the VM.

#/nova/compute/manager.py:ComputeManager

    def _build_networks_for_instance(self, context, instance,
            requested_networks, security_groups):

        # If we're here from a reschedule the network may already be allocated.
        if strutils.bool_from_string(
                instance.system_metadata.get('network_allocated', 'False')):
            # NOTE(alex_xu): The network_allocated is True means the network
            # resource already allocated at previous scheduling, and the
            # network setup is cleanup at previous. After rescheduling, the
            # network resource need setup on the new host.
            self.network_api.setup_instance_network_on_host(
                context, instance, instance.host)
            return self._get_instance_nw_info(context, instance)

        if not self.is_neutron_security_groups:
            security_groups = []

        macs = self.driver.macs_for_instance(instance)
        dhcp_options = self.driver.dhcp_options_for_instance(instance)
        network_info = self._allocate_network(context, instance,
                requested_networks, macs, security_groups, dhcp_options)

        if not instance.access_ip_v4 and not instance.access_ip_v6:
            # If CONF.default_access_ip_network_name is set, grab the
            # corresponding network and set the access ip values accordingly.
            # Note that when there are multiple ips to choose from, an
            # arbitrary one will be chosen.
            network_name = CONF.default_access_ip_network_name
            if not network_name:
                return network_info

            for vif in network_info:
                if vif['network']['label'] == network_name:
                    for ip in vif.fixed_ips():
                        if ip['version'] == 4:
                            instance.access_ip_v4 = ip['address']
                        if ip['version'] == 6:
                            instance.access_ip_v6 = ip['address']
                    instance.save()
                    break

        return network_info

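The function first checks whether the port information the VM needs (MAC, IP and so on) has already been allocated (see the code comments). If it has, nova calls the neutron API to update the port's binding information (binding here means the binding between the port and the host) and returns the refreshed network info to the caller. If it has not, execution continues with the rest of the function.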
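The reschedule check at the top of _build_networks_for_instance can be tried in isolation. The snippet below is a simplified illustration rather than nova code: `bool_from_string` is a small stand-in for the oslo `strutils.bool_from_string` helper, and `network_already_allocated` is a hypothetical name introduced only for this sketch.

```python
# Strings treated as "true"; a simplified stand-in for the oslo helper.
TRUE_STRINGS = ("1", "t", "true", "on", "y", "yes")


def bool_from_string(value):
    """Interpret a string flag as a boolean (case-insensitive)."""
    return str(value).strip().lower() in TRUE_STRINGS


def network_already_allocated(system_metadata):
    """Return True if an earlier scheduling attempt already allocated ports.

    system_metadata is a plain dict here; nova stores the flag as the
    string 'True' under the key 'network_allocated'.
    """
    return bool_from_string(system_metadata.get("network_allocated", "False"))


# First build attempt: the key is absent, so allocation must run.
print(network_already_allocated({}))
# After a reschedule: the flag was set by the previous attempt.
print(network_already_allocated({"network_allocated": "True"}))
```

Storing the flag as a string is why the check goes through a string-to-bool conversion instead of a plain truthiness test: the string 'False' would otherwise count as true.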

Here we assume the port information for the VM has not been allocated yet, so we continue with the code below.

#/nova/virt/driver.py:ComputeDriver

    def macs_for_instance(self, instance):
        """What MAC addresses must this instance have?

        Some hypervisors (such as bare metal) cannot do freeform virtualisation
        of MAC addresses. This method allows drivers to return a set of MAC
        addresses that the instance is to have. allocate_for_instance will take
        this into consideration when provisioning networking for the instance.

        Mapping of MAC addresses to actual networks (or permitting them to be
        freeform) is up to the network implementation layer. For instance,
        with openflow switches, fixed MAC addresses can still be virtualised
        onto any L2 domain, with arbitrary VLANs etc, but regular switches
        require pre-configured MAC->network mappings that will match the
        actual configuration.

        Most hypervisors can use the default implementation which returns None.
        Hypervisors with MAC limits should return a set of MAC addresses, which
        will be supplied to the allocate_for_instance call by the compute
        manager, and it is up to that call to ensure that all assigned network
        details are compatible with the set of MAC addresses.

        This is called during spawn_instance by the compute manager.

        :return: None, or a set of MAC ids (e.g. set(['12:34:56:78:90:ab'])).
            None means 'no constraints', a set means 'these and only these
            MAC addresses'.
        """
        return None

    def dhcp_options_for_instance(self, instance):
        """Get DHCP options for this instance.

        Some hypervisors (such as bare metal) require that instances boot from
        the network, and manage their own TFTP service. This requires passing
        the appropriate options out to the DHCP service. Most hypervisors can
        use the default implementation which returns None.

        This is called during spawn_instance by the compute manager.

        Note that the format of the return value is specific to Quantum
        client API.

        :return: None, or a set of DHCP options, eg:

             |    [{'opt_name': 'bootfile-name',
             |      'opt_value': '/tftpboot/path/to/config'},
             |     {'opt_name': 'server-ip-address',
             |      'opt_value': '1.2.3.4'},
             |     {'opt_name': 'tftp-server',
             |      'opt_value': '1.2.3.4'}
             |    ]

        """
        return None

By default, both macs and dhcp_options in _build_networks_for_instance are None.

#/nova/compute/manager.py:ComputeManager

    def _allocate_network(self, context, instance, requested_networks, macs,
                          security_groups, dhcp_options):
        """Start network allocation asynchronously.  Return an instance
        of NetworkInfoAsyncWrapper that can be used to retrieve the
        allocated networks when the operation has finished.
        """
        # NOTE(comstud): Since we're allocating networks asynchronously,
        # this task state has little meaning, as we won't be in this
        # state for very long.
        instance.vm_state = vm_states.BUILDING
        instance.task_state = task_states.NETWORKING
        instance.save(expected_task_state=[None])
        self._update_resource_tracker(context, instance)

        is_vpn = pipelib.is_vpn_image(instance.image_ref)
        return network_model.NetworkInfoAsyncWrapper(
                self._allocate_network_async, context, instance,
                requested_networks, macs, security_groups, is_vpn,
                dhcp_options)

#/nova/network/model.py: NetworkInfoAsyncWrapper

class NetworkInfoAsyncWrapper(NetworkInfo):
    """Wrapper around NetworkInfo that allows retrieving NetworkInfo
    in an async manner.

    This allows one to start querying for network information before
    you know you will need it.  If you have a long-running
    operation, this allows the network model retrieval to occur in the
    background.  When you need the data, it will ensure the async
    operation has completed.

    As an example:

    def allocate_net_info(arg1, arg2)
        return call_neutron_to_allocate(arg1, arg2)

    network_info = NetworkInfoAsyncWrapper(allocate_net_info, arg1, arg2)
    [do a long running operation -- real network_info will be retrieved
    in the background]
    [do something with network_info]
    """

    def __init__(self, async_method, *args, **kwargs):
        self._gt = eventlet.spawn(async_method, *args, **kwargs)
        methods = ['json', 'fixed_ips', 'floating_ips']
        for method in methods:
            fn = getattr(self, method)
            wrapper = functools.partial(self._sync_wrapper, fn)
            functools.update_wrapper(wrapper, fn)
            setattr(self, method, wrapper)

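As the docstrings say, the network information for the VM is built asynchronously. Concretely, eventlet.spawn starts a greenthread (a cooperatively scheduled coroutine, not an OS thread) that runs _allocate_network_async, while the caller carries on with the rest of the build. The accessor methods listed in __init__ (json, fixed_ips, floating_ips) are wrapped by _sync_wrapper, so the caller only waits for the greenthread when it actually needs the data. The _allocate_network_async function below is where the neutron API gets called.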
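The "start now, wait only when the result is needed" pattern can be sketched without eventlet. The example below is an illustration under stated assumptions, not nova's implementation: it swaps the greenthread for a standard-library worker thread, and `AsyncResultWrapper` and `fake_allocate` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
import time


class AsyncResultWrapper:
    """Start a slow call in the background; block only when the result is read."""

    def __init__(self, async_method, *args, **kwargs):
        # A single worker thread stands in for the greenthread that
        # eventlet.spawn would start in nova.
        self._executor = ThreadPoolExecutor(max_workers=1)
        self._future = self._executor.submit(async_method, *args, **kwargs)

    def wait(self, do_raise=True):
        """Block until the background call finishes and return its result.

        With do_raise=False a failure in the background call is swallowed
        and None is returned instead.
        """
        try:
            return self._future.result()
        except Exception:
            if do_raise:
                raise
            return None
        finally:
            self._executor.shutdown(wait=False)


def fake_allocate(instance_id):
    # Hypothetical stand-in for the slow call into neutron.
    time.sleep(0.1)
    return [{"id": "port-1", "device_id": instance_id}]


wrapper = AsyncResultWrapper(fake_allocate, "vm-1")
# Other build steps could run here while the allocation is in flight.
network_info = wrapper.wait()
print(network_info)
```

The design choice is the same as in the wrapper class: the caller gets an object back immediately and pays the waiting cost only at the point where the network data is really required.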

#/nova/compute/manager.py:ComputeManager

    def _allocate_network_async(self, context, instance, requested_networks,
                                macs, security_groups, is_vpn, dhcp_options):
        """Method used to allocate networks in the background.

        Broken out for testing.
        """
        LOG.debug("Allocating IP information in the background.",
                  instance=instance)
        retries = CONF.network_allocate_retries
        if retries < 0:
            LOG.warning(_LW("Treating negative config value (%(retries)s) for "
                            "'network_allocate_retries' as 0."),
                        {'retries': retries})
            retries = 0
        attempts = retries + 1
        retry_time = 1
        for attempt in range(1, attempts + 1):
            try:
                nwinfo = self.network_api.allocate_for_instance(
                        context, instance, vpn=is_vpn,
                        requested_networks=requested_networks,
                        macs=macs,
                        security_groups=security_groups,
                        dhcp_options=dhcp_options)
                LOG.debug('Instance network_info: |%s|', nwinfo,
                          instance=instance)
                instance.system_metadata['network_allocated'] = 'True'
                # NOTE(JoshNang) do not save the instance here, as it can cause
                # races. The caller shares a reference to instance and waits
                # for this async greenthread to finish before calling
                # instance.save().
                return nwinfo
            except Exception:
                exc_info = sys.exc_info()
                log_info = {'attempt': attempt,
                            'attempts': attempts}
                if attempt == attempts:
                    LOG.exception(_LE('Instance failed network setup '
                                      'after %(attempts)d attempt(s)'),
                                  log_info)
                    raise exc_info[0], exc_info[1], exc_info[2]
                LOG.warning(_LW('Instance failed network setup '
                                '(attempt %(attempt)d of %(attempts)d)'),
                            log_info, instance=instance)
                time.sleep(retry_time)
                retry_time *= 2
                if retry_time > 30:
                    retry_time = 30
        # Not reached.
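The retry loop above doubles the wait between attempts and caps it at 30 seconds. The sketch below isolates just that schedule so it can be inspected; `backoff_delays` and `call_with_retries` are hypothetical helper names, and the `sleep` parameter exists only so the example can run without actually waiting.

```python
import time


def backoff_delays(retries, initial=1, cap=30):
    """Return the sleep times used between attempts.

    A negative retry count is treated as 0, as in the nova code.
    """
    if retries < 0:
        retries = 0
    delays = []
    delay = initial
    for _ in range(retries):
        delays.append(delay)
        delay = min(delay * 2, cap)
    return delays


def call_with_retries(func, retries, sleep=time.sleep):
    """Call func up to retries + 1 times, sleeping between failed attempts."""
    delays = backoff_delays(retries)
    attempts = len(delays) + 1
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == attempts:
                # Last attempt: let the original exception propagate.
                raise
            sleep(delays[attempt - 1])


print(backoff_delays(7))  # [1, 2, 4, 8, 16, 30, 30]
```

With 7 retries there are 8 attempts in total and 7 sleeps between them; the cap takes effect from the sixth sleep onward.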

The neutron API called here is self.network_api.allocate_for_instance.

self.network_api is set in the __init__ method of the ComputeManager class in /nova/compute/manager.py:

    self.network_api = network.API()

#/nova/network/__init__.py

def API(skip_policy_check=False):
    network_api_class = oslo_config.cfg.CONF.network_api_class
    if 'quantumv2' in network_api_class:
        network_api_class = network_api_class.replace('quantumv2', 'neutronv2')
    cls = importutils.import_class(network_api_class)
    return cls(skip_policy_check=skip_policy_check)
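The API() factory above resolves a class from a dotted path stored in configuration. A minimal sketch of that idea follows, using the standard library's importlib in place of oslo's importutils.import_class. `import_class`, `normalize_class_path` and `load_class` are names written for this example, and `collections.OrderedDict` is used only because it is importable everywhere.

```python
import importlib
from collections import OrderedDict


def import_class(import_str):
    """Resolve 'package.module.ClassName' to the class object."""
    module_name, _, class_name = import_str.rpartition(".")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


def normalize_class_path(class_path):
    """Apply the same backward-compatible rename as the factory above."""
    if "quantumv2" in class_path:
        class_path = class_path.replace("quantumv2", "neutronv2")
    return class_path


def load_class(class_path):
    return import_class(normalize_class_path(class_path))


# An old-style setting is rewritten to the neutronv2 module path.
print(normalize_class_path("nova.network.quantumv2.api.API"))
# Any importable dotted path resolves to its class object.
print(load_class("collections.OrderedDict") is OrderedDict)
```

Keeping the class path in configuration is what lets the same ComputeManager code run against different network backends without being changed.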

In this environment the option is set to:

    network_api_class=nova.network.neutronv2.api.API

So self.network_api.allocate_for_instance resolves to the neutronv2 version of allocate_for_instance, shown below.

#/nova/network/neutronv2/api.py:API

    def allocate_for_instance(self, context, instance, **kwargs):
        """Allocate network resources for the instance.

        :param context: The request context.
        :param instance: nova.objects.instance.Instance object.
        :param requested_networks: optional value containing
            network_id, fixed_ip, and port_id
        :param security_groups: security groups to allocate for instance
        :param macs: None or a set of MAC addresses that the instance
            should use. macs is supplied by the hypervisor driver (contrast
            with requested_networks which is user supplied).
            NB: NeutronV2 currently assigns hypervisor supplied MAC addresses
            to arbitrary networks, which requires openflow switches to
            function correctly if more than one network is being used with
            the bare metal hypervisor (which is the only one known to limit
            MAC addresses).
        :param dhcp_options: None or a set of key/value pairs that should
            determine the DHCP BOOTP response, eg. for PXE booting an instance
            configured with the baremetal hypervisor. It is expected that these
            are already formatted for the neutron v2 api.
            See nova/virt/driver.py:dhcp_options_for_instance for an example.
        """
        hypervisor_macs = kwargs.get('macs', None)
        available_macs = None
        if hypervisor_macs is not None:
            # Make a copy we can mutate: records macs that have not been used
            # to create a port on a network. If we find a mac with a
            # pre-allocated port we also remove it from this set.
            available_macs = set(hypervisor_macs)

        # The neutron client and port_client (either the admin context or
        # tenant context) are read here. The reason for this is that there are
        # a number of different calls for the instance allocation.
        # We do not want to create a new neutron session for each of these
        # calls.
        neutron = get_client(context)
        # Requires admin creds to set port bindings
        port_client = (neutron if not
                       self._has_port_binding_extension(context,
                           refresh_cache=True, neutron=neutron) else
                       get_client(context, admin=True))
        # Store the admin client - this is used later
        admin_client = port_client if neutron != port_client else None

        LOG.debug('allocate_for_instance()', instance=instance)
        if not instance.project_id:
            msg = _('empty project id for instance %s')
            raise exception.InvalidInput(
                reason=msg % instance.uuid)

        ... ... ...

        for request in ordered_networks:
            # Network lookup for available network_id
            network = None
            for net in nets:
                if net['id'] == request.network_id:
                    network = net
                    break
            # if network_id did not pass validate_networks() and not available
            # here then skip it safely not continuing with a None Network
            else:
                continue

            nets_in_requested_order.append(network)
            # If security groups are requested on an instance then the
            # network must has a subnet associated with it. Some plugins
            # implement the port-security extension which requires
            # 'port_security_enabled' to be True for security groups.
            # That is why True is returned if 'port_security_enabled'
            # is not found.
            if (security_groups and not (
                    network['subnets']
                    and network.get('port_security_enabled', True))):
                raise exception.SecurityGroupCannotBeApplied()
            request.network_id = network['id']
            zone = 'compute:%s' % instance.availability_zone
            port_req_body = {'port': {'device_id': instance.uuid,
                                      'device_owner': zone}}
            try:
                self._populate_neutron_extension_values(context,
                                                        instance,
                                                        request.pci_request_id,
                                                        port_req_body,
                                                        neutron=neutron)
                if request.port_id:
                    port = ports[request.port_id]
                    port_client.update_port(port['id'], port_req_body)
                    preexisting_port_ids.append(port['id'])
                    ports_in_requested_order.append(port['id'])
                else:
                    created_port = self._create_port(
                            port_client, instance, request.network_id,
                            port_req_body, request.address,
                            security_group_ids, available_macs, dhcp_opts)
                    created_port_ids.append(created_port)
                    ports_in_requested_order.append(created_port)
            except Exception:
                with excutils.save_and_reraise_exception():
                    self._unbind_ports(context,
                                       preexisting_port_ids,
                                       neutron, port_client)
                    self._delete_ports(neutron, instance, created_port_ids)

        nw_info = self.get_instance_nw_info(
            context, instance, networks=nets_in_requested_order,
            port_ids=ports_in_requested_order,
            admin_client=admin_client,
            preexisting_port_ids=preexisting_port_ids)
        # NOTE(danms): Only return info about ports we created in this run.
        # In the initial allocation case, this will be everything we created,
        # and in later runs will only be what was created that time. Thus,
        # this only affects the attach case, not the original use for this
        # method.
        return network_model.NetworkInfo([vif for vif in nw_info
                                          if vif['id'] in created_port_ids +
                                          preexisting_port_ids])

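There is a lot of code here; it mainly validates the tenant's network, port, and security group parameters for the VM being created. In a normal VM creation flow the VM's port has not been created yet. "Created" here means that the port's MAC, IP and related records do not yet exist in the neutron database; the actual port (a tap device) is only created at the libvirt layer. We therefore look at self._create_port, shown below.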

#/nova/network/neutronv2/api.py:API

    def _create_port(self, port_client, instance, network_id, port_req_body,
                     fixed_ip=None, security_group_ids=None,
                     available_macs=None, dhcp_opts=None):
        """Attempts to create a port for the instance on the given network.

        :param port_client: The client to use to create the port.
        :param instance: Create the port for the given instance.
        :param network_id: Create the port on the given network.
        :param port_req_body: Pre-populated port request. Should have the
            device_id, device_owner, and any required neutron extension values.
        :param fixed_ip: Optional fixed IP to use from the given network.
        :param security_group_ids: Optional list of security group IDs to
            apply to the port.
        :param available_macs: Optional set of available MAC addresses,
            from which one will be used at random.
        :param dhcp_opts: Optional DHCP options.
        :returns: ID of the created port.
        :raises PortLimitExceeded: If neutron fails with an OverQuota error.
        :raises NoMoreFixedIps: If neutron fails with
            IpAddressGenerationFailure error.
        """
        try:
            if fixed_ip:
                port_req_body['port']['fixed_ips'] = [
                    {'ip_address': str(fixed_ip)}]
            port_req_body['port']['network_id'] = network_id
            port_req_body['port']['admin_state_up'] = True
            port_req_body['port']['tenant_id'] = instance.project_id
            if security_group_ids:
                port_req_body['port']['security_groups'] = security_group_ids
            if available_macs is not None:
                if not available_macs:
                    raise exception.PortNotFree(
                        instance=instance.uuid)
                mac_address = available_macs.pop()
                port_req_body['port']['mac_address'] = mac_address
            if dhcp_opts is not None:
                port_req_body['port']['extra_dhcp_opts'] = dhcp_opts
            port_id = port_client.create_port(port_req_body)['port']['id']
            LOG.debug('Successfully created port: %s', port_id,
                      instance=instance)
            return port_id
        except neutron_client_exc.IpAddressInUseClient:
            LOG.warning(_LW('Neutron error: Fixed IP %s is '
                            'already in use.'), fixed_ip)
            msg = _("Fixed IP %s is already in use.") % fixed_ip
            raise exception.FixedIpAlreadyInUse(message=msg)
        except neutron_client_exc.OverQuotaClient:
            LOG.warning(_LW(
                'Neutron error: Port quota exceeded in tenant: %s'),
                port_req_body['port']['tenant_id'], instance=instance)
            raise exception.PortLimitExceeded()
        except neutron_client_exc.IpAddressGenerationFailureClient:
            LOG.warning(_LW('Neutron error: No more fixed IPs in network: %s'),
                        network_id, instance=instance)
            raise exception.NoMoreFixedIps(net=network_id)
        except neutron_client_exc.MacAddressInUseClient:
            LOG.warning(_LW('Neutron error: MAC address %(mac)s is already '
                            'in use on network %(network)s.') %
                        {'mac': mac_address, 'network': network_id},
                        instance=instance)
            raise exception.PortInUse(port_id=mac_address)
        except neutron_client_exc.NeutronClientException:
            with excutils.save_and_reraise_exception():
                LOG.exception(_LE('Neutron error creating port on network %s'),
                              network_id, instance=instance)

The call that finally sends the HTTP request to neutron-server (which acts as neutron's API service) is:

    port_id = port_client.create_port(port_req_body)['port']['id']
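Putting the pieces of allocate_for_instance and _create_port together, the request body handed to create_port looks roughly like the dictionary built below. This is a minimal sketch, not nova code: `build_port_request` is a hypothetical helper, the instance UUID and the availability zone `nova` are made-up values, and only the network and tenant IDs are taken from the environment shown later in this article.

```python
def build_port_request(instance_uuid, project_id, availability_zone,
                       network_id, fixed_ip=None, security_group_ids=None,
                       mac_address=None, dhcp_opts=None):
    """Assemble a port-create body the way the two nova functions do."""
    port = {
        # Set in allocate_for_instance before _create_port is called.
        "device_id": instance_uuid,
        "device_owner": "compute:%s" % availability_zone,
        # Always set in _create_port.
        "network_id": network_id,
        "admin_state_up": True,
        "tenant_id": project_id,
    }
    # Optional fields are only present when a value was supplied.
    if fixed_ip:
        port["fixed_ips"] = [{"ip_address": str(fixed_ip)}]
    if security_group_ids:
        port["security_groups"] = security_group_ids
    if mac_address is not None:
        port["mac_address"] = mac_address
    if dhcp_opts is not None:
        port["extra_dhcp_opts"] = dhcp_opts
    return {"port": port}


body = build_port_request(
    instance_uuid="11111111-2222-3333-4444-555555555555",  # made-up UUID
    project_id="09e04766c06d477098201683497d3878",
    availability_zone="nova",  # assumed zone name
    network_id="5eea5aca-a126-4bb9-b21e-907c33d4200b",
)
print(sorted(body["port"]))
```

Note that no mac_address key is present in the default case. That absence is what makes neutron generate a MAC address on its side.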

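Here we jump straight to the point where neutron invokes create_port. For how the request is routed to neutron's create_port function, see the article on the keystone WSGI flow; the startup flow of neutron-server will be analyzed in a later article.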

#/neutron/plugins/ml2/plugin.py:Ml2Plugin

    def create_port(self, context, port):
        attrs = port[attributes.PORT]
        result, mech_context = self._create_port_db(context, port)
        new_host_port = self._get_host_port_if_changed(mech_context, attrs)
        # notify any plugin that is interested in port create events
        kwargs = {'context': context, 'port': new_host_port}
        registry.notify(resources.PORT, events.AFTER_CREATE, self, **kwargs)

        try:
            self.mechanism_manager.create_port_postcommit(mech_context)
        except ml2_exc.MechanismDriverError:
            with excutils.save_and_reraise_exception():
                LOG.error(_LE("mechanism_manager.create_port_postcommit "
                              "failed, deleting port '%s'"), result['id'])
                self.delete_port(context, result['id'])

        # REVISIT(rkukura): Is there any point in calling this before
        # a binding has been successfully established?
        self.notify_security_groups_member_updated(context, result)

        try:
            bound_context = self._bind_port_if_needed(mech_context)
        except ml2_exc.MechanismDriverError:
            with excutils.save_and_reraise_exception():
                LOG.error(_LE("_bind_port_if_needed "
                              "failed, deleting port '%s'"), result['id'])
                self.delete_port(context, result['id'])
        return bound_context._port

#/neutron/plugins/ml2/plugin.py:Ml2Plugin

    def _create_port_db(self, context, port):
        attrs = port[attributes.PORT]
        if not attrs.get('status'):
            attrs['status'] = const.PORT_STATUS_DOWN

        session = context.session
        with session.begin(subtransactions=True):
            dhcp_opts = attrs.get(edo_ext.EXTRADHCPOPTS, [])
            result = super(Ml2Plugin, self).create_port(context, port)
            self.extension_manager.process_create_port(context, attrs, result)
            self._portsec_ext_port_create_processing(context, result, port)

            # sgids must be got after portsec checked with security group
            sgids = self._get_security_groups_on_port(context, port)
            self._process_port_create_security_group(context, result, sgids)
            network = self.get_network(context, result['network_id'])
            binding = db.add_port_binding(session, result['id'])
            mech_context = driver_context.PortContext(self, context, result,
                                                      network, binding, None)
            self._process_port_binding(mech_context, attrs)

            result[addr_pair.ADDRESS_PAIRS] = (
                self._process_create_allowed_address_pairs(
                    context, result,
                    attrs.get(addr_pair.ADDRESS_PAIRS)))
            self._process_port_create_extra_dhcp_opts(context, result,
                                                      dhcp_opts)
            self.mechanism_manager.create_port_precommit(mech_context)

        return result, mech_context

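We start with the following line.

    result = super(Ml2Plugin, self).create_port(context, port)

It calls create_port on the parent class of Ml2Plugin, which creates the port, allocates its MAC and IP, and updates the related records in the neutron database, as shown below.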

#/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2

    def create_port(self, context, port):
        p = port['port']
        port_id = p.get('id') or uuidutils.generate_uuid()
        network_id = p['network_id']
        # NOTE(jkoelker) Get the tenant_id outside of the session to avoid
        #                unneeded db action if the operation raises
        tenant_id = self._get_tenant_id_for_create(context, p)
        if p.get('device_owner'):
            self._enforce_device_owner_not_router_intf_or_device_id(
                context, p.get('device_owner'), p.get('device_id'), tenant_id)

        port_data = dict(tenant_id=tenant_id,
                         name=p['name'],
                         id=port_id,
                         network_id=network_id,
                         admin_state_up=p['admin_state_up'],
                         status=p.get('status', constants.PORT_STATUS_ACTIVE),
                         device_id=p['device_id'],
                         device_owner=p['device_owner'])

        with context.session.begin(subtransactions=True):
            # Ensure that the network exists.
            self._get_network(context, network_id)

            # Create the port
            if p['mac_address'] is attributes.ATTR_NOT_SPECIFIED:
                db_port = self._create_port(context, network_id, port_data)
                p['mac_address'] = db_port['mac_address']
            else:
                db_port = self._create_port_with_mac(
                    context, network_id, port_data, p['mac_address'])

            # Update the IP's for the port
            ips = self._allocate_ips_for_port(context, port)
            if ips:
                for ip in ips:
                    ip_address = ip['ip_address']
                    subnet_id = ip['subnet_id']
                    NeutronDbPluginV2._store_ip_allocation(
                        context, ip_address, network_id, subnet_id, port_id)

        return self._make_port_dict(db_port, process_extensions=False)

First, network_id and tenant_id are read from the port dictionary; they are used later to query and update the database.

1. Generating the MAC address

#/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2.create_port

# Ensure that the network exists.
self._get_network(context, network_id)

# Create the port
if p['mac_address'] is attributes.ATTR_NOT_SPECIFIED:
    db_port = self._create_port(context, network_id, port_data)
    p['mac_address'] = db_port['mac_address']
else:
    db_port = self._create_port_with_mac(
        context, network_id, port_data, p['mac_address'])

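The _get_network function queries the networks table in the neutron database to verify that the network_id taken from the port dictionary exists. In my OpenStack environment, the networks table looks like this.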

MariaDB [neutron]> select * from networks;
+----------------------------------+--------------------------------------+----------+--------+----------------+--------+------+------------------+
| tenant_id                        | id                                   | name     | status | admin_state_up | shared | mtu  | vlan_transparent |
+----------------------------------+--------------------------------------+----------+--------+----------------+--------+------+------------------+
| 09e04766c06d477098201683497d3878 | 5eea5aca-a126-4bb9-b21e-907c33d4200b | demo-net | ACTIVE | 1              | 1      | 0    | NULL             |
+----------------------------------+--------------------------------------+----------+--------+----------------+--------+------+------------------+
1 row in set (0.00 sec)

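If the network_id exists, the code checks whether mac_address is set in the port dictionary. If a mac_address was specified, the port is created directly with that MAC; otherwise a MAC is generated first and the port is created with it. In our case the port dictionary does not specify mac_address, so the following line runs:

    db_port = self._create_port(context, network_id, port_data)

The code is as follows.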

#/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2

    def _create_port(self, context, network_id, port_data):
        max_retries = cfg.CONF.mac_generation_retries
        for i in range(max_retries):
            mac = self._generate_mac()
            try:
                # nested = True frames an operation that may potentially fail
                # within a transaction, so that it can be rolled back to the
                # point before its failure while maintaining the enclosing
                # transaction
                return self._create_port_with_mac(
                    context, network_id, port_data, mac, nested=True)
            except n_exc.MacAddressInUse:
                LOG.debug('Generated mac %(mac_address)s exists on '
                          'network %(network_id)s',
                          {'mac_address': mac, 'network_id': network_id})

        LOG.error(_LE("Unable to generate mac address after %s attempts"),
                  max_retries)
        raise n_exc.MacAddressGenerationFailure(net_id=network_id)

#/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2

    @staticmethod
    def _generate_mac():
        return utils.get_random_mac(cfg.CONF.base_mac.split(':'))

#/neutron/common/utils.py

def get_random_mac(base_mac):
    mac = [int(base_mac[0], 16), int(base_mac[1], 16),
           int(base_mac[2], 16), random.randint(0x00, 0xff),
           random.randint(0x00, 0xff), random.randint(0x00, 0xff)]
    if base_mac[3] != '00':
        mac[3] = int(base_mac[3], 16)
    return ':'.join(["%02x" % x for x in mac])

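The _generate_mac function takes its input from the base_mac option in neutron.conf. In an OpenStack environment base_mac is usually set to:

    base_mac = fa:16:3e:00:00:00

get_random_mac uses the configured base_mac as the template for the MAC address. The first three octets are copied from base_mac (fa:16:3e here). If the fourth octet of base_mac is set (that is, not 00), the first four octets stay fixed and the last two are random; otherwise the last three octets are random. This is why the MAC addresses reported by ifconfig inside your VMs all start with fa:16:3e, provided base_mac in neutron.conf has not been changed.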
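The behavior is easy to check by running the function on its own. The snippet below copies get_random_mac from the listing above and adds `allocate_unique_mac`, a hypothetical helper that imitates the retry-on-collision loop of _create_port with an in-memory set instead of the database. The default `max_retries=16` is an arbitrary value chosen for this example.

```python
import random


def get_random_mac(base_mac):
    """Same logic as neutron.common.utils.get_random_mac in the listing."""
    mac = [int(base_mac[0], 16), int(base_mac[1], 16),
           int(base_mac[2], 16), random.randint(0x00, 0xff),
           random.randint(0x00, 0xff), random.randint(0x00, 0xff)]
    if base_mac[3] != '00':
        # A non-zero fourth octet in base_mac is kept as-is.
        mac[3] = int(base_mac[3], 16)
    return ':'.join(["%02x" % x for x in mac])


def allocate_unique_mac(used, base_mac="fa:16:3e:00:00:00", max_retries=16):
    """Generate a MAC not in `used`, retrying on collisions.

    `used` is a set standing in for the uniqueness check that the
    database performs in neutron.
    """
    for _ in range(max_retries):
        mac = get_random_mac(base_mac.split(':'))
        if mac not in used:
            used.add(mac)
            return mac
    raise RuntimeError("unable to generate a unique MAC after %d attempts"
                       % max_retries)


# Default base_mac: three fixed octets, three random ones.
mac = get_random_mac("fa:16:3e:00:00:00".split(':'))
print(mac)

# Fourth octet set: four fixed octets, two random ones.
pinned = get_random_mac("fa:16:3e:4f:00:00".split(':'))
print(pinned)

used_macs = set()
print(allocate_unique_mac(used_macs))
```

With three random octets there are 256^3 (about 16.7 million) possible addresses per prefix, so collisions are rare but not impossible, which is why the neutron code retries instead of assuming uniqueness.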

Once the MAC address has been generated, it is used to create the port.

#/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2

    def _create_port_with_mac(self, context, network_id, port_data,
                              mac_address, nested=False):
        try:
            with context.session.begin(subtransactions=True, nested=nested):
                db_port = models_v2.Port(mac_address=mac_address, **port_data)
                context.session.add(db_port)
                return db_port
        except db_exc.DBDuplicateEntry:
            raise n_exc.MacAddressInUse(net_id=network_id, mac=mac_address)

As _create_port_with_mac shows, "creating a port" simply means inserting a row into the ports table of the neutron database. For example, the ports table in my OpenStack environment looks like this.

MariaDB [neutron]> select * from ports;
+----------------------------------+--------------------------------------+------+--------------------------------------+-------------------+----------------+--------+--------------------+--------------+
| tenant_id                        | id                                   | name | network_id                           | mac_address       | admin_state_up | status | device_id          | device_owner |
+----------------------------------+--------------------------------------+------+--------------------------------------+-------------------+----------------+--------+--------------------+--------------+
| 09e04766c06d477098201683497d3878 | 7a00401c-ecbf-493d-9841-e902b40f66a7 |      | 5eea5aca-a126-4bb9-b21e-907c33d4200b | fa:16:3e:77:46:16 | 1              | ACTIVE | reserved_dhcp_port | network:dhcp |
+----------------------------------+--------------------------------------+------+--------------------------------------+-------------------+----------------+--------+--------------------+--------------+

This port belongs to neutron-dhcp-agent. On the physical host, the port recorded in the database appears as a tap device that is attached to a bridge, as shown below.

    [root@jun2 ~]# brctl show
    bridge name       bridge id            STP enabled    interfaces
    brq5eea5aca-a1    8000.000c2971c80c    no             eth1.120
                                                          tap7a00401c-ec

    [root@jun2 ~]# ifconfig
    brq5eea5aca-a1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet6 fe80::3cf7:95ff:fea8:ceb5  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:71:c8:0c  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 8  bytes 648 (648.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.118.3  netmask 255.255.255.0  broadcast 192.168.118.255
            inet6 fe80::20c:29ff:fe71:c802  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:71:c8:02  txqueuelen 1000  (Ethernet)
            RX packets 2491  bytes 277113 (270.6 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 2786  bytes 361898 (353.4 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.118.4  netmask 255.255.255.0  broadcast 192.168.118.255
            inet6 fe80::20c:29ff:fe71:c80c  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:71:c8:0c  txqueuelen 1000  (Ethernet)
            RX packets 1337  bytes 161691 (157.9 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 38  bytes 4895 (4.7 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eth1.120: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet6 fe80::20c:29ff:fe71:c80c  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:71:c8:0c  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 15  bytes 1206 (1.1 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 0  (Local Loopback)
            RX packets 1563  bytes 99332 (97.0 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 1563  bytes 99332 (97.0 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    tap7a00401c-ec: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet6 fe80::444a:ffff:fead:ed7e  prefixlen 64  scopeid 0x20<link>
            ether 46:4a:ff:ad:ed:7e  txqueuelen 1000  (Ethernet)
            RX packets 8  bytes 648 (648.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 15  bytes 1206 (1.1 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    [root@jun2 ~]# ip netns
    qdhcp-5eea5aca-a126-4bb9-b21e-907c33d4200b
    [root@jun2 ~]# ip netns exec qdhcp-5eea5aca-a126-4bb9-b21e-907c33d4200b ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ns-7a00401c-ec: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether fa:16:3e:77:46:16 brd ff:ff:ff:ff:ff:ff
        inet 192.168.0.2/24 brd 192.168.0.255 scope global ns-7a00401c-ec
           valid_lft forever preferred_lft forever
        inet6 fe80::f816:3eff:fe77:4616/64 scope link
           valid_lft forever preferred_lft forever

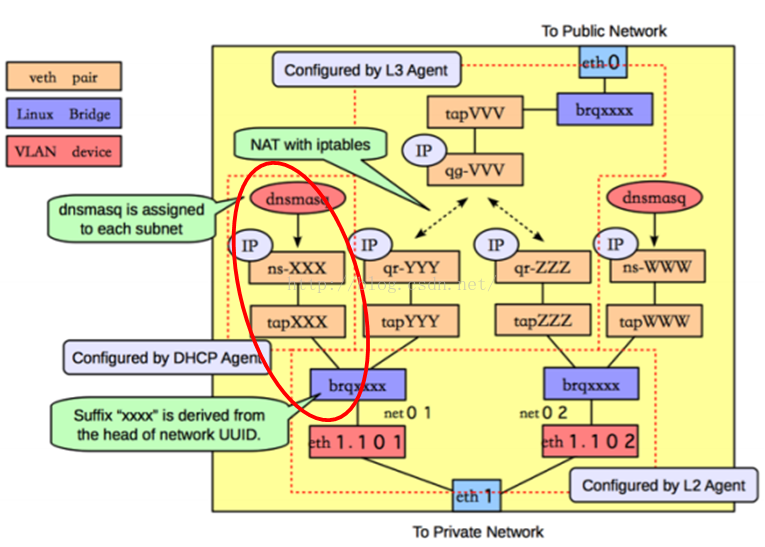

The relationship between the devices listed above is illustrated by the red portion of the accompanying figure.

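That was a bit of a digression, so let us return to the code. The create port call discussed above allocates a port for the VM being created, whereas the port in the figure belongs to neutron-dhcp-agent, which presumably already exists by the time the neutron-dhcp-agent service is up and running. Because my OpenStack environment cannot create VMs, I can only use the neutron-dhcp-agent port to give an intuitive picture of what a VM port looks like.

That covers the code path for assigning a MAC to the port. Next we analyze how an IP is assigned to the port.

2. Allocating the IP

The code that allocates the IP is: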

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2.create_port
    # Update the IP's for the port
    ips = self._allocate_ips_for_port(context, port)

The function itself is shown below.

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2
    def _allocate_ips_for_port(self, context, port):
        """Allocate IP addresses for the port.
        If port['fixed_ips'] is set to 'ATTR_NOT_SPECIFIED', allocate IP
        addresses for the port. If port['fixed_ips'] contains an IP address or
        a subnet_id then allocate an IP address accordingly.
        """
        p = port['port']
        ips = []
        v6_stateless = []
        net_id_filter = {'network_id': [p['network_id']]}
        subnets = self.get_subnets(context, filters=net_id_filter)
        is_router_port = (
            p['device_owner'] in constants.ROUTER_INTERFACE_OWNERS or
            p['device_owner'] == constants.DEVICE_OWNER_ROUTER_SNAT)
        fixed_configured = p['fixed_ips'] is not attributes.ATTR_NOT_SPECIFIED
        if fixed_configured:
            configured_ips = self._test_fixed_ips_for_port(context,
                                                           p["network_id"],
                                                           p['fixed_ips'],
                                                           p['device_owner'])
            ips = self._allocate_fixed_ips(context,
                                           configured_ips,
                                           p['mac_address'])
            # For ports that are not router ports, implicitly include all
            # auto-address subnets for address association.
            if not is_router_port:
                v6_stateless += [subnet for subnet in subnets
                                 if ipv6_utils.is_auto_address_subnet(subnet)]
        else:
            # Split into v4, v6 stateless and v6 stateful subnets
            v4 = []
            v6_stateful = []
            for subnet in subnets:
                if subnet['ip_version'] == 4:
                    v4.append(subnet)
                elif ipv6_utils.is_auto_address_subnet(subnet):
                    if not is_router_port:
                        v6_stateless.append(subnet)
                else:
                    v6_stateful.append(subnet)
            version_subnets = [v4, v6_stateful]
            for subnets in version_subnets:
                if subnets:
                    result = NeutronDbPluginV2._generate_ip(context, subnets)
                    ips.append({'ip_address': result['ip_address'],
                                'subnet_id': result['subnet_id']})
        for subnet in v6_stateless:
            # IP addresses for IPv6 SLAAC and DHCPv6-stateless subnets
            # are implicitly included.
            ip_address = self._calculate_ipv6_eui64_addr(context, subnet,
                                                         p['mac_address'])
            ips.append({'ip_address': ip_address.format(),
                        'subnet_id': subnet['id']})
        return ips

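First, the subnets of the network are looked up by network_id (a network may contain several subnets). The code then checks whether the user specified fixed IPs when creating the VM. If so, each fixed IP is validated against the network before it is allocated; otherwise an available IP from the network's subnets is allocated to the VM directly.

Here we assume that fixed_ips was specified when the VM was created, so the following code is executed.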

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2._allocate_ips_for_port
    fixed_configured = p['fixed_ips'] is not attributes.ATTR_NOT_SPECIFIED
    if fixed_configured:
        configured_ips = self._test_fixed_ips_for_port(context,
                                                       p["network_id"],
                                                       p['fixed_ips'],
                                                       p['device_owner'])
        ips = self._allocate_fixed_ips(context,
                                       configured_ips,
                                       p['mac_address'])

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2
    def _test_fixed_ips_for_port(self, context, network_id, fixed_ips,
                                 device_owner):
        """Test fixed IPs for port.
        Check that configured subnets are valid prior to allocating any
        IPs. Include the subnet_id in the result if only an IP address is
        configured.
        :raises: InvalidInput, IpAddressInUse, InvalidIpForNetwork,
                 InvalidIpForSubnet
        """
        fixed_ip_set = []
        for fixed in fixed_ips:
            found = False
            if 'subnet_id' not in fixed:
                if 'ip_address' not in fixed:
                    msg = _('IP allocation requires subnet_id or ip_address')
                    raise n_exc.InvalidInput(error_message=msg)
                filter = {'network_id': [network_id]}
                subnets = self.get_subnets(context, filters=filter)
                for subnet in subnets:
                    if self._check_subnet_ip(subnet['cidr'],
                                             fixed['ip_address']):
                        found = True
                        subnet_id = subnet['id']
                        break
                if not found:
                    raise n_exc.InvalidIpForNetwork(
                        ip_address=fixed['ip_address'])
            else:
                subnet = self._get_subnet(context, fixed['subnet_id'])
                if subnet['network_id'] != network_id:
                    msg = (_("Failed to create port on network %(network_id)s"
                             ", because fixed_ips included invalid subnet "
                             "%(subnet_id)s") %
                           {'network_id': network_id,
                            'subnet_id': fixed['subnet_id']})
                    raise n_exc.InvalidInput(error_message=msg)
                subnet_id = subnet['id']
            is_auto_addr_subnet = ipv6_utils.is_auto_address_subnet(subnet)
            if 'ip_address' in fixed:
                # Ensure that the IP's are unique
                if not NeutronDbPluginV2._check_unique_ip(context, network_id,
                                                          subnet_id,
                                                          fixed['ip_address']):
                    raise n_exc.IpAddressInUse(net_id=network_id,
                                               ip_address=fixed['ip_address'])
                # Ensure that the IP is valid on the subnet
                if (not found and
                    not self._check_subnet_ip(subnet['cidr'],
                                              fixed['ip_address'])):
                    raise n_exc.InvalidIpForSubnet(
                        ip_address=fixed['ip_address'])
                if (is_auto_addr_subnet and
                    device_owner not in
                        constants.ROUTER_INTERFACE_OWNERS):
                    msg = (_("IPv6 address %(address)s can not be directly "
                             "assigned to a port on subnet %(id)s since the "
                             "subnet is configured for automatic addresses") %
                           {'address': fixed['ip_address'],
                            'id': subnet_id})
                    raise n_exc.InvalidInput(error_message=msg)
                fixed_ip_set.append({'subnet_id': subnet_id,
                                     'ip_address': fixed['ip_address']})
            else:
                # A scan for auto-address subnets on the network is done
                # separately so that all such subnets (not just those
                # listed explicitly here by subnet ID) are associated
                # with the port.
                if (device_owner in constants.ROUTER_INTERFACE_OWNERS or
                    device_owner == constants.DEVICE_OWNER_ROUTER_SNAT or
                    not is_auto_addr_subnet):
                    fixed_ip_set.append({'subnet_id': subnet_id})
        if len(fixed_ip_set) > cfg.CONF.max_fixed_ips_per_port:
            msg = _('Exceeded maximim amount of fixed ips per port')
            raise n_exc.InvalidInput(error_message=msg)
        return fixed_ip_set

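As the docstring indicates, this function checks whether the specified fixed IPs are valid. Four cases are covered:

1. Whether the input is well formed. Each entry must contain a subnet_id or an ip_address; otherwise InvalidInput is raised.

2. Whether the IP is valid for the network. If only an IP is given, without a subnet_id, all subnets of the network are queried and the fixed IP is compared against the CIDR of each one. If the fixed IP does not fall within any of these subnets, InvalidIpForNetwork is raised.

3. Whether the IP is already allocated to another port or has not been released yet. This is checked by NeutronDbPluginV2._check_unique_ip; if the specified fixed IP is in use, IpAddressInUse is raised.

4. Whether the fixed IP is valid for the subnet. If both a fixed IP and a subnet_id are given, the fixed IP must fall within that subnet's CIDR; otherwise InvalidIpForSubnet is raised.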
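The four checks can be sketched with the standard ipaddress module. This is a simplified illustration rather than neutron's implementation: the exception classes are local stand-ins, subnets is assumed to be a plain dict mapping subnet ID to CIDR for one network, allocated is assumed to be a set of IPs already in use, and the IPv6 auto-address handling and the exclusion of network and broadcast addresses are left out.

```python
import ipaddress


class InvalidInput(Exception):
    pass


class InvalidIpForNetwork(Exception):
    pass


class InvalidIpForSubnet(Exception):
    pass


class IpAddressInUse(Exception):
    pass


def check_fixed_ip(fixed, subnets, allocated):
    """Validate one fixed_ips entry and return it with subnet_id filled in."""
    # Check 1: the entry needs a subnet_id or an ip_address.
    if 'subnet_id' not in fixed and 'ip_address' not in fixed:
        raise InvalidInput('IP allocation requires subnet_id or ip_address')

    if 'subnet_id' not in fixed:
        # Check 2: an IP without a subnet must fit one subnet of the network.
        ip = ipaddress.ip_address(fixed['ip_address'])
        for subnet_id, cidr in subnets.items():
            if ip in ipaddress.ip_network(cidr):
                break
        else:
            raise InvalidIpForNetwork(fixed['ip_address'])
    else:
        subnet_id = fixed['subnet_id']
        if subnet_id not in subnets:
            raise InvalidInput('subnet %s is not on this network' % subnet_id)

    if 'ip_address' not in fixed:
        return {'subnet_id': subnet_id}

    # Check 3: the IP must not be allocated already.
    if fixed['ip_address'] in allocated:
        raise IpAddressInUse(fixed['ip_address'])
    # Check 4: an IP given together with a subnet must lie inside that subnet.
    network = ipaddress.ip_network(subnets[subnet_id])
    if ipaddress.ip_address(fixed['ip_address']) not in network:
        raise InvalidIpForSubnet(fixed['ip_address'])
    return {'subnet_id': subnet_id, 'ip_address': fixed['ip_address']}


subnets = {'4751cf4d': '192.168.0.0/24'}
allocated = {'192.168.0.2'}
print(check_fixed_ip({'ip_address': '192.168.0.10'}, subnets, allocated))
```

Note that the result always carries a subnet_id, even when the caller supplied only an IP; the allocation step relies on that.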

Only after these validations pass is the specified fixed IP actually allocated to the VM.

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2
    def _allocate_fixed_ips(self, context, fixed_ips, mac_address):
        """Allocate IP addresses according to the configured fixed_ips."""
        ips = []
        # we need to start with entries that asked for a specific IP in case
        # those IPs happen to be next in the line for allocation for ones that
        # didn't ask for a specific IP
        fixed_ips.sort(key=lambda x: 'ip_address' not in x)
        for fixed in fixed_ips:
            subnet = self._get_subnet(context, fixed['subnet_id'])
            is_auto_addr = ipv6_utils.is_auto_address_subnet(subnet)
            if 'ip_address' in fixed:
                if not is_auto_addr:
                    # Remove the IP address from the allocation pool
                    NeutronDbPluginV2._allocate_specific_ip(
                        context, fixed['subnet_id'], fixed['ip_address'])
                ips.append({'ip_address': fixed['ip_address'],
                            'subnet_id': fixed['subnet_id']})
            # Only subnet ID is specified => need to generate IP
            # from subnet
            else:
                if is_auto_addr:
                    ip_address = self._calculate_ipv6_eui64_addr(context,
                                                                 subnet,
                                                                 mac_address)
                    ips.append({'ip_address': ip_address.format(),
                                'subnet_id': subnet['id']})
                else:
                    subnets = [subnet]
                    # IP address allocation
                    result = self._generate_ip(context, subnets)
                    ips.append({'ip_address': result['ip_address'],
                                'subnet_id': result['subnet_id']})
        return ips

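Here we focus on IPv4 allocation. There are two main branches:

1. A fixed IP was specified when the VM was created (the subnet_id is either given or has been filled in during validation): NeutronDbPluginV2._allocate_specific_ip is executed.

2. Only a subnet was specified, without a fixed IP: self._generate_ip is executed.

We will not go into the details here. In essence, both functions query the ipavailabilityranges table of the neutron database to obtain the ranges of free IPs, allocate an IP to the VM, and then update the ipavailabilityranges table.

If fixed_ips was not specified when the VM was created, NeutronDbPluginV2._generate_ip is called directly from _allocate_ips_for_port to allocate an IP for the VM.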
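The bookkeeping on the availability ranges can be pictured with a small in-memory model. The sketch below is only an illustration under simplifying assumptions: the ranges are a plain Python list of (first_ip, last_ip) tuples instead of rows in ipavailabilityranges, there is no locking, and the function names merely mirror the two branches.

```python
import ipaddress


def _to_int(ip):
    return int(ipaddress.ip_address(ip))


def _to_str(value):
    return str(ipaddress.ip_address(value))


def generate_ip(ranges):
    """Hand out the first IP of the first range and shrink that range."""
    if not ranges:
        raise RuntimeError('no more IP addresses available')
    first, last = ranges[0]
    if first == last:
        ranges.pop(0)  # the range is exhausted
    else:
        ranges[0] = (_to_str(_to_int(first) + 1), last)
    return first


def allocate_specific_ip(ranges, ip):
    """Remove one specific IP from whichever range contains it."""
    target = _to_int(ip)
    for i, (first, last) in enumerate(ranges):
        low, high = _to_int(first), _to_int(last)
        if low <= target <= high:
            pieces = []
            if low < target:
                pieces.append((first, _to_str(target - 1)))
            if target < high:
                pieces.append((_to_str(target + 1), last))
            # Replace the old range by zero, one or two smaller ranges.
            ranges[i:i + 1] = pieces
            break
    # An IP outside every range leaves the ranges untouched in this sketch.
    return ip


ranges = [('192.168.0.2', '192.168.0.254')]
print(generate_ip(ranges))
print(ranges)
allocate_specific_ip(ranges, '192.168.0.100')
print(ranges)
```

Starting from a pool of 192.168.0.2 to 192.168.0.254, the first generated address is 192.168.0.2 and the remaining range starts at 192.168.0.3; requesting 192.168.0.100 afterwards splits that range in two.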

Once the VM's IP has been allocated, it is recorded in the ipallocations table of the neutron database. The code is as follows.

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2.create_port
    # Update the IP's for the port
    ips = self._allocate_ips_for_port(context, port)
    if ips:
        for ip in ips:
            ip_address = ip['ip_address']
            subnet_id = ip['subnet_id']
            NeutronDbPluginV2._store_ip_allocation(
                context, ip_address, network_id, subnet_id, port_id)

    #/neutron/db/db_base_plugin_v2.py:NeutronDbPluginV2
    @staticmethod
    def _store_ip_allocation(context, ip_address, network_id, subnet_id,
                             port_id):
        LOG.debug("Allocated IP %(ip_address)s "
                  "(%(network_id)s/%(subnet_id)s/%(port_id)s)",
                  {'ip_address': ip_address,
                   'network_id': network_id,
                   'subnet_id': subnet_id,
                   'port_id': port_id})
        allocated = models_v2.IPAllocation(
            network_id=network_id,
            port_id=port_id,
            ip_address=ip_address,
            subnet_id=subnet_id
        )
        context.session.add(allocated)

In my OpenStack environment, the IP-related tables of the neutron database (ipavailabilityranges, ipallocationpools and ipallocations) contain the following.

    MariaDB [neutron]> select * from ipavailabilityranges;
    +--------------------------------------+-------------+---------------+
    | allocation_pool_id                   | first_ip    | last_ip       |
    +--------------------------------------+-------------+---------------+
    | dc77245c-4dfd-4b08-90b4-f21f9c0a69c8 | 192.168.0.3 | 192.168.0.254 |
    +--------------------------------------+-------------+---------------+
    1 row in set (0.05 sec)

    MariaDB [neutron]> select * from ipallocationpools;
    +--------------------------------------+--------------------------------------+-------------+---------------+
    | id                                   | subnet_id                            | first_ip    | last_ip       |
    +--------------------------------------+--------------------------------------+-------------+---------------+
    | dc77245c-4dfd-4b08-90b4-f21f9c0a69c8 | 4751cf4d-2aba-46fa-94cb-63cbfc854592 | 192.168.0.2 | 192.168.0.254 |
    +--------------------------------------+--------------------------------------+-------------+---------------+
    1 row in set (0.00 sec)

    MariaDB [neutron]> select * from ipallocations;
    +--------------------------------------+-------------+--------------------------------------+--------------------------------------+
    | port_id                              | ip_address  | subnet_id                            | network_id                           |
    +--------------------------------------+-------------+--------------------------------------+--------------------------------------+
    | 7a00401c-ecbf-493d-9841-e902b40f66a7 | 192.168.0.2 | 4751cf4d-2aba-46fa-94cb-63cbfc854592 | 5eea5aca-a126-4bb9-b21e-907c33d4200b |
    +--------------------------------------+-------------+--------------------------------------+--------------------------------------+
    1 row in set (0.00 sec)

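This completes the analysis of how neutron stores the MAC and IP allocated for a VM in its database. The remaining code in the create_port function of /neutron/plugins/ml2/plugin.py:Ml2Plugin will be analyzed later, after we have covered the neutron startup flow (for example neutron-server). Let us now return to the nova code.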

    #/nova/network/neutronv2/api.py:API
    def allocate_for_instance(self, context, instance, **kwargs):
        """Allocate network resources for the instance.
        :param context: The request context.
        :param instance: nova.objects.instance.Instance object.
        :param requested_networks: optional value containing
            network_id, fixed_ip, and port_id
        :param security_groups: security groups to allocate for instance
        :param macs: None or a set of MAC addresses that the instance
            should use. macs is supplied by the hypervisor driver (contrast
            with requested_networks which is user supplied).
            NB: NeutronV2 currently assigns hypervisor supplied MAC addresses
            to arbitrary networks, which requires openflow switches to
            function correctly if more than one network is being used with
            the bare metal hypervisor (which is the only one known to limit
            MAC addresses).
        :param dhcp_options: None or a set of key/value pairs that should
            determine the DHCP BOOTP response, eg. for PXE booting an instance
            configured with the baremetal hypervisor. It is expected that these
            are already formatted for the neutron v2 api.
            See nova/virt/driver.py:dhcp_options_for_instance for an example.
        """
        hypervisor_macs = kwargs.get('macs', None)
        available_macs = None
        if hypervisor_macs is not None:
            # Make a copy we can mutate: records macs that have not been used
            # to create a port on a network. If we find a mac with a
            # pre-allocated port we also remove it from this set.
            available_macs = set(hypervisor_macs)
        # The neutron client and port_client (either the admin context or
        # tenant context) are read here. The reason for this is that there are
        # a number of different calls for the instance allocation.
        # We do not want to create a new neutron session for each of these
        # calls.
        neutron = get_client(context)
        # Requires admin creds to set port bindings
        port_client = (neutron if not
                       self._has_port_binding_extension(context,
                           refresh_cache=True, neutron=neutron) else
                       get_client(context, admin=True))
        # Store the admin client - this is used later
        admin_client = port_client if neutron != port_client else None
        LOG.debug('allocate_for_instance()', instance=instance)
        if not instance.project_id:
            msg = _('empty project id for instance %s')
            raise exception.InvalidInput(
                reason=msg % instance.uuid)
        ... ... ...
        for request in ordered_networks:
            # Network lookup for available network_id
            network = None
            for net in nets:
                if net['id'] == request.network_id:
                    network = net
                    break
            # if network_id did not pass validate_networks() and not available
            # here then skip it safely not continuing with a None Network
            else:
                continue
            nets_in_requested_order.append(network)
            # If security groups are requested on an instance then the
            # network must has a subnet associated with it. Some plugins
            # implement the port-security extension which requires
            # 'port_security_enabled' to be True for security groups.
            # That is why True is returned if 'port_security_enabled'
            # is not found.
            if (security_groups and not (
                    network['subnets']
                    and network.get('port_security_enabled', True))):
                raise exception.SecurityGroupCannotBeApplied()
            request.network_id = network['id']
            zone = 'compute:%s' % instance.availability_zone
            port_req_body = {'port': {'device_id': instance.uuid,
                                      'device_owner': zone}}
            try:
                self._populate_neutron_extension_values(context,
                                                        instance,
                                                        request.pci_request_id,
                                                        port_req_body,
                                                        neutron=neutron)
                if request.port_id:
                    port = ports[request.port_id]
                    port_client.update_port(port['id'], port_req_body)
                    preexisting_port_ids.append(port['id'])
                    ports_in_requested_order.append(port['id'])
                else:
                    created_port = self._create_port(
                        port_client, instance, request.network_id,
                        port_req_body, request.address,
                        security_group_ids, available_macs, dhcp_opts)
                    created_port_ids.append(created_port)
                    ports_in_requested_order.append(created_port)
            except Exception:
                with excutils.save_and_reraise_exception():
                    self._unbind_ports(context,
                                       preexisting_port_ids,
                                       neutron, port_client)
                    self._delete_ports(neutron, instance, created_port_ids)
        nw_info = self.get_instance_nw_info(
            context, instance, networks=nets_in_requested_order,
            port_ids=ports_in_requested_order,
            admin_client=admin_client,
            preexisting_port_ids=preexisting_port_ids)
        # NOTE(danms): Only return info about ports we created in this run.
        # In the initial allocation case, this will be everything we created,
        # and in later runs will only be what was created that time. Thus,
        # this only affects the attach case, not the original use for this
        # method.
        return network_model.NetworkInfo([vif for vif in nw_info
                                          if vif['id'] in created_port_ids +
                                          preexisting_port_ids])
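To make the request more concrete, here is a rough sketch of the kind of body that ends up being sent to neutron for a new port. Only device_id and device_owner are taken from the code above; the helper name build_port_request and the way the remaining keys (network_id, admin_state_up, tenant_id, fixed_ips, security_groups, mac_address) are filled in are assumptions for illustration, not a copy of nova's _create_port.

```python
import json


def build_port_request(instance_uuid, project_id, availability_zone,
                       network_id, fixed_ip=None, security_group_ids=None,
                       mac_address=None):
    """Assemble a create-port request body for one requested network."""
    port = {
        # These two keys are set in allocate_for_instance.
        'device_id': instance_uuid,
        'device_owner': 'compute:%s' % availability_zone,
        # The keys below are assumed additions made before the HTTP call.
        'network_id': network_id,
        'admin_state_up': True,
        'tenant_id': project_id,
    }
    if fixed_ip:
        port['fixed_ips'] = [{'ip_address': str(fixed_ip)}]
    if security_group_ids:
        port['security_groups'] = list(security_group_ids)
    if mac_address:
        # Only present when the hypervisor driver dictates the MAC.
        port['mac_address'] = mac_address
    return {'port': port}


body = build_port_request(
    instance_uuid='11111111-2222-3333-4444-555555555555',
    project_id='09e04766c06d477098201683497d3878',
    availability_zone='nova',
    network_id='5eea5aca-a126-4bb9-b21e-907c33d4200b',
    fixed_ip='192.168.0.10')
print(json.dumps(body, indent=2, sort_keys=True))
```

When neither fixed_ips nor mac_address is present in the body, neutron falls back to the MAC generation and IP allocation paths analyzed earlier.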

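Here, self._create_port sends an HTTP request to neutron, which executes neutron's create_port function; the ID of the newly created port is returned and stored in the created_port variable. Next we analyze the self.get_instance_nw_info function.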

    #/nova/network/neutronv2/api.py:API
    def get_instance_nw_info(self, context, instance, networks=None,
                             port_ids=None, use_slave=False,
                             admin_client=None,
                             preexisting_port_ids=None):
        """Return network information for specified instance
           and update cache.
        """
        # NOTE(geekinutah): It would be nice if use_slave had us call
        #                   special APIs that pummeled slaves instead of
        #                   the master. For now we just ignore this arg.
        with lockutils.lock('refresh_cache-%s' % instance.uuid):
            result = self._get_instance_nw_info(context, instance, networks,
                                                port_ids, admin_client,
                                                preexisting_port_ids)
            base_api.update_instance_cache_with_nw_info(self, context,
                                                        instance,
                                                        nw_info=result,
                                                        update_cells=False)
        return result

    #/nova/network/neutronv2/api.py:API
    def _get_instance_nw_info(self, context, instance, networks=None,
                              port_ids=None, admin_client=None,
                              preexisting_port_ids=None):
        # NOTE(danms): This is an inner method intended to be called
        # by other code that updates instance nwinfo. It *must* be
        # called with the refresh_cache-%(instance_uuid) lock held!
        LOG.debug('_get_instance_nw_info()', instance=instance)
        nw_info = self._build_network_info_model(context, instance, networks,
                                                 port_ids, admin_client,
                                                 preexisting_port_ids)
        return network_model.NetworkInfo.hydrate(nw_info)

    #/nova/network/neutronv2/api.py:API
    def _build_network_info_model(self, context, instance, networks=None,
                                  port_ids=None, admin_client=None,
                                  preexisting_port_ids=None):
        """Return list of ordered VIFs attached to instance.
        :param context - request context.
        :param instance - instance we are returning network info for.
        :param networks - List of networks being attached to an instance.
                          If value is None this value will be populated
                          from the existing cached value.
        :param port_ids - List of port_ids that are being attached to an
                          instance in order of attachment. If value is None
                          this value will be populated from the existing
                          cached value.
        :param admin_client - a neutron client for the admin context.
        :param preexisting_port_ids - List of port_ids that nova didn't
                          allocate and there shouldn't be deleted when an instance is
                          de-allocated. Supplied list will be added to the cached list of
                          preexisting port IDs for this instance.
        """
        search_opts = {'tenant_id': instance.project_id,
                       'device_id': instance.uuid, }
        if admin_client is None:
            client = get_client(context, admin=True)
        else:
            client = admin_client
        data = client.list_ports(**search_opts)
        current_neutron_ports = data.get('ports', [])
        nw_info_refresh = networks is None and port_ids is None
        networks, port_ids = self._gather_port_ids_and_networks(
            context, instance, networks, port_ids)
        nw_info = network_model.NetworkInfo()
        if preexisting_port_ids is None:
            preexisting_port_ids = []
        preexisting_port_ids = set(
            preexisting_port_ids + self._get_preexisting_port_ids(instance))
        current_neutron_port_map = {}
        for current_neutron_port in current_neutron_ports:
            current_neutron_port_map[current_neutron_port['id']] = (
                current_neutron_port)
        # In that case we should repopulate ports from the state of
        # Neutron.
        if not port_ids:
            port_ids = current_neutron_port_map.keys()
        for port_id in port_ids:
            current_neutron_port = current_neutron_port_map.get(port_id)
            if current_neutron_port:
                vif_active = False
                if (current_neutron_port['admin_state_up'] is False
                    or current_neutron_port['status'] == 'ACTIVE'):
                    vif_active = True
                network_IPs = self._nw_info_get_ips(client,
                                                    current_neutron_port)
                subnets = self._nw_info_get_subnets(context,
                                                    current_neutron_port,
                                                    network_IPs)
                devname = "tap" + current_neutron_port['id']
                devname = devname[:network_model.NIC_NAME_LEN]
                network, ovs_interfaceid = (
                    self._nw_info_build_network(current_neutron_port,
                                                networks, subnets))
                preserve_on_delete = (current_neutron_port['id'] in
                                      preexisting_port_ids)
                nw_info.append(network_model.VIF(
                    id=current_neutron_port['id'],
                    address=current_neutron_port['mac_address'],
                    network=network,
                    vnic_type=current_neutron_port.get('binding:vnic_type',
                        network_model.VNIC_TYPE_NORMAL),
                    type=current_neutron_port.get('binding:vif_type'),
                    profile=current_neutron_port.get('binding:profile'),
                    details=current_neutron_port.get('binding:vif_details'),
                    ovs_interfaceid=ovs_interfaceid,
                    devname=devname,
                    active=vif_active,
                    preserve_on_delete=preserve_on_delete))
            elif nw_info_refresh:
                LOG.info(_LI('Port %s from network info_cache is no '
                             'longer associated with instance in Neutron. '
                             'Removing from network info_cache.'), port_id,
                         instance=instance)
        return nw_info

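As the code above shows, the function builds a list of VIF objects, one per port. When I first read through the nova boot code path, I assumed that the tap device would be set up (meaning that its name is recorded, not that the device is actually created) by self.plug_vifs(instance, network_info) in /nova/virt/libvirt/driver.py:LibvirtDriver._create_domain_and_network. The code shows otherwise: by the time self.plug_vifs(instance, network_info) runs, the XML has already been generated, so the tap device name must have been determined earlier. Going back to the code that runs before the libvirt layer, we find that the name is constructed right after neutron's create_port, from the port information that neutron returns: it is "tap" followed by the port ID, truncated to network_model.NIC_NAME_LEN characters.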
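The naming rule and the vif_active test from _build_network_info_model are easy to reproduce in isolation. In this small sketch the value 14 for NIC_NAME_LEN is an assumption (it is consistent with the tap7a00401c-ec device seen in the brctl output earlier), and the two helper names are made up for illustration.

```python
NIC_NAME_LEN = 14  # assumed value of network_model.NIC_NAME_LEN


def tap_device_name(port_id):
    """Build the tap device name that is recorded in the VIF."""
    return ('tap' + port_id)[:NIC_NAME_LEN]


def is_vif_active(port):
    """A VIF counts as active if the port is ACTIVE or administratively down."""
    return port['admin_state_up'] is False or port['status'] == 'ACTIVE'


port = {'id': '7a00401c-ecbf-493d-9841-e902b40f66a7',
        'admin_state_up': True,
        'status': 'ACTIVE'}
print(tap_device_name(port['id']))
print(is_vif_active(port))
```

For the DHCP port shown earlier this yields tap7a00401c-ec, the same interface name that appears under the bridge.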

That is the code path by which nova interacts with neutron to create a port. We continue downward: next, the spawn code of the libvirt layer is executed.

    #/nova/compute/manager.py:ComputeManager
    @object_compat
    def _spawn(self, context, instance, image_meta, network_info,
               block_device_info, injected_files, admin_password,
               set_access_ip=False):
        """Spawn an instance with error logging and update its power state."""
        instance.vm_state = vm_states.BUILDING
        instance.task_state = task_states.SPAWNING
        instance.save(expected_task_state=task_states.BLOCK_DEVICE_MAPPING)
        try:
            self.driver.spawn(context, instance, image_meta,
                              injected_files, admin_password,
                              network_info,
                              block_device_info)
        except Exception:
            with excutils.save_and_reraise_exception():
                LOG.exception(_LE('Instance failed to spawn'),
                              instance=instance)
        self._update_instance_after_spawn(context, instance)

Here, self.driver is a /nova/virt/libvirt/driver.py:LibvirtDriver object, so the spawn function of the LibvirtDriver class is executed.

    #/nova/virt/libvirt/driver.py:LibvirtDriver
    # NOTE(ilyaalekseyev): Implementation like in multinics
    # for xenapi(tr3buchet)
    def spawn(self, context, instance, image_meta, injected_files,
              admin_password, network_info=None, block_device_info=None):
        disk_info = blockinfo.get_disk_info(CONF.libvirt.virt_type,
                                            instance,
                                            image_meta,
                                            block_device_info)
        self._create_image(context, instance,
                           disk_info['mapping'],
                           network_info=network_info,
                           block_device_info=block_device_info,
                           files=injected_files,
                           admin_pass=admin_password)
        xml = self._get_guest_xml(context, instance, network_info,
                                  disk_info, image_meta,
                                  block_device_info=block_device_info,
                                  write_to_disk=True)
        self._create_domain_and_network(context, xml, instance, network_info,
                                        disk_info,
                                        block_device_info=block_device_info)
        LOG.debug("Instance is running", instance=instance)
        def _wait_for_boot():
            """Called at an interval until the VM is running."""
            state = self.get_info(instance).state
            if state == power_state.RUNNING:
                LOG.info(_LI("Instance spawned successfully."),
                         instance=instance)
                raise loopingcall.LoopingCallDone()
        timer = loopingcall.FixedIntervalLoopingCall(_wait_for_boot)
        timer.start(interval=0.5).wait()

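After the image has been processed, the XML is generated (with the port and tap information written into it), and the VM is then created from that XML. This may raise some questions: on a fresh OpenStack installation, how does the bridge come into being when the first VM is created? How is the tap device attached to the bridge? We will answer these questions in the next article, which also deals with the interaction between nova and neutron.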
