I. System Environment

Official documentation:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

Official documentation for deploying a highly available k8s cluster with kubeadm:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

| System | IP | Hostname | Specs |
|---|---|---|---|
| CentOS 7.6 | 192.168.246.166 | k8s-master | 2 cores, 3 GB |
| CentOS 7.6 | 192.168.246.167 | k8s-node01 | 3 GB |
| CentOS 7.6 | 192.168.246.169 | k8s-node02 | 3 GB |

Note: make sure every machine has at least 2 CPU cores and 2 GB of memory.

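1. Set the hostname and the hosts file

Add local name resolution on every machine so that the nodes can resolve one another:

# vim /etc/hosts

192.168.246.166 kub-k8s-master

192.168.246.167 kub-k8s-node1

192.168.246.169 kub-k8s-node2

Then set the hostname on each machine (run each command on its own node) so that it matches the entry in /etc/hosts:

# hostnamectl set-hostname kub-k8s-master

# hostnamectl set-hostname kub-k8s-node1

# hostnamectl set-hostname kub-k8s-node2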
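The hosts entries can also be added without opening an editor. The following is only a minimal sketch under stated assumptions: the helper name add_host_entry is hypothetical (it is not part of the original steps), and it assumes the usual "IP name [aliases]" layout of /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# The third argument (file path) defaults to /etc/hosts.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Look for NAME as an exact host field on any non-comment line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file" 2>/dev/null; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on every machine):
#   add_host_entry 192.168.246.166 kub-k8s-master
#   add_host_entry 192.168.246.167 kub-k8s-node1
#   add_host_entry 192.168.246.169 kub-k8s-node2
```

Because the helper checks before appending, running it more than once should not create duplicate lines.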

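2. Disable the firewall and SELinux

1. Stop and disable the firewall:

# systemctl stop firewalld

# systemctl disable firewalld

2. Switch SELinux to permissive mode for the current boot:

# setenforce 0

3. Edit /etc/selinux/config and set SELINUX to disabled so that the change survives a reboot:

# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

The file should then contain:

SELINUX=disabled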

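3. Disable swap

Since Kubernetes 1.8, swap must be turned off; with the default configuration, kubelet will not start while swap is enabled.

- Option 1: lift the restriction with the kubelet startup flag --fail-swap-on=false.

- Option 2: turn off swap on the system (used here).

1. Turn off swap on the running system:

# swapoff -a

2. Comment out the swap entry in /etc/fstab so that it is not mounted at boot, then confirm with free -m that swap is off:

[root@localhost /]# sed -i 's/.*swap.*/#&/' /etc/fstab

[root@localhost /]# free -m

              total        used        free      shared  buff/cache   available
Mem:           3935         144        3415           8         375        3518
Swap:             0           0           0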
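The sed command above prefixes every line that mentions swap, including lines that are already commented, so running it twice yields "##". A slightly more careful variant is sketched below; the function name comment_swap_entries is hypothetical, and the sketch assumes GNU sed (as shipped with CentOS) and whitespace-separated fstab fields.

```shell
#!/bin/bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so re-running is harmless.
comment_swap_entries() {
  local file="${1:-/etc/fstab}"
  # For non-comment lines that contain a whitespace-delimited "swap" field,
  # insert '#' at the start of the line.
  sed -i -E '/^[[:space:]]*#/! { /[[:space:]]swap[[:space:]]/ s/^/#/; }' "$file"
}

# Example (run as root on every node, after "swapoff -a"):
#   comment_swap_entries /etc/fstab
```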

II. Install Docker

All machines must have the images.

1. Install Docker

Run on all three machines:

# yum remove docker \
docker-client \
docker-client-latest \
docker-common \
docker-latest \
docker-latest-logrotate \
docker-logrotate \
docker-selinux \
docker-engine-selinux \
docker-engine

# yum install -y yum-utils device-mapper-persistent-data lvm2 git

# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# yum install docker-ce -y

Start Docker and enable it at boot:

# systemctl start docker && systemctl enable docker

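2. Required images

Note: pulling the images in advance is not strictly required, because kubeadm pulls them automatically during initialization. However, the automatic pull uses the official k8s registry, and that pull usually fails for us, so here we pull the images from the Aliyun mirror by hand.

Important: the versions of the pulled images must match the versions of kubelet and kubectl (do this on every node).

I put this into a script that pulls the images and then changes their tags. As for why these exact versions: the error messages from a later initialization showed that images of these versions are required.

Although the images are pulled from Aliyun, they still have to be retagged to the names that kubeadm recognizes; otherwise kubeadm will not find them during initialization because the image names do not match.

Save the following as a script and run it (on all nodes; this is important enough to say three times!)

[root@k8s-master ~]# vim dockpullImages1.18.1.sh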

#!/bin/bash

##Names of the required images

#k8s.gcr.io/kube-apiserver:v1.18.1

#k8s.gcr.io/kube-controller-manager:v1.18.1

#k8s.gcr.io/kube-scheduler:v1.18.1

#k8s.gcr.io/kube-proxy:v1.18.1

#k8s.gcr.io/pause:3.2

#k8s.gcr.io/etcd:3.4.3-0

#k8s.gcr.io/coredns:1.6.7

###Pull the images

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.18.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.18.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.18.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.18.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.7

###Retag the images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.18.1 k8s.gcr.io/kube-apiserver:v1.18.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.18.1 k8s.gcr.io/kube-controller-manager:v1.18.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.18.1 k8s.gcr.io/kube-scheduler:v1.18.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.18.1 k8s.gcr.io/kube-proxy:v1.18.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7

Run the script:

# sh dockpullImages1.18.1.sh
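The pull and tag commands in the script follow one pattern per image, so they can also be generated from a list. The sketch below is an alternative layout, not the author's script: the function name print_pull_and_tag is hypothetical, and it only prints the docker commands so they can be reviewed before anything is executed.

```shell
#!/bin/bash
# Mirror to pull from and the registry name that kubeadm v1.18.1 expects.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io

# Same seven images as in the script above.
IMAGES="kube-apiserver:v1.18.1
kube-controller-manager:v1.18.1
kube-scheduler:v1.18.1
kube-proxy:v1.18.1
pause:3.2
etcd:3.4.3-0
coredns:1.6.7"

# Hypothetical helper: print one "docker pull" and one "docker tag" per image.
print_pull_and_tag() {
  local image
  for image in $IMAGES; do
    echo "docker pull ${MIRROR}/${image}"
    echo "docker tag ${MIRROR}/${image} ${TARGET}/${image}"
  done
}

# Printing is harmless; pipe the output to "sh" to actually run it:
#   print_pull_and_tag | sh
print_pull_and_tag
```

Keeping the image list in one place makes it easier to change the version later, since the version then appears only in IMAGES.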

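III. Install kubelet, kubeadm and kubectl (run on all nodes)

- kubelet runs on every node in the cluster and is responsible for starting Pods and containers.

- kubeadm is used to initialize the cluster.

- kubectl is the Kubernetes command-line tool. With kubectl you can deploy and manage applications, inspect resources, and create, delete and update components.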

1. Configure the yum repository

# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2、安装 kubeadm 和相关工具

所有节点:

1.安装

yum makecache fast

yum install -y kubelet kubeadm kubectl ipvsadm #注意,这样默认是下载最新版本v1.22.2

======================================================================

[root@k8s-master ~]# yum install -y kubelet-1.18.1-0.x86_64 kubeadm-1.18.1-0.x86_64 kubectl-1.18.1-0.x86_64 ipvsadm

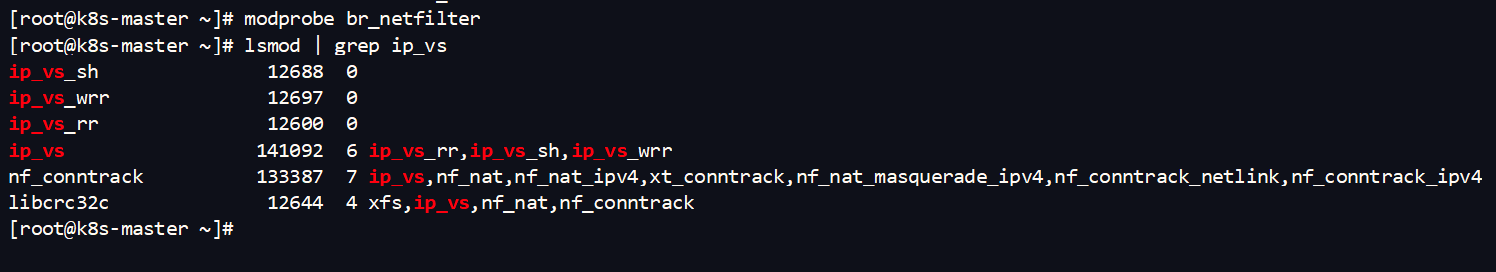

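2. Load the ipvs kernel modules

The modules have to be loaded again after every reboot (the commands can be written to /etc/rc.local so that they run automatically at boot).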

# modprobe ip_vs

# modprobe ip_vs_rr

# modprobe ip_vs_wrr

# modprobe ip_vs_sh

# modprobe nf_conntrack_ipv4

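3. Load the modules at boot

Append the modprobe commands above to /etc/rc.local and make the file executable:

# vim /etc/rc.local

# chmod +x /etc/rc.local

Then reboot the server:

# reboot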
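On systemd-based systems such as CentOS 7, an alternative to /etc/rc.local is a file under /etc/modules-load.d/, which systemd-modules-load reads at boot (one module name per line). The sketch below is an assumption-labeled variant, not the author's method: the helper name write_ipvs_modules_conf and the file name ipvs.conf are hypothetical, and br_netfilter is added to the list because a later step loads it by hand.

```shell
#!/bin/bash
# Hypothetical helper: write the module list in modules-load.d format.
# The destination defaults to /etc/modules-load.d/ipvs.conf (file name is an assumption).
write_ipvs_modules_conf() {
  local dest="${1:-/etc/modules-load.d/ipvs.conf}"
  # One kernel module name per line; the first five are the modules loaded above.
  printf '%s\n' \
    ip_vs \
    ip_vs_rr \
    ip_vs_wrr \
    ip_vs_sh \
    nf_conntrack_ipv4 \
    br_netfilter > "$dest"
}

# Example (run as root on every node):
#   write_ipvs_modules_conf
#   systemctl restart systemd-modules-load
```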

4. Configure kernel parameters

Set the forwarding-related parameters, otherwise errors may occur later:

# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

5. Apply the settings

# sysctl --system

6. If net.bridge.bridge-nf-call-iptables reports an error, load the br_netfilter module and apply the settings again

# modprobe br_netfilter

# sysctl -p /etc/sysctl.d/k8s.conf

7. Check that the ipvs modules are loaded

# lsmod | grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 141092 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133387 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

3. Start kubelet

1. Configure kubelet to use the pause image

kubelet must use the same cgroup driver as Docker, so first read the driver from docker info:

# systemctl start docker && systemctl enable docker

[root@k8s-master ~]# DOCKER_CGROUPS=$(docker info 2>/dev/null | grep 'Cgroup Driver' | awk '{print $3}')

[root@k8s-master ~]# echo $DOCKER_CGROUPS

cgroupfs

2. Configure kubelet with the pause image from the domestic (Aliyun) mirror

Skip this step if the images were retagged to k8s.gcr.io as above, and use step 3 instead.

# cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"
EOF

3. Configure kubelet with the pause image under its k8s.gcr.io name (used here)

# cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=k8s.gcr.io/pause:3.2"
EOF

The same file with the driver written out explicitly:

# cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=k8s.gcr.io/pause:3.2"
EOF
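Since an empty or mistyped driver value would end up silently in /etc/sysconfig/kubelet, it may help to validate it before writing the file. The following is a minimal sketch; the function name render_kubelet_args is hypothetical, and it only accepts the two driver names Docker normally reports (cgroupfs and systemd).

```shell
#!/bin/bash
# Hypothetical helper: print the KUBELET_EXTRA_ARGS line for a given cgroup driver.
# The pause image defaults to the retagged k8s.gcr.io/pause:3.2 used in this guide.
render_kubelet_args() {
  local driver="$1" pause_image="${2:-k8s.gcr.io/pause:3.2}"
  case "$driver" in
    cgroupfs|systemd) ;;   # the two values Docker normally reports
    *)
      echo "unexpected cgroup driver: '$driver'" >&2
      return 1
      ;;
  esac
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s --pod-infra-container-image=%s"\n' \
    "$driver" "$pause_image"
}

# Example (run as root; DOCKER_CGROUPS is the variable set from docker info):
#   render_kubelet_args "$DOCKER_CGROUPS" > /etc/sysconfig/kubelet
```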

Start kubelet:

# systemctl daemon-reload

# systemctl enable kubelet && systemctl restart kubelet

If you run systemctl status kubelet at this point, it reports errors:

10月 11 00:26:43 node1 systemd[1]: kubelet.service: main process exited, code=exited, status=255/n/a

10月 11 00:26:43 node1 systemd[1]: Unit kubelet.service entered failed state.

10月 11 00:26:43 node1 systemd[1]: kubelet.service failed.

Only the systemd journal (journalctl -xefu kubelet) shows the actual error:

unable to load client CA file /etc/kubernetes/pki/ca.crt: open /etc/kubernetes/pki/ca.crt: no such file or directory

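This error is resolved automatically once kubeadm init has generated the CA certificate, so it can be ignored for now.

In short, kubelet keeps restarting until kubeadm init has been run.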

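4. Initialize the master

Before initializing, make sure the firewall and SELinux are turned off and that the machine has at least 2 CPU cores.

Run the initialization as follows:

[root@master ~]# kubeadm init --kubernetes-version=v1.18.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.246.166 --ignore-preflight-errors=Swap

Change apiserver-advertise-address to the IP of your own master node.

Parameters:

- --kubernetes-version: the k8s version to install.

- --apiserver-advertise-address: the IP address that kube-apiserver advertises and listens on, i.e. the master's own IP.

- --pod-network-cidr: the Pod network range; 10.244.0.0/16 here, the same network as in the flannel configuration.

- --service-cidr: the Service network range (not set in the command above).

- --image-repository: the image registry to pull from, for example the Aliyun mirror (not set in the command above).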
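The last two parameters in the list are described but not used in the command above. As an illustration only, the sketch below assembles an init command that sets them; the function name build_init_cmd is hypothetical, 10.96.0.0/12 is kubeadm's default Service range, and the registry path is the Aliyun mirror used earlier for pulling images. This variant was not run by the author, so treat it as an untested alternative to the retagging approach.

```shell
#!/bin/bash
# Hypothetical helper: print a kubeadm init command that also sets
# --service-cidr and --image-repository.
build_init_cmd() {
  local advertise_ip="$1"
  local repo="${2:-registry.cn-hangzhou.aliyuncs.com/google_containers}"
  printf 'kubeadm init --kubernetes-version=v1.18.1 --apiserver-advertise-address=%s --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --image-repository=%s\n' \
    "$advertise_ip" "$repo"
}

# Example: print the command for this guide's master and review it before running it.
#   build_init_cmd 192.168.246.166
```

With --image-repository set, kubeadm should look for the control-plane images under that registry name, so the k8s.gcr.io tags would not be needed for the init itself.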

Output like the following means the initialization succeeded:

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.25.63.1:6443 --token cmpyar.n4arbbq0i9irhmum \

--discovery-token-ca-cert-hash sha256:542e77bad47c73c3336ce601ad9fe2078381ea8b04b95f377f46520296a79fe2

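After a successful init, note the last command in the output: this kubeadm join command is what adds nodes to the cluster.

Save the kubeadm join command; it is needed later.

If the initialization fails, clean up with the following command and initialize again: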

# kubeadm reset

5. Configure kubectl

Run the commands from the init output; do this on the master node:

[root@kub-k8s-master ~]# rm -rf $HOME/.kube

[root@kub-k8s-master ~]# mkdir -p $HOME/.kube

[root@kub-k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@kub-k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

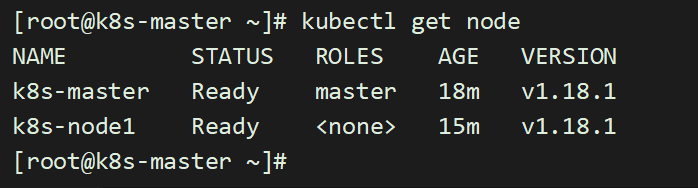

List the nodes:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 2m41s v1.18.1

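6. Install a network plugin

For the Kubernetes cluster to work, a Pod network must be installed; otherwise Pods cannot communicate with each other.

Kubernetes supports several networking solutions. Here we use flannel first; Canal is discussed later.

On the master node, download the manifest:

# cd ~ && mkdir flannel && cd flannel

# curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The download is likely to fail, so the file content is included here; copy it into ~/flannel/kube-flannel.yml. The amd64 DaemonSet passes --iface=ens33 to flanneld; change it to the name of your own network interface.

(1)kube-flannel.yml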

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=ens33
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      - key: node.kubernetes.io/not-ready
        operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

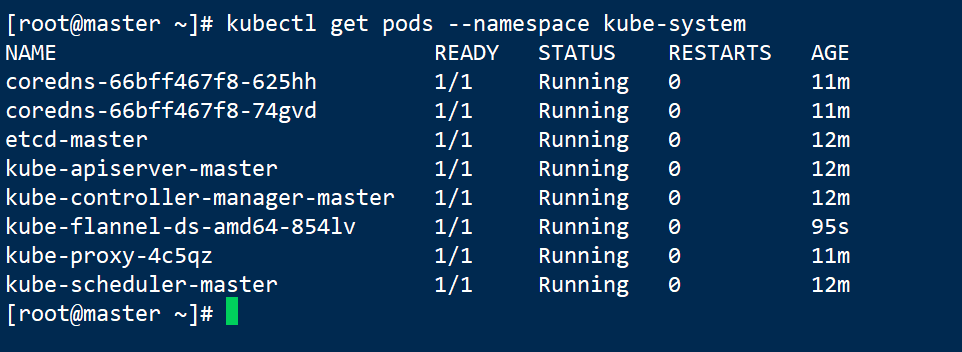

Apply flannel

# kubectl apply -f ~/flannel/kube-flannel.yml #after applying, wait a while for the Pods to start

# kubectl get pods --namespace kube-system

NAME READY STATUS RESTARTS AGE

coredns-5644d7b6d9-sm8hs 1/1 Running 0 9m18s

coredns-5644d7b6d9-vddll 1/1 Running 0 9m18s

etcd-kub-k8s-master 1/1 Running 0 8m14s

kube-apiserver-kub-k8s-master 1/1 Running 0 8m17s

kube-controller-manager-kub-k8s-master 1/1 Running 0 8m20s

kube-flannel-ds-amd64-9wgd8 1/1 Running 0 8m42s

kube-proxy-sgphs 1/1 Running 0 9m18s

kube-scheduler-kub-k8s-master 1/1 Running 0 8m10s

Check the status of the Pods and Services:

# kubectl get pods --namespace kube-system

# kubectl get service

# kubectl get svc --namespace kube-system

A node shows the Ready status only after the network plugin has been installed and configured.

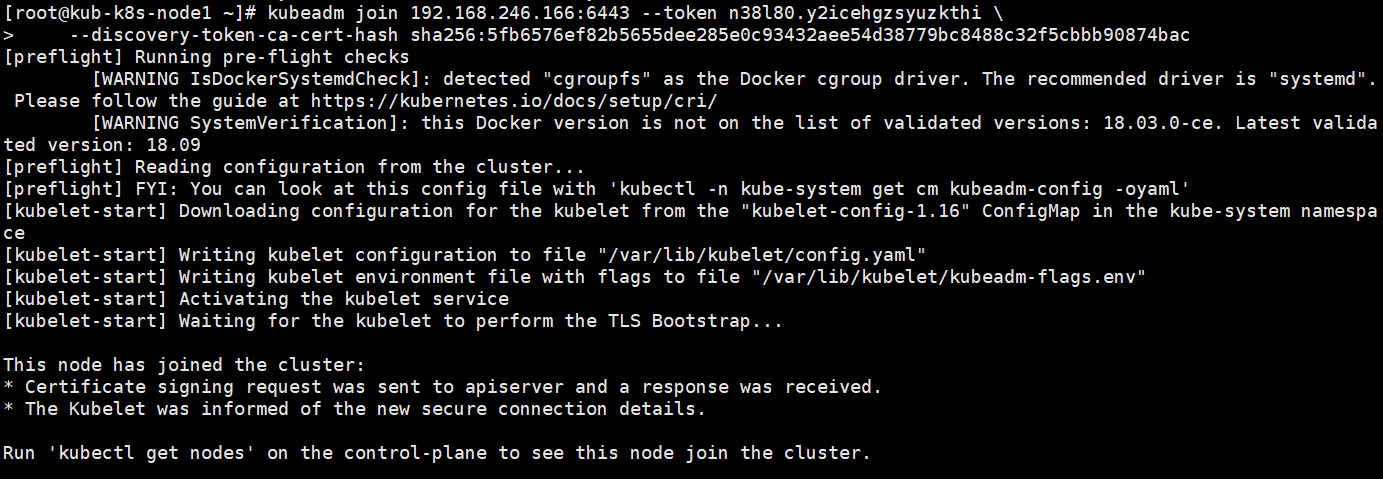

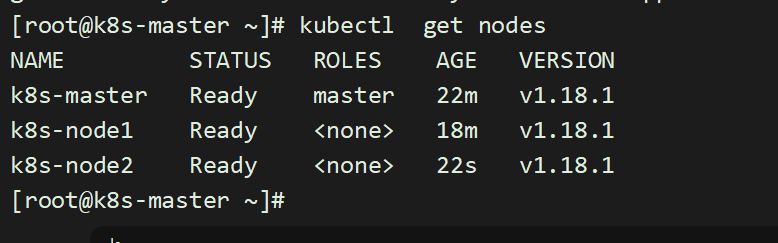
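Rather than re-running kubectl get nodes by eye, the STATUS column can be checked in a small loop. The sketch below is an illustration under stated assumptions: the function name count_not_ready is hypothetical, and it expects the default column layout of kubectl get nodes --no-headers (name first, status second). A status such as "Ready,SchedulingDisabled" is counted as not ready by this simple comparison.

```shell
#!/bin/bash
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin
# and print how many nodes have a STATUS other than exactly "Ready".
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Example: poll every 5 seconds until every node reports Ready.
#   until [ "$(kubectl get nodes --no-headers | count_not_ready)" -eq 0 ]; do
#     sleep 5
#   done
```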

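7. Add node1 and node2

(1)On all worker nodes

Log in to each worker node and make sure docker, kubeadm, kubelet and kubectl are installed.

If the join reports an error about IP forwarding, enable it:

# sysctl -w net.ipv4.ip_forward=1

Run the join command on every worker node; it is the command printed at the end of a successful kubeadm init on the master:

# kubeadm join 192.168.246.166:6443 --token 93erio.hbn2ti6z50he0lqs \
--discovery-token-ca-cert-hash sha256:3bc60f06a19bd09f38f3e05e5cff4299011b7110ca3281796668f4edb29a56d9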

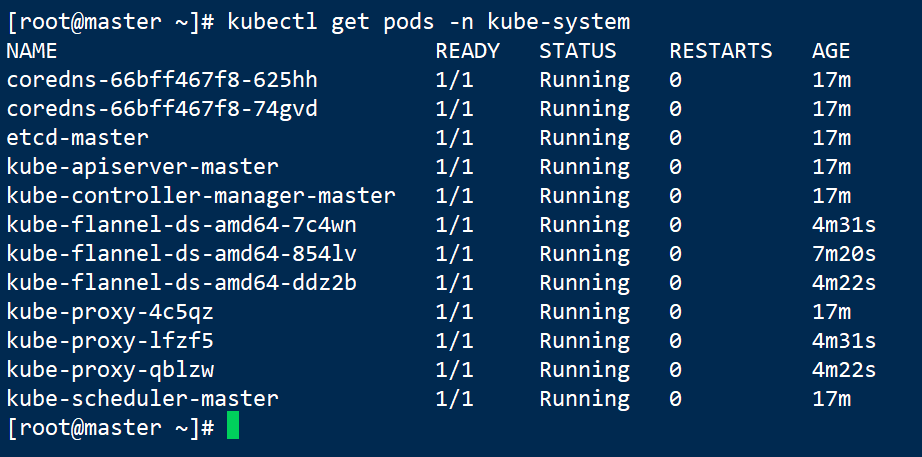

(2)On the master

Checks:

1. List the pods:

[root@kub-k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5644d7b6d9-sm8hs 1/1 Running 0 39m

coredns-5644d7b6d9-vddll 1/1 Running 0 39m

etcd-kub-k8s-master 1/1 Running 0 37m

kube-apiserver-kub-k8s-master 1/1 Running 0 38m

kube-controller-manager-kub-k8s-master 1/1 Running 0 38m

kube-flannel-ds-amd64-9wgd8 1/1 Running 0 38m

kube-flannel-ds-amd64-lffc8 1/1 Running 0 2m11s

kube-flannel-ds-amd64-m8kk2 1/1 Running 0 2m2s

kube-proxy-dwq9l 1/1 Running 0 2m2s

kube-proxy-l77lz 1/1 Running 0 2m11s

kube-proxy-sgphs 1/1 Running 0 39m

kube-scheduler-kub-k8s-master 1/1 Running 0 37m

2. Inspect a failing pod:

[root@kub-k8s-master ~]# kubectl describe pods kube-flannel-ds-sr6tq -n kube-system

Name: kube-flannel-ds-sr6tq

Namespace: kube-system

Priority: 0

PriorityClassName: <none>

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulling 12m kubelet, node2 pulling image "registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel:v0.10.0-amd64"

Normal Pulled 11m kubelet, node2 Successfully pulled image "registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel:v0.10.0-amd64"

Normal Created 11m kubelet, node2 Created container

Normal Started 11m kubelet, node2 Started container

Normal Created 11m (x4 over 11m) kubelet, node2 Created container

Normal Started 11m (x4 over 11m) kubelet, node2 Started container

Normal Pulled 10m (x5 over 11m) kubelet, node2 Container image "registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel:v0.10.0-amd64" already present on machine

Normal Scheduled 7m15s default-scheduler Successfully assigned kube-system/kube-flannel-ds-sr6tq to node2

Warning BackOff 7m6s (x23 over 11m) kubelet, node2 Back-off restarting failed container

3. In this situation, simply delete the failing pod:

[root@kub-k8s-master ~]# kubectl delete pod kube-flannel-ds-sr6tq -n kube-system

pod "kube-flannel-ds-sr6tq" deleted

4. List the pods again:

[root@kub-k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5644d7b6d9-sm8hs 1/1 Running 0 44m

coredns-5644d7b6d9-vddll 1/1 Running 0 44m

etcd-kub-k8s-master 1/1 Running 0 42m

kube-apiserver-kub-k8s-master 1/1 Running 0 43m

kube-controller-manager-kub-k8s-master 1/1 Running 0 43m

kube-flannel-ds-amd64-9wgd8 1/1 Running 0 43m

kube-flannel-ds-amd64-lffc8 1/1 Running 0 7m10s

kube-flannel-ds-amd64-m8kk2 1/1 Running 0 7m1s

kube-proxy-dwq9l 1/1 Running 0 7m1s

kube-proxy-l77lz 1/1 Running 0 7m10s

kube-proxy-sgphs 1/1 Running 0 44m

kube-scheduler-kub-k8s-master 1/1 Running 0 42m

5. List the nodes:

[root@kub-k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kub-k8s-master Ready master 43m v1.16.1

kub-k8s-node1 Ready <none> 6m46s v1.16.1

kub-k8s-node2 Ready <none> 6m37s v1.16.1

The cluster is now set up.

IV. Troubleshooting

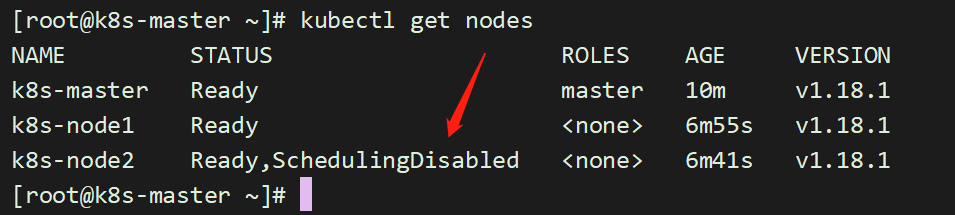

1. Remove a node

[root@k8s-master ~]# kubectl drain k8s-node2 --delete-local-data --force --ignore-daemonsets

[root@k8s-master ~]# kubectl delete nodes k8s-node2

node "k8s-node2" deleted

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 11m v1.18.1

k8s-node1 Ready <none> 7m39s v1.18.1 #node2 has been removed

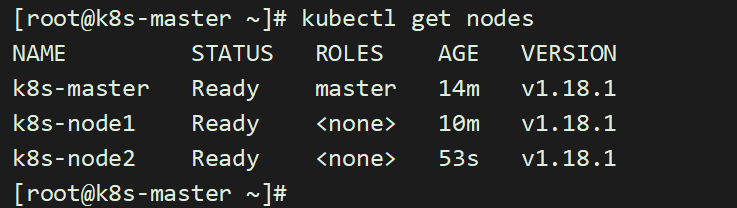

2. Re-add a removed node

Prerequisite: the token has not expired.

To add the node back to the cluster, do the following on the node being re-added.

Step 1: stop kubelet

[root@k8s-node2 ~]# systemctl stop kubelet

Step 2: delete the old configuration files

[root@k8s-node2 ~]# rm -rf /etc/kubernetes/*

Step 3: join the cluster

The earlier token is still within its validity period here, so the original join command can be run directly:

[root@k8s-node2 ~]# kubeadm join 172.16.2.103:6443 --token jvjxs2.xu92rq4fetgtpy1o --discovery-token-ca-cert-hash sha256:56159a0de43781fd57f1df829de4fe906cf355f4fec8ff7f6f9078c77c8c292d

Step 4: verify

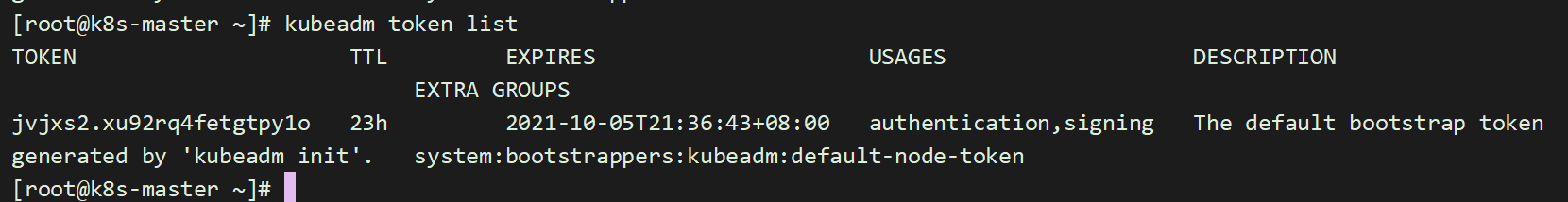

3、忘掉token再次添加进k8s集群

前提:token未失效

第一步:主节点执行命令

在主控节点,获取token:

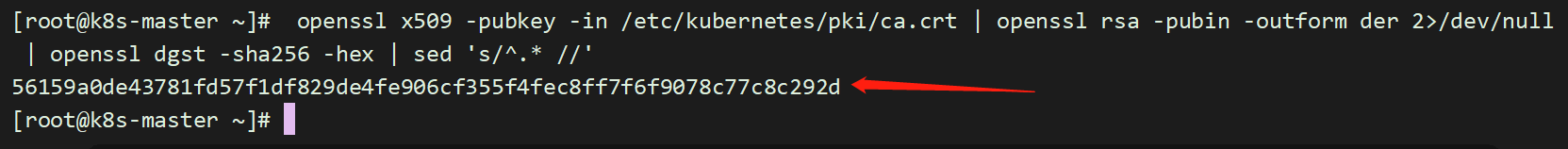

第二步: 获取ca证书sha256编码hash值

在主控节点

[root@k8s-master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

56159a0de43781fd57f1df829de4fe906cf355f4fec8ff7f6f9078c77c8c292d

Step 3: remove node2 on the master

[root@k8s-master ~]# kubectl drain k8s-node2 --delete-local-data --force --ignore-daemonsets

[root@k8s-master ~]# kubectl delete nodes k8s-node2

[root@k8s-master ~]# kubectl get node

Step 4: run the following on the worker node

[root@k8s-node2 ~]# systemctl stop kubelet

[root@k8s-node2 ~]# rm -rf /etc/kubernetes/*

Step 5: join the cluster

Specify the master's IP with port 6443, and prefix the certificate hash obtained above with sha256:

[root@k8s-node2 ~]# kubeadm join 172.16.2.103:6443 --token jvjxs2.xu92rq4fetgtpy1o --discovery-token-ca-cert-hash sha256:56159a0de43781fd57f1df829de4fe906cf355f4fec8ff7f6f9078c77c8c292d
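The join command is simply the endpoint, the token and the prefixed hash put together. A minimal sketch of that assembly follows; the function name build_join_cmd is hypothetical. On the master, kubeadm token list shows existing tokens, and kubeadm token create --print-join-command prints a complete join command with a fresh token, which may be simpler when the old token cannot be recovered.

```shell
#!/bin/bash
# Hypothetical helper: assemble a kubeadm join command from its three parts.
# The CA hash may be passed with or without the "sha256:" prefix.
build_join_cmd() {
  local endpoint="$1" token="$2" ca_hash="$3"
  ca_hash="sha256:${ca_hash#sha256:}"   # normalise to exactly one prefix
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
    "$endpoint" "$token" "$ca_hash"
}

# Example with the values shown in this section:
#   build_join_cmd 172.16.2.103:6443 jvjxs2.xu92rq4fetgtpy1o \
#     56159a0de43781fd57f1df829de4fe906cf355f4fec8ff7f6f9078c77c8c292d
```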

Step 6: verify on the master

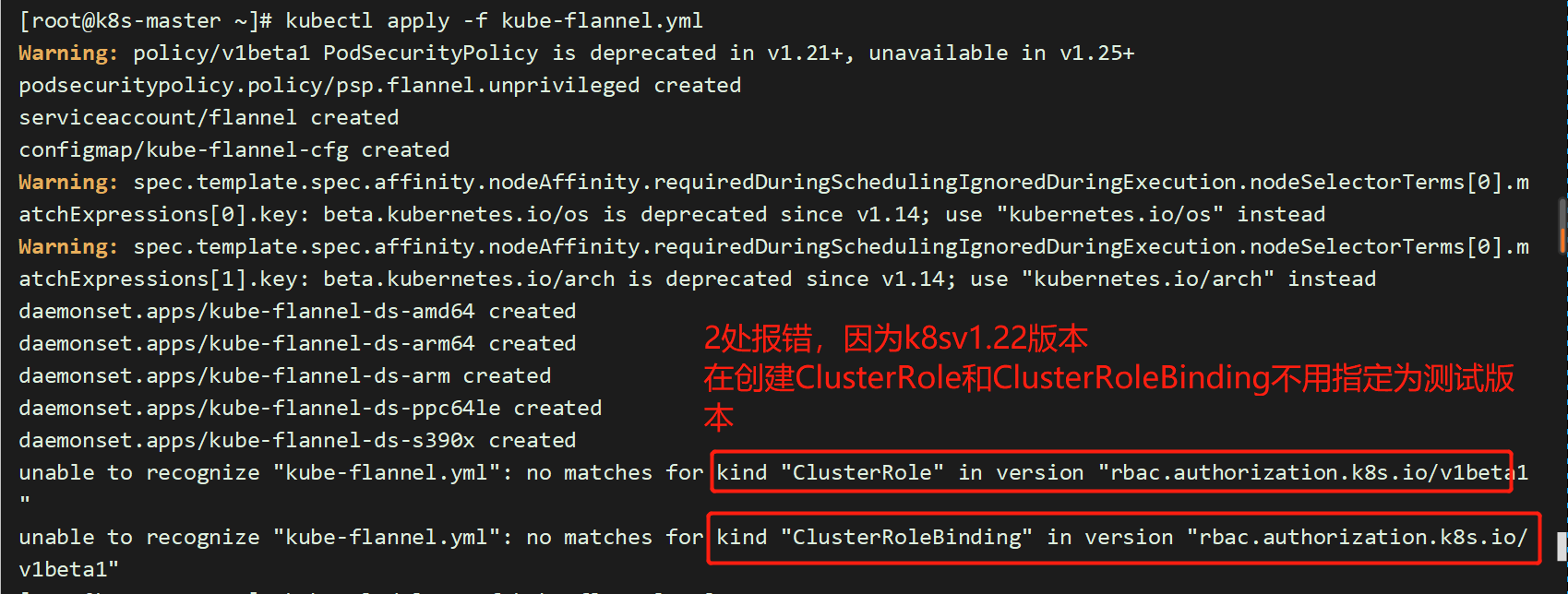

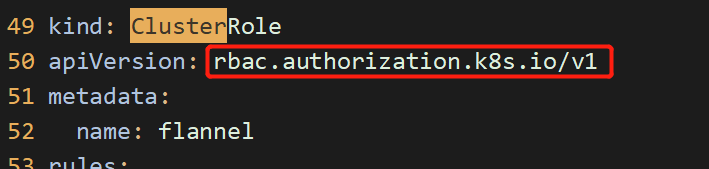

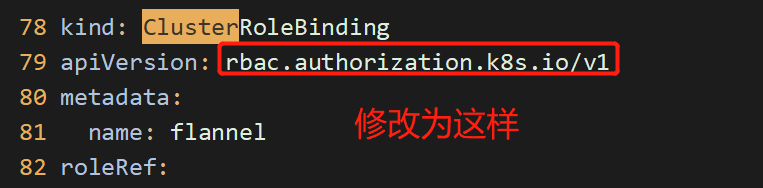

4、加载flannel失败

[root@k8s-master flannel]# kubectl apply -f kube-flannel.yml

报错如下

[root@k8s-master flannel]# kubectl delete -f kube-flannel.yml

[root@k8s-master flannel]# vim kube-flannel.yml #进行版本的修改

重新创建即可

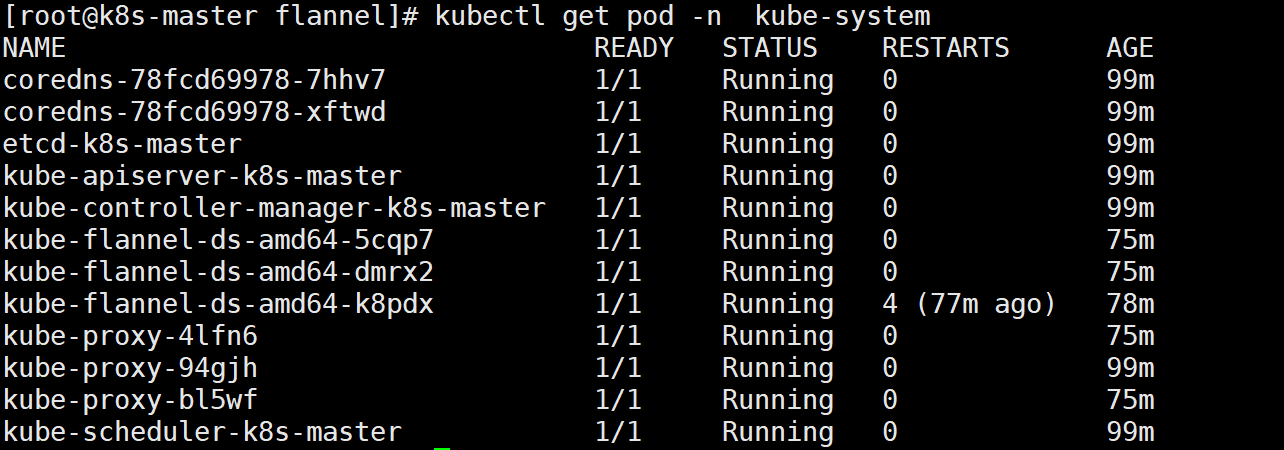

[root@k8s-master flannel]# kubectl apply -f kube-flannel.yml

查看文件中,指定的api对象,是否都创建成功

[root@k8s-master flannel]# kubectl get pod -n kube-system

如果有pod信息异常,一直不成功,可以单独删除这个pod,它会自动生成最新的

5. Switch to a static IP

vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

NAME=ens33

DEVICE=ens33

ONBOOT=yes

NETMASK=255.255.255.0

IPADDR=192.168.118.191

DNS1=192.168.118.2

GATEWAY=192.168.118.2

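6. Pods and containers cannot communicate across hosts after deploying flannel

- Option 1: restart docker.

- Option 2: remove the flannel network and deploy it again.

Option 2 is described here:

1. Delete flannel on the master node

kubectl delete -f kube-flannel.yml

2. On the worker nodes, clean up the files and interfaces that flannel left behind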

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

rm -f /etc/cni/net.d/*
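If one of the two interfaces does not exist on a node, the corresponding commands above print errors. The sketch below generates the cleanup commands only for interfaces that are actually present; it is an illustration, not the author's procedure. The function name flannel_cleanup_cmds is hypothetical, interface presence is checked via /sys/class/net, and "ip link set DEV down" is used as the iproute2 equivalent of "ifconfig DEV down".

```shell
#!/bin/bash
# Hypothetical helper: print the cleanup commands for this node.
# The optional argument overrides the directory used to detect interfaces.
flannel_cleanup_cmds() {
  local net_dir="${1:-/sys/class/net}" dev
  for dev in cni0 flannel.1; do
    # Only emit link commands for interfaces that exist on this node.
    if [ -e "${net_dir}/${dev}" ]; then
      echo "ip link set ${dev} down"
      echo "ip link delete ${dev}"
    fi
  done
  echo "rm -rf /var/lib/cni/"
  echo "rm -f /etc/cni/net.d/*"
}

# Example: review the commands first, then execute them as root.
#   flannel_cleanup_cmds
#   flannel_cleanup_cmds | sh
```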

3. Redeploy the flannel network

[root@k8s-master01 flannel]# kubectl create -f kube-flannel.yml

[root@k8s-master01 flannel]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5c98db65d4-8bpdd 1/1 Running 0 17s

coredns-5c98db65d4-knfcj 1/1 Running 0 43s

etcd-k8s-master01 1/1 Running 2 10d

kube-apiserver-k8s-master01 1/1 Running 2 10d

kube-controller-manager-k8s-master01 1/1 Running 3 10d

kube-flannel-ds-amd64-56hsf 1/1 Running 0 25m

kube-flannel-ds-amd64-56t49 1/1 Running 0 25m

kube-flannel-ds-amd64-qz42z 1/1 Running 0 25m

kube-proxy-5fn9m 1/1 Running 1 10d

kube-proxy-6hjvp 1/1 Running 2 10d

kube-proxy-t47n9 1/1 Running 2 10d

kube-scheduler-k8s-master01 1/1 Running 4 10d

kubernetes-dashboard-7d75c474bb-4r7hc 1/1 Running 0 23m

[root@k8s-master01 flannel]#

The flannel network now shows as healthy, and containers can communicate across hosts.
