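This note is based on the sm8650 platform and looks at inter-processor communication between the APSS and the MPSS. Only the QMI LOC service used by the GPS module is covered here.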

1. Service ID table

As described in the document "KBA-180423015201 qmi service id table", the table below shows the QMI service ID entry used by GPS.

| Svc ID | Service | Team | Service Runs On | Client Runs On |
| --- | --- | --- | --- | --- |
| 16 | QMI LOC (GPS Location Service) | GPS | MPSS | APSS |

2. Debugging the QMI framework log

// Create a new qmi_fw.conf

QMI_CCI_DEBUG_LEVEL=0

QMI_CSI_DEBUG_LEVEL=0

adb root

adb remount

adb push qmi_fw.conf /vendor/etc/qmi_fw.conf

adb push qmi_fw.conf /etc/qmi_fw.conf

adb reboot

The file is pushed to both paths because of the macro definition below. Whether or not QMI_CCI_SYSTEM is defined, pushing to both paths covers either case.

#ifdef QMI_CCI_SYSTEM

#define QMI_FW_CONF_FILE "/etc/qmi_fw.conf"

#else

#define QMI_FW_CONF_FILE "/vendor/etc/qmi_fw.conf"

#endif

First, a short log excerpt (start a GPS fix on the phone and watch for QMI_LOC_EVENT_POSITION_REPORT_IND_V02, whose msg_id is 0x0024):

08-08 15:49:14.612 gps 1242 2665 D QMI_FW : QCCI: data_msg_reader_thread: Received 433 bytes from 25

08-08 15:49:14.612 gps 1242 2665 D QMI_FW : QCCI: QMI_CCI_RX: cntl_flag - 04, txn_id - 008c, msg_id - 0024, msg_len - 01aa, svc_id - 00000010

08-08 15:49:14.612 gps 1242 2665 V LocSvc_api_v02: locClientIndCb:1096]: Indication: msg_id=36 buf_len=426 pCallbackData = 0xb40000708c213d80

08-08 15:49:14.612 gps 1242 2665 V LocSvc_api_v02: locClientGetSizeByEventIndId:2653]: event ind Id 36 size = 6640

08-08 15:49:14.612 gps 1242 2665 V LocSvc_api_v02: locClientGetSizeAndTypeByIndId:869]: indId 36 is an event size = 6640

08-08 15:49:14.612 gps 1242 2665 D LocSvc_ApiV02: <--- globalEventCb line 192 QMI_LOC_EVENT_POSITION_REPORT_IND_V02

08-08 15:49:14.612 gps 1242 2665 V LocSvc_ApiV02: globalEventCb:202] client = 0xb40000708c213d80, event id = 0x24, client cookie ptr = 0xb40000708c241000

08-08 15:49:14.612 gps 1242 2665 V LocSvc_LBSApiV02: eventCb:58] client = 0xb40000708c213d80, event id = 36, event name = QMI_LOC_EVENT_POSITION_REPORT_IND_V02 payload = 0x7075fe9d38

08-08 15:49:14.612 gps 1242 2665 D LocSvc_ApiV02: eventCb:7029] event id = 0x24

08-08 15:49:14.612 gps 1242 2665 D LocSvc_ApiV02: Reporting position from V2 Adapter

08-08 15:49:14.612 gps 1242 2665 V LocSvc_ApiV02: reportPosition:2719] mHlosQtimer2=3801963273

08-08 15:49:14.612 gps 1242 2665 D LocSvc_ApiV02: reportPosition:2751] QMI_PosPacketTime 191(sec) 127429144(nsec), QMI_spoofReportMask 0

08-08 15:49:14.612 gps 1242 1348 V LocSvc_nmea: Entering loc_nmea_generate_sv line 2185

08-08 15:49:14.612 gps 1242 2665 V LocSvc_ApiV02: reportPosition:2759 jammerIndicator is present len=19

08-08 15:49:14.612 gps 1242 2665 V LocSvc_ApiV02: reportPosition:2780] agcMetricDb[1]=-5702; bpMetricDb[1]=5702

The msg_id can be found in location_service_v02.h:

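#define QMI_LOC_EVENT_POSITION_REPORT_IND_V02 0x0024

The svc_id is defined in location_service_v02.c as the third member of loc_qmi_idl_service_object_v02 (0x10, i.e. 16 in decimal, which matches both the service ID table and the svc_id in the log).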

/*Service Object*/

struct qmi_idl_service_object loc_qmi_idl_service_object_v02 = {

0x06,

0x02,

0x10,

10015,

{ sizeof(loc_service_command_messages_v02)/sizeof(qmi_idl_service_message_table_entry),

sizeof(loc_service_response_messages_v02)/sizeof(qmi_idl_service_message_table_entry),

sizeof(loc_service_indication_messages_v02)/sizeof(qmi_idl_service_message_table_entry) },

{ loc_service_command_messages_v02, loc_service_response_messages_v02, loc_service_indication_messages_v02},

&loc_qmi_idl_type_table_object_v02,

0xA1,

NULL

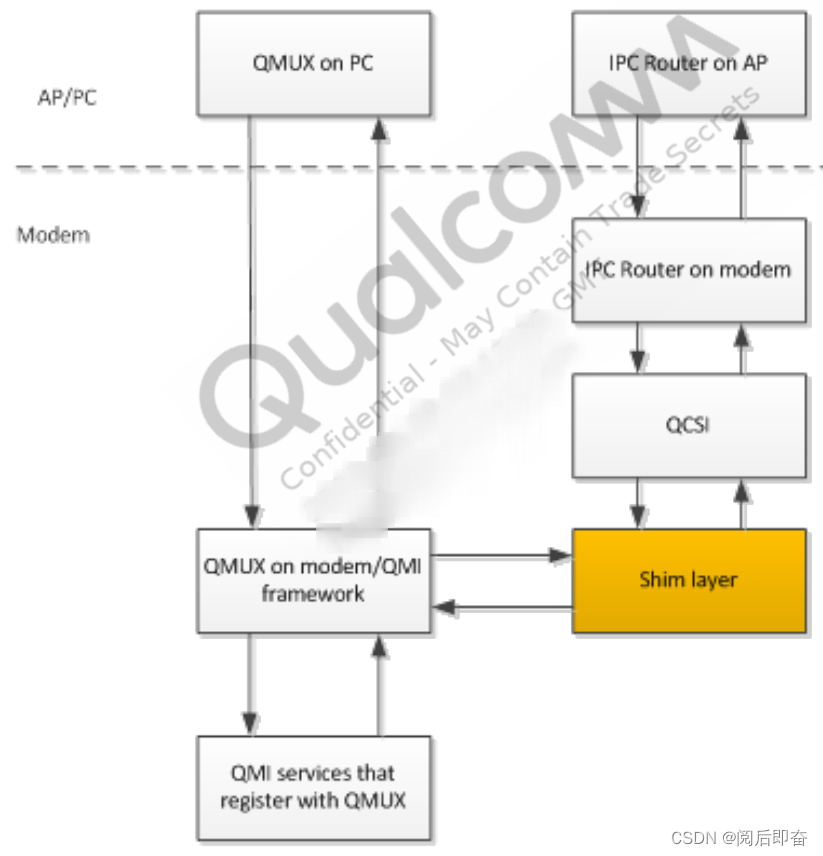

};3、QMI架构变化

First, refer to the document "80-NV396-24_REV_C_Presentation__MPSS_TH_2_X_MPSS_AT_X_QMI_Framework_Change_and_Shim_Layer_Overview".

Next, the document "80-PF777-4_REV_C_Linux_Android_MPROC_Design_Guidelines" states:

Starting with SM8150 chipsets, the multiprocessor communication (MPROC) implementation on Linux Android side has changed. The Linux device driver code is redesigned to improve the efficiency and to upstream the remote processor messaging (RPMSG).

Changes:

- The Linux Android is the master of the system and uses various protocols to communicate to multiple subsystems. To communicate with modem, aDSP, DSPS, cDSP, SPSS, the RPMSG driver replaces the Glink.

- A new Qualcomm mailbox driver (QMP) implementation is introduced to communicate with always on processor (AOP) and neural processor unit (NPU) subsystems. However, OEMs should not use the QMP driver to have their own clients communicate with NPU and AOP. For this reason, the QMP driver is not discussed in this document.

- The user space clients use the glink_pkt driver to communicate with the remote subsystems. However, it is advisable for all user space clients to use Qualcomm messaging interface (QMI) to communicate with the remote subsystems.

- The QMI inter processor communication router (IPCRTR) kernel driver is replaced with the QMI Qualcomm router (QRTR) driver.

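From the description above, for inter-processor communication the MPSS side still uses IPCRTR, while the APSS side uses QRTR. In both cases the underlying transport is still shared memory.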

4. QMI LOC

4.1 Call flow in APSS user space

When a GPS positioning request arrives, the following calls are made:

LocApiV02 :: open(LOC_API_ADAPTER_EVENT_MASK_T mask)

locClientOpen(0, &globalCallbacks, &clientHandle, (void *)this);

locClientOpenInstance(eventRegMask, instanceId, pLocClientCallbacks, pLocClientHandle, pClientCookie);

locClientQmiCtrlPointInit(pCallbackData, instanceId);

qmi_client_get_service_instance(locClientServiceObject, instanceId, &serviceInfo);

qmi_client_init(&serviceInfo, locClientServiceObject, locClientIndCb, (void *) pLocClientCbData, NULL, &clnt);

xport->ops = xport_tbl[i].ops;

qmi_cci_client_alloc(service_obj, QMI_CCI_CONNECTED_CLIENT, os_params, ind_cb, ind_cb_data, &clnt);

// This callback is used later on

clnt->info.client.ind_cb = ind_cb;

xport->handle = xport->ops->open(xport_data, clnt, service_id, idl_version, clnt->info.client.server_addr, max_msg_len);

xport_open

// A reader thread is initialized here to handle QMI indications

reader_thread_data_init(&xp->rdr_tdata, (void *)xp, data_msg_reader_thread)

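// This callback is used later on

pCallbackData->eventCallback = pLocClientCallbacks->eventIndCb;

When an indication arrives:

// Taking QMI_LOC_EVENT_POSITION_REPORT_IND_V02 as an example

// The call stack below is listed in reverse order (innermost call first)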

reportPosition(eventPayload.pPositionReportEvent);

locApiV02Instance->eventCb(clientHandle, eventId, eventPayload);

globalEventCb

locClientEventIndCbType localEventCallback = pCallbackData->eventCallback;

localEventCallback( (locClientHandleType)pCallbackData, msg_id, eventIndUnion, pCallbackData->pClientCookie);

locClientIndCb

clnt->info.client.ind_cb(CLIENT_HANDLE(clnt), msg_id, msg_len ? buf : NULL, msg_len, clnt->info.client.ind_cb_data);

qmi_cci_xport_recv(xp->clnt, (void *)&src_addr, buf, (uint32_t)rx_len);

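// This reader thread keeps running until an exit condition is met

data_msg_reader_thread

4.1.1 data_msg_reader_thread

In other words, the thread to focus on is data_msg_reader_thread, since it is the one that keeps receiving data.

This function runs in its own thread and reads data from the transport handle, which is represented by struct xport_handle. It uses the poll() system call to wait until data is available either on the transport handle or on the pipe used to wake the thread. When data is available on the transport handle, it reads the data with recvfrom and passes it to qmi_cci_xport_recv for processing. When data arrives on the wakeup pipe, it checks the received character and acts accordingly ('d' closes the descriptors and exits, 'r' handles a server connection reset). If poll reports POLLERR and the error is ENETRESET, it removes the server, closes the socket and creates a new one. The loop runs until the thread is told to exit or an unrecoverable error occurs.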

/*===========================================================================

FUNCTION data_msg_reader_thread

===========================================================================*/

/*!

* @brief

*

* This function reads all the data messages for a specific client.

*

* @return

* Transport handle or NULL incase of error.

*

*/

/*=========================================================================*/

static void *data_msg_reader_thread(void *arg)

{

struct xport_handle *xp = (struct xport_handle *)arg;

unsigned char ch, *buf;

int i;

ssize_t rx_len;

struct pollfd pbits[2];

struct xport_ipc_router_server_addr src_addr;

struct sockaddr_qrtr addr;

while(1)

{

pbits[0].fd = xp->rdr_tdata.wakeup_pipe[0];

pbits[0].events = POLLIN;

pbits[1].fd = xp->fd;

pbits[1].events = POLLIN;

// Note ①

i = poll(pbits, 2, -1);

if(i < 0)

{

if (errno == EINTR)

QMI_CCI_LOGD("%s: poll error (%d)\n", __func__, errno);

else

QMI_FW_LOGE("%s: poll error (%d)\n", __func__, errno);

continue;

}

if((pbits[1].revents & POLLIN))

{

socklen_t addr_size = sizeof(struct sockaddr_qrtr);

buf = (unsigned char *)calloc(xp->max_rx_len, 1);

if(!buf)

{

QMI_FW_LOGE("%s: Unable to allocate read buffer for %p of size %d\n",

__func__, xp, xp->max_rx_len);

break;

}

addr_size = sizeof(struct sockaddr_qrtr);

// Note ②

rx_len = recvfrom(xp->fd, buf, xp->max_rx_len, MSG_DONTWAIT, (struct sockaddr *)&addr, &addr_size);

if (rx_len < 0)

{

QMI_FW_LOGE("%s: Error recvfrom %p - rc : %d\n", __func__, xp, errno);

free(buf);

break;

}

else if (rx_len == 0)

{

if (addr_size == sizeof(struct sockaddr_qrtr))

{

QMI_CCI_LOGD("%s: QCCI Received Resume_Tx on FD %d from port %08x:%08x\n",

__func__, xp->fd, addr.sq_node, addr.sq_port);

qmi_cci_xport_resume(xp->clnt);

}

else

{

QMI_FW_LOGE("%s: No data read from %d\n", __func__, xp->fd);

}

free(buf);

continue;

}

else if (addr.sq_port == QRTR_PORT_CTRL)

{

/* NOT expected to receive data from control port */

QMI_FW_LOGE("%s: DATA from control port len[%d]\n", __func__, (int)rx_len);

free(buf);

continue;

}

QMI_CCI_LOGD("%s: Received %d bytes from %d\n", __func__, (int)rx_len, xp->fd);

src_addr.service = 0;

src_addr.instance = 0;

src_addr.node_id = addr.sq_node;

src_addr.port_id = addr.sq_port;

// Note ③

qmi_cci_xport_recv(xp->clnt, (void *)&src_addr, buf, (uint32_t)rx_len);

free(buf);

}

if (pbits[0].revents & POLLIN)

{

read(xp->rdr_tdata.wakeup_pipe[0], &ch, 1);

QMI_CCI_LOGD("%s: wakeup_pipe[0]=%x ch=%c\n", __func__, pbits[0].revents, ch);

if(ch == 'd')

{

close(xp->rdr_tdata.wakeup_pipe[0]);

close(xp->rdr_tdata.wakeup_pipe[1]);

QMI_CCI_LOGD("Close[%d]\n", xp->fd);

close(xp->fd);

pthread_attr_destroy(&xp->rdr_tdata.reader_tattr);

release_xp(xp);

break;

}

else if (ch == 'r')

{

if (xp->srv_conn_reset)

qmi_cci_xport_event_remove_server(xp->clnt, &xp->svc_addr);

}

}

if (pbits[1].revents & POLLERR)

{

int sk_size;

int flags;

int err;

rx_len = recvfrom(xp->fd, (void *)&err, sizeof(err), MSG_DONTWAIT, NULL, NULL);

if (errno != ENETRESET)

continue;

QMI_FW_LOGE("%s: data thread received ENETRESET %d\n", __func__, errno);

qmi_cci_xport_event_remove_server(xp->clnt, &xp->svc_addr);

close(xp->fd);

xp->fd = socket(AF_QIPCRTR, SOCK_DGRAM | SOCK_CLOEXEC, 0);

if(xp->fd < 0)

break;

flags = fcntl(xp->fd, F_GETFL, 0);

fcntl(xp->fd, F_SETFL, flags | O_NONBLOCK);

sk_size = INT_MAX;

setsockopt(xp->fd, SOL_SOCKET, SO_RCVBUF, (char *)&sk_size, sizeof(sk_size));

}

}

QMI_CCI_LOGD("%s data thread exiting\n", __func__);

return NULL;

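}

Note ①: poll() is a system call that waits for events on multiple file descriptors.

typedef unsigned int nfds_t;

struct pollfd {

int fd; // file descriptor

short events; // events to wait for

short revents; // events that actually occurred

};

// events can be a combination of the following (POLLERR, POLLHUP and POLLNVAL are only reported in revents):

// `POLLIN`: data is available to read.

// `POLLOUT`: a write will not block.

// `POLLPRI`: urgent data is available to read.

// `POLLERR`: an error occurred.

// `POLLHUP`: hang-up event.

// `POLLNVAL`: invalid request.

int poll(struct pollfd* const fds __pass_object_size, nfds_t fd_count, int timeout)

// The first parameter, fds, points to an array of pollfd structures, each describing one file descriptor and the events to wait for on it.

// The second parameter, fd_count (nfds in the POSIX prototype), is the number of file descriptors to wait on, i.e. the size of the fds array.

// The third parameter, timeout, is the timeout in milliseconds. -1 means wait indefinitely until an event occurs or an error is reported. 0 means return immediately without blocking. A positive value means return after that many milliseconds even if no event occurred.

This is why poll(pbits, 2, -1) blocks until an event arrives on either of the two fds.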
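The two-slot poll() pattern can be tried outside QCCI. The following is a minimal sketch, assuming two ordinary pipes as stand-ins for the wakeup pipe and the QRTR socket; `wait_two_fds`, `demo_wakeup` and the `WOKE_*` codes are hypothetical names, not part of the QMI framework.

```c
#include <assert.h>
#include <poll.h>
#include <unistd.h>

/* Result codes for this sketch (hypothetical, not QCCI names). */
enum { WOKE_NONE = -1, WOKE_PIPE = 0, WOKE_DATA = 1 };

/* Wait on a wakeup pipe (slot 0) and a data descriptor (slot 1), the same
 * layout as pbits[] in data_msg_reader_thread. A timeout of -1 blocks. */
int wait_two_fds(int wakeup_fd, int data_fd, int timeout_ms)
{
    struct pollfd pbits[2];

    assert(timeout_ms >= -1);
    pbits[0].fd = wakeup_fd;
    pbits[0].events = POLLIN;
    pbits[0].revents = 0;
    pbits[1].fd = data_fd;
    pbits[1].events = POLLIN;
    pbits[1].revents = 0;

    if (poll(pbits, 2, timeout_ms) <= 0)
        return WOKE_NONE;           /* timeout (0) or error (-1) */
    if (pbits[1].revents & POLLIN)
        return WOKE_DATA;           /* the data slot is checked first */
    if (pbits[0].revents & POLLIN)
        return WOKE_PIPE;
    return WOKE_NONE;
}

/* Write one byte to the wakeup pipe and report which slot poll() flags. */
int demo_wakeup(void)
{
    int wake[2], data[2];
    int which = WOKE_NONE;

    if (pipe(wake) != 0)
        return WOKE_NONE;
    if (pipe(data) != 0) {
        close(wake[0]);
        close(wake[1]);
        return WOKE_NONE;
    }
    if (write(wake[1], "d", 1) == 1)
        which = wait_two_fds(wake[0], data[0], 1000);

    close(wake[0]);
    close(wake[1]);
    close(data[0]);
    close(data[1]);
    return which;
}
```

Calling `demo_wakeup()` should report the wakeup slot, because only the wakeup pipe has a byte queued. With nothing written and a timeout of 0, `wait_two_fds` returns immediately with `WOKE_NONE`.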

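Note ②: recvfrom is the function used to receive data in Linux network programming. Its prototype is:

ssize_t recvfrom(int sockfd, void *buf, size_t len, int flags, struct sockaddr *src_addr, socklen_t *addrlen);

The parameters are:

- sockfd: the socket descriptor of the receiving side.

- buf: the buffer that receives the data.

- len: the length of the receive buffer.

- flags: how the data is received. Common values:

  - 0: default; block until data arrives.

  - MSG_DONTWAIT: non-blocking; return immediately if no data is available.

  - MSG_PEEK: receive without removing the data from the queue, so the next receive returns the same data again.

- src_addr: receives the address of the sender; pass NULL if the sender address is not needed.

- addrlen: length of the sender address; pass NULL if the sender address is not needed.

The return value of recvfrom is the number of bytes actually received. A return value of -1 means the receive failed, and errno holds the error code. For connection-oriented sockets a return value of 0 means the peer has closed the connection; for a datagram socket such as the one used here it means a zero-length datagram, which data_msg_reader_thread treats as a Resume_Tx notification.

rx_len = recvfrom(xp->fd, buf, xp->max_rx_len, MSG_DONTWAIT, (struct sockaddr *)&addr, &addr_size) therefore reads data from the socket in non-blocking mode.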
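The three outcomes of a non-blocking recvfrom on a datagram socket (nothing queued, zero-length datagram, real data) can be observed with a small experiment. The sketch below assumes an AF_UNIX datagram socket pair as a stand-in for the AF_QIPCRTR socket, since a QRTR socket may not be available on a development host; `try_recv`, `demo_recv` and `enum rx_kind` are hypothetical names.

```c
#include <assert.h>
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Classification of one non-blocking read attempt (hypothetical names). */
enum rx_kind { RX_NOTHING, RX_EMPTY_DGRAM, RX_DATA, RX_ERROR };

/* One recvfrom() call with MSG_DONTWAIT, as in data_msg_reader_thread. */
enum rx_kind try_recv(int fd, void *buf, size_t len, ssize_t *out_len)
{
    ssize_t n;

    assert(buf != NULL && out_len != NULL);
    n = recvfrom(fd, buf, len, MSG_DONTWAIT, NULL, NULL);
    *out_len = n;
    if (n > 0)
        return RX_DATA;
    if (n == 0)
        return RX_EMPTY_DGRAM;      /* zero-length datagram */
    if (errno == EAGAIN || errno == EWOULDBLOCK)
        return RX_NOTHING;          /* no datagram queued */
    return RX_ERROR;
}

/* Returns 1 if all three cases behave as described, 0 otherwise. */
int demo_recv(void)
{
    int sv[2];
    char buf[16];
    ssize_t n = 0;
    int ok;

    if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sv) != 0)
        return 0;

    ok = try_recv(sv[0], buf, sizeof(buf), &n) == RX_NOTHING;
    ok = ok && send(sv[1], "", 0, 0) == 0;
    ok = ok && try_recv(sv[0], buf, sizeof(buf), &n) == RX_EMPTY_DGRAM;
    ok = ok && send(sv[1], "hi", 2, 0) == 2;
    ok = ok && try_recv(sv[0], buf, sizeof(buf), &n) == RX_DATA && n == 2;

    close(sv[0]);
    close(sv[1]);
    return ok;
}
```

The zero-length case is the one that corresponds to the Resume_Tx branch in the reader thread; on a stream socket the same return value would instead indicate that the peer closed the connection.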

Note ③: as shown in the indication callback flow earlier, the data is decoded here and then dispatched to the client callback.
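The header fields printed by QMI_CCI_RX in the log can be recovered by hand. The sketch below assumes a 7-byte little-endian header (1-byte cntl_flag, then 2-byte txn_id, msg_id and msg_len); this layout is an assumption that is consistent with the log above, where 433 bytes were received and msg_len is 0x01aa (426). `qmi_decode_hdr` and `demo_decode` are hypothetical helpers, not QCCI functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define QMI_HDR_SIZE 7u   /* assumed: 433 bytes received - 426 bytes payload */

struct qmi_hdr {
    uint8_t  cntl_flag;
    uint16_t txn_id;
    uint16_t msg_id;
    uint16_t msg_len;
};

static uint16_t get_le16(const uint8_t *p)
{
    return (uint16_t)(p[0] | ((unsigned)p[1] << 8));
}

/* Decode the header of one received packet of total length len.
 * Returns 0 on success, -1 if the packet is too short or inconsistent. */
int qmi_decode_hdr(const uint8_t *buf, size_t len, struct qmi_hdr *h)
{
    assert(buf != NULL && h != NULL);
    if (len < QMI_HDR_SIZE)
        return -1;
    h->cntl_flag = buf[0];
    h->txn_id    = get_le16(buf + 1);
    h->msg_id    = get_le16(buf + 3);
    h->msg_len   = get_le16(buf + 5);
    if ((size_t)h->msg_len != len - QMI_HDR_SIZE)
        return -1;                  /* payload length must match */
    return 0;
}

/* Rebuild a packet with the header values from the log (payload zeroed). */
int demo_decode(struct qmi_hdr *h)
{
    static const uint8_t hdr[QMI_HDR_SIZE] = {
        0x04,           /* cntl_flag - 04 */
        0x8c, 0x00,     /* txn_id - 008c  */
        0x24, 0x00,     /* msg_id - 0024  */
        0xaa, 0x01      /* msg_len - 01aa */
    };
    uint8_t pkt[433];

    memset(pkt, 0, sizeof(pkt));
    memcpy(pkt, hdr, sizeof(hdr));
    return qmi_decode_hdr(pkt, sizeof(pkt), h);
}
```

With these bytes the decoded msg_id is 0x0024 and msg_len is 426, the same values that locClientIndCb prints as msg_id=36 and buf_len=426.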

4.1.2 socket

In xport_open, a datagram socket is created, with the close-on-exec flag set so that it is closed automatically when a new program is executed:

// AF_QIPCRTR is the domain Qualcomm reserved for QMI IPCRTR

xp->fd = socket(AF_QIPCRTR, SOCK_DGRAM | SOCK_CLOEXEC, 0);

In xport_send, data is sent to the socket:

send_ret_val = sendto(xp->fd, buf, len, MSG_DONTWAIT, (struct sockaddr *)&dest_addr, sizeof(struct sockaddr_qrtr));

In data_msg_reader_thread, data is received from the socket:

rx_len = recvfrom(xp->fd, buf, xp->max_rx_len, MSG_DONTWAIT, (struct sockaddr *)&addr, &addr_size);

4.2 APSS kernel space

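Starting from the domain that user space passes when creating the socket, AF_QIPCRTR, we can locate the kernel code that creates QRTR sockets.

4.2.1 QRTR kernel module initialization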

// msm-kernel/net/qrtr/af_qrtr.c

static const struct net_proto_family qrtr_family = {

.owner = THIS_MODULE,

.family = AF_QIPCRTR,

.create = qrtr_create,

};

static int __init qrtr_proto_init(void)

{

int rc;

qrtr_update_node_id();

qrtr_local_ilc = ipc_log_context_create(QRTR_LOG_PAGE_CNT,

"qrtr_local", 0);

rc = proto_register(&qrtr_proto, 1);

if (rc)

return rc;

// Register the socket family

rc = sock_register(&qrtr_family);

if (rc)

goto err_proto;

rc = qrtr_ns_init();

if (rc)

goto err_sock;

qrtr_backup_init();

return 0;

err_sock:

sock_unregister(qrtr_family.family);

err_proto:

proto_unregister(&qrtr_proto);

return rc;

}

postcore_initcall(qrtr_proto_init);

4.2.2 qrtr_ns_init

// msm-kernel/net/qrtr/ns.c

int qrtr_ns_init(void)

{

struct sockaddr_qrtr sq;

int rx_buf_sz = INT_MAX;

int ret;

INIT_LIST_HEAD(&qrtr_ns.lookups);

kthread_init_worker(&qrtr_ns.kworker);

kthread_init_work(&qrtr_ns.work, qrtr_ns_worker);

ns_ilc = ipc_log_context_create(NS_LOG_PAGE_CNT, "qrtr_ns", 0);

ret = sock_create_kern(&init_net, AF_QIPCRTR, SOCK_DGRAM,

PF_QIPCRTR, &qrtr_ns.sock);

if (ret < 0)

return ret;

ret = kernel_getsockname(qrtr_ns.sock, (struct sockaddr *)&sq);

if (ret < 0) {

pr_err("failed to get socket name\n");

goto err_sock;

}

qrtr_ns.task = kthread_run(kthread_worker_fn, &qrtr_ns.kworker,

"qrtr_ns");

if (IS_ERR(qrtr_ns.task)) {

pr_err("failed to spawn worker thread %ld\n",

PTR_ERR(qrtr_ns.task));

goto err_sock;

}

qrtr_ns.sock->sk->sk_data_ready = qrtr_ns_data_ready;

sq.sq_port = QRTR_PORT_CTRL;

qrtr_ns.local_node = sq.sq_node;

ret = kernel_bind(qrtr_ns.sock, (struct sockaddr *)&sq, sizeof(sq));

if (ret < 0) {

pr_err("failed to bind to socket\n");

goto err_wq;

}

sock_setsockopt(qrtr_ns.sock, SOL_SOCKET, SO_RCVBUF,

KERNEL_SOCKPTR((void *)&rx_buf_sz), sizeof(rx_buf_sz));

qrtr_ns.bcast_sq.sq_family = AF_QIPCRTR;

qrtr_ns.bcast_sq.sq_node = QRTR_NODE_BCAST;

qrtr_ns.bcast_sq.sq_port = QRTR_PORT_CTRL;

ret = say_hello(&qrtr_ns.bcast_sq);

if (ret < 0)

goto err_wq;

return 0;

err_wq:

kthread_stop(qrtr_ns.task);

err_sock:

sock_release(qrtr_ns.sock);

return ret;

}

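EXPORT_SYMBOL_GPL(qrtr_ns_init);

qrtr_ns_init initializes the data structures and the socket used by the QRTR name service.

Key points:

- Initialize the qrtr_ns structure, including the lookups list head, the kworker and its work item.

- Create the kernel socket used by the name service, with family AF_QIPCRTR and type SOCK_DGRAM.

- Get the socket name; qrtr_ns.local_node stores the local node ID.

- Bind the socket to the control port and install the qrtr_ns_data_ready callback.

- Fill in the broadcast socket address, which is used later to discover other nodes.

- Start the kthread worker, which processes events in a loop.

- Call say_hello to send a broadcast that looks for other nodes.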
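The broadcast step can be pictured as a destination address plus a small control packet. The sketch below is illustrative only: the constant values and the 20-byte control packet size (a command word followed by four 32-bit fields) are what I recall from upstream include/uapi/linux/qrtr.h and should be checked against the kernel tree in use. `sk_addr`, `make_bcast_ctrl_addr` and `make_hello` are hypothetical user-space names, and the real say_hello sends through the kernel socket rather than filling a byte buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed values, mirroring upstream linux/qrtr.h (verify locally). */
#define SK_NODE_BCAST    0xffffffffu   /* QRTR_NODE_BCAST */
#define SK_PORT_CTRL     0xfffffffeu   /* QRTR_PORT_CTRL  */
#define SK_TYPE_HELLO    2u            /* QRTR_TYPE_HELLO */
#define SK_CTRL_PKT_SIZE 20u           /* cmd + 4 x 32-bit fields */

/* Node/port pair, the addressing part of struct sockaddr_qrtr. */
struct sk_addr {
    uint32_t node;
    uint32_t port;
};

static void put_le32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v & 0xff);
    p[1] = (uint8_t)((v >> 8) & 0xff);
    p[2] = (uint8_t)((v >> 16) & 0xff);
    p[3] = (uint8_t)((v >> 24) & 0xff);
}

/* Destination used for the hello: every node, control port. */
struct sk_addr make_bcast_ctrl_addr(void)
{
    struct sk_addr a = { SK_NODE_BCAST, SK_PORT_CTRL };
    return a;
}

/* Fill out[] with a zeroed control packet whose command is HELLO.
 * Returns the packet size, or 0 if the buffer is too small. */
size_t make_hello(uint8_t *out, size_t cap)
{
    assert(out != NULL);
    if (cap < SK_CTRL_PKT_SIZE)
        return 0;
    memset(out, 0, SK_CTRL_PKT_SIZE);
    put_le32(out, SK_TYPE_HELLO);   /* little-endian command word */
    return SK_CTRL_PKT_SIZE;
}
```

Because the destination node is the broadcast node, a send to this address is routed through qrtr_bcast_enqueue, which is why the hello reaches every registered endpoint.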

4.2.3 qrtr_create

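Once the QRTR family has been registered, creating a socket in the AF_QIPCRTR domain ends up in qrtr_create, the .create hook of qrtr_family.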

static int qrtr_create(struct net *net, struct socket *sock,

int protocol, int kern)

{

struct qrtr_sock *ipc;

struct sock *sk;

if (sock->type != SOCK_DGRAM)

return -EPROTOTYPE;

sk = sk_alloc(net, AF_QIPCRTR, GFP_KERNEL, &qrtr_proto, kern);

if (!sk)

return -ENOMEM;

sock_set_flag(sk, SOCK_ZAPPED);

sock_init_data(sock, sk);

sock->ops = &qrtr_proto_ops;

ipc = qrtr_sk(sk);

ipc->us.sq_family = AF_QIPCRTR;

ipc->us.sq_node = qrtr_local_nid;

ipc->us.sq_port = 0;

ipc->state = QRTR_STATE_INIT;

ipc->signal_on_recv = false;

init_completion(&ipc->rx_queue_has_space);

spin_lock_init(&ipc->signal_lock);

return 0;

}

static const struct net_proto_family qrtr_family = {

.owner = THIS_MODULE,

.family = AF_QIPCRTR,

.create = qrtr_create,

};

The operations are bound here (sock->ops = &qrtr_proto_ops):

static const struct proto_ops qrtr_proto_ops = {

.owner = THIS_MODULE,

.family = AF_QIPCRTR,

.bind = qrtr_bind,

.connect = qrtr_connect,

.socketpair = sock_no_socketpair,

.accept = sock_no_accept,

.listen = sock_no_listen,

.sendmsg = qrtr_sendmsg,

.recvmsg = qrtr_recvmsg,

.getname = qrtr_getname,

.ioctl = qrtr_ioctl,

.gettstamp = sock_gettstamp,

.poll = datagram_poll,

.shutdown = sock_no_shutdown,

.release = qrtr_release,

.mmap = sock_no_mmap,

.sendpage = sock_no_sendpage,

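};

4.3 QRTR to RPMSG

Take sending a message over the socket as an example: a sendto call in user space eventually reaches qrtr_sendmsg, because of the operations bound in qrtr_proto_ops above (.sendmsg = qrtr_sendmsg).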

4.3.1 qrtr_sendmsg

static int qrtr_sendmsg(struct socket *sock, struct msghdr *msg, size_t len)

{

DECLARE_SOCKADDR(struct sockaddr_qrtr *, addr, msg->msg_name);

int (*enqueue_fn)(struct qrtr_node *, struct sk_buff *, int,

struct sockaddr_qrtr *, struct sockaddr_qrtr *,

unsigned int);

__le32 qrtr_type = cpu_to_le32(QRTR_TYPE_DATA);

struct qrtr_sock *ipc = qrtr_sk(sock->sk);

struct sock *sk = sock->sk;

struct qrtr_ctrl_pkt pkt;

struct qrtr_node *node;

struct qrtr_node *srv_node;

struct sk_buff *skb;

int pdata_len = 0;

int data_len = 0;

size_t plen;

u32 type;

int rc;

if (msg->msg_flags & ~(MSG_DONTWAIT))

return -EINVAL;

if (len > 65535)

return -EMSGSIZE;

lock_sock(sk);

if (addr) {

if (msg->msg_namelen < sizeof(*addr)) {

release_sock(sk);

return -EINVAL;

}

if (addr->sq_family != AF_QIPCRTR) {

release_sock(sk);

return -EINVAL;

}

rc = qrtr_autobind(sock);

if (rc) {

release_sock(sk);

return rc;

}

} else if (sk->sk_state == TCP_ESTABLISHED) {

addr = &ipc->peer;

} else {

release_sock(sk);

return -ENOTCONN;

}

node = NULL;

srv_node = NULL;

if (addr->sq_node == QRTR_NODE_BCAST) {

if (addr->sq_port != QRTR_PORT_CTRL &&

qrtr_local_nid != QRTR_NODE_BCAST) {

release_sock(sk);

return -ENOTCONN;

}

enqueue_fn = qrtr_bcast_enqueue;

} else if (addr->sq_node == ipc->us.sq_node) {

enqueue_fn = qrtr_local_enqueue;

} else {

node = qrtr_node_lookup(addr->sq_node);

if (!node) {

release_sock(sk);

return -ECONNRESET;

}

enqueue_fn = qrtr_node_enqueue;

if (ipc->state > QRTR_STATE_INIT && ipc->state != node->nid)

ipc->state = QRTR_STATE_MULTI;

else if (ipc->state == QRTR_STATE_INIT)

ipc->state = node->nid;

}

plen = (len + 3) & ~3;

if (plen > SKB_MAX_ALLOC) {

data_len = min_t(size_t,

plen - SKB_MAX_ALLOC,

MAX_SKB_FRAGS * PAGE_SIZE);

pdata_len = PAGE_ALIGN(data_len);

BUILD_BUG_ON(SKB_MAX_ALLOC < PAGE_SIZE);

}

skb = sock_alloc_send_pskb(sk, QRTR_HDR_MAX_SIZE + (plen - data_len),

pdata_len, msg->msg_flags & MSG_DONTWAIT,

&rc, PAGE_ALLOC_COSTLY_ORDER);

if (!skb) {

rc = -ENOMEM;

goto out_node;

}

skb_reserve(skb, QRTR_HDR_MAX_SIZE);

/* len is used by the enqueue functions and should remain accurate

* regardless of padding or allocation size

*/

skb_put(skb, len - data_len);

skb->data_len = data_len;

skb->len = len;

rc = skb_copy_datagram_from_iter(skb, 0, &msg->msg_iter, len);

if (rc) {

kfree_skb(skb);

goto out_node;

}

if (ipc->us.sq_port == QRTR_PORT_CTRL ||

addr->sq_port == QRTR_PORT_CTRL) {

if (len < 4) {

rc = -EINVAL;

kfree_skb(skb);

goto out_node;

}

/* control messages already require the type as 'command' */

skb_copy_bits(skb, 0, &qrtr_type, 4);

}

type = le32_to_cpu(qrtr_type);

if (addr->sq_port == QRTR_PORT_CTRL && type == QRTR_TYPE_NEW_SERVER) {

ipc->state = QRTR_STATE_MULTI;

/* drop new server cmds that are not forwardable to dst node*/

skb_copy_bits(skb, 0, &pkt, sizeof(pkt));

srv_node = qrtr_node_lookup(pkt.server.node);

if (!qrtr_must_forward(srv_node, node, type)) {

rc = 0;

kfree_skb(skb);

qrtr_node_release(srv_node);

goto out_node;

}

qrtr_node_release(srv_node);

}

rc = enqueue_fn(node, skb, type, &ipc->us, addr, msg->msg_flags);

if (rc >= 0)

rc = len;

out_node:

qrtr_node_release(node);

release_sock(sk);

return rc;

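}

qrtr_sendmsg takes the socket, the message header and the message length as input. It returns the number of bytes sent on success, or an error code if the send fails.

The function first checks that the message flags are valid and that the message length is within the allowed limit.

It then locks the socket and checks the destination address. If an address is given, its length and family are validated and the socket is auto-bound. If no address is given and the socket is in the TCP_ESTABLISHED state, the socket's peer address is used as the destination. If no address is given and the socket is not in the TCP_ESTABLISHED state, an error is returned.

Next, the function looks up the destination node from the address and picks the matching enqueue function. It allocates a socket buffer and copies the message data into it.

If the message is a control message, the function extracts the message type and checks whether it is a new-server command that needs to be forwarded to the destination node. Finally, it calls the selected enqueue function to send the message and returns the number of bytes sent, or an error code if the send fails.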
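Two small pieces of that flow are easy to check in isolation: the 4-byte padding computed by `plen = (len + 3) & ~3` and the choice of enqueue path from the destination node. The sketch below is a user-space illustration; `pad4`, `pick_route` and `enum route` are hypothetical names, and the broadcast value mirrors what I recall QRTR_NODE_BCAST to be upstream.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NODE_BCAST 0xffffffffu   /* assumed value of QRTR_NODE_BCAST */

/* Which enqueue function qrtr_sendmsg would pick (hypothetical enum). */
enum route {
    ROUTE_BCAST,    /* qrtr_bcast_enqueue */
    ROUTE_LOCAL,    /* qrtr_local_enqueue */
    ROUTE_REMOTE    /* qrtr_node_enqueue  */
};

/* Round len up to the next multiple of 4, as plen = (len + 3) & ~3 does. */
size_t pad4(size_t len)
{
    assert(len <= SIZE_MAX - 3);    /* avoid wrap-around in the sketch */
    return (len + 3) & ~(size_t)3;
}

/* Select the path from the destination node and the socket's own node. */
enum route pick_route(uint32_t dst_node, uint32_t local_node)
{
    if (dst_node == NODE_BCAST)
        return ROUTE_BCAST;
    if (dst_node == local_node)
        return ROUTE_LOCAL;
    return ROUTE_REMOTE;
}
```

For example, `pad4(433)` gives 436 and `pad4(4)` stays 4. A destination equal to the local node stays on the local path, while any other non-broadcast node goes through qrtr_node_enqueue, which is the path a QMI LOC request to the modem takes.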

enqueue_fn is a function pointer:

rc = enqueue_fn(node, skb, type, &ipc->us, addr, msg->msg_flags);

It points to a different function depending on the condition:

if (addr->sq_node == QRTR_NODE_BCAST) {

if (addr->sq_port != QRTR_PORT_CTRL &&

qrtr_local_nid != QRTR_NODE_BCAST) {

release_sock(sk);

return -ENOTCONN;

}

enqueue_fn = qrtr_bcast_enqueue;

} else if (addr->sq_node == ipc->us.sq_node) {

enqueue_fn = qrtr_local_enqueue;

} else {

node = qrtr_node_lookup(addr->sq_node);

if (!node) {

release_sock(sk);

return -ECONNRESET;

}

enqueue_fn = qrtr_node_enqueue;

if (ipc->state > QRTR_STATE_INIT && ipc->state != node->nid)

ipc->state = QRTR_STATE_MULTI;

else if (ipc->state == QRTR_STATE_INIT)

ipc->state = node->nid;

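}

During init, the following assignment is made, so the hello sent to qrtr_ns.bcast_sq goes through qrtr_bcast_enqueue:

qrtr_ns.bcast_sq.sq_node = QRTR_NODE_BCAST;

/* Queue packet for broadcast. */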

static int qrtr_bcast_enqueue(struct qrtr_node *node, struct sk_buff *skb,

int type, struct sockaddr_qrtr *from,

struct sockaddr_qrtr *to, unsigned int flags)

{

struct sk_buff *skbn;

down_read(&qrtr_epts_lock);

list_for_each_entry(node, &qrtr_all_epts, item) {

if (node->nid == QRTR_EP_NID_AUTO && type != QRTR_TYPE_HELLO)

continue;

skbn = skb_clone(skb, GFP_KERNEL);

if (!skbn)

break;

skb_set_owner_w(skbn, skb->sk);

qrtr_node_enqueue(node, skbn, type, from, to, flags);

}

up_read(&qrtr_epts_lock);

qrtr_local_enqueue(NULL, skb, type, from, to, flags);

return 0;

}

4.3.2 qrtr_node_enqueue

Continuing along the flow above, we reach qrtr_node_enqueue:

/* Pass an outgoing packet socket buffer to the endpoint driver. */

static int qrtr_node_enqueue(struct qrtr_node *node, struct sk_buff *skb,

int type, struct sockaddr_qrtr *from,

struct sockaddr_qrtr *to, unsigned int flags)

{

struct qrtr_hdr_v1 *hdr;

size_t len = skb->len;

int rc, confirm_rx;

mutex_lock(&node->ep_lock);

if (!atomic_read(&node->hello_sent) && type != QRTR_TYPE_HELLO) {

kfree_skb(skb);

mutex_unlock(&node->ep_lock);

return 0;

}

if (atomic_read(&node->hello_sent) && type == QRTR_TYPE_HELLO) {

kfree_skb(skb);

mutex_unlock(&node->ep_lock);

return 0;

}

if (!atomic_read(&node->hello_sent) && type == QRTR_TYPE_HELLO)

atomic_inc(&node->hello_sent);

mutex_unlock(&node->ep_lock);

/* If sk is null, this is a forwarded packet and should not wait */

if (!skb->sk) {

struct qrtr_cb *cb = (struct qrtr_cb *)skb->cb;

confirm_rx = cb->confirm_rx;

} else {

confirm_rx = qrtr_tx_wait(node, to, skb->sk, type, flags);

if (confirm_rx < 0) {

kfree_skb(skb);

return confirm_rx;

}

}

hdr = skb_push(skb, sizeof(*hdr));

hdr->version = cpu_to_le32(QRTR_PROTO_VER_1);

hdr->type = cpu_to_le32(type);

hdr->src_node_id = cpu_to_le32(from->sq_node);

hdr->src_port_id = cpu_to_le32(from->sq_port);

if (to->sq_port == QRTR_PORT_CTRL) {

hdr->dst_node_id = cpu_to_le32(node->nid);

hdr->dst_port_id = cpu_to_le32(QRTR_PORT_CTRL);

} else {

hdr->dst_node_id = cpu_to_le32(to->sq_node);

hdr->dst_port_id = cpu_to_le32(to->sq_port);

}

hdr->size = cpu_to_le32(len);

hdr->confirm_rx = !!confirm_rx;

qrtr_log_tx_msg(node, hdr, skb);

/* word align the data and pad with 0s */

if (skb_is_nonlinear(skb))

rc = qrtr_pad_word_pskb(skb);

else

rc = skb_put_padto(skb, ALIGN(len, 4) + sizeof(*hdr));

if (rc) {

pr_err("%s: failed to pad size %lu to %lu rc:%d\n", __func__,

skb->len, ALIGN(skb->len, 4), rc);

}

if (!rc) {

mutex_lock(&node->ep_lock);

rc = -ENODEV;

if (node->ep)

rc = node->ep->xmit(node->ep, skb);

else

kfree_skb(skb);

mutex_unlock(&node->ep_lock);

}

/* Need to ensure that a subsequent message carries the otherwise lost

* confirm_rx flag if we dropped this one */

if (rc && confirm_rx)

qrtr_tx_flow_failed(node, to->sq_node, to->sq_port);

if (rc && type == QRTR_TYPE_HELLO) {

atomic_dec(&node->hello_sent);

kthread_queue_work(&node->kworker, &node->say_hello);

}

return rc;

}

The part to focus on is the following: if the endpoint exists, the function pointer node->ep->xmit(node->ep, skb) is called.

if (!rc) {

mutex_lock(&node->ep_lock);

rc = -ENODEV;

if (node->ep)

rc = node->ep->xmit(node->ep, skb);

else

kfree_skb(skb);

mutex_unlock(&node->ep_lock);

}

So where is this function pointer assigned?

In the probe function of the smd driver: qdev->ep.xmit = qcom_smd_qrtr_send;

static int qcom_smd_qrtr_probe(struct rpmsg_device *rpdev)

{

struct qrtr_array svc_arr = {NULL, 0};

struct qrtr_smd_dev *qdev;

u32 net_id;

int size;

bool rt;

int rc;

qdev = devm_kzalloc(&rpdev->dev, sizeof(*qdev), GFP_KERNEL);

if (!qdev)

return -ENOMEM;

qdev->channel = rpdev->ept;

qdev->dev = &rpdev->dev;

qdev->ep.xmit = qcom_smd_qrtr_send;

rc = of_property_read_u32(rpdev->dev.of_node, "qcom,net-id", &net_id);

if (rc < 0)

net_id = QRTR_EP_NET_ID_AUTO;

rt = of_property_read_bool(rpdev->dev.of_node, "qcom,low-latency");

size = of_property_count_u32_elems(rpdev->dev.of_node, "qcom,no-wake-svc");

if (size > 0) {

svc_arr.size = size;

svc_arr.arr = kmalloc_array(size, sizeof(u32), GFP_KERNEL);

if (!svc_arr.arr)

return -ENOMEM;

of_property_read_u32_array(rpdev->dev.of_node, "qcom,no-wake-svc",

svc_arr.arr, size);

}

rc = qrtr_endpoint_register(&qdev->ep, net_id, rt, &svc_arr);

kfree(svc_arr.arr);

if (rc) {

dev_err(qdev->dev, "endpoint register failed: %d, low-latency: %d\n", rc, rt);

return rc;

}

dev_set_drvdata(&rpdev->dev, qdev);

pr_info("%s: SMD QRTR driver probed\n", __func__);

return 0;

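}

Now comes the selection: which one is it? In other words, which endpoint does node->ep point to?

4.3.3 qrtr_endpoint_register

Inside qrtr_endpoint_register, node->ep is assigned once, with node->ep = ep.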

int qrtr_endpoint_register(struct qrtr_endpoint *ep, unsigned int net_id,

bool rt, struct qrtr_array *no_wake)

{

int rc, i;

size_t size;

struct qrtr_node *node;

struct sched_param param = {.sched_priority = 1};

if (!ep || !ep->xmit)

return -EINVAL;

node = kzalloc(sizeof(*node), GFP_KERNEL);

if (!node)

return -ENOMEM;

kref_init(&node->ref);

mutex_init(&node->ep_lock);

skb_queue_head_init(&node->rx_queue);

node->nid = QRTR_EP_NID_AUTO;

node->ep = ep;

atomic_set(&node->hello_sent, 0);

atomic_set(&node->hello_rcvd, 0);

kthread_init_work(&node->read_data, qrtr_node_rx_work);

kthread_init_work(&node->say_hello, qrtr_hello_work);

kthread_init_worker(&node->kworker);

node->task = kthread_run(kthread_worker_fn, &node->kworker, "qrtr_rx");

if (IS_ERR(node->task)) {

kfree(node);

return -ENOMEM;

}

if (rt)

sched_setscheduler(node->task, SCHED_FIFO, &param);

xa_init(&node->no_wake_svc);

size = no_wake ? no_wake->size : 0;

for (i = 0; i < size; i++) {

rc = xa_insert(&node->no_wake_svc, no_wake->arr[i], node,

GFP_KERNEL);

if (rc) {

kfree(node);

return rc;

}

}

INIT_RADIX_TREE(&node->qrtr_tx_flow, GFP_KERNEL);

mutex_init(&node->qrtr_tx_lock);

qrtr_node_assign(node, node->nid);

node->net_id = net_id;

down_write(&qrtr_epts_lock);

list_add(&node->item, &qrtr_all_epts);

up_write(&qrtr_epts_lock);

ep->node = node;

node->ws = wakeup_source_register(NULL, "qrtr_ws");

kthread_queue_work(&node->kworker, &node->say_hello);

return 0;

}

EXPORT_SYMBOL_GPL(qrtr_endpoint_register);

Look at the Makefile:

obj-$(CONFIG_QRTR) += qrtr.o

qrtr-y := af_qrtr.o ns.o

obj-$(CONFIG_QRTR_SMD) += qrtr-smd.o

qrtr-smd-y := smd.o

obj-$(CONFIG_QRTR_TUN) += qrtr-tun.o

qrtr-tun-y := tun.o

obj-$(CONFIG_QRTR_MHI) += qrtr-mhi.o

qrtr-mhi-y := mhi.o

obj-$(CONFIG_QRTR_GUNYAH) += qrtr-gunyah.o

qrtr-gunyah-y := gunyah.o

obj-$(CONFIG_QRTR_GENPOOL) += qrtr-genpool.o

qrtr-genpool-y := genpool.o

Then check the .config of the current device, which contains these related options:

CONFIG_QRTR=m

CONFIG_QRTR_SMD=m

# CONFIG_QRTR_TUN is not set

CONFIG_QRTR_MHI=m

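CONFIG_QRTR_GUNYAH=m

That leaves three candidates: SMD, MHI and GUNYAH.

No device shows up under the mhi devices, so device and driver never match and probe is never called; MHI is therefore ruled out.

I have not yet worked out which of gunyah and smd is actually used. TODO.

For now, we continue with smd, as shown in the framework diagram:

qcom_smd_qrtr_probe calls rc = qrtr_endpoint_register(&qdev->ep, net_id, rt, &svc_arr);, which ties back to the flow described above.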
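The way probe, registration and enqueue fit together comes down to one function pointer stored in an embedded endpoint. The sketch below reduces that wiring to plain C; all names (`endpoint`, `node`, `smd_dev`, `smd_probe` and so on) are simplified stand-ins for the kernel structures, and the byte counter replaces the real rpmsg_send call.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Simplified stand-ins for qrtr_endpoint, qrtr_node and qrtr_smd_dev. */
struct endpoint {
    int (*xmit)(struct endpoint *ep, const void *data, size_t len);
};

struct node {
    struct endpoint *ep;
};

struct smd_dev {
    size_t bytes_sent;      /* stand-in for the rpmsg channel */
    struct endpoint ep;     /* embedded, like qdev->ep */
};

/* Same idea as the kernel's container_of(), written out for user space. */
#define container_of_sketch(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Transport-specific send, the role played by qcom_smd_qrtr_send. */
static int smd_send(struct endpoint *ep, const void *data, size_t len)
{
    struct smd_dev *dev = container_of_sketch(ep, struct smd_dev, ep);

    (void)data;
    dev->bytes_sent += len;         /* a real driver would transmit here */
    return 0;
}

/* Mirrors the checks and the node->ep = ep assignment at registration. */
int endpoint_register(struct node *node, struct endpoint *ep)
{
    assert(node != NULL);
    if (!ep || !ep->xmit)
        return -EINVAL;
    node->ep = ep;
    return 0;
}

/* Mirrors the tail of qrtr_node_enqueue: call xmit if an endpoint exists. */
int node_enqueue(struct node *node, const void *data, size_t len)
{
    if (!node->ep)
        return -ENODEV;
    return node->ep->xmit(node->ep, data, len);
}

/* Mirrors probe: set the xmit pointer, then register the endpoint. */
int smd_probe(struct smd_dev *dev, struct node *node)
{
    dev->bytes_sent = 0;
    dev->ep.xmit = smd_send;
    return endpoint_register(node, &dev->ep);
}
```

Before `smd_probe` runs, `node_enqueue` fails with -ENODEV; afterwards the same call lands in `smd_send`. Whichever transport driver probes and registers its endpoint is the one whose xmit gets called, which is why identifying the probing driver matters.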

4.4 RPMSG to Glink

4.4.1 rpmsg_send

The core of qcom_smd_qrtr_send is the call to rpmsg_send:

/* from qrtr to smd */

static int qcom_smd_qrtr_send(struct qrtr_endpoint *ep, struct sk_buff *skb)

{

struct qrtr_smd_dev *qdev = container_of(ep, struct qrtr_smd_dev, ep);

int rc;

rc = skb_linearize(skb);

if (rc)

goto out;

rc = rpmsg_send(qdev->channel, skb->data, skb->len);

out:

if (rc)

kfree_skb(skb);

else

consume_skb(skb);

return rc;

}

int rpmsg_send(struct rpmsg_endpoint *ept, void *data, int len)

{

if (WARN_ON(!ept))

return -EINVAL;

if (!ept->ops->send)

return -ENXIO;

return ept->ops->send(ept, data, len);

}

EXPORT_SYMBOL(rpmsg_send);

static const struct rpmsg_device_ops glink_device_ops = {

.create_ept = qcom_glink_create_ept,

.announce_create = qcom_glink_announce_create,

};

static const struct rpmsg_endpoint_ops glink_endpoint_ops = {

.destroy_ept = qcom_glink_destroy_ept,

.send = qcom_glink_send,

.sendto = qcom_glink_sendto,

.trysend = qcom_glink_trysend,

.trysendto = qcom_glink_trysendto,

};

4.4.2 qcom_glink_send

static int __qcom_glink_send(struct glink_channel *channel,

void *data, int len, bool wait)

{

struct qcom_glink *glink = channel->glink;

struct glink_core_rx_intent *intent = NULL;

struct glink_core_rx_intent *tmp;

int iid = 0;

struct {

struct glink_msg msg;

__le32 chunk_size;

__le32 left_size;

} __packed req;

int ret;

unsigned long flags;

int chunk_size = len;

int left_size = 0;

if (!glink->intentless) {

while (!intent) {

spin_lock_irqsave(&channel->intent_lock, flags);

idr_for_each_entry(&channel->riids, tmp, iid) {

if (tmp->size >= len && !tmp->in_use) {

if (!intent)

intent = tmp;

else if (intent->size > tmp->size)

intent = tmp;

if (intent->size == len)

break;

}

}

if (intent)

intent->in_use = true;

spin_unlock_irqrestore(&channel->intent_lock, flags);

/* We found an available intent */

if (intent)

break;

if (!wait)

return -EBUSY;

ret = qcom_glink_request_intent(glink, channel, len);

if (ret < 0)

return ret;

}

iid = intent->id;

}

if (wait && chunk_size > SZ_8K) {

chunk_size = SZ_8K;

left_size = len - chunk_size;

}

req.msg.cmd = cpu_to_le16(RPM_CMD_TX_DATA);

req.msg.param1 = cpu_to_le16(channel->lcid);

req.msg.param2 = cpu_to_le32(iid);

req.chunk_size = cpu_to_le32(chunk_size);

req.left_size = cpu_to_le32(left_size);

ret = qcom_glink_tx(glink, &req, sizeof(req), data, chunk_size, wait);

/* Mark intent available if we failed */

if (ret && intent) {

intent->in_use = false;

return ret;

}

while (left_size > 0) {

data = (void *)((char *)data + chunk_size);

chunk_size = left_size;

if (chunk_size > SZ_8K)

chunk_size = SZ_8K;

left_size -= chunk_size;

req.msg.cmd = cpu_to_le16(RPM_CMD_TX_DATA_CONT);

req.msg.param1 = cpu_to_le16(channel->lcid);

req.msg.param2 = cpu_to_le32(iid);

req.chunk_size = cpu_to_le32(chunk_size);

req.left_size = cpu_to_le32(left_size);

ret = qcom_glink_tx(glink, &req, sizeof(req), data,

chunk_size, wait);

/* Mark intent available if we failed */

if (ret && intent) {

intent->in_use = false;

break;

}

}

return ret;

}

static int qcom_glink_send(struct rpmsg_endpoint *ept, void *data, int len)

{

struct glink_channel *channel = to_glink_channel(ept);

return __qcom_glink_send(channel, data, len, true);

}

ret = qcom_glink_tx(glink, &req, sizeof(req), data, chunk_size, wait);
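The chunking done around that call can be worked through with concrete numbers. The sketch below reproduces only the chunk_size and left_size arithmetic of __qcom_glink_send, assuming wait is true (as it is when called from qcom_glink_send); `plan_chunks` and `struct chunk` are hypothetical names, and intents and the actual transmit are left out.

```c
#include <assert.h>
#include <stddef.h>

#define CHUNK_MAX 8192u   /* SZ_8K */

/* One planned transmit: cont is 0 for RPM_CMD_TX_DATA and
 * 1 for RPM_CMD_TX_DATA_CONT. */
struct chunk {
    int    cont;
    size_t chunk_size;
    size_t left_size;
};

/* Plan how a message of len bytes is split into 8K chunks.
 * Returns the number of chunks, or -1 if out[] (cap entries) is too small. */
int plan_chunks(size_t len, struct chunk *out, int cap)
{
    size_t chunk = len;
    size_t left = 0;
    int n = 0;

    assert(out != NULL);
    if (cap < 1)
        return -1;
    if (chunk > CHUNK_MAX) {
        chunk = CHUNK_MAX;
        left = len - chunk;
    }
    out[n++] = (struct chunk){ 0, chunk, left };        /* first chunk */

    while (left > 0) {
        chunk = left > CHUNK_MAX ? CHUNK_MAX : left;
        left -= chunk;
        if (n == cap)
            return -1;
        out[n++] = (struct chunk){ 1, chunk, left };    /* continuation */
    }
    return n;
}
```

For a 20000-byte message this yields three transmits: 8192 bytes with 11808 left, 8192 bytes with 3616 left, and 3616 bytes with 0 left. The 433-byte position report from the log fits in a single TX_DATA command.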

4.4.3 qcom_glink_tx

static int qcom_glink_tx(struct qcom_glink *glink,

const void *hdr, size_t hlen,

const void *data, size_t dlen, bool wait)

{

unsigned int tlen = hlen + dlen;

unsigned long flags;

int ret = 0;

/* Reject packets that are too big */

if (tlen >= glink->tx_pipe->length)

return -EINVAL;

spin_lock_irqsave(&glink->tx_lock, flags);

while (qcom_glink_tx_avail(glink) < tlen) {

if (!wait) {

ret = -EAGAIN;

goto out;

}

if (!glink->sent_read_notify) {

glink->sent_read_notify = true;

qcom_glink_send_read_notify(glink);

}

/* Wait without holding the tx_lock */

spin_unlock_irqrestore(&glink->tx_lock, flags);

wait_event_timeout(glink->tx_avail_notify,

qcom_glink_tx_avail(glink) >= tlen, 10 * HZ);

spin_lock_irqsave(&glink->tx_lock, flags);

if (qcom_glink_tx_avail(glink) >= tlen)

glink->sent_read_notify = false;

}

qcom_glink_tx_write(glink, hdr, hlen, data, dlen);

mbox_send_message(glink->mbox_chan, NULL);

mbox_client_txdone(glink->mbox_chan, 0);

out:

spin_unlock_irqrestore(&glink->tx_lock, flags);

return ret;

}

qcom_glink_tx_write(glink, hdr, hlen, data, dlen);

mbox_send_message(glink->mbox_chan, NULL);

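Here the msg argument passed to mbox_send_message is NULL, so no payload travels through the mailbox: the data has already been written to the tx pipe by qcom_glink_tx_write, and the mailbox call only signals the remote side. According to the block diagram, QMP was introduced for communication with the NPU and AOP. (For background on the RPMSG protocol in Linux, see this related article: https://www.cnblogs.com/sky-heaven/p/13624371.html)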
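The split between "data goes into the pipe" and "the mailbox only signals" can be modelled with a toy ring buffer. The sketch below is purely illustrative: the FIFO layout, its 64-byte size and the `doorbells` counter are assumptions made for the example and do not reflect the real G-Link shared-memory format. It keeps the two checks visible in qcom_glink_tx (reject when tlen >= pipe length, report -EAGAIN when space is short and the caller does not wait).

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <string.h>

#define FIFO_LEN 64u   /* toy size, not the real pipe length */

/* Toy transmit pipe plus a counter standing in for the doorbell. */
struct fifo {
    unsigned char buf[FIFO_LEN];
    size_t head;            /* next write position */
    size_t tail;            /* next read position  */
    unsigned doorbells;     /* number of payload-free signals sent */
};

/* Free bytes; one slot is kept empty to tell full from empty. */
size_t fifo_avail(const struct fifo *f)
{
    size_t used = (f->head + FIFO_LEN - f->tail) % FIFO_LEN;
    return FIFO_LEN - 1 - used;
}

static void fifo_put(struct fifo *f, const void *src, size_t n)
{
    const unsigned char *p = src;
    size_t i;

    for (i = 0; i < n; i++) {
        f->buf[f->head] = p[i];
        f->head = (f->head + 1) % FIFO_LEN;
    }
}

/* Non-waiting transmit: header and data go into the FIFO, then the
 * remote side is signalled without any payload. */
int fifo_tx(struct fifo *f, const void *hdr, size_t hlen,
            const void *data, size_t dlen)
{
    size_t tlen = hlen + dlen;

    assert(f != NULL);
    if (tlen >= FIFO_LEN)
        return -EINVAL;             /* packet too big for the pipe */
    if (fifo_avail(f) < tlen)
        return -EAGAIN;             /* caller chose not to wait */

    fifo_put(f, hdr, hlen);
    fifo_put(f, data, dlen);
    f->doorbells++;                 /* like mbox_send_message(chan, NULL) */
    return 0;
}
```

After one successful `fifo_tx`, the header and payload sit back to back in `buf` and `doorbells` has advanced by one, while nothing was passed along with the signal itself.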

4.4.4 qcom_glink_tx_write

This writes the data into the pipe:

static void qcom_glink_tx_write(struct qcom_glink *glink,

const void *hdr, size_t hlen,

const void *data, size_t dlen)

{

glink->tx_pipe->write(glink->tx_pipe, hdr, hlen, data, dlen);

}

The pipe is assigned by glink->tx_pipe = tx; inside the qcom_glink_native_probe function.

4.4.5 qcom_glink_native_probe

struct qcom_glink *qcom_glink_native_probe(struct device *dev,

unsigned long features,

struct qcom_glink_pipe *rx,

struct qcom_glink_pipe *tx,

bool intentless)

{

int irq;

int ret;

struct qcom_glink *glink;

glink = devm_kzalloc(dev, sizeof(*glink), GFP_KERNEL);

if (!glink)

return ERR_PTR(-ENOMEM);

glink->dev = dev;

glink->tx_pipe = tx;

glink->rx_pipe = rx;

glink->features = features;

glink->intentless = intentless;

spin_lock_init(&glink->tx_lock);

spin_lock_init(&glink->rx_lock);

INIT_LIST_HEAD(&glink->rx_queue);

INIT_WORK(&glink->rx_work, qcom_glink_work);

init_waitqueue_head(&glink->tx_avail_notify);

spin_lock_init(&glink->idr_lock);

idr_init(&glink->lcids);

idr_init(&glink->rcids);

glink->dev->groups = qcom_glink_groups;

ret = device_add_groups(dev, qcom_glink_groups);

if (ret)

dev_err(dev, "failed to add groups\n");

glink->mbox_client.dev = dev;

glink->mbox_client.knows_txdone = true;

glink->mbox_chan = mbox_request_channel(&glink->mbox_client, 0);

if (IS_ERR(glink->mbox_chan)) {

if (PTR_ERR(glink->mbox_chan) != -EPROBE_DEFER)

dev_err(dev, "failed to acquire IPC channel\n");

return ERR_CAST(glink->mbox_chan);

}

irq = of_irq_get(dev->of_node, 0);

ret = devm_request_irq(dev, irq,

qcom_glink_native_intr,

IRQF_NO_SUSPEND | IRQF_SHARED,

"glink-native", glink);

if (ret) {

dev_err(dev, "failed to request IRQ\n");

return ERR_PTR(ret);

}

glink->irq = irq;

ret = qcom_glink_send_version(glink);

if (ret)

return ERR_PTR(ret);

ret = qcom_glink_create_chrdev(glink);

if (ret)

dev_err(glink->dev, "failed to register chrdev\n");

return glink;

}

EXPORT_SYMBOL_GPL(qcom_glink_native_probe);

qcom_glink_native_probe is called from quite a few places.

Let's narrow it down with the Makefile and the .config.

obj-$(CONFIG_RPMSG) += rpmsg_core.o

obj-$(CONFIG_RPMSG_CHAR) += rpmsg_char.o

obj-$(CONFIG_RPMSG_CTRL) += rpmsg_ctrl.o

obj-$(CONFIG_RPMSG_NS) += rpmsg_ns.o

obj-$(CONFIG_RPMSG_MTK_SCP) += mtk_rpmsg.o

qcom_glink-objs := qcom_glink_native.o qcom_glink_ssr.o

obj-$(CONFIG_RPMSG_QCOM_GLINK) += qcom_glink.o

obj-$(CONFIG_RPMSG_QCOM_GLINK_RPM) += qcom_glink_rpm.o

obj-$(CONFIG_RPMSG_QCOM_GLINK_SMEM) += qcom_glink_smem.o

obj-$(CONFIG_RPMSG_QCOM_SMD) += qcom_smd.o

obj-$(CONFIG_RPMSG_VIRTIO) += virtio_rpmsg_bus.o

#

# Rpmsg drivers

#

CONFIG_RPMSG=y

CONFIG_RPMSG_CHAR=y

CONFIG_RPMSG_QCOM_GLINK=m

# CONFIG_RPMSG_QCOM_GLINK_RPM is not set

CONFIG_RPMSG_QCOM_GLINK_SMEM=m

# CONFIG_RPMSG_QCOM_GLINK_SPSS is not set

# CONFIG_RPMSG_QCOM_GLINK_HELIOSCOM is not set

CONFIG_RPMSG_QCOM_SMD=m

# CONFIG_RPMSG_VIRTIO is not set

# CONFIG_MSM_RPM_SMD is not set

# end of Rpmsg drivers

So the assignment we are after is the one in qcom_glink_smem.c.

4.4.6 qcom_glink_smem_register

struct qcom_glink *qcom_glink_smem_register(struct device *parent,

struct device_node *node)

{

struct glink_smem_pipe *rx_pipe;

struct glink_smem_pipe *tx_pipe;

struct qcom_glink *glink;

struct device *dev;

u32 remote_pid;

__le32 *descs;

size_t size;

int ret;

dev = kzalloc(sizeof(*dev), GFP_KERNEL);

if (!dev)

return ERR_PTR(-ENOMEM);

dev->parent = parent;

dev->of_node = node;

dev->release = qcom_glink_smem_release;

dev_set_name(dev, "%s:%pOFn", dev_name(parent->parent), node);

ret = device_register(dev);

if (ret) {

pr_err("failed to register glink edge\n");

put_device(dev);

return ERR_PTR(ret);

}

ret = of_property_read_u32(dev->of_node, "qcom,remote-pid",

&remote_pid);

if (ret) {

dev_err(dev, "failed to parse qcom,remote-pid\n");

goto err_put_dev;

}

rx_pipe = devm_kzalloc(dev, sizeof(*rx_pipe), GFP_KERNEL);

tx_pipe = devm_kzalloc(dev, sizeof(*tx_pipe), GFP_KERNEL);

if (!rx_pipe || !tx_pipe) {

ret = -ENOMEM;

goto err_put_dev;

}

ret = qcom_smem_alloc(remote_pid,

SMEM_GLINK_NATIVE_XPRT_DESCRIPTOR, 32);

if (ret && ret != -EEXIST) {

dev_err(dev, "failed to allocate glink descriptors\n");

goto err_put_dev;

}

descs = qcom_smem_get(remote_pid,

SMEM_GLINK_NATIVE_XPRT_DESCRIPTOR, &size);

if (IS_ERR(descs)) {

dev_err(dev, "failed to acquire xprt descriptor\n");

ret = PTR_ERR(descs);

goto err_put_dev;

}

if (size != 32) {

dev_err(dev, "glink descriptor of invalid size\n");

ret = -EINVAL;

goto err_put_dev;

}

tx_pipe->tail = &descs[0];

tx_pipe->head = &descs[1];

rx_pipe->tail = &descs[2];

rx_pipe->head = &descs[3];

ret = qcom_smem_alloc(remote_pid, SMEM_GLINK_NATIVE_XPRT_FIFO_0,

SZ_16K);

if (ret && ret != -EEXIST) {

dev_err(dev, "failed to allocate TX fifo\n");

goto err_put_dev;

}

tx_pipe->fifo = qcom_smem_get(remote_pid, SMEM_GLINK_NATIVE_XPRT_FIFO_0,

&tx_pipe->native.length);

if (IS_ERR(tx_pipe->fifo)) {

dev_err(dev, "failed to acquire TX fifo\n");

ret = PTR_ERR(tx_pipe->fifo);

goto err_put_dev;

}

rx_pipe->native.avail = glink_smem_rx_avail;

rx_pipe->native.peak = glink_smem_rx_peak;

rx_pipe->native.advance = glink_smem_rx_advance;

rx_pipe->remote_pid = remote_pid;

tx_pipe->native.avail = glink_smem_tx_avail;

tx_pipe->native.write = glink_smem_tx_write;

tx_pipe->remote_pid = remote_pid;

*rx_pipe->tail = 0;

*tx_pipe->head = 0;

glink = qcom_glink_native_probe(dev,

GLINK_FEATURE_INTENT_REUSE | GLINK_FEATURE_ZERO_COPY,

&rx_pipe->native, &tx_pipe->native,

false);

if (IS_ERR(glink)) {

ret = PTR_ERR(glink);

goto err_put_dev;

}

return glink;

err_put_dev:

device_unregister(dev);

return ERR_PTR(ret);

}

EXPORT_SYMBOL_GPL(qcom_glink_smem_register);

Going back to the earlier write into the pipe, glink->tx_pipe->write, the function pointer behind it is tx_pipe->native.write = glink_smem_tx_write;.

4.4.7 glink_smem_tx_write

static unsigned int glink_smem_tx_write_one(struct glink_smem_pipe *pipe,

unsigned int head,

const void *data, size_t count)

{

size_t len;

if (WARN_ON_ONCE(head > pipe->native.length))

return head;

len = min_t(size_t, count, pipe->native.length - head);

if (len)

// Note ①

memcpy(pipe->fifo + head, data, len);

if (len != count)

memcpy(pipe->fifo, data + len, count - len);

head += count;

if (head >= pipe->native.length)

head -= pipe->native.length;

return head;

}

static void glink_smem_tx_write(struct qcom_glink_pipe *glink_pipe,

const void *hdr, size_t hlen,

const void *data, size_t dlen)

{

struct glink_smem_pipe *pipe = to_smem_pipe(glink_pipe);

unsigned int head;

head = le32_to_cpu(*pipe->head);

head = glink_smem_tx_write_one(pipe, head, hdr, hlen);

head = glink_smem_tx_write_one(pipe, head, data, dlen);

/* Ensure head is always aligned to 8 bytes */

head = ALIGN(head, 8);

if (head >= pipe->native.length)

head -= pipe->native.length;

/* Ensure ordering of fifo and head update */

// Note ②

wmb();

*pipe->head = cpu_to_le32(head);

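}

The glink_smem_tx_write function writes data into the shared-memory buffer used for communication between processors. Here is a breakdown of the function:

- The first parameter is a qcom_glink_pipe pointer representing the communication pipe between the processors; the second parameter, hdr, points to the header to be written into the pipe; the fourth parameter, data, points to the payload to be written into the pipe.

- The function first uses the to_smem_pipe macro to convert the qcom_glink_pipe pointer into a glink_smem_pipe pointer. Under the hood this is the container_of macro, which recovers the address of the enclosing structure.

- It then reads the current value of head through the glink_smem_pipe structure and uses the le32_to_cpu macro to convert the 32-bit unsigned integer from little-endian to the CPU's native byte order (a no-op on a little-endian CPU). (For background on big-endian versus little-endian, see the CSDN blog post "什么是Little Endian和Big Endian?(Endianness:字节序、端序、尾序)" by 王大雄_.)

- Next, it calls glink_smem_tx_write_one twice to write the header and then the data into the shared-memory buffer. Each call returns the updated head.

- It then uses the ALIGN macro to round head up to an 8-byte boundary (#define __ALIGN_KERNEL(x,a) __ALIGN_KERNEL_MASK(x, (typeof(x)) (a) - 1)

#define __ALIGN_KERNEL_MASK(x,mask) (((x) + (mask)) & ~(mask))), and checks whether head has reached the length of the shared-memory buffer. If it has, head wraps around to the start of the buffer.

- It issues a write memory barrier with wmb(), so that the data written into the FIFO becomes visible before the head update does.

- Finally, it converts head to little-endian with the cpu_to_le32 macro and writes it back through the head pointer of the glink_smem_pipe structure.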
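
The steps above can be tried out in user space. The following is a minimal sketch, not the kernel code: struct ring, ring_write_one, ring_write and ALIGN_UP are illustrative names, the FIFO is an ordinary byte array instead of shared memory, and the endian conversion and the memory barrier are left out on purpose.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Round x up to the next multiple of a (a must be a power of two). */
#define ALIGN_UP(x, a) (((x) + ((a) - 1)) & ~((size_t)(a) - 1))

struct ring {
	uint8_t *fifo;   /* backing storage (shared memory in the driver) */
	size_t length;   /* size of fifo in bytes */
	size_t head;     /* next write offset, published to the reader */
};

/* Copy count bytes at offset head, wrapping to the start if needed. */
static size_t ring_write_one(struct ring *r, size_t head,
			     const void *data, size_t count)
{
	size_t len;

	assert(head < r->length && count <= r->length);

	/* First part: up to the end of the buffer. */
	len = count < r->length - head ? count : r->length - head;
	if (len)
		memcpy(r->fifo + head, data, len);
	/* Second part: whatever is left goes to the start of the buffer. */
	if (len != count)
		memcpy(r->fifo, (const uint8_t *)data + len, count - len);

	head += count;
	if (head >= r->length)
		head -= r->length;
	return head;
}

/* Write header then payload, then publish the 8-byte-aligned head. */
static void ring_write(struct ring *r, const void *hdr, size_t hlen,
		       const void *data, size_t dlen)
{
	size_t head = r->head;

	head = ring_write_one(r, head, hdr, hlen);
	head = ring_write_one(r, head, data, dlen);

	head = ALIGN_UP(head, 8);
	if (head >= r->length)
		head -= r->length;

	r->head = head;
}
```

Starting with head = 24 in a 32-byte FIFO, writing a 4-byte header and a 7-byte payload wraps after "payl", leaves "oad" at offset 0, and publishes head = 8 (3 rounded up to the next multiple of 8).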

Note ①: the tx_pipe->fifo pointer is assigned as follows (also in qcom_glink_smem_register):

tx_pipe->fifo = qcom_smem_get(remote_pid, SMEM_GLINK_NATIVE_XPRT_FIFO_0,

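&tx_pipe->native.length);

Note ②: the wmb() macro is a write barrier. It makes sure that every store issued before it has completed before any store issued after it, so the reader can never observe the new head before the data that head covers.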
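
The ordering requirement in Note ② can be illustrated in user space with C11 atomics. This is only an analogue under my own assumptions: struct channel, publish and consume are made-up names, and a release store between threads of one process is not the same thing as the kernel's wmb() on memory shared with another processor.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SLOT_SIZE 16

struct channel {
	uint8_t slot[SLOT_SIZE];   /* payload area */
	atomic_size_t head;        /* number of bytes published so far */
};

/* Writer: fill the payload first, then publish the new head. */
static void publish(struct channel *c, const void *data, size_t len)
{
	assert(len <= SLOT_SIZE);
	memcpy(c->slot, data, len);
	/* Release ordering: the memcpy above cannot be reordered after this store. */
	atomic_store_explicit(&c->head, len, memory_order_release);
}

/* Reader: observe head first, then read only the bytes it covers. */
static size_t consume(struct channel *c, void *out)
{
	size_t len = atomic_load_explicit(&c->head, memory_order_acquire);

	memcpy(out, c->slot, len);
	return len;
}
```

If the two steps in publish were swapped, a reader could see the new head while the slot still held stale bytes, which is exactly what the barrier before *pipe->head = cpu_to_le32(head) prevents.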

#if __LINUX_ARM_ARCH__ >= 7

#define isb(option) __asm__ __volatile__ ("isb " #option : : : "memory")

#define dsb(option) __asm__ __volatile__ ("dsb " #option : : : "memory")

#define dmb(option) __asm__ __volatile__ ("dmb " #option : : : "memory")

#ifdef CONFIG_THUMB2_KERNEL

#define CSDB ".inst.w 0xf3af8014"

#else

#define CSDB ".inst 0xe320f014"

#endif

#define csdb() __asm__ __volatile__(CSDB : : : "memory")

#elif defined(CONFIG_CPU_XSC3) || __LINUX_ARM_ARCH__ == 6

#define isb(x) __asm__ __volatile__ ("mcr p15, 0, %0, c7, c5, 4" \

: : "r" (0) : "memory")

#define dsb(x) __asm__ __volatile__ ("mcr p15, 0, %0, c7, c10, 4" \

: : "r" (0) : "memory")

#define dmb(x) __asm__ __volatile__ ("mcr p15, 0, %0, c7, c10, 5" \

: : "r" (0) : "memory")

#elif defined(CONFIG_CPU_FA526)

#define isb(x) __asm__ __volatile__ ("mcr p15, 0, %0, c7, c5, 4" \

: : "r" (0) : "memory")

#define dsb(x) __asm__ __volatile__ ("mcr p15, 0, %0, c7, c10, 4" \

: : "r" (0) : "memory")

#define dmb(x) __asm__ __volatile__ ("" : : : "memory")

#else

#define isb(x) __asm__ __volatile__ ("" : : : "memory")

#define dsb(x) __asm__ __volatile__ ("mcr p15, 0, %0, c7, c10, 4" \

: : "r" (0) : "memory")

#define dmb(x) __asm__ __volatile__ ("" : : : "memory")

#endif

#ifndef CSDB

#define CSDB

#endif

#ifndef csdb

#define csdb()

#endif

#ifdef CONFIG_ARM_HEAVY_MB

extern void (*soc_mb)(void);

extern void arm_heavy_mb(void);

#define __arm_heavy_mb(x...) do { dsb(x); arm_heavy_mb(); } while (0)

#else

#define __arm_heavy_mb(x...) dsb(x)

#endif

#if defined(CONFIG_ARM_DMA_MEM_BUFFERABLE) || defined(CONFIG_SMP)

#define mb() __arm_heavy_mb()

#define rmb() dsb()

#define wmb() __arm_heavy_mb(st)

#define dma_rmb() dmb(osh)

#define dma_wmb() dmb(oshst)

#else

#define mb() barrier()

#define rmb() barrier()

#define wmb() barrier()

#define dma_rmb() barrier()

#define dma_wmb() barrier()

#endif

4.5 Glink to smem

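From smem onward the code belongs to the GKI, and OEM customizations can hardly reach into it directly, so if you only care about the parts you can modify, you can stop here.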

4.5.1 qcom_smem_get

/**

* qcom_smem_get() - resolve ptr of size of a smem item

* @host: the remote processor, or -1

* @item: smem item handle

* @size: pointer to be filled out with size of the item

*

* Looks up smem item and returns pointer to it. Size of smem

* item is returned in @size.

*/

void *qcom_smem_get(unsigned int host, unsigned int item, size_t *size)

{

struct smem_partition *part;

unsigned long flags;

int ret;

void *ptr = ERR_PTR(-EPROBE_DEFER);

if (!__smem)

return ptr;

if (WARN_ON(item >= __smem->item_count))

return ERR_PTR(-EINVAL);

ret = hwspin_lock_timeout_irqsave(__smem->hwlock,

HWSPINLOCK_TIMEOUT,

&flags);

if (ret)

return ERR_PTR(ret);

if (host < SMEM_HOST_COUNT && __smem->partitions[host].virt_base) {

part = &__smem->partitions[host];

ptr = qcom_smem_get_private(__smem, part, item, size);

} else if (__smem->global_partition.virt_base) {

part = &__smem->global_partition;

ptr = qcom_smem_get_private(__smem, part, item, size);

} else {

ptr = qcom_smem_get_global(__smem, item, size);

}

hwspin_unlock_irqrestore(__smem->hwlock, &flags);

return ptr;

}

EXPORT_SYMBOL(qcom_smem_get);

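The first parameter of qcom_smem_get, host, identifies the remote processor. qcom_glink_smem_register passes in a variable called remote_pid, which is analyzed in 4.5.2.

The second parameter of qcom_smem_get, item, is the shared memory item handle, which is analyzed in 4.5.3.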
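
How host steers the lookup can be read off the if/else chain in qcom_smem_get. Here is a small stand-alone sketch of just that decision; struct smem_layout, pick_path, the enum values and HOST_COUNT = 4 are illustrative stand-ins, not driver definitions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define HOST_COUNT 4   /* hypothetical; the driver uses SMEM_HOST_COUNT */

enum lookup_path { PATH_PRIVATE, PATH_GLOBAL_PARTITION, PATH_GLOBAL_HEAP };

struct smem_layout {
	bool private_mapped[HOST_COUNT];  /* stands in for partitions[host].virt_base */
	bool global_partition_mapped;     /* stands in for global_partition.virt_base */
};

/* Decide where an item for `host` would be looked up. */
static enum lookup_path pick_path(const struct smem_layout *s, unsigned int host)
{
	assert(s != NULL);

	/* A private partition shared with this host wins if it exists. */
	if (host < HOST_COUNT && s->private_mapped[host])
		return PATH_PRIVATE;
	/* Otherwise fall back to the global partition, if there is one. */
	if (s->global_partition_mapped)
		return PATH_GLOBAL_PARTITION;
	/* Oldest layout: the global heap. */
	return PATH_GLOBAL_HEAP;
}
```

Passing -1 as host, as the kernel-doc comment allows, fails the host < HOST_COUNT test after conversion to unsigned int and therefore always skips the private partitions.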

4.5.2 qcom_smem_get parameter 1: remote_pid

To get remote_pid, we first need the node.

struct qcom_glink *qcom_glink_smem_register(struct device *parent,

struct device_node *node)

{

// ...

dev->of_node = node;

// ...

ret = of_property_read_u32(dev->of_node, "qcom,remote-pid",

&remote_pid);

Searching the source tree turns up the following two call sites:

Let's rule out qcom_common.c first.

// msm-kernel/drivers/remoteproc/qcom_common.c

qcom_add_glink_subdev(struct rproc *rproc, struct qcom_rproc_glink *glink, const char *ssr_name)

glink_subdev_prepare(struct rproc_subdev *subdev)

glink->edge = qcom_glink_smem_register(glink->dev, glink->node);

qcom_add_glink_subdev has four callers, and we can rule this path out with the Makefile and the .config.

obj-$(CONFIG_QCOM_Q6V5_ADSP) += qcom_q6v5_adsp.o

obj-$(CONFIG_QCOM_Q6V5_MSS) += qcom_q6v5_mss.o

obj-$(CONFIG_QCOM_Q6V5_PAS) += qcom_q6v5_pas.o

obj-$(CONFIG_QCOM_Q6V5_WCSS) += qcom_q6v5_wcss.o

# CONFIG_QCOM_Q6V5_ADSP is not set

# CONFIG_QCOM_Q6V5_MSS is not set

CONFIG_QCOM_Q6V5_PAS=m

# CONFIG_QCOM_Q6V5_WCSS is not set

From here on we follow the glink_probe path.

(1) glink_probe

Once the glink driver and device match, glink_probe is called.

static const struct of_device_id glink_match_table[] = {

{ .compatible = "qcom,glink" },

{},

};

static struct platform_driver glink_probe_driver = {

.probe = glink_probe,

.remove = glink_remove,

.driver = {

.name = "msm_glink",

.of_match_table = glink_match_table,

.pm = &glink_native_pm_ops,

},

};

It walks all available child nodes of the `pdev` device node in the device tree and calls `probe_subsystem` on each of them.

#define for_each_available_child_of_node(parent, child) \

for (child = of_get_next_available_child(parent, NULL); child != NULL; \

child = of_get_next_available_child(parent, child))

static int glink_probe(struct platform_device *pdev)

{

struct device_node *pn = pdev->dev.of_node;

struct device_node *cn;

for_each_available_child_of_node(pn, cn) {

probe_subsystem(&pdev->dev, cn);

}

return 0;

}

/**

* of_get_next_available_child - Find the next available child node

* @node: parent node

* @prev: previous child of the parent node, or NULL to get first

*

* This function is like of_get_next_child(), except that it

* automatically skips any disabled nodes (i.e. status = "disabled").

*/

struct device_node *of_get_next_available_child(const struct device_node *node,

struct device_node *prev)

{

struct device_node *next;

unsigned long flags;

if (!node)

return NULL;

raw_spin_lock_irqsave(&devtree_lock, flags);

next = prev ? prev->sibling : node->child;

for (; next; next = next->sibling) {

if (!__of_device_is_available(next))

continue;

if (of_node_get(next))

break;

}

of_node_put(prev);

raw_spin_unlock_irqrestore(&devtree_lock, flags);

return next;

}

EXPORT_SYMBOL(of_get_next_available_child);

(2) probe_subsystem

static void probe_subsystem(struct device *dev, struct device_node *np)

{

struct edge_info *einfo;

const char *transport;

int ret;

void *handle;

einfo = devm_kzalloc(dev, sizeof(*einfo), GFP_KERNEL);

if (!einfo)

return;

ret = of_property_read_string(np, "label", &einfo->ssr_label);

if (ret < 0)

einfo->ssr_label = np->name;

ret = of_property_read_string(np, "qcom,glink-label",

&einfo->glink_label);

if (ret < 0) {

GLINK_ERR(dev, "no qcom,glink-label for %s\n",

einfo->ssr_label);

return;

}

einfo->dev = dev;

einfo->node = np;

ret = of_property_read_string(np, "transport", &transport);

if (ret < 0) {

GLINK_ERR(dev, "%s missing transport\n", einfo->ssr_label);

return;

}

if (!strcmp(transport, "smem")) {

einfo->register_fn = glink_probe_smem_reg;

einfo->unregister_fn = glink_probe_smem_unreg;

} else if (!strcmp(transport, "spss")) {

einfo->register_fn = glink_probe_spss_reg;

einfo->unregister_fn = glink_probe_spss_unreg;

}

einfo->nb.notifier_call = glink_probe_ssr_cb;

handle = qcom_register_ssr_notifier(einfo->ssr_label, &einfo->nb);

if (IS_ERR_OR_NULL(handle)) {

GLINK_ERR(dev, "could not register for SSR notifier for %s\n",

einfo->ssr_label);

return;

}

einfo->notifier_handle = handle;

list_add_tail(&einfo->list, &edge_infos);

GLINK_INFO("probe successful for %s\n", einfo->ssr_label);

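}

Judging from probe_subsystem, each child node carries the properties "label", "qcom,glink-label" and "transport".

Let's stay with the MPSS subsystem as the example.

In the source tree, the device tree describes the modem_pas node and its glink-edge child node as follows: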

modem_pas: remoteproc-mss@04080000 {

compatible = "qcom,diwali-modem-pas";

reg = <0x4080000 0x10000>;

status = "ok";

clocks = <&rpmhcc RPMH_CXO_CLK>;

clock-names = "xo";

cx-supply = <&VDD_CX_LEVEL>;

cx-uV-uA = <RPMH_REGULATOR_LEVEL_TURBO 100000>;

mx-supply = <&VDD_MODEM_LEVEL>;

mx-uV-uA = <RPMH_REGULATOR_LEVEL_TURBO 100000>;

reg-names = "cx", "mx";

interconnects = <&mc_virt MASTER_LLCC &mc_virt SLAVE_EBI1>,

<&aggre2_noc MASTER_CRYPTO &mc_virt SLAVE_EBI1>;

interconnect-names = "rproc_ddr","crypto_ddr";

qcom,qmp = <&aoss_qmp>;

memory-region = <&mpss_mem &system_cma>;

/* Inputs from mss */

interrupts-extended = <&intc GIC_SPI 264 IRQ_TYPE_EDGE_RISING>,

<&modem_smp2p_in 0 0>,

<&modem_smp2p_in 2 0>,

<&modem_smp2p_in 1 0>,

<&modem_smp2p_in 3 0>,

<&modem_smp2p_in 7 0>;

interrupt-names = "wdog",

"fatal",

"handover",

"ready",

"stop-ack",

"shutdown-ack";

/* Outputs to mss */

qcom,smem-states = <&modem_smp2p_out 0>;

qcom,smem-state-names = "stop";

glink-edge {

qcom,remote-pid = <1>;

transport = "smem";

mboxes = <&ipcc_mproc IPCC_CLIENT_MPSS

IPCC_MPROC_SIGNAL_GLINK_QMP>;

mbox-names = "mpss_smem";

interrupt-parent = <&ipcc_mproc>;

interrupts = <IPCC_CLIENT_MPSS

IPCC_MPROC_SIGNAL_GLINK_QMP

IRQ_TYPE_EDGE_RISING>;

label = "modem";

qcom,glink-label = "mpss";

qcom,modem_qrtr {

qcom,glink-channels = "IPCRTR";

qcom,low-latency;

qcom,intents = <0x800 5

0x2000 3

0x4400 2>;

};

qcom,modem_ds {

qcom,glink-channels = "DS";

qcom,intents = <0x4000 0x2>;

};

};

};

With this device tree, probe_subsystem reads "smem" as the value of the transport property, so it takes the einfo->register_fn = glink_probe_smem_reg; branch.

static int glink_probe_smem_reg(struct edge_info *einfo)

{

struct device *dev = einfo->dev;

einfo->glink = qcom_glink_smem_register(dev, einfo->node);

if (IS_ERR_OR_NULL(einfo->glink)) {

GLINK_ERR(dev, "register failed for %s\n", einfo->ssr_label);

einfo->glink = NULL;

}

GLINK_INFO("register successful for %s\n", einfo->ssr_label);

return 0;

}

That explains where remote_pid comes from.


Looking back, we can now see that qcom_glink_smem_register runs many times: once for every subsystem that meets the conditions.
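
The flow from device-tree properties to the registration call can be condensed into a short simulation. This is a sketch under my own assumptions: struct edge, register_smem, register_spss, pick_register_fn and probe_edges are hypothetical names, the device tree is replaced by a plain array, and the SSR notifier step that probe_subsystem registers is skipped.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct edge {
	const char *label;       /* "label" property */
	const char *transport;   /* "transport" property */
	unsigned int remote_pid; /* "qcom,remote-pid" property */
	int enabled;             /* 0 models status = "disabled" */
};

typedef int (*register_fn)(const struct edge *e);

/* Stand-ins for glink_probe_smem_reg / glink_probe_spss_reg. */
static int register_smem(const struct edge *e) { return (int)e->remote_pid; }
static int register_spss(const struct edge *e) { (void)e; return -1; }

/* Pick the registration callback from the transport string. */
static register_fn pick_register_fn(const char *transport)
{
	assert(transport != NULL);

	if (!strcmp(transport, "smem"))
		return register_smem;
	if (!strcmp(transport, "spss"))
		return register_spss;
	return NULL;
}

/* Visit every enabled edge; collect the remote pids registered over smem. */
static size_t probe_edges(const struct edge *edges, size_t n,
			  unsigned int *pids_out)
{
	size_t count = 0;

	for (size_t i = 0; i < n; i++) {
		register_fn fn;

		if (!edges[i].enabled)
			continue;   /* skipped like a disabled child node */
		fn = pick_register_fn(edges[i].transport);
		if (fn == register_smem)
			pids_out[count++] = (unsigned int)fn(&edges[i]);
	}
	return count;
}
```

Each enabled edge whose transport is "smem" ends up in its own registration call with its own remote pid, which is why qcom_glink_smem_register is entered once per subsystem.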

4.5.3 qcom_smem_get parameter 2: item

(1) qcom_smem_probe

qcom_smem_probe performs a series of initialization steps on the smem structure variable.

struct qcom_smem {

struct device *dev;

struct hwspinlock *hwlock;

struct smem_ptable_entry *global_partition_entry;

struct smem_ptable_entry *ptable_entries[SMEM_HOST_COUNT];

u32 item_count;

struct platform_device *socinfo;

unsigned num_regions;

struct smem_region regions[];

};

static int qcom_smem_probe(struct platform_device *pdev)

{

struct smem_header *header;

struct qcom_smem *smem;

size_t array_size;

int num_regions;

int hwlock_id;

u32 version;

int ret;

num_regions = 1;

// Note ①

if (of_find_property(pdev->dev.of_node, "qcom,rpm-msg-ram", NULL))

num_regions++;

array_size = num_regions * sizeof(struct smem_region);

smem = devm_kzalloc(&pdev->dev, sizeof(*smem) + array_size, GFP_KERNEL);

if (!smem)

return -ENOMEM;

smem->dev = &pdev->dev;

smem->num_regions = num_regions;

// Note ②

ret = qcom_smem_map_memory(smem, &pdev->dev, "memory-region", 0);

if (ret)

return ret;

if (num_regions > 1 && (ret = qcom_smem_map_memory(smem, &pdev->dev,

"qcom,rpm-msg-ram", 1)))

return ret;

header = smem->regions[0].virt_base;

if (le32_to_cpu(header->initialized) != 1 ||

le32_to_cpu(header->reserved)) {

dev_err(&pdev->dev, "SMEM is not initialized by SBL\n");

return -EINVAL;

}

version = qcom_smem_get_sbl_version(smem);

switch (version >> 16) {

case SMEM_GLOBAL_PART_VERSION:

ret = qcom_smem_set_global_partition(smem);

if (ret < 0)

return ret;

smem->item_count = qcom_smem_get_item_count(smem);

break;

case SMEM_GLOBAL_HEAP_VERSION:

smem->item_count = SMEM_ITEM_COUNT;

break;

default:

dev_err(&pdev->dev, "Unsupported SMEM version 0x%x\n", version);

return -EINVAL;

}

BUILD_BUG_ON(SMEM_HOST_APPS >= SMEM_HOST_COUNT);

ret = qcom_smem_enumerate_partitions(smem, SMEM_HOST_APPS);

if (ret < 0 && ret != -ENOENT)

return ret;

hwlock_id = of_hwspin_lock_get_id(pdev->dev.of_node, 0);

if (hwlock_id < 0) {

if (hwlock_id != -EPROBE_DEFER)

dev_err(&pdev->dev, "failed to retrieve hwlock\n");

return hwlock_id;

}

smem->hwlock = hwspin_lock_request_specific(hwlock_id);

if (!smem->hwlock)

return -ENXIO;

__smem = smem;

smem->socinfo = platform_device_register_data(&pdev->dev, "qcom-socinfo",

PLATFORM_DEVID_NONE, NULL,

0);

if (IS_ERR(smem->socinfo))

dev_dbg(&pdev->dev, "failed to register socinfo device\n");

return 0;

}

qcom_smem_probe runs once the device and driver below match.

static const struct of_device_id qcom_smem_of_match[] = {

{ .compatible = "qcom,smem" },

{}

};

MODULE_DEVICE_TABLE(of, qcom_smem_of_match);

static struct platform_driver qcom_smem_driver = {

.probe = qcom_smem_probe,

.remove = qcom_smem_remove,

.driver = {

.name = "qcom-smem",

.of_match_table = qcom_smem_of_match,

.suppress_bind_attrs = true,

.pm = &qcom_smem_pm_ops,

},

};

smem: qcom,smem {

compatible = "qcom,smem";

memory-region = <&smem_mem>;

hwlocks = <&tcsr_mutex 3>;

};

smem_mem: smem_region@80900000 {

no-map;

reg = <0x0 0x80900000 0x0 0x200000>;

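};

At Note ①, the branch if (of_find_property(pdev->dev.of_node, "qcom,rpm-msg-ram", NULL)) is not taken, because the smem node above has no such property, so num_regions = 1.

At Note ②, ret = qcom_smem_map_memory(smem, &pdev->dev, "memory-region", 0); maps the smem region into kernel virtual memory. It is analyzed in 4.5.4 (worth reading first).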
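
The allocation around Note ① relies on a flexible array member: one allocation holds the structure plus num_regions trailing entries. A minimal user-space sketch of the same pattern, with calloc standing in for devm_kzalloc and with struct region, struct smem_ctx, smem_ctx_alloc and count_regions as illustrative names:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

struct region {
	uint32_t aux_base;
	void *virt_base;
	size_t size;
};

struct smem_ctx {
	unsigned int num_regions;
	struct region regions[];   /* flexible array member, sized at allocation */
};

/* One zeroed allocation holds the header and num_regions trailing entries. */
static struct smem_ctx *smem_ctx_alloc(unsigned int num_regions)
{
	struct smem_ctx *ctx;

	assert(num_regions >= 1);

	ctx = calloc(1, sizeof(*ctx) + num_regions * sizeof(struct region));
	if (!ctx)
		return NULL;
	ctx->num_regions = num_regions;
	return ctx;
}

/* Count regions the way the probe does: one, plus one if the optional property exists. */
static unsigned int count_regions(int has_rpm_msg_ram)
{
	return has_rpm_msg_ram ? 2 : 1;
}
```

With no "qcom,rpm-msg-ram" property, count_regions(0) yields 1, so only regions[0] exists and indexing regions[1] would run past the allocation.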

4.5.4 qcom_smem_map_memory

static int qcom_smem_map_memory(struct qcom_smem *smem, struct device *dev,

const char *name, int i)

{

struct device_node *np;

struct resource r;

resource_size_t size;

int ret;

np = of_parse_phandle(dev->of_node, name, 0);

if (!np) {

dev_err(dev, "No %s specified\n", name);

return -EINVAL;

}

ret = of_address_to_resource(np, 0, &r);

of_node_put(np);

if (ret)

return ret;

size = resource_size(&r);

smem->regions[i].virt_base = devm_ioremap_wc(dev, r.start, size);

if (!smem->regions[i].virt_base)

return -ENOMEM;

smem->regions[i].aux_base = (u32)r.start;

smem->regions[i].size = size;

return 0;

}

(1) of_parse_phandle

Resolve the phandle stored in the property called name of the given device_node, and return the device_node it points to.

np = of_parse_phandle(dev->of_node, name, 0);

// dev->of_node is passed in as np, "memory-region" as phandle_name

// index is 0; args is a variable of type of_phandle_args

__of_parse_phandle_with_args(np, phandle_name, NULL, 0, index, &args)

// "memory-region" is passed in as list_name, NULL as cells_name, 0 as cell_count

of_phandle_iterator_init(struct of_phandle_iterator *it, const struct device_node *np, const char *list_name, const char *cells_name, int cell_count)

list = of_get_property(np, list_name, &size)

struct property *pp = of_find_property(np, name, lenp);

pp = __of_find_property(np, name, lenp);

*lenp = pp->length;

return pp ? pp->value : NULL;

// followed by a series of initializations of it

it->cells_name = cells_name;

it->cell_count = cell_count;

it->parent = np;

it->list_end = list + size / sizeof(*list);

it->phandle_end = list;

it->cur = list;

err = of_phandle_iterator_next(it);

it->cur = it->phandle_end;

it->phandle = be32_to_cpup(it->cur++);

// look up the device_node by its phandle

it->node = of_find_node_by_phandle(it->phandle);

c = of_phandle_iterator_args(&it, out_args->args, MAX_PHANDLE_ARGS);

// out_args->args[0] is the value of list above

out_args->np = it.node;

return args.np;

ret = qcom_smem_map_memory(smem, &pdev->dev, "memory-region", 0);

// find the device_node that the property name refers to under dev->of_node

ret = of_address_to_resource(np, 0, &r);

of_node_put(np);

size = resource_size(&r);

smem->regions[i].virt_base = devm_ioremap_wc(dev, r.start, size);

smem->regions[i].aux_base = (u32)r.start;

smem->regions[i].size = size;

(2) of_address_to_resource

int of_address_to_resource(struct device_node *dev, int index, struct resource *r)

addrp = of_get_address(dev, index, &size, &flags);

// parent in fact points to the of_node node, which is a symlink to the device tree node /soc/qcom,smem

parent = of_get_parent(dev);

bus = of_match_bus(parent);

// the bus that is returned looks like this:

{

.name = "default",

.addresses = "reg",

.match = NULL,

.count_cells = of_bus_default_count_cells,

.map = of_bus_default_map,

.translate = of_bus_default_translate,

.get_flags = of_bus_default_get_flags,// IORESOURCE_MEM

}

bus->count_cells(dev, &na, &ns);// of_bus_default_count_cells

*addrc = of_n_addr_cells(dev);// find the root node soc and read its #address-cells property

*sizec = of_n_size_cells(dev);// find the root node soc and read its #size-cells property

of_node_put(parent);

prop = of_get_property(dev, bus->addresses, &psize);// walk the properties of the current node to find "reg"

of_property_read_string_index(dev, "reg-names", index, &name);

of_property_read_string_helper(np, propname, output, 1, index);

__of_address_to_resource(dev, addrp, size, flags, name, r);

taddr = of_translate_address(dev, addrp);

ret = __of_translate_address(dev, of_get_parent, in_addr, "ranges", &host);// Translate an address from the device-tree into a CPU physical address
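
What this call chain boils down to for the smem_mem node is decoding the big-endian cells of reg into a start address and a size. Below is a hedged sketch of that decoding only: it assumes #address-cells = <2> and #size-cells = <2>, which the four-cell reg = <0x0 0x80900000 0x0 0x200000> suggests but the article does not show, it skips the "ranges" translation entirely, and be32_cell, read_number, struct mem_range and decode_reg are illustrative names rather than kernel functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Read one 32-bit big-endian cell, as stored in a flattened device tree. */
static uint32_t be32_cell(const uint8_t *p)
{
	return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
	       ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Combine ncells consecutive cells (1 or 2) into one 64-bit number. */
static uint64_t read_number(const uint8_t *p, unsigned int ncells)
{
	uint64_t v = 0;

	assert(ncells >= 1 && ncells <= 2);

	while (ncells--) {
		v = (v << 32) | be32_cell(p);
		p += 4;
	}
	return v;
}

struct mem_range {
	uint64_t start;
	uint64_t size;
};

/* Decode the first (address, size) pair of a "reg" property. */
static struct mem_range decode_reg(const uint8_t *reg,
				   unsigned int addr_cells,
				   unsigned int size_cells)
{
	struct mem_range r;

	r.start = read_number(reg, addr_cells);
	r.size = read_number(reg + 4 * addr_cells, size_cells);
	return r;
}
```

Under those assumptions the smem region starts at 0x80900000 and is 0x200000 bytes (2 MiB) long, so it ends at 0x80AFFFFF; these are the r.start and size values that qcom_smem_map_memory hands to devm_ioremap_wc.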

(3) devm_ioremap_wc

#define ioremap_wc(addr, size) \

ioremap_prot((addr), (size), PROT_NORMAL_NC)
