I. Introduction to MMM

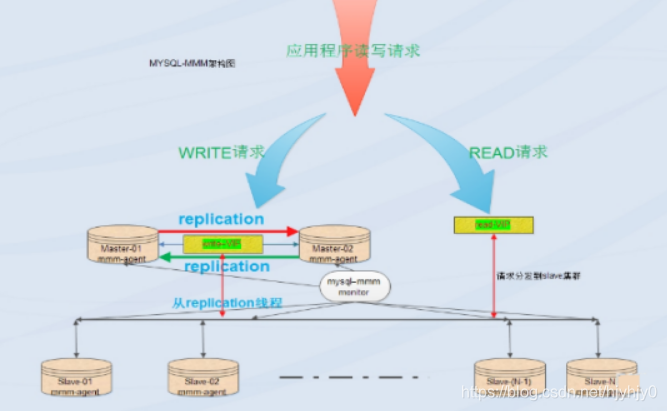

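MMM (Multi-Master Replication Manager for MySQL) is a scalable suite of Perl scripts that monitors, fails over, and manages MySQL master-master replication setups; at any given time only one node is writable. MMM can also load-balance reads across the slaves, so it can be used to bring up virtual IPs on a group of replicating servers. It additionally ships scripts for data backup and for resynchronizing nodes. MySQL itself provides no replication failover solution; MMM adds server failover and thereby high availability for MySQL. Besides floating IPs, MMM also repoints the backend slaves at the new master automatically when the current master goes down, so the replication configuration does not have to be changed by hand. It is a relatively mature solution.

Official site: http://mysql-mmm.org

Advantages:

High availability and good scalability, with automatic failover. In a master-master setup only one database accepts writes at any time, which keeps the data consistent. When the active master goes down, the other master takes over immediately and the slaves switch over automatically, without manual intervention.

Disadvantages:

The monitor node is a single point of failure, although it can be made highly available with keepalived or heartbeat. At least three nodes are needed, so there is a minimum host count. Read/write splitting is required, which means writing a read/write splitting layer in front of the cluster. Under very heavy read/write load MMM is not very stable and may suffer replication lag or failed switchovers. MMM is therefore not well suited to environments that demand strong data safety and are busy with both reads and writes.

Use cases:

MMM suits workloads with heavy database traffic where read/write splitting is possible.

MMM's main functionality is provided by three scripts:

mmm_mond: the monitoring daemon. It does all the monitoring work and decides when a node is removed (it runs periodic heartbeat checks and, on failure, floats the write IP to the other master), among other things.

mmm_agentd: the agent daemon that runs on each MySQL server and exposes a simple set of remote services to the monitor node.

mmm_control: a command-line tool for managing the mmm_mond process.

The setup requires dedicated MySQL users: an mmm_monitor user and an mmm_agent user, plus an mmm_tools user if you want to use MMM's backup tools.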

II. Deployment

1. Environment

OS: CentOS 7.2 (64-bit)

Database: MySQL 5.7.13

Disable SELinux.

Configure NTP (chrony) to synchronize time:

[root@master-01 ~]# vim /etc/chrony.conf

server ntp.aliyun.com iburst

Uncomment the allow line (remove the leading #) and set it to the cluster subnet:

allow 192.168.1.0/24

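Plan the roles and IP addresses in advance. The single write VIP starts on master1; the three read VIPs are balanced by MMM across master2, slave1 and slave2, so the read VIP column is only the planned assignment:

| Hostname | IP | Write VIP | Read VIP |
|---|---|---|---|
| master1 | 192.168.1.10 | 192.168.1.61 | |
| master2 | 192.168.1.20 | | 192.168.1.62 |
| slave1 | 192.168.1.30 | | 192.168.1.63 |
| slave2 | 192.168.1.40 | | 192.168.1.64 |
| monitor | 192.168.1.50 | | |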

2. On all hosts, add the following entries to /etc/hosts:

192.168.1.10 master1

192.168.1.20 master2

192.168.1.30 slave1

192.168.1.40 slave2

192.168.1.50 monitor
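Since the same five entries have to be added on every host, this step can be scripted. The following is a minimal sketch, assuming bash; the function names add_host_entry and add_cluster_hosts are made up for this example, and an entry is appended only when its host name is not already present in the file.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of MMM): idempotently add the cluster hosts.

# Append "IP NAME" to FILE unless NAME already appears in it as a whole word.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Add the five hosts from the plan above; FILE defaults to /etc/hosts.
add_cluster_hosts() {
    local file="${1:-/etc/hosts}"
    add_host_entry "$file" 192.168.1.10 master1
    add_host_entry "$file" 192.168.1.20 master2
    add_host_entry "$file" 192.168.1.30 slave1
    add_host_entry "$file" 192.168.1.40 slave2
    add_host_entry "$file" 192.168.1.50 monitor
}
```

Running add_cluster_hosts as root on each host adds the entries; running it a second time leaves the file unchanged.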

Install the perl, perl-devel, perl-CPAN, libart_lgpl.x86_64, rrdtool.x86_64 and rrdtool-perl.x86_64 packages on all hosts:

[root@master1 ~]# yum -y install perl-* libart_lgpl.x86_64 rrdtool.x86_64 rrdtool-perl.x86_64

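Initialize CPAN:

[root@master1 ~]# cpan //press Enter at every prompt

Note: the packages above are installed from the CentOS 7 online yum repositories. Next, install the required Perl modules: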

[root@master1 ~]# cpan -i Algorithm::Diff Class::Singleton DBI DBD::mysql Log::Dispatch Log::Log4perl Mail::Send Net::Ping Proc::Daemon Time::HiRes Params::Validate Net::ARP

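**Note:** when I ran this, DBD::mysql, Net::Ping and Net::ARP failed to download and reported errors.

I downloaded these three packages from https://metacpan.org/. If that site is unreachable, look up CPAN on the Aliyun mirror site, which links to it; the direct download commands are listed below.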

[root@master1 ~]# wget https://cpan.metacpan.org/authors/id/D/DV/DVEEDEN/DBD-mysql-4.050.tar.gz

[root@master1 ~]# wget https://cpan.metacpan.org/authors/id/R/RU/RURBAN/Net-Ping-2.74.tar.gz

[root@master1 ~]# wget https://cpan.metacpan.org/authors/id/C/CR/CRAZYDJ/Net-ARP-1.0.11.tgz

Unpack and install DBD::mysql:

[root@master1 ~]# tar zxf DBD-mysql-4.050.tar.gz

[root@master1 ~]# cd DBD-mysql-4.050/

[root@master1 DBD-mysql-4.050]# perl Makefile.PL

[root@master1 DBD-mysql-4.050]# make install

Unpack and install Net::Ping:

[root@master1 ~]# tar zxf Net-Ping-2.74.tar.gz

[root@master1 ~]# cd Net-Ping-2.74/

[root@master1 Net-Ping-2.74]# perl Makefile.PL

[root@master1 Net-Ping-2.74]# make install

Unpack and install Net::ARP:

[root@master1 ~]# tar zxf Net-ARP-1.0.11.tgz

[root@master1 ~]# cd Net-ARP-1.0.11/

[root@master1 Net-ARP-1.0.11]# perl Makefile.PL

[root@master1 Net-ARP-1.0.11]# make install

Run the install command again to verify that every module is now present:

[root@master1 ~]# cpan -i Algorithm::Diff Class::Singleton DBI DBD::mysql Log::Dispatch Log::Log4perl Mail::Send Net::Ping Proc::Daemon Time::HiRes Params::Validate Net::ARP

CPAN: Storable loaded ok (v2.45)

Reading '/root/.cpan/Metadata'

Database was generated on Tue, 09 Mar 2021 00:41:02 GMT

CPAN: Module::CoreList loaded ok (v5.20210220)

Algorithm::Diff is up to date (1.201).

Class::Singleton is up to date (1.6).

DBI is up to date (1.643).

DBD::mysql is up to date (4.050).

Log::Dispatch is up to date (2.70).

Log::Log4perl is up to date (1.54).

Mail::Send is up to date (2.21).

Net::Ping is up to date (2.74).

Proc::Daemon is up to date (0.23).

Time::HiRes is up to date (1.9764).

Params::Validate is up to date (1.30).

Net::ARP is up to date (1.0.11).

Enter the cpan shell and replace the mirrors:

[root@master1 ~]# cpan

Terminal does not support AddHistory.

cpan shell -- CPAN exploration and modules installation (v1.9800)

Enter 'h' for help.

cpan[1]> o conf //show the current configuration, including the mirror list

···

urllist

0 [http://mirror.ox.ac.uk/sites/www.cpan.org/]

1 [http://ftp.belnet.be/mirror/ftp.cpan.org/]

2 [http://ftp.hosteurope.de/pub/CPAN/]

···

Replace these mirrors with the Aliyun and Sohu ones.

Remove the existing mirrors:

cpan[2]> o conf urllist pop http://mirror.ox.ac.uk/sites/www.cpan.org/

cpan[3]> o conf urllist pop http://ftp.belnet.be/mirror/ftp.cpan.org/

cpan[4]> o conf urllist pop http://ftp.hosteurope.de/pub/CPAN/

Checking again shows that the list is now empty.

Add the new mirrors:

cpan[6]> o conf urllist push http://mirrors.aliyun.com/CPAN/

cpan[7]> o conf urllist push ftp://mirrors.sohu.com/CPAN/

Commit the change:

cpan[9]> o conf commit

Verify:

cpan[10]> o conf

···

urllist

0 [http://mirrors.aliyun.com/CPAN/]

1 [ftp://mirrors.sohu.com/CPAN/]

···

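Note: if you make a typo in the cpan shell, use Ctrl+Backspace to delete it.

3. Install MySQL 5.7 on master1, master2, slave1 and slave2 and configure replication. master1 and master2 replicate from each other (master-master); slave1 and slave2 are slaves of master1. Add the following to /etc/my.cnf on each MySQL host, making sure that every server-id is unique.

master1: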

log-bin=mysql-bin

binlog_format=mixed

server-id=1

relay-log=relay-bin

relay-log-index=slave-relay-bin.index

log-slave-updates=1

auto-increment-increment=2

auto-increment-offset=1

master2:

log-bin=mysql-bin

binlog_format=mixed

server-id=2

relay-log=relay-bin

relay-log-index=slave-relay-bin.index

log-slave-updates=1

auto-increment-increment=2

auto-increment-offset=2

slave1:

server-id=3

relay-log=relay-bin

relay-log-index=slave-relay-bin.index

read_only=1

slave2:

server-id=4

relay-log=relay-bin

relay-log-index=slave-relay-bin.index

read_only=1

After editing my.cnf, restart the MySQL service with systemctl restart mysqld.
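The auto-increment-increment and auto-increment-offset pairs in the master configurations keep the two masters from generating the same AUTO_INCREMENT value: each master produces offset, offset + increment, offset + 2*increment, and so on. The sketch below only illustrates that arithmetic in bash; auto_inc_ids is a hypothetical helper written for this example, not a MySQL tool.

```shell
#!/usr/bin/env bash
# Print the first COUNT auto-increment values for a given offset and increment.
auto_inc_ids() {
    local offset="$1" increment="$2" count="$3" i
    for ((i = 0; i < count; i++)); do
        echo $((offset + i * increment))
    done
}

# master1 uses offset 1, master2 uses offset 2, both use increment 2,
# so the two sequences never overlap (odd ids vs. even ids).
echo "master1: $(auto_inc_ids 1 2 5 | tr '\n' ' ')"
echo "master2: $(auto_inc_ids 2 2 5 | tr '\n' ' ')"
```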

If the firewall is running on the four database hosts, either disable it or add a rule that allows MySQL traffic:

firewall-cmd --permanent --add-port=3306/tcp

firewall-cmd --reload

Replication setup (master1 and master2 as master-master; slave1 and slave2 as slaves of master1)

Create the replication user on master1:

mysql> grant replication slave on *.* to rep@'192.168.1.%' identified by '123.com';

Configure master2, slave1 and slave2 as slaves of master1:

Run show master status; on master1 to get the binlog file and position:

mysql> show master status;

+------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+-------------------+

| mysql-bin.000001 | 451 | | | |

+------------------+----------+--------------+------------------+-------------------+

Run the following on master2, slave1 and slave2:

change master to

master_host='192.168.1.10',

master_user='rep',

master_password='123.com',

master_log_file='mysql-bin.000001',

master_log_pos=451;

Start replication:

mysql> start slave;
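Copying the binlog file name and position by hand is error-prone, so the CHANGE MASTER statement can be generated from the master's status instead. This is a rough sketch, assuming bash and the tab-separated output of the mysql client in batch mode (mysql -N -B); build_change_master is a hypothetical helper name, and it only prints the statement for review rather than executing it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one line of "SHOW MASTER STATUS" batch output
# (File<TAB>Position<TAB>...) from stdin and print a CHANGE MASTER statement.
build_change_master() {
    local host="$1" user="$2" password="$3" file pos rest
    IFS=$'\t' read -r file pos rest || true
    # Refuse to print anything if the input does not look like a binlog position.
    [ -n "$file" ] || return 1
    [[ "$pos" =~ ^[0-9]+$ ]] || return 1
    printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" \
        "$host" "$user" "$password" "$file" "$pos"
}

# Example (run on a slave; prompts for the root password of master1):
#   mysql -h 192.168.1.10 -uroot -p -N -B -e 'SHOW MASTER STATUS' \
#       | build_change_master 192.168.1.10 rep '123.com'
```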

Verify replication.

master2:

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.10

Master_User: rep

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 154

Relay_Log_File: relay-bin.000006

Relay_Log_Pos: 320

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 154

Relay_Log_Space: 521

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_UUID: 23755d32-80a9-11eb-be39-000c295f4258

Master_Info_File: /usr/local/mysql/data/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

If Slave_IO_Running and Slave_SQL_Running are both Yes, replication is configured correctly.

Configure master1 as a slave of master2:

Create the replication user on master2:

mysql> grant replication slave on *.* to rep@'192.168.1.%' identified by '123.com';

Run show master status; on master2 to get the binlog file and position:

mysql> show master status;

+------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+-------------------+

| mysql-bin.000003 | 451 | | | |

+------------------+----------+--------------+------------------+-------------------+

Run the following on master1:

change master to

master_host='192.168.1.20',

master_user='rep',

master_password='123.com',

master_log_file='mysql-bin.000003',

master_log_pos=451;

Start replication:

mysql> start slave;

Verify replication.

master1:

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.20

Master_User: rep

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000003

Read_Master_Log_Pos: 451

Relay_Log_File: relay-bin.000002

Relay_Log_Pos: 320

Relay_Master_Log_File: mysql-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 451

Relay_Log_Space: 521

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 2

Master_UUID: 38231ae0-80a9-11eb-857d-000c29b04a88

Master_Info_File: /usr/local/mysql/data/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

If Slave_IO_Running and Slave_SQL_Running are both Yes, replication is configured correctly.
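The same check can be done without reading the whole status block. A minimal sketch, assuming bash and awk; replication_ok is a hypothetical helper that reads the output of show slave status\G on stdin and succeeds only when both threads report Yes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if both replication threads are running.
# Reads "show slave status\G" output from stdin.
replication_ok() {
    awk -F': *' '
        # The trailing colon keeps Slave_SQL_Running_State from matching.
        /^ *Slave_IO_Running:/  { io  = $2 }
        /^ *Slave_SQL_Running:/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Example (on any slave):
#   mysql -uroot -p -e 'show slave status\G' | replication_ok \
#       && echo "replication OK" || echo "replication broken"
```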

4. mysql-mmm configuration:

Create the users on the four MySQL nodes.

Create the agent account:

mysql> grant super,replication client,process on *.* to 'mmm_agent'@'192.168.1.%' identified by '123.com';

Create the monitor account:

mysql> grant replication client on *.* to 'mmm_monitor'@'192.168.1.%' identified by '123.com';

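Note 1: because replication is already set up and working, running these statements on master1 alone is enough; they replicate to the other nodes.

Check that the monitor and agent accounts exist on master2, slave1 and slave2: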

mysql> select user,host from mysql.user where user in ('mmm_monitor','mmm_agent');

+-------------+-------------+

| user | host |

+-------------+-------------+

| mmm_agent | 192.168.1.% |

| mmm_monitor | 192.168.1.% |

+-------------+-------------+

or

mysql> show grants for 'mmm_agent'@'192.168.1.%';

+------------------------------------------------------------------------------+

| Grants for mmm_agent@192.168.1.% |

+------------------------------------------------------------------------------+

| GRANT PROCESS, SUPER, REPLICATION CLIENT ON *.* TO 'mmm_agent'@'192.168.1.%' |

+------------------------------------------------------------------------------+

mysql> show grants for 'mmm_monitor'@'192.168.1.%';

+----------------------------------------------------------------+

| Grants for mmm_monitor@192.168.1.% |

+----------------------------------------------------------------+

| GRANT REPLICATION CLIENT ON *.* TO 'mmm_monitor'@'192.168.1.%' |

+----------------------------------------------------------------+

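Note 2:

mmm_monitor user: used by the MMM monitor for health checks of the MySQL servers

mmm_agent user: used by the MMM agent to change read-only mode, the replication master, and so on

5. Installing mysql-mmm

Install the monitor program on the monitor host (192.168.1.50):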

[root@monitor ~]# cd /usr/local/src/

[root@monitor src]# ls

mysql-mmm-2.2.1.tar.gz

[root@monitor src]# tar zxf mysql-mmm-2.2.1.tar.gz

[root@monitor src]# cd mysql-mmm-2.2.1/

[root@monitor mysql-mmm-2.2.1]# make install

Install the agent on the database servers (master1, master2, slave1, slave2):

[root@master1 ~]# cd /usr/local/src/

[root@master1 src]# ls

mysql-mmm-2.2.1.tar.gz

[root@master1 src]# tar xf mysql-mmm-2.2.1.tar.gz

[root@master1 src]# cd mysql-mmm-2.2.1/

[root@master1 mysql-mmm-2.2.1]# make install

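6. Configure MMM: write the configuration files

The common file must be identical on all five hosts. After installation, all configuration files are placed under /etc/mysql-mmm/. Both the monitor server and the database servers need the shared file mmm_common.conf, with the following content: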

[root@monitor ~]# vim /etc/mysql-mmm/mmm_common.conf

active_master_role writer

<host default>

cluster_interface ens33

pid_path /var/run/mmm_agentd.pid

bin_path /usr/lib/mysql-mmm/

replication_user rep

replication_password 123.com

agent_user mmm_agent

agent_password 123.com

</host>

<host master1>

ip 192.168.1.10

mode master

peer master2

</host>

<host master2>

ip 192.168.1.20

mode master

peer master1

</host>

<host slave1>

ip 192.168.1.30

mode slave

</host>

<host slave2>

ip 192.168.1.40

mode slave

</host>

<role writer>

hosts master1,master2

ips 192.168.1.61

mode exclusive

</role>

<role reader>

hosts master2,slave1,slave2

ips 192.168.1.62,192.168.1.63,192.168.1.64

mode balanced

</role>

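The same file with each setting annotated:

active_master_role writer #marks the active master role. All db servers should enable read_only; the agent automatically turns read_only off on the writer.

<host default>

cluster_interface ens33 #network interface used by the cluster

pid_path /var/run/mmm_agentd.pid #pid file path

bin_path /usr/lib/mysql-mmm/ #path to the executables

replication_user rep #replication user

replication_password 123.com #replication user's password

agent_user mmm_agent #agent user

agent_password 123.com #agent user's password

</host>

<host master1> #host name of master1

ip 192.168.1.10 #IP of master1

mode master #role attribute; master means this host is a master

peer master2 #host name of master1's peer, i.e. master2

</host>

<host master2> #same idea as master1

ip 192.168.1.20 #IP of master2

mode master

peer master1

</host>

<host slave1> #host name of a slave; repeat this block for each additional slave

ip 192.168.1.30 #IP of the slave

mode slave #slave means this host is a slave

</host>

<host slave2> #same idea as slave1

ip 192.168.1.40

mode slave

</host>

<role writer> #writer role configuration

hosts master1,master2 #hosts that may take the writer role. If you do not want the writer to switch, list only master1 here; this also avoids writer switches caused by network latency, but if master1 then fails, MMM has no writer and only serves reads.

ips 192.168.1.61 #virtual IP for writes

mode exclusive #exclusive means only one host holds the role at a time, so there is only one write IP

</role>

<role reader> #reader role configuration

hosts master2,slave1,slave2 #hosts that serve reads; master1 can also be added here

ips 192.168.1.62,192.168.1.63,192.168.1.64 #virtual IPs for reads. These IPs do not map one-to-one to the hosts, and the number of ips may differ from the number of hosts; when there are more ips than hosts, one host is assigned two IPs

mode balanced #balanced means the IPs are load-balanced across the hosts

</role>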

Copy this file unchanged to the other servers:

[root@monitor ~]# for host in master2 slave1 slave2 master1 ; do scp /etc/mysql-mmm/mmm_common.conf $host:/etc/mysql-mmm/ ;done

Agent configuration: edit /etc/mysql-mmm/mmm_agent.conf on the four MySQL nodes. On the database servers this additional file has to be modified; its content is:

[root@master1 ~]# vim /etc/mysql-mmm/mmm_agent.conf

include mmm_common.conf

this master1 //use each host's own host name here
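Because the only per-host difference is the name after this, the file can be generated rather than edited by hand. A small sketch, assuming bash and that the short host name of each machine equals its MMM host name (as in the /etc/hosts plan of this article); render_agent_conf is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the contents of mmm_agent.conf for one host.
# NAME defaults to the short host name of the machine it runs on.
render_agent_conf() {
    local name="${1:-$(hostname -s)}"
    printf 'include mmm_common.conf\nthis %s\n' "$name"
}

# Example (run as root on each db server):
#   render_agent_conf > /etc/mysql-mmm/mmm_agent.conf
```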

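Note: this file is only needed on the db servers, not on the monitor server; set the host name after this to the current server's host name.

Start the agent process. In the script /etc/init.d/mysql-mmm-agent, add the following line below #!/bin/sh: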

[root@master1 ~]# vim /etc/init.d/mysql-mmm-agent

source /root/.bash_profile

Register it as a system service and enable it at boot:

[root@master1 ~]# chkconfig --add mysql-mmm-agent

[root@master1 ~]# chkconfig mysql-mmm-agent on

[root@master1 ~]# /etc/init.d/mysql-mmm-agent start

Check:

[root@master2 ~]# netstat -antp | grep mmm_agentd

tcp 0 0 192.168.1.20:9989 0.0.0.0:* LISTEN 3848/mmm_agentd

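Note: source /root/.bash_profile is added so that the mysql-mmm-agent service can start at boot. The only difference between an automatic start and a manual start is that the manual start runs in a login console, so a failure when started as a service is probably caused by missing environment variables.

If the service fails to start, the error looks like this: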

Daemon bin: '/usr/sbin/mmm_agentd'

Daemon pid: '/var/run/mmm_agentd.pid'

Starting MMM Agent daemon... Can't locate Proc/Daemon.pm in @INC (@INC contains:

/usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl

/usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at

/usr/sbin/mmm_agentd line 7.

BEGIN failed--compilation aborted at /usr/sbin/mmm_agentd line 7.

failed

Fix:

# cpan Proc::Daemon

# cpan Log::Log4perl

# /etc/init.d/mysql-mmm-agent start

Daemon bin: '/usr/sbin/mmm_agentd'

Daemon pid: '/var/run/mmm_agentd.pid'

Starting MMM Agent daemon... Ok

# netstat -antp | grep mmm_agentd

tcp 0 0 192.168.31.83:9989 0.0.0.0:* LISTEN 9693/mmm_agentd

Configure the firewall:

firewall-cmd --permanent --add-port=9989/tcp

firewall-cmd --reload

Edit /etc/mysql-mmm/mmm_mon.conf on the monitor host:

[root@monitor mysql-mmm]# vim mmm_mon.conf

include mmm_common.conf

<monitor>

ip 127.0.0.1

pid_path /var/run/mmm_mond.pid

bin_path /usr/lib/mysql-mmm/

status_path /var/lib/misc/mmm_mond.status

ping_ips 192.168.1.10,192.168.1.20,192.168.1.30,192.168.1.40

auto_set_online 0

</monitor>

<check default>

check_period 5

trap_period 10

timeout 2

restart_after 10000

max_backlog 86400

</check>

<host default>

monitor_user mmm_monitor

monitor_password 123.com

</host>

debug 0

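Explanation:

<monitor>

ip 127.0.0.1 #for security, listen on the local host only; mmm_mond listens on port 9988 by default

pid_path /var/run/mmm_mond.pid

bin_path /usr/lib/mysql-mmm/

status_path /var/lib/misc/mmm_mond.status

ping_ips 192.168.1.10,192.168.1.20,192.168.1.30,192.168.1.40 #IP addresses used to test network availability; if any one of them answers ping, the network is considered up. Do not list the monitor's own address here

auto_set_online 0 #number of seconds a recovered host waits in AWAITING_RECOVERY before it is set ONLINE automatically; with 0, as here, hosts are not brought online automatically and must be set online with mmm_control set_online, which matches the failover test later in this article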

</monitor>

<check default>

check_period 5

trap_period 10

timeout 2

restart_after 10000

max_backlog 86400

</check>

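check_period

Description: interval between checks, in seconds

Default: 5s

trap_period

Description: a node whose check has kept failing for trap_period seconds is considered failed

Default: 10s

timeout

Description: timeout of a single check

Default: 2s

restart_after

Description: restart the checker process after restart_after checks

Default: 10000

max_backlog

Description: maximum replication backlog tolerated by the rep_backlog check

Default: 60

<host default>

monitor_user mmm_monitor #user that monitors the db servers

monitor_password 123.com #password of the monitoring user

</host>

debug 0 #0 = normal mode, 1 = debug mode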

Start the monitor process. In the script /etc/init.d/mysql-mmm-monitor, add the following line below #!/bin/sh:

[root@monitor ~]# vim /etc/init.d/mysql-mmm-monitor

source /root/.bash_profile

Register it as a system service and enable it at boot:

[root@monitor ~]# chkconfig --add mysql-mmm-monitor

[root@monitor ~]# chkconfig mysql-mmm-monitor on

[root@monitor ~]# /etc/init.d/mysql-mmm-monitor start

Startup error:

Starting MMM Monitor daemon: Can not locate Proc/Daemon.pm in @INC (@INC contains:

/usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl

/usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at

/usr/sbin/mmm_mond line 11.

BEGIN failed--compilation aborted at /usr/sbin/mmm_mond line 11.

failed

Fix: install the following Perl modules:

# cpan Proc::Daemon

# cpan Log::Log4perl

[root@monitor1 ~]# /etc/init.d/mysql-mmm-monitor start

Daemon bin: '/usr/sbin/mmm_mond'

Daemon pid: '/var/run/mmm_mond.pid'

Starting MMM Monitor daemon: Ok

[root@monitor ~]# netstat -anpt | grep 9988

tcp 0 0 127.0.0.1:9988 0.0.0.0:* LISTEN 4053/mmm_mond

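Note 1: whenever a configuration file is changed, on the db side or on the monitor side, the agent and monitor processes must be restarted.

Note 2: MMM start order: start the monitor first, then the agents.

Check the cluster status: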

[root@monitor ~]# mmm_control show

master1(192.168.1.10) master/HARD_OFFLINE. Roles:

master2(192.168.1.20) master/HARD_OFFLINE. Roles:

slave1(192.168.1.30) slave/HARD_OFFLINE. Roles:

slave2(192.168.1.40) slave/HARD_OFFLINE. Roles:

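If a server's state is not ONLINE, bring it online from the monitor host with the following commands:

mmm_control set_online <hostname> //bring the host online

mmm_control set_offline <hostname> //take the host offline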
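When several hosts need to be brought online after a first start, the command can be wrapped in a loop. A minimal sketch, assuming bash; set_hosts_online and the MMM_CONTROL override are inventions of this example (the override only exists so the loop can be dry-run with echo), while mmm_control set_online is the command shown above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: bring the given hosts online one after another.
# Set MMM_CONTROL=echo to print the commands instead of running them.
set_hosts_online() {
    local ctl="${MMM_CONTROL:-mmm_control}" host
    for host in "$@"; do
        "$ctl" set_online "$host" || echo "set_online failed for $host" >&2
    done
}

# Example (on the monitor host):
#   set_hosts_online master1 master2 slave1 slave2
```

Bringing master1 online first is a reasonable order if it is meant to take the writer role, but that is a choice of this example rather than an MMM requirement.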

Note: I got an error here when bringing a host online:

[root@monitor ~]# mmm_control set_online master1

ERROR: Host 'master1' is 'HARD_OFFLINE' at the moment. It can't be switched to ONLINE.

Fix:

[root@master1 ~]# vim /etc/ld.so.conf

Add:

include ld.so.conf.d/*.conf

/usr/local/mysql/lib

/usr/lib

Save and exit.

[root@master1 ~]# ldconfig

After doing this on all hosts, they could be brought online.

Check:

[root@monitor ~]# mmm_control show

master1(192.168.1.10) master/ONLINE. Roles: writer(192.168.1.61)

master2(192.168.1.20) master/ONLINE. Roles: reader(192.168.1.63)

slave1(192.168.1.30) slave/ONLINE. Roles: reader(192.168.1.62)

slave2(192.168.1.40) slave/ONLINE. Roles: reader(192.168.1.64)

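For example: [root@monitor ~]# mmm_control set_online master1

The mmm_control show output above shows that the write VIP is on master1 and that all slave nodes treat master1 as their master.

Check that the VIPs are active: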

[root@master1 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5f:42:58 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.10/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.61/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::f239:9293:5b9c:14ba/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::cd64:d312:1942:cafb/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::f907:1153:530:3fda/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

[root@master2 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:b0:4a:88 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.20/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.63/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::cd64:d312:1942:cafb/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::f239:9293:5b9c:14ba/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::f907:1153:530:3fda/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@slave1 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:e2:28:f0 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.30/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.62/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::f239:9293:5b9c:14ba/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::cd64:d312:1942:cafb/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::f907:1153:530:3fda/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

[root@slave2 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:62:a6:cf brd ff:ff:ff:ff:ff:ff

inet 192.168.1.40/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.64/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::cd64:d312:1942:cafb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::f239:9293:5b9c:14ba/64 scope link noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::f907:1153:530:3fda/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever
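Scanning the ip a output by eye works for four hosts, but the check is easy to script. A small sketch, assuming bash; holds_vip is a hypothetical helper that reads ip addr output on stdin and succeeds if the given virtual IP is configured.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if VIP appears as an "inet" address on stdin.
# Matching the literal "inet <vip>/" avoids 192.168.1.6 matching 192.168.1.61.
holds_vip() {
    local vip="$1"
    grep -Fq "inet ${vip}/"
}

# Example (on a db server, using the interface from mmm_common.conf):
#   ip addr show ens33 | holds_vip 192.168.1.61 && echo "this host holds the write VIP"
```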

On master2, slave1 and slave2, check which master each MySQL instance points to:

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.10

Master_User: rep

Master_Port: 3306

Connect_Retry: 60

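MMM high-availability test: clients read and write through the VIPs; when a failure occurs the VIP floats to another node, which then provides the service.

First check the state of the whole cluster; everything is healthy: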

[root@monitor ~]# mmm_control show

master1(192.168.1.10) master/ONLINE. Roles: writer(192.168.1.61)

master2(192.168.1.20) master/ONLINE. Roles: reader(192.168.1.63)

slave1(192.168.1.30) slave/ONLINE. Roles: reader(192.168.1.62)

slave2(192.168.1.40) slave/ONLINE. Roles: reader(192.168.1.64)

Simulate a master1 outage by stopping its mysql service manually and watch the monitor log; the entries about master1 are:

[root@monitor ~]# tail -f /var/log/mysql-mmm/mmm_mond.log

2021/03/10 13:26:57 WARN Check 'rep_threads' on 'master1' is in unknown state! Message: UNKNOWN: Connect error (host = 192.168.1.10:3306, user = mmm_monitor)! Can't connect to MySQL server on '192.168.1.10' (111)

2021/03/10 13:26:57 WARN Check 'rep_backlog' on 'master1' is in unknown state! Message: UNKNOWN: Connect error (host = 192.168.1.10:3306, user = mmm_monitor)! Can't connect to MySQL server on '192.168.1.10' (111)

2021/03/10 13:27:07 ERROR Check 'mysql' on 'master1' has failed for 10 seconds! Message: ERROR: Connect error (host = 192.168.1.10:3306, user = mmm_monitor)! Can't connect to MySQL server on '192.168.1.10' (111)

2021/03/10 13:27:10 FATAL State of host 'master1' changed from ONLINE to HARD_OFFLINE (ping: OK, mysql: not OK)

2021/03/10 13:27:10 INFO Removing all roles from host 'master1':

2021/03/10 13:27:10 INFO Removed role 'writer(192.168.1.61)' from host 'master1'

2021/03/10 13:27:10 INFO Orphaned role 'writer(192.168.1.61)' has been assigned to 'master2'

Check the latest cluster status:

[root@monitor ~]# mmm_control show

master1(192.168.1.10) master/HARD_OFFLINE. Roles:

master2(192.168.1.20) master/ONLINE. Roles: reader(192.168.1.63), writer(192.168.1.61)

slave1(192.168.1.30) slave/ONLINE. Roles: reader(192.168.1.62)

slave2(192.168.1.40) slave/ONLINE. Roles: reader(192.168.1.64)

The output shows that master1 changed from ONLINE to HARD_OFFLINE and that the write VIP moved to master2.

Check the status of all db servers in the cluster:

[root@monitor ~]# mmm_control checks all

master1 ping [last change: 2021/03/10 13:12:34] OK

master1 mysql [last change: 2021/03/10 13:27:09] ERROR: Connect error (host = 192.168.1.10:3306, user = mmm_monitor)! Can't connect to MySQL server on '192.168.1.10' (111)

master1 rep_threads [last change: 2021/03/10 13:12:34] OK

master1 rep_backlog [last change: 2021/03/10 13:12:34] OK: Backlog is null

slave1 ping [last change: 2021/03/10 13:12:34] OK

slave1 mysql [last change: 2021/03/10 13:12:34] OK

slave1 rep_threads [last change: 2021/03/10 13:12:34] OK

slave1 rep_backlog [last change: 2021/03/10 13:12:34] OK: Backlog is null

master2 ping [last change: 2021/03/10 13:12:34] OK

master2 mysql [last change: 2021/03/10 13:12:34] OK

master2 rep_threads [last change: 2021/03/10 13:12:34] OK

master2 rep_backlog [last change: 2021/03/10 13:12:34] OK: Backlog is null

slave2 ping [last change: 2021/03/10 13:12:34] OK

slave2 mysql [last change: 2021/03/10 13:12:34] OK

slave2 rep_threads [last change: 2021/03/10 13:12:34] OK

slave2 rep_backlog [last change: 2021/03/10 13:12:34] OK: Backlog is null

The output above shows that master1 still answers ping, so only the MySQL service is down.

Check the IP addresses on master2:

[root@master2 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:b0:4a:88 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.20/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.63/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.1.61/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::cd64:d312:1942:cafb/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::f239:9293:5b9c:14ba/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::f907:1153:530:3fda/64 scope link noprefixroute

valid_lft forever preferred_lft forever

slave1:

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.20

Master_User: rep

Master_Port: 3306

Connect_Retry: 60

slave2:

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.20

Master_User: rep

Master_Port: 3306

Connect_Retry: 60

Start the mysql service on master1 and watch the monitor log; the entries about master1 are:

[root@monitor ~]# tail -f /var/log/mysql-mmm/mmm_mond.log

2021/03/10 13:30:47 INFO Check 'mysql' on 'master1' is ok!

2021/03/10 13:30:48 FATAL State of host 'master1' changed from HARD_OFFLINE to AWAITING_RECOVERY

2021/03/10 13:30:48 INFO Check 'rep_threads' on 'master1' is ok!

2021/03/10 13:30:48 INFO Check 'rep_backlog' on 'master1' is ok!

The log shows that master1 changed from HARD_OFFLINE to AWAITING_RECOVERY.

Bring the server online with:

[root@monitor ~]# mmm_control set_online master1

Check the latest cluster status:

[root@monitor ~]# mmm_control show

master1(192.168.1.10) master/ONLINE. Roles:

master2(192.168.1.20) master/ONLINE. Roles: reader(192.168.1.63), writer(192.168.1.61)

slave1(192.168.1.30) slave/ONLINE. Roles: reader(192.168.1.62)

slave2(192.168.1.40) slave/ONLINE. Roles: reader(192.168.1.64)

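As shown, the recovered former master does not take the writer role back; it only becomes the writer again if the current writer goes down.

Summary

(1) If master2 (the standby master) goes down, the cluster state is unaffected; only master2's reader role is removed.

(2) If master1 (the active master) goes down, master2 takes over the writer role, and slave1 and slave2 automatically change master to master2 and replicate from it.

(3) If master1 goes down while master2 is still behind master1 in applying replicated changes, master2 nevertheless becomes the writable master, so data consistency cannot be guaranteed. Likewise, if master2, slave1 and slave2 all lag behind master1 when it goes down, slave1 and slave2 first wait until they have caught up with master1 and then repoint to the new master, master2; consistency cannot be guaranteed in that case either.

(4) When adopting MMM, give the active master and the standby master identical hardware, and consider enabling semi-synchronous replication for extra safety, or using the multi-threaded slave replication of MariaDB/MySQL 5.7 to improve replication performance.

Appendix:

1. Log files: log files are often the key to diagnosing errors, so make good use of them.

db hosts: /var/log/mysql-mmm/mmm_agentd.log

monitor host: /var/log/mysql-mmm/mmm_mond.log

2. Command files:

mmm_agentd: start file of the db agent process

mmm_mond: start file of the monitor process

mmm_backup: backup tool

mmm_restore: restore tool

mmm_control: monitor control command

The db servers only have mmm_agentd; the others live on the monitor server.

3. mmm_control usage

mmm_control can be used to check cluster status, switch the writer, set hosts online or offline, and so on.

Valid commands are:

help - show this message #help text

ping - ping monitor #check whether the cluster monitor responds

show - show status #show the online status of the cluster

checks [<host>|all [<check>|all]] - show checks status #show the results of the monitoring checks

set_online <host> - set host <host> online #set the host to online

set_offline <host> - set host <host> offline #set the host to offline

mode - print current mode. #print the current mode

set_active - switch into active mode.

set_manual - switch into manual mode.

set_passive - switch into passive mode.

move_role [--force] <role> <host> - move exclusive role <role> to host <host> #move the writer role to the given host (Only use --force if you know what you are doing!)

set_ip <ip> <host> - set role with ip <ip> to host <host>

Check the status of all db servers in the cluster: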

[root@monitor ~]# mmm_control checks all

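The checks cover ping, whether MySQL is running, whether the replication threads are healthy, and so on.

Check the online status of the cluster: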

[root@monitor ~]# mmm_control show
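For scripted monitoring, the output of mmm_control show can be filtered for hosts that are not ONLINE. This is a rough sketch, assuming bash and awk and the line layout seen in the outputs earlier in this article (name(ip) mode/STATE. Roles: ...); hosts_not_online is a hypothetical helper, and the parsing would need adjusting if another MMM version formats the line differently.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "mmm_control show" output on stdin and print the
# name of every host whose state is not ONLINE.
hosts_not_online() {
    awk 'NF >= 2 {
        name = $1; sub(/\(.*/, "", name)      # "master1(192.168.1.10)" -> "master1"
        split($2, part, "/")                  # "master/HARD_OFFLINE."  -> mode, state
        state = part[2]; sub(/\.$/, "", state)
        if (state != "ONLINE") print name
    }'
}

# Example (on the monitor host):
#   mmm_control show | hosts_not_online
```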

Take a given host offline:

[root@monitor ~]# mmm_control set_offline slave2

Bring a given host online:

[root@monitor ~]# mmm_control set_online slave2

Switch the writer manually. First check which master the slave currently replicates from:

[root@slave2 ~]# mysql -uroot -p123.com -e 'show slave status\G;'

mysql: [Warning] Using a password on the command line interface can be insecure.

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.20

To switch the writer, the target host must be listed under the writer role in mmm_common.conf; otherwise the switch is not possible.

[root@monitor ~]# mmm_control move_role writer master1

OK: Role 'writer' has been moved from 'master2' to 'master1'. Now you can wait

some time and check new roles info!

[root@monitor ~]# mmm_control show

master1(192.168.1.10) master/ONLINE. Roles: writer(192.168.1.61)

master2(192.168.1.20) master/ONLINE. Roles: reader(192.168.1.63)

slave1(192.168.1.30) slave/ONLINE. Roles: reader(192.168.1.62)

slave2(192.168.1.40) slave/ONLINE. Roles: reader(192.168.1.64)

The slave switched to the new master automatically:

[root@slave2 ~]# mysql -uroot -p123.com -e 'show slave status\G;'

mysql: [Warning] Using a password on the command line interface can be insecure.

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.10

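4. Other issues

If you do not want the writer to switch from the master to the backup master (replication lag can also trigger a switch of the write VIP), remove the backup host from the <role writer> section of /etc/mysql-mmm/mmm_common.conf:

<role writer> #writer role configuration

hosts master1 #only one host is configured here

ips 192.168.31.2 #virtual IP for writes

mode exclusive #exclusive means only one host holds the role at a time, so there is only one write IP

</role>

With this configuration, when master1 fails the writer role does not switch to master2 and the slaves do not repoint to a new master; MMM then has no writer and serves reads only.

5. Summary

1. The read and write virtual IPs are controlled by the monitor. If the monitor is not running, the db servers are not assigned virtual IPs. If the IPs have already been assigned and the monitor is then stopped, the assigned virtual IPs are not removed immediately and clients can still connect (as long as the network is not restarted). The benefit is that the monitor itself needs less reliability; however, if one of the db servers fails during that time, no switchover takes place: the virtual IPs stay where they are and the failed server's virtual IP becomes unreachable.

2. The agent is driven by the monitor to carry out writer switches, slave repointing, and similar operations. If the monitor process is stopped, the agents cannot do much; they cannot handle failures on their own.

3. The monitor watches the state of the db servers, including the MySQL service, whether the server is up, whether the replication threads are healthy, and replication lag; it also directs the agents in handling failures.

4. The monitor checks the db servers every few seconds. When a db server recovers from a failure it enters AWAITING_RECOVERY; whether and when the monitor then sets it ONLINE automatically is decided by the auto_set_online parameter (a number of seconds) in the monitor configuration file. With auto_set_online 0, as in the failover test above, the host stays in AWAITING_RECOVERY until it is set online manually. The three host states seen here are HARD_OFFLINE → AWAITING_RECOVERY → ONLINE.

5. By default the monitor has mmm_agent set read_only to OFF on the writer db server and to ON on the other db servers, so to be safe add read_only=1 to my.cnf on all servers and let the monitor decide which host writes and which hosts read. Users with the SUPER privilege (such as root) and the replication threads are not affected by read_only.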
