Server setup

See: FT2000+ arm64 server, openEuler: deploying a single-node Hadoop pseudo-cluster and benchmarking it (hkNaruto's blog, CSDN)

Building the FUSE-related programs

Download the source

wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.1/hadoop-3.3.1-src.tar.gz
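Before extracting, it may be worth verifying the tarball. The sketch below uses a hypothetical helper, verify_sha512 (not part of Hadoop), and assumes the matching .sha512 file has been downloaded from the same release directory. Note that dlcdn.apache.org only carries current releases, so an older version such as 3.3.1 may only be available under archive.apache.org/dist/hadoop/common/.

```bash
# Hypothetical helper (not part of Hadoop): check a download against its
# Apache .sha512 file. Returns 0 on match, 1 on mismatch, 2 if no digest found.
verify_sha512() {
    local file=$1 checksum_file=$2 expected actual
    # Apache .sha512 files use different layouts (GNU "hash  name",
    # BSD "SHA512 (name) = hash", or gpg-style upper-case groups), so drop all
    # whitespace and take the first run of 128 hex digits, lower-cased.
    expected=$(tr -d '[:space:]' < "$checksum_file" \
        | grep -oiE '[0-9a-f]{128}' | head -n 1 | tr 'A-F' 'a-f')
    if [ -z "$expected" ]; then
        echo "no SHA-512 digest found in $checksum_file" >&2
        return 2
    fi
    actual=$(sha512sum "$file" | cut -d ' ' -f 1)
    if [ "$expected" = "$actual" ]; then
        echo "OK: $file"
        return 0
    fi
    echo "MISMATCH: $file" >&2
    return 1
}

# Usage (the .sha512 file is assumed to sit next to the tarball):
#   wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.1/hadoop-3.3.1-src.tar.gz.sha512
#   verify_sha512 hadoop-3.3.1-src.tar.gz hadoop-3.3.1-src.tar.gz.sha512
```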

tar -xvf hadoop-3.3.1-src.tar.gz

Install tools and build dependencies

C/C++

yum install -y gcc gcc-c++ make cmake

yum install -y openssl-devel protobuf-devel protobuf-c-devel cyrus-sasl-devel fuse fuse-devel fuse-libs

Java, Maven

yum install -y java-1.8.0-openjdk-devel

# Download

wget https://dlcdn.apache.org/maven/maven-3/3.8.6/binaries/apache-maven-3.8.6-bin.tar.gz

# Install manually

tar -xvf apache-maven-3.8.6-bin.tar.gz -C /usr/local/

# Configure environment variables

echo "export JAVA_HOME=/usr/lib/jvm/java-1.8.0" >> /etc/profile

echo "export PATH=\$PATH:/usr/local/apache-maven-3.8.6/bin" >> /etc/profile

source /etc/profile
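Each echo ... >> /etc/profile above adds another copy of the line every time the step is repeated. A hypothetical append_once helper (an assumption, not the author's method) only appends a line that is not already present:

```bash
# Hypothetical helper: append LINE to FILE only if that exact line is not
# already there, so re-running the setup does not duplicate entries.
append_once() {
    local line=$1 file=$2
    # -x: whole-line match, -F: fixed string (no regex), -q: quiet
    if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
        return 0
    fi
    printf '%s\n' "$line" >> "$file"
}

# Usage (as root), equivalent to the commands above; single quotes keep
# $PATH literal, like the \$PATH in the original echo:
#   append_once 'export JAVA_HOME=/usr/lib/jvm/java-1.8.0' /etc/profile
#   append_once 'export PATH=$PATH:/usr/local/apache-maven-3.8.6/bin' /etc/profile
#   . /etc/profile
```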

Configure the Aliyun Maven mirror

vi /usr/local/apache-maven-3.8.6/conf/settings.xml

Insert the following inside the mirrors element:

<mirror>
  <id>aliyunmaven</id>
  <mirrorOf>*</mirrorOf>
  <name>Aliyun public repository</name>
  <url>https://maven.aliyun.com/repository/public</url>
</mirror>

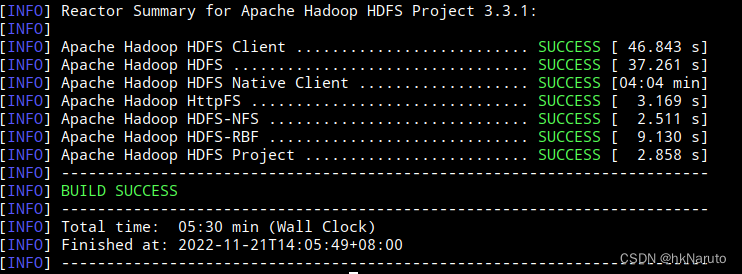
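Editing the global settings.xml works; a per-user file is an alternative that survives reinstalling Maven. The hypothetical helper below writes ~/.m2/settings.xml (/root/.m2 when building as root, as here) containing only the Aliyun mirror. Maven merges user and global settings, with the user file taking precedence. The helper refuses to overwrite an existing file.

```bash
# Hypothetical helper: write a per-user Maven settings file that contains only
# the Aliyun mirror. Maven merges this file with the global
# /usr/local/apache-maven-3.8.6/conf/settings.xml; user settings win.
write_maven_mirror_settings() {
    local target=${1:-$HOME/.m2/settings.xml}
    # Do not clobber an existing file; merge the mirror in by hand instead.
    if [ -e "$target" ]; then
        echo "$target already exists, not overwriting" >&2
        return 1
    fi
    mkdir -p "$(dirname "$target")" || return 1
    cat > "$target" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
  <mirrors>
    <mirror>
      <id>aliyunmaven</id>
      <mirrorOf>*</mirrorOf>
      <name>Aliyun public repository</name>
      <url>https://maven.aliyun.com/repository/public</url>
    </mirror>
  </mirrors>
</settings>
EOF
}

# Usage: write_maven_mirror_settings    # writes ~/.m2/settings.xml
```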

Build the native libraries

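hadoop-hdfs-native-client (note: -DskipTests=true skips running the unit tests, because some of them crash the JVM; this is not yet resolved. -Dmaven.test.skip=true goes further and also skips compiling the tests.)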

cd /root/hadoop-3.3.1-src/hadoop-hdfs-project/

mvn clean package -T12 -Pnative -Dmaven.test.skip=true -DskipTests=true
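With the tests skipped, a quick way to confirm the build produced what the next step copies is to check for the files. The helper name and the libhdfs.so file name are assumptions; the directories are the ones used in this post's cp commands, relative to hadoop-hdfs-native-client.

```bash
# Hypothetical helper: confirm the native build produced the files that the
# manual install step copies. Exit status = number of missing files.
check_native_artifacts() {
    local client_dir=${1:-/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client}
    local missing=0 f
    for f in \
        src/main/native/fuse-dfs/fuse_dfs_wrapper.sh \
        target/main/native/fuse-dfs/fuse_dfs \
        target/native/target/usr/local/lib/libhdfs.so
    do
        if [ -e "$client_dir/$f" ]; then
            echo "found:   $f"
        else
            echo "MISSING: $f"
            missing=$((missing + 1))
        fi
    done
    return "$missing"
}
```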

Install fuse_dfs and its wrapper script manually

cd /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client

cp src/main/native/fuse-dfs/fuse_dfs_wrapper.sh /usr/local/bin/

cp target/main/native/fuse-dfs/fuse_dfs /usr/local/bin/

cp -vP target/native/target/usr/local/lib/* /usr/local/lib/

cp /usr/lib/jvm/java-1.8.0/jre/lib/aarch64/server/libjvm.so /usr/local/lib/

Configure ldconfig

echo /usr/local/lib >> /etc/ld.so.conf

ldconfig
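After ldconfig, ldd shows whether fuse_dfs can resolve libhdfs and libjvm. This small filter (a hypothetical helper) lists only the unresolved entries; empty output means everything resolves.

```bash
# Hypothetical helper: read `ldd` output on stdin and print the shared
# libraries the dynamic loader could not resolve.
missing_shared_libs() {
    # ldd prints "libfoo.so => not found" for unresolved dependencies
    awk '/=> not found/ { print $1 }'
}

# Usage:
#   ldd /usr/local/bin/fuse_dfs | missing_shared_libs
```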

Download hadoop-3.3.1-aarch64 (the prebuilt binary release; its bin/hadoop is used below to build CLASSPATH)

cd ~

wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.1/hadoop-3.3.1-aarch64.tar.gz

tar -xvf hadoop-3.3.1-aarch64.tar.gz

Stress test

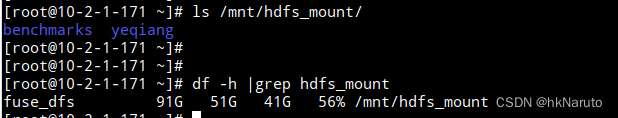
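The stress-test results are only in the screenshot, and the tool used is not stated. As a rough, hypothetical complement (not the tool behind those numbers), a sequential write through the mount can be timed with dd. This assumes GNU dd and date, and that the mount accepts fsync.

```bash
# Hypothetical helper: crude sequential-write throughput on a directory such
# as /mnt/hdfs_mount. Prints an integer rate in MiB/s.
seq_write_mibps() {
    local dir=$1 size_mib=${2:-256}
    local f="$dir/.seq_write_test.$$" start end elapsed_ns
    start=$(date +%s%N)
    # conv=fsync flushes the data before dd exits, so the timing covers the
    # write-back and not only the copy into the page cache
    dd if=/dev/zero of="$f" bs=1M count="$size_mib" conv=fsync status=none || return 1
    end=$(date +%s%N)
    rm -f "$f"
    elapsed_ns=$((end - start))
    [ "$elapsed_ns" -gt 0 ] || elapsed_ns=1
    echo $((size_mib * 1000000000 / elapsed_ns))
}

# Usage: seq_write_mibps /mnt/hdfs_mount 1024
```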

Mount

mkdir /mnt/hdfs_mount

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

export HADOOP_HOME=/root/hadoop-3.3.1

export CLASSPATH=$(/root/hadoop-3.3.1/bin/hadoop classpath --glob)

export OS_ARCH=aarch64
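fuse_dfs starts a JVM through libhdfs, so the Hadoop client jars must be on CLASSPATH; troubleshooting item 1 below shows what happens when only the hadoop-hdfs-project jars are there. A hypothetical check before mounting (the jar-name prefixes are assumptions based on standard Hadoop jar names):

```bash
# Hypothetical helper: succeed if the colon-separated classpath (default:
# $CLASSPATH) contains a jar whose file name starts with PREFIX.
classpath_has_jar() {
    local prefix=$1 rest=${2-$CLASSPATH} entry
    while [ -n "$rest" ]; do
        entry=${rest%%:*}               # first entry (may be empty)
        case "$rest" in
            *:*) rest=${rest#*:} ;;     # drop it and its colon
            *)   rest= ;;
        esac
        case "${entry##*/}" in
            "$prefix"*.jar) return 0 ;;
        esac
    done
    return 1
}

# Usage:
#   export CLASSPATH=$(/root/hadoop-3.3.1/bin/hadoop classpath --glob)
#   classpath_has_jar hadoop-common- && classpath_has_jar hadoop-hdfs-client- && echo ok
```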

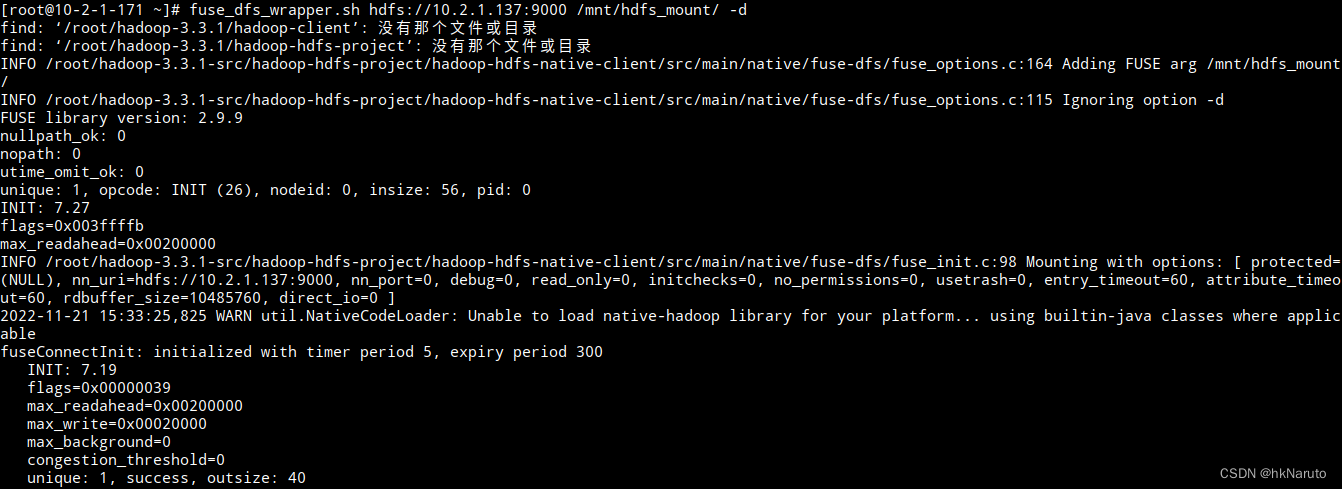

fuse_dfs_wrapper.sh hdfs://10.2.1.137:9000 /mnt/hdfs_mount/ -d
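With -d, fuse_dfs stays in the foreground and prints debug output, so the mount can be confirmed from a second shell by looking it up in /proc/mounts. The helper is hypothetical, and it assumes the file system type starts with fuse (typically fuse or fuse.name).

```bash
# Hypothetical helper: succeed if DIR is a FUSE mount according to
# /proc/mounts (or another file in the same format).
is_fuse_mounted() {
    local dir=${1%/} mounts=${2:-/proc/mounts}
    # /proc/mounts fields: source mountpoint fstype options dump pass
    awk -v d="$dir" '$2 == d && $3 ~ /^fuse/ { found = 1 } END { exit !found }' "$mounts"
}

# Usage: is_fuse_mounted /mnt/hdfs_mount/ && ls /mnt/hdfs_mount
```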

Troubleshooting

1. fuse_init.c:134 FATAL: dfs_init: fuseConnectInit failed with error -22!

Run with -d to see the detailed log:

[root@10-2-1-171 hadoop-hdfs-project]# fuse_dfs_wrapper.sh dfs://10.2.1.137:9000 /mnt/hdfs_mount -d

find: ‘/root/hadoop-3.3.1-src/hadoop-client’: No such file or directory

INFO /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/fuse-dfs/fuse_options.c:164 Adding FUSE arg /mnt/hdfs_mount

INFO /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/fuse-dfs/fuse_options.c:115 Ignoring option -d

FUSE library version: 2.9.9

nullpath_ok: 0

nopath: 0

utime_omit_ok: 0

unique: 1, opcode: INIT (26), nodeid: 0, insize: 56, pid: 0

INIT: 7.27

flags=0x003ffffb

max_readahead=0x00200000

INFO /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/fuse-dfs/fuse_init.c:98 Mounting with options: [ protected=(NULL), nn_uri=hdfs://10.2.1.137:9000, nn_port=0, debug=0, read_only=0, initchecks=0, no_permissions=0, usetrash=0, entry_timeout=60, attribute_timeout=60, rdbuffer_size=10485760, direct_io=0 ]

could not find method getRootCauseMessage from class (null) with signature (Ljava/lang/Throwable;)Ljava/lang/String;

could not find method getStackTrace from class (null) with signature (Ljava/lang/Throwable;)Ljava/lang/String;

FileSystem: loadFileSystems failed error:

(unable to get root cause for java.lang.NoClassDefFoundError)

(unable to get stack trace for java.lang.NoClassDefFoundError)

getJNIEnv: getGlobalJNIEnv failed

Unable to determine the configured value for hadoop.fuse.timer.period.

ERROR /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/fuse-dfs/fuse_init.c:134 FATAL: dfs_init: fuseConnectInit failed with error -22!

ERROR /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/fuse-dfs/fuse_init.c:34 LD_LIBRARY_PATH=/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/target/usr/local/lib:/usr/lib/jvm/java-1.8.0/jre/lib/aarch64/server

ERROR /root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/src/main/native/fuse-dfs/fuse_init.c:35 CLASSPATH=::/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-native-client/target/hadoop-hdfs-native-client-3.3.1.jar:/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-nfs/target/hadoop-hdfs-nfs-3.3.1.jar:/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-client/target/hadoop-hdfs-client-3.3.1.jar:/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-httpfs/target/hadoop-hdfs-httpfs-3.3.1.jar:/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs/target/hadoop-hdfs-3.3.1.jar:/root/hadoop-3.3.1-src/hadoop-hdfs-project/hadoop-hdfs-rbf/target/hadoop-hdfs-rbf-3.3.1.jar
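When the debug log is long, a hypothetical grep-based helper can flag the failure signatures seen in this post. Error -22 is -EINVAL on Linux; the real cause is in the lines before it. The pattern list only covers the two cases described here.

```bash
# Hypothetical helper: scan a saved fuse_dfs debug log for the failure
# signatures described in this post and print the fix that worked here.
# Returns 0 if at least one known signature was found, 1 otherwise.
diagnose_fuse_dfs_log() {
    local log=$1 hits=0
    if grep -q 'java.lang.NoClassDefFoundError' "$log"; then
        echo 'Hadoop classes missing: export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath --glob)'
        hits=$((hits + 1))
    fi
    # The second pattern is the zh_CN form of the same message.
    if grep -qE 'No route to host|没有到主机的路由' "$log"; then
        echo 'NameNode unreachable: check dfs.namenode.rpc-bind-host and the firewall on the NameNode host'
        hits=$((hits + 1))
    fi
    if grep -q 'fuseConnectInit failed with error -22' "$log"; then
        echo 'fuseConnectInit returned -22 (EINVAL): the cause is in the lines before it'
        hits=$((hits + 1))
    fi
    [ "$hits" -gt 0 ]
}

# Usage: keep a copy of the -d output, then scan it
#   fuse_dfs_wrapper.sh hdfs://10.2.1.137:9000 /mnt/hdfs_mount -d 2>&1 | tee /tmp/fuse_dfs.log
#   diagnose_fuse_dfs_log /tmp/fuse_dfs.log
```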

Solution:

Download hadoop-3.3.1-aarch64, extract it, and set CLASSPATH from its hadoop command:

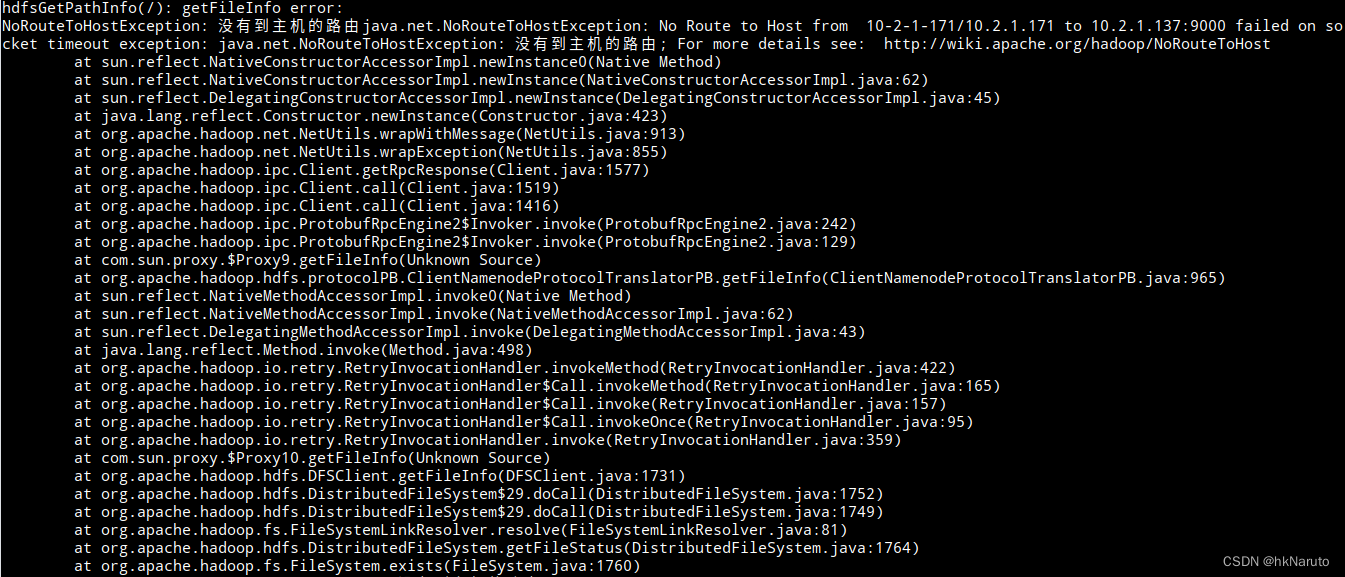

export CLASSPATH=$(/root/hadoop-3.3.1/bin/hadoop classpath --glob)

2. ls /mnt/hdfs_mount fails with "ls: cannot access '/mnt/hdfs_mount': No such file or directory" (No route to host)

Terminal log output when started with -d

Port 9000 status on the target machine
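On the NameNode host, ss -ltn shows which address port 9000 is bound to. If it is only loopback (for example because the host name in fs.defaultFS resolves to 127.0.0.1), remote clients cannot connect. A hypothetical filter over the ss output:

```bash
# Hypothetical helper: read `ss -ltn` output on stdin, print the local
# address(es) listening on PORT. Exit 1 if only loopback is bound,
# exit 2 if nothing listens on PORT at all.
listen_addrs() {
    local port=${1:-9000}
    awk -v port="$port" -v suffix=":$port" '
        # skip the header; column 4 is "Local Address:Port"
        NR > 1 && length($4) > length(suffix) &&
        substr($4, length($4) - length(suffix) + 1) == suffix {
            addr = substr($4, 1, length($4) - length(suffix))
            print addr
            seen = 1
            if (addr !~ /^(127\.|\[::1\]$|\[::ffff:127\.)/) reachable = 1
        }
        END {
            if (!seen) { print "nothing is listening on port " port > "/dev/stderr"; exit 2 }
            if (!reachable) { print "only loopback is bound" > "/dev/stderr"; exit 1 }
        }'
}

# Usage (on the NameNode host): ss -ltn | listen_addrs 9000
```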

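Change the NameNode configuration on the target machine

vim etc/hadoop/hdfs-site.xml and add:

<property>
  <name>dfs.namenode.rpc-bind-host</name>
  <value>0.0.0.0</value>
</property>

dfs.namenode.rpc-bind-host overrides the host part of the address the NameNode RPC server binds to; 0.0.0.0 makes it listen on all interfaces. Then restart the NameNode.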

Temporarily stop the firewall

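systemctl stop firewalld

After this the mount works. Stopping the service does not disable it, so firewalld comes back after a reboot if it is enabled.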
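Stopping firewalld opens every port. A narrower option, assuming firewalld manages the NameNode host, is to open only 9000/tcp; firewalld's default reject rule typically answers with an ICMP host-prohibited message, which clients report as No route to host. The probe below is a hypothetical helper that uses bash's /dev/tcp redirection plus timeout to test the port from the client side.

```bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens within
# SECS seconds. Uses bash's /dev/tcp, so bash must be installed.
port_reachable() {
    local host=$1 port=$2 secs=${3:-3}
    # inside bash -c, $0 is the host and $1 the port
    timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# On the NameNode host, instead of stopping firewalld:
#   firewall-cmd --permanent --add-port=9000/tcp
#   firewall-cmd --reload
# Then, from the FUSE client:
#   port_reachable 10.2.1.137 9000 && echo reachable
```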
