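Preface: after following the document "零基础入门darknet-YOLO3及YOLOv3-Tiny" (getting started with darknet YOLOv3 and YOLOv3-Tiny from scratch), we already have our own trained YOLOv3 or YOLOv3-Tiny model. This article shows, step by step, how to convert that model and adapt it to khadas_android_npu_app on the Android platform of the Khadas VIM3 board. For the VIM3 Ubuntu platform, please refer to this document [1].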

I. Convert the model

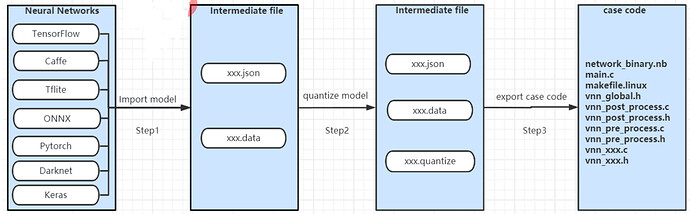

Figure above: model conversion flowchart.

1. Import the model

The whole model conversion is carried out inside the acuity-toolkit directory. First copy the model files and a test image into demo/model/:

    cd {workspace}/aml_npu_sdk/acuity-toolkit
    cp {workspace}/yolov3-khadas_ai.cfg_train demo/model/
    cp {workspace}/yolov3-khadas_ai_last.weights demo/model/
    cp {workspace}/test.jpg demo/model/
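If you repeat this step often, you can wrap the copies in a small helper that stops as soon as one of the three files is missing. This is only a sketch. The function name `stage_model_files` and its two arguments (your workspace directory and the `aml_npu_sdk` directory) are hypothetical. The file names are the ones used above.

```shell
#!/bin/bash
# Hypothetical helper: copy the darknet cfg, the trained weights and a test
# image into acuity-toolkit/demo/model/, failing on the first missing file.
stage_model_files() {
    local workspace="$1"   # directory holding the trained files (assumption)
    local sdk_dir="$2"     # path to aml_npu_sdk (assumption)
    local dest="${sdk_dir}/acuity-toolkit/demo/model"
    local f
    mkdir -p "${dest}" || return 1
    for f in yolov3-khadas_ai.cfg_train yolov3-khadas_ai_last.weights test.jpg; do
        if [ ! -f "${workspace}/${f}" ]; then
            echo "missing: ${workspace}/${f}" >&2
            return 1
        fi
        cp "${workspace}/${f}" "${dest}/" || return 1
    done
}
```

Example call, using the same placeholder as above: `stage_model_files {workspace} {workspace}/aml_npu_sdk`.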

Make the following changes:

    hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff
    diff --git a/acuity-toolkit/demo/0_import_model.sh b/acuity-toolkit/demo/0_import_model.sh
    index 3198810..4671c9f 100755
    --- a/acuity-toolkit/demo/0_import_model.sh
    +++ b/acuity-toolkit/demo/0_import_model.sh
    @@ -1,6 +1,6 @@
     #!/bin/bash
    -NAME=mobilenet_tf
    +NAME=yolov3
     ACUITY_PATH=../bin/
     convert_caffe=${ACUITY_PATH}convertcaffe
    @@ -11,13 +11,13 @@ convert_onnx=${ACUITY_PATH}convertonnx
     convert_keras=${ACUITY_PATH}convertkeras
     convert_pytorch=${ACUITY_PATH}convertpytorch
    -$convert_tf \
    - --tf-pb ./model/mobilenet_v1.pb \
    - --inputs input \
    - --input-size-list '224,224,3' \
    - --outputs MobilenetV1/Predictions/Softmax \
    - --net-output ${NAME}.json \
    - --data-output ${NAME}.data
    +#$convert_tf \
    +# --tf-pb ./model/mobilenet_v1.pb \
    +# --inputs input \
    +# --input-size-list '224,224,3' \
    +# --outputs MobilenetV1/Predictions/Softmax \
    +# --net-output ${NAME}.json \
    +# --data-output ${NAME}.data
     #$convert_caffe \
     # --caffe-model xx.prototxt \
    @@ -30,11 +30,11 @@ $convert_tf \
     # --net-output ${NAME}.json \
     # --data-output ${NAME}.data
    -#$convert_darknet \
    -# --net-input xxx.cfg \
    -# --weight-input xxx.weights \
    -# --net-output ${NAME}.json \
    -# --data-output ${NAME}.data
    +$convert_darknet \
    + --net-input ./model/yolov3-khadas_ai.cfg_train \
    + --weight-input ./model/yolov3-khadas_ai_last.weights \
    + --net-output ${NAME}.json \
    + --data-output ${NAME}.data
    --- a/acuity-toolkit/demo/data/validation_tf.txt
    +++ b/acuity-toolkit/demo/data/validation_tf.txt
    @@ -1 +1 @@
    -./space_shuttle_224.jpg, 813
    +./test.jpg

From the demo directory, run the corresponding script:

    bash 0_import_model.sh
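With NAME=yolov3, the import script is configured through --net-output ${NAME}.json and --data-output ${NAME}.data to write `yolov3.json` and `yolov3.data`. Assuming those land in the demo directory, the following minimal check confirms that both exist and are non-empty before you continue. `require_outputs` is a hypothetical helper and is not part of acuity-toolkit.

```shell
#!/bin/bash
# Hypothetical helper: fail if any of the given output files is missing or empty.
require_outputs() {
    local missing=0
    local f
    for f in "$@"; do
        if [ ! -s "$f" ]; then
            echo "missing or empty: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

After defining the function in your shell, you could run: `bash 0_import_model.sh && require_outputs yolov3.json yolov3.data`.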

2. Quantize the model

    hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff
    diff --git a/acuity-toolkit/demo/1_quantize_model.sh b/acuity-toolkit/demo/1_quantize_model.sh
    index 630ea7f..ee7bd00 100755
    --- a/acuity-toolkit/demo/1_quantize_model.sh
    +++ b/acuity-toolkit/demo/1_quantize_model.sh
    @@ -1,6 +1,6 @@
     #!/bin/bash
    -NAME=mobilenet_tf
    +NAME=yolov3
     ACUITY_PATH=../bin/
     tensorzone=${ACUITY_PATH}tensorzonex
    @@ -11,12 +11,12 @@ $tensorzone \
     --dtype float32 \
     --source text \
     --source-file data/validation_tf.txt \
    - --channel-mean-value '128 128 128 128' \
    - --reorder-channel '0 1 2' \
    + --channel-mean-value '0 0 0 256' \
    + --reorder-channel '2 1 0' \
     --model-input ${NAME}.json \
     --model-data ${NAME}.data \
     --model-quantize ${NAME}.quantize \
    - --quantized-dtype asymmetric_affine-u8 \
    + --quantized-dtype dynamic_fixed_point-i8 \

Note that --quantized-dtype here (dynamic_fixed_point-i8) differs from the setting used on the Ubuntu platform. Run the corresponding script:

    bash 1_quantize_model.sh
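The quantize diff also changes the input preprocessing. --channel-mean-value goes from '128 128 128 128' to '0 0 0 256', and --reorder-channel goes from '0 1 2' to '2 1 0'. This sketch assumes the four values are three per-channel means followed by a scale, applied as (x - mean) / scale. That reading is consistent with the MobileNet default mapping 8-bit pixels to roughly [-1, 1]. Under that assumption, '0 0 0 256' maps pixels into [0, 1), close to darknet's division by 255. '2 1 0' presumably reverses the channel order (BGR to RGB). The awk snippet only evaluates that assumed formula. `normalize_pixel` is a hypothetical name.

```shell
#!/bin/bash
# Sketch, ASSUMING --channel-mean-value 'm0 m1 m2 s' means y = (x - m) / s per channel.
normalize_pixel() {
    # $1 = raw 8-bit pixel value, $2 = channel mean, $3 = scale
    awk -v x="$1" -v m="$2" -v s="$3" 'BEGIN { printf "%.4f\n", (x - m) / s }'
}

# Compare the MobileNet default ('128 ... 128') with the YOLOv3 setting ('0 0 0 256').
for x in 0 128 255; do
    echo "x=$x  mobilenet: $(normalize_pixel "$x" 128 128)  yolov3: $(normalize_pixel "$x" 0 256)"
done
```

For x=255 this gives 0.9922 with the MobileNet values and 0.9961 with the YOLOv3 values.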

3. Generate the case code

    hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff
    diff --git a/acuity-toolkit/demo/2_export_case_code.sh b/acuity-toolkit/demo/2_export_case_code.sh
    index 85b101b..867c5b9 100755
    --- a/acuity-toolkit/demo/2_export_case_code.sh
    +++ b/acuity-toolkit/demo/2_export_case_code.sh
    @@ -1,6 +1,6 @@
     #!/bin/bash
    -NAME=mobilenet_tf
    +NAME=yolov3
     ACUITY_PATH=../bin/
     export_ovxlib=${ACUITY_PATH}ovxgenerator
    @@ -8,8 +8,8 @@ export_ovxlib=${ACUITY_PATH}ovxgenerator
     $export_ovxlib \
     --model-input ${NAME}.json \
     --data-input ${NAME}.data \
    - --reorder-channel '0 1 2' \
    - --channel-mean-value '128 128 128 128' \
    + --reorder-channel '2 1 0' \
    + --channel-mean-value '0 0 0 256' \

Run the corresponding script:

    bash 2_export_case_code.sh
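The diffs set the same --reorder-channel '2 1 0' and --channel-mean-value '0 0 0 256' in both 1_quantize_model.sh and 2_export_case_code.sh. Below is a small sketch that checks the two scripts stay in sync after later edits. It simply greps the flag lines, so commented-out occurrences are compared too. The helper names `preprocess_flags` and `flags_match` are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers: compare the preprocessing flags of two acuity demo scripts.
preprocess_flags() {
    # Print the --reorder-channel / --channel-mean-value lines, leading spaces trimmed, sorted.
    grep -E -- '--(reorder-channel|channel-mean-value)' "$1" | sed -E 's/^[[:space:]]+//' | sort
}

flags_match() {
    local a b
    a=$(preprocess_flags "$1")
    b=$(preprocess_flags "$2")
    # Require at least one flag line, and identical sets in both files.
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Example: `flags_match 1_quantize_model.sh 2_export_case_code.sh && echo "preprocessing flags match"`.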

As long as the scripts run without errors, from then on you can apply the changes above and run all the scripts in one go:

    bash 0_import_model.sh && bash 1_quantize_model.sh && bas
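Here is one way to run the three demo scripts from this article in order and report which one failed. It is a sketch that assumes the current directory is acuity-toolkit/demo. `run_conversion` is a hypothetical function name.

```shell
#!/bin/bash
# Sketch: run the import, quantize and export scripts in order,
# stopping at the first one that exits with a non-zero status.
run_conversion() {
    local script
    for script in 0_import_model.sh 1_quantize_model.sh 2_export_case_code.sh; do
        echo "==> ${script}"
        if ! bash "${script}"; then
            echo "failed at ${script}" >&2
            return 1
        fi
    done
}
```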
