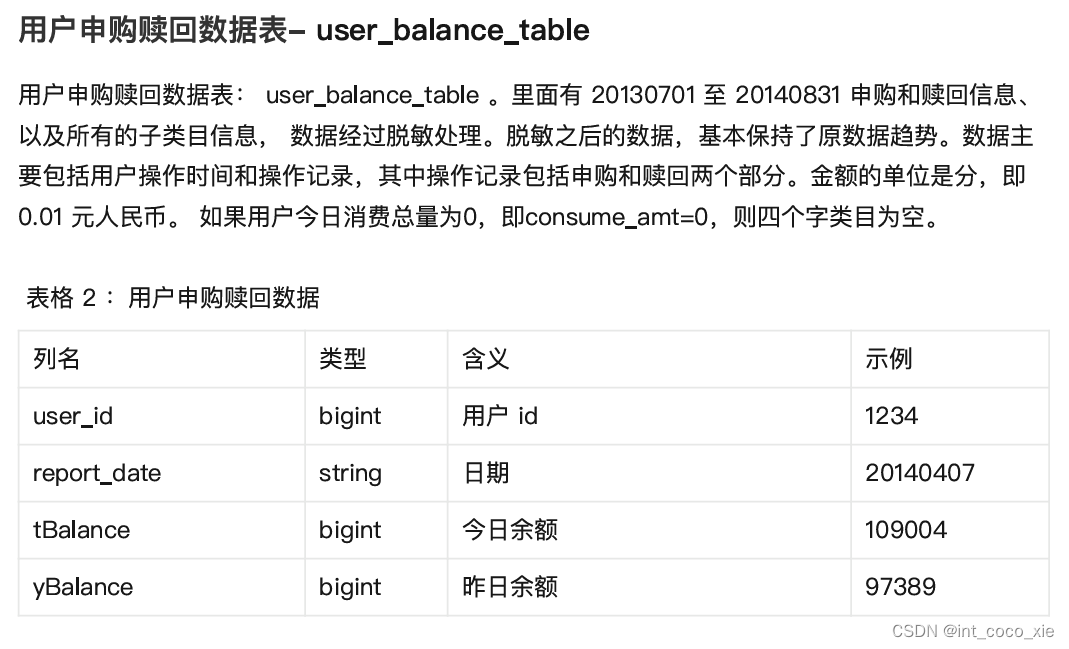

Data overview

Purchase and Redemption Data from Alipay (dataset on Alibaba Cloud Tianchi)

Yuque, login required (compressed data archive)

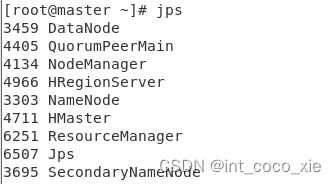

Task 1 - Design and create the HBase table

Start Hadoop, ZooKeeper and HBase, then enter the HBase shell

start-all.sh

zkServer.sh start

start-hbase.sh

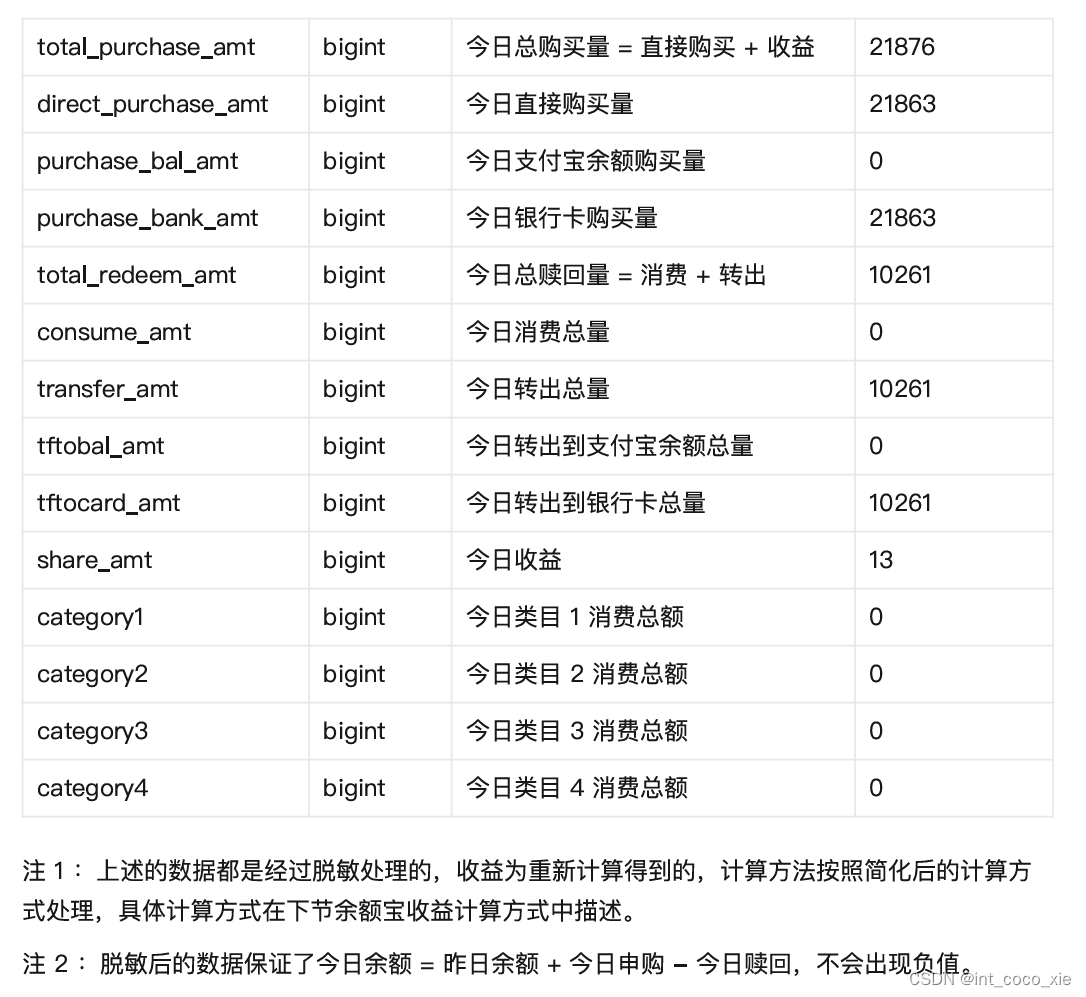

jps

hbase shell

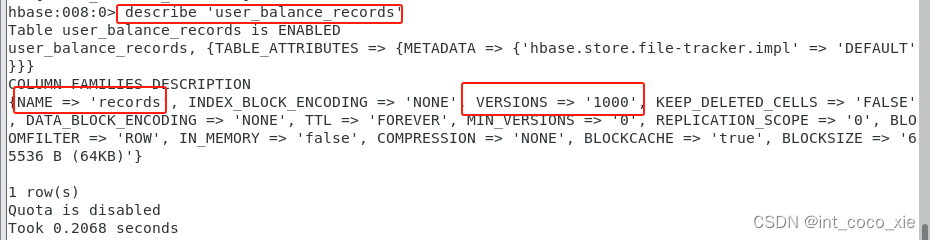

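Run the create-table command in the HBase shell. The table has a single column family, records, that keeps up to 1000 versions per cell.

create 'user_balance_records', {NAME => 'records', VERSIONS => 1000}

Inspect the table schema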

describe 'user_balance_records'

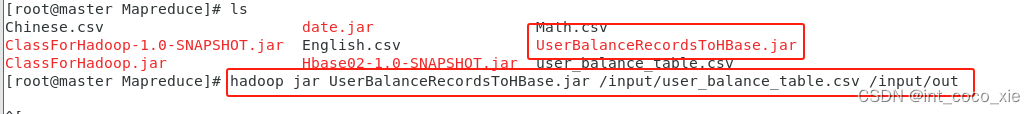
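The reason for VERSIONS=>1000 is easier to see with a toy model. The sketch below is not HBase code; it is a plain-Java illustration, under the assumption that each cell behaves like a map from timestamp to value that keeps at most a fixed number of the newest entries. The class name VersionedCellSketch and its methods are made up for this example, and real HBase prunes surplus versions lazily (at read and compaction time) rather than on every write.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.TreeMap;

/** Toy model of one HBase cell that keeps several timestamped versions. */
public class VersionedCellSketch {
    private final int maxVersions;
    // Newest timestamp first, which is also the order HBase returns versions in.
    private final TreeMap<Long, String> versions = new TreeMap<>(Collections.reverseOrder());

    public VersionedCellSketch(int maxVersions) {
        this.maxVersions = maxVersions;
    }

    /** Stores a value under a timestamp and drops the oldest versions beyond the limit. */
    public void put(long timestamp, String value) {
        versions.put(timestamp, value);
        while (versions.size() > maxVersions) {
            versions.pollLastEntry(); // last entry in reverse order = oldest timestamp
        }
    }

    /** Returns up to 'limit' values, newest first. */
    public List<String> get(int limit) {
        List<String> out = new ArrayList<>();
        for (String v : versions.values()) {
            if (out.size() == limit) {
                break;
            }
            out.add(v);
        }
        return out;
    }

    public static void main(String[] args) {
        VersionedCellSketch cell = new VersionedCellSketch(1000);
        cell.put(1L, "100"); // made-up values for three different days
        cell.put(2L, "250");
        cell.put(3L, "75");
        System.out.println(cell.get(1));    // latest version only
        System.out.println(cell.get(1000)); // every stored version
    }
}
```

With one version per report date, a limit of 1000 leaves plenty of room for a daily series per user; a read that asks for only one version would see just the most recent day.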

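Task 2 - Import the data into HBase

1. Command that runs the import job. Run it from the Linux shell, not inside the HBase shell. The main class below reads only the first argument (the input path); the second argument is not used.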

hadoop jar UserBalanceRecordsToHBase.jar /input/user_balance_table.csv /input/out

2. Main class of the JAR

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import java.io.IOException;
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

public class UserBalanceRecordsToHBase {

    public static class MyMapper extends Mapper<LongWritable, Text, ImmutableBytesWritable, Put> {

        private final SimpleDateFormat dateFormat = new SimpleDateFormat("yyyyMMdd");

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String[] fields = value.toString().split(",");
            // Skip malformed lines and the CSV header
            if (fields.length < 2 || fields[0].equals("user_id")) {
                return;
            }

            String userId = fields[0];
            String reportDate = fields[1];
            // The report date becomes the cell timestamp, so each day is stored as one version
            long timestamp = parseDateToTimestamp(reportDate);

            String[] columnNames = {
                "tBalance", "yBalance", "total_purchase_amt", "direct_purchase_amt",
                "purchase_bal_amt", "purchase_bank_amt", "total_redeem_amt",
                "consume_amt", "transfer_amt", "tftobal_amt", "tftocard_amt",
                "share_amt", "category1", "category2", "category3", "category4"
            };

            Put put = new Put(Bytes.toBytes(userId), timestamp);
            // Guard against rows with more fields than known column names
            for (int i = 2; i < fields.length && i - 2 < columnNames.length; i++) {
                if (!fields[i].isEmpty()) {
                    put.addColumn(Bytes.toBytes("records"), Bytes.toBytes(columnNames[i - 2]), timestamp,
                            Bytes.toBytes(fields[i]));
                }
            }

            // Write to context
            context.write(new ImmutableBytesWritable(Bytes.toBytes(userId)), put);
        }

        private long parseDateToTimestamp(String dateStr) {
            try {
                dateFormat.setTimeZone(TimeZone.getTimeZone("UTC"));
                Date date = dateFormat.parse(dateStr);
                return date.getTime();
            } catch (ParseException e) {
                throw new RuntimeException("Error parsing date: " + dateStr, e);
            }
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "192.168.125.129:2181");

        Job job = Job.getInstance(conf, "User Balance Records to HBase");

        // Increase the resources available to the job
        job.getConfiguration().set("mapreduce.map.memory.mb", "2048");
        job.getConfiguration().set("mapreduce.reduce.memory.mb", "4096");
        job.getConfiguration().set("mapreduce.map.cpu.vcores", "2");
        job.getConfiguration().set("mapreduce.reduce.cpu.vcores", "4");
        job.setNumReduceTasks(10);

        job.setJarByClass(UserBalanceRecordsToHBase.class);
        job.setMapperClass(MyMapper.class);
        job.setInputFormatClass(TextInputFormat.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));

        // Set the map output types
        job.setMapOutputKeyClass(ImmutableBytesWritable.class);
        job.setMapOutputValueClass(Put.class);

        TableMapReduceUtil.initTableReducerJob("user_balance_records", null, job);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
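To check what the mapper computes for one CSV line without a cluster, the per-line logic can be reproduced with the JDK alone. The following is a rough sketch, not the job's code: it swaps SimpleDateFormat for java.time (which is thread-safe), uses only the first three qualifiers, and the class name RowParsingSketch and the sample row are made up for illustration.

```java
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.Map;

public class RowParsingSketch {
    // Only the first three qualifiers, to keep the sketch short.
    private static final String[] COLUMNS = {"tBalance", "yBalance", "total_purchase_amt"};

    /** Converts a yyyyMMdd string to epoch milliseconds at UTC midnight. */
    public static long toTimestamp(String reportDate) {
        return LocalDate.parse(reportDate, DateTimeFormatter.BASIC_ISO_DATE)
                .atStartOfDay(ZoneOffset.UTC)
                .toInstant()
                .toEpochMilli();
    }

    /** Maps the non-empty value fields of one CSV line to their qualifiers. */
    public static Map<String, String> toCells(String line) {
        String[] fields = line.split(",");
        Map<String, String> cells = new LinkedHashMap<>();
        for (int i = 2; i < fields.length && i - 2 < COLUMNS.length; i++) {
            if (!fields[i].isEmpty()) {
                cells.put(COLUMNS[i - 2], fields[i]);
            }
        }
        return cells;
    }

    public static void main(String[] args) {
        String line = "1,20130701,500,,30"; // made-up sample row: user_id, report_date, values
        System.out.println(toTimestamp("20130701")); // 1372636800000
        System.out.println(toCells(line));           // {tBalance=500, total_purchase_amt=30}
    }
}
```

The empty fourth field is skipped, so no yBalance cell would be written for that day; the other values would all share the same timestamp.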

3. pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.blog</groupId>
    <artifactId>Hbase02</artifactId>
    <version>1.0-SNAPSHOT</version>
    <name>Hbase02</name>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.target>1.8</maven.compiler.target>
        <maven.compiler.source>1.8</maven.compiler.source>
        <junit.version>5.9.2</junit.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>javax.enterprise</groupId>
            <artifactId>cdi-api</artifactId>
            <version>2.0.SP1</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>javax.ws.rs</groupId>
            <artifactId>javax.ws.rs-api</artifactId>
            <version>2.1.1</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>javax.servlet</groupId>
            <artifactId>javax.servlet-api</artifactId>
            <version>4.0.1</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.junit.jupiter</groupId>
            <artifactId>junit-jupiter-api</artifactId>
            <version>${junit.version}</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.junit.jupiter</groupId>
            <artifactId>junit-jupiter-engine</artifactId>
            <version>${junit.version}</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-auth</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>2.5.8</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-mapreduce</artifactId>
            <version>2.5.8</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>2.5.8</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                    <archive>
                        <!-- <manifestFile>src/main/resources/META-INF/MANIFEST.MF/META-INF/MANIFEST.MF-->
                        <!-- <manifestFile>${project.build.outputDirectory}/META-INF/MANIFEST.MF</manifestFile>-->
                        <manifest>
                            <mainClass>UserBalanceRecordsToHBase</mainClass>
                        </manifest>
                    </archive>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id> <!-- this is used for inheritance merges -->
                        <phase>package</phase> <!-- bind to the packaging phase -->
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>

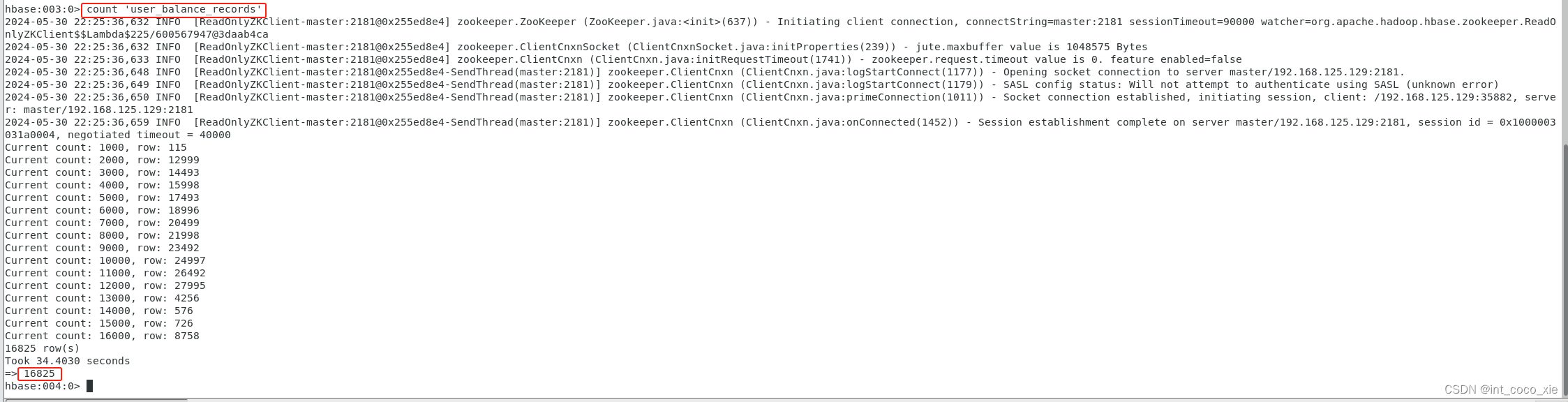

4. After the data has been imported successfully, use count in the HBase shell to check the number of records in HBase.

count 'user_balance_records'
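One thing to keep in mind when reading the result: count reports rows, and the mapper above uses user_id as the row key while storing each report date as a cell version. The number returned should therefore correspond to distinct users rather than to lines in the CSV file. The sketch below illustrates the difference on a few made-up lines; RowCountSketch is a hypothetical helper, not part of the job.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class RowCountSketch {
    /** Counts distinct row keys (the first CSV field), skipping the header line. */
    public static int distinctRowKeys(List<String> lines) {
        Set<String> keys = new HashSet<>();
        for (String line : lines) {
            String userId = line.split(",", 2)[0];
            if (!userId.equals("user_id")) {
                keys.add(userId);
            }
        }
        return keys.size();
    }

    public static void main(String[] args) {
        // Made-up sample: two users, three daily records in total.
        List<String> lines = Arrays.asList(
                "user_id,report_date,tBalance",
                "1,20130701,500",
                "1,20130702,520",
                "2,20130701,80");
        System.out.println("data lines: " + (lines.size() - 1));          // 3
        System.out.println("distinct rows: " + distinctRowKeys(lines));   // 2
    }
}
```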

Clear the table data

truncate 'user_balance_records'

View the table data

scan 'user_balance_records'

Task 3 - HBase queries

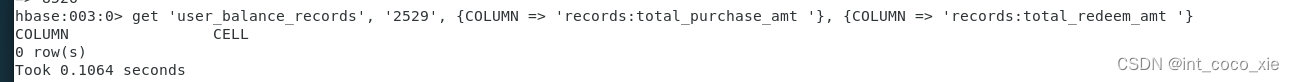

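1. Query all purchase and redemption information for user_id 2529. The two columns are passed as one list, without trailing spaces in the qualifiers, and VERSIONS is set so that every stored day is returned instead of only the latest one.

get 'user_balance_records', '2529', {COLUMN => ['records:total_purchase_amt', 'records:total_redeem_amt'], VERSIONS => 1000}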

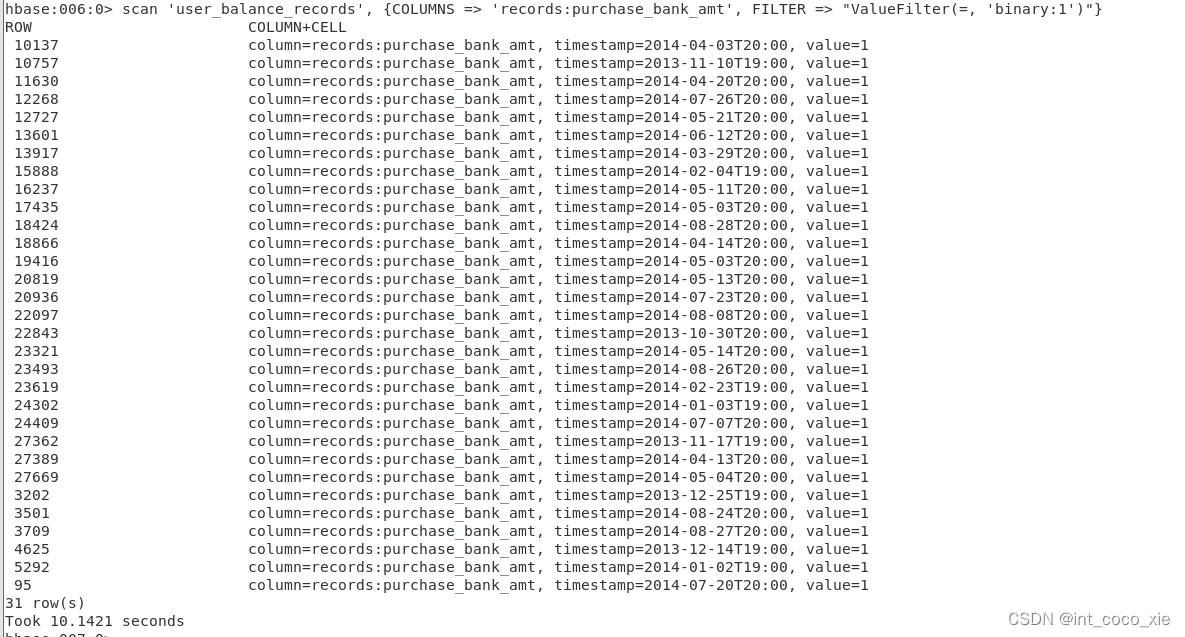

2. Query all records whose bank-card purchase amount for the day (purchase_bank_amt) is 1.

scan 'user_balance_records', {COLUMNS => 'records:purchase_bank_amt', FILTER => "ValueFilter(=, 'binary:1')"}
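The binary comparator in this filter compares raw bytes. Since the mapper stores every value as the UTF-8 bytes of the CSV text, 'binary:1' matches only cells whose stored text is exactly "1". The sketch below mimics that comparison in plain Java; the class BinaryComparatorSketch is hypothetical, and the 8-byte big-endian array stands in for how a numeric long would be encoded if the values had been written as numbers instead of text.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class BinaryComparatorSketch {
    /** Byte-for-byte equality, which is what an '=' test with a binary comparator amounts to. */
    public static boolean matches(byte[] stored, String filterValue) {
        return Arrays.equals(stored, filterValue.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        byte[] asText = "1".getBytes(StandardCharsets.UTF_8);          // how the mapper stores values
        byte[] asDecimalText = "1.0".getBytes(StandardCharsets.UTF_8); // same number, different text
        byte[] asLong = ByteBuffer.allocate(8).putLong(1L).array();    // 8-byte big-endian long

        System.out.println(matches(asText, "1"));        // true
        System.out.println(matches(asDecimalText, "1")); // false
        System.out.println(matches(asLong, "1"));        // false
    }
}
```

So the filter is sensitive to how the amount is written in the source file: a value stored as "1.0" or "01" would not be returned.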

This is only a rough write-up!
