Apache Doris Broker快速体验之案例(3)

环境信息

硬件信息

- CPU :4C

- CPU型号:ARM64

- 内存 :10GB

- 硬盘 :66GB SSD

软件信息

- VM镜像版本 :CentOS-7

- Apahce Doris版本 :1.2.4.1

- Hadoop版本:3.3.4

- Mysql版本:mysql-8.0.32-linux-glibc2.17-aarch64

- Hive版本:3.1.3

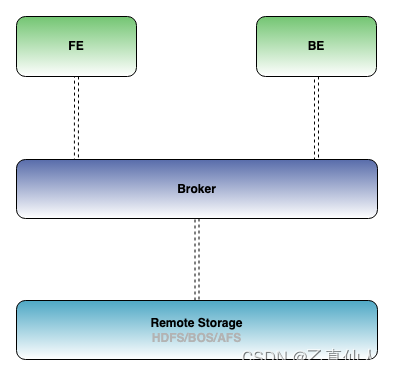

Broker介绍

Broker 是 Apache Doris 集群中一个可选进程,主要用于支持 Apache Doris 读写远端存储上的文件和目录。目前已支持以下远端存储:

- Apache HDFS

- 阿里云 OSS

- 腾讯云 CHDFS

- 腾讯云 GFS (1.2.0 版本支持)

- 华为云 OBS (1.2.0 版本后支持)

- 亚马逊 S3

- JuiceFS (2.0.0 版本支持)

Broker 通过提供一个 RPC 服务端口来提供服务,是一个无状态的 Java 进程,负责为远端存储的读写操作封装一些类 POSIX 的文件操作,如 open,pread,pwrite 等等。除此之外,Broker 不记录任何其他信息,所以包括远端存储的连接信息、文件信息、权限信息等等,都需要通过参数在 RPC 调用中传递给 Broker 进程,才能使得 Broker 能够正确读写文件。

Broker 仅作为一个数据通路,并不参与任何计算,因此仅需占用较少的内存。通常一个 Doris 系统中会部署一个或多个 Broker 进程。并且相同类型的 Broker 会组成一个组,并设定一个 名称(Broker name)。

以下会介绍 Broker 在 Apache Doris 中常用的几种导入和导出场景案例:

- Broker Load 异步数据导入

- Export 异步数据导出

- Select Into Outfile 同步数据导出

Broker导入案例

测试表创建

-- doris目标表

CREATE TABLE bl_test (

`id` varchar(1000) NOT NULL COMMENT "来源库表键",

`dt` date NOT NULL COMMENT '分区日期',

`test` BIGINT SUM DEFAULT "0" COMMENT "测试"

) ENGINE=OLAP

AGGREGATE KEY(`id`,`dt`)

PARTITION BY RANGE(`dt`)

(

PARTITION p202306 VALUES [('2023-06-01'), ('2023-07-01')))

DISTRIBUTED BY HASH(`id`) BUCKETS 1

PROPERTIES (

"replication_allocation" = "tag.location.default: 1",

"dynamic_partition.enable" = "true",

"dynamic_partition.time_unit" = "DAY",

"dynamic_partition.time_zone" = "Asia/Shanghai",

"dynamic_partition.start" = "-2147483648",

"dynamic_partition.end" = "1",

"dynamic_partition.prefix" = "p",

"dynamic_partition.replication_allocation" = "tag.location.default: 1",

"dynamic_partition.buckets" = "1",

"dynamic_partition.create_history_partition" = "true",

"dynamic_partition.history_partition_num" = "3",

"dynamic_partition.hot_partition_num" = "0",

"dynamic_partition.reserved_history_periods" = "NULL",

"in_memory" = "false",

"storage_format" = "V2"

);

-- hive源表

CREATE EXTERNAL TABLE bl_test (

`id` bigint comment 'id',

`test` bigint comment 'tf')

comment '表备注'

PARTITIONED BY (

dt string comment '日期分区')

row format delimited fields terminated by ','

stored as PARQUET;

数据初始化

-- hive

insert into bl_test values

(1,2,'2023-07-02');

select * from bl_test;

导入任务创建

LOAD LABEL bl_test

(

DATA INFILE("hdfs://192.168.1.61:9000/user/hive/warehouse/zbh_test.db/bl_test/*/*") -- 分区表格式/*/*

INTO TABLE bl_test

COLUMNS TERMINATED BY ","

FORMAT AS "PARQUET"

(id,test) -- 这里不需要写分区字段

COLUMNS FROM PATH AS (`dt`) -- 分区表才需要特别注明

SET

(dt=str_to_date(`dt`,'%Y-%m-%d'),id=id,test=test)

) WITH BROKER "broker_name" (

"username" = "hadoop",

"password" = ""

) PROPERTIES(

"timeout" = "3600",

"max_filter_ratio" = "1"

);

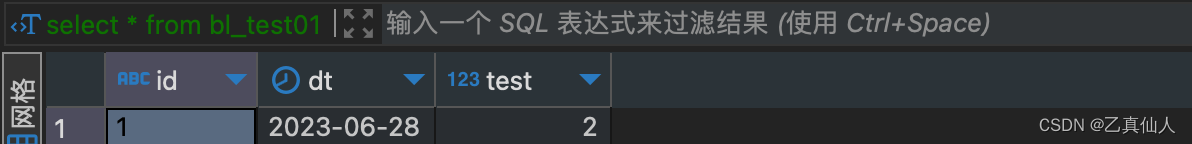

结果验证

-- doris数据结果查看

select * from bl_test;

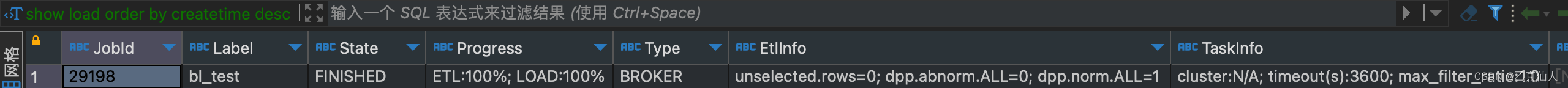

-- broker load任务结果查看

show load order by createtime desc;

Broker导出案例

测试表创建

-- doris源表创建

CREATE TABLE export_test01 (

`id` varchar(1000) NULL COMMENT "来源库表键",

`test01` BIGINT SUM DEFAULT "0" COMMENT "测试"

) ENGINE=OLAP

AGGREGATE KEY(`id`)

DISTRIBUTED BY HASH(`id`) BUCKETS 1

PROPERTIES (

"replication_allocation" = "tag.location.default: 1",

"in_memory" = "false",

"storage_format" = "V2"

);

-- hive目标表创建

CREATE EXTERNAL TABLE zbh_test.export_test01 (

`id` varchar(1000) comment 'id',

`test01` bigint comment 'tf')

comment '表备注'

row format delimited fields terminated by ','

stored as textfile;

数据初始化

-- doris

insert into export_test01 values

(1,1),(2,3),(1,2),(3,2)

select * from export_test01;

导出任务创建

EXPORT TABLE zbh_test.export_test01

TO "hdfs://doris:9000/user/hive/warehouse/zbh_test.db/export_test01"

PROPERTIES

(

"column_separator"=",",

"load_mem_limit"="2147483648",

"timeout" = "3600"

)

WITH BROKER "broker_name"

(

"username" = "hadoop",

"password" = ""

);

结果验证

SHOW EXPORT

OutFile案例

测试表创建

-- doris源表创建

CREATE TABLE export_test02 (

`id` varchar(1000) NULL COMMENT "来源库表键",

`test01` BIGINT SUM DEFAULT "0" COMMENT "测试"

) ENGINE=OLAP

AGGREGATE KEY(`id`)

DISTRIBUTED BY HASH(`id`) BUCKETS 1

PROPERTIES (

"replication_allocation" = "tag.location.default: 1",

"in_memory" = "false",

"storage_format" = "V2"

);

-- hive目标表创建

CREATE EXTERNAL TABLE zbh_test.export_test02 (

`id` varchar(1000) comment 'id',

`test01` bigint comment 'tf')

comment '表备注'

row format delimited fields terminated by ','

stored as textfile;

数据初始化

-- doris

insert into export_test02 values

(2,1),(2,3),(3,2),(3,2)

select * from export_test02

导出任务创建

SELECT * FROM export_test02

INTO OUTFILE "hdfs://doris:9000/user/hive/warehouse/zbh_test.db/export_test02/result_"

FORMAT AS CSV

PROPERTIES

(

"broker.name" = "broker_name",

"column_separator" = ",",

"line_delimiter" = "\n",

"broker.username"="hadoop",

"broker.password"=""

);

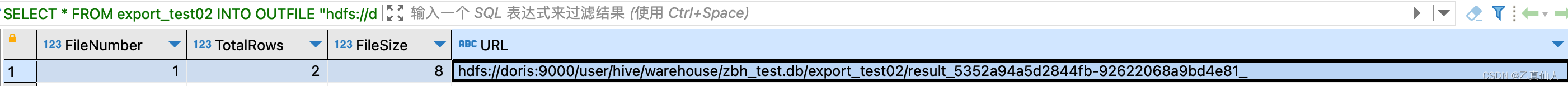

结果验证

select into outfile 为同步命令,导出后会直接显示结果

broker常用的broker load、export和select into outfile快速体验至此结束,体验过程中若遇到问题欢迎留言交流

1402

1402

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?