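1. Introduction to Hadoop

Hadoop is a distributed systems infrastructure developed by the Apache Software Foundation. It lets users write distributed programs without understanding the low-level details of distribution, and use the combined power of a cluster for high-speed computation and storage. One of its core components is HDFS (Hadoop Distributed File System), Hadoop's implementation of a distributed file system.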

2. Downloading Hadoop

3. Hadoop environment variable configuration

3.1 Software versions

JDK 1.8

Hadoop 3.3.6

ZooKeeper 3.8.1

3.2 hosts configuration

192.168.42.139 node1

192.168.42.140 node2

192.168.42.141 node3
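
The three mappings above need to be present in the hosts file on every node. A minimal sketch of adding them without creating duplicates when the step is repeated; `add_host_entry` is a hypothetical helper, not part of Hadoop, and the target file is passed as an argument so it can be tried on a scratch file first:

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears in it.
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    # -w matches NAME as a whole word, so node1 does not match node10
    if grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example (as root, on each of the three servers):
#   add_host_entry /etc/hosts 192.168.42.139 node1
#   add_host_entry /etc/hosts 192.168.42.140 node2
#   add_host_entry /etc/hosts 192.168.42.141 node3
```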

3.3 profile

export JAVA_HOME=/usr/local/jdk1.8.0_391

export JRE_HOME=/usr/local/jdk1.8.0_391/jre

export HBASE_HOME=/usr/local/bigdata/hbase-2.5.6

export HADOOP_HOME=/usr/local/bigdata/hadoop-3.3.6

export FLINK_HOME=/usr/local/bigdata/flink-1.18.0

export SCALA_HOME=/usr/local/bigdata/scala-2.13.12

export SPARK_HOME=/usr/local/bigdata/spark-3.5.0-bin-hadoop3

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export PATH=.:$JAVA_HOME/bin:$JRE_HOME/bin:$FLINK_HOME/bin:$SPARK_HOME/bin:$SCALA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:$PYTHON_HOME/bin:$PATH

4. Editing the Hadoop configuration files

4.1 First, set up passwordless SSH login between the three servers
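
The post does not show the commands for this step. One common way to do it, sketched here under assumptions: the node names come from the hosts list above, the `root` account comes from the shell prompts later in the post, and `run_cmd` and `setup_passwordless_ssh` are hypothetical helper names. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
NODES="node1 node2 node3"   # assumed: the three hosts from section 3.2

# Run a command, or just print it when DRY_RUN=1
run_cmd() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_passwordless_ssh() {
    key="$HOME/.ssh/id_rsa"
    # Generate a key pair with an empty passphrase only if none exists yet
    if [ ! -f "$key" ]; then
        run_cmd ssh-keygen -t rsa -b 4096 -N "" -f "$key"
    fi
    # Install the public key on every node (asks for each password once)
    for node in $NODES; do
        run_cmd ssh-copy-id -i "$key.pub" "root@$node"
    done
}

# Example: preview with  DRY_RUN=1 setup_passwordless_ssh
# then run  setup_passwordless_ssh  on each server that must reach the others.
```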

4.2 Create the following directories under the Hadoop installation directory

logs

data

data/datanode

data/namenode

data/tmp
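
The directory list above can be created in one step. A small sketch; `make_hadoop_dirs` is a hypothetical helper, and the installation path in the example is the one this post uses, so substitute your own:

```shell
# make_hadoop_dirs BASE
# Creates logs/ and the data/ subdirectories under BASE.
make_hadoop_dirs() {
    base=$1
    # -p also creates the parent data/ directory and ignores existing ones
    mkdir -p "$base/logs" \
             "$base/data/datanode" \
             "$base/data/namenode" \
             "$base/data/tmp"
}

# Example: make_hadoop_dirs /usr/local/bigdata/hadoop-3.3.6
```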

4.3 hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_391
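
The transcripts in section 6 start the daemons as root. The Hadoop 3 start scripts refuse to launch a daemon as root unless the matching `*_USER` variable is defined, so hadoop-env.sh probably also needs lines like the ones below. This is an assumption: the original post does not show them, and a dedicated non-root account is the more usual choice outside a test cluster.

```shell
# Assumed additions to hadoop-env.sh when the daemons are started as root
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```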

4.4 hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///usr/local/bigdata/hadoop-3.3.6/data/namenode</value> <!-- change the leading part of the path to match your own installation -->

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///usr/local/bigdata/hadoop-3.3.6/data/datanode</value> <!-- change the leading part of the path to match your own installation -->

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node2:9860</value>

</property>

4.5 yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node1</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>/usr/local/bigdata/hadoop-3.3.6/etc/hadoop:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/common/lib/*:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/common/*:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/hdfs:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/hdfs/lib/*:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/hdfs/*:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/mapreduce/*:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/yarn:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/yarn/lib/*:/usr/local/bigdata/hadoop-3.3.6/share/hadoop/yarn/*</value>

</property>

4.6 core-site.xml

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/bigdata/hadoop-3.3.6/data</value> <!-- change the leading part of the path to match your own installation -->

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://node1:9000</value>

</property>

<property>

<name>hadoop.http.authentication.simple.anonymous.allowed</name>

<value>true</value>

</property>

4.7 mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

4.8 workers

node1

node2

node3
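
The files edited in sections 4.3 to 4.8 have to be identical on all three nodes. The post does not show how they were copied, so the following is only a sketch, assuming the files were edited on node1, Hadoop is installed at the same path everywhere, and the `root` account is used; `sync_hadoop_conf` is a hypothetical helper. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
HADOOP_HOME="${HADOOP_HOME:-/usr/local/bigdata/hadoop-3.3.6}"
WORKER_NODES="node2 node3"   # assumed: every node except the one edited on

sync_hadoop_conf() {
    for node in $WORKER_NODES; do
        # Copies etc/hadoop into the remote etc/ directory, overwriting files
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo scp -r "$HADOOP_HOME/etc/hadoop" "root@$node:$HADOOP_HOME/etc/"
        else
            scp -r "$HADOOP_HOME/etc/hadoop" "root@$node:$HADOOP_HOME/etc/"
        fi
    done
}

# Example: preview with  DRY_RUN=1 sync_hadoop_conf  and then run  sync_hadoop_conf
```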

5. Format the file system on the master node

hdfs namenode -format

2023-11-11 15:43:13,499 INFO util.GSet: 1.0% max memory 839.5 MB = 8.4 MB

2023-11-11 15:43:13,499 INFO util.GSet: capacity = 2^20 = 1048576 entries

2023-11-11 15:43:13,500 INFO namenode.FSDirectory: ACLs enabled? true

2023-11-11 15:43:13,500 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2023-11-11 15:43:13,500 INFO namenode.FSDirectory: XAttrs enabled? true

2023-11-11 15:43:13,500 INFO namenode.NameNode: Caching file names occurring more than 10 times

2023-11-11 15:43:13,504 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2023-11-11 15:43:13,505 INFO snapshot.SnapshotManager: SkipList is disabled

2023-11-11 15:43:13,508 INFO util.GSet: Computing capacity for map cachedBlocks

2023-11-11 15:43:13,508 INFO util.GSet: VM type = 64-bit

2023-11-11 15:43:13,508 INFO util.GSet: 0.25% max memory 839.5 MB = 2.1 MB

2023-11-11 15:43:13,508 INFO util.GSet: capacity = 2^18 = 262144 entries

2023-11-11 15:43:13,749 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2023-11-11 15:43:13,749 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2023-11-11 15:43:13,749 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2023-11-11 15:43:13,753 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2023-11-11 15:43:13,753 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2023-11-11 15:43:13,755 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2023-11-11 15:43:13,755 INFO util.GSet: VM type = 64-bit

2023-11-11 15:43:13,755 INFO util.GSet: 0.029999999329447746% max memory 839.5 MB = 257.9 KB

2023-11-11 15:43:13,755 INFO util.GSet: capacity = 2^15 = 32768 entries

2023-11-11 15:43:13,778 INFO namenode.FSImage: Allocated new BlockPoolId: BP-576865479-192.168.42.139-1699688593772

2023-11-11 15:43:13,791 INFO common.Storage: Storage directory /usr/local/bigdata/hadoop-3.3.6/data/namenode has been successfully formatted.

2023-11-11 15:43:13,816 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/bigdata/hadoop-3.3.6/data/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2023-11-11 15:43:13,906 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/bigdata/hadoop-3.3.6/data/namenode/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .

2023-11-11 15:43:13,912 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2023-11-11 15:43:13,928 INFO namenode.FSNamesystem: Stopping services started for active state

2023-11-11 15:43:13,928 INFO namenode.FSNamesystem: Stopping services started for standby state

2023-11-11 15:43:13,931 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2023-11-11 15:43:13,932 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.42.139

************************************************************/
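
Formatting is meant to be done once. Running it again assigns a new cluster ID, and DataNodes that still hold data from the old one will then fail to register. A small guard, sketched on the observation that a formatted NameNode directory contains `current/VERSION`; `namenode_is_formatted` is a hypothetical helper, and the path in the example is the `dfs.namenode.name.dir` value from section 4.4:

```shell
# namenode_is_formatted DIR
# Succeeds if DIR already holds a formatted NameNode (current/VERSION exists).
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Example: format only when the directory has not been formatted yet
#   NN_DIR=/usr/local/bigdata/hadoop-3.3.6/data/namenode
#   namenode_is_formatted "$NN_DIR" || hdfs namenode -format
```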

6. Starting Hadoop

6.1 Start HDFS on the master node with ./start-dfs.sh

[root@node1 sbin]# ./start-dfs.sh

Starting namenodes on [node1]

Last login: Sat Nov 11 14:54:45 CST 2023 from 192.168.42.1 on pts/1

Starting datanodes

Last login: Sat Nov 11 15:47:00 CST 2023 on pts/0

Starting secondary namenodes [node2]

Last login: Sat Nov 11 15:47:02 CST 2023 on pts/0

6.2 Start YARN on the master node with ./start-yarn.sh

[root@node1 sbin]# ./start-yarn.sh

Starting resourcemanager

Last login: Sat Nov 11 15:47:06 CST 2023 on pts/0

Starting nodemanagers

Last login: Sat Nov 11 15:48:16 CST 2023 on pts/0

6.3 Check the running processes with jps on the master node

[root@node1 sbin]# jps

94961 NodeManager

94742 ResourceManager

96025 Jps

91930 NameNode

92186 DataNode

6.4 Check the running processes with jps on node2

[root@node2 sbin]# jps

91826 SecondaryNameNode

95655 Jps

93996 NodeManager

91583 DataNode

6.5 Check the running processes with jps on node3

[root@node3 bigdata]# jps

91257 DataNode

93595 NodeManager

96207 Jps
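
Comparing the three jps listings by eye is easy to get wrong. The check can be scripted; in this sketch `check_daemons` is a hypothetical helper that reads jps output on standard input and reports every expected daemon that is missing. The expected names per node are taken from the listings above.

```shell
# check_daemons NAME...
# Reads "<pid> <name>" lines (jps output) on stdin; prints "missing: NAME"
# for each expected daemon not found and returns non-zero if any is missing.
check_daemons() {
    jps_output=$(cat)
    missing=0
    for daemon in "$@"; do
        # Compare the whole second field, so NameNode does not match
        # SecondaryNameNode
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "missing: $daemon"
            missing=1
        fi
    done
    return $missing
}

# Example:
#   jps | check_daemons NameNode DataNode ResourceManager NodeManager
#   ssh node2 jps | check_daemons SecondaryNameNode DataNode NodeManager
#   ssh node3 jps | check_daemons DataNode NodeManager
```

Over ssh, jps may need its full path (for example under $JAVA_HOME/bin) if the profile is not loaded for non-interactive shells.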

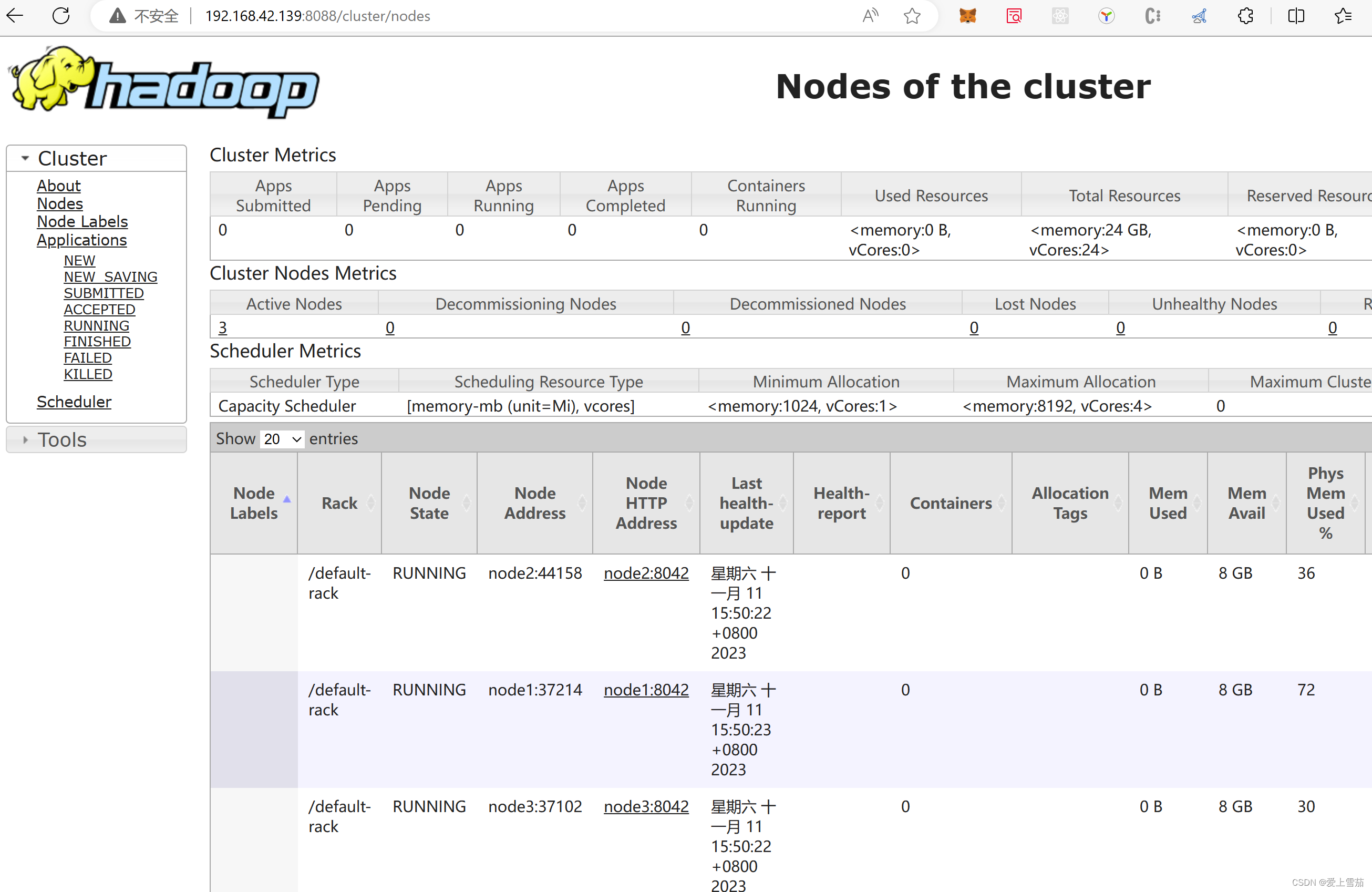

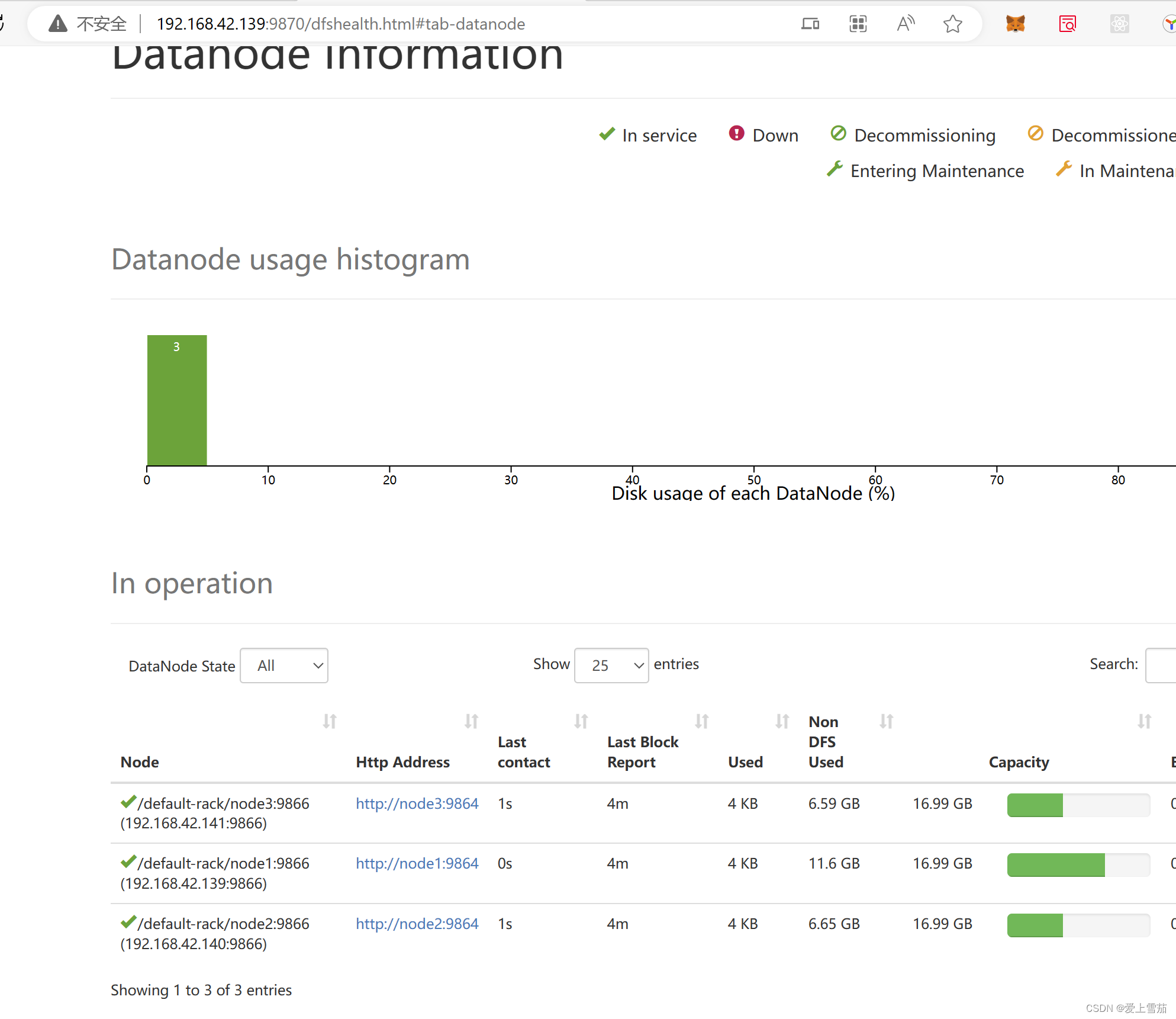

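7. Viewing the Hadoop web UIs

YARN ResourceManager web UI: http://192.168.42.139:8088/

HDFS NameNode web UI: http://192.168.42.139:9870/

At this point Hadoop has started successfully.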
