文章目录

1、论文总述

刚看这篇paper时候,让我立马想到了何凯明大神的ResNet,因为SiamDW也是在论文开篇提了一个问题,即发现了一个 奇怪的现象,Siamese系列的跟踪网络到现在用的仍然是2012年的AlexNet,并没有用如今更强的backbone,如ResNet、VGG或Inception等,于是作者试了试,发现网络加深之后,效果不升反降,不过原因和ResNet的不一样,ResNet是因为用现有的训练方法,在网络学到一定程度后,再继续学高级的语义信息变得很难了,而本文中作者指出Siam跟踪系列,网络加深之后性能下降的原因主要是由于 padding 的影响。

于是作者做了大量实验(我也得赶紧做实验啊),发现这几个参数对网络性能影响很大:感受野 the receptive field size of neurons、网络步长network stride、有无padding 、最后特征输出层的尺寸 output feature size。

【注】:最近刚好看到一篇目标检测的论文讲的是 有效的感受野区域 ,推荐给大家。Understanding the effective receptive field in deep convolutional neural networks

然后作者分析实验结果,自己总结了Siamese 网路用于跟踪时应该遵循的几个准则,作者根据自己的准则设计了CIR模块让网络可以变深,接着把他们应用到SiamFC、SiamRPN,表示为SiamFC+、SiamRPN+,并取得了SOTA的效果。

2、The main reasons of not bring improvements

i) large increases in the receptive field of neurons lead to reduced feature discriminability and localization precision;

ii)the network padding for convolutions induces a positional bias in learning.

作者认为感受野变大之后特征的context虽然变大了,但是提取到的特征区别度就不够了,即减少了目标本身的局部信息和判别信息,而且本论文一作在直播中也讲到太大的感受野会让feature map相邻element之间的overlap 过大(??是步长小的时候overlap比较大还是步长大的时候overlap比较大?),特征冗余性过大;而感受野比较小的话,又不能获得足够的context信息,特征抽象的层次不够,所以作者指出:RF的大小要与样例图片的大小有关,最好是样例图像的60%-80%。

3、padding导致位置偏见的原因

图也是来自于张志鹏的极市直播PPT中

这张图表示的是没有padding的时候,E是模板,A所在的区域是上一帧的搜索区域(注意:不是A),而B是当前帧的搜索区域,可以看到,目标向左上移动了一定距离(视频中,两帧之间一般是不能移动这么大的距离的吧,这移动的有点快,这也暴露了目标跟踪的常见的问题,即当目标移动过快时,很容易丢失目标,想起了羽毛球跟踪那个项目),R1和R2是所对应的的区域互相关之后的数值,因为此时没有padding,进行互相关操作的时候,R1=R2,且应该都是最高得分,所以在 score map上,也能反映真实的目标移动的方向和大概距离。

这张图表示的是没有padding的时候,E是模板,A所在的区域是上一帧的搜索区域(注意:不是A),而B是当前帧的搜索区域,可以看到,目标向左上移动了一定距离(视频中,两帧之间一般是不能移动这么大的距离的吧,这移动的有点快,这也暴露了目标跟踪的常见的问题,即当目标移动过快时,很容易丢失目标,想起了羽毛球跟踪那个项目),R1和R2是所对应的的区域互相关之后的数值,因为此时没有padding,进行互相关操作的时候,R1=R2,且应该都是最高得分,所以在 score map上,也能反映真实的目标移动的方向和大概距离。

这张图是有padding的,可以看到由于有padding,模板E经过特征提取后,feature map所对应的已经不是原模板E了,而是周围了加了几圈0值的E撇,模板变大了,当然搜索区域B也相应的变大了,但是,重点来了,B撇和E撇在进行互相关时候,由于有padding和感受野的变大,使得搜索区域的B撇容易出 原搜索区域 的边界,当然他出不了加了零值的搜索区域,所以此时两者进行互相关产生的R2已经不等于R1了,所以SCORE MAP上的最大响应点并没有align 目标的移动,不满足平移不变性,至于此时score map上响应的最大点不知道在哪呢,有可能在最右侧,因为出边界是在最左侧,看他们实验结果看的。

这张图是有padding的,可以看到由于有padding,模板E经过特征提取后,feature map所对应的已经不是原模板E了,而是周围了加了几圈0值的E撇,模板变大了,当然搜索区域B也相应的变大了,但是,重点来了,B撇和E撇在进行互相关时候,由于有padding和感受野的变大,使得搜索区域的B撇容易出 原搜索区域 的边界,当然他出不了加了零值的搜索区域,所以此时两者进行互相关产生的R2已经不等于R1了,所以SCORE MAP上的最大响应点并没有align 目标的移动,不满足平移不变性,至于此时score map上响应的最大点不知道在哪呢,有可能在最右侧,因为出边界是在最左侧,看他们实验结果看的。

【注】:就是说带了padding之后,互相关时候有些是不带padding的零值进行互相关的,但是另一些是带着padding的边界零值进行互相关的,导致对不齐。

4、实验结果分析

关于感受野的原文解释:

For the maximum size of receptive field (RF), the

optima lies in a small range. Specifically, for AlexNet, it

ranges from 87–8 (Alex⑦) to 87+16 (Alex③) pixels; while

for Incep.-22, it ranges from 91–16 (Incep.⑦) to 91+8 (Incep.③) pixels. VGG-10 and ResNet-17 also exhibit similar

phenomena. In these cases, the optimal receptive field size

is about 60%∼80% of the input exemplar image z size (e.g.

91 vs 127). Intriguingly, this ratio is robust for various networks in our study, and it is insensitive to their structures.

It illustrates that the size of RF is crucial for feature embedding in a Siamese framework. The underlying reason is that

RF determines the image region used in computing a feature. A large receptive field covers much image context,

resulting in the extracted feature being insensitive to the

spatial location of target objects. On the contrary, a small

one may not capture the structural information of objects,

and thus it is less discriminative for matching. Therefore,

only RF in a certain size range allows the feature to abstract

the characteristics of the object, and its ideal size is closely

related to the size of the exemplar image.

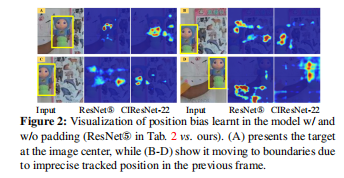

关于padding的原文解释:

If the networks contain

padding operations, the embedding features of an exemplar

image are extracted from the original exemplar image plus

additional (zero)-padding regions. Differently, for the features of a search image, some of them are extracted only

from image content itself, while some are extracted from

image content plus additional (zero)-padding regions (e.g.

the features near the border). As a result, there is inconsistency between the embeddings of target object appearing at different positions in search images, and therefore the

matching similarity comparison degrades. Fig. 2 presents a

visualization example of such inconsistency-induced effect

in the testing phase. It shows that when the target object

moves to image borders, its peak does not precisely indicate

the location of the target. This is a common case caused by

tracker drifts, when the predicted target location is not precise enough in the previous frame.

就是说模板肯定是带了外面的由于padding产生的零值,而搜索区域则是有些带 ,有些不带!!

5、4条网络设计Guidelines

Siamese trackers prefer a relatively small network stride.(8或4)

The receptive field of output features should be set based

on its ratio to the size of the exemplar image.(0.6或0.8)

Network stride, receptive field and output feature size

should be considered as a whole when designing a network architecture.(这几个因素应该被一起考虑)

For a fully convolutional Siamese matching network, it

is critical to handle the problem of perceptual inconsistency between the two network streams.(要解决padding 问题)

(注:padding问题是在Siamese网络中特有的,因为是 two stream网络结构)

6、deeper or wider网络结构

作者利用自己设计的CIR单元组建了比较深的和比较宽的网络,最后俩表现并不是特别好,所以作者都是利用CIResNet-22这个backbone.

数据对比

如上图所示,CIResNet-22的SiamRPN+在vot2017的EAO测试中已经达到了0.301,虽然不及 LSART 和CFWCR但这里速度很慢,但是奇怪的是,并没有与DaSIAMRPN这篇进行比较,后来去查了下,DaSIAMRPN在vot2017的EAO已经达到了0.326,比这篇论文要高。

7、为什么不能更深,43的反而不如22

It is worth noting that when the depth of CIResNets increases from 16 to 22 layers, the performance improves accordingly. But when increasing to 43 layers, CIResNet does

not obtain further gains. There are two main reasons. 1) The

network stride is changed to 4, such that the overlap between the receptive fields of two adjacent features is large.

Consequently, it is not as precise as networks with stride

of 8 in object localization. 2) The number of output feature channels is halved, compared to the other networks in

Tab. 3 (i.e. 256 vs. 512 channels). The overall parameter size is also smaller. These two reasons together limit the

performance of CIResNet-43. Furthermore, wider networks

also bring gains for Siamese trackers. Though CIResNeXt-

22 contain more transformation branches, its model size is

smaller (see Tab. 3). Therefore, its performance is inferior

to CIResIncep.-22 and CIResNet-22.

8、VOT16和VOT15区别

The video sequences in VOT-16 are the same

as those in VOT-15, but the ground-truth bounding boxes

are precisely re-annotated.

9、 改变receptive field, feature size and stride的具体操作

We tune

the sizes of these factors and show their impacts on final

performance. Specifically, we vary the convolutional kernel

size in the last cropping-inside residual block to change the

size of receptive field and output feature. Taking CIResNet-

22 as an example, we vary the kernel size from 1 to 6, which

causes the feature size to change from 7 to 2. To change the

network stride, we replace one CIR unit with a CIR-D unit

in the networks

参考文献:

1、SiamDW阅读笔记:Deeper and Wider Siamese Networks for Real-Time Visual Tracking

680

680

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?