Talking About Thrift (Part 4): Thrift Servers - TNonblockingServer

TNonblockingServer is Thrift's NIO-based server mode. It handles requests without blocking and uses a single thread to process all RPC requests.

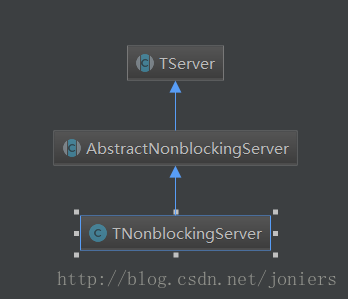

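The class hierarchy is as follows:

AbstractNonblockingServer is the parent class of both TNonblockingServer and TThreadedSelectorServer. TThreadedSelectorServer is the more advanced non-blocking mode that we will cover in the next article.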

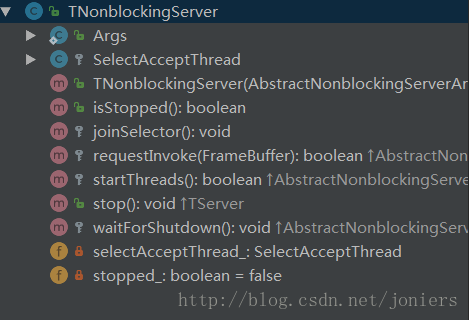

The class structure is as follows:

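Args is a static nested class used to pass configuration to the server. Through Args you can set the read buffer size, the serialization format (protocol), the transport, and the RPC processor class.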
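To make the Args idea concrete, here is a small JDK-only sketch of the pattern. Everything in it (ArgsPatternDemo, BaseArgs, MiniNonblockingServer, the string fields standing in for a processor, protocol factory and transport factory, and the default buffer size) is hypothetical and not Thrift's API; it only shows how a static nested Args class with chainable setters collects configuration before the server is constructed.

```java
// Hypothetical, simplified mimic of the "static nested Args" pattern; not Thrift's classes.
// Strings stand in for a TProcessor, TProtocolFactory and TTransportFactory
// so the sketch runs on the JDK alone.
public class ArgsPatternDemo {

    // Shared settings; each fluent setter returns T so chaining keeps the subclass type.
    static abstract class BaseArgs<T extends BaseArgs<T>> {
        String processor = "none";   // RPC processor class
        String protocol = "binary";  // serialization format
        String transport = "framed"; // transport wrapper

        @SuppressWarnings("unchecked")
        T self() { return (T) this; }

        T processor(String p) { processor = p; return self(); }
        T protocol(String p) { protocol = p; return self(); }
        T transport(String t) { transport = t; return self(); }
    }

    // A server whose constructor accepts only its own nested Args class.
    static class MiniNonblockingServer {
        static class Args extends BaseArgs<Args> {
            final int port;
            long maxReadBufferBytes = 1024 * 1024; // arbitrary default for this sketch

            Args(int port) { this.port = port; }

            Args maxReadBufferBytes(long n) { maxReadBufferBytes = n; return this; }
        }

        private final Args args;

        MiniNonblockingServer(Args args) { this.args = args; }

        String describe() {
            return "port=" + args.port + " processor=" + args.processor
                    + " protocol=" + args.protocol + " transport=" + args.transport
                    + " maxReadBufferBytes=" + args.maxReadBufferBytes;
        }
    }

    public static void main(String[] argv) {
        MiniNonblockingServer.Args args = new MiniNonblockingServer.Args(9090)
                .processor("HelloService.Processor")
                .protocol("compact")
                .maxReadBufferBytes(16L * 1024 * 1024);
        // prints: port=9090 processor=HelloService.Processor protocol=compact transport=framed maxReadBufferBytes=16777216
        System.out.println(new MiniNonblockingServer(args).describe());
    }
}
```

The self-typed generic `BaseArgs<T extends BaseArgs<T>>` is what lets a setter inherited from the parent still return the subclass, so `maxReadBufferBytes(...)` can follow `processor(...)` in one chain. Thrift's own `TServer.AbstractServerArgs` is declared in a similar self-typed way, and with the real library the chain looks roughly like `new TNonblockingServer.Args(serverTransport).processor(processor).protocolFactory(new TBinaryProtocol.Factory())`.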

AbstractNonblockingServer's serve method starts the server: it starts the I/O thread, starts listening, and then waits until the server shuts down.

```java
/**
 * Begin accepting connections and processing invocations.
 */
public void serve() {
    // start any IO threads
    if (!startThreads()) {
        return;
    }

    // start listening, or exit
    if (!startListening()) {
        return;
    }

    setServing(true);

    // this will block while we serve
    waitForShutdown();

    setServing(false);

    // do a little cleanup
    stopListening();
}
```

The part we need to focus on is startThreads: its implementation creates a SelectAcceptThread and starts it.

```java
protected boolean startThreads() {
    // start the selector
    try {
        selectAcceptThread_ = new SelectAcceptThread((TNonblockingServerTransport) serverTransport_);
        // launch the NIO thread; constructing it alone would never run the select loop
        selectAcceptThread_.start();
        return true;
    } catch (IOException e) {
        LOGGER.error("Failed to start selector thread!", e);
        return false;
    }
}
```
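The split between serve() in the parent class and startThreads() in the subclass is a template method: the parent fixes the order of the lifecycle steps and the subclass supplies the threads. The following JDK-only sketch mimics that split under simplifying assumptions; MiniAbstractServer, MiniNonblockingServer, the log field and stop() are hypothetical, and the background thread just waits on a latch instead of running a selector loop. In the sketch, waitForShutdown() simply joins that background thread.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical mimic of the serve()/startThreads() split; not Thrift's classes.
public class ServeLifecycleDemo {

    // Parent: fixes the order of lifecycle steps (template method).
    static abstract class MiniAbstractServer {
        final StringBuilder log = new StringBuilder(); // written only by the serving thread

        public void serve() {
            if (!startThreads()) return;    // start any IO threads
            if (!startListening()) return;  // start listening, or exit
            waitForShutdown();              // blocks while we serve
            stopListening();                // cleanup
        }

        protected abstract boolean startThreads();
        protected abstract boolean startListening();
        protected abstract void waitForShutdown();
        protected abstract void stopListening();
    }

    // Subclass: supplies one background thread, standing in for SelectAcceptThread.
    static class MiniNonblockingServer extends MiniAbstractServer {
        private final CountDownLatch stopSignal = new CountDownLatch(1);
        private Thread selectThread;

        @Override
        protected boolean startThreads() {
            // A real server would loop on selector.select() here; we just wait for stop().
            selectThread = new Thread(() -> {
                try {
                    stopSignal.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }, "select-accept");
            selectThread.start(); // without this, the Thread object exists but never runs
            log.append("threads;");
            return true;
        }

        @Override
        protected boolean startListening() {
            log.append("listen;");
            return true;
        }

        @Override
        protected void waitForShutdown() {
            try {
                selectThread.join(); // serve() stays here until the select thread exits
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }

        @Override
        protected void stopListening() {
            log.append("stopped;");
        }

        void stop() {
            stopSignal.countDown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        MiniNonblockingServer server = new MiniNonblockingServer();
        Thread serving = new Thread(server::serve, "serve");
        serving.start();
        server.stop();
        serving.join();
        System.out.println(server.log); // threads;listen;stopped;
    }
}
```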

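SelectAcceptThread is our NIO processing thread. It accepts client connections and handles read and write requests.

Flow: startThreads → SelectAcceptThread → select

Here, select handles all I/O events: it waits for them with selector.select(), then dispatches each ready event to handleAccept, handleRead, or handleWrite.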

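```java
// Inside SelectAcceptThread.select(): dispatch each ready SelectionKey by event type
if (key.isAcceptable()) {
    // a new client is connecting
    handleAccept();
} else if (key.isReadable()) {
    // deal with reads
    handleRead(key);
} else if (key.isWritable()) {
    // deal with writes
    handleWrite(key);
} else {
    LOGGER.warn("Unexpected state in select! " + key.interestOps());
}
```

Because TNonblockingServer handles every request on a single SelectAcceptThread, and in this server that thread also runs the RPC handler itself, other I/O events under concurrent load have to wait until the thread has worked through the events ahead of them. A slow call on one connection therefore delays all the others, which limits throughput.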
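To see the whole single-threaded loop in one place, here is a JDK-only echo server in the spirit of SelectAcceptThread. It is a simplified sketch, not Thrift's code: SingleThreadSelectDemo, roundTrip and the 1024-byte per-connection buffer are hypothetical, there is no framing, protocol or processor, and each connection just echoes back whatever it reads. One thread accepts, reads and writes for every client, which is why a slow step anywhere in the loop holds up everyone else.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Iterator;

// Hypothetical single-threaded NIO echo server: a sketch, not Thrift's SelectAcceptThread.
public class SingleThreadSelectDemo {
    private final Selector selector;
    private final ServerSocketChannel server;
    private volatile boolean stopped = false;

    SingleThreadSelectDemo() throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0)); // any free port
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);
    }

    int port() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    void stop() {
        stopped = true;
        selector.wakeup(); // break out of a blocking select()
    }

    // The only IO thread: wait for events, then handle them one at a time.
    void run() throws IOException {
        while (!stopped) {
            selector.select();
            Iterator<SelectionKey> it = selector.selectedKeys().iterator();
            while (it.hasNext()) {
                SelectionKey key = it.next();
                it.remove();
                if (!key.isValid()) continue;
                if (key.isAcceptable()) handleAccept();
                else if (key.isReadable()) handleRead(key);
                else if (key.isWritable()) handleWrite(key);
            }
        }
        for (SelectionKey k : new ArrayList<>(selector.keys())) k.channel().close();
        selector.close();
    }

    private void handleAccept() throws IOException {
        SocketChannel client = server.accept();
        if (client == null) return;
        client.configureBlocking(false);
        // each connection keeps its own buffer as the key attachment
        client.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
    }

    private void handleRead(SelectionKey key) throws IOException {
        SocketChannel client = (SocketChannel) key.channel();
        ByteBuffer buf = (ByteBuffer) key.attachment();
        if (client.read(buf) < 0) { client.close(); return; } // peer closed
        buf.flip();                                           // prepare to echo what was read
        key.interestOps(SelectionKey.OP_WRITE);
    }

    private void handleWrite(SelectionKey key) throws IOException {
        SocketChannel client = (SocketChannel) key.channel();
        ByteBuffer buf = (ByteBuffer) key.attachment();
        client.write(buf);
        if (!buf.hasRemaining()) { buf.clear(); key.interestOps(SelectionKey.OP_READ); }
    }

    // Runs the loop on its own thread, does one blocking round trip, then stops it.
    static String roundTrip(String msg) throws IOException, InterruptedException {
        SingleThreadSelectDemo demo = new SingleThreadSelectDemo();
        Thread loop = new Thread(() -> {
            try { demo.run(); } catch (IOException e) { throw new UncheckedIOException(e); }
        }, "select-accept");
        loop.start();
        try (SocketChannel ch = SocketChannel.open(new InetSocketAddress("127.0.0.1", demo.port()))) {
            byte[] out = msg.getBytes(StandardCharsets.UTF_8);
            ch.write(ByteBuffer.wrap(out));
            ByteBuffer in = ByteBuffer.allocate(out.length);
            while (in.hasRemaining() && ch.read(in) >= 0) { } // read until the whole echo arrives
            return new String(in.array(), 0, in.position(), StandardCharsets.UTF_8);
        } finally {
            demo.stop();
            loop.join();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(roundTrip("ping")); // ping
    }
}
```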

Here is a note on how handleRead processes incoming data:
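The frame handling that handleRead depends on is easiest to see in isolation. TNonblockingServer requires clients to use framed transport, where each message is preceded by a 4-byte big-endian length, and with a non-blocking socket a single read may deliver only part of a frame, so the per-connection buffer has to remember how far it got. The JDK-only sketch below is a simplified illustration under those assumptions: FrameReaderDemo, FrameReader and encodeFrame are hypothetical, and the state names are only meant to echo Thrift's FrameBuffer. A caller in the role of handleRead would close the connection when read returns false and pass the frame on for processing once isFrameFullyRead() is true.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of per-connection frame reading; not Thrift's FrameBuffer.
public class FrameReaderDemo {
    enum State { READING_FRAME_SIZE, READING_FRAME, READ_FRAME_COMPLETE }

    static class FrameReader {
        private final long maxFrameBytes;
        private State state = State.READING_FRAME_SIZE;
        private ByteBuffer buffer = ByteBuffer.allocate(4); // holds the length prefix first

        FrameReader(long maxFrameBytes) {
            this.maxFrameBytes = maxFrameBytes;
        }

        // Consumes whatever bytes arrived; returns false for an invalid frame size,
        // in which case the caller should close the connection.
        boolean read(ByteBuffer incoming) {
            while (incoming.hasRemaining() && state != State.READ_FRAME_COMPLETE) {
                int n = Math.min(incoming.remaining(), buffer.remaining());
                ByteBuffer chunk = incoming.duplicate();
                chunk.limit(chunk.position() + n);
                buffer.put(chunk);                          // copy n bytes
                incoming.position(incoming.position() + n); // mark them consumed

                if (buffer.hasRemaining()) continue;         // need more bytes
                if (state == State.READING_FRAME_SIZE) {
                    buffer.flip();
                    int size = buffer.getInt();              // big-endian length prefix
                    if (size < 0 || size > maxFrameBytes) return false;
                    buffer = ByteBuffer.allocate(size);
                    state = (size == 0) ? State.READ_FRAME_COMPLETE : State.READING_FRAME;
                } else {
                    state = State.READ_FRAME_COMPLETE;
                }
            }
            return true;
        }

        boolean isFrameFullyRead() {
            return state == State.READ_FRAME_COMPLETE;
        }

        byte[] frame() {
            return buffer.array();
        }
    }

    // Builds the bytes a framed-transport client would send for one message.
    static byte[] encodeFrame(String msg) {
        byte[] body = msg.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + body.length).putInt(body.length).put(body).array();
    }

    public static void main(String[] args) {
        byte[] wire = encodeFrame("hello");
        FrameReader reader = new FrameReader(1024);
        int[][] reads = {{0, 2}, {2, 7}, {7, wire.length}}; // three partial socket reads
        for (int[] r : reads) {
            reader.read(ByteBuffer.wrap(wire, r[0], r[1] - r[0]));
            System.out.println("complete=" + reader.isFrameFullyRead());
        }
        // prints complete=false, complete=false, complete=true, then hello
        System.out.println(new String(reader.frame(), StandardCharsets.UTF_8));
    }
}
```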
