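1. Introduction to Kubernetes

Kubernetes is a container-based platform for running distributed applications. It grew out of Borg, the cluster manager that Google used internally and kept confidential for more than a decade, and is often described as Borg's open-source successor. The first version was released in September 2014 and the first official release followed in July 2015.

At its core, Kubernetes is a cluster of servers. It runs specific programs on every node of the cluster to manage the containers on that node, with the goal of automating resource management. Its main features are:

Self-healing: when a container crashes, a replacement can be started quickly, in roughly one second

Elastic scaling: the number of running containers in the cluster can be adjusted automatically as needed

Service discovery: a service can automatically find the services it depends on

Load balancing: if a service runs in multiple containers, requests are load-balanced across them automatically

Version rollback: if a newly released version turns out to be faulty, it can be rolled back to the previous version immediately

Storage orchestration: storage volumes can be created automatically according to the needs of the containers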

1.3 Kubernetes components

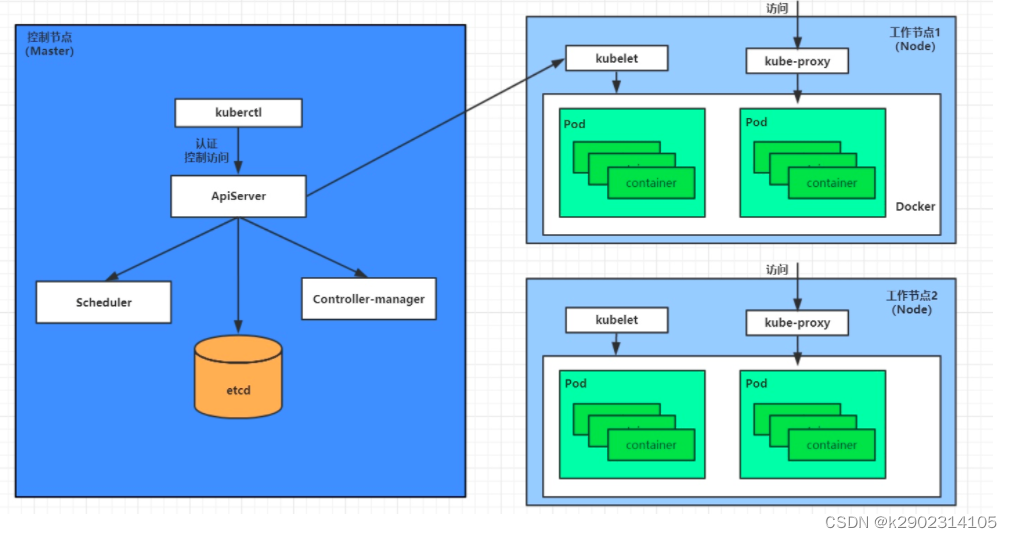

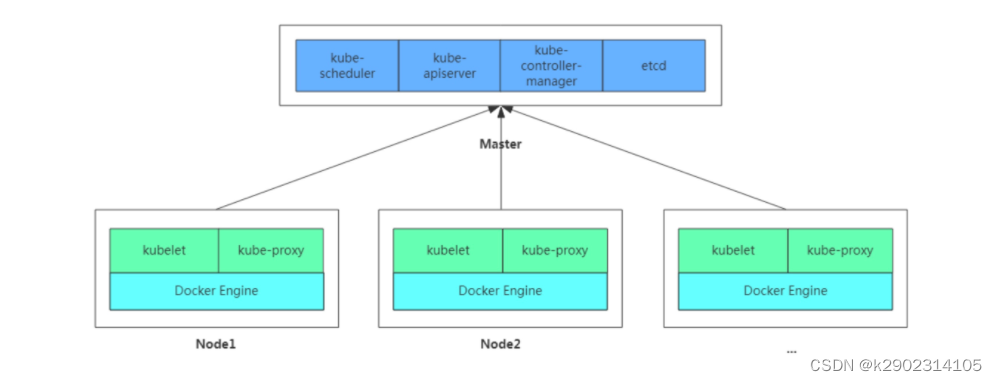

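A Kubernetes cluster consists mainly of control nodes (master) and worker nodes (node), and different components are installed on each kind of node.

master: the control plane of the cluster, responsible for cluster-wide decisions (management)

ApiServer: the single entry point for operations on resources; it receives user commands and provides authentication, authorization, and API registration and discovery

Scheduler: responsible for scheduling cluster resources; it places Pods on suitable nodes according to the configured scheduling policy

ControllerManager: responsible for maintaining cluster state, for example deployment orchestration, failure detection, automatic scaling, and rolling updates

Etcd: stores the information about every resource object in the cluster

node: the data plane of the cluster, responsible for providing the runtime environment for containers (doing the work)

Kubelet: responsible for the container lifecycle; it creates, updates, and destroys containers by driving the container runtime (Docker in this description)

KubeProxy: provides service discovery and load balancing inside the cluster

Docker: carries out the container operations on the node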

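The following walk-through uses the deployment of an nginx service to show, in simplified form, how the Kubernetes components interact:

First, once the Kubernetes environment is up, the master and every node store their own information in the etcd database

A request to install an nginx service is first sent to the apiServer component on the master

The apiServer involves the scheduler to decide which node the service should be installed on

At this point the scheduler reads the information about each node from etcd, selects a node according to its scheduling algorithm, and reports the result to the apiServer

The apiServer then works with the controller-manager to have the selected node install the nginx service

The kubelet on that node receives the instruction and tells Docker to start a pod running nginx

A pod is the smallest unit Kubernetes operates on; containers must run inside a pod

At this point the nginx service is running. To access nginx, kube-proxy is needed to proxy access to the pod

External users can then access the nginx service in the cluster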

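1.4 Kubernetes concepts

Master: the cluster control node; every cluster needs at least one master node to manage and control the cluster

Node: a workload node; the master assigns containers to these worker nodes, and Docker on each node runs the containers

Pod: the smallest unit Kubernetes controls; containers always run inside a pod, and a pod can hold one or more containers

Controller: manages pods, for example starting pods, stopping pods, and scaling the number of pods

Service: the unified entry point through which pods are exposed; a Service can sit in front of multiple pods of the same kind

Label: used to classify pods; pods of the same kind carry the same label

NameSpace: a namespace, used to isolate the environments that pods run in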

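2. Quick deployment of Kubernetes

Learning objectives

- Install Docker and kubeadm on all nodes

- Deploy the Kubernetes master

- Deploy the container network add-on

- Deploy the Kubernetes nodes and join them to the Kubernetes cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually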

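3. Preparing the environment

Lab environment:

Minimum configuration used here

master: 4 cores, 4 GB

node1: 2 cores, 2 GB

node2: 2 cores, 2 GB

| Role | IP |
|---|---|
| k8s-master | 192.168.70.134 |
| k8s-node1 | 192.168.70.138 |
| k8s-node2 | 192.168.70.139 |

//Perform the following steps on every host

//Disable the firewall and SELinux on every host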

[root@k8s-master ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-master ~]# setenforce 0

[root@k8s-master ~]# vim /etc/selinux/config //set SELINUX=disabled so the change survives a reboot

[root@k8s-node1 ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-node1 ~]# setenforce 0

[root@k8s-node1 ~]# vim /etc/selinux/config

[root@k8s-node2 ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-node2 ~]# setenforce 0

[root@k8s-node2 ~]# vim /etc/selinux/config

//Disable the swap partition on every host:

# vim /etc/fstab

//Comment out the swap entry

[root@k8s-master ~]# free -m

total used free shared buff/cache available

Mem: 3752 556 2656 10 539 2956

Swap: 4051 0 4051

[root@k8s-master ~]# vim /etc/fstab

[root@k8s-node1 ~]# free -m

total used free shared buff/cache available

Mem: 1800 550 728 10 521 1084

Swap: 2047 0 2047

[root@k8s-node1 ~]# vim /etc/fstab

[root@k8s-node2 ~]# free -m

total used free shared buff/cache available

Mem: 1800 559 711 10 529 1072

Swap: 2047 0 2047

[root@k8s-node2 ~]# vim /etc/fstab
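Editing /etc/fstab by hand on three hosts is easy to get wrong, so the same change can be scripted. The following is a minimal sketch that is not part of the original walkthrough: it works on a scratch copy, and the device names cs-root and cs-swap are assumptions chosen for illustration. It comments out every active line whose filesystem type is swap.

```shell
# Work on a scratch copy so nothing on the real system is touched.
fstab_copy="$(mktemp)"
cat > "$fstab_copy" << 'EOF'
/dev/mapper/cs-root   /      xfs   defaults  0 0
/dev/mapper/cs-swap   none   swap  defaults  0 0
EOF

# Prefix every uncommented line that has a whitespace-delimited "swap" field with '#'.
sed -E -i 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_copy"

cat "$fstab_copy"
```

On a real host the same sed expression would be pointed at /etc/fstab (after taking a backup), and `swapoff -a` turns swap off for the running system without waiting for a reboot.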

//Add the hosts entries (on the master and on both nodes):

[root@k8s-master ~]# cat >> /etc/hosts << EOF

192.168.70.134 k8s-master

192.168.70.138 k8s-node1

192.168.70.139 k8s-node2

EOF

[root@k8s-node1 ~]# cat >> /etc/hosts << EOF

> 192.168.70.134 k8s-master

> 192.168.70.138 k8s-node1

> 192.168.70.139 k8s-node2

> EOF

[root@k8s-node2 ~]# cat >> /etc/hosts << EOF

> 192.168.70.134 k8s-master

> 192.168.70.138 k8s-node1

> 192.168.70.139 k8s-node2

> EOF
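Because `cat >> /etc/hosts` appends unconditionally, running it a second time duplicates the entries. A hedged alternative is sketched below; `add_host_entry` is a hypothetical helper name, and the demonstration writes to a scratch file rather than the real /etc/hosts.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless that exact line is already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    grep -qxF "$ip $name" "$file" || echo "$ip $name" >> "$file"
}

hosts_copy="$(mktemp)"   # scratch file standing in for /etc/hosts
for round in 1 2; do     # run twice to show that nothing is duplicated
    add_host_entry "$hosts_copy" 192.168.70.134 k8s-master
    add_host_entry "$hosts_copy" 192.168.70.138 k8s-node1
    add_host_entry "$hosts_copy" 192.168.70.139 k8s-node2
done
cat "$hosts_copy"
```

The `grep -qxF` test matches whole lines literally, so an entry is only skipped when the identical line already exists.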

[root@k8s-master ~]# ping k8s-master //test name resolution and connectivity

PING k8s-master (192.168.70.134) 56(84) bytes of data.

64 bytes from k8s-master (192.168.70.134): icmp_seq=1 ttl=64 time=0.072 ms

64 bytes from k8s-master (192.168.70.134): icmp_seq=2 ttl=64 time=0.080 ms

^C

--- k8s-master ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 41ms

rtt min/avg/max/mdev = 0.072/0.076/0.080/0.004 ms

[root@k8s-master ~]# ping k8s-node1

PING k8s-node1 (192.168.70.138) 56(84) bytes of data.

64 bytes from k8s-node1 (192.168.70.138): icmp_seq=1 ttl=64 time=0.512 ms

64 bytes from k8s-node1 (192.168.70.138): icmp_seq=2 ttl=64 time=0.285 ms

^C

[root@k8s-master ~]# ping k8s-node2

PING k8s-node2 (192.168.70.139) 56(84) bytes of data.

64 bytes from k8s-node2 (192.168.70.139): icmp_seq=1 ttl=64 time=1.60 ms

64 bytes from k8s-node2 (192.168.70.139): icmp_seq=2 ttl=64 time=0.782 ms

64 bytes from k8s-node2 (192.168.70.139): icmp_seq=3 ttl=64 time=1.32 ms

//Pass bridged IPv4 traffic to the iptables chains:

[root@k8s-master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@k8s-master ~]# sysctl --system //apply the settings

#output omitted

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ... //seeing this line means the file was applied

* Applying /etc/sysctl.conf ...

//Time synchronization, on every host

[root@k8s-master ~]# vim /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

pool time1.aliyun.com iburst //use the Alibaba Cloud time server

[root@k8s-master ~]# systemctl enable chronyd

[root@k8s-master ~]# systemctl restart chronyd

[root@k8s-master ~]# systemctl status chronyd

● chronyd.service - NTP client/server

Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; vendor preset: enab>

Active: active (running) since Tue 2022-09-06 15:54:27 CST; 9s ago

[root@k8s-node1 ~]# vim /etc/chrony.conf

[root@k8s-node1 ~]# systemctl enable chronyd

[root@k8s-node1 ~]# systemctl restart chronyd

[root@k8s-node1 ~]# systemctl status chronyd

● chronyd.service - NTP client/server

Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; vendor preset: enab>

Active: active (running) since Tue 2022-09-06 15:57:52 CST; 8s ago

[root@k8s-node2 ~]# vim /etc/chrony.conf

[root@k8s-node2 ~]# systemctl enable chronyd

[root@k8s-node2 ~]# systemctl restart chronyd

[root@k8s-node2 ~]# systemctl status chronyd

● chronyd.service - NTP client/server

Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; vendor preset: enab>

Active: active (running) since Tue 2022-09-06

//Set up passwordless SSH login

[root@k8s-master ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:LZeVhmrafNhs4eAGG8dNQltVYcGX/sXbKj/dPzR/wNo root@k8s-master

The key's randomart image is:

+---[RSA 3072]----+

| . ...o=o.|

| . o . o...|

| o o + .o |

| . * + .o|

| o S * . =|

| @ O . o+o|

| o * * o.++|

| . o o E.=|

| o..=|

+----[SHA256]-----+

[root@k8s-master ~]# ssh-copy-id k8s-master

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-master (192.168.70.134)' can't be established.

ECDSA key fingerprint is SHA256:1x2Tw0BYQrGTk7wpwsIy+TtFN72hWbHYYiU6WtI/Ojk.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-master's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-master'"

and check to make sure that only the key(s) you wanted were added.

[root@k8s-master ~]# ssh-copy-id k8s-node1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-node1 (192.168.70.138)' can't be established.

ECDSA key fingerprint is SHA256:75svPGZTNSPdFX6K4lCDkoQfG10Y478mu0NzQD7HpnA.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node1'"

and check to make sure that only the key(s) you wanted were added.

[root@k8s-master ~]# ssh-copy-id k8s-node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-node2 (192.168.70.139)' can't be established.

ECDSA key fingerprint is SHA256:75svPGZTNSPdFX6K4lCDkoQfG10Y478mu0NzQD7HpnA.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node2'"

and check to make sure that only the key(s) you wanted were added.

[root@k8s-master ~]# ssh k8s-master

Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/

To register this system, run: insights-client --register

Last login: Tue Sep 6 15:10:17 2022 from 192.168.70.1

[root@k8s-master ~]# ssh k8s-node1

Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/

To register this system, run: insights-client --register

Last login: Tue Sep 6 15:10:18 2022 from 192.168.70.1

[root@k8s-node1 ~]# exit

logout

Connection to k8s-node1 closed.

[root@k8s-master ~]# ssh k8s-node2

Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/

To register this system, run: insights-client --register

Last login: Tue Sep 6 15:10:18 2022 from 192.168.70.1

[root@k8s-node2 ~]# exit

logout

Connection to k8s-node2 closed.

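[root@k8s-master ~]# reboot //SELinux and swap were changed above; reboot to make sure the settings are permanent

[root@k8s-node1 ~]# reboot

[root@k8s-node2 ~]# reboot

#//After the reboot, check that the firewall, SELinux, and swap are all disabled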
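The swap part of that check can be automated. The sketch below is an illustration rather than the author's procedure: `count_active_swaps` is a hypothetical helper that counts the entries in a file laid out like /proc/swaps, and the sample data (including the /dev/dm-1 device) is made up.

```shell
# count_active_swaps FILE: print how many swap areas FILE (in /proc/swaps format) lists.
count_active_swaps() {
    # The first line is a header; every further non-empty line is one active swap area.
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$1"
}

# Sample data standing in for /proc/swaps on a host where swap is still on.
sample="$(mktemp)"
cat > "$sample" << 'EOF'
Filename                                Type            Size    Used    Priority
/dev/dm-1                               partition       2097148 0       -2
EOF

count_active_swaps "$sample"
```

On a prepared host, `count_active_swaps /proc/swaps` should print 0. The other two checks are one-liners: `getenforce` reports the SELinux mode and `systemctl is-active firewalld` reports whether the firewall is running.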

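4. Install Docker/kubeadm/kubelet on all nodes

Older Kubernetes releases used Docker as the default container runtime, so Docker is installed first. Note that the v1.25 release installed below no longer includes dockershim (it was removed in v1.24); its kubelet talks to containerd, which is installed together with docker-ce as the containerd.io package.

4.1 Install Docker

##Note: the Docker version must be the same on every node. Use dnf list all|grep docker to check that the docker-ce.x86_64 version matches

#Do the following on all nodes

[root@k8s-master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo //download the Docker CE repo file

//output omitted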

[root@k8s-master ~]# dnf list all|grep docker

containerd.io.x86_64 1.6.8-3.1.el8 @docker-ce-stable

docker-ce.x86_64 3:20.10.17-3.el8 @docker-ce-stable //this is the line to compare

docker-ce-cli.x86_64 1:20.10.17-3.el8 @docker-ce-stable

[root@k8s-master ~]# dnf -y install docker-ce --allowerasing //--allowerasing (replace conflicting packages) is normally unnecessary; it is added here because of a problem with my repos

[root@k8s-master ~]# systemctl enable --now docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@k8s-master ~]# docker version

Client: Docker Engine - Community

Version: 20.10.17 //must be the same on every node

API version: 1.41

Go version: go1.17.11

Git commit: 100c701

Built: Mon Jun 6 23:03:11 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.17

API version: 1.41 (minimum version 1.12)

Go version: go1.17.11

Git commit: a89b842

Built: Mon Jun 6 23:01:29 2022

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.8

GitCommit: 9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6

runc:

Version: 1.1.4

GitCommit: v1.1.4-0-g5fd4c4d

docker-init:

Version: 0.19.0

GitCommit: de40ad0

[root@k8s-master ~]#

//Configure the registry mirror (accelerator)

[root@k8s-master ~]# cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "storage-driver": "overlay2"

> }

> EOF

[root@k8s-master ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"], //registry mirror (accelerator)

"exec-opts": ["native.cgroupdriver=systemd"], //cgroup driver

"log-driver": "json-file", //log format

"log-opts": {

"max-size": "100m" //rotate the log file once it reaches 100 MB

},

"storage-driver": "overlay2" //storage driver

}

4.2 Add the Alibaba Cloud Kubernetes YUM repository

#Do the following on all nodes

[root@k8s-master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

4.3 Install kubeadm, kubelet and kubectl

#Do the following on all nodes

[root@k8s-master ~]# dnf list all|grep kubelet //check the version; it must be the same on every node

kubelet.x86_64 1.25.0-0 kubernetes

[root@k8s-master ~]# dnf list all|grep kubeadm

kubeadm.x86_64 1.25.0-0 kubernetes

[root@k8s-master ~]# dnf list all|grep kubectl

kubectl.x86_64 1.25.0-0 kubernetes

[root@k8s-master ~]# dnf -y install kubelet kubeadm kubectl

[root@k8s-master ~]# systemctl enable kubelet //only enable it; it cannot be started yet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@k8s-master ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor>

Drop-In: /usr/lib/systemd/system/kubelet.service.d

5. Deploy the Kubernetes master

Run on 192.168.70.134 (master)

[root@k8s-master ~]# kubeadm init -h //show the help text

[root@k8s-master ~]# cd /etc/containerd/

[root@k8s-master containerd]# containerd config default > config.toml //generate the default configuration

[root@k8s-master containerd]# vim config.toml

sandbox_image = "k8s.gcr.io/pause:3.6" //change to sandbox_image = "registry.cn-beijing.aliyuncs.com/abcdocker/pause:3.6"

[root@k8s-master manifests]# systemctl stop kubelet

[root@k8s-master manifests]# systemctl restart containerd

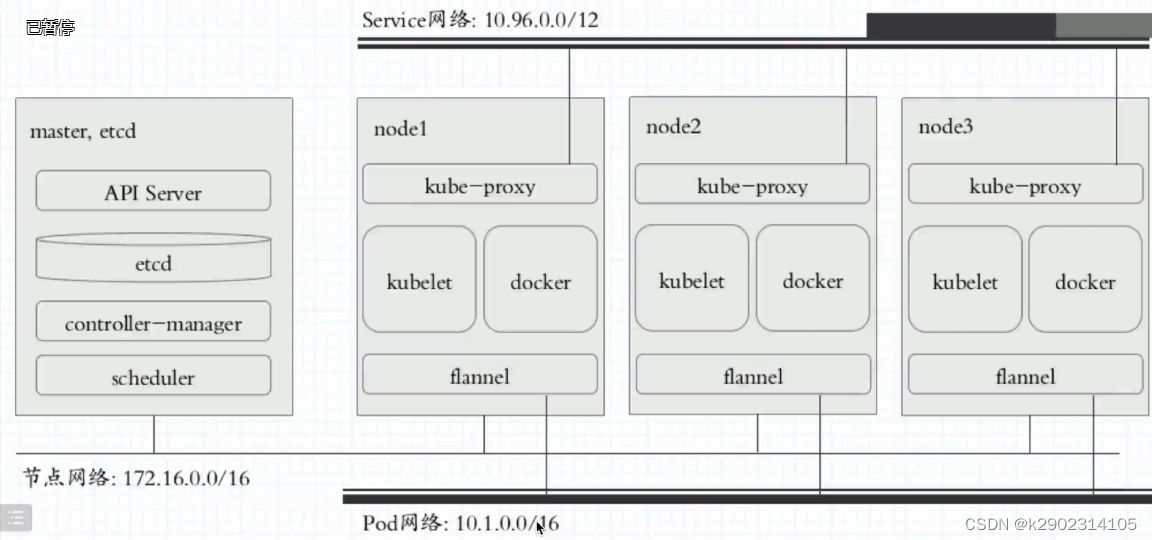

[root@k8s-master manifests]# kubeadm init --apiserver-advertise-address 192.168.70.134 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.25.0 --service-cidr 10.96.0.0/12 --pod-network-cidr 10.244.0.0/16

//output omitted

//Apart from 192.168.70.134, the rest of the command is essentially boilerplate

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube //a regular user runs these commands

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf //the root user runs this command

You should now deploy a pod network to the cluster.

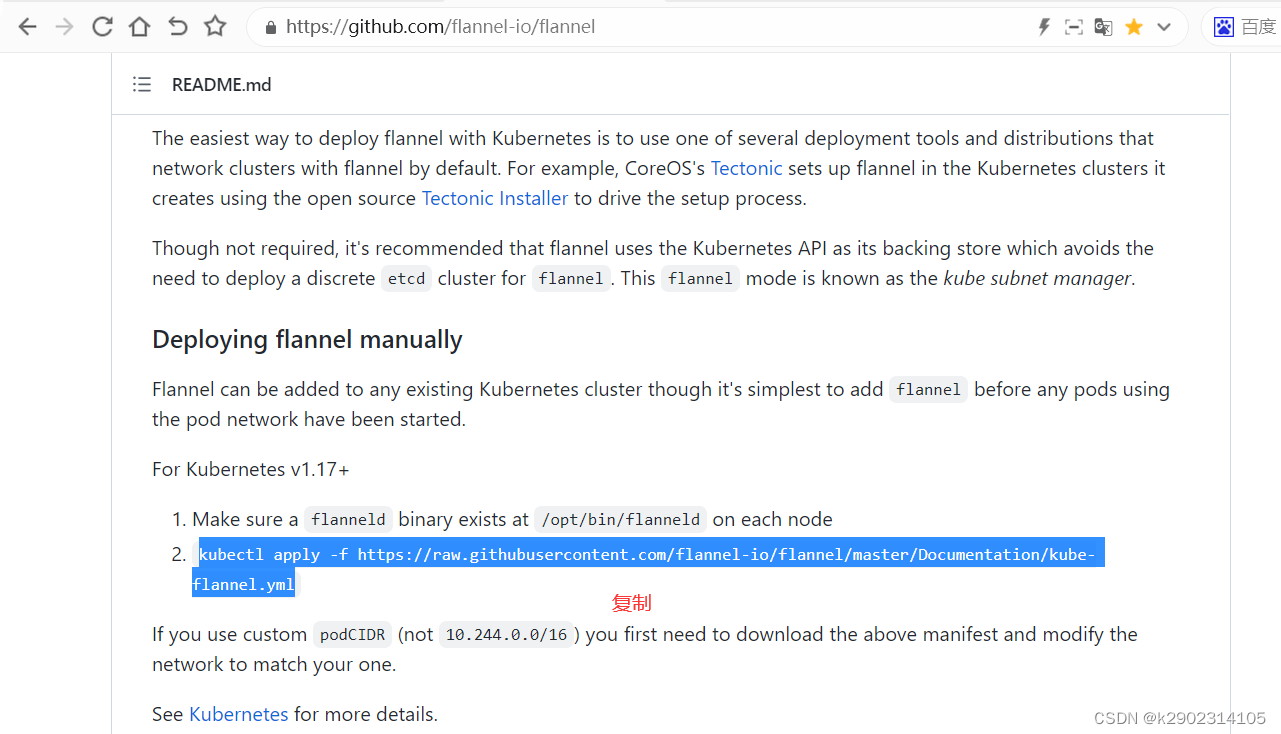

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: //a network add-on must be deployed; search for flannel on github.com

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.70.134:6443 --token h9utko.9esdw3ge9j0urwae \

--discovery-token-ca-cert-hash sha256:8c36d378e51b8d01f1fe904e51e1b5d7215fc76dcbaf105c798c4cda70e84ca1

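//Seeing this output means the initialization succeeded

//If the initialization fails, try resetting kubeadm with: kubeadm reset

//Set the environment variable permanently, as root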

[root@k8s-master ~]# vim /etc/profile.d/k8s.sh

[root@k8s-master ~]# cat /etc/profile.d/k8s.sh

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@k8s-master ~]# source /etc/profile.d/k8s.sh

[root@k8s-master ~]# echo $KUBECONFIG

/etc/kubernetes/admin.conf

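Because the default image registry k8s.gcr.io cannot be reached from mainland China, the Alibaba Cloud image repository is specified in the kubeadm init command.

Using the kubectl tool:

//These steps are for a regular user; root does not need them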

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 14m v1.25.0

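6. Install the Pod network add-on (CNI)

Deploying the flannel network add-on lets pods communicate with each other directly over the pod network, without extra forwarding

//The "created" lines in the output indicate success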

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

Make sure the quay.io registry is reachable.

7. Join the Kubernetes nodes

Run on 192.168.70.138 and 192.168.70.139 (nodes).

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 15h v1.25.0 //the network is up, so the status is now Ready

//On each node, back up or delete this file

[root@k8s-node2 containerd]# ls

config.toml

[root@k8s-node2 containerd]# mv config.toml{,.bak} //back up the file; every line in it is commented out with #

[root@k8s-node2 containerd]# ls

config.toml.bak

[root@k8s-master ~]# cd /etc/containerd/

[root@k8s-master containerd]# ls

config.toml

//Copy over the working file that was generated on the master

[root@k8s-master containerd]# scp /etc/containerd/config.toml k8s-node1:/etc/containerd/

config.toml 100% 6952 3.4MB/s 00:00

[root@k8s-master containerd]# scp /etc/containerd/config.toml k8s-node2:/etc/containerd/

config.toml 100% 6952 3.8MB/s 00:00

[root@k8s-node1 containerd]# ls

config.toml

[root@k8s-node1 containerd]# systemctl restart containerd //restart so the new file takes effect

[root@k8s-node1 containerd]# kubeadm join 192.168.70.134:6443 --token h9utko.9esdw3ge9j0urwae --discovery-token-ca-cert-hash sha256:8c36d378e51b8d01f1fe904e51e1b5d7215fc76dcbaf105c798c4cda70e84ca1

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster: //seeing the following lines means the join succeeded

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-node2 containerd]# ls

config.toml config.toml.bak

[root@k8s-node2 containerd]# systemctl restart containerd

//Copy the last lines of the master's init output to join the cluster

[root@k8s-node2 containerd]# kubeadm join 192.168.70.134:6443 --token h9utko.9esdw3ge9j0urwae --discovery-token-ca-cert-hash sha256:8c36d378e51b8d01f1fe904e51e1b5d7215fc76dcbaf105c798c4cda70e84ca1

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-master containerd]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 15h v1.25.0

k8s-node1 Ready <none> 2m39s v1.25.0

k8s-node2 NotReady <none> 81s v1.25.0 //NotReady here is not a network problem; the node is still pulling images, so wait a moment

[root@k8s-master containerd]# kubectl get nodes //the nodes have joined and are connected

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 15h v1.25.0

k8s-node1 Ready <none> 4m35s v1.25.0

k8s-node2 Ready <none> 3m17s v1.25.0

[root@k8s-node1 ~]# kubectl get nodes //fails because the environment variable and the file it points to are missing

The connection to the server localhost:8080 was refused - did you specify the right host or port?

//Add the environment variable and the file it points to

[root@k8s-master ~]# scp /etc/kubernetes/admin.conf k8s-node1:/etc/kubernetes/admin.conf

admin.conf 100% 5638 2.7MB/s 00:00

[root@k8s-master ~]# scp /etc/kubernetes/admin.conf k8s-node2:/etc/kubernetes/admin.conf

admin.conf 100% 5638 2.9MB/s 00:00

[root@k8s-master ~]# scp /etc/profile.d/k8s.sh k8s-node2:/etc/profile.d/k8s.sh

k8s.sh 100% 45 23.6KB/s 00:00

[root@k8s-master ~]# scp /etc/profile.d/k8s.sh k8s-node1:/etc/profile.d/k8s.sh

k8s.sh 100% 45 3.8KB/s 00:00

//Check on the nodes

[root@k8s-node1 ~]# bash //start a new shell so the variable takes effect

[root@k8s-node1 ~]# echo $KUBECONFIG

/etc/kubernetes/admin.conf

[root@k8s-node1 ~]# kubectl get nodes //success, the nodes are listed

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 16h v1.25.0

k8s-node1 Ready <none> 21m v1.25.0

k8s-node2 Ready <none> 20m v1.25.0

[root@k8s-node2 ~]# bash

[root@k8s-node2 ~]# echo $KUBECONFIG

/etc/kubernetes/admin.conf

[root@k8s-node2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 16h v1.25.0

k8s-node1 Ready <none> 21m v1.25.0

k8s-node2 Ready <none> 20m v1.25.0

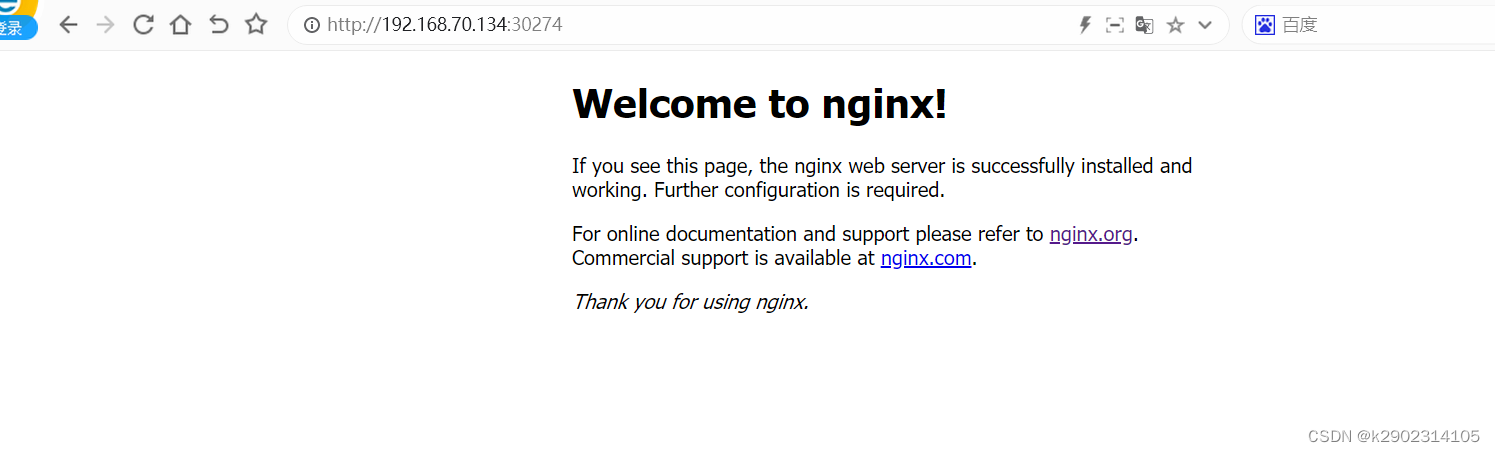

8. Test the Kubernetes cluster

Create a pod in the Kubernetes cluster and verify that it runs correctly:

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created //deployment: resource type //nginx: deployment name //--image=nginx: image

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort //--port: port to expose //--type=NodePort: service type

service/nginx exposed

[root@k8s-master ~]# kubectl get pod,svc //show pod and service information

//READY 0/1 means the image is still being pulled; check again in a moment //ContainerCreating: the container is not up yet

NAME READY STATUS RESTARTS AGE

pod/nginx-76d6c9b8c-6vnnf 0/1 ContainerCreating 0 17s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 16h

service/nginx NodePort 10.98.79.60 <none> 80:30274/TCP 7s

[root@k8s-master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-76d6c9b8c-6vnnf 1/1 Running 0 5m28s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 16h

service/nginx NodePort 10.98.79.60 <none> 80:30274/TCP 5m18s

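//NodePort: maps port 80 of the cluster IP to a port on the nodes (30274 here)

Access the port: success

The cluster IP can only be reached from inside the cluster; the port here is 80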

[root@k8s-master ~]# curl 10.98.79.60

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
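The curl above uses the cluster IP, which only works from inside the cluster. From outside, a NodePort service is reached through a node's IP and the mapped port. The sketch below only builds the URLs and contacts nothing; it assumes the PORT(S) value shown in the kubectl get svc output and the node IPs from the table in section 3.

```shell
# PORT(S) value of service/nginx, copied from the kubectl get svc output.
ports="80:30274/TCP"

node_port="${ports#*:}"        # drop everything up to the colon  -> 30274/TCP
node_port="${node_port%%/*}"   # drop the protocol suffix         -> 30274

# Any node IP can be combined with the node port of a NodePort service.
for node_ip in 192.168.70.134 192.168.70.138 192.168.70.139; do
    echo "http://${node_ip}:${node_port}"
done
```

Opening any of the printed URLs in a browser, or passing one to curl from a machine that can reach the nodes, should return the same nginx welcome page.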

9. Using kubectl commands:

| Category | Command | Purpose |
|---|---|---|
| Basic commands | create | Create a resource |
| | edit | Edit a resource |
| | get | Get a resource |
| | patch | Update a resource |
| | delete | Delete a resource |
| | explain | Show resource documentation |
| Run and debug | run | Run a specified image in the cluster |
| | expose | Expose a resource as a Service |
| | describe | Show the internal details of a resource |
| | logs | Print the logs of a container in a pod |
| | attach | Attach to a running container |
| | exec | Execute a command in a container |
| | cp | Copy files into and out of a Pod |
| | rollout | Manage the rollout of a resource |
| | scale | Scale the number of Pods up or down |
| | autoscale | Automatically adjust the number of Pods |
| Advanced commands | apply | Configure resources from a file |
| | label | Update the labels on a resource |
| Other commands | cluster-info | Show cluster information |
| | version | Show the current server and client versions |

get command:

//List nodes

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 173d v1.25.0

k8s-node1 Ready <none> 172d v1.25.0

k8s-node2 Ready <none> 172d v1.25.0

//List pods

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-76d6c9b8c-6vnnf 1/1 Running 1 (172d ago) 172d

//List namespaces

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 173d

kube-flannel Active 172d

kube-node-lease Active 173d

kube-public Active 173d

kube-system Active 173d

//List pods in a specific namespace

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-c676cc86f-pjddv 1/1 Running 1 (172d ago) 173d

coredns-c676cc86f-z9cwm 1/1 Running 1 (172d ago) 173d

etcd-k8s-master 1/1 Running 3 (172d ago) 173d

kube-apiserver-k8s-master 1/1 Running 3 (172d ago) 173d

kube-controller-manager-k8s-master 1/1 Running 3 (172d ago) 173d

kube-proxy-8rns9 1/1 Running 3 (172d ago) 173d

kube-proxy-mx2h2 1/1 Running 1 (172d ago) 172d

kube-proxy-p49nr 1/1 Running 1 (172d ago) 172d

kube-scheduler-k8s-master 1/1 Running 3 (172d ago) 173d

//Show detailed pod information

//-o wide shows the pod IP and the node each pod was placed on; kube-proxy provides the load balancing across pods

[root@k8s-node1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-76d6c9b8c-6vnnf 1/1 Running 1 (10m ago) 39m 10.244.2.3 k8s-node2 <none> <none>

//List the controllers; this shows the pods each controller manages

[root@k8s-node1 ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 44m

// AVAILABLE: number of replicas that are available

// UP-TO-DATE: number of replicas updated to the latest state

create command

[root@k8s-master ~]# kubectl create deployment test1 --image busybox

deployment.apps/test1 created

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-76d6c9b8c-6vnnf 1/1 Running 2 (157m ago) 6h54m

test1-5b6fc8994b-7fdf4 0/1 ContainerCreating 0 13s

[root@k8s-master ~]#

run command

[root@k8s-master ~]# kubectl run nginx --image nginx

pod/nginx created //starts a pod named nginx

[root@k8s-master ~]# kubectl delete pods nginx

pod "nginx" deleted //deletes the nginx pod

delete command

//Delete a pod directly by name (the method for pods that have no controller)

[root@k8s-master ~]# kubectl delete pod nginx-76d6c9b8c-pgwzj

pod "nginx-76d6c9b8c-pgwzj" deleted

//For controller-managed pods, use the following method instead

//Deleting the controller also deletes the pods under it automatically

[root@k8s-master ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 173d

test 1/1 1 1 8m15s

//Delete the controller

[root@k8s-master ~]# kubectl delete deployment test

deployment.apps "test" deleted

[root@k8s-master ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 173d

expose command

[root@k8s-master ~]# kubectl create deployment web --image busybox //create a deployment named web

deployment.apps/web created

[root@k8s-master ~]# kubectl expose deployment web --port 8080 --target-port 80 //expose it as a Service: service port 8080, container port 80

service/web exposed

[root@k8s-master ~]# kubectl get svc //list all services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

nginx NodePort 10.98.79.60 <none> 80:30274/TCP 7h1m

web ClusterIP 10.105.214.159 <none> 8080/TCP 48s

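10. Resource management approaches

There are two kinds of pods:

Standalone pods: if the node goes down, the pod is gone

Controller-managed pods: if the pod dies, the controller starts a new one

Service: if the node running a pod goes down, the pod is started again on another node, but its IP and port change, so it can no longer be found at the old address. Labels do not change, so a pod that carries a label can still be found through it

The pod's new information is reported to the Service automatically and matched by label; a Service therefore acts as a load balancer in front of a group of pods

Imperative object management: operate on Kubernetes resources directly with commands

kubectl run nginx-pod --image=nginx:1.17.1 --port=80

Imperative object configuration: operate on Kubernetes resources with commands plus configuration files

//create/patch: create a resource or patch an existing one

kubectl create/patch -f nginx-pod.yaml

Declarative object configuration: operate on Kubernetes resources with the apply command plus configuration files

kubectl apply -f nginx-pod.yaml

| Type | Operates on | Suitable for | Advantages | Disadvantages |
|---|---|---|---|---|
| Imperative object management | Objects | Testing | Simple | Works only on live objects; cannot be audited or tracked |
| Imperative object configuration | Files | Development | Can be audited and tracked | On large projects there are many configuration files, which makes operation cumbersome |
| Declarative object configuration | Directories | Development | Supports operating on whole directories | Hard to debug when something unexpected happens |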

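Imperative object management

The kubectl command

kubectl is the command-line tool for a Kubernetes cluster. It can manage the cluster itself and install and deploy containerized applications on it. The syntax of the kubectl command is:

kubectl [command] [type] [name] [flags]

command: the operation to perform on the resource, for example create, get, delete

type: the resource type, for example deployment, pod, service

name: the name of the resource; names are case-sensitive

flags: additional optional parameters

# List all pods

kubectl get pod

# Show a specific pod

kubectl get pod pod_name

# Show a specific pod, with the result in YAML format

kubectl get pod pod_name -o yaml

Resource types

Everything in Kubernetes is abstracted as a resource. The available resources can be listed with:

kubectl api-resources

The SHORTNAMES column of its output shows which resource types can be abbreviated: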

[root@k8s-master ~]# kubectl api-resources

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

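Kubernetes allows many operations on resources; use --help to see the detailed list of commands

kubectl --help

The most commonly used resources are:

| Category | Resource | Short name | Purpose |
|---|---|---|---|
| Cluster-level resources | nodes | no | Components of the cluster |
| | namespaces | ns | Isolate Pods |
| Pod resources | pods | po | Hold containers |
| Pod controllers | replicationcontrollers | rc | Control pod resources |
| | replicasets | rs | Control pod resources |
| | deployments | deploy | Control pod resources |
| | daemonsets | ds | Control pod resources |
| | jobs | | Control pod resources |
| | cronjobs | cj | Control pod resources |
| | horizontalpodautoscalers | hpa | Control pod resources |
| | statefulsets | sts | Control pod resources |
| Service discovery resources | services | svc | Unified external interface for pods |
| | ingress | ing | Unified external interface for pods |
| Storage resources | volumeattachments | | Storage |
| | persistentvolumes | pv | Storage |
| | persistentvolumeclaims | pvc | Storage |
| Configuration resources | configmaps | cm | Configuration |
| | secrets | | Configuration |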

A simple demonstration using namespaces follows

//Create a namespace

[root@k8s-master ~]# kubectl create ns runtime

namespace/runtime created

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 173d

kube-flannel Active 173d

kube-node-lease Active 173d

kube-public Active 173d

kube-system Active 173d

runtime Active 4s

//Create a pod in the namespace

[root@k8s-master ~]# kubectl run pod --image=nginx:latest -n runtime

pod/pod created

//List the pods in the namespace

[root@k8s-master ~]# kubectl get pod -n runtime

NAME READY STATUS RESTARTS AGE

pod 1/1 Running 0 39s

//Delete the pod from the namespace

[root@k8s-master ~]# kubectl delete pod pod -n runtime

pod "pod" deleted

//Confirm that it has been deleted

[root@k8s-master ~]# kubectl get pods -n runtime

No resources found in runtime namespace.

10.1 Imperative object configuration

[root@k8s-master ~]# vi nginxpod.yml

apiVersion: v1

kind: Namespace

metadata:

  name: dev

---

apiVersion: v1

kind: Pod

metadata:

  name: nginxpod

  namespace: dev

spec:

  containers:

  - name: nginx-containers

    image: nginx:latest

//Run the create command to create the resources

[root@k8s-master ~]# kubectl create -f nginxpod.yml

namespace/dev created

pod/nginxpod created

//get command: view the resources

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 22h

dev Active 61s

kube-flannel Active 22h

kube-node-lease Active 22h

kube-public Active 22h

kube-system Active 22h

[root@k8s-master ~]# kubectl get ns default

NAME STATUS AGE

default Active 22h

View the resource objects defined in the file

[root@k8s-master manifest]# kubectl get -f nginxpod.yml

NAME STATUS AGE

namespace/dev Active 99s

NAME READY STATUS RESTARTS AGE

pod/nginxpod 0/1 Pending 0 99s

//delete command: delete the resources

[root@k8s-master manifest]# kubectl delete -f nginxpod.yml

namespace "dev" deleted

pod "nginxpod" deleted

[root@k8s-master manifest]# kubectl get ns

NAME STATUS AGE

default Active 22h

kube-flannel Active 22h

kube-node-lease Active 22h

kube-public Active 22h

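10.2 Declarative object configuration

Declarative object configuration is very similar to imperative object configuration, but it uses only one command: apply.

//Create the resources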

[root@k8s-master ~]# kubectl apply -f nginxpod.yml

namespace/dev created

pod/nginxpod created

//Running it a second time produces no error

[root@k8s-master ~]# kubectl apply -f nginxpod.yml

namespace/dev unchanged

pod/nginxpod unchanged

[root@k8s-master ~]# kubectl get -f nginxpod.yml

NAME STATUS AGE

namespace/dev Active 8s

NAME READY STATUS RESTARTS AGE

pod/nginxpod 0/1 Pending 0 8s

11. Using labels

[root@k8s-master ~]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 66m

[root@k8s-master ~]# kubectl describe pod nginx -n dev|grep -i label

Labels: <none>

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

mynginx 1/1 Running 0 2m29s

nginx 1/1 Running 0 72m

nginx1 1/1 Running 0 2m48s

nginxpod 1/1 Running 0 4m4s

[root@k8s-master ~]#

# Add labels to pods

[root@k8s-master ~]# kubectl label pod nginx -n dev app=nginx

pod/nginx labeled

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl label pod nginx1 -n dev app=nginx1

pod/nginx1 labeled

[root@k8s-master ~]# kubectl label pod nginxpod -n dev app=nginxpod

pod/nginxpod labeled

[root@k8s-master ~]# kubectl label pod mynginx -n dev app=mynginx

pod/mynginx labeled

[root@k8s-master ~]#

# Update a label on a pod

[root@k8s-master ~]# kubectl label pod nginx -n dev app=test

error: 'app' already has a value (nginx), and --overwrite is false

[root@k8s-master ~]# kubectl label pod nginx -n dev app=test --overwrite

pod/nginx labeled

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get pod nginx -n dev --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 74m app=nginx

[root@k8s-master ~]# kubectl get pod nginx -n dev --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 74m app=test

[root@k8s-master ~]#

# View labels

[root@k8s-master ~]# kubectl describe pod nginx -n dev|grep -i label

Labels: app=nginx

[root@k8s-master ~]# kubectl get pod nginx -n dev --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 71m app=nginx

[root@k8s-master ~]#

View the labels of all pods

[root@k8s-master ~]# kubectl get pods -n dev --show-labels

NAME READY STATUS RESTARTS AGE LABELS

mynginx 1/1 Running 0 7m10s app=mynginx

nginx 1/1 Running 0 76m app=test

nginx1 1/1 Running 0 7m29s app=nginx1

nginxpod 1/1 Running 0 8m45s app=nginxpod

[root@k8s-master ~]#

# Filter by label: kubectl get pods -n dev -l app=test --show-labels

[root@k8s-master ~]# kubectl get pods -n dev --show-labels

NAME READY STATUS RESTARTS AGE LABELS

mynginx 1/1 Running 0 7m48s app=mynginx

nginx 1/1 Running 0 77m app=test

nginx1 1/1 Running 0 8m7s app=nginx1

nginxpod 1/1 Running 0 9m23s app=nginxpod

Select the pods with a specific label

[root@k8s-master ~]# kubectl get pods -n dev -l app=test --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 78m app=test

[root@k8s-master ~]#

Select everything except the pods with that label

[root@k8s-master ~]# kubectl get pods -n dev -l app!=test --show-labels

NAME READY STATUS RESTARTS AGE LABELS

mynginx 1/1 Running 0 10m app=mynginx

nginx1 1/1 Running 0 10m app=nginx1

nginxpod 1/1 Running 0 11m app=nginxpod

[root@k8s-master ~]#

#Remove a label

[root@k8s-master ~]# kubectl label pod nginx1 -n dev app-

pod/nginx1 unlabeled

[root@k8s-master ~]# kubectl get pods -n dev --show-labels

NAME READY STATUS RESTARTS AGE LABELS

mynginx 1/1 Running 0 12m app=mynginx

nginx 1/1 Running 0 81m app=test

nginx1 1/1 Running 0 12m <none>

nginxpod 1/1 Running 0 13m app=nginxpod
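Labels do not have to be attached afterwards with kubectl label; they can also be declared in the manifest so that they exist from the moment the pod is created. The following sketch writes such a manifest to a scratch file. The pod name labeled-nginx and the tier: web label are hypothetical names chosen for illustration, not taken from the walkthrough.

```shell
# Write a hypothetical manifest to a scratch file; "labeled-nginx" and "tier: web" are illustrative names.
manifest="$(mktemp)"
cat > "$manifest" << 'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: labeled-nginx
  namespace: dev
  labels:
    app: test
    tier: web
spec:
  containers:
  - name: nginx
    image: nginx:latest
EOF

# Show the label block that would be stored on the pod.
grep -A 2 'labels:' "$manifest"
```

On the cluster, the file would be applied with `kubectl apply -f "$manifest"`, and a comma-separated selector such as `kubectl get pods -n dev -l app=test,tier=web --show-labels` matches only pods that carry both labels.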
