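I can't help complaining about the CSDN editor: this is the second time I have written this post. The first draft was almost finished when I accidentally went to edit another blog post and the unsaved draft was overwritten. Heartbreaking. CSDN, give me my two hours back~~~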

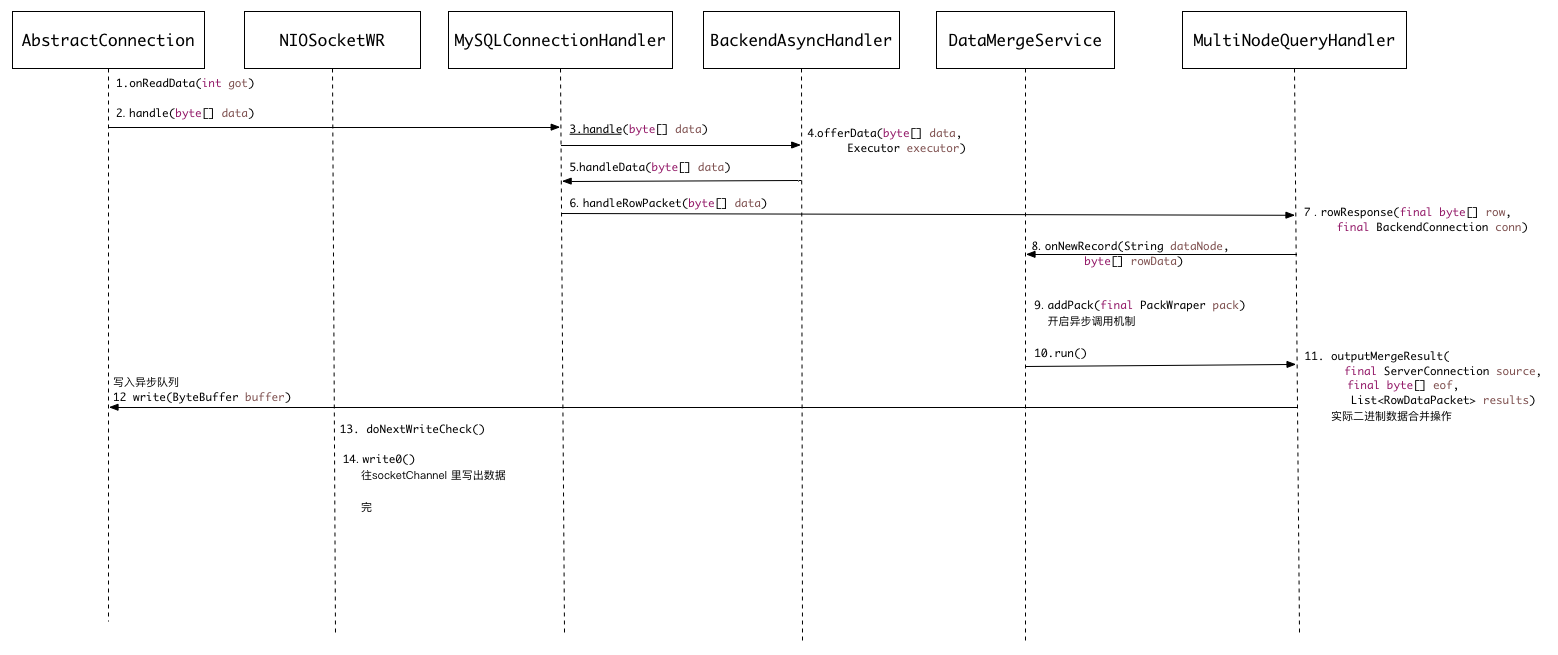

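The previous post covered Mycat's startup and how it handles front-end requests. This one continues with how response packets are processed and returned.

io.mycat.net.NIOSocketWR is the class that actually performs the reads and writes for both front-end and back-end connections. When the MySQL server sends data back, its asynRead() method is called, and it obtains the buffer needed for this read.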

@Override

public void asynRead() throws IOException {

ByteBuffer theBuffer = con.readBuffer;

if (theBuffer == null) {

theBuffer = con.processor.getBufferPool().allocate();

con.readBuffer = theBuffer;

}

int got = channel.read(theBuffer);

con.onReadData(got);

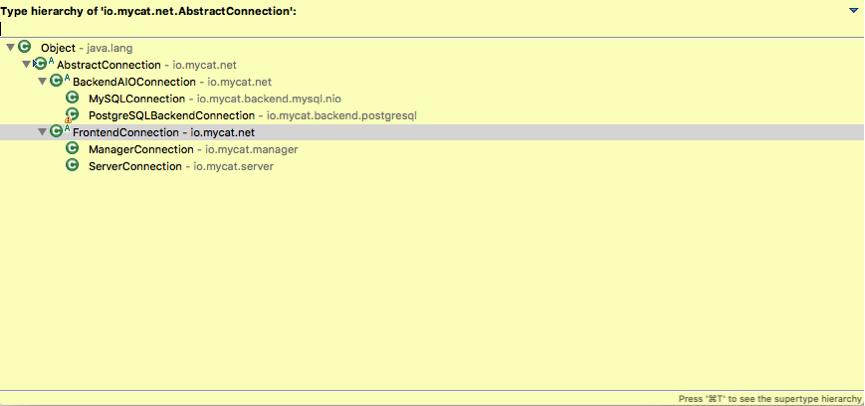

}其中io.mycat.net. AbstractConnection作为所有数据库链接的抽象父类,已经实现了onReadData()方法,用来从socketChannel中读取二进制字节流。循环加载数据后即调用指定的handle方法分析、拆解报文 。

```java
public void onReadData(int got) throws IOException {
    // ……
    if (position >= offset + length && readBuffer != null) {
        // handle this packet
        readBuffer.position(offset);
        byte[] data = new byte[length];
        readBuffer.get(data, 0, length);
        handle(data);

        // maybe handle stmt_close
        if (isClosed()) {
            return;
        }

        // offset to next position
        offset += length;

        // reached end
        if (position == offset) {
            // if the current buffer is a temporary non-direct byte buffer and
            // no large message was received in the last 30 seconds,
            // switch back to a direct buffer for performance
            if (readBuffer != null && !readBuffer.isDirect()
                    && lastLargeMessageTime < lastReadTime - 30 * 1000L) { // used temp heap
                if (LOGGER.isDebugEnabled()) {
                    LOGGER.debug("change to direct con read buffer ,cur temp buf size :" + readBuffer.capacity());
                }
                recycle(readBuffer);
                readBuffer = processor.getBufferPool().allocateConReadBuffer();
            } else {
                if (readBuffer != null)
                    readBuffer.clear();
            }
            // no more data, break
            readBufferOffset = 0;
            break;
    // ……
```
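The framing that onReadData relies on can be looked at in isolation. A MySQL packet starts with a 4-byte header: a 3-byte little-endian payload length followed by a 1-byte sequence id. The sketch below is not Mycat code; the names `PacketFramer`, `packetLength` and `split` are invented for illustration. It only shows how complete packets are cut out of a buffer and how a trailing partial packet is left for the next read.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Illustrative only: not a Mycat class. */
final class PacketFramer {
    static final int HEADER_SIZE = 4;

    /** Reads the 3-byte little-endian payload length and adds the header size. */
    static int packetLength(byte[] buf, int offset) {
        int payload = (buf[offset] & 0xff)
                | (buf[offset + 1] & 0xff) << 8
                | (buf[offset + 2] & 0xff) << 16;
        return payload + HEADER_SIZE;
    }

    /** Returns every complete packet in buf[0, limit); a trailing partial packet is skipped. */
    static List<byte[]> split(byte[] buf, int limit) {
        List<byte[]> packets = new ArrayList<>();
        int offset = 0;
        while (limit - offset >= HEADER_SIZE) {
            int length = packetLength(buf, offset);
            if (offset + length > limit) {
                break; // incomplete packet: wait for more data
            }
            packets.add(Arrays.copyOfRange(buf, offset, offset + length));
            offset += length;
        }
        return packets;
    }

    public static void main(String[] args) {
        byte[] stream = {
            1, 0, 0, 0, 0x00,        // packet with a 1-byte payload
            2, 0, 0, 1, 0x0a, 0x0b,  // packet with a 2-byte payload
            5, 0, 0, 2, 0x01         // header announces 5 bytes, only 1 has arrived
        };
        System.out.println(split(stream, stream.length).size()); // prints 2
    }
}
```

The real method works on a ByteBuffer and keeps the offset between reads instead of copying the whole stream, but the length arithmetic is the same idea.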

```java
@Override
public void handle(byte[] data) {
    if (isSupportCompress()) {
        List<byte[]> packs = CompressUtil.decompressMysqlPacket(data, decompressUnfinishedDataQueue);
        for (byte[] pack : packs) {
            if (pack.length != 0)
                handler.handle(pack);
        }
    } else {
        handler.handle(data);
    }
}
```

Since this data is returned by the MySQL back end, the handler used here is io.mycat.backend.mysql.nio.MySQLConnectionHandler.

The handleData(byte[] data) method of MySQLConnectionHandler is the actual entry point for processing.

```java
@Override
protected void handleData(byte[] data) {
    switch (resultStatus) {
    case RESULT_STATUS_INIT:
        switch (data[4]) {
        case OkPacket.FIELD_COUNT:
            handleOkPacket(data);
            break;
        case ErrorPacket.FIELD_COUNT:
            handleErrorPacket(data);
            break;
        case RequestFilePacket.FIELD_COUNT:
            handleRequestPacket(data);
            break;
        default:
            resultStatus = RESULT_STATUS_HEADER;
            header = data;
            fields = new ArrayList<byte[]>((int) ByteUtil.readLength(data, 4));
        }
        break;
    case RESULT_STATUS_HEADER:
        switch (data[4]) {
        case ErrorPacket.FIELD_COUNT:
            resultStatus = RESULT_STATUS_INIT;
            handleErrorPacket(data);
            break;
        case EOFPacket.FIELD_COUNT:
            resultStatus = RESULT_STATUS_FIELD_EOF;
            handleFieldEofPacket(data);
            break;
        default:
            fields.add(data);
        }
        break;
    case RESULT_STATUS_FIELD_EOF:
        switch (data[4]) {
        case ErrorPacket.FIELD_COUNT:
            resultStatus = RESULT_STATUS_INIT;
            handleErrorPacket(data);
            break;
        case EOFPacket.FIELD_COUNT:
            resultStatus = RESULT_STATUS_INIT;
            handleRowEofPacket(data);
            break;
        default:
            handleRowPacket(data);
        }
        break;
    default:
        throw new RuntimeException("unknown status!");
    }
}
```
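The three-state walk above is easier to see with the handlers replaced by event names. The following is a stripped-down sketch, not Mycat code: `ResultSetStateMachine`, `onPacket` and the event strings are invented, the marker values are the classic MySQL protocol ones (0x00 OK, 0xFF error, 0xFE EOF), and the LOCAL INFILE request case is left out for brevity.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: mirrors the INIT -> HEADER -> FIELD_EOF -> INIT walk. */
final class ResultSetStateMachine {
    enum State { INIT, HEADER, FIELD_EOF }

    // First payload byte (index 4, right after the 4-byte packet header).
    static final byte OK = 0x00;
    static final byte ERROR = (byte) 0xff;
    static final byte EOF = (byte) 0xfe;

    private State state = State.INIT;
    final List<String> events = new ArrayList<>();

    void onPacket(byte[] data) {
        byte marker = data[4];
        switch (state) {
            case INIT:
                if (marker == OK) {
                    events.add("ok");
                } else if (marker == ERROR) {
                    events.add("error");
                } else {
                    state = State.HEADER; // result-set header carrying the column count
                    events.add("header");
                }
                break;
            case HEADER:
                if (marker == ERROR) {
                    state = State.INIT;
                    events.add("error");
                } else if (marker == EOF) {
                    state = State.FIELD_EOF; // end of column definitions
                    events.add("fieldEof");
                } else {
                    events.add("field");
                }
                break;
            case FIELD_EOF:
                if (marker == ERROR) {
                    state = State.INIT;
                    events.add("error");
                } else if (marker == EOF) {
                    state = State.INIT; // end of rows, ready for the next response
                    events.add("rowEof");
                } else {
                    events.add("row");
                }
                break;
        }
    }

    /** Builds a minimal 5-byte packet whose first payload byte is the given marker. */
    static byte[] packet(int marker) {
        return new byte[] {1, 0, 0, 0, (byte) marker};
    }

    public static void main(String[] args) {
        ResultSetStateMachine sm = new ResultSetStateMachine();
        for (int m : new int[] {0x01, 0x03, 0xfe, 0x02, 0x02, 0xfe}) {
            sm.onPacket(packet(m));
        }
        System.out.println(sm.events); // [header, field, fieldEof, row, row, rowEof]
    }
}
```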

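```java
/**
 * Handles an OK packet.
 */
private void handleOkPacket(byte[] data) {
    ResponseHandler respHand = responseHandler;
    if (respHand != null) {
        respHand.okResponse(data, source);
    }
}
```

handleOkPacket makes one more choice: it hands the packet to whichever ResponseHandler is attached. Returning data from a single data node is fairly simple, so let's look at the processing logic for multiple data nodes.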

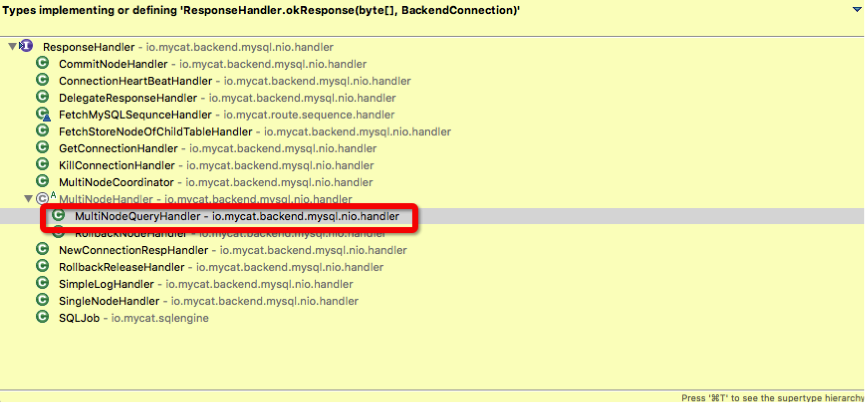

io.mycat.backend.mysql.nio.handler.MultiNodeQueryHandler

```java
@Override
public void rowResponse(final byte[] row, final BackendConnection conn) {
    if (errorRepsponsed.get()) {
        // the connection has been closed or set to "txInterrupt" properly
        // in tryErrorFinished() method! If we close it here, it can
        // lead to tx error such as blocking rollback tx for ever.
        // @author Uncle-pan
        // @since 2016-03-25
        //conn.close(error);
        return;
    }
    lock.lock();
    try {
        RouteResultsetNode rNode = (RouteResultsetNode) conn.getAttachment();
        String dataNode = rNode.getName();
        if (dataMergeSvr != null) {
            // even through discarding the all rest data, we can't
            // close the connection for tx control such as rollback or commit.
            // So the "isClosedByDiscard" variable is unnecessary.
            // @author Uncle-pan
            // @since 2016-03-25
            dataMergeSvr.onNewRecord(dataNode, row);
        } else {
            // cache primaryKey-> dataNode
            if (primaryKeyIndex != -1) {
                RowDataPacket rowDataPkg = new RowDataPacket(fieldCount);
                rowDataPkg.read(row);
                String primaryKey = new String(
                        rowDataPkg.fieldValues.get(primaryKeyIndex));
                LayerCachePool pool = MycatServer.getInstance()
                        .getRouterservice().getTableId2DataNodeCache();
                pool.putIfAbsent(priamaryKeyTable, primaryKey, dataNode);
            }
            row[3] = ++packetId;
            session.getSource().write(row);
        }
    } catch (Exception e) {
        handleDataProcessException(e);
    } finally {
        lock.unlock();
    }
}
```
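One small detail in the non-merge branch above is `row[3] = ++packetId;`. Byte 3 of a MySQL packet header is the sequence id, and each back end numbers its own response independently, so rows forwarded from several nodes need to be renumbered into one continuous sequence for the client. A minimal sketch of that idea, with the hypothetical names `PacketResequencer` and `resequence` (not Mycat classes):

```java
/** Illustrative only: not a Mycat class. */
final class PacketResequencer {
    private byte packetId;

    /** @param lastIdSentToClient sequence id of the last packet already written to the client */
    PacketResequencer(byte lastIdSentToClient) {
        this.packetId = lastIdSentToClient;
    }

    /** Overwrites the sequence-id byte (header index 3) in place and returns the packet. */
    byte[] resequence(byte[] packet) {
        packet[3] = ++packetId;
        return packet;
    }

    public static void main(String[] args) {
        PacketResequencer out = new PacketResequencer((byte) 3);
        // Two rows from two different nodes; both back ends numbered their row 5.
        byte[] fromDn1 = {1, 0, 0, 5, 0x41};
        byte[] fromDn2 = {1, 0, 0, 5, 0x42};
        out.resequence(fromDn1);
        out.resequence(fromDn2);
        System.out.println(fromDn1[3] + " " + fromDn2[3]); // prints 4 5
    }
}
```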

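The dataMergeSvr in the code above is the most important class when multiple data nodes are involved: it merges their data. Let's look at how Mycat actually performs the merge.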

```java
/**
 * process new record (mysql binary data); if data can output to client,
 * return true
 *
 * @param dataNode
 *            DN's name (data from this dataNode)
 * @param rowData
 *            raw data
 * @param conn
 */
public boolean onNewRecord(String dataNode, byte[] rowData) {
    // For data that needs sorting: rows arrive from MySQL already ordered,
    // so once a node's current row can no longer enter the heap,
    // none of its later rows will enter the heap either.
    if (canDiscard.size() == rrs.getNodes().length) {
        // "END_FLAG" only should be added by MultiNodeHandler.rowEofResponse()
        // @author Uncle-pan
        // @since 2016-03-23
        //LOGGER.info("now we output to client");
        //addPack(END_FLAG_PACK);
        return true;
    }
    if (canDiscard.get(dataNode) != null) {
        return true;
    }
    final PackWraper data = new PackWraper();
    data.node = dataNode;
    data.data = rowData;
    addPack(data);
    return false;
}
```
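The comment at the top of onNewRecord explains why a whole node can be discarded. The post does not show Mycat's sorter, so the sketch below substitutes a plain bounded max-heap for an ascending `ORDER BY ... LIMIT n`; `TopNMerger` and its methods are invented names, and the discard decision is made synchronously here, whereas Mycat takes it later on the worker thread. Each node sends rows in ascending order, so once one of its rows fails to enter the heap, every later row from that node would fail too.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.PriorityQueue;
import java.util.Set;

/** Illustrative only: top-N merge over per-node sorted input, with node-level discard. */
final class TopNMerger {
    private final int limit;
    // Max-heap: the head is the worst row currently kept.
    private final PriorityQueue<Integer> heap = new PriorityQueue<>(Collections.reverseOrder());
    private final Set<String> canDiscard = new HashSet<>();

    TopNMerger(int limit) {
        if (limit <= 0) {
            throw new IllegalArgumentException("limit must be positive");
        }
        this.limit = limit;
    }

    /** Returns true when the row was dropped without being looked at. */
    boolean onNewRecord(String node, int value) {
        if (canDiscard.contains(node)) {
            return true;
        }
        if (!addRow(value)) {
            canDiscard.add(node); // sorted input: later rows from this node are no better
        }
        return false;
    }

    private boolean addRow(int value) {
        if (heap.size() < limit) {
            heap.add(value);
            return true;
        }
        if (value >= heap.peek()) {
            return false; // not better than the worst kept row
        }
        heap.poll();
        heap.add(value);
        return true;
    }

    List<Integer> result() {
        List<Integer> sorted = new ArrayList<>(heap);
        Collections.sort(sorted);
        return sorted;
    }

    public static void main(String[] args) {
        TopNMerger merger = new TopNMerger(3);
        merger.onNewRecord("dn1", 1);
        merger.onNewRecord("dn2", 2);
        merger.onNewRecord("dn1", 4);
        merger.onNewRecord("dn2", 3); // replaces 4
        merger.onNewRecord("dn1", 9); // rejected, dn1 becomes discardable
        System.out.println(merger.onNewRecord("dn1", 10)); // prints true
        System.out.println(merger.result());               // prints [1, 2, 3]
    }
}
```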

```java
/**
 * Add a row pack, and may be wake up a business thread to work if not running.
 * @param pack row pack
 * @return true wake up a business thread, otherwise false
 *
 * @author Uncle-pan
 * @since 2016-03-23
 */
private final boolean addPack(final PackWraper pack) {
    packs.add(pack);
    if (running.get()) {
        return false;
    }
    final MycatServer server = MycatServer.getInstance();
    server.getBusinessExecutor().execute(this);
    return true;
}
```

Here we can see that DataMergeService is itself another multi-threaded processing mechanism: rows are queued, and a business thread is woken up to consume them.
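The "enqueue, then wake a worker only if none is running" pattern behind addPack can be sketched on its own. This is a simplified stand-in rather than Mycat's code: `WakeOnAddWorker`, the `processed` counter and the re-check at the end of `run()` are assumptions made for the example, and a plain `ExecutorService` replaces Mycat's business executor.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

/** Illustrative only: not a Mycat class. */
final class WakeOnAddWorker implements Runnable {
    private final Queue<String> packs = new ConcurrentLinkedQueue<>();
    private final AtomicBoolean running = new AtomicBoolean(false);
    private final ExecutorService executor;
    final AtomicInteger processed = new AtomicInteger();

    WakeOnAddWorker(ExecutorService executor) {
        this.executor = executor;
    }

    /** Enqueues a pack; returns true if this call scheduled the worker. */
    boolean addPack(String pack) {
        packs.add(pack);
        if (running.get()) {
            return false; // a worker is already draining the queue
        }
        executor.execute(this);
        return true;
    }

    @Override
    public void run() {
        // Only one thread drains at a time; a redundant wake-up returns immediately.
        if (!running.compareAndSet(false, true)) {
            return;
        }
        try {
            while (packs.poll() != null) {
                processed.incrementAndGet(); // stand-in for the merge work
            }
        } finally {
            running.set(false);
        }
        // A producer may have enqueued after the last poll but before the flag was
        // cleared, and skipped the wake-up. Re-check so that pack is not stranded.
        if (!packs.isEmpty()) {
            executor.execute(this);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        WakeOnAddWorker worker = new WakeOnAddWorker(pool);
        for (int i = 0; i < 1000; i++) {
            worker.addPack("row-" + i);
        }
        long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
        while (worker.processed.get() < 1000 && System.nanoTime() < deadline) {
            Thread.sleep(1);
        }
        pool.shutdown();
        System.out.println(worker.processed.get());
    }
}
```

The point of the flag is that the I/O thread receiving rows never blocks on the merge work; it pays only for a queue insert and, occasionally, one task submission.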

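In the run method, the worker drains the queue until it reaches the final, end-flag pack, and then writes the merged result into the front-end connection's write buffer queue through outputMergeResult.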

```java
// loop-on-packs
for (; ; ) {
    final PackWraper pack = packs.poll();
    // async: handling row pack queue, this business thread should exit when no pack
    // @author Uncle-pan
    // @since 2016-03-23
    if (pack == null) {
        nulpack = true;
        break;
    }
    // eof: handling eof pack and exit
    if (pack == END_FLAG_PACK) {
        final int warningCount = 0;
        final EOFPacket eofp = new EOFPacket();
        final ByteBuffer eof = ByteBuffer.allocate(9);
        BufferUtil.writeUB3(eof, eofp.calcPacketSize());
        eof.put(eofp.packetId);
        eof.put(eofp.fieldCount);
        BufferUtil.writeUB2(eof, warningCount);
        BufferUtil.writeUB2(eof, eofp.status);
        final ServerConnection source = multiQueryHandler.getSession().getSource();
        final byte[] array = eof.array();
        multiQueryHandler.outputMergeResult(source, array, getResults(array));
        break;
    }
    // merge: sort-or-group, or simple add
    final RowDataPacket row = new RowDataPacket(fieldCount);
    row.read(pack.data);
    if (grouper != null) {
        grouper.addRow(row);
    } else if (sorter != null) {
        if (!sorter.addRow(row)) {
            canDiscard.put(pack.node, true);
        }
    } else {
        result.add(row);
    }
} // rof
```

io.mycat.backend.mysql.nio.handler.MultiNodeQueryHandler then calls the connection's top-level write method, and the data is written out to the socket channel asynchronously.

```java
public void outputMergeResult(final ServerConnection source,
        final byte[] eof, List<RowDataPacket> results) {
    try {
        lock.lock();
        ByteBuffer buffer = session.getSource().allocate();
        final RouteResultset rrs = this.dataMergeSvr.getRrs();

        // handle the LIMIT clause
        int start = rrs.getLimitStart();
        int end = start + rrs.getLimitSize();
        if (start < 0)
            start = 0;
        if (rrs.getLimitSize() < 0)
            end = results.size();
        if (end > results.size())
            end = results.size();

        for (int i = start; i < end; i++) {
            RowDataPacket row = results.get(i);
            if (prepared) {
                BinaryRowDataPacket binRowDataPk = new BinaryRowDataPacket();
                binRowDataPk.read(fieldPackets, row);
                binRowDataPk.packetId = ++packetId;
                binRowDataPk.write(source);
            } else {
                row.packetId = ++packetId;
                buffer = row.write(buffer, source, true);
            }
        }

        eof[3] = ++packetId;
        if (LOGGER.isDebugEnabled()) {
            LOGGER.debug("last packet id:" + packetId);
        }
        source.write(source.writeToBuffer(eof, buffer));
    } catch (Exception e) {
        handleDataProcessException(e);
    } finally {
        lock.unlock();
        dataMergeSvr.clear();
    }
}
```

io.mycat.net.AbstractConnection

```java
@Override
public final void write(ByteBuffer buffer) {
    if (isSupportCompress()) {
        ByteBuffer newBuffer = CompressUtil.compressMysqlPacket(buffer, this, compressUnfinishedDataQueue);
        writeQueue.offer(newBuffer);
    } else {
        writeQueue.offer(buffer);
    }

    // if the async write-finished event got the lock before me, the writing
    // flag is set to false but no write request has been started,
    // so we check again
    try {
        this.socketWR.doNextWriteCheck();
    } catch (Exception e) {
        LOGGER.warn("write err:", e);
        this.close("write err:" + e);
    }
}
```

That is roughly the whole flow: Mycat fetches data from multiple back-end databases, merges it into a single response, and returns that response to the client. A diagram makes the flow easier to follow.

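That concludes a very coarse-grained pass over Mycat's basic operating principles. Mycat can be unstable in some extreme situations, and some code details could still be optimized, but none of that changes the fact that it is the best open-source project I have seen come out of China's grassroots developer community, and it has great practical value. Sincere praise.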
