源数据

需求,把两个表合一块,把pid拿名字替换

流程分析 首先是两种数据格式不一样的数据,但是有相同的pid,就可以根据它为key分区,然后在reduce写逻辑代码进行合并.

bean对象

package com.buba.mapreduce.table;

import org.apache.hadoop.io.Writable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class TableBean implements Writable {

private String order_id; //订单id

private String p_id; //产品id

private int amout; //产品数量

private String pname; //产品名称

private String flag; //表的标记 order为0 pd为1

public TableBean() {

}

public TableBean(String order_id, String p_id, int amout, String pname, String flag) {

this.order_id = order_id;

this.p_id = p_id;

this.amout = amout;

this.pname = pname;

this.flag = flag;

}

@Override

public String toString() {

return this.order_id + "\t" + this.pname + "\t" + amout;

}

public String getOrder_id() {

return order_id;

}

public void setOrder_id(String order_id) {

this.order_id = order_id;

}

public String getP_id() {

return p_id;

}

public void setP_id(String p_id) {

this.p_id = p_id;

}

public int getAmout() {

return amout;

}

public void setAmout(int amout) {

this.amout = amout;

}

public String getPname() {

return pname;

}

public void setPname(String pname) {

this.pname = pname;

}

public String getFlag() {

return flag;

}

public void setFlag(String flag) {

this.flag = flag;

}

@Override

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeUTF(order_id);

dataOutput.writeUTF(p_id);

dataOutput.writeInt(amout);

dataOutput.writeUTF(pname);

dataOutput.writeUTF(flag);

}

@Override

public void readFields(DataInput dataInput) throws IOException {

this.order_id = dataInput.readUTF();

this.p_id = dataInput.readUTF();

this.amout = dataInput.readInt();

this.pname = dataInput.readUTF();

this.flag = dataInput.readUTF();

}

}

mapper

package com.buba.mapreduce.table;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

public class TableMapper extends Mapper<LongWritable, Text,Text,TableBean> {

TableBean bean = new TableBean();

Text k = new Text();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//1.获取文件类型

FileSplit inputSplit = (FileSplit)context.getInputSplit();

//2.获取切片路径 获取切片文件名称

String name = inputSplit.getPath().getName();

//3.获取输入数据

String line = value.toString();

//4.不同文件不同处理

if(name.startsWith("order")){//订单相关信息处理

//切割

String[] fields = line.split("\t");

//封装bean对象 1001 01 1

bean.setOrder_id(fields[0]);

bean.setP_id(fields[1]);

bean.setAmout(Integer.parseInt(fields[2]));

//没值的不能为null必须赋值 不然会报空指针,因为bean对象序列化了

bean.setPname("");

//以0标记为order表

bean.setFlag("0");

k.set(fields[1]);

}else{//产品表信息处理 01 小米

//切割

String[] fields = line.split("\t");

bean.setOrder_id("");

bean.setP_id(fields[0]);

bean.setAmout(0);

//没值的不能为null必须赋值

bean.setPname(fields[1]);

//以0标记为order表

bean.setFlag("1");

k.set(fields[0]);

}

context.write(k,bean);

}

}

reducer

package com.buba.mapreduce.table;

import org.apache.commons.beanutils.BeanUtils;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

import java.lang.reflect.InvocationTargetException;

import java.util.ArrayList;

public class TableReducer extends Reducer<Text,TableBean,TableBean, NullWritable> {

@Override

protected void reduce(Text key, Iterable<TableBean> values, Context context) throws IOException, InterruptedException {

//准备存储数据的缓存

TableBean pdBean = new TableBean();

ArrayList<TableBean> orderBeans = new ArrayList<>();

//根据文件的不同分别处理

for(TableBean bean:values){

if("0".equals(bean.getFlag())){//order表

TableBean orBean = new TableBean();//使用bean工具类进行重新赋值拷贝,不然出来的数据不对

try {

BeanUtils.copyProperties(orBean,bean);

} catch (IllegalAccessException e) {

e.printStackTrace();

} catch (InvocationTargetException e) {

e.printStackTrace();

}

orderBeans.add(orBean);

}else{//产品表处理

try { //把bean的属性拷贝到pbBean让他俩没联系

BeanUtils.copyProperties(pdBean,bean);

} catch (IllegalAccessException e) {

e.printStackTrace();

} catch (InvocationTargetException e) {

e.printStackTrace();

}

}

}

//数据拼接

for(TableBean bean : orderBeans){

bean.setPname(pdBean.getPname());

//循环写出

context.write(bean,NullWritable.get());

}

}

}

driver

package com.buba.mapreduce.table;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class TableDriver {

public static void main(String[] args)throws Exception {

//1.获取job信息

Configuration configuration = new Configuration();

Job job = Job.getInstance(configuration);

//2.获取jar的存储路径

job.setJarByClass(TableDriver.class);

//3.关联map和reduce的class类

job.setMapperClass(TableMapper.class);

job.setReducerClass(TableReducer.class);

//4.设置map阶段输出key和value类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(TableBean.class);

//5.设置最后输入数据的key和value的类型

job.setOutputKeyClass(TableBean.class);

job.setOutputValueClass(NullWritable.class);

//6.设置输入数据的路径和输出数据的路径

FileInputFormat.setInputPaths(job,new Path(args[0]));

FileOutputFormat.setOutputPath(job,new Path(args[1]));

//7.提交

boolean b = job.waitForCompletion(true);

System.exit(b?0:1);

}

}

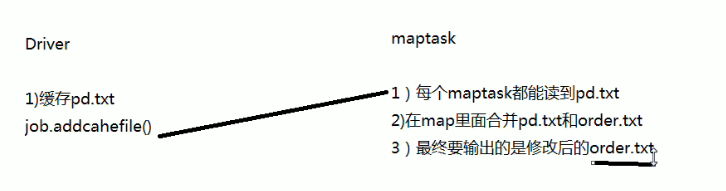

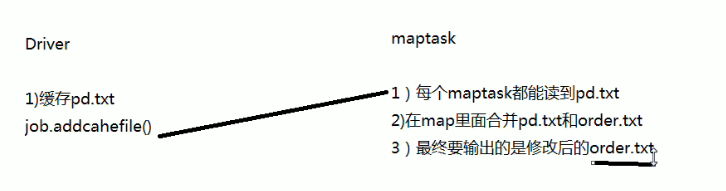

使用Distributedcache缓存文件方式完成需求

在driver里使用缓存文件缓存pd.txt,让每个maptask都可以读到pd.txt,然后在每个maptask里进行合并,然后输出.

上面这种做法,reduce端压力太大,而前面mapper又很清闲.有一种写法可以分担reduce的压力,pd.txt因为是商品表,一般都是定死的,没什么变化,所以可以给它在maptask阶段加载就可以了.

重写mapper

重写里面的setup方法.因为在mapper里面如果加载pd.txt的话每执行一次mapper都加载一次pd.txt很浪费资源.所以重写setup方法,再它里面进行加载,就加载一次了.

package com.buba.mapreduce.distributedcache;

import com.buba.mapreduce.table.TableBean;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.*;

import java.util.HashMap;

import java.util.Map;

public class DistributedMapper extends Mapper<LongWritable, Text,Text, NullWritable> {

//保存pd.txt数据 01 小米

private Map<String,String> pdMap = new HashMap<>();

@Override

protected void setup(Context context) throws IOException, InterruptedException {

//读取pd.txt文件,并把数据存储到缓存(集合) driver里已经加载这个文件了,直接写相对路径就可以获取到,写绝对磁盘路径也可以

BufferedReader reader = new BufferedReader(new InputStreamReader(new FileInputStream(new File("pd2.txt"))));

String line;

while (StringUtils.isNotEmpty(line = reader.readLine())){

//截取

String[] fields = line.split("\t");

//存储到缓存中去

pdMap.put(fields[0],fields[1]);

}

//关闭资源

reader.close();

}

Text k = new Text();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//需求:要合并pd.txt和order.txt里面的内容

//获取一行

String line = value.toString();

//截取 1001 01 1

String[] fields = line.split("\t");

//获取产品名称

String pdName = pdMap.get(fields[1]);

//拼接

k.set(fields[0] + "\t" + pdName + "\t" + fields[2]);

context.write(k,NullWritable.get());

}

}

重写driver,不需要reducer了因为合并的事在maptask阶段都干完了,只不过没排序,加上reducer也行,做一下排序输出就行了.

package com.buba.mapreduce.distributedcache;

import com.buba.mapreduce.table.TableDriver;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.net.URI;

public class DristributedDriver {

public static void main(String[] args)throws Exception {

//1.获取job信息

Configuration configuration = new Configuration();

Job job = Job.getInstance(configuration);

//2.获取jar的存储路径

job.setJarByClass(TableDriver.class);

//3.关联map和reduce的class类

job.setMapperClass(DistributedMapper.class);

// job.setReducerClass(TableReducer.class);

//4.设置map阶段输出key和value类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(NullWritable.class);

//5.设置最后输入数据的key和value的类型

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

//6.设置输入数据的路径和输出数据的路径

FileInputFormat.setInputPaths(job,new Path(args[0]));

FileOutputFormat.setOutputPath(job,new Path(args[1]));

//加载缓存数据

job.addCacheFile(new URI("file:/F:/pd2.txt"));

//不需要reduce阶段,所以设置为0

job.setNumReduceTasks(0);

//7.提交

boolean b = job.waitForCompletion(true);

System.exit(b?0:1);

}

}

执行的时候输入就放一个order就可以了,因为pd.txt已经提前加载了.

执行完一次后项目根目录下会生成缓存文件,它就是读取的这里的文件,如果pd.txt有变动的话,记得把这里的文件删掉,重新生成,不然一直读的是缓存.

522

522

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?