Update 2020/3/10: added a lightweight network from Huawei Noah's Ark Lab, published at CVPR 2020:

GhostNet: More Features from Cheap Operations

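I previously read through the main classic classification papers along this route: AlexNet, VGG, GoogLeNet v1, ResNet, DenseNet. The central finding was that very deep networks are hard to train, and it was not until Batch Normalization and ResNet appeared that the training problem for deep networks was solved. Because accuracy rises as networks get deeper, the networks that came after this fix are clearly better than the earlier ones. The bottleneck structure also keeps the parameter count under control, which reduces the risk of overfitting and stops the computation from getting out of hand.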
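To make the bottleneck point concrete, here is a rough weight count for a plain two-layer 3×3 block versus a 1×1 / 3×3 / 1×1 bottleneck. The channel width of 256 and the reduction factor of 4 are illustrative assumptions, biases and BatchNorm parameters are ignored, and the helper names are made up for this sketch.

```python
def conv_params(c_in, c_out, k):
    """Number of weights in a k x k convolution without bias."""
    return c_in * c_out * k * k


def plain_block_params(channels):
    """Two stacked 3x3 convolutions that keep the channel count."""
    return 2 * conv_params(channels, channels, 3)


def bottleneck_params(channels, reduction=4):
    """1x1 reduce -> 3x3 at reduced width -> 1x1 expand."""
    mid = channels // reduction
    return (conv_params(channels, mid, 1)
            + conv_params(mid, mid, 3)
            + conv_params(mid, channels, 1))


if __name__ == "__main__":
    channels = 256  # assumed width, for illustration only
    print("plain block:", plain_block_params(channels))
    print("bottleneck :", bottleneck_params(channels))
```

At this width the bottleneck needs well under a tenth of the weights of the plain block, which is why deep ResNets can add layers without the parameter count exploding.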

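After reading the papers I wanted some hands-on practice. Reproducing ImageNet results is hard, so I tried CIFAR-10 instead: I found a GitHub project to run, which also serves as a template for classification-task code. The MNIST example in the PyTorch examples would work too, but MNIST is too simple, so I picked the one below: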

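The quality is decent. It builds a number of classic networks for CIFAR-10 classification, mainly by changing a few parameters of the official PyTorch networks (the ImageNet versions expect 224×224 inputs, whereas the inputs here are 3×32×32).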
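Why the parameters need changing can be seen by tracing the feature-map size through a ResNet-style network. The sketch below assumes the ImageNet version starts with a 7×7 stride-2 convolution and a 3×3 stride-2 max-pool, and that the CIFAR version replaces both with a single 3×3 stride-1 convolution; that is a common adaptation, not something confirmed from the project's code. The layer lists and function names are illustrative.

```python
def conv_out(size, kernel, stride, padding):
    """Output width of a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, layers):
    """Spatial size after each (kernel, stride, padding) layer."""
    sizes = []
    for kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


# Assumed ImageNet-style layout: 7x7/2 stem, 3x3/2 max-pool, then four stages.
IMAGENET_STYLE = [(7, 2, 3), (3, 2, 1), (3, 1, 1), (3, 2, 1), (3, 2, 1), (3, 2, 1)]
# Assumed CIFAR-style layout: 3x3/1 stem, no max-pool, then the same four stages.
CIFAR_STYLE = [(3, 1, 1), (3, 1, 1), (3, 2, 1), (3, 2, 1), (3, 2, 1)]

if __name__ == "__main__":
    print("224 input, ImageNet layout:", trace(224, IMAGENET_STYLE))
    print(" 32 input, ImageNet layout:", trace(32, IMAGENET_STYLE))
    print(" 32 input, CIFAR layout   :", trace(32, CIFAR_STYLE))
```

With the unmodified layout a 32×32 image shrinks to a single pixel before the last stage has done any useful work, while the lighter stem leaves a 4×4 map for the final pooling layer.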

A little supplement

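I changed only a few places: the learning rate is now adjusted automatically instead of by hand (the change is very small), and I use torchsummary to check each model's parameter count. The project is actually tiny, with a single main file, yet it already has 1.7k stars on GitHub.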
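The automatic adjustment in the training script is a step schedule (`StepLR` with `step_size=70` and `gamma=0.1`). The sketch below reproduces that rule in plain Python to show which learning rate each epoch sees over a 300-epoch run; the function name is made up for illustration, and it assumes the scheduler is stepped once at the end of every epoch, as the script does.

```python
def step_lr(base_lr, epoch, step_size=70, gamma=0.1):
    """Learning rate used during `epoch` (0-indexed) under a step schedule."""
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    # Epochs just before and after each decay boundary in a 300-epoch run.
    for epoch in (0, 69, 70, 139, 140, 209, 210, 279, 280, 299):
        print(f"epoch {epoch:3d}: lr = {step_lr(0.1, epoch):.0e}")
```

With a base rate of 0.1 the rate drops by a factor of ten at epochs 70, 140, 210 and 280, so the last 20 epochs run at roughly 1e-5.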

PS: For VGG16, ResNet18 and ResNet50 I set 400 epochs. That took far too long for little gain in the later epochs, so all subsequent runs use 300 epochs; accuracy may be slightly affected.

Main code

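Only the main training script is shown here. The model definitions can all be written by following the source code in torchvision.models. It does not include PreActResNet or DPN, but PreActResNet is built almost exactly like ResNet, only as a "pre-activation" version, so the ResNet code is a good reference. I have not studied DPN, but after reading the architecture section of the paper it should not be hard to build with the ResNet code as a guide.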
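As an illustration of what "pre-activation" means, here is a minimal sketch of a pre-activation basic block: BatchNorm and ReLU come before each convolution, and the sum with the shortcut is returned without a final ReLU. This is written from the general idea rather than copied from the project, so the class name, argument names and the choice of a 1×1 projection shortcut are assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PreActBlock(nn.Module):
    """Pre-activation basic block: BN -> ReLU -> conv, applied twice."""

    def __init__(self, in_planes, planes, stride=1):
        super().__init__()
        self.bn1 = nn.BatchNorm2d(in_planes)
        self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3,
                               stride=1, padding=1, bias=False)
        # A 1x1 projection is only needed when the output shape changes.
        self.shortcut = None
        if stride != 1 or in_planes != planes:
            self.shortcut = nn.Conv2d(in_planes, planes, kernel_size=1,
                                      stride=stride, bias=False)

    def forward(self, x):
        out = F.relu(self.bn1(x))  # activation happens before the first conv
        shortcut = self.shortcut(out) if self.shortcut is not None else x
        out = self.conv1(out)
        out = self.conv2(F.relu(self.bn2(out)))
        return out + shortcut      # no ReLU after the addition


if __name__ == "__main__":
    block = PreActBlock(16, 32, stride=2)
    y = block(torch.randn(2, 16, 32, 32))
    print(y.shape)
```

A standard ResNet basic block uses the order conv -> BN -> ReLU and applies a ReLU after the addition; swapping that order is essentially the whole difference.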

```python
'''Train CIFAR10 with PyTorch.'''
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F
import torch.backends.cudnn as cudnn

import torchvision
import torchvision.transforms as transforms

import os
import argparse

from models import *
from utils import progress_bar


parser = argparse.ArgumentParser(description='PyTorch CIFAR10 Training')
parser.add_argument('--lr', default=0.1, type=float, help='learning rate')
parser.add_argument('--trainbs', default=128, type=int, help='trainloader batch size')
parser.add_argument('--testbs', default=100, type=int, help='testloader batch size')
parser.add_argument('--resume', '-r', action='store_true', help='resume from checkpoint')
args = parser.parse_args()

device = 'cuda' if torch.cuda.is_available() else 'cpu'
best_acc = 0  # best test accuracy
start_epoch = 0  # start from epoch 0 or last checkpoint epoch

# Data
print('==> Preparing data..')
transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

transform_test = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform_train)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=args.trainbs, shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform_test)
testloader = torch.utils.data.DataLoader(testset, batch_size=args.testbs, shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# Model
print('==> Building model..')
# net = VGG('VGG16')
# net = ResNet18()
net = PreActResNet18()
# net = GoogLeNet()
# net = DenseNet121()
# net = ResNeXt29_2x64d()
# net = ResNeXt29_32x4d()
# net = MobileNet()
# net = MobileNetV2()
# net = DPN92()
# net = ShuffleNetG2()
# net = SENet18()
# net = ShuffleNetV2(1)
# net = EfficientNetB0()

# Relies on each model class exposing a `name` attribute.
net_name = net.name
save_path = './checkpoint/{0}_ckpt.pth'.format(net.name)

net = net.to(device)
if device == 'cuda':
    net = torch.nn.DataParallel(net)
    cudnn.benchmark = True

if args.resume:
    # Load best checkpoint trained last time.
    print('==> Resuming from checkpoint..')
    assert os.path.isdir('checkpoint'), 'Error: no checkpoint directory found!'
    checkpoint = torch.load(save_path)
    net.load_state_dict(checkpoint['net'])
    best_acc = checkpoint['acc']
    start_epoch = checkpoint['epoch']

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=args.lr, momentum=0.9, weight_decay=5e-4)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=70, gamma=0.1)


# Training
def train(epoch):
    print('\nEpoch: %d' % epoch)
    net.train()
    train_loss = 0
    correct = 0
    total = 0
    for batch_idx, (inputs, targets) in enumerate(trainloader):
        inputs, targets = inputs.to(device), targets.to(device)
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, targets)
        loss.backward()
        optimizer.step()

        train_loss += loss.item()
        _, predicted = outputs.max(1)
        total += targets.size(0)
        correct += predicted.eq(targets).sum().item()

        progress_bar(batch_idx, len(trainloader), 'Loss: %.3f | Acc: %.3f%% (%d/%d)'
                     % (train_loss/(batch_idx+1), 100.*correct/total, correct, total))


def test(epoch):
    global best_acc
    net.eval()
    test_loss = 0
    correct = 0
    total = 0
    with torch.no_grad():
        for batch_idx, (inputs, targets) in enumerate(testloader):
            inputs, targets = inputs.to(device), targets.to(device)
            outputs = net(inputs)
            loss = criterion(outputs, targets)

            test_loss += loss.item()
            _, predicted = outputs.max(1)
            total += targets.size(0)
            correct += predicted.eq(targets).sum().item()

            progress_bar(batch_idx, len(testloader), 'Loss: %.3f | Acc: %.3f%% (%d/%d)'
                         % (test_loss/(batch_idx+1), 100.*correct/total, correct, total))

    # Save checkpoint.
    acc = 100.*correct/total
    if acc > best_acc:
        print('Saving ' + net_name + ' ..')
        state = {
            'net': net.state_dict(),
            'acc': acc,
            'epoch': epoch,
        }
        if not os.path.isdir('checkpoint'):
            os.mkdir('checkpoint')
        torch.save(state, save_path)
        best_acc = acc


for epoch in range(start_epoch, start_epoch+300):
    # In PyTorch 1.1.0 and later,
    # you should call them in the opposite order:
    # `optimizer.step()` before `lr_scheduler.step()`
    train(epoch)
    test(epoch)
    scheduler.step()  # multiply the learning rate by 0.1 every 70 epochs (step_size=70)

print("\nTesting best accuracy:", best_acc)
```

Accuracy

Note: Total params, Estimated Total Size (MB), Trainable params and Params size (MB) below all refer to the networks as modified for CIFAR-10, not the original ImageNet models. The batch size is 128 throughout; adjust it to suit your hardware.

| Model | My Acc. | Total params | Estimated Total Size (MB) | Trainable params | Params size (MB) | Saved model size (MB) | GPU memory usage(MB) |

|---|---|---|---|---|---|---|---|

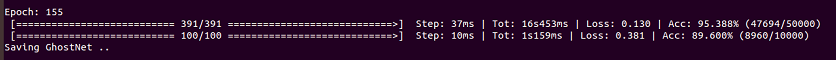

| GhostNet | 89.60% | 449,062 | 8.49 | 449,062 | 1.71 | 1.82 | 847 |

| MobileNetV2 | 92.64% | 2,296,922 | 36.14 | 2,296,922 | 8.76 | 8.96 | 3107 |

| VGG16 | 94.27% | 14,728,266 | 62.77 | 14,728,266 | 56.18 | 59.0 | 1229 |

| PreActResNet18 | 94.70% | 11,171,146 | 53.38 | 11,171,146 | 42.61 | 44.7 | 1665 |

| ResNeXt29(2x64d) | 95.09% | 9,128,778 | 99.84 | 9,128,778 | 34.82 | 36.7 | 5779 |

| ResNet50 | 95.22% | 23,520,842 | 155.86 | 23,520,842 | 89.72 | 94.4 | 5723 |

| DPN92 | 95.42% | 34,236,634 | 243.50 | 34,236,634 | 130.60 | 137.5 | 10535 |

| ResNeXt29(32x4d) | 95.49% | 4,774,218 | 83.22 | 4,774,218 | 18.21 | 19.2 | 5817 |

| DenseNet121 | 95.55% | 6,956,298 | 105.05 | 6,956,298 | 26.54 | 28.3 | 8203 |

| ResNet18 | 95.59% | 11,173,962 | 53.89 | 11,173,962 | 42.63 | 44.8 | 1615 |

| ResNet101 | 95.62% | 42,512,970 | 262.31 | 42,512,970 | 162.17 | 170.6 | 8857 |
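The Params size column can be checked by hand: the values are consistent with 4 bytes per parameter (float32) divided by 1024². The sketch below assumes that convention and uses a made-up helper name; the parameter counts are copied from the table.

```python
def params_size_mb(num_params, bytes_per_param=4):
    """Memory taken by the weights alone, in MB (1 MB = 1024 * 1024 bytes)."""
    return num_params * bytes_per_param / (1024 ** 2)


# Parameter counts copied from the table above.
TOTAL_PARAMS = {
    "GhostNet": 449_062,
    "MobileNetV2": 2_296_922,
    "VGG16": 14_728_266,
    "ResNet18": 11_173_962,
}

if __name__ == "__main__":
    for name, count in TOTAL_PARAMS.items():
        print(f"{name}: {params_size_mb(count):.2f} MB")
```

The saved checkpoint is slightly larger than this figure, presumably because the state dict also holds non-trainable buffers such as BatchNorm running statistics, plus serialization overhead.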

Screenshots as evidence, one per model:

GhostNet

MobileNetV2

VGG16

PreActResNet18

ResNeXt29(2x64d)

ResNet50

DPN92

ResNeXt29(32x4d)

DenseNet121

ResNet18

ResNet101

Pre-trained models

The saved models mentioned above are provided here, in case you want to test or modify them:

Baidu Drive

Google Drive

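PS:

With an initial learning rate of 0.1, PreActResNet18 failed to converge at the start of training, so the initial learning rate had to be set to 0.01.

GhostNet is not normally trained directly on CIFAR-10. Its parameter count is so small that its performance here is not particularly good; the Ghost module is more often used as a plug-and-play replacement for the convolutions in other CNNs. Settings: lr=0.4, weight_decay=4e-5.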
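To give a feel for why a Ghost module is so small, here is a rough weight count following the idea in the GhostNet paper: an ordinary convolution produces only 1/s of the output channels, and cheap d×d depthwise operations generate the rest. The channel sizes, `s=2` and `d=3` are illustrative assumptions, biases and BatchNorm are ignored, and the function names are made up for this sketch.

```python
def conv_params(c_in, c_out, k):
    """Weights in an ordinary k x k convolution without bias."""
    return c_in * c_out * k * k


def ghost_params(c_in, c_out, k=3, s=2, d=3):
    """Weights in a Ghost-style module with ratio s and d x d cheap ops."""
    if c_out % s != 0:
        raise ValueError("c_out must be divisible by s in this sketch")
    intrinsic = c_out // s                  # channels from the ordinary conv
    primary = conv_params(c_in, intrinsic, k)
    cheap = (s - 1) * intrinsic * d * d     # depthwise: one d x d filter per map
    return primary + cheap


if __name__ == "__main__":
    c_in, c_out = 64, 128  # assumed layer sizes, for illustration only
    full = conv_params(c_in, c_out, 3)
    ghost = ghost_params(c_in, c_out)
    print("ordinary conv:", full)
    print("ghost module :", ghost)
    print("ratio        : %.2f" % (full / ghost))
```

The ratio comes out close to s, which matches the approximate compression factor the paper derives for replacing a convolution with a Ghost module.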
