    [root@localhost ~]# ovs-ofctl dump-flows br0
     cookie=0x0, duration=706691.312s, table=0, n_packets=84180530, n_bytes=7456796909, priority=0 actions=NORMAL
     cookie=0x0, duration=706444.352s, table=1, n_packets=0, n_bytes=0, ip,nw_dst=192.168.2.210 actions=mod_dl_dst:2e:a9:be:9e:4d:07
    [root@localhost ~]# ovs-ofctl del-flows br0
    [root@localhost ~]# ovs-ofctl del-flows br0
    [root@localhost ~]# ovs-ofctl dump-flows br0
    [root@localhost ~]#

Virtual machine

    qemu-system-aarch64 -name vm2 -nographic \
        -enable-kvm -M virt,usb=off -cpu host -smp 2 \
        -global virtio-blk-device.scsi=off \
        -device virtio-scsi-device,id=scsi \
        -kernel vmlinuz-4.18 --append "console=ttyAMA0 root=UUID=6a09973e-e8fd-4a6d-a8c0-1deb9556f477" \
        -initrd initramfs-4.18 \
        -drive file=vhuser-test1.qcow2 \
        -m 2048M -numa node,memdev=mem -mem-prealloc \
        -object memory-backend-file,id=mem,size=2048M,mem-path=/dev/hugepages,share=on \
        -chardev socket,id=char1,path=$VHOST_SOCK_DIR/vhost-user1 \
        -netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce \
        -device virtio-net-pci,netdev=mynet1,mac=00:00:00:00:00:01,mrg_rxbuf=off

    [root@localhost ~]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether 00:00:00:00:00:01 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::200:ff:fe00:1/64 scope link
           valid_lft forever preferred_lft forever
    [root@localhost ~]# ip a add 10.10.103.229/24 dev eth0
    [root@localhost ~]# ping 10.10.103.81
    PING 10.10.103.81 (10.10.103.81) 56(84) bytes of data.
    64 bytes from 10.10.103.81: icmp_seq=1 ttl=64 time=0.374 ms
    64 bytes from 10.10.103.81: icmp_seq=2 ttl=64 time=0.144 ms

    --- 10.10.103.81 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1001ms
    rtt min/avg/max/mdev = 0.144/0.259/0.374/0.115 ms

Attaching gdb to ovs-vswitchd and breaking on dp_netdev_upcall shows the path taken by a packet that misses the datapath flow cache in the DPDK userspace datapath (type=DPIF_UC_MISS):

    (gdb) b dp_netdev_upcall
    Breakpoint 1 at 0x96c638: file lib/dpif-netdev.c, line 6434.
    (gdb) c
    Continuing.
    [Switching to Thread 0xfffd53ffd510 (LWP 117402)]

    Breakpoint 1, dp_netdev_upcall (pmd=pmd@entry=0x18f60810, packet_=packet_@entry=0x16e0f4b80, flow=flow@entry=0xfffd53ff48d8, wc=wc@entry=0xfffd53ff4b78, ufid=ufid@entry=0xfffd53ff4328, type=type@entry=DPIF_UC_MISS, userdata=userdata@entry=0x0,
        actions=actions@entry=0xfffd53ff4348, put_actions=0xfffd53ff4388) at lib/dpif-netdev.c:6434
    6434    {
    (gdb) bt
    #0  dp_netdev_upcall (pmd=pmd@entry=0x18f60810, packet_=packet_@entry=0x16e0f4b80, flow=flow@entry=0xfffd53ff48d8, wc=wc@entry=0xfffd53ff4b78, ufid=ufid@entry=0xfffd53ff4328, type=type@entry=DPIF_UC_MISS, userdata=userdata@entry=0x0,
        actions=actions@entry=0xfffd53ff4348, put_actions=0xfffd53ff4388) at lib/dpif-netdev.c:6434
    #1  0x0000000000976558 in handle_packet_upcall (put_actions=0xfffd53ff4388, actions=0xfffd53ff4348, key=0xfffd53ff9350, packet=0x16e0f4b80, pmd=0x18f60810) at lib/dpif-netdev.c:6823
    #2  fast_path_processing (pmd=pmd@entry=0x18f60810, packets_=packets_@entry=0xfffd53ffca80, keys=keys@entry=0xfffd53ff7d60, flow_map=flow_map@entry=0xfffd53ff5640, index_map=index_map@entry=0xfffd53ff5620 "", in_port=<optimized out>)
        at lib/dpif-netdev.c:6942
    #3  0x0000000000977f58 in dp_netdev_input__ (pmd=pmd@entry=0x18f60810, packets=packets@entry=0xfffd53ffca80, md_is_valid=md_is_valid@entry=false, port_no=port_no@entry=2) at lib/dpif-netdev.c:7031
    #4  0x0000000000978680 in dp_netdev_input (port_no=2, packets=0xfffd53ffca80, pmd=0x18f60810) at lib/dpif-netdev.c:7069
    #5  dp_netdev_process_rxq_port (pmd=pmd@entry=0x18f60810, rxq=0x1721f440, port_no=2) at lib/dpif-netdev.c:4480
    #6  0x0000000000978a24 in pmd_thread_main (f_=0x18f60810) at lib/dpif-netdev.c:5731
    #7  0x00000000009fc5dc in ovsthread_wrapper (aux_=<optimized out>) at lib/ovs-thread.c:383
    #8  0x0000ffffb19f7d38 in start_thread (arg=0xfffd53ffd510) at pthread_create.c:309
    #9  0x0000ffffb16df690 in thread_start () from /lib64/libc.so.6
    (gdb)

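OVS

Open vSwitch currently consists of three main components:

- ovsdb-server: OpenFlow itself was designed as a packet-processing pipeline and does not cover configuring the software switch, such as setting up QoS or associating an SDN controller. ovsdb-server complements Open vSwitch's OpenFlow implementation by acting as its configuration database, which stores Open vSwitch's persistent data.

- ovs-vswitchd: the user-space forwarding daemon. It receives the OpenFlow rules pushed down by the SDN controller and tells the OVS kernel module how to process network packets.

- OVS kernel module: the kernel-space forwarding component, which processes network packets as instructed by ovs-vswitchd.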

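Open vSwitch has a fast path and a slow path: ovs-vswitchd is the slow path and the OVS kernel module is the fast path. The OpenFlow rules are held in the slow path, but for fast forwarding, packets should be forwarded in the fast path whenever possible. Open vSwitch therefore forwards packets as described below. (With the DPDK setup used above, the fast path is the userspace dpif-netdev datapath running in ovs-vswitchd's PMD threads rather than the kernel module, which is why the backtrace runs from pmd_thread_main() to dp_netdev_upcall(); the division of work is the same.)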

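When the first packet of a connection is sent, the OVS kernel module receives it first. The kernel module does not yet know how to handle it, because all OpenFlow rules live in ovs-vswitchd, so by default it sends the packet up to ovs-vswitchd. ovs-vswitchd runs the packet through the OpenFlow pipeline and hands the processed packet back to the OVS kernel module. At the same time, ovs-vswitchd generates a sequence of datapath actions, which resemble OpenFlow actions but are simpler, and sends them to the kernel module as well. Because all packets of the same connection share the same header fields (IP addresses, MAC addresses, port numbers), the kernel module can apply these datapath actions directly to the connection's subsequent packets.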

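This approach solves the problem above. First, the kernel module does not need to care about changes to OpenFlow or about Open vSwitch code updates; all of that is handled in ovs-vswitchd. Second, only the first packet of a connection goes through the OpenFlow pipeline; the remaining packets are matched on their header fields in the kernel module and forwarded by applying the datapath actions directly. The OVS kernel module keeps the datapath actions in a cache. Earlier kernel module implementations used a cache called the microflow cache, which is a hash map: the key is the hash of all values OpenFlow might match on, including L2-L4 header data and other metadata such as in_port, and the value is the datapath actions. A hash map gives expected O(1) lookup time, which makes lookup and forwarding in the OVS kernel module efficient.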
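To make the microflow idea concrete, here is a minimal C sketch of an exact-match cache, assuming a toy key (in_port plus a few L2-L4 fields) and a toy slow path that derives an output port from the destination IP. The names mf_key, mf_process, and slow_path are hypothetical, not OVS code, and a real datapath caches a list of datapath actions rather than a single port. The table is direct-mapped: each hash selects exactly one slot, and a colliding flow simply overwrites it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical exact-match key: in_port plus a few L2-L4 fields. */
struct mf_key {
    uint32_t in_port;
    uint8_t  eth_src[6], eth_dst[6];
    uint32_t ip_src, ip_dst;
    uint16_t tp_src, tp_dst;
    uint8_t  ip_proto;
};

/* FNV-1a over n bytes, continuing from the running hash h. */
static uint32_t fnv1a(uint32_t h, const void *data, size_t n)
{
    const uint8_t *p = data;
    for (size_t i = 0; i < n; i++) {
        h ^= p[i];
        h *= 16777619u;
    }
    return h;
}

/* Hash field by field so struct padding never influences the result. */
static uint32_t mf_hash(const struct mf_key *k)
{
    uint32_t h = 2166136261u;
    h = fnv1a(h, &k->in_port, sizeof k->in_port);
    h = fnv1a(h, k->eth_src, sizeof k->eth_src);
    h = fnv1a(h, k->eth_dst, sizeof k->eth_dst);
    h = fnv1a(h, &k->ip_src, sizeof k->ip_src);
    h = fnv1a(h, &k->ip_dst, sizeof k->ip_dst);
    h = fnv1a(h, &k->tp_src, sizeof k->tp_src);
    h = fnv1a(h, &k->tp_dst, sizeof k->tp_dst);
    return fnv1a(h, &k->ip_proto, sizeof k->ip_proto);
}

static int mf_equal(const struct mf_key *a, const struct mf_key *b)
{
    return a->in_port == b->in_port &&
           memcmp(a->eth_src, b->eth_src, sizeof a->eth_src) == 0 &&
           memcmp(a->eth_dst, b->eth_dst, sizeof a->eth_dst) == 0 &&
           a->ip_src == b->ip_src && a->ip_dst == b->ip_dst &&
           a->tp_src == b->tp_src && a->tp_dst == b->tp_dst &&
           a->ip_proto == b->ip_proto;
}

#define MF_SLOTS 256   /* power of two, so hash & (MF_SLOTS - 1) picks a slot */

struct mf_entry {
    int used;
    struct mf_key key;
    uint32_t out_port; /* stands in for a list of datapath actions */
};

struct mf_cache {
    struct mf_entry slots[MF_SLOTS];
    unsigned upcalls;  /* packets that had to visit the slow path */
};

/* Hypothetical slow path: the OpenFlow pipeline would run here. */
static uint32_t slow_path(const struct mf_key *k)
{
    return k->ip_dst & 0xff;  /* toy decision: last octet of the dst IP */
}

/* Fast path: one hash, one slot, one compare. A miss "upcalls" and
 * installs the result, overwriting whatever occupied the slot. */
static uint32_t mf_process(struct mf_cache *c, const struct mf_key *k)
{
    struct mf_entry *e = &c->slots[mf_hash(k) & (MF_SLOTS - 1)];

    if (e->used && mf_equal(&e->key, k))
        return e->out_port;
    c->upcalls++;
    e->used = 1;
    e->key = *k;
    e->out_port = slow_path(k);
    return e->out_port;
}
```

Feeding the same key twice increments upcalls only once; changing any field (for example tp_src) makes the next packet miss and go to the slow path again.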

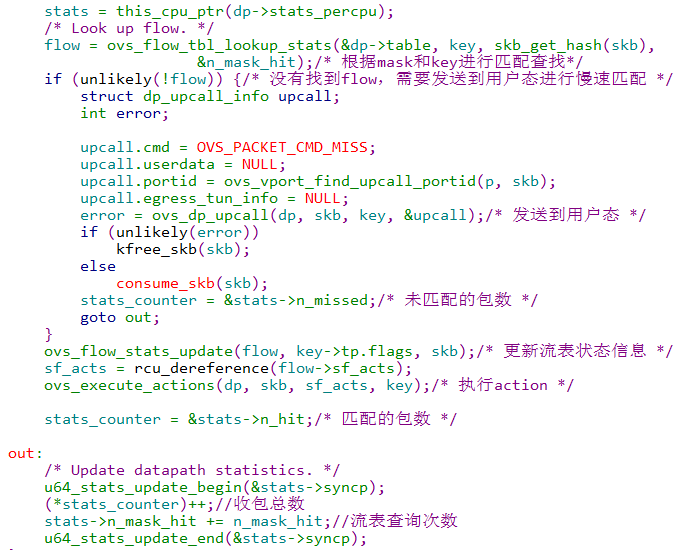

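Kernel packet reception

Received packets are handled by the callback registered by the vport, netdev_frame_hook(), which passes them through netdev_port_receive() to ovs_vport_receive(). ovs_flow_key_extract() then builds the flow key used for the subsequent flow-table lookup, and finally ovs_dp_process_packet() is called to perform the actual OVS packet processing. The code flow is as follows: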

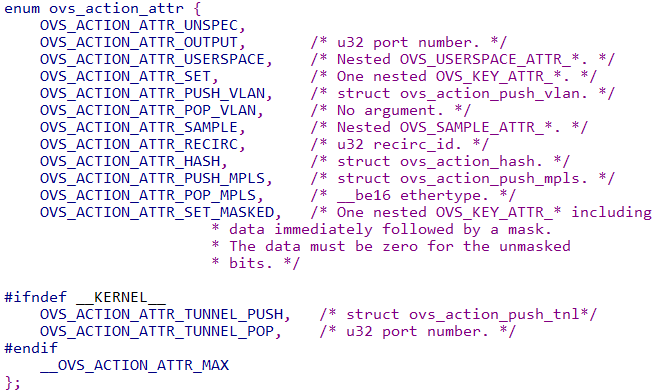
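As a rough illustration of what key extraction does, the sketch below parses an Ethernet/IPv4/TCP-or-UDP frame into a small key struct. It is a simplified stand-in for ovs_flow_key_extract(), not its implementation: struct pkt_key and extract_key() are hypothetical, only IPv4 is handled, and VLAN tags and IP fragments are ignored.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, heavily reduced flow key. The real struct sw_flow_key
 * also covers VLAN, IPv6, ARP, tunnel metadata and much more. */
struct pkt_key {
    uint32_t in_port;
    uint8_t  eth_dst[6], eth_src[6];
    uint16_t eth_type;              /* host byte order */
    uint32_t ip_src, ip_dst;        /* host byte order */
    uint8_t  ip_proto;
    uint16_t tp_src, tp_dst;        /* host byte order */
};

/* Big-endian (network order) reads. */
static uint16_t rd16(const uint8_t *p) { return (uint16_t)(p[0] << 8 | p[1]); }
static uint32_t rd32(const uint8_t *p)
{
    return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 |
           (uint32_t)p[2] << 8 | p[3];
}

/* Fills *k from a raw Ethernet frame. Returns 0 on success (non-IPv4
 * frames only get L2 fields) and -1 if a header is truncated. */
static int extract_key(const uint8_t *pkt, size_t len, uint32_t in_port,
                       struct pkt_key *k)
{
    memset(k, 0, sizeof *k);
    k->in_port = in_port;
    if (len < 14)
        return -1;
    memcpy(k->eth_dst, pkt, 6);
    memcpy(k->eth_src, pkt + 6, 6);
    k->eth_type = rd16(pkt + 12);
    if (k->eth_type != 0x0800)      /* only IPv4 in this sketch */
        return 0;

    const uint8_t *ip = pkt + 14;
    if (len < 14 + 20)
        return -1;
    size_t ihl = (size_t)(ip[0] & 0x0f) * 4;   /* IPv4 header length */
    if (ihl < 20 || len < 14 + ihl)
        return -1;
    k->ip_proto = ip[9];
    k->ip_src = rd32(ip + 12);
    k->ip_dst = rd32(ip + 16);

    if (k->ip_proto == 6 || k->ip_proto == 17) {   /* TCP or UDP ports */
        const uint8_t *l4 = ip + ihl;
        if (len < 14 + ihl + 4)
            return -1;
        k->tp_src = rd16(l4);
        k->tp_dst = rd16(l4 + 2);
    }
    return 0;
}
```

Every later lookup works on this key rather than on the raw frame, which is why extraction happens once, before ovs_dp_process_packet() is called.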

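Action processing

The OVS datapath action types are listed below. nla_type() returns the type of each action attribute, and the entry point for executing actions is do_execute_actions().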

    enum ovs_action_attr {
        OVS_ACTION_ATTR_UNSPEC,
        OVS_ACTION_ATTR_OUTPUT,          /* u32 port number. */
        OVS_ACTION_ATTR_USERSPACE,       /* Nested OVS_USERSPACE_ATTR_*. */
        OVS_ACTION_ATTR_SET,             /* One nested OVS_KEY_ATTR_*. */
        OVS_ACTION_ATTR_PUSH_VLAN,       /* struct ovs_action_push_vlan. */
        OVS_ACTION_ATTR_POP_VLAN,        /* No argument. */
        OVS_ACTION_ATTR_SAMPLE,          /* Nested OVS_SAMPLE_ATTR_*. */
        OVS_ACTION_ATTR_RECIRC,          /* u32 recirc_id. */
        OVS_ACTION_ATTR_HASH,            /* struct ovs_action_hash. */
        OVS_ACTION_ATTR_PUSH_MPLS,       /* struct ovs_action_push_mpls. */
        OVS_ACTION_ATTR_POP_MPLS,        /* __be16 ethertype. */
        OVS_ACTION_ATTR_SET_MASKED,      /* One nested OVS_KEY_ATTR_* including
                                          * data immediately followed by a mask.
                                          * The data must be zero for the unmasked
                                          * bits. */
        OVS_ACTION_ATTR_CT,              /* Nested OVS_CT_ATTR_* . */
        OVS_ACTION_ATTR_TRUNC,           /* u32 struct ovs_action_trunc. */
        OVS_ACTION_ATTR_PUSH_ETH,        /* struct ovs_action_push_eth. */
        OVS_ACTION_ATTR_POP_ETH,         /* No argument. */
        OVS_ACTION_ATTR_CT_CLEAR,        /* No argument. */
        OVS_ACTION_ATTR_PUSH_NSH,        /* Nested OVS_NSH_KEY_ATTR_*. */
        OVS_ACTION_ATTR_POP_NSH,         /* No argument. */
        OVS_ACTION_ATTR_METER,           /* u32 meter ID. */
        OVS_ACTION_ATTR_CLONE,           /* Nested OVS_CLONE_ATTR_*. */
        OVS_ACTION_ATTR_CHECK_PKT_LEN,   /* Nested OVS_CHECK_PKT_LEN_ATTR_*. */
        __OVS_ACTION_ATTR_MAX,           /* Nothing past this will be accepted
                                          * from userspace. */
    #ifdef __KERNEL__
        OVS_ACTION_ATTR_SET_TO_MASKED,   /* Kernel module internal masked
                                          * set action converted from
                                          * OVS_ACTION_ATTR_SET. */
    #endif
    };

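- OVS_ACTION_ATTR_OUTPUT: reads the port number and calls do_output() to send the packet to that port.

- OVS_ACTION_ATTR_USERSPACE: calls output_userspace() to send the packet to userspace.

- OVS_ACTION_ATTR_HASH: calls execute_hash() to compute the skb hash and store it in ovs_flow_hash.

- OVS_ACTION_ATTR_PUSH_VLAN: calls push_vlan() to add a VLAN header.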

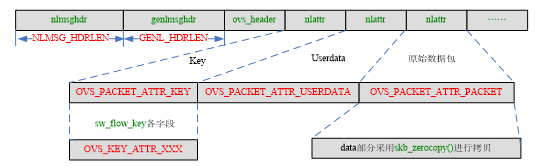
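The dispatch in do_execute_actions() boils down to walking a list of netlink attributes and switching on each attribute's type. The sketch below models that walk in plain C under these assumptions: struct attr_hdr mirrors the layout of struct nlattr (length, then type, 4-byte alignment), the ACT_* values follow the order of enum ovs_action_attr above, and each action only records its effect instead of modifying a real skb. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Local mirror of the netlink attribute header (struct nlattr). */
struct attr_hdr {
    uint16_t len;   /* header + payload, excluding alignment padding */
    uint16_t type;
};
#define ATTR_ALIGN(n) (((n) + (size_t)3) & ~(size_t)3)  /* 4-byte alignment */
#define ATTR_HDRLEN   ATTR_ALIGN(sizeof(struct attr_hdr))

/* Same order as enum ovs_action_attr; only the first few are modelled. */
enum { ACT_UNSPEC, ACT_OUTPUT, ACT_USERSPACE, ACT_SET, ACT_PUSH_VLAN,
       ACT_POP_VLAN };

struct exec_result {
    uint32_t out_ports[8];
    int n_out;
    int vlan_depth;     /* VLAN tags pushed minus tags popped */
    int to_userspace;
};

/* Walks the attribute stream in order and dispatches on the type, in the
 * spirit of do_execute_actions(). Returns -1 on a malformed stream. */
static int execute_actions(const uint8_t *buf, size_t len,
                           struct exec_result *r)
{
    size_t off = 0;

    memset(r, 0, sizeof *r);
    while (off + ATTR_HDRLEN <= len) {
        struct attr_hdr h;
        memcpy(&h, buf + off, sizeof h);
        if (h.len < ATTR_HDRLEN || off + h.len > len)
            return -1;
        const uint8_t *payload = buf + off + ATTR_HDRLEN;

        switch (h.type) {
        case ACT_OUTPUT: {              /* payload: u32 port number */
            uint32_t port;
            if (h.len < ATTR_HDRLEN + sizeof port || r->n_out == 8)
                return -1;
            memcpy(&port, payload, sizeof port);
            r->out_ports[r->n_out++] = port;
            break;
        }
        case ACT_USERSPACE:
            r->to_userspace = 1;
            break;
        case ACT_PUSH_VLAN:
            r->vlan_depth++;
            break;
        case ACT_POP_VLAN:
            r->vlan_depth--;
            break;
        default:                        /* not modelled in this sketch */
            break;
        }
        off += ATTR_ALIGN(h.len);
    }
    return 0;
}

/* Appends one attribute at offset off; returns the offset after it. */
static size_t put_attr(uint8_t *buf, size_t off, uint16_t type,
                       const void *data, uint16_t data_len)
{
    struct attr_hdr h = { (uint16_t)(ATTR_HDRLEN + data_len), type };

    memcpy(buf + off, &h, sizeof h);
    if (data_len)
        memcpy(buf + off + ATTR_HDRLEN, data, data_len);
    memset(buf + off + h.len, 0, ATTR_ALIGN(h.len) - h.len);  /* padding */
    return off + ATTR_ALIGN(h.len);
}
```

For example, appending PUSH_VLAN, OUTPUT(3), POP_VLAN and OUTPUT(5) and executing the stream yields outputs 3 and 5 with a net VLAN depth of 0. Actions run strictly in list order, which is why the same set of actions in a different order can produce a different packet.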

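Upcall processing

When no matching flow is found, the kernel sends the packet to userspace over netlink. The entry point is ovs_dp_upcall(), which calls queue_userspace_packet() to build the skb destined for userspace and delivers it through netlink. ovs_dp_upcall() is reached both from output_userspace() (the OVS_ACTION_ATTR_USERSPACE action) and, on a flow-table miss, from ovs_dp_process_packet(). The main steps are: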

    output_userspace
    ┗━ovs_dp_upcall
        ┗━queue_userspace_packet
            ┗━skb_zerocopy(user_skb, skb, skb->len, hlen); // append the packet to the upcall message
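The upcall message is itself a sequence of netlink attributes: queue_userspace_packet() adds the flow key and other metadata, and the packet bytes are appended as the last attribute via skb_zerocopy(). The sketch below imitates that layout in user space. The attribute numbers assume OVS_PACKET_ATTR_PACKET = 1 and OVS_PACKET_ATTR_KEY = 2 as in enum ovs_packet_attr (check your openvswitch.h), and build_upcall() and find_attr() are hypothetical helpers, not kernel functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct attr_hdr { uint16_t len; uint16_t type; };   /* like struct nlattr */
#define ALIGN4(n) (((n) + (size_t)3) & ~(size_t)3)
#define HDRLEN    ALIGN4(sizeof(struct attr_hdr))

/* Assumed to match OVS_PACKET_ATTR_PACKET and OVS_PACKET_ATTR_KEY. */
enum { UPCALL_ATTR_PACKET = 1, UPCALL_ATTR_KEY = 2 };

/* Appends header + payload + padding at *off; returns 0, or -1 if full. */
static int put_attr(uint8_t *msg, size_t cap, size_t *off, uint16_t type,
                    const void *data, size_t data_len)
{
    size_t total = HDRLEN + data_len;

    if (total > UINT16_MAX || *off + ALIGN4(total) > cap)
        return -1;
    struct attr_hdr h = { (uint16_t)total, type };
    memcpy(msg + *off, &h, sizeof h);
    if (data_len)
        memcpy(msg + *off + HDRLEN, data, data_len);
    memset(msg + *off + total, 0, ALIGN4(total) - total);
    *off += ALIGN4(total);
    return 0;
}

/* Miss upcall: flow key first, raw packet bytes appended last. */
static int build_upcall(uint8_t *msg, size_t cap, size_t *len,
                        const void *key, size_t key_len,
                        const uint8_t *pkt, size_t pkt_len)
{
    *len = 0;
    if (put_attr(msg, cap, len, UPCALL_ATTR_KEY, key, key_len) < 0)
        return -1;
    return put_attr(msg, cap, len, UPCALL_ATTR_PACKET, pkt, pkt_len);
}

/* Returns the payload of the first attribute of the given type (length in
 * *payload_len), or NULL if it is absent or the message is malformed. */
static const uint8_t *find_attr(const uint8_t *msg, size_t len,
                                uint16_t type, size_t *payload_len)
{
    size_t off = 0;

    while (off + HDRLEN <= len) {
        struct attr_hdr h;
        memcpy(&h, msg + off, sizeof h);
        if (h.len < HDRLEN || off + h.len > len)
            return NULL;
        if (h.type == type) {
            *payload_len = h.len - HDRLEN;
            return msg + off + HDRLEN;
        }
        off += ALIGN4(h.len);
    }
    return NULL;
}
```

Putting the packet last means its length only needs to be known when the final attribute header is written, which fits copying the packet data in one step at the end.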

    void ovs_dp_process_packet(struct sk_buff *skb, struct sw_flow_key *key)
    {
        const struct vport *p = OVS_CB(skb)->input_vport;
        struct datapath *dp = p->dp;
        struct sw_flow *flow;
        struct sw_flow_actions *sf_acts;
        struct dp_stats_percpu *stats;
        u64 *stats_counter;
        u32 n_mask_hit;

        stats = this_cpu_ptr(dp->stats_percpu);

        /* Look up flow. */
        flow = ovs_flow_tbl_lookup_stats(&dp->table, key, &n_mask_hit);
        if (unlikely(!flow)) {
            struct dp_upcall_info upcall;
            int error;

            memset(&upcall, 0, sizeof(upcall));
            upcall.cmd = OVS_PACKET_CMD_MISS;
            upcall.portid = ovs_vport_find_upcall_portid(p, skb);
            upcall.mru = OVS_CB(skb)->mru;
            error = ovs_dp_upcall(dp, skb, key, &upcall, 0);
            if (unlikely(error))
                kfree_skb(skb);
            else
                consume_skb(skb);
            stats_counter = &stats->n_missed;
            goto out;
        }

        ovs_flow_stats_update(flow, key->tp.flags, skb);
        sf_acts = rcu_dereference(flow->sf_acts);
        ovs_execute_actions(dp, skb, sf_acts, key);

        stats_counter = &stats->n_hit;

    out:
        /* Update datapath statistics. */
        u64_stats_update_begin(&stats->syncp);
        (*stats_counter)++;
        stats->n_mask_hit += n_mask_hit;
        u64_stats_update_end(&stats->syncp);
    }
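ovs_flow_tbl_lookup_stats() searches megaflows grouped by wildcard mask, and n_mask_hit reports how many masks were probed before the lookup finished. The sketch below shows that tuple-space-search idea with a linear scan inside each mask; the real table hashes the masked key instead of scanning. The types, the 4-word key layout, and the helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define KEY_WORDS 4   /* hypothetical key: in_port, ip_src, ip_dst, tp_dst */
#define MAX_FLOWS 8
#define MAX_MASKS 4

struct mkey { uint32_t w[KEY_WORDS]; };

/* All megaflows that share one wildcard mask. */
struct mask_table {
    struct mkey mask;
    struct mkey keys[MAX_FLOWS];   /* stored already masked */
    int actions[MAX_FLOWS];        /* stands in for sw_flow_actions */
    int n;
};

struct flow_table {
    struct mask_table masks[MAX_MASKS];
    int n_masks;
};

static struct mkey apply_mask(const struct mkey *k, const struct mkey *m)
{
    struct mkey r;
    for (int i = 0; i < KEY_WORDS; i++)
        r.w[i] = k->w[i] & m->w[i];
    return r;
}

static int key_eq(const struct mkey *a, const struct mkey *b)
{
    for (int i = 0; i < KEY_WORDS; i++)
        if (a->w[i] != b->w[i])
            return 0;
    return 1;
}

/* Tries each mask in turn; *n_mask_hit counts the masks probed.
 * Returns the action id, or -1 on a miss (which would trigger an upcall). */
static int lookup(const struct flow_table *t, const struct mkey *k,
                  uint32_t *n_mask_hit)
{
    *n_mask_hit = 0;
    for (int m = 0; m < t->n_masks; m++) {
        const struct mask_table *mt = &t->masks[m];
        struct mkey mk = apply_mask(k, &mt->mask);

        (*n_mask_hit)++;
        for (int i = 0; i < mt->n; i++)    /* real code hashes mk instead */
            if (key_eq(&mt->keys[i], &mk))
                return mt->actions[i];
    }
    return -1;
}

/* Installs a megaflow under the existing mask with index m. */
static void install(struct flow_table *t, int m, const struct mkey *k,
                    int action)
{
    assert(m >= 0 && m < t->n_masks && t->masks[m].n < MAX_FLOWS);
    struct mask_table *mt = &t->masks[m];

    mt->keys[mt->n] = apply_mask(k, &mt->mask);
    mt->actions[mt->n] = action;
    mt->n++;
}
```

A miss costs one probe per mask, so the n_mask_hit statistic grows with the number of distinct masks; this is the quantity the datapath adds to stats->n_mask_hit.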


Action translation in ovs-vswitchd

do_xlate_actions() iterates over all the actions. The supported types are OFPACT_OUTPUT, OFPACT_GROUP, OFPACT_CONTROLLER, OFPACT_ENQUEUE, OFPACT_SET_VLAN_VID, OFPACT_SET_VLAN_PCP, OFPACT_STRIP_VLAN, OFPACT_PUSH_VLAN, OFPACT_SET_ETH_SRC, OFPACT_SET_ETH_DST, OFPACT_SET_IPV4_SRC, OFPACT_SET_IPV4_DST, OFPACT_SET_IP_DSCP, OFPACT_SET_IP_ECN, OFPACT_SET_IP_TTL, OFPACT_SET_L4_SRC_PORT, OFPACT_SET_L4_DST_PORT, OFPACT_RESUBMIT, OFPACT_SET_TUNNEL, OFPACT_SET_QUEUE, OFPACT_POP_QUEUE, OFPACT_REG_MOVE, OFPACT_SET_FIELD, OFPACT_STACK_PUSH, OFPACT_STACK_POP, OFPACT_PUSH_MPLS, OFPACT_POP_MPLS, OFPACT_SET_MPLS_LABEL, OFPACT_SET_MPLS_TC, OFPACT_SET_MPLS_TTL, OFPACT_DEC_MPLS_TTL, OFPACT_DEC_TTL, OFPACT_NOTE, OFPACT_MULTIPATH, OFPACT_BUNDLE, OFPACT_OUTPUT_REG, OFPACT_OUTPUT_TRUNC, OFPACT_LEARN, OFPACT_CONJUNCTION, OFPACT_EXIT, OFPACT_UNROLL_XLATE, OFPACT_FIN_TIMEOUT, OFPACT_CLEAR_ACTIONS, OFPACT_WRITE_ACTIONS, OFPACT_WRITE_METADATA, OFPACT_METER, OFPACT_GOTO_TABLE, OFPACT_SAMPLE, OFPACT_CLONE, OFPACT_CT, OFPACT_CT_CLEAR, OFPACT_NAT and OFPACT_DEBUG_RECIRC. There are too many to cover here, so we start with a few common ones:

- OFPACT_OUTPUT: xlate_output_action() performs different operations depending on the output port; we do not go into the details here.

- OFPACT_CONTROLLER: execute_controller_action() generates a packet_in message and sends it.

- OFPACT_SET_ETH_SRC, OFPACT_SET_ETH_DST, OFPACT_SET_IPV4_SRC, OFPACT_SET_IPV4_DST, OFPACT_SET_IP_DSCP, OFPACT_SET_IP_ECN, OFPACT_SET_IP_TTL, OFPACT_SET_L4_SRC_PORT, OFPACT_SET_L4_DST_PORT: modify the source/destination MAC and IP addresses, the DSCP, ECN and TTL fields, and the L4 source/destination ports.

- OFPACT_RESUBMIT: xlate_ofpact_resubmit() continues the lookup in the specified table.

- OFPACT_SET_TUNNEL: sets the tunnel ID.

- OFPACT_CT: compose_conntrack_action() composes the conntrack (ct) action and calls back into do_xlate_actions() to translate the actions nested inside it.
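The translation loop can be pictured as follows: walk the actions in order, apply header rewrites to a context, record outputs, and recurse into another table on a resubmit. This is a hypothetical toy, not ofproto-dpif-xlate code: each table holds a single catch-all rule, only three action kinds exist, and MAX_DEPTH is an arbitrary guard standing in for OVS's own recursion limits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum act_type { ACT_OUTPUT, ACT_SET_ETH_DST, ACT_RESUBMIT };

struct act {
    enum act_type type;
    uint64_t arg;        /* port, MAC in the low 48 bits, or table id */
};

struct rule { const struct act *acts; int n; };  /* one per table (toy) */

#define N_TABLES  4
#define MAX_DEPTH 8      /* hypothetical recursion guard */
#define MAX_OUT   8

struct xctx {
    const struct rule *tables;
    uint64_t eth_dst;    /* header field rewritten by ACT_SET_ETH_DST */
    uint32_t outputs[MAX_OUT];
    int n_out;
    int depth;
    int error;
};

static void xlate_acts(struct xctx *ctx, const struct act *acts, int n);

/* Like OFPACT_RESUBMIT: translate the actions of another table's rule. */
static void xlate_resubmit(struct xctx *ctx, uint64_t table)
{
    if (table >= N_TABLES || ctx->depth >= MAX_DEPTH) {
        ctx->error = 1;
        return;
    }
    ctx->depth++;
    xlate_acts(ctx, ctx->tables[table].acts, ctx->tables[table].n);
    ctx->depth--;
}

/* Walks the actions in order and dispatches on the type. */
static void xlate_acts(struct xctx *ctx, const struct act *acts, int n)
{
    for (int i = 0; i < n && !ctx->error; i++) {
        switch (acts[i].type) {
        case ACT_OUTPUT:
            if (ctx->n_out < MAX_OUT)
                ctx->outputs[ctx->n_out++] = (uint32_t)acts[i].arg;
            break;
        case ACT_SET_ETH_DST:
            ctx->eth_dst = acts[i].arg & 0xffffffffffffULL;
            break;
        case ACT_RESUBMIT:
            xlate_resubmit(ctx, acts[i].arg);
            break;
        }
    }
}
```

With table 0 holding "set eth_dst to 2e:a9:be:9e:4d:07, resubmit(1), output:9" and table 1 holding "output:3", translation outputs to port 3 and then port 9, because the resubmitted table's actions run in place before the rest of table 0's list. A table that resubmits to itself trips the depth guard instead of recursing forever.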

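packet_in messages

A flow like the following sends packets arriving on port 1 to the controller:

    ovs-ofctl add-flow br0 in_port=1,actions=controller

The packet is output to the reserved port OFPP_CONTROLLER, and the translation path is:

    xlate_actions
    ┗━do_xlate_actions(ofpacts, ofpacts_len, &ctx, true, false);
        ┗━xlate_output_action
            ┗━xlate_controller_action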

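    /* do_xlate_actions(), ofproto/ofproto-dpif-xlate.c (excerpt).
     * Inside the loop over the actions; a is the current action. */
    switch (a->type) {
    case OFPACT_OUTPUT:
        xlate_output_action(ctx, ofpact_get_OUTPUT(a)->port,
                            ofpact_get_OUTPUT(a)->max_len, true, last,
                            false, group_bucket_action);
        break;
    /* ... other OFPACT_* cases ... */
    }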

    /* ofproto/ofproto-dpif-xlate.c (excerpt) */
    static void
    xlate_output_action(struct xlate_ctx *ctx, ofp_port_t port,
                        uint16_t controller_len, bool may_packet_in,
                        bool is_last_action, bool truncate,
                        bool group_bucket_action)
    {
        /* ... */
        switch (port) {
        /* ... other reserved ports ... */
        case OFPP_CONTROLLER:
            xlate_controller_action(ctx, controller_len,
                                    (ctx->in_packet_out ? OFPR_PACKET_OUT
                                     : group_bucket_action ? OFPR_GROUP
                                     : ctx->in_action_set ? OFPR_ACTION_SET
                                     : OFPR_ACTION),
                                    0, UINT32_MAX, NULL, 0);
            break;
        /* ... */
        }
    }
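The nested conditional picks the packet_in reason in a fixed order of precedence: packet-out first, then group bucket, then action set, and plain action otherwise. The sketch below restates that order as a function and pairs it with a truncating copy of the packet, assuming controller_len behaves like OpenFlow's max_len (0xffff meaning "send the whole packet", as with OFPCML_NO_BUFFER). struct pin_ctx, struct pin_msg, and the PIN_* names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical local names for the OFPR_* reasons chosen above. */
enum pin_reason { PIN_ACTION, PIN_ACTION_SET, PIN_GROUP, PIN_PACKET_OUT };

struct pin_ctx {            /* hypothetical subset of struct xlate_ctx */
    int in_packet_out;
    int in_action_set;
};

/* Same precedence as the nested ?: in xlate_output_action(). */
static enum pin_reason pick_reason(const struct pin_ctx *ctx,
                                   int group_bucket_action)
{
    if (ctx->in_packet_out)
        return PIN_PACKET_OUT;
    if (group_bucket_action)
        return PIN_GROUP;
    if (ctx->in_action_set)
        return PIN_ACTION_SET;
    return PIN_ACTION;
}

struct pin_msg {
    enum pin_reason reason;
    size_t total_len;       /* original packet length */
    size_t data_len;        /* bytes actually copied */
    uint8_t data[128];
};

/* Copies at most max_len bytes (assumed semantics of controller_len);
 * 0xffff means the whole packet, capped by the sketch's buffer size. */
static void make_packet_in(struct pin_msg *m, const struct pin_ctx *ctx,
                           int group_bucket_action, const uint8_t *pkt,
                           size_t len, uint16_t max_len)
{
    size_t n = (max_len == 0xffff || len < max_len) ? len : max_len;

    if (n > sizeof m->data)
        n = sizeof m->data;
    m->reason = pick_reason(ctx, group_bucket_action);
    m->total_len = len;
    m->data_len = n;
    memcpy(m->data, pkt, n);
}
```

Keeping total_len next to the truncated data lets the controller see how large the original packet was even when only its first bytes are delivered.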
