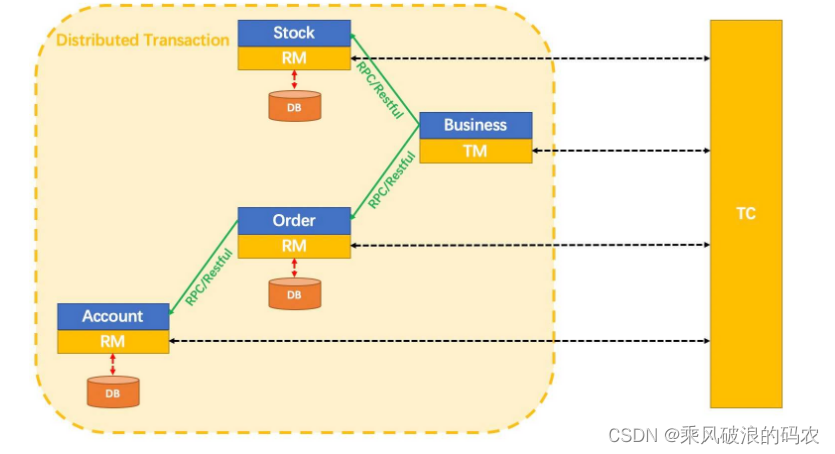

I. Seata theory

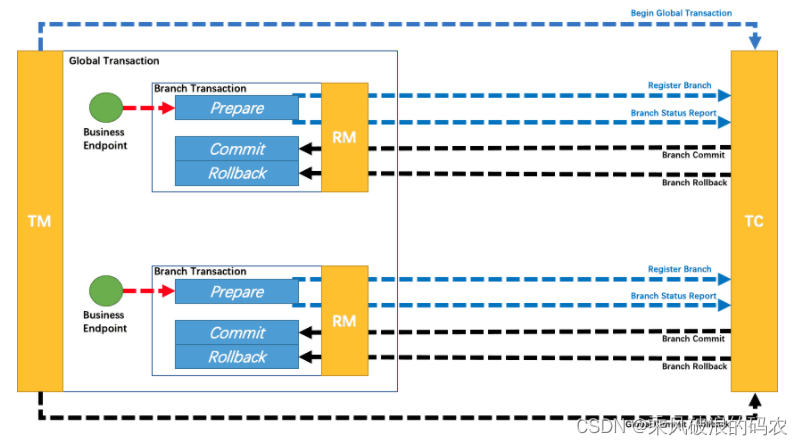

The two figures above are taken from the official documentation.

AT mode in detail

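AT mode (reference link TBD) is built on relational databases that support local ACID transactions:

- Phase 1 (prepare): within one local transaction, commit the business data update together with its corresponding rollback log (undo log) record.

- Phase 2 commit: returns successfully right away; the undo logs are then cleaned up automatically, asynchronously and in batches.

- Phase 2 rollback: uses the undo log to automatically generate compensating operations and roll the data back.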
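To make the three phases concrete, here is a minimal in-memory sketch, assuming a single key/value "table" and one undo record per updated row. The class and method names (AtModeSketch, phaseOne, phaseTwoCommit, phaseTwoRollback) are hypothetical, not Seata APIs. The rollback mirrors AT mode's safety check: it restores a before-image only if the row still holds the after-image written in phase 1, and otherwise reports a conflict without touching the data.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

/** Hypothetical in-memory model of Seata AT mode over a key -> value "table". */
class AtModeSketch {
    /** One undo record: the row's value before and after the phase-1 update. */
    static final class UndoRecord {
        final String pk;
        final String before; // null means the row did not exist (phase 1 was an insert)
        final String after;

        UndoRecord(String pk, String before, String after) {
            this.pk = pk;
            this.before = before;
            this.after = after;
        }
    }

    final Map<String, String> table = new HashMap<>();
    final Map<Long, List<UndoRecord>> undoLog = new HashMap<>();

    /** Phase 1: write the business change and its undo record together (one local tx in a real RM). */
    void phaseOne(long branchId, String pk, String newValue) {
        String before = table.get(pk);
        table.put(pk, newValue);
        undoLog.computeIfAbsent(branchId, id -> new ArrayList<>())
               .add(new UndoRecord(pk, before, newValue));
    }

    /** Phase 2 commit: nothing to compensate, so just drop the undo records. */
    void phaseTwoCommit(long branchId) {
        undoLog.remove(branchId);
    }

    /** Phase 2 rollback: restore before-images newest first; refuse if a row no longer holds its after-image. */
    boolean phaseTwoRollback(long branchId) {
        List<UndoRecord> records = undoLog.getOrDefault(branchId, new ArrayList<>());
        Map<String, String> working = new HashMap<>(table); // work on a copy so a conflict changes nothing
        for (int i = records.size() - 1; i >= 0; i--) {
            UndoRecord r = records.get(i);
            if (!Objects.equals(working.get(r.pk), r.after)) {
                return false; // dirty write: the row was changed outside the global transaction
            }
            if (r.before == null) {
                working.remove(r.pk);
            } else {
                working.put(r.pk, r.before);
            }
        }
        table.clear();
        table.putAll(working);
        undoLog.remove(branchId);
        return true;
    }
}
```

In real AT mode the RM obtains the before- and after-images by parsing the SQL and querying the affected rows, and the business write and the undo_log insert both go through the proxied DataSource inside the same local transaction.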

II. Setting up a local Seata server

1. Use Nacos as Seata's configuration center and registry

Edit registry.conf under the conf directory:

```
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "SEATA_GROUP"
    namespace = "seata"
    cluster = "default"
    username = "nacos"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "nacos"

  nacos {
    serverAddr = "127.0.0.1:8848"
    namespace = "seata"
    group = "SEATA_GROUP"
    username = "nacos"
    dataId = "seataServer.properties"
  }
}
```

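2. Push the Seata server configuration to Nacos automatically with the script that ships with Seata, located at \seata-develop\script\config-center\nacos\nacos-config.sh. Here `-h`/`-p` are the Nacos host and port, `-g` the group, `-t` the namespace (tenant), and `-u`/`-w` the Nacos username and password:

```
sh ./nacos-config.sh -h localhost -p 8848 -g SEATA_GROUP -t seata -u nacos -w nacos
```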

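3. Configure MySQL as Seata's data store in Nacos:

```properties
store.db.url=jdbc:mysql://127.0.0.1:3306/seata_db?useUnicode=true&rewriteBatchedStatements=true
service.default.grouplist=127.0.0.1:8091
```

Switch the client (RM) undo log serialization to protostuff, to work around the default Jackson serializer's lack of support for date/time types:

```properties
client.undo.logSerialization=protostuff
```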

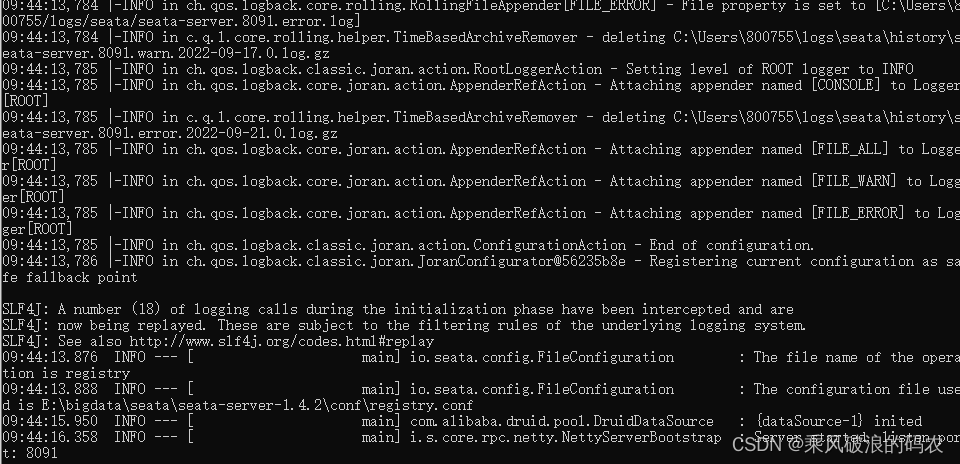

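4. Start seata-server by running seata-server.bat under bin. The seata-server version is 1.4.2.

```
./seata-server.bat -p 8091 -m db
```

Note: be sure to run in DB mode (`-m db`). File mode is single-node only and cannot be clustered.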

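III. Building a test demo

1. Create two projects, joyday and loveday. joyday exposes an HTTP endpoint that inserts a record into its own database and then calls a loveday interface that modifies one of loveday's tables.

2. Project POM and configuration

The seata-all client version is 1.3.0.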

```xml
<!-- protostuff dependencies -->
<dependency>
    <groupId>io.protostuff</groupId>
    <artifactId>protostuff-core</artifactId>
    <version>1.6.0</version>
</dependency>
<dependency>
    <groupId>io.protostuff</groupId>
    <artifactId>protostuff-runtime</artifactId>
    <version>1.6.0</version>
</dependency>
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    <version>${com.alibaba.cloud.revision}</version>
</dependency>
```

3. application.properties configuration

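`seata.tx-service-group=loveday-service-group` sets the custom transaction service group name for the current service.

Note: `service.vgroupMapping.loveday-service-group=default`, which maps the custom transaction group to its cluster name, should be placed in Nacos rather than in the local properties file.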
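The vgroupMapping indirection can be pictured as a two-step lookup: transaction service group, then cluster name, then server addresses. The sketch below assumes a flat key/value map like seataServer.properties and a file-style `service.<cluster>.grouplist` entry (such as `service.default.grouplist=127.0.0.1:8091` above), with `;` assumed as the separator for multiple addresses. With the Nacos registry used in this article, the second step instead asks Nacos for `seata-server` instances in that cluster. TxGroupResolver is a hypothetical name, not a Seata class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical resolver: tx service group -> cluster -> server addresses. */
class TxGroupResolver {
    private final Map<String, String> config; // flat keys, as in seataServer.properties

    TxGroupResolver(Map<String, String> config) {
        this.config = config;
    }

    /** Step 1: service.vgroupMapping.<txServiceGroup> names the cluster. */
    String clusterOf(String txServiceGroup) {
        String cluster = config.get("service.vgroupMapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no service.vgroupMapping for " + txServiceGroup);
        }
        return cluster;
    }

    /** Step 2 (file-registry style): service.<cluster>.grouplist lists the servers (';' assumed as separator). */
    List<String> addressesOf(String txServiceGroup) {
        String grouplist = config.get("service." + clusterOf(txServiceGroup) + ".grouplist");
        List<String> result = new ArrayList<>();
        if (grouplist != null) {
            for (String address : grouplist.split(";")) {
                if (!address.trim().isEmpty()) {
                    result.add(address.trim());
                }
            }
        }
        return result;
    }
}
```

If the mapping is missing, the client cannot resolve any server for its group at all, which is why the mapping entry has to exist in the configuration center.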

```properties
logging.level.io.seata=debug
seata.enabled=true
seata.service.disable-global-transaction=false
seata.tx-service-group=loveday-service-group
# Configure this value in the configuration center (Nacos) instead:
#service.vgroupMapping.loveday-service-group=default

# Configuration center
seata.config.type=nacos
seata.config.nacos.group=SEATA_GROUP
seata.config.nacos.server-addr=127.0.0.1:8848
seata.config.nacos.namespace=seata
seata.config.nacos.username=nacos
seata.config.nacos.password=nacos
seata.config.nacos.data-id=seataServer.properties

# Registry
seata.registry.type=nacos
seata.registry.nacos.group=SEATA_GROUP
seata.registry.nacos.application=seata-server
seata.registry.nacos.server-addr=127.0.0.1:8848
seata.registry.nacos.namespace=seata
seata.registry.nacos.username=nacos
seata.registry.nacos.password=nacos
```

4. Code example

Caller service:

```java
@Override
//@Transactional
@GlobalTransactional(timeoutMills = 10000, name = "loveday-saveAcc-tx")
public String saveRandomAcc() {
    // local insert: becomes a branch transaction through the proxied DataSource
    TbAccAccount tbAccAccount = new TbAccAccount();
    tbAccAccount.setTid(UUID.randomUUID().toString());
    tbAccAccount.setPhone("181" + RandomUtil.randomNumbers(8));
    tbAccAccount.setPassword("123456");
    tbAccAccount.setTenantId(2);
    tbAccAccount.setCreateDate(new Date());
    tbAccAccount.setUpdateDate(new Date());
    boolean save = tbAccExtMapper.save(tbAccAccount);
    log.info(" saveaccAccount tbAccAccount:{},save:{}", JSONUtil.toJsonStr(tbAccAccount), save);
    if (!save) {
        return null;
    }
    // remote Dubbo call: the callee's update joins the same global transaction
    int decreaseStoreRet = storageService.decreaseStore("C00322", 2);
    log.info(" decreaseStoreRet:{}", decreaseStoreRet);
    if (decreaseStoreRet <= 0) {
        // throwing makes the TM roll back the global transaction, undoing both branches
        throw new RuntimeException(" decreaseStore error");
    }
    return tbAccAccount.getTid();
}
```

The callee implements a Dubbo remote service:

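```java
@Slf4j
@DubboService(version = "1.0", group = "day")
public class StoreageServiceImpl implements StorageService {

    @Autowired
    private StorageMapper storageMapper;

    @Override
    public int decreaseStore(String commodityCode, Integer decreaseDelta) {
        Storage queryStorage = new Storage();
        queryStorage.setCommodityCode(commodityCode);
        Storage storage = storageMapper.selectOne(new QueryWrapper<Storage>(queryStorage));
        if (ObjectUtil.isNull(storage)) {
            // unknown commodity: the caller treats a non-positive result as a failure
            return -1;
        }
        // runs inside the caller's global transaction (the XID is propagated over Dubbo),
        // so this UPDATE is recorded with an undo log
        storageMapper.decreaseStore(commodityCode, decreaseDelta);
        // remaining stock, computed from the value read before the update
        return storage.getCount() - decreaseDelta;
    }
}
```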

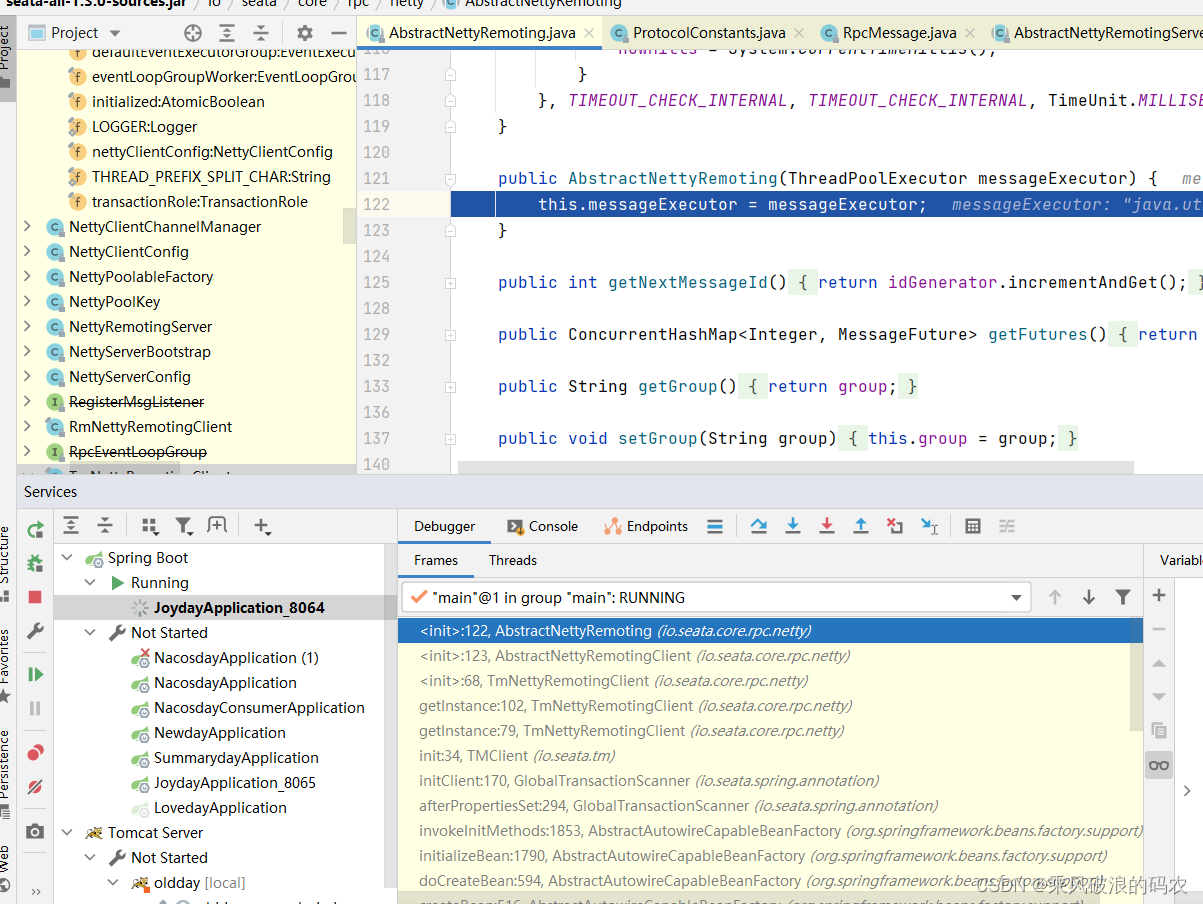
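The `RuntimeException` thrown in saveRandomAcc undoes both the account insert and the remote stock update because @GlobalTransactional wraps the method in begin / business / commit-or-rollback logic. Below is a hypothetical, heavily simplified model of that wrapping (MiniGlobalTx and Branch are illustrative names, not Seata classes); each branch registers a compensating action standing in for its undo log.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;

/** Hypothetical model of the begin / business / commit-or-rollback wrapping of @GlobalTransactional. */
class MiniGlobalTx {
    /** A branch knows how to compensate its own phase-1 work (stand-in for its undo log). */
    interface Branch {
        void rollback();
    }

    private final List<Branch> branches = new ArrayList<>();
    String status = "Begin";

    void register(Branch branch) {
        branches.add(branch);
    }

    <T> T execute(Callable<T> business) throws Exception {
        try {
            T result = business.call();
            status = "Committed"; // phase 2 commit: branches only need to drop their undo logs
            return result;
        } catch (Exception e) {
            // phase 2 rollback: compensate the newest branch first
            for (int i = branches.size() - 1; i >= 0; i--) {
                branches.get(i).rollback();
            }
            status = "Rollbacked";
            throw e;
        }
    }
}
```

Note that with the article's check `decreaseStoreRet <= 0`, a remaining stock of exactly zero is also treated as a failure and rolls everything back; whether that is intended depends on the business rules.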

IV. Client initialization and proxying flow

1. SeataAutoConfiguration auto-configuration

```java
public class SeataAutoConfiguration {
    private static final Logger LOGGER = LoggerFactory.getLogger(SeataAutoConfiguration.class);

    // proxies @GlobalTransactional beans and initializes the TM/RM clients
    @Bean
    @DependsOn({BEAN_NAME_SPRING_APPLICATION_CONTEXT_PROVIDER, BEAN_NAME_FAILURE_HANDLER})
    @ConditionalOnMissingBean(GlobalTransactionScanner.class)
    public GlobalTransactionScanner globalTransactionScanner(SeataProperties seataProperties, FailureHandler failureHandler) {
        if (LOGGER.isInfoEnabled()) {
            LOGGER.info("Automatically configure Seata");
        }
        return new GlobalTransactionScanner(seataProperties.getApplicationId(), seataProperties.getTxServiceGroup(), failureHandler);
    }

    // wraps DataSource beans; enabled unless enable-auto-data-source-proxy is set to false
    @Bean(BEAN_NAME_SEATA_AUTO_DATA_SOURCE_PROXY_CREATOR)
    @ConditionalOnProperty(prefix = StarterConstants.SEATA_PREFIX, name = {"enableAutoDataSourceProxy", "enable-auto-data-source-proxy"}, havingValue = "true", matchIfMissing = true)
    @ConditionalOnMissingBean(SeataAutoDataSourceProxyCreator.class)
    public SeataAutoDataSourceProxyCreator seataAutoDataSourceProxyCreator(SeataProperties seataProperties) {
        return new SeataAutoDataSourceProxyCreator(seataProperties.isUseJdkProxy(), seataProperties.getExcludesForAutoProxying());
    }
}
```

These two configuration methods create the GlobalTransactionScanner and SeataAutoDataSourceProxyCreator beans. Both classes extend AbstractAutoProxyCreator, the Spring extension point for automatically wrapping beans in the container with enhancing proxies.

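GlobalTransactionScanner: creates enhancing proxies for classes annotated with @GlobalTransactional and for classes that contain methods annotated with it.

SeataAutoDataSourceProxyCreator: wraps every DataSource in the application in a proxy.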
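Both creators follow the same idea: decide per bean whether it needs a proxy, and if so hand out the proxy instead of the original bean. Here is a minimal sketch of that decision plus a transaction-style interceptor, using plain JDK dynamic proxies rather than Spring AOP. The @GlobalTx annotation, ProxySketch, AccService and AccServiceImpl are hypothetical stand-ins, and the trace list only records what a real interceptor would do (begin, commit, rollback).

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for @GlobalTransactional. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface GlobalTx {
}

/** Hypothetical business interface and implementation. */
interface AccService {
    String saveRandomAcc(boolean fail);
}

class AccServiceImpl implements AccService {
    @GlobalTx
    @Override
    public String saveRandomAcc(boolean fail) {
        if (fail) {
            throw new IllegalStateException("decreaseStore error");
        }
        return "ok";
    }
}

/** Wrap-if-needed decision plus a transaction-style interceptor, using JDK dynamic proxies. */
class ProxySketch {
    static final List<String> trace = new ArrayList<>();

    @SuppressWarnings("unchecked")
    static <T> T wrapIfNeeded(T target, Class<T> iface) {
        boolean annotated = false;
        for (Method m : target.getClass().getMethods()) {
            if (m.isAnnotationPresent(GlobalTx.class)) {
                annotated = true;
                break;
            }
        }
        if (!annotated) {
            return target; // like a proxy creator returning the original bean unchanged
        }
        return (T) Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[] {iface},
            (proxy, method, args) -> {
                // the proxy receives the interface method; the annotation lives on the implementation
                Method impl = target.getClass().getMethod(method.getName(), method.getParameterTypes());
                if (!impl.isAnnotationPresent(GlobalTx.class)) {
                    return method.invoke(target, args);
                }
                trace.add("begin " + method.getName());
                try {
                    Object result = method.invoke(target, args);
                    trace.add("commit");
                    return result;
                } catch (InvocationTargetException e) {
                    trace.add("rollback");
                    throw e.getCause(); // rethrow the business exception unchanged
                }
            });
    }
}
```

Looking the annotation up on the implementation class matters because JDK proxies only see interface methods, while @GlobalTransactional is usually placed on the implementation.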

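2. GlobalTransactionScanner initialization. During initialization it also initializes the TM and the RM, which in effect registers the current application's TM and RM roles with the Seata server.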

public class GlobalTransactionScanner extends AbstractAutoProxyCreator

implements InitializingBean, ApplicationContextAware,

DisposableBean {

private void initClient() {

if (LOGGER.isInfoEnabled()) {

LOGGER.info("Initializing Global Transaction Clients ... ");

}

if (StringUtils.isNullOrEmpty(applicationId) || StringUtils.isNullOrEmpty(txServiceGroup)) {

throw new IllegalArgumentException(String.format("applicationId: %s, txServiceGroup: %s", applicationId, txServiceGroup));

}

//init TM

TMClient.init(applicationId, txServiceGroup);

if (LOGGER.isInfoEnabled()) {

LOGGER.info("Transaction Manager Client is initialized. applicationId[{}] txServiceGroup[{}]", applicationId, txServiceGroup);

}

//init RM

RMClient.init(applicationId, txServiceGroup);

if (LOGGER.isInfoEnabled()) {

LOGGER.info("Resource Manager is initialized. applicationId[{}] txServiceGroup[{}]", applicationId, txServiceGroup);

}

if (LOGGER.isInfoEnabled()) {

LOGGER.info("Global Transaction Clients are initialized. ");

}

registerSpringShutdownHook();

}

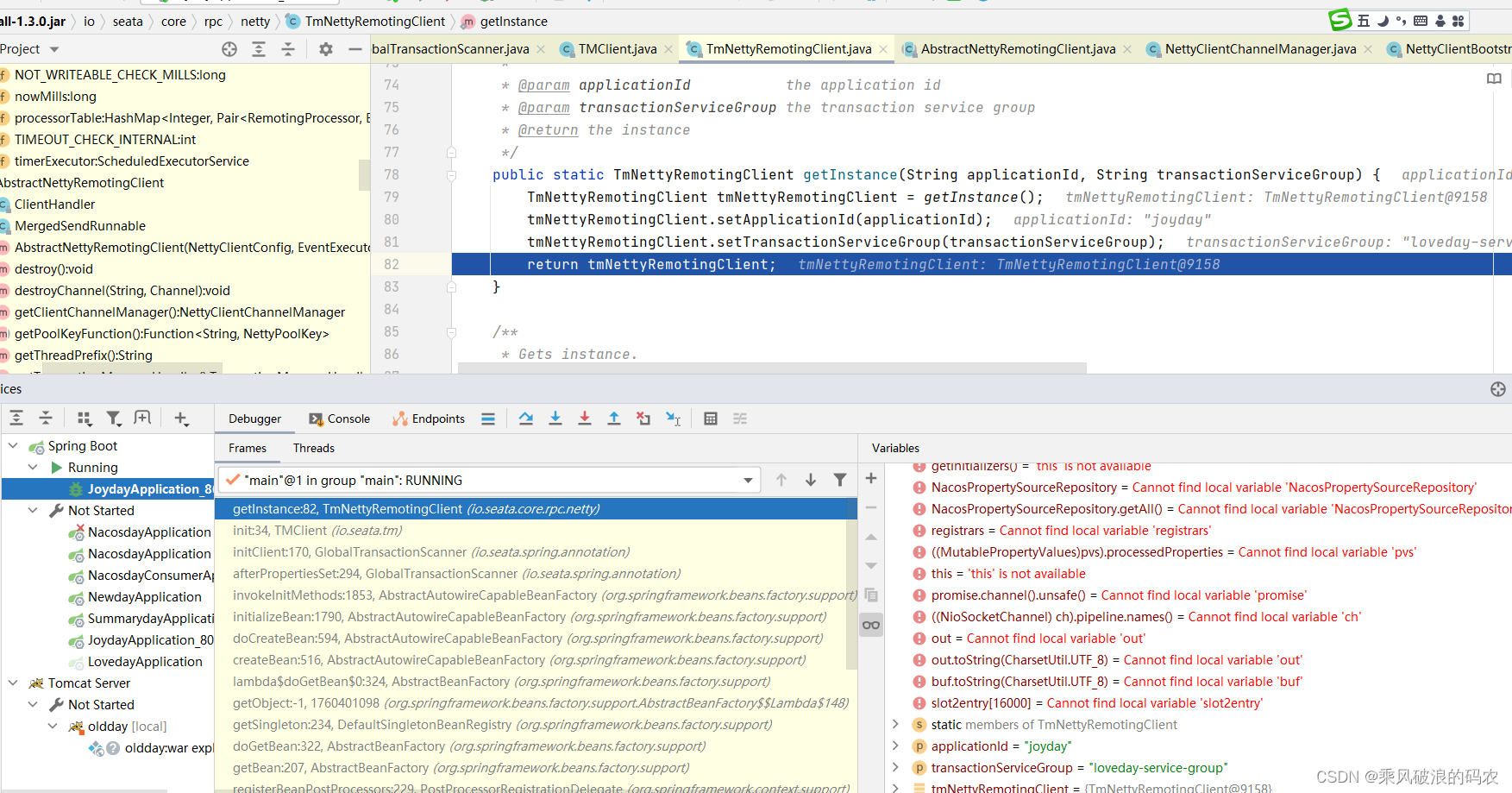

}3.注册TM,RM其实都是通过NETTY框架跟seata server的TCP长连接。

Let's look at the TM registration flow first.

```java
public class TMClient {

    /**
     * Init.
     *
     * @param applicationId           the application id
     * @param transactionServiceGroup the transaction service group
     */
    public static void init(String applicationId, String transactionServiceGroup) {
        TmNettyRemotingClient tmNettyRemotingClient = TmNettyRemotingClient.getInstance(applicationId, transactionServiceGroup);
        tmNettyRemotingClient.init();
    }
}
```

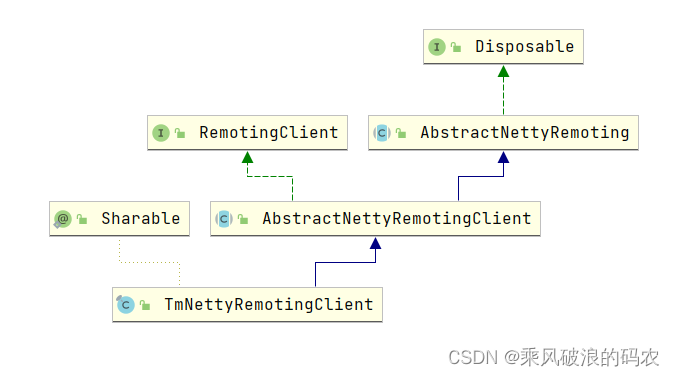

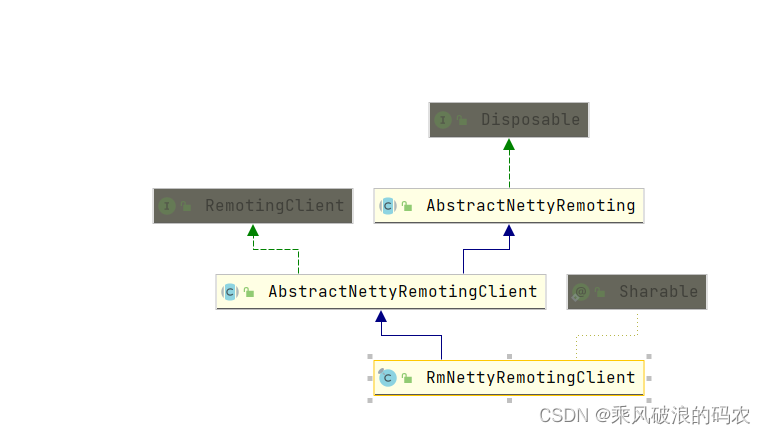

This shows the inheritance hierarchy of TmNettyRemotingClient.

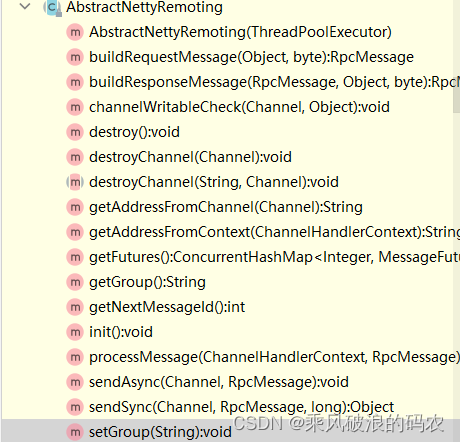

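3.1 AbstractNettyRemoting: the Seata Netty remoting base class shared by client and server. It wraps RPC messages in Seata's packet format, sends requests and responses synchronously or asynchronously, and provides a generic processMessage method that dispatches incoming messages to handlers by msgType.

RpcMessage is the protocol data structure used for communication between Seata clients and the server.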

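```java
public class RpcMessage {
    private int id;                                        // request id, used to match responses to requests
    private byte messageType;                              // e.g. request, response, heartbeat
    private byte codec;                                    // serializer used for the body
    private byte compressor;                               // compression applied to the body
    private Map<String, String> headMap = new HashMap<>(); // optional headers
    private Object body;                                   // the actual message object
    // getters and setters omitted
}
```

processorTable here is essentially a registry that maps message types to handlers: the msgType (type code) of the body in a received RpcMessage decides which processor handles it.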

protected final HashMap<Integer/*MessageType*/, Pair<RemotingProcessor, ExecutorService>> processorTable = new HashMap<>(32);

protected void processMessage(ChannelHandlerContext ctx, RpcMessage rpcMessage) throws Exception {

if (LOGGER.isDebugEnabled()) {

LOGGER.debug(String.format("%s msgId:%s, body:%s", this, rpcMessage.getId(), rpcMessage.getBody()));

}

Object body = rpcMessage.getBody();

if (body instanceof MessageTypeAware) {

MessageTypeAware messageTypeAware = (MessageTypeAware) body;

final Pair<RemotingProcessor, ExecutorService> pair = this.processorTable.get((int) messageTypeAware.getTypeCode());

if (pair != null) {

if (pair.getSecond() != null) {

try {

pair.getSecond().execute(() -> {

try {

pair.getFirst().process(ctx, rpcMessage);

} catch (Throwable th) {

LOGGER.error(FrameworkErrorCode.NetDispatch.getErrCode(), th.getMessage(), th);

}

});

} catch (RejectedExecutionException e) {

}

}

} else {

LOGGER.error("This message type [{}] has no processor.", messageTypeAware.getTypeCode());

}

} else {

LOGGER.error("This rpcMessage body[{}] is not MessageTypeAware type.", body);

}

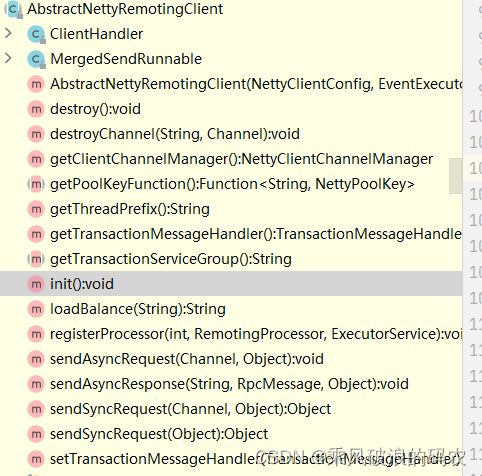

}3.2 AbstractNettyRemotingClient继承于AbstractNettyRemoting,并且组合了NettyClientBootstrap对象,用来实现netty的远程客户端实现类,包含了netty的bootstrap,channelHanler一类组件,以及注册统一的处理类。

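It also encapsulates the registration of a processor for each msgType.

ClientHandler here is a Netty ChannelDuplexHandler, a bidirectional handler that processes both inbound reads and outbound writes.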

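```java
class ClientHandler extends ChannelDuplexHandler {

    @Override
    public void channelRead(final ChannelHandlerContext ctx, Object msg) throws Exception {
        // only decoded Seata messages are handled; anything else is ignored
        if (!(msg instanceof RpcMessage)) {
            return;
        }
        // dispatch through processorTable via processMessage
        processMessage(ctx, (RpcMessage) msg);
    }

    // other handler methods omitted
}
```

3.3 Next, let's continue with what happens after TMClient is initialized.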

After initialization, the processors for the various msgTypes are registered.

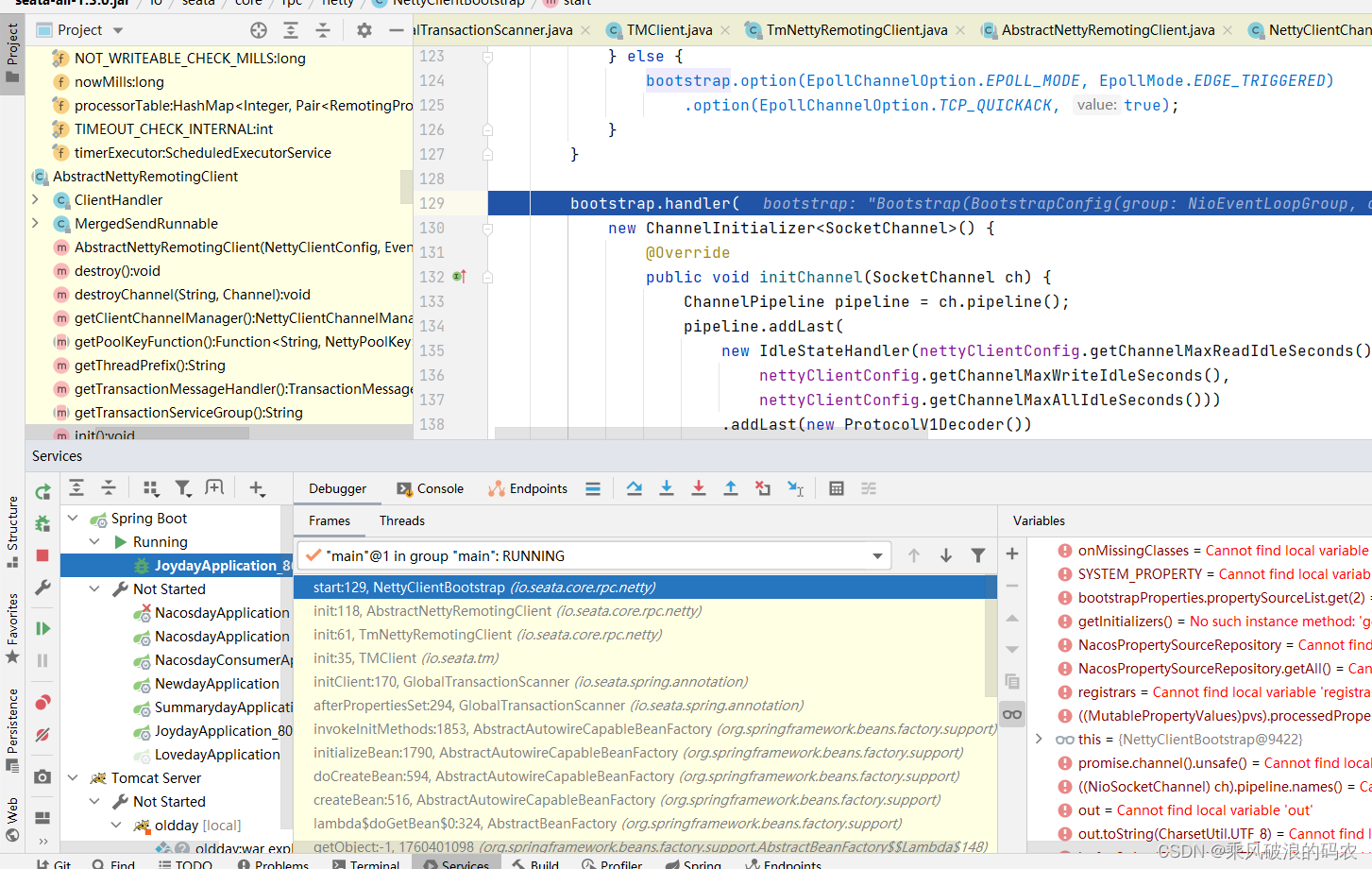
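The register-then-dispatch pattern of processorTable reduces to a map from type code to processor plus an optional executor. The sketch below is a hypothetical simplification (DispatchSketch, Processor and Typed are illustrative names, not Seata types): a null executor means "run inline", and untyped bodies or unknown type codes are rejected, matching the two error branches of processMessage.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.RejectedExecutionException;

/** Hypothetical simplification of processorTable + processMessage. */
class DispatchSketch {
    /** Stand-in for RemotingProcessor. */
    interface Processor {
        void process(Object body) throws Exception;
    }

    /** Stand-in for MessageTypeAware: the body reports its own type code. */
    interface Typed {
        short typeCode();
    }

    private final Map<Integer, Processor> processors = new HashMap<>();
    private final Map<Integer, ExecutorService> executors = new HashMap<>();

    /** Done once at client init; a null executor means "process inline on the I/O thread". */
    void registerProcessor(int typeCode, Processor processor, ExecutorService executor) {
        processors.put(typeCode, processor);
        if (executor != null) {
            executors.put(typeCode, executor);
        } else {
            executors.remove(typeCode);
        }
    }

    /** Returns false where the real code would log an error instead of processing. */
    boolean processMessage(Object body) {
        if (!(body instanceof Typed)) {
            return false; // "not MessageTypeAware type"
        }
        int code = ((Typed) body).typeCode();
        Processor processor = processors.get(code);
        if (processor == null) {
            return false; // "has no processor"
        }
        Runnable task = () -> {
            try {
                processor.process(body);
            } catch (Exception e) {
                System.err.println("dispatch failed: " + e); // Seata logs a NetDispatch error instead
            }
        };
        ExecutorService executor = executors.get(code);
        if (executor == null) {
            task.run();
            return true;
        }
        try {
            executor.execute(task);
            return true;
        } catch (RejectedExecutionException e) {
            return false; // thread pool full
        }
    }
}
```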

3.4 During initialization, NettyClientBootstrap.start() is called to set up the various Netty components.

```java
public void start() {
    // socket options for the client connections
    this.bootstrap.group(this.eventLoopGroupWorker)
        .channel(nettyClientConfig.getClientChannelClazz())
        .option(ChannelOption.TCP_NODELAY, true)
        .option(ChannelOption.SO_KEEPALIVE, true)
        .option(ChannelOption.CONNECT_TIMEOUT_MILLIS, nettyClientConfig.getConnectTimeoutMillis())
        .option(ChannelOption.SO_SNDBUF, nettyClientConfig.getClientSocketSndBufSize())
        .option(ChannelOption.SO_RCVBUF, nettyClientConfig.getClientSocketRcvBufSize());

    if (nettyClientConfig.enableNative()) {
        if (PlatformDependent.isOsx()) {
            if (LOGGER.isInfoEnabled()) {
                LOGGER.info("client run on macOS");
            }
        } else {
            // native epoll transport: edge-triggered mode and TCP quick ACK
            bootstrap.option(EpollChannelOption.EPOLL_MODE, EpollMode.EDGE_TRIGGERED)
                .option(EpollChannelOption.TCP_QUICKACK, true);
        }
    }

    bootstrap.handler(
        new ChannelInitializer<SocketChannel>() {
            @Override
            public void initChannel(SocketChannel ch) {
                ChannelPipeline pipeline = ch.pipeline();
                // idle detection first, then the Seata protocol v1 decoder/encoder
                pipeline.addLast(
                    new IdleStateHandler(nettyClientConfig.getChannelMaxReadIdleSeconds(),
                        nettyClientConfig.getChannelMaxWriteIdleSeconds(),
                        nettyClientConfig.getChannelMaxAllIdleSeconds()))
                    .addLast(new ProtocolV1Decoder())
                    .addLast(new ProtocolV1Encoder());
                // business handlers (the ClientHandler) go last
                if (channelHandlers != null) {
                    addChannelPipelineLast(ch, channelHandlers);
                }
            }
        });
}
```

Here channelHandlers is AbstractNettyRemotingClient's clientHandler, which calls processMessage and thereby dispatches each message to its specific processor.

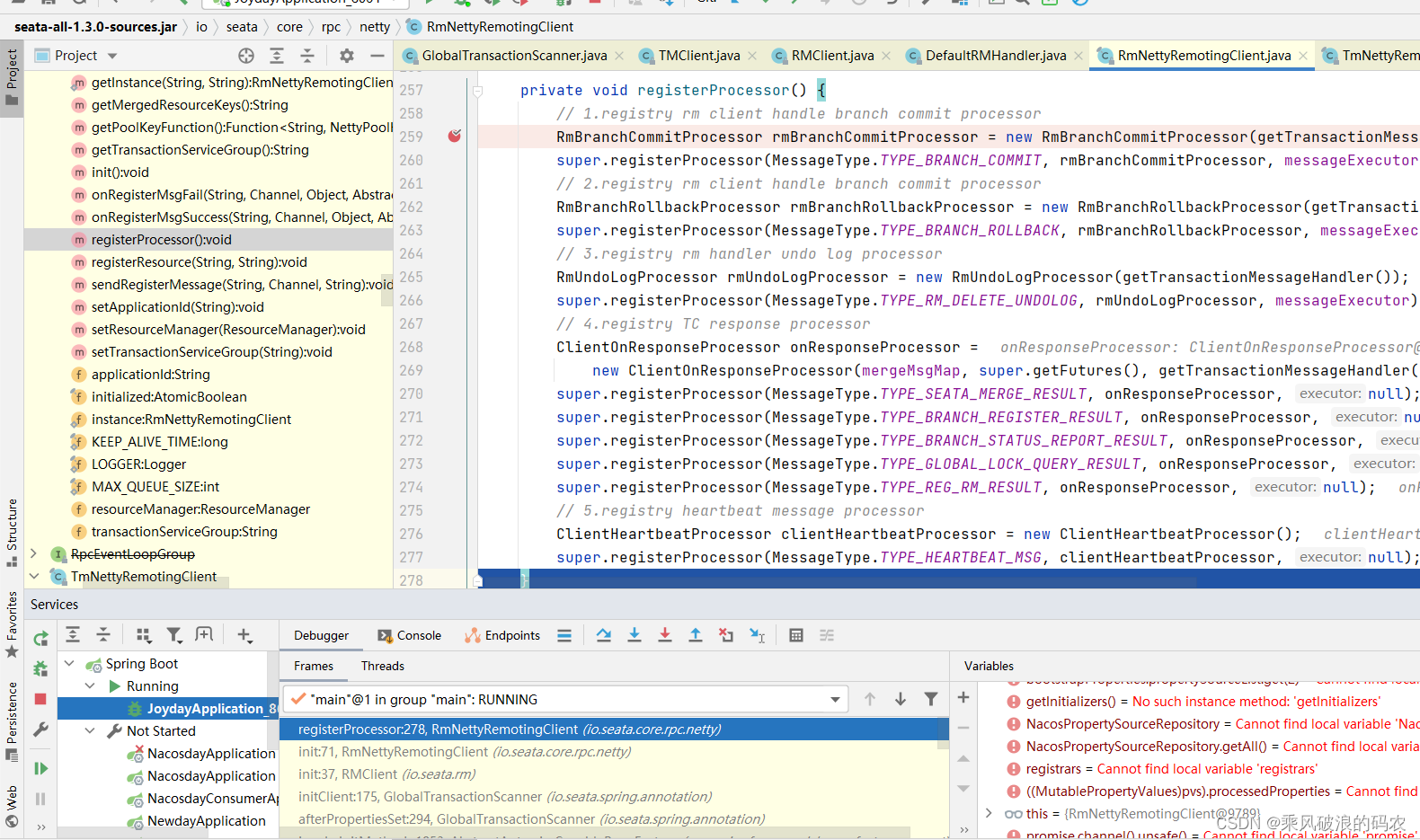
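The ProtocolV1Encoder/ProtocolV1Decoder pair in this pipeline converts between RpcMessage objects and bytes. As far as I understand Seata's v1 protocol, each frame starts with a fixed 16-byte header: a 2-byte magic code 0xdada, a 1-byte version, a 4-byte full length, a 2-byte head length, then message type, codec and compressor (1 byte each) and a 4-byte request id, followed by an optional head map and the body. The sketch below encodes and decodes only that fixed header around a raw byte body; FrameV1 is a hypothetical class, head-map and body serialization are omitted, and the layout should be checked against the ProtocolV1Encoder source of your Seata version.

```java
import java.nio.ByteBuffer;

/** Hypothetical codec for the fixed 16-byte v1 frame header; head map and body (de)serialization omitted. */
final class FrameV1 {
    static final short MAGIC = (short) 0xdada;
    static final byte VERSION = 1;
    static final int HEAD_LENGTH = 16; // fixed part only, no head map

    final byte messageType;
    final byte codec;
    final byte compressor;
    final int requestId;
    final byte[] body;

    FrameV1(byte messageType, byte codec, byte compressor, int requestId, byte[] body) {
        this.messageType = messageType;
        this.codec = codec;
        this.compressor = compressor;
        this.requestId = requestId;
        this.body = body;
    }

    byte[] encode() {
        int fullLength = HEAD_LENGTH + body.length;
        ByteBuffer buf = ByteBuffer.allocate(fullLength); // big-endian by default, like Netty's ByteBuf
        buf.putShort(MAGIC)               // 2 bytes: magic code
           .put(VERSION)                  // 1 byte : protocol version
           .putInt(fullLength)            // 4 bytes: head + body length
           .putShort((short) HEAD_LENGTH) // 2 bytes: head length
           .put(messageType)              // 1 byte : message type
           .put(codec)                    // 1 byte : serializer
           .put(compressor)               // 1 byte : compressor
           .putInt(requestId)             // 4 bytes: request id
           .put(body);
        return buf.array();
    }

    static FrameV1 decode(byte[] frame) {
        ByteBuffer buf = ByteBuffer.wrap(frame);
        if (buf.getShort() != MAGIC) {
            throw new IllegalArgumentException("bad magic code");
        }
        if (buf.get() != VERSION) {
            throw new IllegalArgumentException("unsupported protocol version");
        }
        int fullLength = buf.getInt();
        int headLength = buf.getShort();
        byte messageType = buf.get();
        byte codec = buf.get();
        byte compressor = buf.get();
        int requestId = buf.getInt();
        buf.position(headLength); // skip a head map, if present
        byte[] body = new byte[fullLength - headLength];
        buf.get(body);
        return new FrameV1(messageType, codec, compressor, requestId, body);
    }
}
```

The header fields line up with RpcMessage's id, messageType, codec and compressor, which is why the decoder can rebuild an RpcMessage before ClientHandler sees it.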

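3.5 RM initialization follows the same pattern. RmNettyRemotingClient and TmNettyRemotingClient extend the same base class; they register different processors, because the RM and TM roles have different responsibilities, and they handle the callback after a successful registration differently.

3.6 Actually registering the RM role with the server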

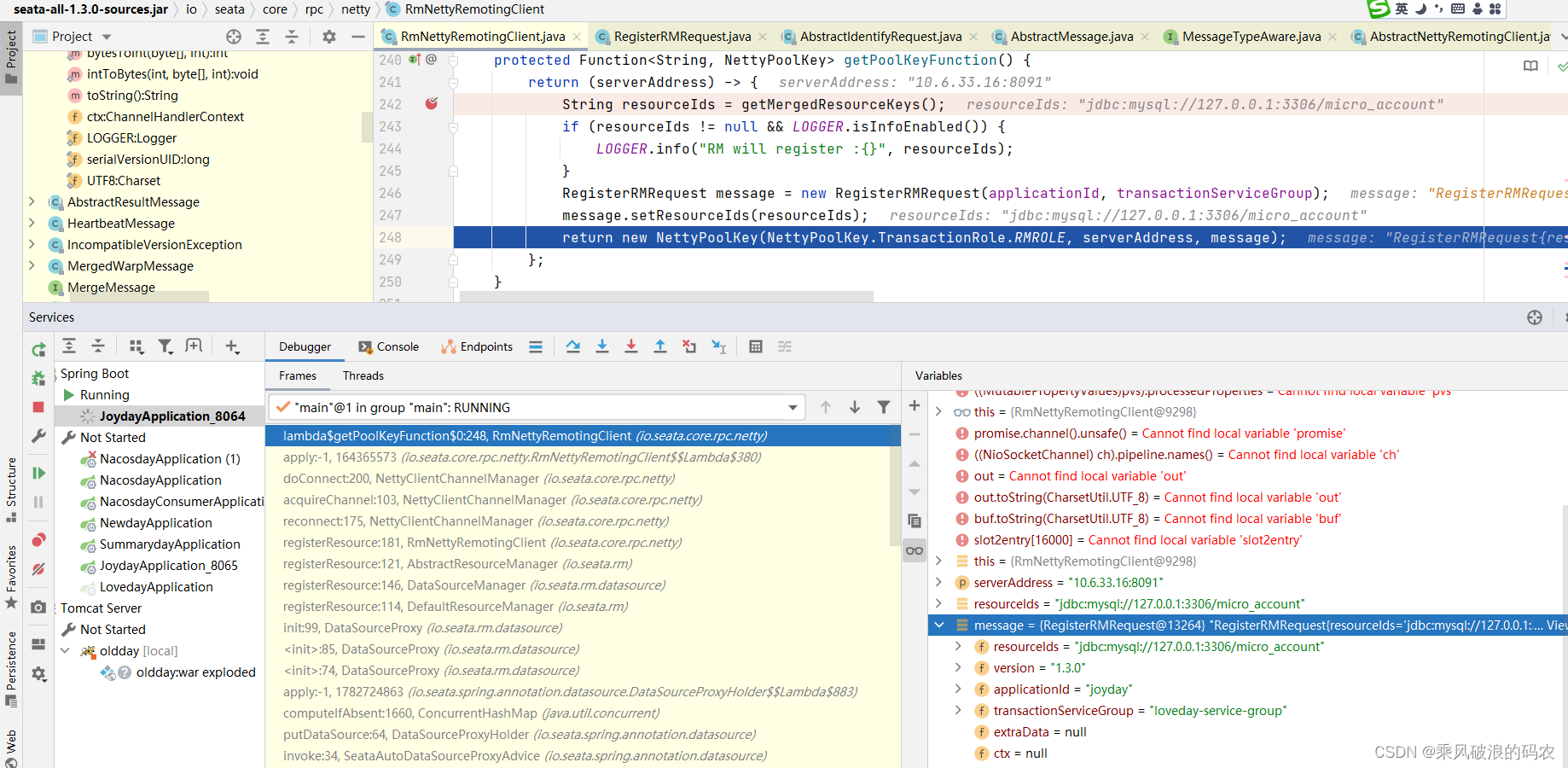

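3.6.1 First, a data resource is derived from the dataSource, and a RegisterRMRequest is built.

3.6.2 Connect to the server, create a new channel, and send the RegisterRMRequest to the server.

3.6.3 This is where the Netty TCP connection is actually established.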

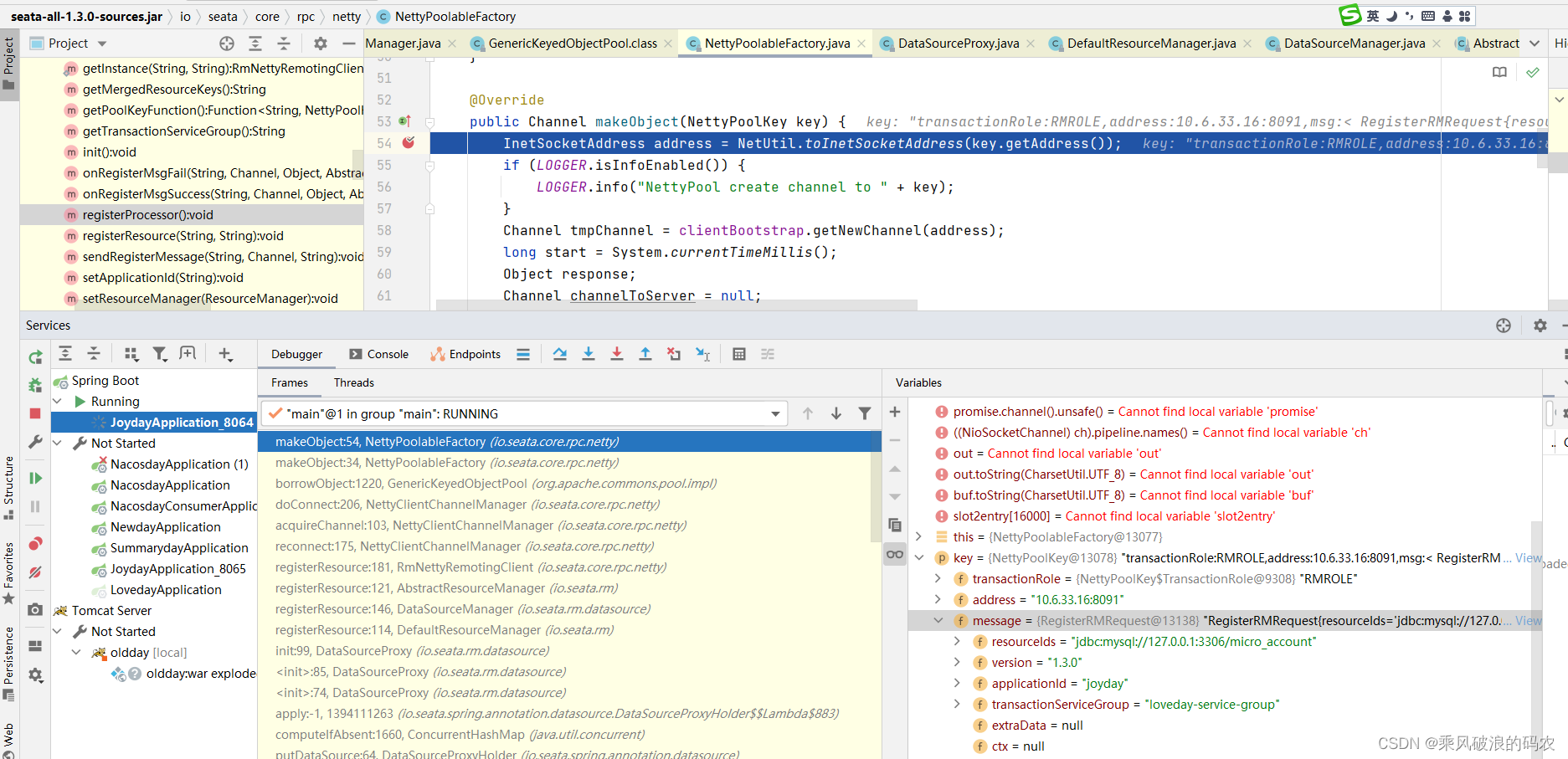
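Steps 3.6.1 to 3.6.3 boil down to one rule: connect, register, and only then hand the channel out. Here is a hypothetical sketch of that rule using a map keyed by address and role; as far as I can tell Seata itself keeps these channels in a keyed object pool keyed by NettyPoolKey, and FakeChannel, ChannelPoolSketch and the connector/register callbacks are illustrative names only. A pooled channel is reused while open, replaced once closed, and a channel whose registration fails is closed and never pooled.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.Predicate;

/** Hypothetical stand-in for a Netty Channel. */
class FakeChannel {
    final String address;
    volatile boolean open = true;

    FakeChannel(String address) {
        this.address = address;
    }

    void close() {
        open = false;
    }
}

/** Hypothetical channel pool: a channel is pooled only after the server accepts its register request. */
class ChannelPoolSketch {
    private final Map<String, FakeChannel> pool = new ConcurrentHashMap<>();
    private final Function<String, FakeChannel> connector; // plays clientBootstrap.getNewChannel
    private final Predicate<FakeChannel> register;         // plays sendSyncRequest + isRegisterSuccess

    ChannelPoolSketch(Function<String, FakeChannel> connector, Predicate<FakeChannel> register) {
        this.connector = connector;
        this.register = register;
    }

    /** Like makeObject: connect, register, and close the channel if registration fails. */
    private FakeChannel makeObject(String address, String role) {
        FakeChannel channel = connector.apply(address);
        boolean ok;
        try {
            ok = register.test(channel);
        } catch (RuntimeException e) {
            channel.close();
            throw new IllegalStateException("register " + role + " error: " + e.getMessage(), e);
        }
        if (!ok) {
            channel.close();
            throw new IllegalStateException("register " + role + " rejected by " + address);
        }
        return channel;
    }

    /** Reuse an open pooled channel for (address, role); otherwise create and register a new one. */
    FakeChannel acquire(String address, String role) {
        return pool.compute(address + "|" + role, (key, existing) ->
            existing != null && existing.open ? existing : makeObject(address, role));
    }
}
```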

NettyPoolableFactory.makeObject() first creates the connection and then sends the registration request:

public Channel makeObject(NettyPoolKey key) {

InetSocketAddress address = NetUtil.toInetSocketAddress(key.getAddress());

if (LOGGER.isInfoEnabled()) {

LOGGER.info("NettyPool create channel to " + key);

}

Channel tmpChannel = clientBootstrap.getNewChannel(address);

long start = System.currentTimeMillis();

Object response;

Channel channelToServer = null;

if (key.getMessage() == null) {

throw new FrameworkException("register msg is null, role:" + key.getTransactionRole().name());

}

try {

response = rpcRemotingClient.sendSyncRequest(tmpChannel, key.getMessage());

if (!isRegisterSuccess(response, key.getTransactionRole())) {

rpcRemotingClient.onRegisterMsgFail(key.getAddress(), tmpChannel, response, key.getMessage());

} else {

channelToServer = tmpChannel;

rpcRemotingClient.onRegisterMsgSuccess(key.getAddress(), tmpChannel, response, key.getMessage());

}

} catch (Exception exx) {

if (tmpChannel != null) {

tmpChannel.close();

}

throw new FrameworkException(

"register " + key.getTransactionRole().name() + " error, errMsg:" + exx.getMessage());

}

if (LOGGER.isInfoEnabled()) {

LOGGER.info("register success, cost " + (System.currentTimeMillis() - start) + " ms, version:" + getVersion(

response, key.getTransactionRole()) + ",role:" + key.getTransactionRole().name() + ",channel:"

+ channelToServer);

}

return channelToServer;

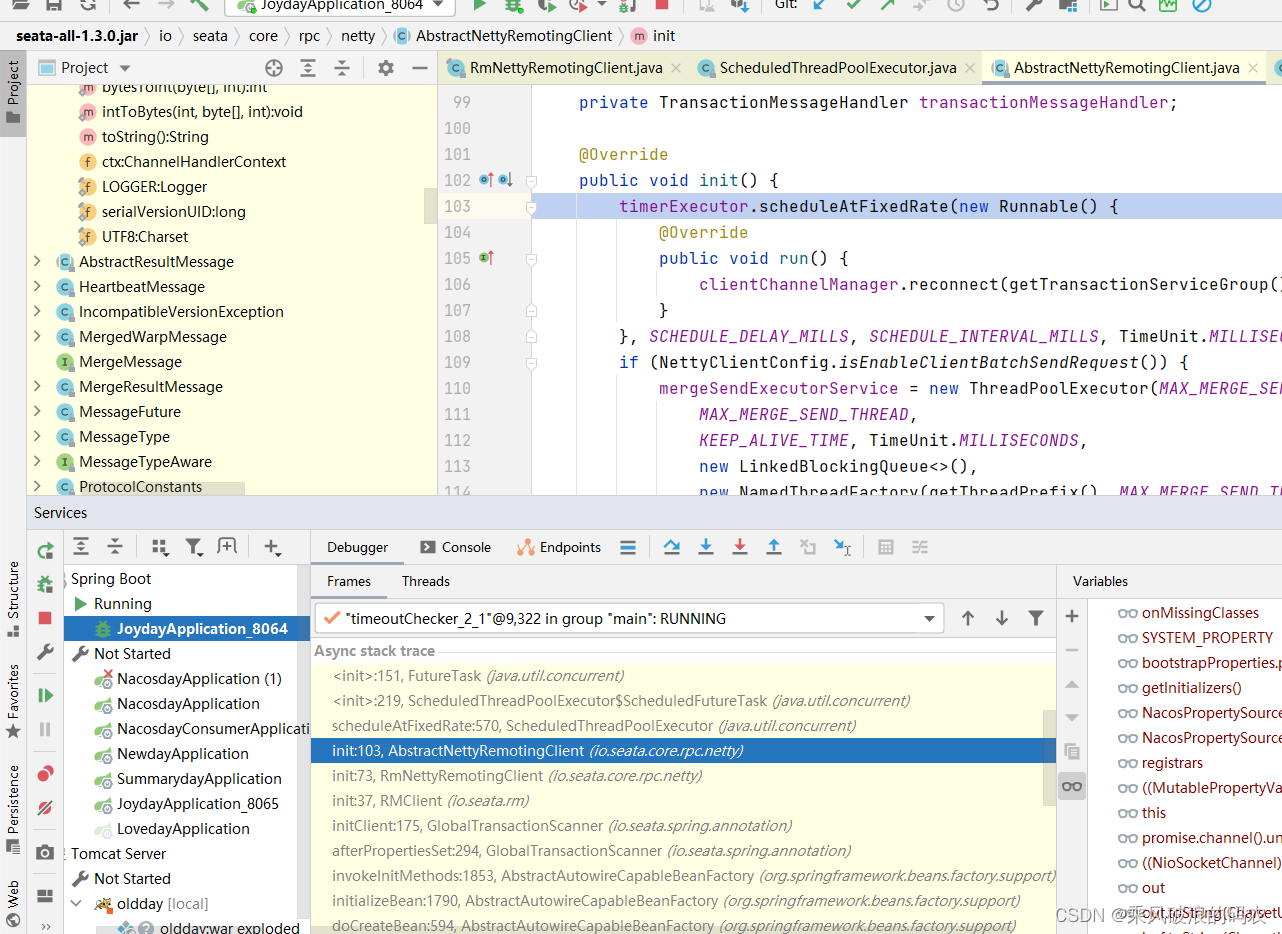

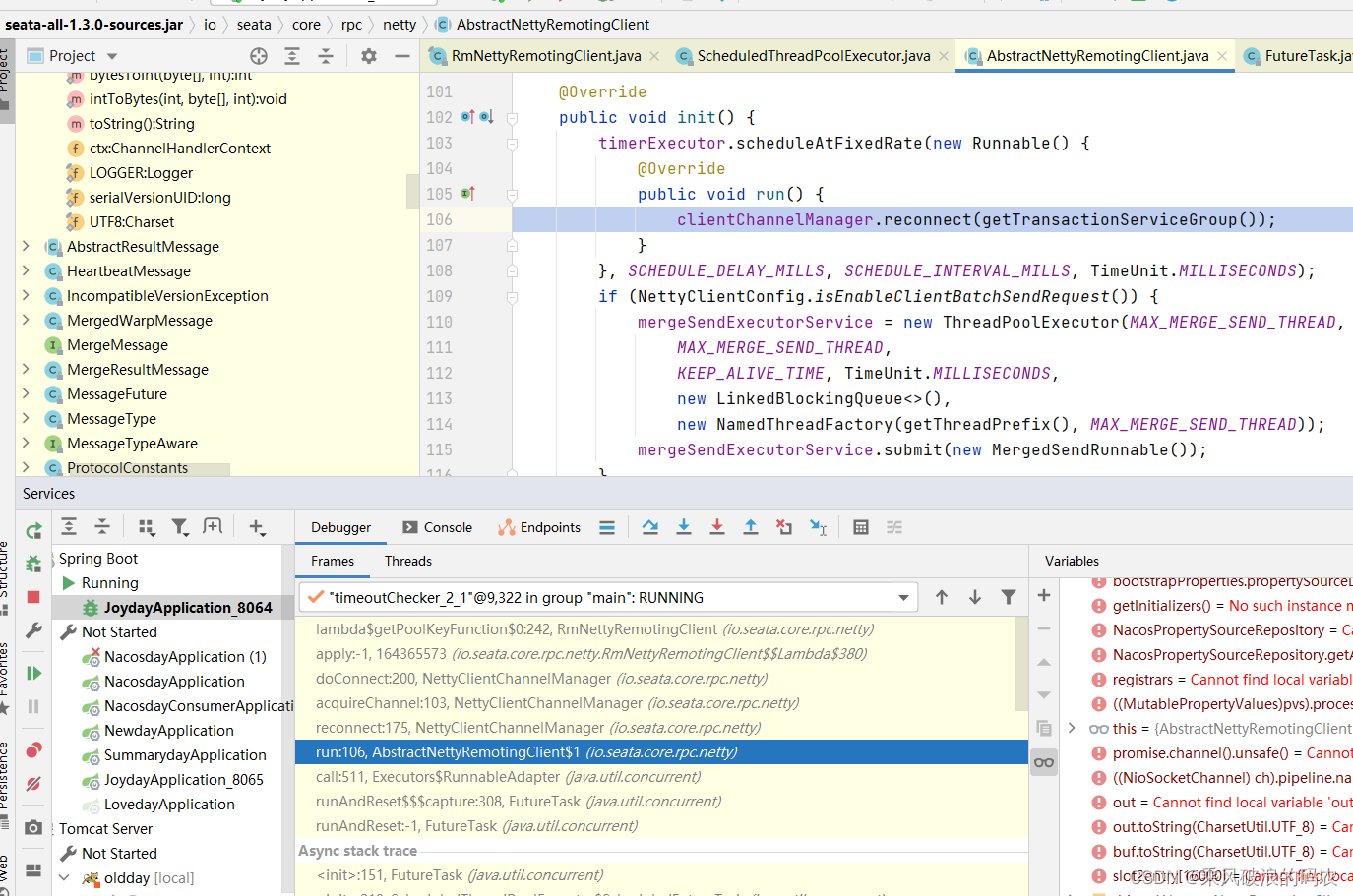

}还有一个定时任务会定时向服务端注册RM

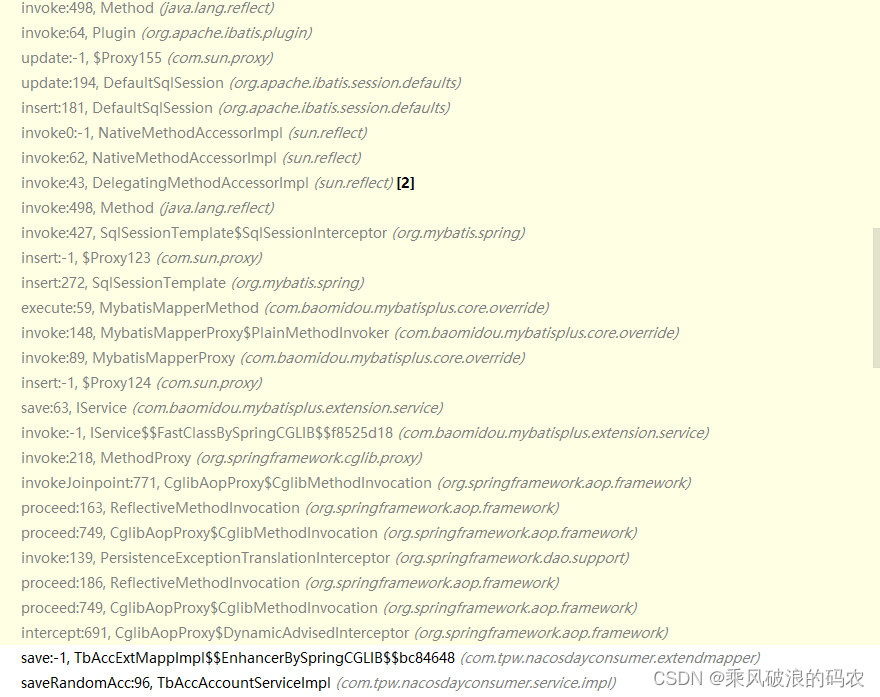

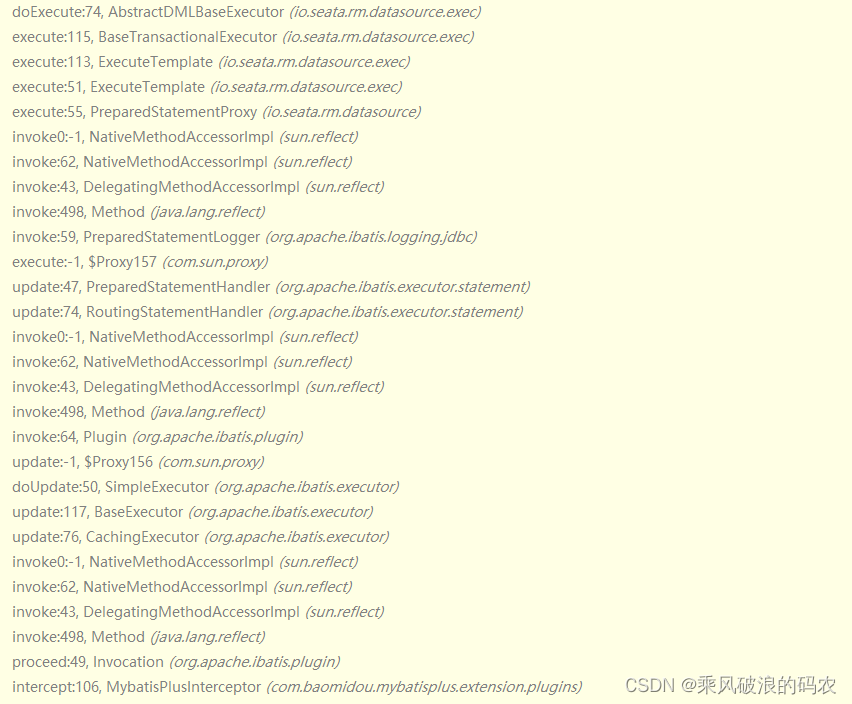

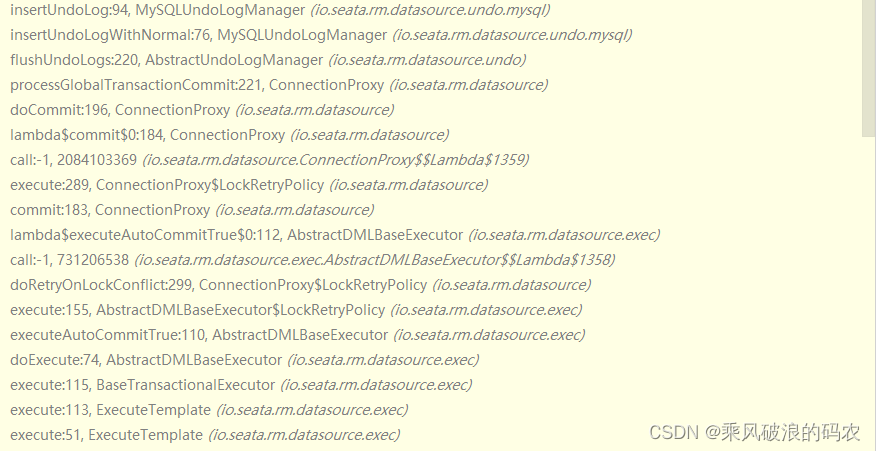
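A periodic re-registration task amounts to: look up the current server list, and register with every server that has no registered channel yet. The sketch below is hypothetical (ReconnectSketch, the lookup supplier and onChannelInactive are illustrative names; the real task's period and method names should be taken from the screenshots above or the Seata source). Its body is wrapped in try/catch because ScheduledExecutorService.scheduleAtFixedRate suppresses all further runs of a task once it throws.

```java
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Hypothetical periodic re-registration loop. */
class ReconnectSketch {
    private final Supplier<List<String>> lookup; // e.g. registry lookup of the tx group's servers
    private final Set<String> registered = ConcurrentHashMap.newKeySet();
    final AtomicInteger registrations = new AtomicInteger();
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

    ReconnectSketch(Supplier<List<String>> lookup) {
        this.lookup = lookup;
    }

    /** One pass: register with every known server that has no registered channel yet. */
    void reconnectOnce() {
        for (String address : lookup.get()) {
            if (registered.add(address)) {
                // a real client would acquire a pooled channel here, which sends the register request
                registrations.incrementAndGet();
            }
        }
    }

    /** Mark a server's channel as lost so that the next pass registers again. */
    void onChannelInactive(String address) {
        registered.remove(address);
    }

    void start(long periodMillis) {
        timer.scheduleAtFixedRate(() -> {
            try {
                reconnectOnce();
            } catch (RuntimeException e) {
                // swallow so the timer keeps running; a task that throws is never rescheduled
            }
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
    }

    void stop() {
        timer.shutdownNow();
    }
}
```

Because each pass only registers missing servers, a server that restarts or a newly added cluster node is picked up on the next run without any explicit notification.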

V. Verifying the phase-1 commit and the undo log flush flow

1. Call stack for flushing undo logs
