I. Background

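I have deployed this setup in production several times, and this version works without pitfalls. Adjust the specific configuration to your own environment; this tutorial only provides a quick-deployment recipe.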

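Background: most of our to-G (government-facing) projects are delivered on-premises, so the monitoring software must be deployed inside the customer's internal network. To reduce deployment cost, we chose docker + docker-compose for one-command deployment, so that even ops staff who do not know Docker can deploy it with a few simple commands.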

Components:

Standard version: prometheus + alertmanager + grafana + consul + node-exporter

Multi-cluster version: prometheus + alertmanager + grafana + consul + node-exporter + VM (VictoriaMetrics, used as remote storage)

II. Deployment

1. Initialize the directories

```shell
mkdir -p /opt/monitor/prometheus/{data,conf}
mkdir -p /opt/monitor/alertmanager/conf
mkdir -p /opt/monitor/grafana/data
mkdir -p /opt/monitor/consul/data
```

2. Copy the default config files out of the containers to the host (prometheus, alertmanager)

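```shell
docker run -d --name prometheus prom/prometheus
docker cp prometheus:/etc/prometheus/prometheus.yml /opt/monitor/prometheus/conf/
# remove the temporary container, otherwise its name clashes with
# container_name: prometheus in the compose file later
docker rm -f prometheus
```

Do the same for alertmanager. Its default config file inside the image is /etc/alertmanager/alertmanager.yml.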
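A variant of the copy step that never starts a container, so nothing is left behind to clash with the compose file. This is a minimal sketch. It assumes the default config paths inside the images given above, and `copy_default_config` is a hypothetical helper name. It uses `docker create` to get a stopped container, copies the file out, and then removes the container.

```shell
# Hypothetical helper: copy a default config file out of an image without
# starting a container, then remove the temporary container.
# Usage: copy_default_config <image> <path-in-image> <host-dest-dir>
copy_default_config() {
  local image="$1" src="$2" dest="$3"
  local cid
  # docker create only creates the container and prints its ID; nothing runs
  cid="$(docker create "$image")" || return 1
  docker cp "${cid}:${src}" "$dest"
  local rc=$?
  # always remove the temporary container, even if the copy failed
  docker rm "$cid" >/dev/null
  return "$rc"
}
```

For example, `copy_default_config prom/prometheus /etc/prometheus/prometheus.yml /opt/monitor/prometheus/conf/` and `copy_default_config prom/alertmanager /etc/alertmanager/alertmanager.yml /opt/monitor/alertmanager/conf/`.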

Alternatively, use the config files below directly.

```shell
vi /opt/monitor/prometheus/conf/prometheus.yml
```

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "node-exporter"
    consul_sd_configs:
      - server: 'consul-server-ip:8500'   # replace with the Consul host's IP
        # only discover services registered as node_exporter (see add.sh),
        # so Consul's own "consul" service is not scraped
        services: ['node_exporter']
```

```shell
vi /opt/monitor/alertmanager/conf/alertmanager.yml
```

```yaml
route:
  group_by: ['alertname']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: 'web.hook'
receivers:
  - name: 'web.hook'
    webhook_configs:
      # placeholder from the default config: 127.0.0.1 is the alertmanager
      # container itself, so point this at your real webhook receiver
      - url: 'http://127.0.0.1:5001/'
inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']
```
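The flattened YAML above is easy to mis-indent, so it is worth validating both files before starting anything. This is a minimal sketch. It assumes the directory layout from step 1 and relies on `promtool` and `amtool`, which ship inside the `prom/prometheus` and `prom/alertmanager` images. The function names are hypothetical.

```shell
# Base directory from step 1; override by exporting MONITOR_DIR.
MONITOR_DIR="${MONITOR_DIR:-/opt/monitor}"

# Hypothetical helper: validate prometheus.yml with promtool from the image
check_prometheus_config() {
  docker run --rm \
    -v "${MONITOR_DIR}/prometheus/conf:/etc/prometheus" \
    --entrypoint promtool prom/prometheus \
    check config /etc/prometheus/prometheus.yml
}

# Hypothetical helper: validate alertmanager.yml with amtool from the image
check_alertmanager_config() {
  docker run --rm \
    -v "${MONITOR_DIR}/alertmanager/conf:/etc/alertmanager" \
    --entrypoint amtool prom/alertmanager \
    check-config /etc/alertmanager/alertmanager.yml
}
```

Both commands exit non-zero and print the offending line when a file does not parse.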

3. Write the compose file

```shell
vi /opt/monitor/monitor-compose.yml
```

```yaml
version: '3.3'

networks:
  monitor:
    driver: bridge

services:
  prometheus:
    image: prom/prometheus
    container_name: prometheus
    hostname: prometheus
    restart: always
    user: root
    volumes:
      - ./prometheus/conf:/etc/prometheus
      - ./prometheus/data:/prometheus
    ports:
      - "9090:9090"
    networks:
      - monitor
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
      - '--storage.tsdb.path=/prometheus'

  alertmanager:
    image: prom/alertmanager
    container_name: alertmanager
    hostname: alertmanager
    user: root
    restart: always
    volumes:
      - ./alertmanager/conf:/etc/alertmanager
    ports:
      - "9094:9093"
    networks:
      - monitor

  grafana:
    image: grafana/grafana
    container_name: grafana
    hostname: grafana
    user: root
    restart: always
    ports:
      - "3000:3000"
    volumes:
      - ./grafana/data:/var/lib/grafana
    networks:
      - monitor

  node-exporter:
    image: quay.io/prometheus/node-exporter
    container_name: node-exporter
    hostname: node-exporter
    restart: always
    user: root
    ports:
      - "9100:9100"
    networks:
      - monitor

  consul:
    image: consul:1.9.4
    container_name: "consul"
    restart: always
    user: root
    ports:
      - "8500:8500"
    volumes:
      - ./consul/data:/consul/data
    command: [agent,-server,-ui,-client=0.0.0.0,-bootstrap-expect=1]
```

Create the host directories and files mounted above yourself before starting the stack.
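A quick pre-flight check catches the two most common start-up failures: a missing bind-mount directory and a compose file broken by indentation. This is a minimal sketch. The directory list mirrors the volumes in the compose file above. The helper names are hypothetical, and `/opt/monitor` is assumed as the base directory.

```shell
# Hypothetical helper: print bind-mount directories that do not exist yet.
# Returns 1 if any are missing, 0 otherwise.
missing_mount_dirs() {
  local base="${1:-/opt/monitor}" d rc=0
  for d in prometheus/conf prometheus/data alertmanager/conf grafana/data consul/data; do
    if [ ! -d "${base}/${d}" ]; then
      echo "missing: ${base}/${d}"
      rc=1
    fi
  done
  return "$rc"
}

# Hypothetical helper: parse the compose file without starting anything;
# "config -q" prints nothing and exits non-zero if the file is invalid.
validate_compose() {
  local file="${1:-/opt/monitor/monitor-compose.yml}"
  docker-compose -f "$file" config -q
}
```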

4. Start the stack

```shell
cd /opt/monitor
docker-compose -f monitor-compose.yml up -d
```

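5. Open the web UIs to verify: Prometheus on port 9090, Alertmanager on 9094 (mapped to container port 9093), Grafana on 3000, Consul on 8500 (details omitted; I have tested this many times without issues).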
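The same verification can be scripted from the command line. This is a minimal sketch that uses each component's standard health or status endpoint, with ports taken from the compose file. The helper name `check_stack` is hypothetical, and the host defaults to 127.0.0.1 as an assumption.

```shell
# Hypothetical helper: probe each component over HTTP; returns 1 if any fail.
check_stack() {
  local host="${1:-127.0.0.1}" rc=0 entry name url
  local endpoints=(
    "prometheus=http://${host}:9090/-/healthy"
    "alertmanager=http://${host}:9094/-/healthy"
    "grafana=http://${host}:3000/api/health"
    "consul=http://${host}:8500/v1/status/leader"
    "node-exporter=http://${host}:9100/metrics"
  )
  for entry in "${endpoints[@]}"; do
    name="${entry%%=*}"   # text before the first '='
    url="${entry#*=}"     # text after the first '='
    # -f: fail on HTTP errors, -s: silent, -o /dev/null: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "OK   ${name}"
    else
      echo "FAIL ${name} (${url})"
      rc=1
    fi
  done
  return "$rc"
}
```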

6. Troubleshooting

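(1) Check the containers' health status

```shell
docker ps
```

(2) If something looks wrong, check the container's logs for errors

```shell
docker logs -f <container-name>
```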
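The two checks can be combined into one pass over all containers. This is a minimal sketch. The container names match `container_name` in the compose file, and `container_report` is a hypothetical helper name. It prints each container's state and, for any container that exists but is not running, the last 20 log lines.

```shell
# Hypothetical helper: report container states and tail logs of stopped ones.
container_report() {
  local c state
  for c in prometheus alertmanager grafana node-exporter consul; do
    # docker inspect fails if the container does not exist at all
    state="$(docker inspect -f '{{.State.Status}}' "$c" 2>/dev/null)" || state="missing"
    echo "${c}: ${state}"
    if [ "$state" != "running" ] && [ "$state" != "missing" ]; then
      docker logs --tail 20 "$c" 2>&1
    fi
  done
}
```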

7. Batch-register node_exporter instances in Consul

```shell
mkdir /opt/monitor/consul_script
cd /opt/monitor/consul_script   # both scripts read the ips file from the current directory
vi add.sh
```

add.sh:

```shell
#!/bin/bash
exporter_name=node_exporter
port=9100
consul_ip=192.168.10.10
env=prod

for ip in $(cat ips)
do
  curl -X PUT -d '{"id": "'${exporter_name}_${ip}'","name": "'${exporter_name}'","address": "'${ip}'","port": '${port}',"tags": ["'${env}'"],"checks": [{"http": "http://'${ip}':'${port}'/metrics", "interval": "5s"}]}' http://${consul_ip}:8500/v1/agent/service/register
done
```

delete.sh (`vi delete.sh`):

```shell
#!/bin/bash
exporter_name=node_exporter
consul_ip=192.168.10.10

for ip in $(cat ips)
do
  curl -X PUT http://${consul_ip}:8500/v1/agent/service/deregister/${exporter_name}_${ip}
done
```

ips (`cat ips`):

```text
192.168.10.11
192.168.10.12
```

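Put the IPs of the hosts whose node_exporter should be registered into `ips` (one per line), adjust the variables in add.sh, then run `bash add.sh`. delete.sh deregisters the same services.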
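The JSON one-liner in add.sh is hard to read and easy to break when editing. The sketch below splits it into a payload builder that produces the same body, plus a loop that reads the IP file line by line. It is a minimal rewrite under the same assumptions as add.sh (Consul agent on port 8500, an HTTP check on `/metrics`). The function names are hypothetical.

```shell
# Hypothetical helper: build the same registration body add.sh sends.
# Usage: consul_payload <exporter_name> <ip> <port> <env>
consul_payload() {
  local name="$1" ip="$2" port="$3" env="$4"
  printf '{"id": "%s_%s","name": "%s","address": "%s","port": %s,"tags": ["%s"],"checks": [{"http": "http://%s:%s/metrics", "interval": "5s"}]}\n' \
    "$name" "$ip" "$name" "$ip" "$port" "$env" "$ip" "$port"
}

# Hypothetical helper: register every IP in the file (one per line).
# Usage: register_all <consul_ip> <ips_file> [name] [port] [env]
register_all() {
  local consul_ip="$1" ips_file="$2"
  local name="${3:-node_exporter}" port="${4:-9100}" env="${5:-prod}" ip
  # "|| [ -n "$ip" ]" also handles a last line without a trailing newline
  while read -r ip || [ -n "$ip" ]; do
    [ -z "$ip" ] && continue   # skip blank lines
    curl -s -X PUT -d "$(consul_payload "$name" "$ip" "$port" "$env")" \
      "http://${consul_ip}:8500/v1/agent/service/register"
  done < "$ips_file"
}
```

After registering, `curl http://192.168.10.10:8500/v1/agent/services` lists what the agent now knows about.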

III. Remote storage with VictoriaMetrics

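VictoriaMetrics (VM for short) is a highly available, cost-effective, and scalable monitoring solution and time-series database. It can serve as long-term remote storage for Prometheus data. It also addresses the pain of managing multiple Prometheus clusters in one place: the data from several prometheus-server instances is stored in a single VM cluster, which aggregates it.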

This walkthrough deploys VM as a single node.

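1. Create the data directory, then add the following service under `services:` in the compose file

```shell
mkdir /opt/monitor/victoriametrics
```

```yaml
  victoriametrics:
    image: victoriametrics/victoria-metrics
    volumes:
      - ./victoriametrics:/victoriametrics
    ports:
      - "8428:8428"
    # must share the network with prometheus and grafana,
    # otherwise the name "victoriametrics" does not resolve from them
    networks:
      - monitor
    command:
      - '-storageDataPath=/victoriametrics'
      - '-retentionPeriod=1'   # a bare number means months: keep 1 month of data
```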

```shell
docker-compose -f monitor-compose.yml up -d
```
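VictoriaMetrics needs a moment to open its storage, so it helps to wait until it is ready before pointing Prometheus at it. This is a minimal sketch. It relies on single-node VM answering `GET /health` with `OK` and assumes the 8428 port mapping above. `wait_vm_ready` is a hypothetical helper name.

```shell
# Hypothetical helper: poll VM's /health endpoint until it answers "OK".
# Usage: wait_vm_ready [base_url] [max_tries]
wait_vm_ready() {
  local base="${1:-http://127.0.0.1:8428}" tries="${2:-30}" i
  for ((i = 0; i < tries; i++)); do
    if [ "$(curl -fs --max-time 5 "${base}/health")" = "OK" ]; then
      return 0
    fi
    sleep 1   # wait a second between attempts
  done
  return 1
}
```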

2. Update the Prometheus config file

```shell
vim /opt/monitor/prometheus/conf/prometheus.yml
```

```yaml
...

remote_write:
  - url: "http://victoriametrics:8428/api/v1/write"

scrape_configs:
  ...
  - job_name: 'victoriametrics'
    static_configs:
      - targets: ['victoriametrics:8428']

...
```

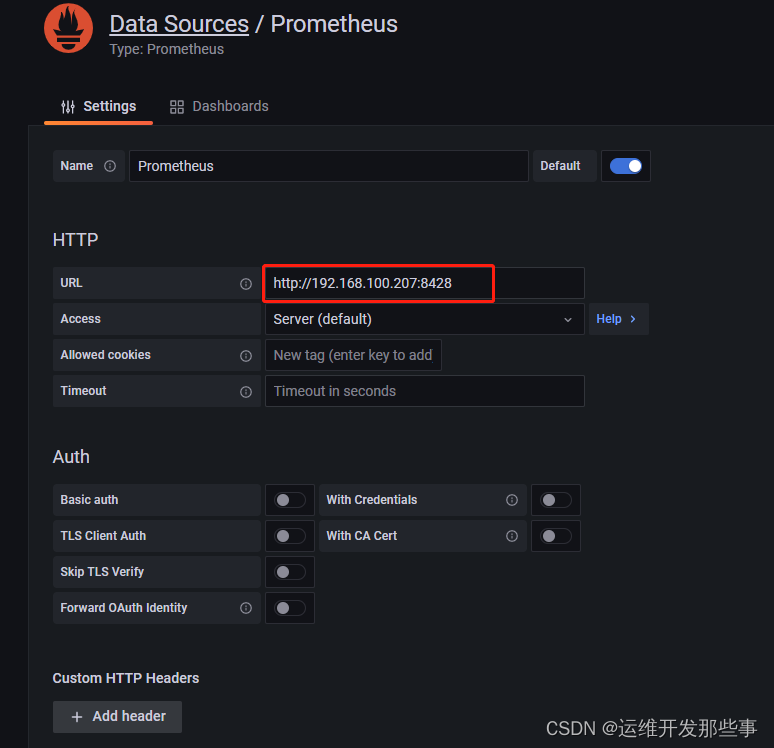
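Prometheus only picks up the new `remote_write` section after a reload. Prometheus re-reads its config on SIGHUP, so `docker restart prometheus` is not strictly required. This is a minimal sketch with hypothetical helper names. It assumes the container name `prometheus` from the compose file and VM on host port 8428. The second helper queries VM's Prometheus-compatible API for `up`. A non-empty `result` array in the response means remote-written data is arriving.

```shell
# Hypothetical helper: ask Prometheus to reload prometheus.yml via SIGHUP
reload_prometheus() {
  docker kill --signal=HUP prometheus
}

# Hypothetical helper: query VictoriaMetrics for the "up" series
# (-G sends --data-urlencode parameters as a GET query string)
vm_query_up() {
  local base="${1:-http://127.0.0.1:8428}"
  curl -fsG --max-time 5 --data-urlencode 'query=up' "${base}/api/v1/query"
}
```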

3. Update the Grafana data source

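VM is compatible with Grafana's Prometheus data source type, so you only need to change the data source URL to VM's address (port 8428).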
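The data source can also be created through Grafana's HTTP API instead of the UI. This is a minimal sketch built on `POST /api/datasources`. It assumes the default `admin:admin` credentials are still in place and that the name `victoriametrics` resolves from the grafana container, which requires both services to be on the same Docker network. `add_vm_datasource` is a hypothetical helper name.

```shell
# Hypothetical helper: create a Prometheus-type data source pointing at VM.
# Usage: add_vm_datasource [grafana_url] [user:password] [vm_url]
add_vm_datasource() {
  local grafana="${1:-http://127.0.0.1:3000}" auth="${2:-admin:admin}"
  local vm_url="${3:-http://victoriametrics:8428}"
  curl -fs -u "$auth" -H 'Content-Type: application/json' \
    -X POST "${grafana}/api/datasources" \
    -d "{\"name\":\"VictoriaMetrics\",\"type\":\"prometheus\",\"url\":\"${vm_url}\",\"access\":\"proxy\"}"
}
```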
