Sink

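Flink has no method like Spark's `foreach` that lets users iterate over the data and write it out themselves. All output to external systems goes through a Sink, and the final output of a job is usually wired up like this:

```scala
myDstream.addSink(new MySink(xxxx))
```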

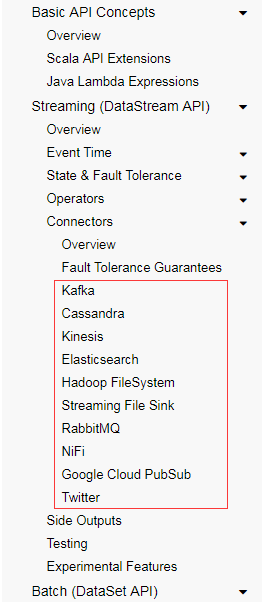

Flink ships official sinks for a number of frameworks. For anything else, you implement a custom sink yourself.
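The contract a custom sink fills in can be sketched without Flink on the classpath. The plain-Scala model below is hypothetical: `SketchSink`, `RecordingSink` and `run` are illustrative names, not Flink classes. It only mirrors the call order a `RichSinkFunction` goes through: `open` once per parallel instance, `invoke` once per record, `close` once at the end.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical model of a sink's lifecycle; NOT a Flink API.
trait SketchSink[T] {
  def open(): Unit           // acquire resources (connections, clients)
  def invoke(value: T): Unit // handle one record
  def close(): Unit          // release resources
}

object SinkLifecycleDemo {
  // Drives a sink the way a runtime would: open once, invoke per record, close once.
  def run[T](records: Seq[T], sink: SketchSink[T]): Unit = {
    sink.open()
    try records.foreach(sink.invoke)
    finally sink.close() // close runs even if a record fails
  }

  // A sink that only records which callbacks were made, in order.
  class RecordingSink extends SketchSink[String] {
    val events = ListBuffer.empty[String]
    override def open(): Unit = events += "open"
    override def invoke(value: String): Unit = events += s"invoke:$value"
    override def close(): Unit = events += "close"
  }

  def main(args: Array[String]): Unit = {
    val sink = new RecordingSink
    run(Seq("a", "b"), sink)
    println(sink.events.mkString(", "))
  }
}
```

Expensive resources such as database connections belong in `open`, not `invoke`; `MyJdbcSink` in the JDBC section follows that pattern.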

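To guarantee end-to-end exactly-once record delivery (in addition to exactly-once state semantics), a data sink must participate in Flink's checkpointing mechanism. The following table lists the delivery guarantees of Flink combined with the bundled sinks (assuming exactly-once state updates):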
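Why the sink must take part in checkpoints can be illustrated with a simplified plain-Scala model. This is not Flink's actual mechanism; `StagingSink`, `simulate` and the replay offset are hypothetical. A transactional sink stages records and publishes them only when a checkpoint completes. After a failure, the source replays from the last checkpoint. A sink that writes immediately therefore publishes the replayed record twice.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical model, NOT Flink's API.
// transactional = false models a sink that writes immediately (at-least-once).
class StagingSink(transactional: Boolean) {
  val committed = ListBuffer.empty[String]       // visible in the external system
  private val pending = ListBuffer.empty[String] // staged since the last checkpoint

  def invoke(value: String): Unit =
    if (transactional) pending += value else committed += value

  // Checkpoint completed: publish everything staged so far.
  def notifyCheckpointComplete(): Unit = { committed ++= pending; pending.clear() }

  // Job failed: staged but uncommitted records are discarded.
  def abort(): Unit = pending.clear()
}

object ExactlyOnceDemo {
  def simulate(transactional: Boolean): List[String] = {
    val input = Vector("r1", "r2", "r3", "r4")
    val sink = new StagingSink(transactional)
    input.take(2).foreach(sink.invoke)                   // r1, r2
    sink.notifyCheckpointComplete()                      // checkpoint stores source offset 2
    val checkpointedOffset = 2
    sink.invoke(input(2))                                // r3 is written...
    sink.abort()                                         // ...then the job fails
    input.drop(checkpointedOffset).foreach(sink.invoke)  // restart replays r3, r4
    sink.notifyCheckpointComplete()
    sink.committed.toList
  }

  def main(args: Array[String]): Unit = {
    println(simulate(transactional = true))  // List(r1, r2, r3, r4)
    println(simulate(transactional = false)) // List(r1, r2, r3, r3, r4)
  }
}
```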

I. Kafka

- pom.xml file

```xml
<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-kafka-0.11 -->
<dependency>
    <groupId>org.apache.flink</groupId>
    <!--<artifactId>flink-connector-kafka_2.12</artifactId>-->
    <artifactId>flink-connector-kafka-0.10_2.12</artifactId>
    <version>1.7.2</version>
</dependency>
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.47</version>
</dependency>
```

- Add methods to FlinkKafkaUtil

```scala
package com.lxk.util

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer010, FlinkKafkaProducer010}

object FlinkKafkaUtil {

  val prop = new Properties()

  val brokerList = "192.168.18.103:9092,192.168.18.104:9092,192.168.18.105:9092"

  prop.setProperty("bootstrap.servers", brokerList)
  // Only required by the Kafka 0.8 consumer; the 0.10 consumer ignores it
  prop.setProperty("zookeeper.connect", "192.168.18.103:2181,192.168.18.104:2181,192.168.18.105:2181")
  prop.setProperty("group.id", "gmall")

  def getConsumer(topic: String): FlinkKafkaConsumer010[String] = {
    // Consume data from Kafka.
    // Flink's Kafka consumer is called FlinkKafkaConsumer08
    // (or 09 for Kafka 0.9.0.x versions, etc.,
    // or just FlinkKafkaConsumer for Kafka >= 1.0.0 versions).
    val myKafkaConsumer: FlinkKafkaConsumer010[String] =
      new FlinkKafkaConsumer010[String](topic, new SimpleStringSchema(), prop)
    myKafkaConsumer
  }

  def getProducer(topic: String): FlinkKafkaProducer010[String] = {
    // Writes each String to `topic` as the value of one Kafka record
    new FlinkKafkaProducer010[String](brokerList, topic, new SimpleStringSchema())
  }
}
```

- Add the sink in the main function

```scala
package com.lxk.service

import com.alibaba.fastjson.JSON
import com.lxk.bean.UserLog
import com.lxk.util.FlinkKafkaUtil
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer010, FlinkKafkaProducer010}
import org.apache.flink.api.scala._

object StartupApp {

  def main(args: Array[String]): Unit = {
    val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    //val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.createRemoteEnvironment("node03", 6123,"F:\\10_code\\scala\\flink2020\\target\\flink-streaming-scala_2.11-1.0-SNAPSHOT.jar")

    val kafkaConsumer: FlinkKafkaConsumer010[String] = FlinkKafkaUtil.getConsumer("GMALL_STARTUP")
    val dstream: DataStream[String] = environment.addSource(kafkaConsumer)
    //val userLogStream: DataStream[UserLog] = dstream.map { userLog => JSON.parseObject(userLog, classOf[UserLog]) }

    val myKafkaProducer: FlinkKafkaProducer010[String] = FlinkKafkaUtil.getProducer("sink_kafka")
    dstream.addSink(myKafkaProducer)
    //dstream.print()

    environment.execute()
  }
}
```

- output:
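`SimpleStringSchema` converts between `String`s and the raw bytes of Kafka record values, in both the consumer and the producer above. The sketch below assumes its default UTF-8 charset and reproduces that conversion with the standard library only. `StringSchemaSketch` and the sample JSON are hypothetical.

```scala
import java.nio.charset.StandardCharsets

// Hypothetical stand-in for SimpleStringSchema's default behaviour:
// one String <-> the UTF-8 bytes of one Kafka record value.
object StringSchemaSketch {
  def serialize(element: String): Array[Byte] = element.getBytes(StandardCharsets.UTF_8)

  def deserialize(message: Array[Byte]): String = new String(message, StandardCharsets.UTF_8)

  def main(args: Array[String]): Unit = {
    val json = """{"channel":"appstore","uid":"u_1"}""" // invented sample record
    val bytes = serialize(json)
    println(bytes.length)
    println(deserialize(bytes) == json) // the round trip is lossless
  }
}
```

Because the job only forwards strings from `GMALL_STARTUP` to `sink_kafka`, each record's bytes pass through this decode/encode round trip unchanged.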

II. Redis

- pom.xml file

```xml
<!-- https://mvnrepository.com/artifact/org.apache.bahir/flink-connector-redis -->
<dependency>
    <groupId>org.apache.bahir</groupId>
    <artifactId>flink-connector-redis_2.11</artifactId>
    <version>1.0</version>
</dependency>
```

- Add methods to FlinkRedisUtil

```scala
package com.lxk.util

import org.apache.flink.streaming.connectors.redis.RedisSink
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig
import org.apache.flink.streaming.connectors.redis.common.mapper.{RedisCommand, RedisCommandDescription, RedisMapper}

object FlinkRedisUtil {

  val conf = new FlinkJedisPoolConfig.Builder().setHost("192.168.18.151").setPort(6379).setPassword("redis_zy_passpwd").build()

  def getRedisSink(): RedisSink[(String, String)] = {
    new RedisSink[(String, String)](conf, new MyRedisMapper)
  }

  class MyRedisMapper extends RedisMapper[(String, String)] {

    // HSET channel_count <field> <value>: "channel_count" is the hash name,
    // getKeyFromData supplies the field and getValueFromData the value
    override def getCommandDescription: RedisCommandDescription = {
      new RedisCommandDescription(RedisCommand.HSET, "channel_count")
      //new RedisCommandDescription(RedisCommand.SET)
    }

    override def getKeyFromData(t: (String, String)): String = t._1

    override def getValueFromData(t: (String, String)): String = t._2
  }
}
```

- Add the sink in the main function

```scala
package com.lxk.service

import com.alibaba.fastjson.JSON
import com.lxk.bean.UserLog
import com.lxk.util.{FlinkKafkaUtil, FlinkRedisUtil}
import org.apache.flink.api.java.tuple.Tuple
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.scala.{DataStream, KeyedStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010
import org.apache.flink.streaming.connectors.redis.RedisSink

object StartupApp04 {

  def main(args: Array[String]): Unit = {
    val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val kafkaConsumer: FlinkKafkaConsumer010[String] = FlinkKafkaUtil.getConsumer("GMALL_STARTUP")
    val dstream: DataStream[String] = environment.addSource(kafkaConsumer)

    // Compute the cumulative count for each channel
    val userLogDstream: DataStream[UserLog] = dstream.map {
      JSON.parseObject(_, classOf[UserLog])
    }
    val keyedStream: KeyedStream[(String, Int), Tuple] = userLogDstream.map(userLog => (userLog.channel, 1)).keyBy(0)

    // reduce (keyedStream.sum(1) would work as well)
    val sumStream: DataStream[(String, Int)] = keyedStream.reduce { (ch1, ch2) => (ch1._1, ch1._2 + ch2._2) }

    val redisSink: RedisSink[(String, String)] = FlinkRedisUtil.getRedisSink()
    sumStream.map(chCount => (chCount._1, chCount._2.toString)).addSink(redisSink)

    dstream.print()
    environment.execute()
  }
}
```

- output:
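The semantics of this job can be checked without a cluster. The plain-Scala model below is hypothetical (`ChannelCountSketch` and the channel names are invented), and it runs on a single partition. It emulates `keyBy(0).reduce`, which emits an updated count for every incoming record, and the `HSET channel_count <channel> <count>` writes, each of which overwrites the field. The hash therefore ends up holding the latest count per channel.

```scala
import scala.collection.mutable

// Hypothetical model: no Flink or Redis client involved.
object ChannelCountSketch {
  // Emulates keyBy(0).reduce { (a, b) => (a._1, a._2 + b._2) }:
  // one output per input record, carrying the running total for its key.
  def runningCounts(channels: Seq[String]): Seq[(String, Int)] = {
    val state = mutable.Map.empty[String, Int] // per-key reduce state
    channels.toList.map { ch =>
      val updated = state.getOrElse(ch, 0) + 1
      state(ch) = updated
      (ch, updated)
    }
  }

  // Emulates the Redis hash "channel_count": field = channel, value = count as String.
  def applyHset(updates: Seq[(String, Int)]): Map[String, String] = {
    val hash = mutable.LinkedHashMap.empty[String, String]
    updates.foreach { case (ch, n) => hash(ch) = n.toString } // HSET overwrites the field
    hash.toMap
  }

  def main(args: Array[String]): Unit = {
    val emitted = runningCounts(Seq("appstore", "huawei", "appstore", "xiaomi", "appstore"))
    println(emitted)            // every intermediate count is emitted
    println(applyHset(emitted)) // only the latest count per channel survives
  }
}
```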

III. Elasticsearch

- pom.xml file

```xml
<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-elasticsearch6 -->
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-elasticsearch6_2.12</artifactId>
    <version>1.7.2</version>
</dependency>
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.3</version>
</dependency>
```

- Add methods to FlinkEsUtil

```scala
package com.lxk.util

import java.util

import com.alibaba.fastjson.{JSON, JSONObject}
import org.apache.flink.api.common.functions.RuntimeContext
import org.apache.flink.streaming.connectors.elasticsearch.{ElasticsearchSinkFunction, RequestIndexer}
import org.apache.flink.streaming.connectors.elasticsearch6.ElasticsearchSink
import org.apache.http.HttpHost
import org.elasticsearch.action.index.IndexRequest
import org.elasticsearch.client.Requests

object FlinkEsUtil {

  val httpHosts = new util.ArrayList[HttpHost]
  httpHosts.add(new HttpHost("node03", 9200, "http"))
  httpHosts.add(new HttpHost("node04", 9200, "http"))
  httpHosts.add(new HttpHost("node05", 9200, "http"))

  def getElasticSearchSink(indexName: String): ElasticsearchSink[String] = {
    val esFunc = new ElasticsearchSinkFunction[String] {
      override def process(element: String, ctx: RuntimeContext, indexer: RequestIndexer): Unit = {
        println("Attempting to save: " + element)
        // Parse the JSON string and index it as a document of type "userlog"
        val jsonObj: JSONObject = JSON.parseObject(element)
        val indexRequest: IndexRequest = Requests.indexRequest().index(indexName).`type`("userlog").source(jsonObj)
        // The request is buffered here and sent later as part of a bulk request
        indexer.add(indexRequest)
        println("Queued 1 record")
      }
    }

    val sinkBuilder = new ElasticsearchSink.Builder[String](httpHosts, esFunc)
    // Maximum number of actions to buffer before flushing
    sinkBuilder.setBulkFlushMaxActions(10)
    sinkBuilder.build()
  }
}
```

- Call it in the main method

```scala
package com.lxk.service

import com.lxk.util.{FlinkEsUtil, FlinkKafkaUtil}
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.elasticsearch6.ElasticsearchSink
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010

object StartupApp05 {

  def main(args: Array[String]): Unit = {
    val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val kafkaConsumer: FlinkKafkaConsumer010[String] = FlinkKafkaUtil.getConsumer("GMALL_STARTUP")
    val dstream: DataStream[String] = environment.addSource(kafkaConsumer)

    // Send the detail records to ES
    val esSink: ElasticsearchSink[String] = FlinkEsUtil.getElasticSearchSink("gmall_startup")
    dstream.addSink(esSink)
    //dstream.print()

    environment.execute()
  }
}
```

- output:

Data source:

Main function result:

Persisted result in ES:
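`setBulkFlushMaxActions(10)` means index requests are buffered and sent as one bulk request once 10 actions have accumulated. This may explain why a test with only a few records shows nothing in Elasticsearch right away. The hypothetical `BulkBufferSketch` below models only this action-count trigger. The real sink can also flush by size or time (`setBulkFlushMaxSizeMb`, `setBulkFlushInterval`), and on checkpoints when checkpointing is enabled.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical model of the action-count flush trigger; NOT Flink's ElasticsearchSink.
class BulkBufferSketch(maxActions: Int) {
  private val buffer = ArrayBuffer.empty[String]
  val sentBatches = ArrayBuffer.empty[Seq[String]] // each entry = one bulk request

  def add(doc: String): Unit = {
    buffer += doc
    if (buffer.size >= maxActions) flush() // send once maxActions are buffered
  }

  // Sends whatever is buffered (in the real sink: size/interval/checkpoint/close).
  def flush(): Unit = if (buffer.nonEmpty) {
    sentBatches += buffer.toList
    buffer.clear()
  }
}

object BulkBufferDemo {
  def main(args: Array[String]): Unit = {
    val sink = new BulkBufferSketch(maxActions = 10)
    (1 to 25).foreach(i => sink.add(s"""{"uid":"u_$i"}"""))
    println(sink.sentBatches.map(_.size)) // two full batches of 10; 5 documents remain buffered
    sink.flush()
    println(sink.sentBatches.map(_.size)) // the remaining 5 go out as a third batch
  }
}
```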

IV. JDBC: a custom sink extending RichSinkFunction

- pom.xml file

```xml
<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java -->
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.44</version>
</dependency>
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid</artifactId>
    <version>1.1.10</version>
</dependency>
```

- Add MyJdbcSink

```scala
package com.lxk.util

import java.sql.{Connection, PreparedStatement}
import java.util.Properties

import com.alibaba.druid.pool.DruidDataSourceFactory
import javax.sql.DataSource
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction

class MyJdbcSink(sql: String) extends RichSinkFunction[Array[Any]] {

  val driver = "com.mysql.jdbc.Driver"
  val url = "jdbc:mysql://node04:3306/gmall2020?useSSL=false"
  val username = "root"
  val password = "123"
  val maxActive = "20"

  var connection: Connection = null

  // Create the connection: called once per parallel sink instance, before any record
  override def open(parameters: Configuration): Unit = {
    val properties = new Properties()
    properties.put("driverClassName", driver)
    properties.put("url", url)
    properties.put("username", username)
    properties.put("password", password)
    properties.put("maxActive", maxActive)
    val dataSource: DataSource = DruidDataSourceFactory.createDataSource(properties)
    connection = dataSource.getConnection()
  }

  // Called repeatedly, once for every incoming record
  override def invoke(values: Array[Any]): Unit = {
    val ps: PreparedStatement = connection.prepareStatement(sql)
    try {
      println(values.mkString(","))
      // JDBC parameter indexes are 1-based
      for (i <- values.indices) {
        ps.setObject(i + 1, values(i))
      }
      ps.executeUpdate()
    } finally {
      ps.close() // close the statement so one is not leaked per record
    }
  }

  override def close(): Unit = {
    if (connection != null) {
      connection.close()
    }
  }
}
```

- Add to the main method (save the detail records to MySQL)

```scala
package com.lxk.service

import com.alibaba.fastjson.JSON
import com.lxk.bean.UserLog
import com.lxk.util.{FlinkKafkaUtil, MyJdbcSink}
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010

object StartupApp06 {

  def main(args: Array[String]): Unit = {
    val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val kafkaConsumer: FlinkKafkaConsumer010[String] = FlinkKafkaUtil.getConsumer("GMALL_STARTUP")
    val dstream: DataStream[String] = environment.addSource(kafkaConsumer)

    val userLogStream: DataStream[UserLog] = dstream.map { userLog => JSON.parseObject(userLog, classOf[UserLog]) }
    //val userLogStream: DataStream[UserLog] = dstream.map{ JSON.parseObject(_,classOf[UserLog])}

    // 8 placeholders: the insert has no column list, so one value per column of userlog
    val jdbcSink = new MyJdbcSink("insert into userlog values(?,?,?,?,?,?,?,?)")

    // Array[Any] is explicit because Scala arrays are invariant: an inferred
    // Array[String] would not match MyJdbcSink's Array[Any] input type
    userLogStream.map(userLog =>
      Array[Any](userLog.dateToday, userLog.area, userLog.uid, userLog.os,
        userLog.channel, userLog.appid, userLog.ver, userLog.timestamp))
      .addSink(jdbcSink)
    //dstream.print()

    environment.execute()
  }
}
```

- output:

Data source:

Main function result:

Persisted result in MySQL:
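`MyJdbcSink` binds `values(i)` to parameter `i + 1`, so the array length must equal the number of `?` placeholders in the SQL. Otherwise the statement typically fails with an `SQLException` at runtime. The hypothetical helper below (`JdbcBindingSketch`, with an invented sample row) checks this before binding. It counts `?` naively, so it assumes no question marks appear inside string literals in the SQL.

```scala
// Hypothetical helper; not part of the original code or of JDBC itself.
object JdbcBindingSketch {
  // Naive count: assumes no '?' inside quoted literals or comments.
  def placeholderCount(sql: String): Int = sql.count(_ == '?')

  // Pairs each value with the 1-based index ps.setObject(i + 1, values(i)) would use.
  def bindings(sql: String, values: Array[Any]): Seq[(Int, Any)] = {
    val expected = placeholderCount(sql)
    require(values.length == expected,
      s"SQL expects $expected parameters but got ${values.length}")
    values.indices.map(i => (i + 1, values(i)))
  }

  def main(args: Array[String]): Unit = {
    val sql = "insert into userlog values(?,?,?,?,?,?,?,?)"
    println(placeholderCount(sql))
    // Invented sample row in the column order used by StartupApp06
    val row: Array[Any] = Array("2020-01-01", "beijing", "u_1", "ios", "appstore", "gmall", "1.0", 1577836800000L)
    bindings(sql, row).foreach { case (idx, v) => println(s"$idx -> $v") }
  }
}
```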
