In Hadoop, a job is usually written like this:

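```java
Job job = new Job();
job.setXxx();
// ... one setXxx() call per setting
```

This adds up to a lot of code, and the parameters are awkward to control. For example, if I want to validate the input arguments, I also have to write the argument-parsing code myself.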
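For comparison, here is a minimal sketch, assuming plain Java with no libraries, of the kind of hand-written parsing the paragraph above refers to. It handles only the three options that the AbstractJob version below declares (`-i/--input`, `-o/--output`, `-sc/--splitCharacter` with a default of `","`). The class `ManualArgs` is hypothetical and not part of the original post.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical hand-written parser for -i / -o / -sc, i.e. the kind of
 * code one writes without Mahout's AbstractJob (illustration only).
 */
public class ManualArgs {

    final String input;
    final String output;
    final String splitCharacter;

    private ManualArgs(String input, String output, String splitCharacter) {
        this.input = input;
        this.output = output;
        this.splitCharacter = splitCharacter;
    }

    /** Returns null if a flag is unknown, lacks a value, or a required option is missing. */
    static ManualArgs parse(String[] args) {
        Map<String, String> values = new HashMap<>();
        for (int i = 0; i < args.length; i += 2) {
            if (i + 1 >= args.length) {
                return null; // flag without a value
            }
            String key;
            switch (args[i]) {
                case "-i": case "--input": key = "input"; break;
                case "-o": case "--output": key = "output"; break;
                case "-sc": case "--splitCharacter": key = "splitCharacter"; break;
                default: return null; // unknown flag
            }
            values.put(key, args[i + 1]);
        }
        // -i and -o are required.
        if (!values.containsKey("input") || !values.containsKey("output")) {
            return null;
        }
        // Fall back to ',' when -sc is not given.
        return new ManualArgs(values.get("input"), values.get("output"),
                values.getOrDefault("splitCharacter", ","));
    }

    public static void main(String[] args) {
        ManualArgs parsed = parse(new String[] {"-i", "in", "-o", "out"});
        System.out.println(parsed.input + " " + parsed.output + " " + parsed.splitCharacter);
    }
}
```

Every additional option means another case label and another check; AbstractJob's `addOption(...)` and `parseArguments(...)` take over this bookkeeping.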

Instead, you can simply extend Mahout's AbstractJob class, as follows:

package mahout.fansy.bayes.transform;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

import org.apache.hadoop.util.ToolRunner;

import org.apache.mahout.common.AbstractJob;

import org.apache.mahout.math.VectorWritable;

public class TFText2VectorWritable extends AbstractJob {

@Override

public int run(String[] args) throws Exception {

addInputOption();

addOutputOption();

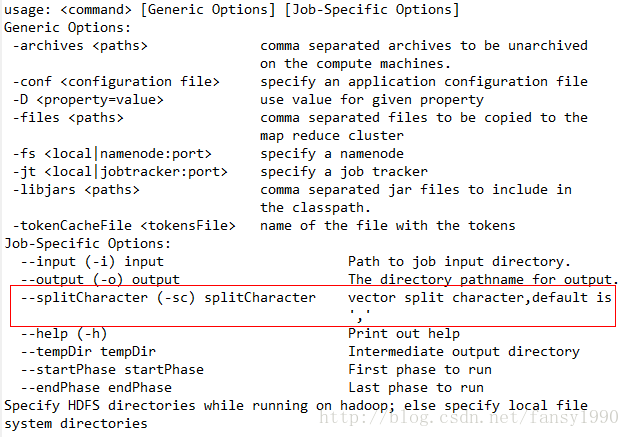

addOption("splitCharacter","sc", "vector split character,default is ','", ",");

if (parseArguments(args) == null) {

return -1;

}

Path input = getInputPath();

Path output = getOutputPath();

String sc=getOption("splitCharacter");

Job job=prepareJob(input,output,FileInputFormat.class,Mapper.class,LongWritable.class,Text.class,

null, Text.class,VectorWritable.class,SequenceFileOutputFormat.class);

job.getConfiguration().set("sc", sc);

if(job.waitForCompletion(true)){

return 0;

}

return -1;

}

/**

*实现AbstractJob

* @param args

* @throws Exception

*/

public static void main(String[] args) throws Exception {

String[] arg=new String[]{"-i","safdf","-sc","scccccccc","-o","sdf"};

ToolRunner.run(new Configuration(), new TFText2VectorWritable(),arg);

}

}

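The `addInputOption()`, `addOutputOption()` and `addOption(...)` calls are where the job's parameters are declared; after `parseArguments(args)` succeeds, their values are read back with `getInputPath()`, `getOutputPath()` and `getOption(...)`.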

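Calling `prepareJob` also simplifies the Job setup: setting the Mapper, the map output key class and so on normally takes one line each, whereas now a single call does it all. To pass a parameter on for use in the Mapper and Reducer, set it on the job configuration with `job.getConfiguration().set("sc", sc)`.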
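On the Mapper side, the value would typically be read back once in `setup()`, for example with `context.getConfiguration().get("sc", ",")`. The parsing that follows is plain Java. The sketch below assumes each input line holds numbers separated by the split character; the class `SplitLine` and its `toValues` method are hypothetical, not from the original post. In a real Mapper the resulting array could then be wrapped as `new VectorWritable(new DenseVector(values))` before being written out.

```java
import java.util.Arrays;
import java.util.regex.Pattern;

/**
 * Hypothetical helper: turns one text line into vector values using the
 * split character passed through the job configuration ("sc").
 */
public class SplitLine {

    static double[] toValues(String line, String splitCharacter) {
        // String.split takes a regular expression, so quote the separator;
        // otherwise "|" or "." would be treated as regex metacharacters.
        String[] parts = line.trim().split(Pattern.quote(splitCharacter));
        double[] values = new double[parts.length];
        for (int i = 0; i < parts.length; i++) {
            values[i] = Double.parseDouble(parts[i].trim());
        }
        return values;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(toValues("1.0,2.5,3", ",")));
        System.out.println(Arrays.toString(toValues("4|5|6", "|")));
    }
}
```

Quoting matters for this option in particular: without `Pattern.quote`, passing `-sc "|"` would split the line between every character instead of at each `|`.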
