
1. Introduction

| Item | Details |
| --- | --- |
| Time | Saturday, July 2, 2022, 15:00 (Beijing time) |
| Topic | Optimization Perspectives on Graph Neural Networks |
| Host | Min Zhou (Huawei Noah's Ark Lab) |

Speaker: Zengfeng Huang (Fudan University)

Title: Optimization Perspectives on Graph Neural Networks

Abstract:

In recent years, graph neural networks (GNNs) have emerged as a major tool for graph machine learning and have found numerous applications. In this talk, I will introduce an optimization-based framework for understanding and deriving GNN models, which treats graph propagation as unfolded descent iterations applied to a graph-regularized energy function. Then I will talk about a graph attention model obtained from this framework through a robust objective. I will also briefly discuss the connection and difference between this unfolding scheme and implicit GNNs, which treat node representations as the fixed points of a deep equilibrium model.
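To make the unfolded-vs-implicit contrast concrete, here is a toy sketch. It assumes a quadratic graph-regularized energy E(Y) = ½‖Y − B‖²_F + (λ/2)·tr(YᵀLY), where B stands in for a base predictor f(X; W) and L is the symmetric normalized Laplacian. The unfolded view runs K explicit propagation layers, while the implicit view solves directly for the fixed point. In this linear case, that fixed point is the energy minimizer (I + λL)⁻¹B. The cycle graph, λ and B are hypothetical. Real implicit GNNs use learned, usually nonlinear maps and iterative fixed-point solvers.

```python
import numpy as np

# Hypothetical 5-node cycle graph, used only to illustrate the idea.
n = 5
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0

d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
A_hat = d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]  # D^{-1/2} A D^{-1/2}
L = np.eye(n) - A_hat                                  # normalized Laplacian
lam = 1.0
B = np.random.default_rng(0).normal(size=(n, 2))       # stand-in for f(X; W)

def unfolded(K):
    """Unfolded view: K propagation layers (= K descent steps of size 1/(1+lam)) from Y = B."""
    Y = B.copy()
    for _ in range(K):
        Y = (lam / (1 + lam)) * A_hat @ Y + (1 / (1 + lam)) * B
    return Y

# Implicit view: solve (I + lam L) Y = B directly for the fixed point / energy minimizer.
Y_star = np.linalg.solve(np.eye(n) + lam * L, B)

for K in (1, 2, 4, 8, 16):
    print(K, np.linalg.norm(unfolded(K) - Y_star))
```

The distance to the fixed point shrinks as K grows. Under these assumptions, a finite-depth unfolded GNN can be read as a truncated version of the implicit solve.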

Speaker bio:

Zengfeng Huang is currently an Associate Professor in the School of Data Science, Fudan University. Before that, he was a Research Fellow in CSE at UNSW and a postdoc at MADALGO, Aarhus University. He obtained his PhD in CSE from the Hong Kong University of Science and Technology and his B.S. in Computer Science from Zhejiang University. His research interests include the foundations of data science, machine learning algorithms, graph analytics, and theoretical computer science. His single-authored paper, “Near Optimal Frequent Directions for Sketching Dense and Sparse Matrices”, received the ICML 2018 Best Paper Runner-Up Award and a 2020 World Artificial Intelligence Conference Youth Outstanding Paper Nomination Award.

2. Understanding Graph Neural Networks from an Optimization Perspective

Background

Each additional GNN layer lowers the energy.

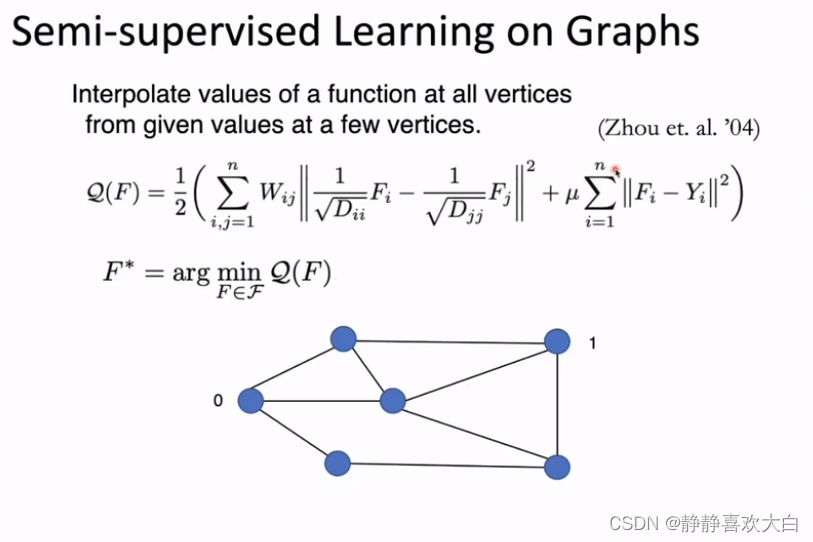
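Here is a minimal numpy sketch of the claim that each layer lowers the energy. It assumes the quadratic energy E(Y) = ½‖Y − B‖²_F + (λ/2)·tr(YᵀLY) with a symmetric normalized Laplacian L; the exact energy used in the talk may differ. Each loop iteration is one gradient step with step size α = 1/(1+λ). This step is small enough to guarantee descent because the eigenvalues of I + λL lie in [1, 1+2λ], and 1/(1+λ) < 2/(1+2λ). The path graph, features X and weights W are made up for illustration.

```python
import numpy as np

# Hypothetical toy graph: a 4-node path 0-1-2-3.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
n = A.shape[0]

def normalized_laplacian(A):
    """Return L = I - D^{-1/2} A D^{-1/2} and A_hat = D^{-1/2} A D^{-1/2}."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    A_hat = d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    return np.eye(len(A)) - A_hat, A_hat

def energy(Y, B, L, lam):
    """E(Y) = 1/2 ||Y - B||_F^2 + lam/2 * tr(Y^T L Y)."""
    return 0.5 * np.sum((Y - B) ** 2) + 0.5 * lam * np.trace(Y.T @ L @ Y)

rng = np.random.default_rng(0)
X = rng.normal(size=(n, 3))   # node features (random, illustrative)
W = rng.normal(size=(3, 2))   # stand-in for learnable weights
B = X @ W                     # base predictions f(X; W), here just a linear map
lam = 1.0
L, A_hat = normalized_laplacian(A)

alpha = 1.0 / (1.0 + lam)     # step size, below 2 / lambda_max(I + lam L)
Y = B.copy()
energies = [energy(Y, B, L, lam)]
for layer in range(10):
    grad = (np.eye(n) + lam * L) @ Y - B   # gradient of E at the current Y
    Y = Y - alpha * grad                   # one descent step = one "layer"
    energies.append(energy(Y, B, L, lam))

print(np.round(energies, 4))
```

The printed energies should form a strictly decreasing sequence, which corresponds to the note's observation that stacking one more layer lowers the energy.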

Compared with Zhou (2004), the improvement is that the model is parameterized, i.e., it has learnable parameters.

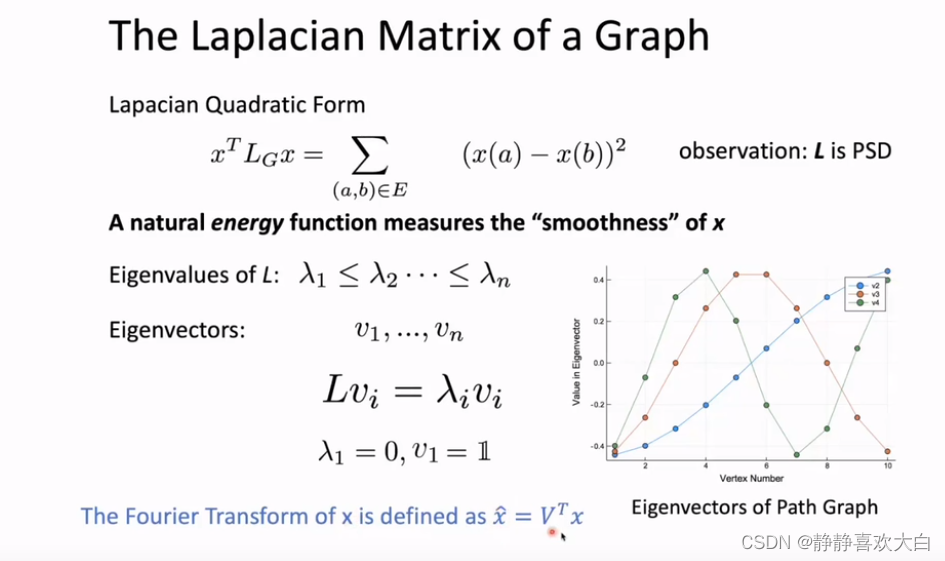
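One way to read the comparison with Zhou (2004) is as follows. This sketch assumes both methods use the normalized Laplacian L and the same quadratic form. Zhou's label spreading smooths a fixed label matrix Y₀. The GNN version replaces Y₀ with the output of a learnable base model f(X; W), so the propagated result depends on trainable parameters.

```latex
\begin{aligned}
\text{Zhou (2004), fixed labels } Y_0:\quad
  & F^\star = \arg\min_F \tfrac12\|F - Y_0\|_F^2 + \tfrac{\lambda}{2}\operatorname{tr}(F^\top L F)
    = (I + \lambda L)^{-1} Y_0 \\
\text{parameterized, learnable } W:\quad
  & Y^\star(W) = \arg\min_Y \tfrac12\|Y - f(X;W)\|_F^2 + \tfrac{\lambda}{2}\operatorname{tr}(Y^\top L Y)
    = (I + \lambda L)^{-1} f(X;W)
\end{aligned}
```

Set α = λ/(1+λ) and S = I − L. The first line then equals (1−α)(I−αS)⁻¹Y₀, the closed form usually quoted for label spreading.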

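Why can gradient descent be regarded as minimizing the energy? Are the two equivalent?

Each gradient-descent step corresponds exactly to one GNN layer; that is, the descent iteration coincides with the GNN propagation.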
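Under the quadratic-energy assumption E(Y) = ½‖Y − B‖²_F + (λ/2)·tr(YᵀLY), the correspondence can be derived in one line. Substitute L = I − Â (with Â = D^{-1/2}AD^{-1/2}) into a gradient step with step size α = 1/(1+λ):

Y − α[(I + λL)Y − B] = (λ/(1+λ))ÂY + (1/(1+λ))B.

This update aggregates neighbours, then mixes back a fraction of B, which is the form of an APPNP-style layer. It also partly answers the question above. E is convex, so with a small enough step, every gradient step lowers it and the iterates converge to its minimizer; stacking layers therefore means running more descent steps. The numpy check below uses a random hypothetical graph and is an illustration, not the speaker's code.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical random undirected graph with self-loops, so every degree is positive.
n = 6
A = (rng.random((n, n)) < 0.4).astype(float)
A = np.triu(A, 1)
A = A + A.T + np.eye(n)

d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
A_hat = d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]   # D^{-1/2} A D^{-1/2}
L = np.eye(n) - A_hat                                    # normalized Laplacian

lam = 2.0
alpha = 1.0 / (1.0 + lam)
B = rng.normal(size=(n, 3))    # base predictions f(X; W) (random stand-in)
Y = rng.normal(size=(n, 3))    # current node representations

# View 1: one gradient-descent step on E(Y) = 1/2||Y - B||^2 + lam/2 tr(Y^T L Y)
gd_step = Y - alpha * ((np.eye(n) + lam * L) @ Y - B)

# View 2: one propagation layer (aggregate neighbours, then mix in B)
layer = (lam / (1.0 + lam)) * A_hat @ Y + (1.0 / (1.0 + lam)) * B

print(np.allclose(gd_step, layer))  # prints True
```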

3. Summary

Understanding (and optimizing) GNNs from the energy perspective: I could not fully follow this part.

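4. References

Talk references

LOGS 2022/07/02 session, Zengfeng Huang (Fudan University): Understanding Graph Neural Networks (GNN) from an Optimization Perspective (video on bilibili)

Recorded video || LOGS 2022/07/02 session || Zengfeng Huang (Fudan University): Understanding Graph Neural Networks from an Optimization Perspective

Literature references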

1. Scaling up graph neural networks via graph coarsening. KDD 2021.

2. Graph neural networks inspired by classical iterative algorithms. ICML 2021.

3. Implicit vs unfolded graph neural networks. arXiv preprint arXiv:2111.06592.

4. Transformers from an optimization perspective. arXiv preprint arXiv:2205.13891.
