
1. Introduction

Talk title

Rethinking Graph Contrastive Learning: An Extremely Efficient New Paradigm for Graph Self-Supervised Learning

Speaker

Shirui Pan (潘世瑞), Griffith University

Abstract:

Deep learning on graphs has attracted significant interest recently. However, most existing work has focused on (semi-)supervised learning, which leads to shortcomings including heavy label reliance, poor generalization, and weak robustness. To address these issues, self-supervised learning (SSL) [1], which extracts informative knowledge through well-designed pretext tasks without relying on manual labels, has become a promising and popular learning paradigm for graph data. However, current self-supervised learning methods, especially graph contrastive learning (GCL) methods, require heavy computation, which prevents them from scaling to large graphs. In this talk, I will introduce our recent work on scaling up graph contrastive learning. By examining existing graph contrastive learning methods [2, 3] and pinpointing their defects, we propose a new group discrimination method (to appear in NeurIPS 2022 [4]), which is orders of magnitude faster (more than 10,000 times faster than GCL baselines) while consuming much less memory. The new solution opens a new door to self-supervised learning on large-scale datasets in the graph domain and potentially in other areas such as vision and language.

Biography:

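Shirui Pan is an Australian Research Council (ARC) Future Fellow and a Full Professor at Griffith University. He was named among the most influential AAAI/IJCAI scholars worldwide for two consecutive years, was listed among the world's top 2% scientists (2022, 2021), and received the 2021 Research Excellence Award of the Faculty of Information Technology, Monash University. Students under his supervision won the Best Student Paper Award at ICDM (2020), and he received a Best Paper Award nomination at JCDL 2020. He has published 150 high-quality papers in venues including NeurIPS, ICML, KDD, TPAMI, TKDE, and TNNLS. He serves as a reviewer for journals such as TPAMI, TNNLS, and TKDE, and as a (senior) program committee member for IJCAI, AAAI, KDD, WWW, CVPR, and others. He has more than 12,000 Google Scholar citations and an h-index of 43. His main research interests are data mining and machine learning. His 2021 TNNLS survey on graph neural networks has been cited more than 4,200 times, and 8 of his papers at top conferences such as KDD, IJCAI, AAAI, and CIKM have been named Most Influential Papers. His research is funded by the Australian Research Council, Amazon, and the Australian Defence Science and Technology Group, among others.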

2. Rethinking Graph Contrastive Learning

Outline

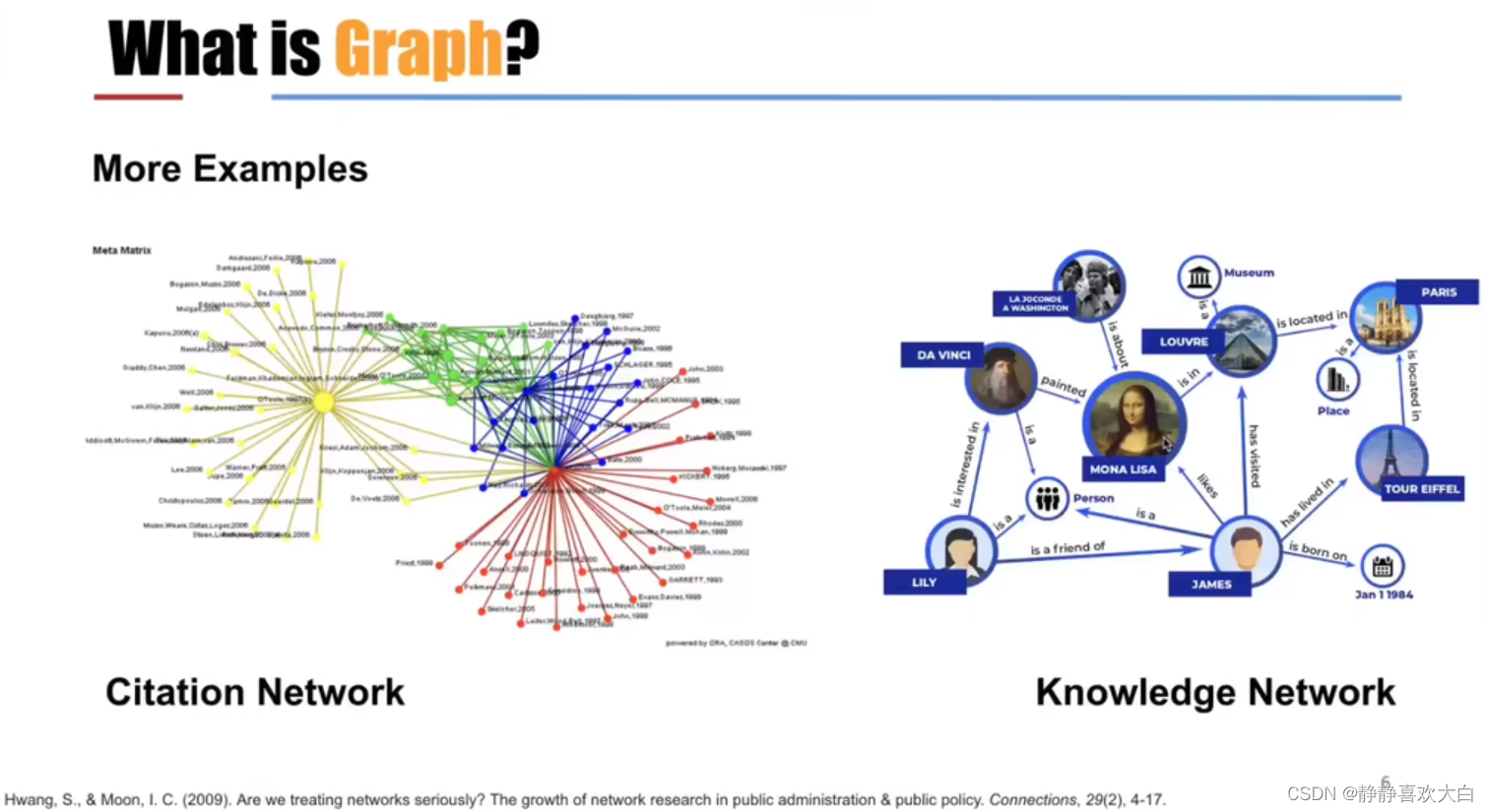

Background: graph representation learning (GRL)

A graph can be represented by an adjacency matrix.

Representation learning

Encoder: a GNN
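As a rough illustration of the encoder step, here is a minimal NumPy sketch of a plain GCN, where each layer multiplies the normalized adjacency matrix, the current node features, and a weight matrix. It assumes the standard symmetric normalization of A + I from Kipf & Welling's GCN and ReLU activations; the function names `normalize_adjacency` and `gcn_encoder` are hypothetical, and the talk does not prescribe this exact encoder.

```python
import numpy as np

def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetrically normalize A + I: D^-1/2 (A + I) D^-1/2."""
    a_hat = adj + np.eye(adj.shape[0])          # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]

def gcn_encoder(adj_norm: np.ndarray, x: np.ndarray, weights: list) -> np.ndarray:
    """Stack of GCN layers: H <- ReLU(A_norm @ H @ W) for each weight matrix W."""
    h = x
    for w in weights:
        h = np.maximum(adj_norm @ h @ w, 0.0)
    return h

# Toy graph: a path 0-1-2-3 with 3 input features and hidden size 2.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))
weights = [rng.normal(size=(3, 2)), rng.normal(size=(2, 2))]
h = gcn_encoder(normalize_adjacency(adj), x, weights)
print(h.shape)  # (4, 2): one 2-dimensional embedding per node
```

Each layer is a single AXW product; this is the term that dominates the encoder cost in the complexity analysis of the new method.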

Existing problems and challenges of GRL

Reliance on labels

Specific manifestations: heavy label reliance, poor generalization, and weak robustness

This motivates self-supervised learning (SSL)

Understanding SSL

SSL work in classic deep neural networks (DNNs)

In NLP: e.g., the currently popular Transformer

In CV: maximizing mutual information

This leads to SSL on graphs

Graph contrastive learning (GCL):

Given a graph, how can we construct a pretext task that helps the GNN learn?

Related work

Success story 1: DGI

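Corrupt the original graph (by shuffling its node features) and train on the original and corrupted graphs together, learning to tell their nodes apart.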
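A minimal NumPy sketch of a DGI-style objective, assuming row-shuffling of the feature matrix as the corruption, a sigmoid of the mean embedding as the graph summary, and a bilinear discriminator trained with binary cross-entropy. The one-layer ReLU encoder and all function names are simplifications for illustration, not the reference implementation of [2].

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_with_logits(logits, labels):
    # Numerically stable binary cross-entropy computed from raw scores.
    return np.mean(np.maximum(logits, 0) - logits * labels
                   + np.log1p(np.exp(-np.abs(logits))))

def encode(adj_norm, x, w):
    # Simplified one-layer GCN-style encoder: ReLU(A_norm X W).
    return np.maximum(adj_norm @ x @ w, 0.0)

def dgi_loss(adj_norm, x, w_enc, w_disc, rng):
    h_pos = encode(adj_norm, x, w_enc)            # embeddings of the original graph
    x_corrupt = x[rng.permutation(x.shape[0])]    # corruption: shuffle rows of X
    h_neg = encode(adj_norm, x_corrupt, w_enc)    # embeddings of the corrupted graph
    s = sigmoid(h_pos.mean(axis=0))               # readout: graph-level summary vector
    scores_pos = h_pos @ w_disc @ s               # bilinear score h_i^T W s per node
    scores_neg = h_neg @ w_disc @ s
    logits = np.concatenate([scores_pos, scores_neg])
    labels = np.concatenate([np.ones_like(scores_pos), np.zeros_like(scores_neg)])
    return bce_with_logits(logits, labels)

# Toy usage: a 5-node ring with self-loops, row-normalized.
rng = np.random.default_rng(0)
n, f, d = 5, 4, 3
ring = np.eye(n) + np.roll(np.eye(n), 1, axis=1) + np.roll(np.eye(n), -1, axis=1)
adj_norm = ring / ring.sum(axis=1, keepdims=True)
x = rng.normal(size=(n, f))
loss = dgi_loss(adj_norm, x, rng.normal(size=(f, d)), rng.normal(size=(d, d)), rng)
print(f"DGI loss: {loss:.4f}")
```

Every node is scored against the single summary vector, so DGI avoids explicit node-pair comparisons; the bilinear discriminator and summary are the parts that the rethinking below revisits.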

Success story 2: GRACE

Generate augmented views by dropping some edges or masking some node features.
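A minimal NumPy sketch of a GRACE-style objective: two views are produced by random edge dropping and feature-column masking, encoded with a shared GCN layer, and compared with a node-level InfoNCE loss in which the same node in the other view is the positive and all other nodes (in both views) are negatives. The projection head GRACE applies before the similarity is omitted here, and the drop/mask rates and temperature are illustrative assumptions.

```python
import numpy as np

def drop_edges(adj, p, rng):
    # Remove each undirected edge independently with probability p (keeps A symmetric).
    keep = np.triu(rng.random(adj.shape) >= p, 1)
    return adj * (keep | keep.T)

def mask_features(x, p, rng):
    # Zero out each feature dimension (column) with probability p.
    return x * (rng.random(x.shape[1]) >= p)

def gcn(adj, x, w):
    # One GCN layer with symmetric normalization of A + I and ReLU.
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ x @ w, 0.0)

def cosine_sim(a, b):
    # Full N x N cosine-similarity matrix: the quadratic-cost step.
    a = a / (np.linalg.norm(a, axis=1, keepdims=True) + 1e-12)
    b = b / (np.linalg.norm(b, axis=1, keepdims=True) + 1e-12)
    return a @ b.T

def one_side(u, v, tau):
    between = np.exp(cosine_sim(u, v) / tau)   # inter-view pairs (u_i, v_k)
    within = np.exp(cosine_sim(u, u) / tau)    # intra-view pairs (u_i, u_k)
    pos = np.diag(between)                     # positive: same node in the other view
    denom = between.sum(axis=1) + within.sum(axis=1) - np.diag(within)
    return -np.log(pos / denom)

def grace_loss(u, v, tau=0.5):
    # Symmetric InfoNCE-style loss averaged over both views and all nodes.
    return 0.5 * (one_side(u, v, tau) + one_side(v, u, tau)).mean()

rng = np.random.default_rng(0)
n, f, d = 6, 5, 4
upper = np.triu((rng.random((n, n)) < 0.5).astype(float), 1)
adj = upper + upper.T
x = rng.normal(size=(n, f))
w = rng.normal(size=(f, d))
u = gcn(drop_edges(adj, 0.2, rng), mask_features(x, 0.3, rng), w)
v = gcn(drop_edges(adj, 0.2, rng), mask_features(x, 0.3, rng), w)
loss = grace_loss(u, v)
print(f"GRACE-style loss: {loss:.4f}")
```

The two `cosine_sim` calls per direction each build an N x N matrix, which is where the node-pair cost comes from.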

Existing problems and challenges of GCL

Slow computation (caused by node-pair comparisons)
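A back-of-the-envelope estimate shows why node-pair comparisons become the bottleneck: a dense N x N float32 similarity matrix grows quadratically, e.g. 100,000 x 100,000 x 4 bytes = 4 x 10^10 bytes (40 GB) for a single matrix. The helper names below are hypothetical, and the per-node figure assumes one scalar score per node for an original and a corrupted graph.

```python
def pairwise_similarity_bytes(num_nodes: int, bytes_per_value: int = 4) -> int:
    # One dense N x N float32 similarity matrix, as built by node-pair contrastive losses.
    return num_nodes * num_nodes * bytes_per_value

def per_node_score_bytes(num_nodes: int, bytes_per_value: int = 4) -> int:
    # One scalar score per node for the original and the corrupted graph (2N values).
    return 2 * num_nodes * bytes_per_value

for n in (10_000, 100_000, 1_000_000):
    pair_gb = pairwise_similarity_bytes(n) / 1e9
    node_mb = per_node_score_bytes(n) / 1e6
    print(f"N={n:>9,}: pairwise {pair_gb:>8.1f} GB vs per-node {node_mb:.2f} MB")
```

Memory for the pairwise matrix grows 100x for every 10x more nodes, while per-node scores grow only 10x.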

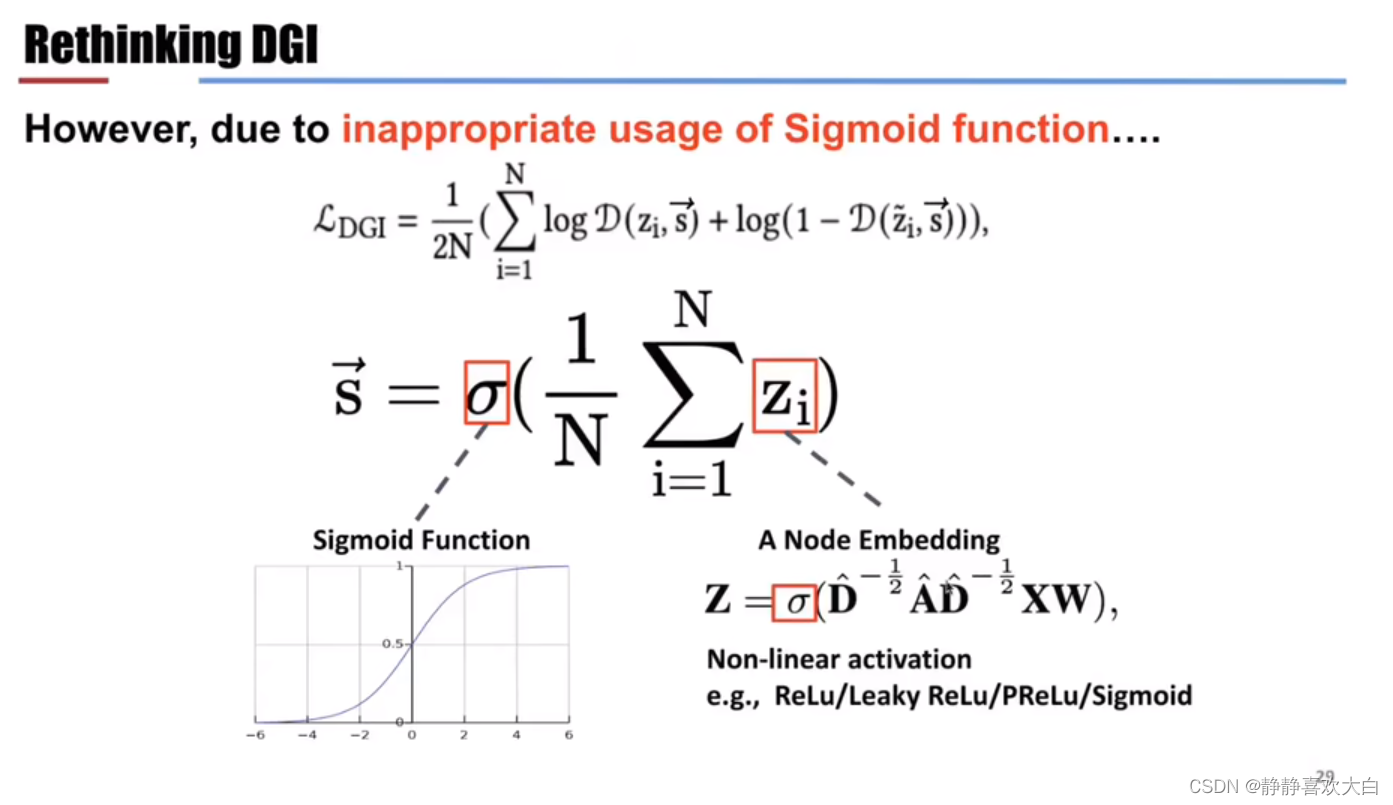

Rethinking GCL

Starting from DGI (and modifying it substantially?)

Why does DGI work?

Modifying the loss yields a small performance improvement and faster training.

Group discrimination: a new paradigm

Goal: classify nodes into two groups

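Positive nodes (from the original graph) are labeled 1; all others (from the corrupted graph) are labeled 0.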
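A minimal NumPy sketch of a group-discrimination loss in the spirit described here: encode the original and a corrupted graph, pass both through an MLP projector, sum each node's embedding into a single scalar, and train with BCE using label 1 for the original group and 0 for the corrupted group. The feature-shuffling corruption, one-layer encoder, and two-layer projector are assumptions for illustration, not the exact configuration of [4]; function names are hypothetical.

```python
import numpy as np

def bce_with_logits(logits, labels):
    # Numerically stable binary cross-entropy computed from raw scores.
    return np.mean(np.maximum(logits, 0) - logits * labels
                   + np.log1p(np.exp(-np.abs(logits))))

def encode(adj_norm, x, w):
    # One-layer GCN-style encoder: ReLU(A_norm X W).
    return np.maximum(adj_norm @ x @ w, 0.0)

def project(h, layers):
    # K-layer MLP projector: ReLU between layers, linear output layer.
    for i, w in enumerate(layers):
        h = h @ w
        if i < len(layers) - 1:
            h = np.maximum(h, 0.0)
    return h

def group_discrimination_loss(adj_norm, x, w_enc, proj_layers, rng):
    x_corrupt = x[rng.permutation(x.shape[0])]   # corrupted graph: shuffled node features
    h_pos = project(encode(adj_norm, x, w_enc), proj_layers)
    h_neg = project(encode(adj_norm, x_corrupt, w_enc), proj_layers)
    # Sum each D-dimensional embedding into one scalar per node (a 1x1 score).
    logits = np.concatenate([h_pos.sum(axis=1), h_neg.sum(axis=1)])
    # Label 1 for nodes of the original graph, 0 for nodes of the corrupted graph.
    labels = np.concatenate([np.ones(x.shape[0]), np.zeros(x.shape[0])])
    return bce_with_logits(logits, labels)

rng = np.random.default_rng(0)
n, f, d = 5, 4, 3
ring = np.eye(n) + np.roll(np.eye(n), 1, axis=1) + np.roll(np.eye(n), -1, axis=1)
adj_norm = ring / ring.sum(axis=1, keepdims=True)
x = rng.normal(size=(n, f))
w_enc = rng.normal(size=(f, d))
proj_layers = [rng.normal(size=(d, d)), rng.normal(size=(d, d))]
loss = group_discrimination_loss(adj_norm, x, w_enc, proj_layers, rng)
print(f"group discrimination loss: {loss:.4f}")
```

Compared with the DGI sketch, there is no summary vector and no bilinear discriminator, and compared with the GRACE sketch there is no N x N similarity matrix: every step after the encoder is a per-node operation.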

Why training is fast

First, the time complexity of the GCN encoder (essentially a product of three matrices, AXW):

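- N is the number of nodes; for sparse graphs the number of edges is taken to be of the same order, so N stands in for both. D is the embedding dimension and L is the number of GCN layers.

- Computing AX (a sparse-dense product) costs O(LND) over L layers.

- Multiplying by the D x D weight matrix W adds O(ND^2) per layer, so AXW costs O(LND^2) overall.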

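MLP: the time complexity is O(KND^2) (also matrix multiplication, with K layers).

Summation of low-dimensional vectors: each of the N node embeddings (D-dimensional) is summed into a scalar, costing O(ND).

BCE loss: it simply separates positives from negatives, costing O(N).

Total complexity: linear in the number of nodes.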
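Collecting the terms listed above gives the overall cost. This worked summary keeps the simplification that the edge count is of the same order as N; processing the corrupted copy of the graph only doubles the constant factor. For contrast, a node-pair objective that builds an N x N similarity matrix from D-dimensional embeddings adds an O(N^2 D) term.

$$
\underbrace{O(LND^2)}_{\text{GCN encoder}}
+ \underbrace{O(KND^2)}_{\text{MLP projector}}
+ \underbrace{O(ND)}_{\text{summation}}
+ \underbrace{O(N)}_{\text{BCE loss}}
= O\big((L+K)ND^2\big)
$$

For fixed L, K, and D, this grows linearly in N.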

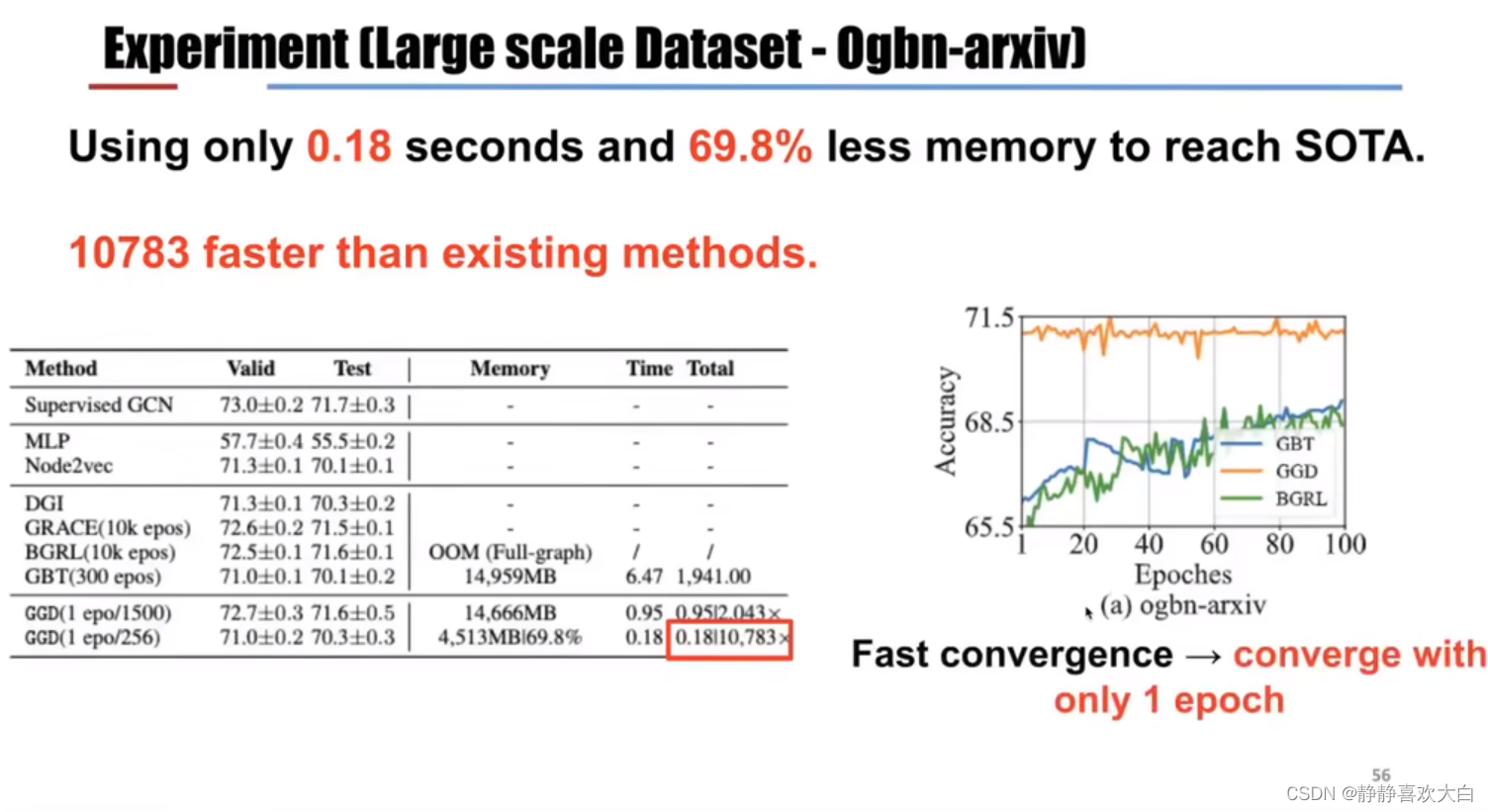

Experimental results

Why it is so efficient

Summary

Fast convergence and efficient computation

Discussion

1) Does GCL place any requirements on graph size?

No; it works on both large and small graphs.

2) Why is it efficient?

The loss is simple, and the computation reduces to a 1x1 (scalar) score per node.

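3) What happens if DGI has no activation function?

Performance drops by about 2 percentage points; the DGI authors did not investigate this further.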

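4) Can GCL be applied to heterogeneous graphs?

It can be extended to them; there is still little work on heterogeneous graphs, but the direction should have potential.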

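5) Can GCL be used if node attributes are missing?

It should be possible, for example by first completing (imputing) the missing node attributes.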

3. References

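Seminar recording || Griffith University, Shirui Pan: Rethinking Graph Contrastive Learning: An Extremely Efficient New Paradigm for Graph Self-Supervised Learning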

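LOGS session 2022/10/15 || Griffith University, Shirui Pan: Rethinking Graph Contrastive Learning: An Extremely Efficient New Paradigm for Graph Self-Supervised Learning (bilibili video)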

References:

[1] Y Liu, M Jin, S Pan, C Zhou, Y Zheng, F Xia, PS Yu, Graph self-supervised learning: A survey, IEEE Transactions on Knowledge and Data Engineering (TKDE), 2022.

[2] P Velickovic, W Fedus, WL Hamilton, P Liò, Y Bengio, RD Hjelm, Deep Graph Infomax, International Conference on Learning Representations (ICLR), 2019.

[3] K Hassani, AH Khasahmadi, Contrastive multi-view representation learning on graphs, International Conference on Machine Learning (ICML), 2020.

[4] Y Zheng, S Pan, VC Lee, Y Zheng, PS Yu, Rethinking and Scaling Up Graph Contrastive Learning: An Extremely Efficient Approach with Group Discrimination, Advances in Neural Information Processing Systems (NeurIPS), 2022.

Unifying Graph Contrastive Learning with Flexible Contextual Scopes, IEEE International Conference on Data Mining (ICDM), 2022.

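The talk examines the problems of existing graph learning methods, namely reliance on labels and, for graph contrastive learning (GCL), low computational efficiency. It proposes group discrimination, a new method that is orders of magnitude faster than GCL baselines. The method trains efficiently by classifying nodes into two groups, scales to large graph data, and opens a new path for self-supervised learning in the graph domain.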

