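1 Preparation before installation

- Three VPS servers (two with 6 GB of RAM and a 150 GB disk, one with 4 GB of RAM and a 30 GB disk)

- Operating system on the VPSes: CentOS 6.0

- Hadoop 2.7.2

2 Preparing the system environment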

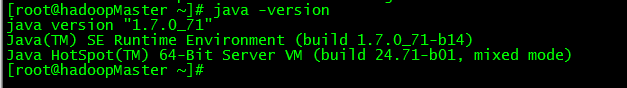

1 Install the JDK

Download on Linux: wget --no-check-certificate --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/7u71-b14/jdk-7u71-linux-x64.rpm

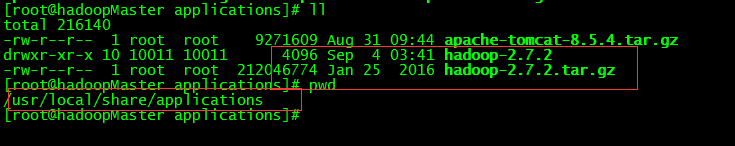

2 Download Hadoop 2.7.2

Download on Linux:

wget http://apache.fayea.com/hadoop/common/hadoop-2.7.2/hadoop-2.7.2.tar.gz

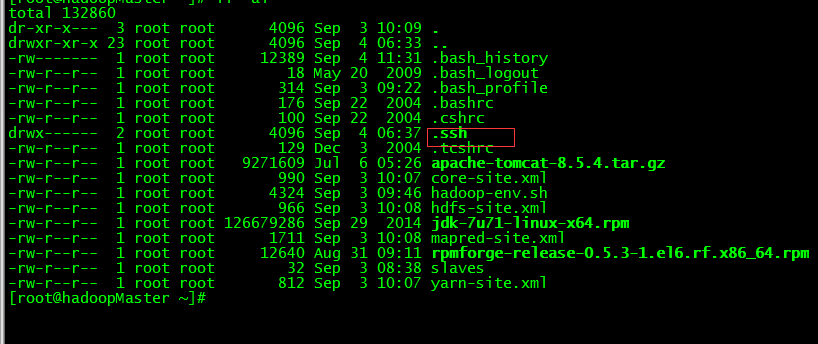

The directory I downloaded it to

On all three VPS servers, Hadoop is placed in this same directory

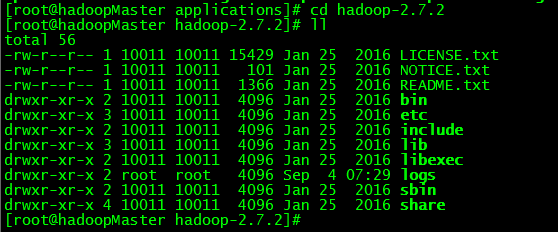

3 Configuring Hadoop's configuration files

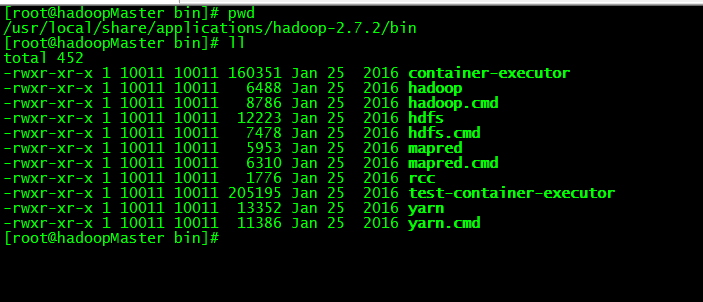

1 Directory structure after extracting Hadoop

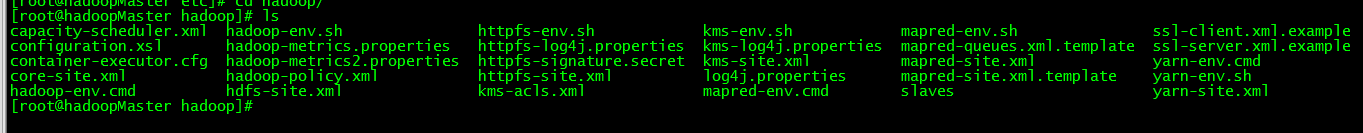

The configuration files are in etc/hadoop

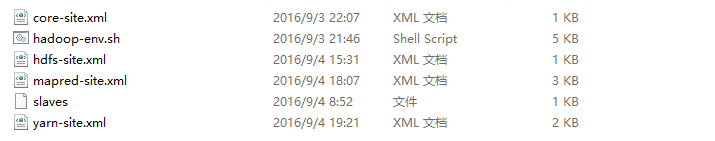

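Not every file needs to be configured; we pick only a few of them (the ones to configure are shown in the figure).

For a cluster deployment I configured these six files; let's go through them one by one.

The core-site.xml file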

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://(your NameNode server's IP):9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

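</configuration>

These two basic settings are enough (they are the two the official Hadoop site lists for core-site.xml; for more options see http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/core-default.xml; I am new to Hadoop myself). This file is essentially the same as in a single-node Hadoop setup.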
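To avoid hand-editing the placeholder on every machine, the file could be generated from the NameNode IP. A minimal sketch, assuming a hypothetical helper named write_core_site and the example address 168.168.168.1; neither is part of the Hadoop distribution:

```shell
#!/bin/sh
# Hypothetical helper: write a core-site.xml with the two properties shown
# above, substituting the given NameNode IP into fs.defaultFS.
write_core_site() {
    namenode_ip="$1"
    out_file="$2"
    cat > "$out_file" <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${namenode_ip}:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
EOF
}

# Example run into a scratch directory; 168.168.168.1 is an example address.
scratch_dir=$(mktemp -d)
write_core_site 168.168.168.1 "$scratch_dir/core-site.xml"
cat "$scratch_dir/core-site.xml"
```

Pointing the second argument at Hadoop's etc/hadoop/core-site.xml would write the real file instead of the scratch copy.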

The hdfs-site.xml file

<configuration>

<property>

<!-- Replication factor; the default is 3, set to 1 here to save space -->

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<!-- Explained later, where it is needed; I did not add it at first. My Hadoop is deployed on the public internet -->

<name>dfs.namenode.datanode.registration.ip-hostname-check</name>

<value>false</value>

</property>

</configuration>

The mapred-site.xml file

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>1536</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024M</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>3072</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx2560M</value>

</property>

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>512</value>

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>100</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>50</value>

</property>

<property>

<name>yarn.app.mapreduce.am.resource.mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.app.mapreduce.am.command-opts</name>

<value>-Xmx768m</value>

</property>

<property>

<name>mapreduce.jobtracker.address</name>

<value>(IP address of the JobTracker; required. Use the IP of the machine running YARN, which is the NameNode's IP)</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>(IP of the machine running YARN, which is the NameNode's IP)</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>5120</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

</configuration>

hadoop-env.sh

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are

# optional. When running a distributed configuration it is best to

# set JAVA_HOME in this file, so that it is correctly defined on

# remote nodes.

# The java implementation to use.

#export JAVA_HOME=${JAVA_HOME}

# Set this to your own JAVA_HOME path. Using ${JAVA_HOME} directly failed with an error for me; if it works for you, there is no need to change it.

export JAVA_HOME="/usr/java/jdk1.7.0_71"

# The jsvc implementation to use. Jsvc is required to run secure datanodes

# that bind to privileged ports to provide authentication of data transfer

# protocol. Jsvc is not required if SASL is configured for authentication of

# data transfer protocol using non-privileged ports.

#export JSVC_HOME=${JSVC_HOME}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Extra Java CLASSPATH elements. Automatically insert capacity-scheduler.

for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do

if [ "$HADOOP_CLASSPATH" ]; then

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f

else

export HADOOP_CLASSPATH=$f

fi

done

# The maximum amount of heap to use, in MB. Default is 1000.

#export HADOOP_HEAPSIZE=

#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Extra Java runtime options. Empty by default.

export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Command specific options appended to HADOOP_OPTS when specified

export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"

export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"

export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# The following applies to multiple commands (fs, dfs, fsck, distcp etc)

export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"

#HADOOP_JAVA_PLATFORM_OPTS="-XX:-UsePerfData $HADOOP_JAVA_PLATFORM_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.

# This **MUST** be uncommented to enable secure HDFS if using privileged ports

# to provide authentication of data transfer protocol. This **MUST NOT** be

# defined if SASL is configured for authentication of data transfer protocol

# using non-privileged ports.

export HADOOP_SECURE_DN_USER=${HADOOP_SECURE_DN_USER}

# Where log files are stored. $HADOOP_HOME/logs by default.

#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER

# Where log files are stored in the secure data environment.

export HADOOP_SECURE_DN_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

###

# HDFS Mover specific parameters

###

# Specify the JVM options to be used when starting the HDFS Mover.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HADOOP_MOVER_OPTS=""

###

# Advanced Users Only!

###

# The directory where pid files are stored. /tmp by default.

# NOTE: this should be set to a directory that can only be written to by

# the user that will run the hadoop daemons. Otherwise there is the

# potential for a symlink attack.

export HADOOP_PID_DIR=${HADOOP_PID_DIR}

export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# A string representing this instance of hadoop. $USER by default.

export HADOOP_IDENT_STRING=$USER

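# My SSH port is changed to 26120; all three VPSes use port 26120

export HADOOP_SSH_OPTS="-p 26120"

What needs to be changed: export JAVA_HOME="/usr/java/jdk1.7.0_71"

What needs to be added: export HADOOP_SSH_OPTS="-p 26120" (add this if your SSH port is not the default port 22)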
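Before moving on, a quick sanity check of the memory values set in mapred-site.xml and yarn-site.xml above. This sketch assumes the scheduler rounds each container request up to a multiple of yarn.scheduler.minimum-allocation-mb, which is my understanding of the default CapacityScheduler behaviour; round_up is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: round a request (MB) up to a multiple of the step (MB).
round_up() {
    echo $(( ($1 + $2 - 1) / $2 * $2 ))
}

node_mb=5120       # yarn.nodemanager.resource.memory-mb
min_alloc_mb=1024  # yarn.scheduler.minimum-allocation-mb
map_mb=$(round_up 1536 "$min_alloc_mb")     # mapreduce.map.memory.mb
reduce_mb=$(round_up 3072 "$min_alloc_mb")  # mapreduce.reduce.memory.mb

echo "map container: ${map_mb} MB, per node: $(( node_mb / map_mb ))"
echo "reduce container: ${reduce_mb} MB, per node: $(( node_mb / reduce_mb ))"
```

Under that assumption a node offering 5120 MB holds two map containers or one reduce container at a time, and the -Xmx1024M and -Xmx2560M heaps fit inside their containers.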

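The slaves file

168.168.168.2

168.168.168.3

For example, suppose your three machines have these IPs:

168.168.168.1 (master, NameNode)

168.168.168.2 (slave, DataNode)

168.168.168.3 (slave, DataNode)

The slaves file lists the DataNodes.

If you also put 168.168.168.1 in it, that server is both the NameNode and a DataNode.

Then put these files into Hadoop's etc/hadoop configuration directory. This must be done on every machine.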
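Copying the six files to each slave could be scripted from the slaves file itself. A minimal sketch that only prints the scp commands rather than running them; the helper name print_sync_commands and the install path /opt/hadoop-2.7.2 are assumptions (the post shows the real directory only in a screenshot), while root and port 26120 are the values used in this post:

```shell
#!/bin/sh
# Hypothetical helper: for each host listed in a slaves file, print the scp
# command that would copy the six configuration files to that host.
print_sync_commands() {
    slaves_file="$1"
    hadoop_home="$2"
    ssh_port="$3"
    conf_dir="$hadoop_home/etc/hadoop"
    while IFS= read -r host || [ -n "$host" ]; do
        # Skip blank lines and comment lines.
        case "$host" in
            ''|'#'*) continue ;;
        esac
        echo "scp -P $ssh_port $conf_dir/core-site.xml $conf_dir/hdfs-site.xml $conf_dir/mapred-site.xml $conf_dir/yarn-site.xml $conf_dir/hadoop-env.sh $conf_dir/slaves root@$host:$conf_dir/"
    done < "$slaves_file"
}

# Example with the two DataNode addresses from the slaves file above.
# /opt/hadoop-2.7.2 is an assumed install path, not the author's.
example_slaves=$(mktemp)
printf '168.168.168.2\n168.168.168.3\n' > "$example_slaves"
print_sync_commands "$example_slaves" /opt/hadoop-2.7.2 26120
```

Printing first makes it easy to review the commands; piping the output to sh would actually run them once passwordless SSH is in place.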

4 Setting up passwordless SSH login

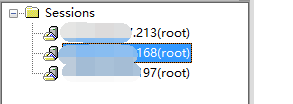

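These are my three machines; the one ending in 213 is the NameNode, and the others are DataNodes

1 Log in to the 213 machine and go to the user's home directory (I use root for everything here, including installing the Hadoop cluster)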

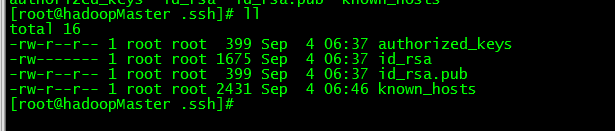

Go into the .ssh directory

Delete every file other than known_hosts; the ones there were keys I had already generated.

ssh-keygen -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Or (this is what the official Hadoop site gives):

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

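Then copy the public key (id_rsa.pub, or id_dsa.pub if you generated a DSA key) into the home directory on each of the other servers.

On each of those servers, run cat ~/id_rsa.pub >> ~/.ssh/authorized_keys (substitute id_dsa.pub if that is the file you copied).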
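Because cat with >> appends unconditionally, repeating the step adds the same key twice. A minimal sketch of a variant that appends only when the key is missing; append_key is a hypothetical helper, and the demonstration uses a scratch directory and a dummy key rather than a real ~/.ssh:

```shell
#!/bin/sh
# Hypothetical helper: append a public key to an authorized_keys file only
# if that exact line is not already present, then tighten permissions.
append_key() {
    pub_key_file="$1"
    auth_file="$2"
    auth_dir=$(dirname "$auth_file")
    mkdir -p "$auth_dir"
    chmod 0700 "$auth_dir"
    touch "$auth_file"
    key=$(cat "$pub_key_file")
    if ! grep -qxF -e "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi
    chmod 0600 "$auth_file"
}

# Demonstration with a dummy key in a scratch directory.
demo_dir=$(mktemp -d)
echo "ssh-rsa AAAAexamplekey root@hadoopMaster" > "$demo_dir/id_rsa.pub"
append_key "$demo_dir/id_rsa.pub" "$demo_dir/.ssh/authorized_keys"
append_key "$demo_dir/id_rsa.pub" "$demo_dir/.ssh/authorized_keys"
cat "$demo_dir/.ssh/authorized_keys"
```

Calling it twice leaves a single copy of the key. On a real server the arguments would be ~/id_rsa.pub and ~/.ssh/authorized_keys.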

2 Then, on the NameNode server

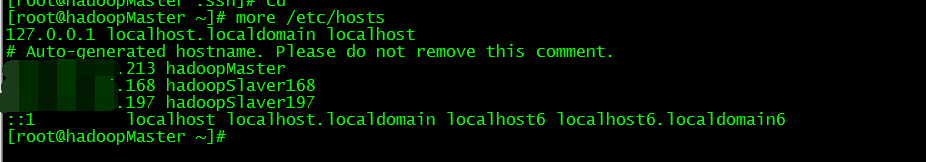

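3 Set a hostname on each machine; preferably they should not all be localhost

For example, my three machines are named:

213: hadoopMaster

168: hadoopSlaver168

197: hadoopSlaver197

Also add each name and its IP to /etc/hosts on every machine in the cluster

Below is the hosts file on my 213 machine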

5 Starting the Hadoop cluster

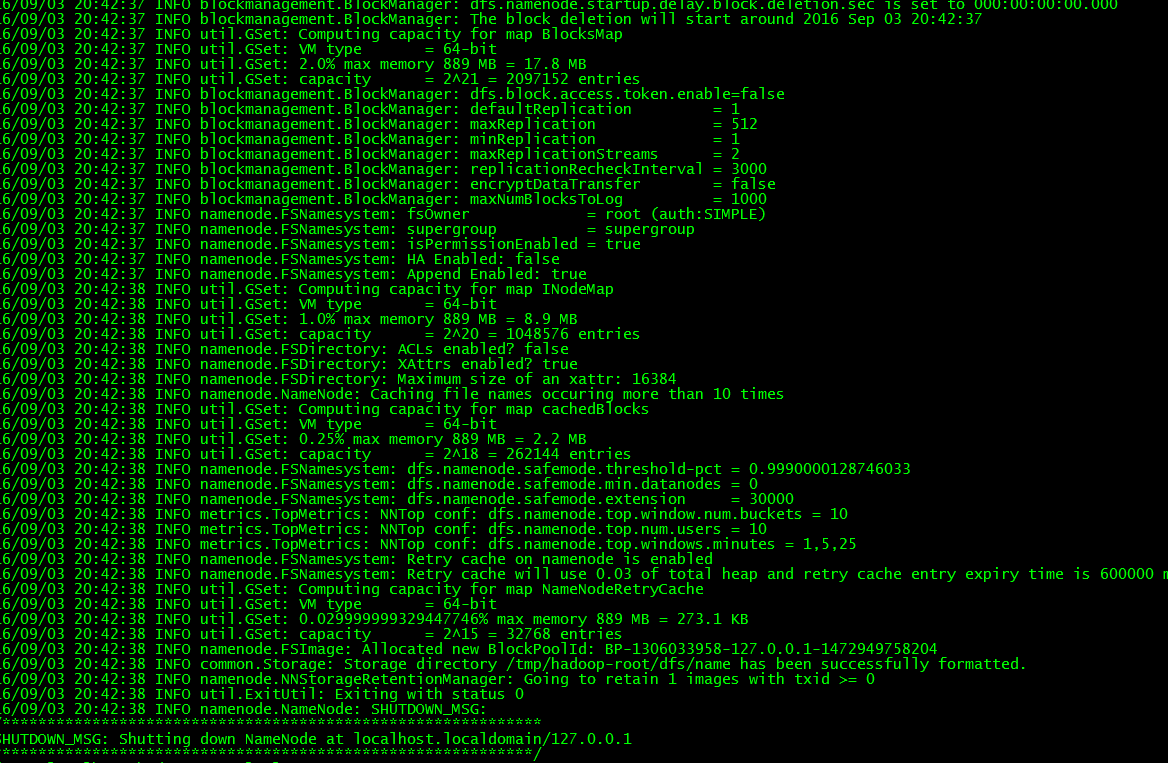

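1 Format HDFS

On the NameNode server, run Hadoop's format command (from Hadoop's bin directory)

./hdfs namenode -format <cluster_name>

The trailing cluster_name is optional

If no error is thrown, formatting is complete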

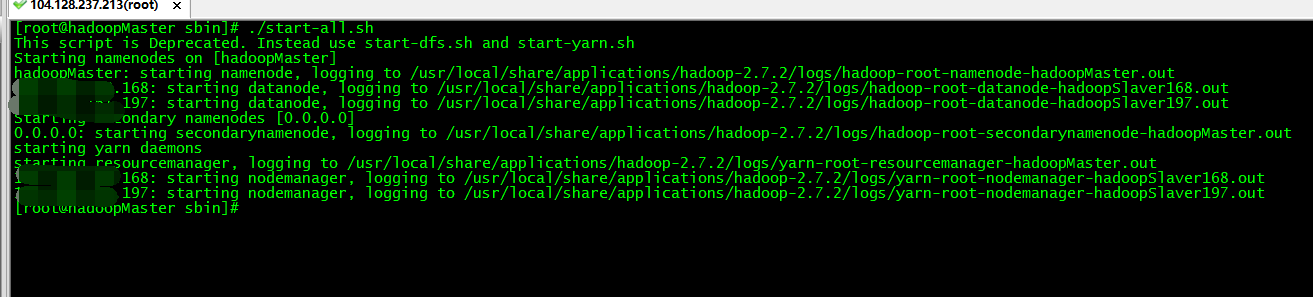

2 Then go to the sbin directory and run

./start-all.sh

When the script finishes, you will see output like that shown in the figures below

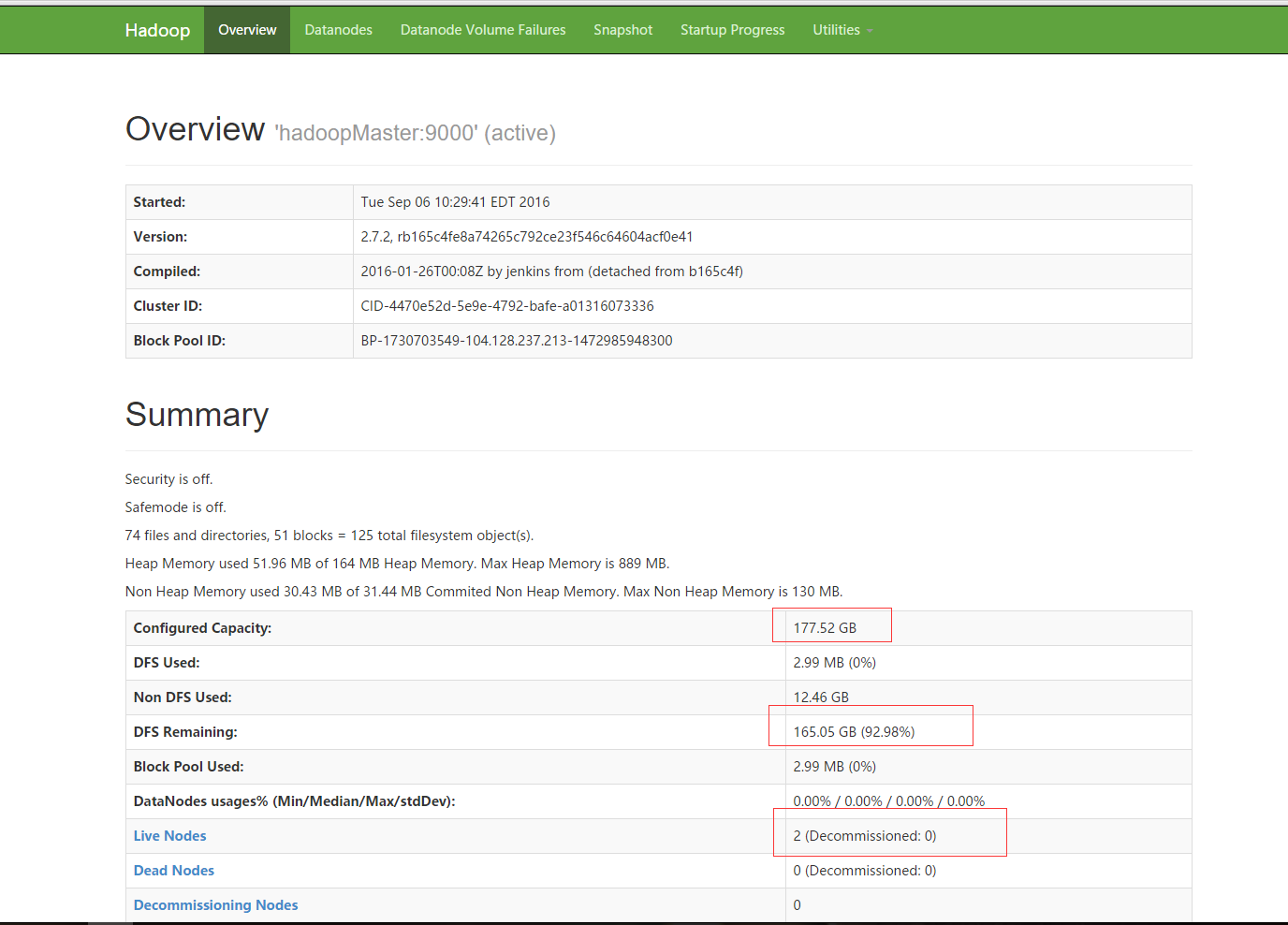

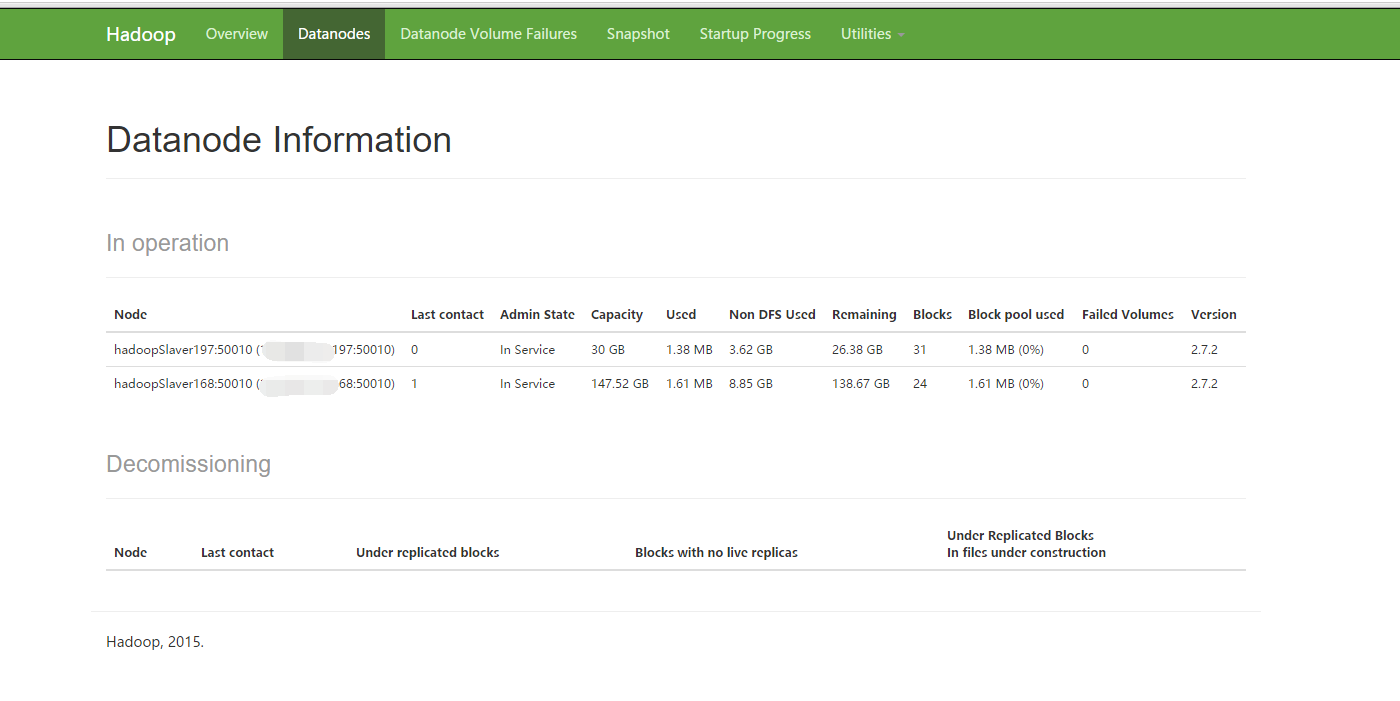

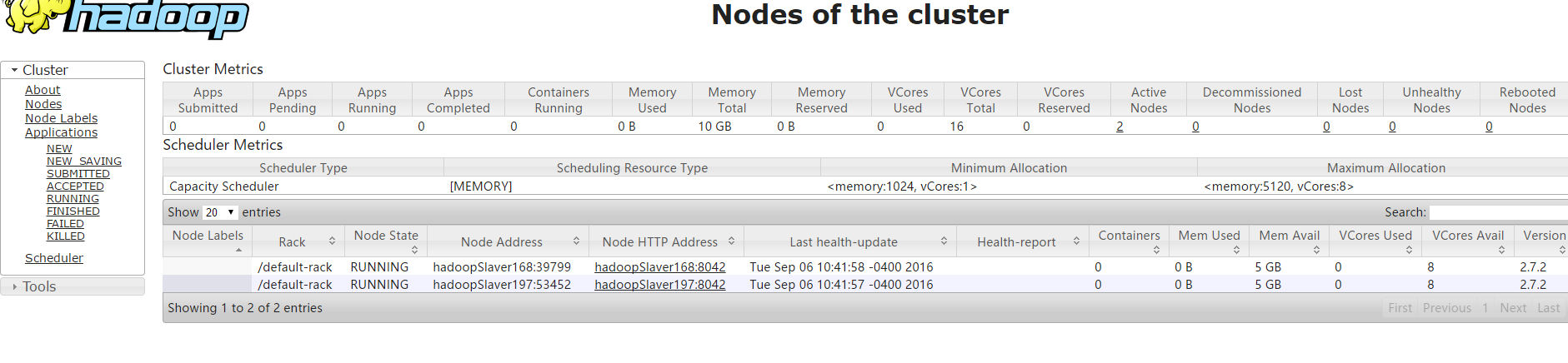
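Besides the screenshots, the JDK's jps tool lists running Java processes, so it can confirm which daemons came up. A minimal sketch, assuming jps prints one "pid name" pair per line; missing_daemons is a hypothetical helper and the sample output is made up for illustration:

```shell
#!/bin/sh
# Hypothetical helper: given jps output and a list of expected daemon names,
# print each expected name that does not appear in the output.
missing_daemons() {
    jps_output="$1"
    shift
    for daemon in "$@"; do
        if ! printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx -e "$daemon"; then
            echo "$daemon"
        fi
    done
}

# Illustrative jps output (not captured from a real cluster).
sample_output='2101 NameNode
2388 ResourceManager
2650 Jps'
missing_daemons "$sample_output" NameNode SecondaryNameNode ResourceManager
```

On the real cluster the first argument would be "$(jps)". With this configuration I would expect NameNode, SecondaryNameNode and ResourceManager on the master, and DataNode and NodeManager on each slave.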

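6 Viewing the cluster in the web pages

Web interface

I have two nodes here (why only two nodes with three machines? Because the 213 machine is the NameNode and not a DataNode)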

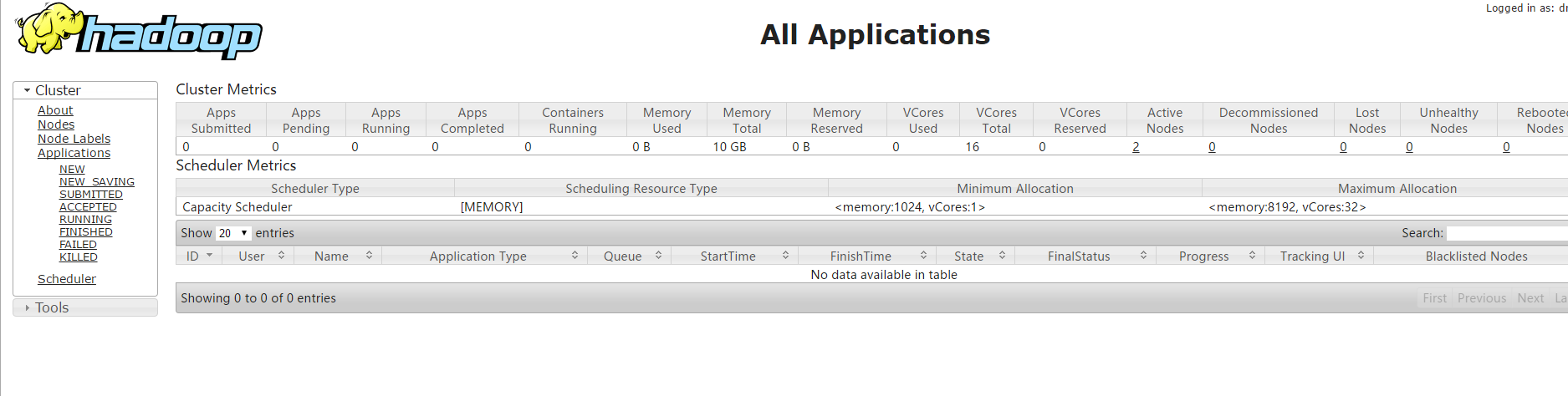

The YARN interface
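For reference, a small sketch that prints the two web addresses, assuming the Hadoop 2.x default ports (50070 for the NameNode UI, 8088 for the YARN ResourceManager UI) have not been overridden; web_urls is a hypothetical helper and 168.168.168.1 is the example master address used earlier:

```shell
#!/bin/sh
# Hypothetical helper: print the default Hadoop 2.x web UI addresses
# for a given master IP.
web_urls() {
    master_ip="$1"
    echo "http://${master_ip}:50070"  # NameNode (HDFS) web UI, default port
    echo "http://${master_ip}:8088"   # ResourceManager (YARN) web UI, default port
}

web_urls 168.168.168.1
```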

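As a Hadoop beginner, it took me a whole day to set up this cluster, with all kinds of problems and pitfalls along the way. It took a lot of Googling to finally get it running. The servers are VPSes I bought from an overseas provider. I will reorganize the problems I ran into during setup and write them up as a separate article.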
