Seata 全局锁冲突以及分支注册两次的问题解决记录

###问题发现

测试环境,调用接口超时错误,通过调用方的日志发现,事务执行插入记录时,seata获取全局锁失败, 然后立即翻seata-server的日志

日志如下

2023-04-20 14:01:26

06:01:26.907 INFO --- [verHandlerThread_1_22_500] i.s.c.e.AbstractExceptionHandler : this request cannot acquire global lock, you can let Seata retry by setting config [client.rm.lock.retryPolicyBranchRollbackOnConflict] = false or manually retry by yourself. request: xid=10.244.4.180:8091:2837568797668576195,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=undo_log:23534;user:606018;user_info:564480

2023-04-20 14:01:26

06:01:26.906 INFO --- [verHandlerThread_1_22_500] i.s.s.s.db.lock.LockStoreDataBaseDAO : Global lock batch acquire failed, xid 10.244.4.180:8091:2837568797668576195 branchId 2837568797668576206 pks [23534, 606018, 564480]

2023-04-20 14:01:26

Caused by: com.mysql.jdbc.exceptions.jdbc4.MySQLIntegrityConstraintViolationException: Duplicate entry 'jdbc:mysql://xxxxxxx:xxxx/test^^^user^^^606018' for key 'PRIMARY'

通过日志发现,是seata server 在插入lock_table 记录行锁时,出现了row_key的主键冲突 606018 就是插入user表记录后生成的主键。随后seata server 不停的重试获取锁,但每次都会冲突,直致1分钟后事务超时(seata 事务默认时间是60s)进行回滚。

了解业务得知,此处是一个插入语句,按照理论讲,插入记录生成主键都是递增的,怎么可能出现冲突呢?

后边仔细看日志又发现,现有一个分支注册并获取了此行锁,然后另外一个分支又来注册获取这行锁,因此出了冲突。

2023-04-20 14:01:26

06:01:26.903 ERROR --- [verHandlerThread_1_22_500] i.s.s.s.db.lock.LockStoreDataBaseDAO : Global lock batch acquire error: Duplicate entry 'jdbc:mysql://xxxxxxx:xxxx/test^^^user^^^606018' for key 'PRIMARY'

2023-04-20 14:01:26

,clientIp:10.244.4.1,vgroup:default_tx_group

2023-04-20 14:01:26

06:01:26.898 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : SeataMergeMessage xid=10.244.4.180:8091:2837568797668576195,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=undo_log:23534;user:606018;user_info:564480

2023-04-20 14:01:26

06:01:26.653 INFO --- [verHandlerThread_1_21_500] i.seata.server.coordinator.AbstractCore : Register branch successfully, xid = 10.244.4.180:8091:2837568797668576195, branchId = 2837568797668576204, resourceId = jdbc:mysql://xxxxxxx:xxxx/test ,lockKeys = user:606018;user_info:564480

2023-04-20 14:01:26

,clientIp:10.244.4.1,vgroup:default_tx_group

2023-04-20 14:01:26

06:01:26.647 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : SeataMergeMessage xid=10.244.4.180:8091:2837568797668576195,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=user:606018;user_info:564480

所以起初排查的方向是怀疑业务使用不当造成的冲突,比如两个分支同时使用一行数据,但随后看代码得知,此处就是很简单的插入语句

随后,怀疑是某个版本的bug,因为seata github 上的bug修复记录十分频繁,因此尝试了升级版本。从1.5.2 升级到 1.6.1 之后,发现接口能调通了,虽然还是有偶尔的冲突异常,但是因为seata-server自己有重试机制,重试两三次就通过了。

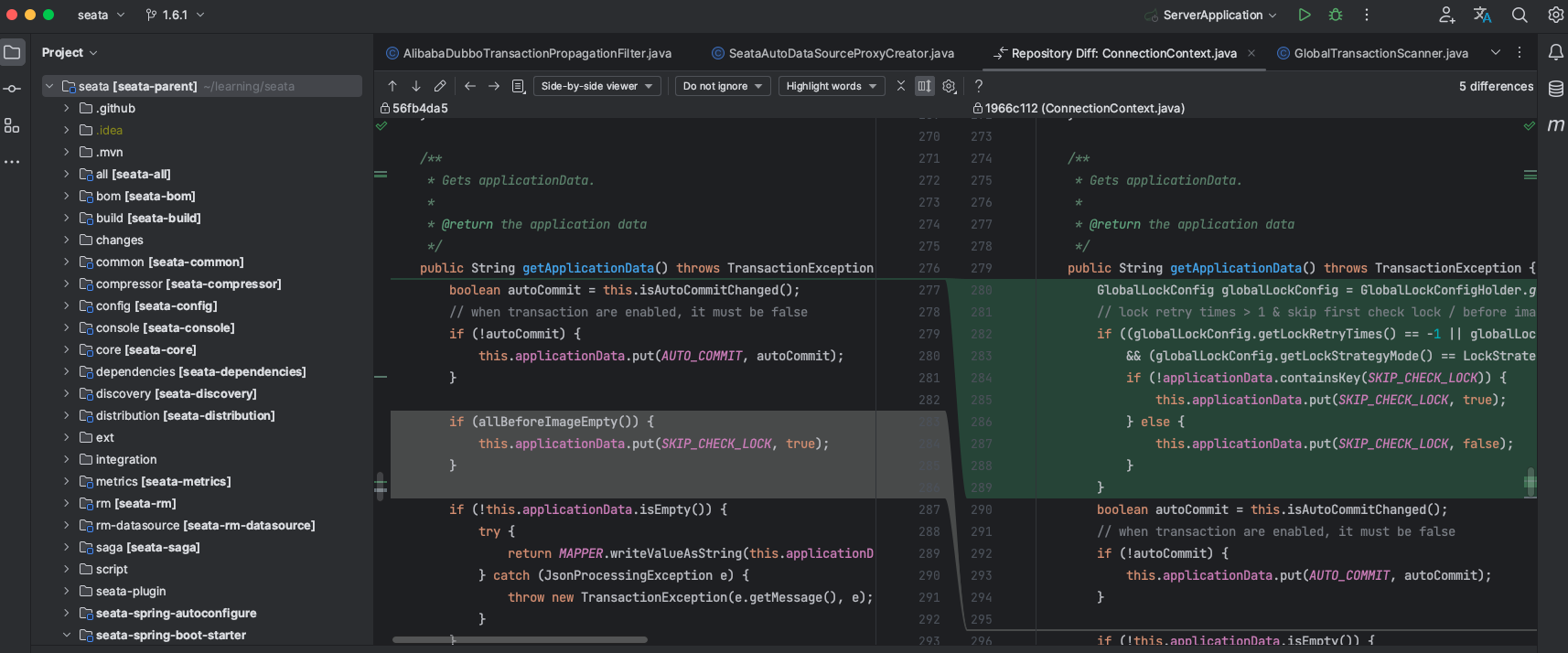

为了查询更深层次的原因,翻看了1.5.2 到 1.6.1 的 更新日录,发现了一个修改:

更改前:

if (allBeforeImageEmpty()) {

this.applicationData.put(SKIP_CHECK_LOCK, true);

}

更改后

GlobalLockConfig globalLockConfig = GlobalLockConfigHolder.getCurrentGlobalLockConfig();

// lock retry times > 1 & skip first check lock / before image is empty

if ((globalLockConfig.getLockRetryTimes() == -1 || globalLockConfig.getLockRetryTimes() > 1)

&& (globalLockConfig.getLockStrategyMode() == LockStrategyMode.OPTIMISTIC || allBeforeImageEmpty())) {

if (!applicationData.containsKey(SKIP_CHECK_LOCK)) {

this.applicationData.put(SKIP_CHECK_LOCK, true);

} else {

this.applicationData.put(SKIP_CHECK_LOCK, false);

}

}

增删改只有插入插入操作的before image 为空,这里 allBeforeImageEmpty 意味着所有操作都是插入操作,则跳过锁检查

更新后的逻辑 变为 获取全局锁模式, 如果重试次数为-1(无限重试) 或者 大于 1, 并且 锁模式是乐观锁或者全是插入操作,则判断 如果是第一次插入,不检查锁, 之后的操作全部检查锁。

那么检查锁会有什么操作呢?通过阅读代码,层层深入,我们看到了 LockStoreDataBaseDAO 是这么用的

@Override

public boolean acquireLock(List<LockDO> lockDOs, boolean autoCommit, boolean skipCheckLock) {

Connection conn = null;

PreparedStatement ps = null;

ResultSet rs = null;

Set<String> dbExistedRowKeys = new HashSet<>();

boolean originalAutoCommit = true;

if (lockDOs.size() > 1) {

lockDOs = lockDOs.stream().filter(LambdaUtils.distinctByKey(LockDO::getRowKey)).collect(Collectors.toList());

}

try {

conn = lockStoreDataSource.getConnection();

if (originalAutoCommit = conn.getAutoCommit()) {

conn.setAutoCommit(false);

}

List<LockDO> unrepeatedLockDOs = lockDOs;

//check lock

if (!skipCheckLock) {

//如果不跳过检查锁,会走这

boolean canLock = true;

//拼装查询锁的sql,大概这样子 "select * from LOCK_TABLE_PLACE_HOLD where row_key in (?) order by status desc ";

String checkLockSQL = LockStoreSqlFactory.getLogStoreSql(dbType).getCheckLockableSql(lockTable, lockDOs.size());

ps = conn.prepareStatement(checkLockSQL);

for (int i = 0; i < lockDOs.size(); i++) {

ps.setString(i + 1, lockDOs.get(i).getRowKey());

}

rs = ps.executeQuery();

//当前的全局事务id

String currentXID = lockDOs.get(0).getXid();

boolean failFast = false;

//如果查询到已经加锁

while (rs.next()) {

判断表里已加的锁所属的事务 是否 与当前全局事务一致

String dbXID = rs.getString(ServerTableColumnsName.LOCK_TABLE_XID);

if (!StringUtils.equals(dbXID, currentXID)) {

//打印日志

if (LOGGER.isInfoEnabled()) {

String dbPk = rs.getString(ServerTableColumnsName.LOCK_TABLE_PK);

String dbTableName = rs.getString(ServerTableColumnsName.LOCK_TABLE_TABLE_NAME);

long dbBranchId = rs.getLong(ServerTableColumnsName.LOCK_TABLE_BRANCH_ID);

LOGGER.info("Global lock on [{}:{}] is holding by xid {} branchId {}", dbTableName, dbPk, dbXID, dbBranchId);

}

//非自动提交,如果已经加锁的状态是回滚,说明正在进行事务回滚,此时为了保证数据安全,不再重试,直接抛异常

if (!autoCommit) {

int status = rs.getInt(ServerTableColumnsName.LOCK_TABLE_STATUS);

if (status == LockStatus.Rollbacking.getCode()) {

failFast = true;

}

}

//不一致说明冲突,当前事务加锁失败

canLock = false;

break;

}

//如果事务一致,那么将锁的row_key 放入当前事务已经存在的锁里(一个事物有多行锁,通过dbExistedRowKeys 记录哪些锁已存在,哪些未加锁)

dbExistedRowKeys.add(rs.getString(ServerTableColumnsName.LOCK_TABLE_ROW_KEY));

}

//如果加锁失败,并且快速失败,则抛异常,否则返回加锁失败 false

if (!canLock) {

conn.rollback();

if (failFast) {

throw new StoreException(new BranchTransactionException(LockKeyConflictFailFast));

}

return false;

}

// 如果锁已经在数据库存在了,那么从本次事务的lockDOs 中移除掉

if (CollectionUtils.isNotEmpty(dbExistedRowKeys)) {

unrepeatedLockDOs = lockDOs.stream().filter(lockDO -> !dbExistedRowKeys.contains(lockDO.getRowKey()))

.collect(Collectors.toList());

}

//如果没有剩余,说明全部加锁成功,直接返回true

if (CollectionUtils.isEmpty(unrepeatedLockDOs)) {

conn.rollback();

return true;

}

}

// 以上是检查锁的逻辑,如果不检查锁,则直接将锁插入lock_table, 我们也就是在这抛出了异常

if (unrepeatedLockDOs.size() == 1) {

//单行加锁

LockDO lockDO = unrepeatedLockDOs.get(0);

if (!doAcquireLock(conn, lockDO)) {

if (LOGGER.isInfoEnabled()) {

LOGGER.info("Global lock acquire failed, xid {} branchId {} pk {}", lockDO.getXid(), lockDO.getBranchId(), lockDO.getPk());

}

conn.rollback();

return false;

}

} else {

//多行加锁

if (!doAcquireLocks(conn, unrepeatedLockDOs)) {

if (LOGGER.isInfoEnabled()) {

LOGGER.info("Global lock batch acquire failed, xid {} branchId {} pks {}", unrepeatedLockDOs.get(0).getXid(),

unrepeatedLockDOs.get(0).getBranchId(), unrepeatedLockDOs.stream().map(lockDO -> lockDO.getPk()).collect(Collectors.toList()));

}

conn.rollback();

return false;

}

}

conn.commit();

return true;

} catch (SQLException e) {

throw new StoreException(e);

} finally {

IOUtil.close(rs, ps);

if (conn != null) {

try {

if (originalAutoCommit) {

conn.setAutoCommit(true);

}

conn.close();

} catch (SQLException e) {

}

}

}

}

阅读代码得知,1.5默认如果只有插入操作,哪怕是多次插入,都不检查锁,所以插入会直接锁住行。而1.6只有首次插入不检查锁,后续插入或别的操作会判断是否有锁,是否一个全局事务,如果是则视为已加锁,否则已存在的锁不是一个事务就造成了锁冲突,从而加锁失败。

看似已经解决问题,偶尔看日志突然发现提交行锁中居然有undo_log 的行锁,仔细看发现,哪怕是插入一行记录,也会提交两次

2023-04-10 15:19:09

07:19:09.016 INFO --- [verHandlerThread_1_12_500] i.seata.server.coordinator.AbstractCore : Register branch successfully, xid = 10.244.6.146:8091:1675707719303382163, branchId = 1675707719303390181, resourceId = jdbc:mysql://xxxxxxx:xxxx/test ,lockKeys = ent_enterprise_user:62651;undo_log:19493

2023-04-10 15:19:09

,clientIp:10.244.6.1,vgroup:default_tx_group

2023-04-10 15:19:09

07:19:09.012 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : SeataMergeMessage xid=10.244.6.146:8091:1675707719303382163,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=ent_enterprise_user:62651;undo_log:19493

2023-04-10 15:19:09

07:19:09.009 INFO --- [verHandlerThread_1_11_500] i.seata.server.coordinator.AbstractCore : Register branch successfully, xid = 10.244.6.146:8091:1675707719303382163, branchId = 1675707719303390179, resourceId = jdbc:mysql://xxxxxxx:xxxx/test ,lockKeys = ent_enterprise_user:62651

2023-04-10 15:19:09

,clientIp:10.244.6.1,vgroup:default_tx_group

2023-04-10 15:19:09

07:19:09.005 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : SeataMergeMessage xid=10.244.6.146:8091:1675707719303382163,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=ent_enterprise_user:62651

很不正常,一般一个分支事务,注册一次就够了,为什么会注册两次,而且第二次还带了一个undo_log 的行锁。我不得已去翻了seata的源码,

发现注册实际上是本地事务提交时发生的。

在AbstractDMLBaseExecutor 中有如下代码

protected T executeAutoCommitTrue(Object[] args) throws Throwable {

ConnectionProxy connectionProxy = statementProxy.getConnectionProxy();

try {

connectionProxy.changeAutoCommit();

return new LockRetryPolicy(connectionProxy).execute(() -> {

//执行本地sql,并生成undo_log(在内存中),根据undo_log 生成行锁

T result = executeAutoCommitFalse(args);

//向seata-server注册分支,并获取全局锁,undo_log插入本地库,并提交本地事务

connectionProxy.commit();

return result;

});

} catch (Exception e) {

// when exception occur in finally,this exception will lost, so just print it here

LOGGER.error("execute executeAutoCommitTrue error:{}", e.getMessage(), e);

if (!LockRetryPolicy.isLockRetryPolicyBranchRollbackOnConflict()) {

connectionProxy.getTargetConnection().rollback();

}

throw e;

} finally {

connectionProxy.getContext().reset();

connectionProxy.setAutoCommit(true);

}

}

继续追踪 connectionProxy.commit();

最终在ConnectionProxy找到以下代码

private void processGlobalTransactionCommit() throws SQLException {

try {

//发送给TC,注册分支并申请全局锁

register();

} catch (TransactionException e) {

recognizeLockKeyConflictException(e, context.buildLockKeys());

}

try {

//插入undo_log

UndoLogManagerFactory.getUndoLogManager(this.getDbType()).flushUndoLogs(this);

//本地事务提交

targetConnection.commit();

} catch (Throwable ex) {

LOGGER.error("process connectionProxy commit error: {}", ex.getMessage(), ex);

report(false);

throw new SQLException(ex);

}

if (IS_REPORT_SUCCESS_ENABLE) {

report(true);

}

context.reset();

}

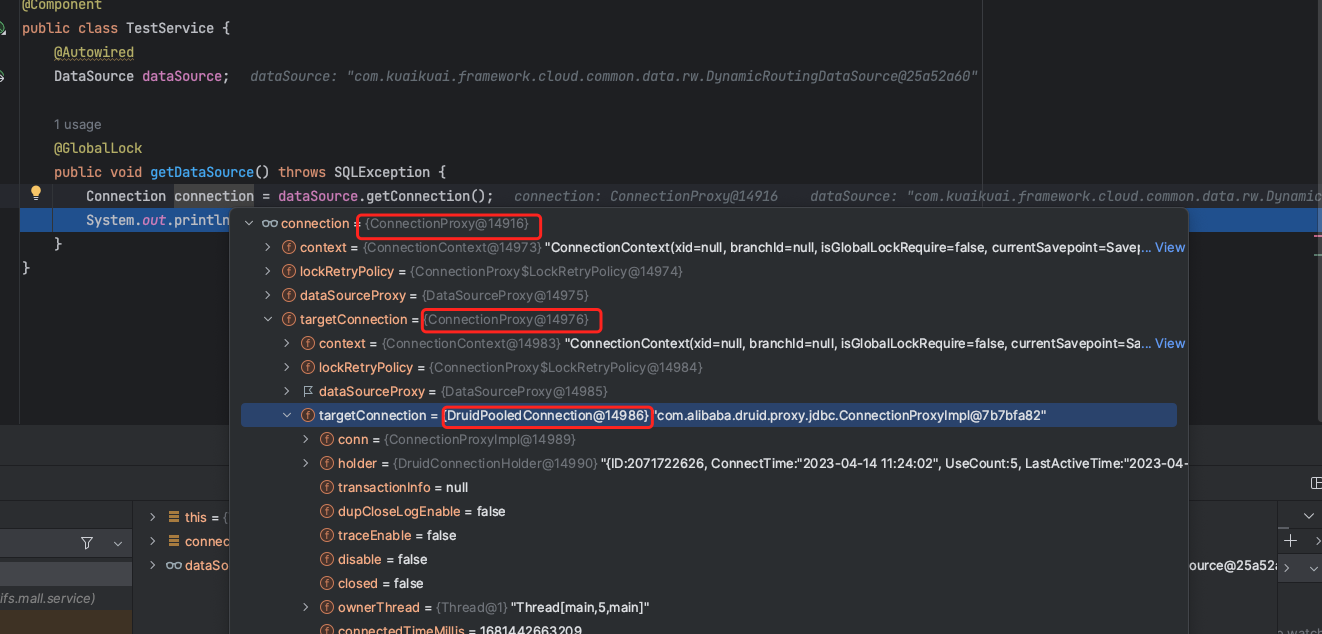

那什么情况下 本地分支会提交两次呢?第二次还会带着undolog的行锁?

很明显,targetConnection 也是 ConnectionProxy ,所以才会注册两次。

为了证明这个,我做了个测试

@Component

public class TestService {

@Autowired

DataSource dataSource;

@GlobalLock

public void getDataSource() throws SQLException {

Connection connection = dataSource.getConnection();

System.out.println(connection.toString());

}

}

通过debug看到

证实了我的猜想。

因为我们使用的是seata.enable-auto-data-source-proxy=true, seata自动将数据源进行代理,接下来我就去研究springboot 启动时seata的自动配置

@ConditionalOnBean(DataSource.class)

@ConditionalOnExpression("${seata.enabled:true} && ${seata.enableAutoDataSourceProxy:true} && ${seata.enable-auto-data-source-proxy:true}")

@AutoConfigureOrder(Ordered.LOWEST_PRECEDENCE)

@AutoConfigureAfter(value = {SeataCoreAutoConfiguration.class},

name = "org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration")

public class SeataDataSourceAutoConfiguration {

/**

* The bean seataAutoDataSourceProxyCreator.

*/

@Bean(BEAN_NAME_SEATA_AUTO_DATA_SOURCE_PROXY_CREATOR)

@ConditionalOnMissingBean(SeataAutoDataSourceProxyCreator.class)

public static SeataAutoDataSourceProxyCreator seataAutoDataSourceProxyCreator(SeataProperties seataProperties) {

return new SeataAutoDataSourceProxyCreator(seataProperties.isUseJdkProxy(),

seataProperties.getExcludesForAutoProxying(), seataProperties.getDataSourceProxyMode());

}

}

可以看出 是 SeataAutoDataSourceProxyCreator 对数据源做的代理,

protected Object wrapIfNecessary(Object bean, String beanName, Object cacheKey) {

// 不是数据源不关心

if (!(bean instanceof DataSource)) {

return bean;

}

// 如果数据源没有被代理,我们创建一个代理,并放入到DataSourceProxyHolder中维护一个原始 与 代理之间的映射

if (!(bean instanceof SeataDataSourceProxy)) {

Object enhancer = super.wrapIfNecessary(bean, beanName, cacheKey);

// 如果bean的类型是被指定不需要代理的 或者 之前已经代理过了,直接返回

if (bean == enhancer) {

return bean;

}

// 不是,则创建代理并维护映射关系

DataSource origin = (DataSource) bean;

SeataDataSourceProxy proxy = buildProxy(origin, dataSourceProxyMode);

DataSourceProxyHolder.put(origin, proxy);

return enhancer;

}

//如果你手动创建了SeataDataSourceProxy,那么为了避免你自己创建了代理并起数据源还开了自动代理,从而代理了两层,seata 帮你拆开判断一下,如果不是两层,再给你封上

LOGGER.warn("Manually register SeataDataSourceProxy(or its subclass) bean is discouraged! bean name: {}", beanName);

SeataDataSourceProxy proxy = (SeataDataSourceProxy) bean;

DataSource origin = proxy.getTargetDataSource();

Object originEnhancer = super.wrapIfNecessary(origin, beanName, cacheKey);

// this mean origin is either excluded by user or had been proxy before

if (origin == originEnhancer) {

return origin;

}

// else, put <origin, proxy> to holder and return originEnhancer

DataSourceProxyHolder.put(origin, proxy);

return originEnhancer;

}

看到这,我突然想起了我们的读写库数据源,是一个两层的结构

@Configuration

@EnableAutoConfiguration(exclude = {DataSourceAutoConfiguration.class})

public class DataSourceConfig {

@Bean(DB.MASTER)

@ConfigurationProperties("spring.datasource.druid.master")

public DataSource dataSourceMaster() {

return DruidDataSourceBuilder.create().build();

}

@Bean(DB.SLAVE)

@ConfigurationProperties("spring.datasource.druid.slave")

public DataSource dataSourceSlave() {

return DruidDataSourceBuilder.create().build();

}

@Primary

@Bean("dataSourceDynamicRouting")

public DataSource dataSourceDynamicRouting() {

return new DynamicRoutingDataSource(dataSourceMaster(), dataSourceSlave());

}

}

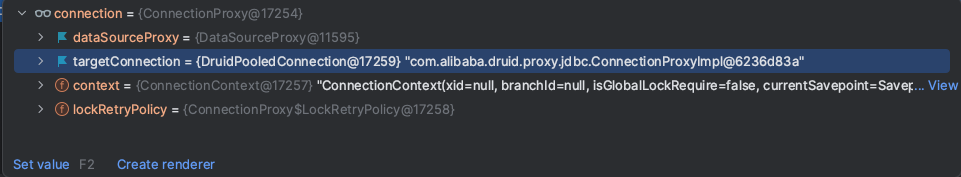

很明显,是master 与 slave 创建时被包了一层代理,而dataSourceDynamicRouting 又被包了一层代理,从而datasource 在 getConnection时获得了一个两层的ConnectionProxy

知道了原因,解决也比较方便,seata.excludes-for-auto-proxying=com.alibaba.druid.spring.boot.autoconfigure.DruidDataSourceWrapper

这个配置可以指定某个类型的数据源不被封装,可以帮我们解除一层代理

随后,测试结果

数据源正常

023-04-21 13:50:36

05:50:36.794 INFO --- [ AsyncCommitting_1_1] io.seata.server.coordinator.DefaultCore : Committing global transaction is successfully done, xid = 10.244.0.213:8091:3990517555649138164.

2023-04-21 13:50:36

05:50:36.780 INFO --- [ AsyncCommitting_1_1] io.seata.core.rpc.netty.ChannelManager : Choose [id: 0x0fbaad2f, L:/10.244.6.146:8091 - R:/10.244.6.1:51762] on the same application[test-user-service] as alternative of test-user-service:10.244.0.1:58008

2023-04-21 13:50:36

05:50:36.745 INFO --- [ AsyncCommitting_1_1] io.seata.core.rpc.netty.ChannelManager : Choose [id: 0xe5041be9, L:/10.244.6.146:8091 - R:/10.244.6.1:52190] on the same application[test-enterprise-service] as alternative of test-enterprise-service:10.244.0.1:38770

2023-04-21 13:50:36

05:50:36.534 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : xid=10.244.0.213:8091:3990517555649138164,extraData=null,clientIp:10.244.0.1,vgroup:default_tx_group

2023-04-21 13:50:36

05:50:36.424 INFO --- [verHandlerThread_1_19_500] i.seata.server.coordinator.AbstractCore : Register branch successfully, xid = 10.244.0.213:8091:3990517555649138164, branchId = 3990517555649138171, resourceId = jdbc:mysql://xxxxxxx:xxxx/test ,lockKeys = user:606019;user_info:564481

2023-04-21 13:50:36

,clientIp:10.244.0.1,vgroup:default_tx_group

2023-04-21 13:50:36

05:50:36.420 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : SeataMergeMessage xid=10.244.0.213:8091:3990517555649138164,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=user:606019;user_info:564481

2023-04-21 13:50:36

05:50:36.105 INFO --- [verHandlerThread_1_18_500] i.seata.server.coordinator.AbstractCore : Register branch successfully, xid = 10.244.0.213:8091:3990517555649138164, branchId = 3990517555649138168, resourceId = jdbc:mysql://xxxxxxx:xxxx/test ,lockKeys = ent_enterprise_user:65221

2023-04-21 13:50:36

,clientIp:10.244.0.1,vgroup:default_tx_group

2023-04-21 13:50:36

05:50:36.013 INFO --- [ batchLoggerPrint_1_1] i.s.c.r.p.server.BatchLogHandler : SeataMergeMessage xid=10.244.0.213:8091:3990517555649138164,branchType=AT,resourceId=jdbc:mysql://xxxxxxx:xxxx/test,lockKey=ent_enterprise_user:65221

2023-04-21 13:50:33

05:50:33.531 INFO --- [verHandlerThread_1_17_500] i.s.s.coordinator.DefaultCoordinator : Begin new global transaction applicationId: test-user-service,transactionServiceGroup: default_tx_group, transactionName: insertBatch(java.util.List),timeout:120000,xid:10.244.0.213:8091:3990517555649138164

日志也正常了,至此问题解决

此间还有一个小插曲, DruidDataSourceBuilder.create().build() 生成的是DruidDataSourceWrapper 而不是DruidDataSource,这个踩了个坑。。。

818

818

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?