1. Background

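Our company is building a human pose estimation project. The hardware was fixed to an NPU and the operating system to Android. On Android, TFLite models can call NNAPI natively, and testing showed that this hardware only reaches full speed when running 8-bit quantized TFLite models. The test tools used were: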

(1) AI_benchmark_apk: https://ai-benchmark.com/index.html#title

(2) Google's example: https://jwnote.com/tensorflow-lite-on-android/

2. Converting the pretrained quantized SSD-MobileNet models

(1) Because we will need TensorFlow's conversion tool TOCO later, first build TensorFlow 1.15.5 from source, following this blog post: https://blog.csdn.net/luozhichengaichenlei/article/details/117593320

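(2) Download the open-source models listed at https://gitee.com/luo_zhi_cheng/tf-models/blob/master/research/object_detection/g3doc/tf1_detection_zoo.md

We need three packages: ssd_mobilenet_v1_0.75_depth_quantized_300x300_coco14_sync_2018_07_18, ssd_mobilenet_v1_quantized_300x300_coco14_sync_2018_07_18 and ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03. All three are quantization-aware trained models; they differ only in the MobileNet version (v1 or v2) and in the backbone depth (depth multiplier 0.75 or 1.0).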
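To make "backbone depth" concrete: the 0.75 package uses a MobileNetV1 depth multiplier of 0.75, which scales the channel count of every layer. The sketch below assumes the standard MobileNetV1 channel layout and the `max(int(d * depth_multiplier), min_depth)` rule from the TF-Slim MobileNetV1 definition. `MOBILENET_V1_BASE_DEPTHS` and `scaled_depths` are hypothetical names, and the SSD feature extractor takes its own `min_depth` from pipeline.config, so treat the numbers as illustrative.

```python
# Hypothetical illustration of a MobileNetV1 depth multiplier.
# Base channel counts: one 3x3 conv followed by 13 depthwise-separable blocks.
MOBILENET_V1_BASE_DEPTHS = [32, 64, 128, 128, 256, 256,
                            512, 512, 512, 512, 512, 512, 1024, 1024]


def scaled_depths(depth_multiplier, min_depth=8):
    """Output channels of each layer after applying depth_multiplier.

    Follows the TF-Slim MobileNetV1 rule: max(int(d * depth_multiplier), min_depth).
    min_depth=8 is an assumed default, not read from pipeline.config.
    """
    if depth_multiplier <= 0:
        raise ValueError("depth_multiplier must be positive")
    return [max(int(d * depth_multiplier), min_depth)
            for d in MOBILENET_V1_BASE_DEPTHS]


if __name__ == "__main__":
    print("depth 1.0 :", scaled_depths(1.0))
    print("depth 0.75:", scaled_depths(0.75))
```

Because the pointwise convolutions dominate the cost and scale with both input and output channels, a 0.75 multiplier cuts their compute to roughly 0.75² ≈ 0.56 of the full-depth model.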

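(3) After extraction, each folder already contains a .pb file. (By the time I wrote this post I understood .pb files better: a .pb file is a frozen graph, but a frozen graph is not necessarily meant for inference. The .pb in each of these three packages is a frozen graph that still contains fake-quantization nodes; TOCO can use it to produce a TFLite model that runs uint8 inference.) Following https://gitee.com/luo_zhi_cheng/tf-models/blob/master/research/object_detection/g3doc/running_on_mobile_tensorflowlite.md, convert the .pb file into a TFLite model that supports uint8 inference. The conversion script is: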

```bash
# cd into the TensorFlow source tree that was built with Bazel
cd /home/lhc/1lzc/tensorflow-bazel/tensorflow-1.15.5

# ssd-mobilenetv1-0.75-quant-uint8
#pb_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_0.75_depth_quantized_300x300_coco14_sync_2018_07_18/tflite_graph.pb
#tflite_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_0.75_depth_quantized_300x300_coco14_sync_2018_07_18/ssd-mobilenetv1-0.75-quant-uint8.tflite

# ssd-mobilenetv1-1.0-quant-uint8
#pb_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_quantized_300x300_coco14_sync_2018_07_18/tflite_graph.pb
#tflite_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_quantized_300x300_coco14_sync_2018_07_18/ssd-mobilenetv1-1.0-quant-uint8.tflite

# ssd-mobilenetv2-1.0-quant-uint8
pb_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03/tflite_graph.pb
tflite_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03/ssd-mobilenetv2-1.0-quant-uint8.tflite

modelSize=300

# Alternative: run the converter through Bazel (the "--" separator is only needed there)
#bazel run -c opt tensorflow/lite/python:tflite_convert -- \
# Call the already-built converter binary directly:
bazel-out/k8-py2-opt/bin/tensorflow/lite/python/tflite_convert \
  --enable_v1_converter \
  --graph_def_file=$pb_path \
  --output_file=$tflite_path \
  --input_shapes=1,$modelSize,$modelSize,3 \
  --input_arrays=normalized_input_image_tensor \
  --output_arrays='TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3' \
  --inference_type=QUANTIZED_UINT8 \
  --mean_values=128 \
  --std_dev_values=128 \
  --change_concat_input_ranges=false \
  --allow_custom_ops
```
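The fake-quantization nodes in tflite_graph.pb record a [min, max] range per tensor, and the uint8 conversion turns each range into a scale and a zero point. Below is a simplified NumPy sketch of that arithmetic, modeled on the range "nudging" performed by TensorFlow's FakeQuant ops. It is an approximation for illustration, not TOCO's actual code, and `nudged_quant_params` / `fake_quant` are hypothetical names.

```python
import numpy as np


def nudged_quant_params(rmin, rmax, num_bits=8):
    """Turn a fake-quant [rmin, rmax] range into (scale, zero_point).

    The zero point is rounded to an integer so that real 0.0 maps exactly
    onto a quantized value, similar to TensorFlow's FakeQuant nudging.
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    if rmax <= rmin:
        raise ValueError("rmax must be greater than rmin")
    scale = (rmax - rmin) / (qmax - qmin)
    # Written as rmin * levels / range (not rmin / scale) to avoid extra rounding error.
    zero_point_from_min = qmin - rmin * (qmax - qmin) / (rmax - rmin)
    if zero_point_from_min < qmin:
        zero_point = qmin
    elif zero_point_from_min > qmax:
        zero_point = qmax
    else:
        zero_point = int(np.floor(zero_point_from_min + 0.5))
    return scale, zero_point


def fake_quant(x, rmin, rmax, num_bits=8):
    """Quantize then dequantize, which is what a fake-quant node simulates in training."""
    scale, zero_point = nudged_quant_params(rmin, rmax, num_bits)
    qmax = 2 ** num_bits - 1
    q = np.clip(np.round(np.asarray(x, dtype=np.float64) / scale) + zero_point, 0, qmax)
    return (q - zero_point) * scale


if __name__ == "__main__":
    print(nudged_quant_params(-1.0, 1.0))
    print(fake_quant([-5.0, -0.3, 0.0, 0.5, 5.0], -1.0, 1.0))
```

With the range [-1, 1] the zero point is 128 and the scale is 2/255, so 0.0 is represented exactly while out-of-range values are clamped to the ends of the 256-level grid.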

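To convert to a floating-point model instead, use the following script. (The output files are named "fp16", but `--inference_type=FLOAT` produces a float32 TFLite model rather than a true fp16 one.)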

```bash
cd /home/lhc/1lzc/tensorflow-bazel/tensorflow-1.15.5

# ssd-mobilenetv1-0.75-quant-fp16
pb_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_0.75_depth_quantized_300x300_coco14_sync_2018_07_18/tflite_graph.pb
tflite_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_0.75_depth_quantized_300x300_coco14_sync_2018_07_18/ssd-mobilenetv1-0.75-quant-fp16.tflite

# ssd-mobilenetv1-1.0-quant-fp16
#pb_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_quantized_300x300_coco14_sync_2018_07_18/tflite_graph.pb
#tflite_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_quantized_300x300_coco14_sync_2018_07_18/ssd-mobilenetv1-1.0-quant-fp16.tflite

# ssd-mobilenetv2-1.0-quant-fp16
#pb_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03/tflite_graph.pb
#tflite_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03/ssd-mobilenetv2-1.0-quant-fp16.tflite

modelSize=300

# Alternative: run the converter through Bazel (the "--" separator is only needed there)
#bazel run -c opt tensorflow/lite/python:tflite_convert -- \
bazel-out/k8-py2-opt/bin/tensorflow/lite/python/tflite_convert \
  --enable_v1_converter \
  --graph_def_file=$pb_path \
  --output_file=$tflite_path \
  --input_shapes=1,$modelSize,$modelSize,3 \
  --input_arrays=normalized_input_image_tensor \
  --output_arrays='TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3' \
  --inference_type=FLOAT \
  --allow_custom_ops
```
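The two scripts differ in how the input is normalized. For the uint8 model, `--mean_values=128 --std_dev_values=128` tell the converter that a uint8 input q stands for the real value (q - 128) / 128, so raw pixels can be fed directly. The float model has no such mapping, so the caller must scale pixels to [-1, 1] itself, which is what the test script in step (4) does with `cv2.dnn.blobFromImage(frame, 2.0 / 255.0, ..., [127.5, 127.5, 127.5], ...)`. A small NumPy check (hypothetical helper names; resizing and channel order are ignored) that the two mappings agree to within 1/128:

```python
import numpy as np


def uint8_model_input_to_real(q, mean=128.0, std=128.0):
    """Real value a quantized model assigns to uint8 input q: (q - mean) / std."""
    return (np.asarray(q, dtype=np.float64) - mean) / std


def float_model_preprocess(pixels):
    """Scale 0-255 pixels to [-1, 1]; same as (x - 127.5) * 2/255 in blobFromImage."""
    return (np.asarray(pixels, dtype=np.float64) - 127.5) / 127.5


if __name__ == "__main__":
    pixels = np.arange(256)
    a = uint8_model_input_to_real(pixels)
    b = float_model_preprocess(pixels)
    print("uint8 path range:", a.min(), a.max())
    print("float path range:", b.min(), b.max())
    print("max difference  :", np.abs(a - b).max())
```

The largest gap appears at pixel value 255 (127/128 versus 1.0), which is well below one quantization step of the input.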

(4) Test the generated TFLite model with the following .py file; change the parameters at the top to your own paths:

import tensorflow as tf

import numpy as np

import cv2

network_w = 300

network_h = 300

det_score = 0.45

#inference_type = 'fp16'

inference_type = 'uint8'

image = r'D:\Data\pic\dog.jpg' #dog #staff

labels_path = r'F:\model\tflite\ssd-mobilenet\labelmap.txt'

if inference_type == 'int8' or inference_type == 'uint8':

#intput_tflite_file = r"F:\model\tflite\ssd-mobilenet\ssd-mobilenetv1-0.75-quant-uint8.tflite"

#intput_tflite_file = r"F:\model\tflite\ssd-mobilenet\ssd-mobilenetv1-1.0-quant-uint8.tflite"

intput_tflite_file = r"F:\model\tflite\ssd-mobilenet\ssd-mobilenetv2-1.0-quant-uint8.tflite"

#intput_tflite_file = r"F:\android\git\tf-examples\lite\examples\object_detection\android\app\src\main\assets\lite-model_ssd_mobilenet_v1_1_metadata_2.tflite"

elif inference_type == 'fp16' or inference_type == 'float16':

#intput_tflite_file = r'F:\model\tflite\ssd-mobilenet\ssd-mobilenetv1-0.75-quant-fp16.tflite'

#intput_tflite_file = r'F:\model\tflite\ssd-mobilenet\ssd-mobilenetv1-1.0-quant-fp16.tflite'

intput_tflite_file = r'F:\model\tflite\ssd-mobilenet\ssd-mobilenetv2-1.0-quant-fp16.tflite'

def get_labels_name(labels_path):

the_labels_name = []

with open(labels_path, 'r') as file:

for line in file:

the_labels_name.append(line.rstrip())#去掉末尾的\n

return the_labels_name

def test_tflite(input_test_tflite_file, new_img, inference_type):

interpreter = tf.lite.Interpreter(model_path=input_test_tflite_file)

tensor_details = interpreter.get_tensor_details()

for i in range(0, len(tensor_details)):

# print("tensor:", i, tensor_details[i])

interpreter.allocate_tensors()

input_details = interpreter.get_input_details()

print("=======================================")

print("input :", str(input_details))

output_details = interpreter.get_output_details()

print("ouput :", str(output_details))

print("=======================================")

# new_img = np.expand_dims(new_img, axis=0)#提升维度

if inference_type == 'int8' or inference_type == 'uint8':

new_img = new_img.astype('uint8') # 类型也要满足要求

elif inference_type == 'fp16' or inference_type == 'float16':

new_img = new_img.astype('float32')

interpreter.set_tensor(input_details[0]['index'], new_img)

# 注意注意,我要调用模型了

interpreter.invoke()

detection_boxes = interpreter.get_tensor(output_details[0]['index'])

detection_classes = interpreter.get_tensor(output_details[1]['index'])

detection_scores = interpreter.get_tensor(output_details[2]['index'])

num_detections = interpreter.get_tensor(output_details[3]['index'])

print("test_tflite finish!")

return detection_boxes, detection_classes, detection_scores, num_detections

labels_name = get_labels_name(labels_path)

frame = cv2.imread(image)

image_w = frame.shape[1]

image_h = frame.shape[0]

if inference_type == 'int8' or inference_type == 'uint8':

input = cv2.dnn.blobFromImage(frame, 1, (network_w, network_h), [0, 0, 0], 1)

elif inference_type == 'fp16' or inference_type == 'float16':

input = cv2.dnn.blobFromImage(frame, 2.0 / 255.0, (network_w, network_h), [127.5, 127.5, 127.5], 1)

input = input.transpose((0,2,3,1))#维度互换

detection_boxes, detection_classes, detection_scores, num_detections = test_tflite(intput_tflite_file, input, inference_type)

for i in range(int(num_detections[0])):

ymin, xmin, ymax, xmax = detection_boxes[0][i]

the_score = detection_scores[0][i]

the_label = detection_classes[0][i]

if the_score < det_score:

continue

cv2.rectangle(frame, (int(image_w*xmin),int(image_h*ymin)), (int(image_w*xmax),int(image_h*ymax)), (0,255,0), 2)

cv2.putText(frame, str(int(the_score*100)) + "%", (int(image_w*xmin),int(image_h*ymin) - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.75, (255, 0, 0), 1)

cv2.putText(frame, labels_name[int(the_label)], (int(image_w*xmin) + 50,int(image_h*ymin) - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 255), 1)

cv2.imshow('lzc',frame)

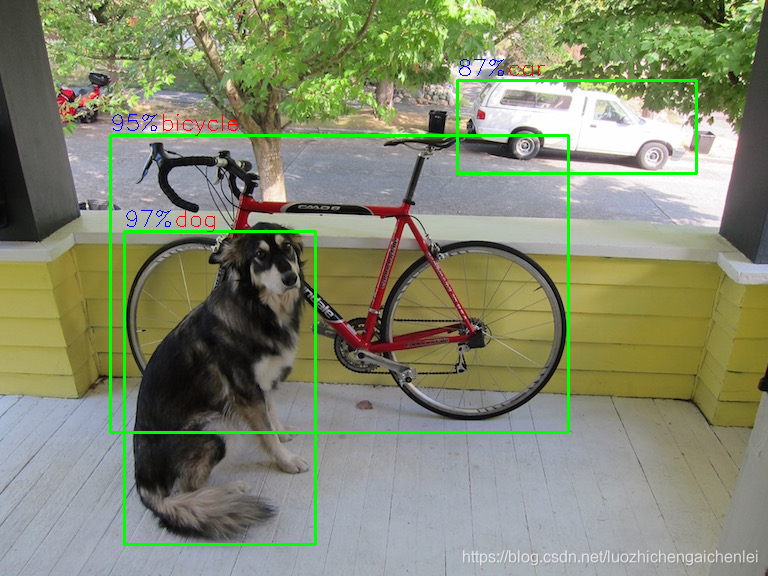

cv2.waitKey(0)(5)贴一张当前的结果图,分析和调试发现输出的阈值很低,然后检测物体最多只有10个,见tflite_graph.pbtxt最后一些行的max_detections;
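Since max_detections (and typically other post-processing settings such as nms_score_threshold) are stored as attributes of the TFLite_Detection_PostProcess node in tflite_graph.pbtxt, they can also be read programmatically instead of scrolling to the end of the file. A minimal regex-based sketch, assuming the usual text-format layout `attr { key: ... value { i/f/b: ... } }`; `postprocess_attrs` is a hypothetical helper and the sample string is illustrative, not copied from the real file:

```python
import re

# Matches one scalar attr in a text-format GraphDef, e.g.
#   attr { key: "max_detections" value { i: 10 } }
# with arbitrary whitespace/newlines between tokens.
_SCALAR_ATTR_RE = re.compile(
    r'attr\s*\{\s*key:\s*"(?P<key>[^"]+)"\s*'
    r'value\s*\{\s*(?P<kind>[ifb]):\s*(?P<val>[^\s}]+)\s*\}\s*\}'
)


def postprocess_attrs(pbtxt_text, op_name="TFLite_Detection_PostProcess"):
    """Return the int/float/bool attrs of the first node whose op is op_name."""
    start = pbtxt_text.find('op: "%s"' % op_name)
    if start < 0:
        raise ValueError("op %r not found" % op_name)
    end = pbtxt_text.find("node {", start)  # stop at the next node, if any
    block = pbtxt_text[start:] if end < 0 else pbtxt_text[start:end]

    attrs = {}
    for m in _SCALAR_ATTR_RE.finditer(block):
        kind, raw = m.group("kind"), m.group("val")
        if kind == "i":
            attrs[m.group("key")] = int(raw)
        elif kind == "f":
            attrs[m.group("key")] = float(raw)
        else:
            attrs[m.group("key")] = raw == "true"
    return attrs


# Illustrative sample only; the real file has many more nodes and attrs.
SAMPLE_PBTXT = '''
node {
  name: "concat"
  op: "ConcatV2"
  attr {
    key: "N"
    value {
      i: 2
    }
  }
}
node {
  name: "TFLite_Detection_PostProcess"
  op: "TFLite_Detection_PostProcess"
  attr {
    key: "max_detections"
    value {
      i: 10
    }
  }
  attr {
    key: "nms_score_threshold"
    value {
      f: 1e-08
    }
  }
  attr {
    key: "use_regular_nms"
    value {
      b: false
    }
  }
}
'''

if __name__ == "__main__":
    # Real usage: postprocess_attrs(open("tflite_graph.pbtxt").read())
    print(postprocess_attrs(SAMPLE_PBTXT))
```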

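3. Each of the three packages contains not only the .pb but also a ckpt, and the ckpt also contains the fake-quantization nodes. In a normal workflow, training produces a ckpt, which is converted to a .pb and then to a .tflite. The ckpt-to-pb script is below; the tf-models version used here is 1.13.0, which can be downloaded from https://github.com/tensorflow/models/tags: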

```bash
tf_model_research_path=/home/lhc/1lzc/tensorflow-bazel/tf-models-1.13.0/models-1.13.0/research
cd $tf_model_research_path

# Compile the Object Detection API protos and put research/ and research/slim on PYTHONPATH
protoc -I=./ --python_out=./ ./object_detection/protos/*.proto
export PYTHONPATH=$PYTHONPATH:$tf_model_research_path
export PYTHONPATH=$PYTHONPATH:$tf_model_research_path/slim

base_path=/home/lhc/Desktop/lzc/ssd_mobilenet_v1_quantized_300x300_coco14_sync_2018_07_18
config_path=$base_path/pipeline.config
ckpt_path=$base_path/model.ckpt
out_path=$base_path/tmp

# Start from an empty output directory (-f/-p avoid errors if it does or does not exist)
rm -rf "$out_path"
mkdir -p "$out_path"

py_file=$tf_model_research_path/object_detection/export_tflite_ssd_graph.py
python $py_file \
  --pipeline_config_path=$config_path \
  --trained_checkpoint_prefix=$ckpt_path \
  --output_directory=$out_path \
  --add_postprocessing_op=true
```

The tflite_graph.pb written to $out_path can then be converted with the tflite_convert script from step 2 (3).
