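Case description:

In a KingbaseES R3 cluster with one primary and multiple standbys, two nodes usually act as the cluster management nodes, and every node can act as a data node. A data node that is not a management node can be removed online. A management node cannot: removing one requires redeploying the cluster. This case tests removing a data node (a non-management node) from a one-primary, two-standby cluster.

Host environment: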

[kingbase@node3 bin]$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.7.248 node1 # cluster management node & data node

192.168.7.249 node2 # data node

192.168.7.243 node3 # cluster management node & data node

Cluster architecture:

Database version:

TEST=# select version();

VERSION

-------------------------------------------------------------------------------------------------------------------------

Kingbase V008R003C002B0270 on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 4.1.2 20080704 (Red Hat 4.1.2-46), 64-bit

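(1 row)

I. Check the cluster status

=Note: before removing a data node, make sure the cluster is healthy, including both the cluster node status and the primary/standby streaming replication status=

# Cluster node status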

[kingbase@node3 bin]$ ./ksql -U SYSTEM -W 123456 TEST -p 9999

ksql (V008R003C002B0270)

Type "help" for help.

TEST=# show pool_nodes;

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.7.243 | 54321 | up | 0.333333 | primary | 0 | false | 0

1 | 192.168.7.248 | 54321 | up | 0.333333 | standby | 0 | true | 0

2 | 192.168.7.249 | 54321 | up | 0.333333 | standby | 0 | false | 0

(3 rows)

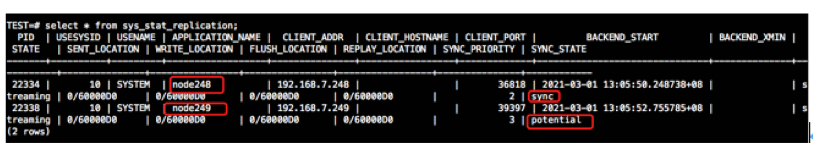
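The pre-check above is done by reading the output. As a minimal sketch, the same check can be scripted by feeding the `show pool_nodes;` output to a small shell function. The name `all_pool_nodes_up` is hypothetical, and the parsing assumes the pipe-separated layout shown above, with `status` in the fourth column.

```bash
# Hypothetical helper: read "show pool_nodes;" output on stdin and succeed only
# if at least one node row exists and every node row has status "up".
# Assumes the pipe-separated layout shown above (status = 4th column).
all_pool_nodes_up() {
  awk -F'|' '
    # node rows are the ones whose first column is a numeric node_id
    $1 ~ /^[ \t]*[0-9]+[ \t]*$/ {
      rows++
      host = $2; status = $4
      gsub(/[ \t]/, "", host); gsub(/[ \t]/, "", status)
      if (status != "up") { print "node " host " is " status; bad++ }
    }
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

For example, with the query output saved to a file (`pool_nodes.txt` is a hypothetical name): `all_pool_nodes_up < pool_nodes.txt && echo "all nodes up"`. An empty input is treated as a failure so that a failed connection is not mistaken for a healthy cluster.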

# Primary/standby streaming replication status

TEST=# select * from sys_stat_replication;

PID | USESYSID | USENAME | APPLICATION_NAME | CLIENT_ADDR | CLIENT_HOSTNAME | CLIENT_PORT | BACKEND_START | BACKEND_XMIN | STATE | SENT_LOCATION | WRITE_LOCATION | FLUSH_LOCATION | REPLAY_LOCATION | SYNC_PRIORITY | SYNC_STATE

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+-----------+---------------+----------------+----------------+-----------------+---------------+------------

12316 | 10 | SYSTEM | node249 | 192.168.7.249 | | 39337 | 2021-03-01 12:59:29.003870+08 | | streaming | 0/50001E8 | 0/50001E8 | 0/50001E8 | 0/50001E8 | 3 | potential

15429 | 10 | SYSTEM | node248 | 192.168.7.248 | | 35885 | 2021-03-01 12:59:38.317605+08 | | streaming | 0/50001E8 | 0/50001E8 | 0/50001E8 | 0/50001E8 | 2 | sync

(2 rows)
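The replication check can be scripted the same way. To keep the parsing simple, the hypothetical `replication_healthy` function below assumes a narrower query than the one above, `select application_name, state, sync_state from sys_stat_replication;`, and assumes ksql prints it in the same pipe-separated layout. Both the query and that layout are assumptions, not part of the original test.

```bash
# Hypothetical helper: read the output of
#   select application_name, state, sync_state from sys_stat_replication;
# on stdin and succeed only if exactly $1 standbys are "streaming" and at
# least one of them is the "sync" standby.
replication_healthy() {
  awk -F'|' -v expected="$1" '
    NF == 3 {
      state = $2; sync_state = $3
      gsub(/[ \t]/, "", state); gsub(/[ \t]/, "", sync_state)
      if (state == "streaming") streaming++
      if (sync_state == "sync") has_sync = 1
    }
    END { if (streaming + 0 == expected + 0 && has_sync) exit 0; exit 1 }
  '
}
```

In this one-primary, two-standby cluster the expected count before the removal is 2 (`replication_healthy 2`), matching the node249 `potential` and node248 `sync` rows above.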

II. Remove the cluster data node

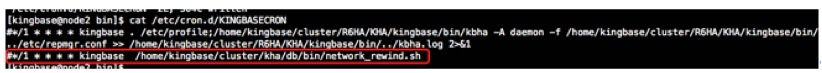

1. Stop the cron jobs on the data node (including the network_rewind.sh scheduled task) by commenting them out

[kingbase@node2 bin]$ cat /etc/cron.d/KINGBASECRON

#*/1 * * * * kingbase . /etc/profile;/home/kingbase/cluster/R6HA/KHA/kingbase/bin/kbha -A daemon -f /home/kingbase/cluster/R6HA/KHA/kingbase/bin/../etc/repmgr.conf >> /home/kingbase/cluster/R6HA/KHA/kingbase/bin/../kbha.log 2>&1

#*/1 * * * * kingbase /home/kingbase/cluster/kha/db/bin/network_rewind.sh
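In this case the entries were commented out by hand. A minimal sketch of the same edit, using a hypothetical `disable_cron_entries` helper, prefixes `#` to every active line that contains one of the given fixed strings and keeps a `.bak` copy. Files under /etc/cron.d are normally owned by root, so it would need to run with root privileges.

```bash
# Hypothetical helper: comment out (prefix "#") every active line in a cron
# file that contains any of the given fixed strings; keeps FILE.bak.
# Patterns are matched literally and must not contain spaces.
disable_cron_entries() {
  local file=$1 tmp rc
  shift
  tmp=$(mktemp) || return 1
  cp -p "$file" "$file.bak" || { rm -f "$tmp"; return 1; }
  awk -v pats="$*" '
    BEGIN { n = split(pats, p, " ") }
    /^[ \t]*#/ { print; next }                # already disabled: keep as-is
    {
      for (i = 1; i <= n; i++)
        if (index($0, p[i]) > 0) { print "#" $0; next }
      print                                   # unrelated line: keep as-is
    }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"  # cat preserves owner and mode of FILE
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Usage for the file shown above: `disable_cron_entries /etc/cron.d/KINGBASECRON kbha network_rewind.sh`. Matching fixed strings with `index()` rather than regular expressions avoids having to escape the dots and slashes in the paths.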

2. Stop the database service on the data node

[kingbase@node2 bin]$ ./sys_ctl stop -D ../data

waiting for server to shut down.... done

server stopped

3. Drop the replication slot on the primary node

TEST=# select * from sys_replication_slots;

SLOT_NAME | PLUGIN | SLOT_TYPE | DATOID | DATABASE | ACTIVE | ACTIVE_PID | XMIN | CATALOG_XMIN | RESTART_LSN | CONFIRMED_FLUSH_LSN

--------------+--------+-----------+--------+----------+--------+------------+------+--------------+-------------+---------------------

slot_node243 | | physical | | | f | | | | |

slot_node248 | | physical | | | t | 29330 | 2076 | | 0/70000D0 |

slot_node249 | | physical | | | f | | 2076 | | 0/60001B0 |

(3 rows)
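Before dropping a slot it is worth confirming that it is really unused: here `slot_node249` shows `ACTIVE = f`, while `slot_node248` is still in use by a standby. The hypothetical helper below reads the `sys_replication_slots` output shown above and prints the drop statement only when the named slot exists and is inactive. It assumes `ACTIVE` is the sixth pipe-separated column, as in this output.

```bash
# Hypothetical helper: read "select * from sys_replication_slots;" output on
# stdin and print the drop statement for slot $1 only if the slot exists and
# its ACTIVE column (6th pipe-separated field, as above) is "f".
drop_slot_sql_if_inactive() {
  awk -F'|' -v slot="$1" -v q="'" '
    {
      name = $1; active = $6
      gsub(/[ \t]/, "", name); gsub(/[ \t]/, "", active)
      if (name == slot) { found = 1; state = active }
    }
    END {
      if (!found)       { print "slot " slot " not found" > "/dev/stderr"; exit 1 }
      if (state != "f") { print "slot " slot " is active, not dropping" > "/dev/stderr"; exit 1 }
      printf "select SYS_DROP_REPLICATION_SLOT(%s%s%s);\n", q, slot, q
    }
  '
}
```

With the listing above saved to a file, `drop_slot_sql_if_inactive slot_node249 < slots.txt` prints the same statement used in the next step, while `slot_node248` is refused because it is active.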

TEST=# select SYS_DROP_REPLICATION_SLOT('slot_node249');

SYS_DROP_REPLICATION_SLOT

---------------------------

(1 row)

TEST=# select * from sys_replication_slots;

SLOT_NAME | PLUGIN | SLOT_TYPE | DATOID | DATABASE | ACTIVE | ACTIVE_PID | XMIN | CATALOG_XMIN | RESTART_LSN | CONFIRMED_FLUSH_LSN

--------------+--------+-----------+--------+----------+--------+------------+------+--------------+-------------+---------------------

slot_node243 | | physical | | | f | | | | |

slot_node248 | | physical | | | t | 29330 | 2076 | | 0/70000D0 |

(2 rows)

4. Edit the configuration files (on all management nodes)

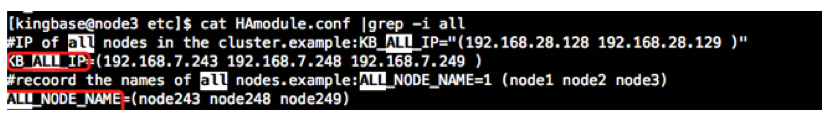

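1) HAmodule.conf (under both db/etc and kingbasecluster/etc)

=As shown below, these settings list the host names and IPs of all cluster nodes; remove the entries for the node being deleted=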

[kingbase@node3 etc]$ cat HAmodule.conf |grep -i all

#IP of all nodes in the cluster.example:KB_ALL_IP="(192.168.28.128 192.168.28.129 )"

KB_ALL_IP=(192.168.7.243 192.168.7.248 192.168.7.249 )

#recoord the names of all nodes.example:ALL_NODE_NAME=1 (node1 node2 node3)

ALL_NODE_NAME=(node243 node248 node249)

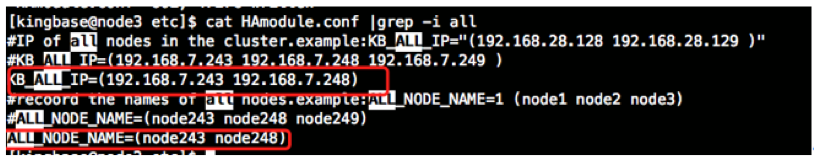

=As shown in the figure below, the host name and IP of the node being removed have been cleared from the configuration=

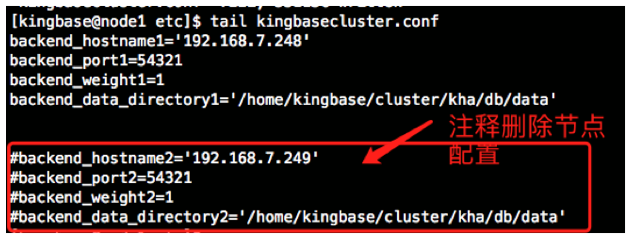
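The HAmodule.conf edit can also be scripted. The hypothetical `remove_node_from_hamodule` below rewrites only the `KB_ALL_IP=(...)` and `ALL_NODE_NAME=(...)` lines, dropping the given IP and node name and leaving every other line untouched. It assumes each array is written on a single line, as in the output above, and it would have to be run on both copies (db/etc and kingbasecluster/etc) on every management node.

```bash
# Hypothetical helper: drop one IP from KB_ALL_IP=(...) and one host name from
# ALL_NODE_NAME=(...) in an HAmodule.conf file; keeps FILE.bak.
# Assumes each array is written on a single line, as in the output above.
remove_node_from_hamodule() {
  local file=$1 ip=$2 name=$3 tmp rc
  tmp=$(mktemp) || return 1
  cp -p "$file" "$file.bak" || { rm -f "$tmp"; return 1; }
  awk -v ip="$ip" -v name="$name" '
    # Rebuild "PREFIX(a b c)" without the element equal to victim.
    function drop(line, prefix, victim,    body, n, parts, i, out) {
      body = substr(line, length(prefix) + 1)  # text after "KEY=("
      sub(/\).*$/, "", body)                   # cut at the closing ")"
      n = split(body, parts, /[ \t]+/)
      out = ""
      for (i = 1; i <= n; i++)
        if (parts[i] != "" && parts[i] != victim)
          out = (out == "") ? parts[i] : (out " " parts[i])
      return prefix out ")"
    }
    /^KB_ALL_IP=\(/     { print drop($0, "KB_ALL_IP=(", ip); next }
    /^ALL_NODE_NAME=\(/ { print drop($0, "ALL_NODE_NAME=(", name); next }
    { print }                                  # every other line unchanged
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For this case: `remove_node_from_hamodule HAmodule.conf 192.168.7.249 node249`. Comment lines such as the `#IP of all nodes ...` example are skipped because the patterns are anchored at the start of the line.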

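2) Edit the kingbasecluster configuration file (kingbasecluster.conf)

=As shown below, comment out the removed node's entries in the configuration file=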

[kingbase@node1 etc]$ tail kingbasecluster.conf

backend_hostname1='192.168.7.248'

backend_port1=54321

backend_weight1=1

backend_data_directory1='/home/kingbase/cluster/kha/db/data'

# node249 entries commented out

#backend_hostname2='192.168.7.249'

#backend_port2=54321

#backend_weight2=1

#backend_data_directory2='/home/kingbase/cluster/kha/db/data'
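Likewise, a hypothetical `comment_out_backend` helper can comment out the four `backend_*N` keys shown above for one node index. It assumes GNU sed (`-i` and `-E`) and only touches the keys listed in this case.

```bash
# Hypothetical helper: comment out backend_hostnameN, backend_portN,
# backend_weightN and backend_data_directoryN for node index N; keeps FILE.bak.
# Requiring "=" right after the index keeps N=1 from also matching index 10.
comment_out_backend() {
  local file=$1 idx=$2
  cp -p "$file" "$file.bak" || return 1
  sed -i -E "s/^(backend_(hostname|port|weight|data_directory)${idx}[[:space:]]*=)/#\1/" "$file"
}
```

For node249, which is backend index 2 above: `comment_out_backend kingbasecluster.conf 2`. Commenting out rather than deleting keeps the old values in the file, as the case itself does.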

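III. Restart the cluster and test

=== Note: in a production environment the cluster does not need to be restarted immediately; restart it at a suitable time ===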

[kingbase@node3 bin]$ ./kingbase_monitor.sh restart

-----------------------------------------------------------------------

2021-03-01 13:26:44 KingbaseES automation beging...

2021-03-01 13:26:44 stop kingbasecluster [192.168.7.243] ...

remove status file /home/kingbase/cluster/kha/run/kingbasecluster/kingbasecluster_status

DEL VIP NOW AT 2021-03-01 13:26:49 ON enp0s3

No VIP on my dev, nothing to do.

2021-03-01 13:26:50 Done...

2021-03-01 13:26:50 stop kingbasecluster [192.168.7.248] ...

remove status file /home/kingbase/cluster/kha/run/kingbasecluster/kingbasecluster_status

DEL VIP NOW AT 2021-03-01 13:09:36 ON enp0s3

No VIP on my dev, nothing to do.

2021-03-01 13:26:55 Done...

2021-03-01 13:26:55 stop kingbase [192.168.7.243] ...

set /home/kingbase/cluster/kha/db/data down now...

2021-03-01 13:27:01 Done...

2021-03-01 13:27:02 Del kingbase VIP [192.168.7.245/24] ...

DEL VIP NOW AT 2021-03-01 13:27:03 ON enp0s3

execute: [/sbin/ip addr del 192.168.7.245/24 dev enp0s3]

Oprate del ip cmd end.

2021-03-01 13:27:03 Done...

2021-03-01 13:27:03 stop kingbase [192.168.7.248] ...

set /home/kingbase/cluster/kha/db/data down now...

2021-03-01 13:27:06 Done...

2021-03-01 13:27:07 Del kingbase VIP [192.168.7.245/24] ...

DEL VIP NOW AT 2021-03-01 13:09:47 ON enp0s3

No VIP on my dev, nothing to do.

2021-03-01 13:27:07 Done...

......................

all stop..

ping trust ip 192.168.7.1 success ping times :[3], success times:[2]

ping trust ip 192.168.7.1 success ping times :[3], success times:[2]

start crontab kingbase position : [3]

Redirecting to /bin/systemctl restart crond.service

ADD VIP NOW AT 2021-03-01 13:27:17 ON enp0s3

execute: [/sbin/ip addr add 192.168.7.245/24 dev enp0s3 label enp0s3:2]

execute: /home/kingbase/cluster/kha/db/bin/arping -U 192.168.7.245 -I enp0s3 -w 1

ARPING 192.168.7.245 from 192.168.7.245 enp0s3

Sent 1 probes (1 broadcast(s))

Received 0 response(s)

start crontab kingbase position : [2]

Redirecting to /bin/systemctl restart crond.service

ping vip 192.168.7.245 success ping times :[3], success times:[3]

ping vip 192.168.7.245 success ping times :[3], success times:[2]

now,there is a synchronous standby.

wait kingbase recovery 5 sec...

start crontab kingbasecluster line number: [6]

Redirecting to /bin/systemctl restart crond.service

start crontab kingbasecluster line number: [3]

Redirecting to /bin/systemctl restart crond.service

......................

all started..

...

now we check again

=======================================================================

| ip | program| [status]

[ 192.168.7.243]| [kingbasecluster]| [active]

[ 192.168.7.248]| [kingbasecluster]| [active]

[ 192.168.7.243]| [kingbase]| [active]

[ 192.168.7.248]| [kingbase]| [active]

=======================================================================

IV. Verify the cluster status

1. Check the streaming replication status

# Primary/standby streaming replication status

[kingbase@node3 bin]$ ./ksql -U SYSTEM -W 123456 TEST

ksql (V008R003C002B0270)

Type "help" for help.

TEST=# select * from sys_stat_replication;

PID | USESYSID | USENAME | APPLICATION_NAME | CLIENT_ADDR | CLIENT_HOSTNAME | CLIENT_PORT | BACKEND_START | BACKEND_XMIN | STATE | SENT_LOCATION | WRITE_LOCATION | FLUSH_LOCATION | REPLAY_LOCATION | SYNC_PRIORITY | SYNC_STATE

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+-----------+---------------+----------------+----------------+-----------------+---------------+------------

29330 | 10 | SYSTEM | node248 | 192.168.7.248 | | 39484 | 2021-03-01 13:27:19.649897+08 | | streaming | 0/70000D0 | 0/70000D0 | 0/70000D0 | 0/70000D0 | 2 | sync

(1 row)

# Replication slots

TEST=# select * from sys_replication_slots;

SLOT_NAME | PLUGIN | SLOT_TYPE | DATOID | DATABASE | ACTIVE | ACTIVE_PID | XMIN | CATALOG_XMIN | RESTART_LSN | CONFIRMED_FLUSH_LSN

--------------+--------+-----------+--------+----------+--------+------------+------+--------------+-------------+---------------------

slot_node243 | | physical | | | f | | | | |

slot_node248 | | physical | | | t | 29330 | 2076 | | 0/70000D0 |

(2 rows)

2. Check the cluster node status

[kingbase@node3 bin]$ ./ksql -U SYSTEM -W 123456 TEST -p 9999

ksql (V008R003C002B0270)

Type "help" for help.

TEST=# show pool_nodes;

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.7.243 | 54321 | up | 0.500000 | primary | 0 | false | 0

1 | 192.168.7.248 | 54321 | up | 0.500000 | standby | 0 | true | 0

(2 rows)
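To confirm the removal rather than only reading the table, a hypothetical `node_removed_from_pool` function can check that the removed host no longer appears in the `show pool_nodes;` output and that the expected number of node rows remain. It assumes the same layout as above, with the host name in the second column.

```bash
# Hypothetical helper: read "show pool_nodes;" output on stdin and succeed only
# if host $1 is absent and exactly $2 node rows remain.
node_removed_from_pool() {
  awk -F'|' -v ip="$1" -v expected="$2" '
    # node rows are the ones whose first column is a numeric node_id
    $1 ~ /^[ \t]*[0-9]+[ \t]*$/ {
      rows++
      host = $2; gsub(/[ \t]/, "", host)
      if (host == ip) seen = 1
    }
    END { if (!seen && rows + 0 == expected + 0) exit 0; exit 1 }
  '
}
```

For the output above: `node_removed_from_pool 192.168.7.249 2 < pool_nodes.txt` (the file name is hypothetical) should succeed.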

TEST=# select * from sys_stat_replication;

PID | USESYSID | USENAME | APPLICATION_NAME | CLIENT_ADDR | CLIENT_HOSTNAME | CLIENT_PORT | BACKEND_START | BACKEND_XMIN | STATE | SENT_LOCATION | WRITE_LOCATION | FLUSH_LOCATION | REPLAY_LOCATION | SYNC_PRIORITY | SYNC_STATE

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+-----------+---------------+----------------+----------------+-----------------+---------------+------------

29330 | 10 | SYSTEM | node248 | 192.168.7.248 | | 39484 | 2021-03-01 13:27:19.649897+08 | | streaming | 0/70001B0 | 0/70001B0 | 0/70001B0 | 0/70001B0 | 2 | sync

(1 row)

V. Delete the installation directory on the data node

[kingbase@node2 cluster]$ rm -rf kha/

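VI. Summary

1. Before removing a cluster data node, make sure the whole cluster (cluster nodes and streaming replication) is healthy.

2. Comment out the data node's cron jobs.

3. Stop the database service on the data node.

4. On the primary node, drop the data node's replication slot.

5. Edit the configuration files (HAmodule.conf and kingbasecluster.conf) on all management nodes.

6. Restart the cluster (optional).

7. Check the cluster status.

8. Delete the installation directory on the data node.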
