文章目录

1. Lucas Kanade光流法原理

1.1 Lucas Kanade光流定义

光流是空间运动目标在观察成像平面上像素运动的瞬时速度。在计算机视觉中,光流扮演着重要角色,在目标分割、识别、跟踪、机器人导航以及形状信息恢复等都有着非常重要的应用。

在 1981 1981 1981年, Bruce D. Lucas \text{Bruce D. Lucas} Bruce D. Lucas及 Takeo Kanade \text{Takeo Kanade} Takeo Kanade提出了 Lucas Kanade \text{Lucas Kanade} Lucas Kanade( LK \text{LK} LK)算法用于光流估计,在计算机视觉中被广泛的使用。

LK \text{LK} LK算法利用相邻两帧图像的差分来估计光流,该算法基于以下三个假设进行公式推导:

- 亮度恒定:场景中目标被跟踪的部分亮度保持不变。

- 时间持续性(微小移动):目标运动相对于帧率是缓慢、连贯的。这意味着时间的变化不会引起像素位置的剧烈变化,这样像素点的灰度值才能对位置求对应的偏导数。

- 空间一致性:相邻像素点具有相同的运动。

考察

t

t

t时刻,位于

(

x

,

y

)

(x,y)

(x,y)处的像素点,其灰度可写为:

I

(

x

,

y

,

t

)

I(x,y,t)

I(x,y,t)

我们假设

t

+

d

t

t+\text{d}t

t+dt时刻,该像素点运动到了

(

x

+

d

x

,

y

+

d

y

)

(x+\text{d}x,y+\text{d}y)

(x+dx,y+dy)处。由于亮度恒定的假设,我们有:

I

(

x

+

d

x

,

y

+

d

y

,

t

+

d

t

)

=

I

(

x

,

y

,

t

)

I(x+\text{d}x,y+\text{d}y,t+\text{d}t)=I(x,y,t)

I(x+dx,y+dy,t+dt)=I(x,y,t)

对左边进行泰勒展开,保留一阶项,可得:

I

(

x

+

d

x

,

y

+

d

y

,

t

+

d

t

)

≈

I

(

x

,

y

,

t

)

+

∂

I

∂

x

d

x

+

∂

I

∂

y

d

y

+

∂

I

∂

t

d

t

I(x+\text{d}x,y+\text{d}y,t+\text{d}t)\approx I(x,y,t)+\frac{\partial I}{\partial x}\text{d}x+\frac{\partial I}{\partial y}\text{d}y+\frac{\partial I}{\partial t}\text{d}t

I(x+dx,y+dy,t+dt)≈I(x,y,t)+∂x∂Idx+∂y∂Idy+∂t∂Idt

根据亮度恒定假设,我们有:

∂

I

∂

x

d

x

+

∂

I

∂

y

d

y

+

∂

I

∂

t

d

t

=

0

\frac{\partial I}{\partial x}\text{d}x+\frac{\partial I}{\partial y}\text{d}y+\frac{\partial I}{\partial t}\text{d}t=0

∂x∂Idx+∂y∂Idy+∂t∂Idt=0

两边除以

d

t

\text{d}t

dt,可得:

∂

I

∂

x

d

x

d

t

+

∂

I

∂

y

d

y

d

t

=

−

∂

I

∂

t

\frac{\partial I}{\partial x}\frac{\text{d}x}{\text{d}t}+\frac{\partial I}{\partial y}\frac{\text{d}y}{\text{d}t}=-\frac{\partial I}{\partial t}

∂x∂Idtdx+∂y∂Idtdy=−∂t∂I

其中

d

x

/

d

t

\text{d}x/\text{d}t

dx/dt为像素在

x

x

x轴上的运动速度,而

d

y

/

d

t

\text{d}y/\text{d}t

dy/dt为

y

y

y轴上的速度,把它们记为

u

u

u,

v

v

v。同时,

∂

I

/

∂

x

\partial I/\partial x

∂I/∂x为图像在该点处

x

x

x方向的梯度,另一项则是在

y

y

y方向的梯度,记为

I

x

I_{x}

Ix,

I

y

I_{y}

Iy。同理,把图像灰度对时间的偏导记为

I

t

I_{t}

It,写成矩阵形式,有:

[

I

x

I

y

]

[

u

v

]

=

−

I

t

\begin{bmatrix} I_{x}&I_{y} \end{bmatrix} \begin{bmatrix} u\\ v \end{bmatrix} =-I_{t}

[IxIy][uv]=−It

我们想计算的是像素的运动

u

u

u,

v

v

v,但是该式是带有两个变量的一次方程,仅凭它无法计算出

u

u

u,

v

v

v。因此,必须引入额外的约束来计算

u

u

u,

v

v

v。在

LK

\text{LK}

LK光流中,我们假设相邻像素点具有相同的运动。

考察一个大小为

(

2

w

+

1

)

×

(

2

w

+

1

)

(2w+1)\times (2w+1)

(2w+1)×(2w+1)的窗口,它含有

n

=

(

2

w

+

1

)

2

n=(2w+1)^2

n=(2w+1)2数量的像素点。该窗口内像素点具有同样的运动,因此我们共有

n

n

n个方程:

[

I

x

i

I

y

i

]

[

u

v

]

=

−

I

t

i

,

i

=

1

,

.

.

.

,

n

\begin{bmatrix} I_{xi}&I_{yi} \end{bmatrix} \begin{bmatrix} u\\ v \end{bmatrix} =-I_{ti},\ i=1,...,n

[IxiIyi][uv]=−Iti, i=1,...,n

令:

A

=

[

I

x

1

I

y

1

⋮

I

x

n

I

y

n

]

,

b

=

[

I

t

1

⋮

I

t

k

]

\boldsymbol{A}=\begin{bmatrix} I_{x1}&I_{y1}\\ \vdots\\ I_{xn}&I_{yn} \end{bmatrix},\ \boldsymbol{b}=\begin{bmatrix}I_{t1}\\\vdots\\I_{tk}\end{bmatrix}

A=

Ix1⋮IxnIy1Iyn

, b=

It1⋮Itk

于是有:

A

[

u

v

]

=

−

b

\boldsymbol{A}\begin{bmatrix}u\\v\end{bmatrix}=-\boldsymbol{b}

A[uv]=−b

这是一个关于

u

u

u,

v

v

v的超定线性方程,传统解法是求最小二乘解:

[

u

v

]

∗

=

−

(

A

T

A

)

−

1

A

T

b

\begin{bmatrix}u\\v\end{bmatrix}^*=-(\boldsymbol{A}^T\boldsymbol{A})^{-1}\boldsymbol{A}^T\boldsymbol{b}

[uv]∗=−(ATA)−1ATb

整理上式可得:

[

u

v

]

∗

=

[

∑

i

=

1

n

I

x

i

2

∑

i

=

1

n

I

x

i

I

y

i

∑

i

=

1

n

I

x

i

I

y

i

∑

i

=

1

n

I

y

i

2

]

−

1

[

−

∑

i

=

1

n

I

x

i

I

t

i

−

∑

i

=

1

n

I

y

i

I

t

i

]

\begin{bmatrix}u\\v\end{bmatrix}^*=\begin{bmatrix}\sum_{i=1}^nI^2_{xi}&\sum_{i=1}^nI_{xi}I_{yi}\\\sum_{i=1}^nI_{xi}I_{yi}&\sum_{i=1}^nI^2_{yi}\end{bmatrix}^{-1}\begin{bmatrix}-\sum_{i=1}^nI_{xi}I_{ti}\\-\sum_{i=1}^nI_{yi}I_{ti}\end{bmatrix}

[uv]∗=[∑i=1nIxi2∑i=1nIxiIyi∑i=1nIxiIyi∑i=1nIyi2]−1[−∑i=1nIxiIti−∑i=1nIyiIti]

最终求得的

u

u

u,

v

v

v就是

LK

\text{LK}

LK算法所对应的光流。

1.2 Lucas Kanade光流迭代求解

我们使用符号

I

I

I表示前一帧图像,符号

J

J

J表示后一帧图像。假设图像

I

I

I上的像素点

u

=

[

u

x

u

y

]

T

\boldsymbol{u}=\begin{bmatrix}u_x&u_y\end{bmatrix}^T

u=[uxuy]T经过

d

t

\text{d}t

dt的时间移动到了图像

J

J

J上的像素点

v

=

u

+

d

=

[

v

x

+

d

x

v

y

+

d

y

]

T

\boldsymbol{v}=\boldsymbol{u}+\boldsymbol{d}=\begin{bmatrix}v_x+d_x&v_y+d_y\end{bmatrix}^T

v=u+d=[vx+dxvy+dy]T处。位移

d

=

[

d

x

d

y

]

T

\boldsymbol{d}=\begin{bmatrix}d_x&d_y\end{bmatrix}^T

d=[dxdy]T即待求解的光流。我们在以

u

\boldsymbol{u}

u为中心的

(

2

w

+

1

)

×

(

2

w

+

1

)

(2w+1)\times(2w+1)

(2w+1)×(2w+1)图像区域内,通过最小化灰度匹配误差平方和的方式来确定

d

\boldsymbol{d}

d。因此,损失函数可写为:

ε

(

d

)

=

ε

(

d

x

,

d

y

)

=

∑

x

=

u

x

−

w

x

=

u

x

+

w

∑

y

=

u

y

−

w

y

=

u

y

+

w

(

I

(

x

,

y

)

−

J

(

x

+

d

x

,

y

+

d

y

)

)

2

\varepsilon(\boldsymbol{d})=\varepsilon(d_x,d_y)=\sum_{x=u_x-w}^{x=u_x+w}\sum_{y=u_y-w}^{y=u_y+w}\left(I(x,y)-J(x+d_x,y+d_y)\right)^2

ε(d)=ε(dx,dy)=x=ux−w∑x=ux+wy=uy−w∑y=uy+w(I(x,y)−J(x+dx,y+dy))2

关于光流

d

\boldsymbol{d}

d的求解,我们采用迭代方式来进行。假设上一轮迭代所求解的光流为

d

′

\boldsymbol{d}'

d′,残差为

Δ

d

=

d

−

d

′

\Delta\boldsymbol{d}=\boldsymbol{d}-\boldsymbol{d}'

Δd=d−d′,那么我们对损失函数求导有:

∂

ε

∂

d

=

−

2

∑

x

=

u

x

−

w

x

=

u

x

+

w

∑

y

=

u

y

−

w

y

=

u

y

+

w

(

I

(

x

,

y

)

−

J

(

x

+

d

x

′

+

Δ

d

x

,

y

+

d

y

′

+

Δ

d

y

)

)

[

J

x

J

y

]

\frac{\partial\varepsilon}{\partial\boldsymbol{d}}=-2\sum_{x=u_x-w}^{x=u_x+w}\sum_{y=u_y-w}^{y=u_y+w}\left(I(x,y)-J(x+d'_x+\Delta d_x,y+d'_y+\Delta d_y)\right)\begin{bmatrix}J_x\\J_y\end{bmatrix}

∂d∂ε=−2x=ux−w∑x=ux+wy=uy−w∑y=uy+w(I(x,y)−J(x+dx′+Δdx,y+dy′+Δdy))[JxJy]

其中 [ J x J y ] T \begin{bmatrix}J_x&J_y\end{bmatrix}^T [JxJy]T表示图像 J J J在像素点 ( x + d x , y + d y ) (x+d_x,y+d_y) (x+dx,y+dy)处的梯度,根据亮度恒定的假设,该梯度等于图像 I I I在像素点 ( x , y ) (x,y) (x,y)处的梯度,即 [ J x J y ] T = [ I x I y ] T \begin{bmatrix}J_x&J_y\end{bmatrix}^T=\begin{bmatrix}I_x&I_y\end{bmatrix}^T [JxJy]T=[IxIy]T。

将图像

J

J

J在像素点

(

x

+

d

x

′

,

y

+

d

y

′

)

(x+d'_x,y+d'_y)

(x+dx′,y+dy′)处进行泰勒展开,保留一阶项可得:

J

(

x

+

d

x

′

+

Δ

d

x

,

y

+

d

y

′

+

Δ

d

y

)

≈

J

(

x

+

d

x

′

,

y

+

d

y

′

)

+

[

Δ

d

x

Δ

d

y

]

[

J

x

J

y

]

J(x+d'_x+\Delta d_x,y+d'_y+\Delta d_y)\approx J(x+d'_x,y+d'_y)+\begin{bmatrix}\Delta d_x&\Delta d_y\end{bmatrix}\begin{bmatrix}J_x\\J_y\end{bmatrix}

J(x+dx′+Δdx,y+dy′+Δdy)≈J(x+dx′,y+dy′)+[ΔdxΔdy][JxJy]

其中 [ J x J y ] T \begin{bmatrix}J_x&J_y\end{bmatrix}^T [JxJy]T表示图像 J J J在像素点 ( x + d x ′ , y + d y ′ ) (x+d'_x,y+d'_y) (x+dx′,y+dy′)处的梯度,同理根据亮度恒定的假设,可以使用 [ I x I y ] T \begin{bmatrix}I_x&I_y\end{bmatrix}^T [IxIy]T近似 [ J x J y ] T \begin{bmatrix}J_x&J_y\end{bmatrix}^T [JxJy]T。

将上式带入损失函数导数可得:

∂

ε

∂

d

=

−

2

∑

x

=

u

x

−

w

x

=

u

x

+

w

∑

y

=

u

y

−

w

y

=

u

y

+

w

(

I

(

x

,

y

)

−

J

(

x

+

d

x

′

,

y

+

d

y

′

)

−

[

Δ

d

x

Δ

d

y

]

[

I

x

I

y

]

)

[

I

x

I

y

]

\frac{\partial\varepsilon}{\partial\boldsymbol{d}}=-2\sum_{x=u_x-w}^{x=u_x+w}\sum_{y=u_y-w}^{y=u_y+w}\left(I(x,y)-J(x+d'_x,y+d'_y)-\begin{bmatrix}\Delta d_x&\Delta d_y\end{bmatrix}\begin{bmatrix}I_x\\I_y\end{bmatrix}\right)\begin{bmatrix}I_x\\I_y\end{bmatrix}

∂d∂ε=−2x=ux−w∑x=ux+wy=uy−w∑y=uy+w(I(x,y)−J(x+dx′,y+dy′)−[ΔdxΔdy][IxIy])[IxIy]

令

δ

I

=

I

(

x

,

y

)

−

J

(

x

+

d

x

′

,

y

+

d

y

′

)

\delta I=I(x,y)-J(x+d'_x,y+d'_y)

δI=I(x,y)−J(x+dx′,y+dy′),整理上式有:

∂

ε

∂

d

=

−

2

∑

x

=

u

x

−

w

x

=

u

x

+

w

∑

y

=

u

y

−

w

y

=

u

y

+

w

(

[

I

x

2

I

x

I

y

I

x

I

y

I

y

2

]

Δ

d

−

[

δ

I

I

x

δ

I

I

y

]

)

\frac{\partial\varepsilon}{\partial\boldsymbol{d}}=-2\sum_{x=u_x-w}^{x=u_x+w}\sum_{y=u_y-w}^{y=u_y+w}\left(\begin{bmatrix}I^2_x&I_xI_y\\I_xI_y&I^2_y\end{bmatrix}\Delta\boldsymbol{d}-\begin{bmatrix}\delta II_x\\\delta II_y\end{bmatrix}\right)

∂d∂ε=−2x=ux−w∑x=ux+wy=uy−w∑y=uy+w([Ix2IxIyIxIyIy2]Δd−[δIIxδIIy])

取:

A

=

∑

x

=

u

x

−

w

x

=

u

x

+

w

∑

y

=

u

y

−

w

y

=

u

y

+

w

[

I

x

2

I

x

I

y

I

x

I

y

I

y

2

]

,

b

=

∑

x

=

u

x

−

w

x

=

u

x

+

w

∑

y

=

u

y

−

w

y

=

u

y

+

w

[

δ

I

I

x

δ

I

I

y

]

\boldsymbol{A}=\sum_{x=u_x-w}^{x=u_x+w}\sum_{y=u_y-w}^{y=u_y+w}\begin{bmatrix}I^2_x&I_xI_y\\I_xI_y&I^2_y\end{bmatrix},\boldsymbol{b}=\sum_{x=u_x-w}^{x=u_x+w}\sum_{y=u_y-w}^{y=u_y+w}\begin{bmatrix}\delta II_x\\\delta II_y\end{bmatrix}

A=x=ux−w∑x=ux+wy=uy−w∑y=uy+w[Ix2IxIyIxIyIy2],b=x=ux−w∑x=ux+wy=uy−w∑y=uy+w[δIIxδIIy]

由于损失函数在最优解

d

\boldsymbol{d}

d处导数为

0

\boldsymbol{0}

0,于是有:

A

Δ

d

−

b

=

0

\boldsymbol{A}\Delta\boldsymbol{d}-\boldsymbol{b}=\boldsymbol{0}

AΔd−b=0

那么残差可使用下式计算:

Δ

d

∗

=

A

−

1

b

\Delta\boldsymbol{d}^*=\boldsymbol{A}^{-1}\boldsymbol{b}

Δd∗=A−1b

根据计算出的残差,我们可以更新所求解的光流,并进行下一轮迭代。

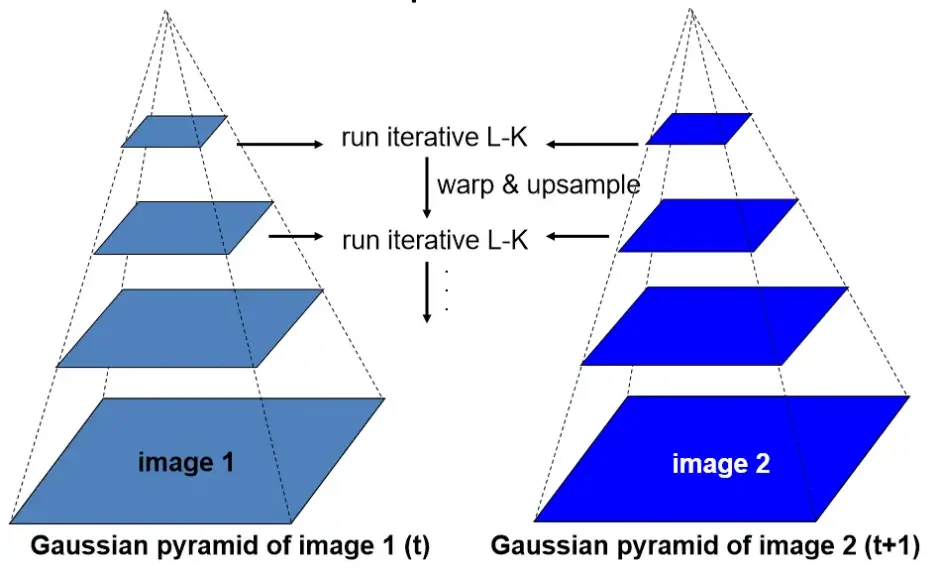

2. 金字塔Lucas Kanade光流法原理

LK \text{LK} LK光流法假设目标仅微小移动,如果目标剧烈运动,假设就不成立,这会使得最终求出的光流值存在较大误差。为了缓解这种情况,我们可以在计算中缩小图像尺寸,从而缩小像素点移动,这样就可以使得 LK \text{LK} LK光流法应用于目标剧烈运动的场景。

Bouguet \text{Bouguet} Bouguet提出了一种利用图像金子塔对原图逐层分解,并至上而下修正光流的算法。

首先,需要对前后两帧图像建立高斯金字塔,最低分辨率图像在顶层(编号 L m L_m Lm),原图在底层(编号 0 0 0)。

然后,我们使用迭代算法估计最顶层图像的光流:

g

L

m

+

d

L

m

\boldsymbol{g}^{L_m}+\boldsymbol{d}^{L_m}

gLm+dLm

其中

g

L

m

\boldsymbol{g}^{L_m}

gLm表示顶层光流估计的初值,默认为

0

\boldsymbol{0}

0,

d

L

m

\boldsymbol{d}^{L_m}

dLm表示顶层光流估计的残差,残差采用迭代的方式进行计算。

接着,我们将上层光流估计结果作为初值传递给下一层图像:

g

L

−

1

=

2

(

g

L

+

d

L

)

\boldsymbol{g}^{L-1}=2(\boldsymbol{g}^{L}+\boldsymbol{d}^{L})

gL−1=2(gL+dL)

最后,沿着金字塔至上而下,重复进行光流估计,直至到达金字塔底层:

d

=

g

0

+

d

0

\boldsymbol{d}=\boldsymbol{g}^{0}+\boldsymbol{d}^{0}

d=g0+d0

d

\boldsymbol{d}

d即由金字塔传递计算的最终光流。

通过这种搜索方式就可以剧烈运动目标的跟踪问题。

3. 金字塔光流算法代码解析

在 OpenCV \text{OpenCV} OpenCV中,使用函数 cv::calcOpticalFlowPyrLK \text{cv::calcOpticalFlowPyrLK} cv::calcOpticalFlowPyrLK实现金字塔光流计算以及特征追踪:

void cv::calcOpticalFlowPyrLK(

InputArray _prevImg, // _prevImg:前一帧图像(输入)

InputArray _nextImg, // _nextImg:后一帧图像(输入)

InputArray _prevPts, // _prevPts:前一帧特征点(输入)

InputOutputArray _nextPts, // _nextPts:后一帧特征点(输出)

OutputArray _status, // _status:状态矢量(输出)

// true:表示对应特征点存在有效光流,特征被追踪

// false:表示对应特征点不存在有效光流,特征未被追踪

OutputArray _err, // _err:误差矢量(输出),观察窗平均灰度匹配误差或最小特征根

Size winSize, // winSize:LK光流观察窗大小

int maxLevel, // maxLevel:金字塔最大高度

TermCriteria criteria, // TermCriteria criteria:迭代终止条件:达到最大迭代次数(COUNT)或达到最小迭代阈值(ESP)

// 默认最大迭代次数:30

// 默认最小迭代阈值:0.01

int flags, // flags:操作标志

// OPTFLOW_USE_INITIAL_FLOW:使用_nextPts作为光流

// OPTFLOW_LK_GET_MIN_EIGENVALS:使用最小特征值作为误差

double minEigThreshold // minEigThreshold:最小特征值阈值

)

在调用上述函数时,程序会调用 SparsePyrLKOpticalFlowImpl::calc \text{SparsePyrLKOpticalFlowImpl::calc} SparsePyrLKOpticalFlowImpl::calc建立图像金字塔并进行特征追踪:

void SparsePyrLKOpticalFlowImpl::calc( InputArray _prevImg, InputArray _nextImg,

InputArray _prevPts, InputOutputArray _nextPts,

OutputArray _status, OutputArray _err)

// _prevImg:前一帧图像(输入)

// _nextImg:后一帧图像(输入)

// _prevPts:前一帧特征点(输入)

// _nextPts:后一帧特征点(输出)

// _status:状态矢量(输出)

// _err:误差矢量(输出)

{

CV_INSTRUMENT_REGION();

CV_OCL_RUN(ocl::isOpenCLActivated() &&

(_prevImg.isUMat() || _nextImg.isUMat()) &&

ocl::Image2D::isFormatSupported(CV_32F, 1, false),

ocl_calcOpticalFlowPyrLK(_prevImg, _nextImg, _prevPts, _nextPts, _status, _err))

// OpenCL 加速

// Disabled due to bad accuracy

CV_OVX_RUN(false,

openvx_pyrlk(_prevImg, _nextImg, _prevPts, _nextPts, _status, _err))

// OpenVX 加速

Mat prevPtsMat = _prevPts.getMat();

const int derivDepth = DataType<cv::detail::deriv_type>::depth;

// 数据类型深度( typedef short deriv_type )

CV_Assert( maxLevel >= 0 && winSize.width > 2 && winSize.height > 2 );

// 参数检查

int level=0, i, npoints;

CV_Assert( (npoints = prevPtsMat.checkVector(2, CV_32F, true)) >= 0 );

// 检查 prevPtsMat 是否为 npoints x 2 的 float32 向量

/* 如果前一帧不存在特征点则返回 */

if( npoints == 0 )

{

_nextPts.release();

_status.release();

_err.release();

return;

}

if( !(flags & OPTFLOW_USE_INITIAL_FLOW) ) // 如果不使用初始估计

_nextPts.create(prevPtsMat.size(), prevPtsMat.type(), -1, true);

// _nextPts 设置为 npoints x 2 的 -1 矩阵

Mat nextPtsMat = _nextPts.getMat();

CV_Assert( nextPtsMat.checkVector(2, CV_32F, true) == npoints );

// 检查 nextPtsMat 是否为 npoints x 2 的 float32 向量

const Point2f* prevPts = prevPtsMat.ptr<Point2f>();

// 前一帧特征点指针

Point2f* nextPts = nextPtsMat.ptr<Point2f>();

// 后一帧特征点指针

_status.create((int)npoints, 1, CV_8U, -1, true);

// _status 设置为 npoints x 1 的 -1 矩阵

Mat statusMat = _status.getMat(), errMat;

CV_Assert( statusMat.isContinuous() ); // 检查 statusMat 是否连续存储

uchar* status = statusMat.ptr(); // 状态矢量指针

float* err = 0; // 误差矢量指针

/* 状态矢量整体初始化为true */

for( i = 0; i < npoints; i++ )

status[i] = true;

if( _err.needed() ) // 检查 _err 是否需要计算

{

_err.create((int)npoints, 1, CV_32F, -1, true);

// _err 设置为 npoints x 1 的 -1 矩阵

errMat = _err.getMat();

CV_Assert( errMat.isContinuous() ); // 检查 errMat 是否连续存储

err = errMat.ptr<float>(); // 误差矢量指针

}

std::vector<Mat> prevPyr, nextPyr;

int levels1 = -1; // 前一帧图像金字塔最高层数 - 1

int lvlStep1 = 1; // 前一帧图像金字塔层数步长(金字塔带差分图像:1,金字塔不带差分图像:2)

int levels2 = -1; // 后一帧图像金字塔最高层数 - 1

int lvlStep2 = 1; // 后一帧图像金字塔层数步长(金字塔带差分图像:1,金字塔不带差分图像:2)

if(_prevImg.kind() == _InputArray::STD_VECTOR_MAT)

// _prevImg 是否为图像金子塔

{

_prevImg.getMatVector(prevPyr);

levels1 = int(prevPyr.size()) - 1;

CV_Assert(levels1 >= 0);

if (levels1 % 2 == 1 && prevPyr[0].channels() * 2 == prevPyr[1].channels() && prevPyr[1].depth() == derivDepth)

{

lvlStep1 = 2;

levels1 /= 2;

}

// ensure that pyramid has required padding

if(levels1 > 0)

{

Size fullSize;

Point ofs;

prevPyr[lvlStep1].locateROI(fullSize, ofs);

CV_Assert(ofs.x >= winSize.width && ofs.y >= winSize.height

&& ofs.x + prevPyr[lvlStep1].cols + winSize.width <= fullSize.width

&& ofs.y + prevPyr[lvlStep1].rows + winSize.height <= fullSize.height);

}

if(levels1 < maxLevel)

maxLevel = levels1;

}

if(_nextImg.kind() == _InputArray::STD_VECTOR_MAT)

// _nextImg 是否为图像金子塔

{

_nextImg.getMatVector(nextPyr);

levels2 = int(nextPyr.size()) - 1;

CV_Assert(levels2 >= 0);

if (levels2 % 2 == 1 && nextPyr[0].channels() * 2 == nextPyr[1].channels() && nextPyr[1].depth() == derivDepth)

{

lvlStep2 = 2;

levels2 /= 2;

}

// ensure that pyramid has required padding

if(levels2 > 0)

{

Size fullSize;

Point ofs;

nextPyr[lvlStep2].locateROI(fullSize, ofs);

CV_Assert(ofs.x >= winSize.width && ofs.y >= winSize.height

&& ofs.x + nextPyr[lvlStep2].cols + winSize.width <= fullSize.width

&& ofs.y + nextPyr[lvlStep2].rows + winSize.height <= fullSize.height);

}

if(levels2 < maxLevel)

maxLevel = levels2;

}

if (levels1 < 0)

maxLevel = buildOpticalFlowPyramid(_prevImg, prevPyr, winSize, maxLevel, false);

// 构建前一帧图像金字塔

if (levels2 < 0)

maxLevel = buildOpticalFlowPyramid(_nextImg, nextPyr, winSize, maxLevel, false);

// 构建后一帧图像金字塔

/* 调整迭代终止条件 */

if( (criteria.type & TermCriteria::COUNT) == 0 )

criteria.maxCount = 30;

else

criteria.maxCount = std::min(std::max(criteria.maxCount, 0), 100);

if( (criteria.type & TermCriteria::EPS) == 0 )

criteria.epsilon = 0.01;

else

criteria.epsilon = std::min(std::max(criteria.epsilon, 0.), 10.);

criteria.epsilon *= criteria.epsilon;

// dI/dx ~ Ix, dI/dy ~ Iy

/* 如果金字塔不带差分图像,则创建缓冲区用于计算差分图像 */

Mat derivIBuf;

if(lvlStep1 == 1)

derivIBuf.create(prevPyr[0].rows + winSize.height*2, prevPyr[0].cols + winSize.width*2, CV_MAKETYPE(derivDepth, prevPyr[0].channels() * 2));

/* 遍历金字塔各层 */

for( level = maxLevel; level >= 0; level-- )

{

/* 如果金字塔不带差分图像,则计算差分图像,否则使用金字塔差分图像 */

Mat derivI;

if(lvlStep1 == 1)

{

Size imgSize = prevPyr[level * lvlStep1].size();

Mat _derivI( imgSize.height + winSize.height*2,

imgSize.width + winSize.width*2, derivIBuf.type(), derivIBuf.ptr() );

derivI = _derivI(Rect(winSize.width, winSize.height, imgSize.width, imgSize.height));

calcScharrDeriv(prevPyr[level * lvlStep1], derivI);

copyMakeBorder(derivI, _derivI, winSize.height, winSize.height, winSize.width, winSize.width, BORDER_CONSTANT|BORDER_ISOLATED);

}

else

derivI = prevPyr[level * lvlStep1 + 1];

CV_Assert(prevPyr[level * lvlStep1].size() == nextPyr[level * lvlStep2].size());

CV_Assert(prevPyr[level * lvlStep1].type() == nextPyr[level * lvlStep2].type());

typedef cv::detail::LKTrackerInvoker LKTrackerInvoker;

parallel_for_(Range(0, npoints), LKTrackerInvoker(prevPyr[level * lvlStep1], derivI, nextPyr[level * lvlStep2], prevPts, nextPts, status, err, winSize, criteria, level, maxLevel, flags, (float)minEigThreshold));

// 多线程调用LKTrackerInvoker计算特征点光流,进行特征追踪

}

}

关于图像金字塔建立的相关代码如下:

int cv::buildOpticalFlowPyramid(InputArray _img, OutputArrayOfArrays pyramid, Size winSize, int maxLevel, bool withDerivatives,

int pyrBorder, int derivBorder, bool tryReuseInputImage)

// _img:输入图像

// pyramid:输出金字塔

// winSize:LK光流观察窗大小,这里用于边界处理

// maxLevel:最大金字塔高度

// withDerivatives:是否构建差分金字塔

// pyrBorder:图像金字塔边界处理方法

// derivBorder:差分金字塔边界处理方法

// tryReuseInputImage:是否复用输入图像

{

CV_INSTRUMENT_REGION();

Mat img = _img.getMat();

CV_Assert(img.depth() == CV_8U && winSize.width > 2 && winSize.height > 2 );

// 参数检查

int pyrstep = withDerivatives ? 2 : 1; // 如果需要构建差分金字塔,那么图像金字塔各层在整体金字塔中的步长为 2(图像与差分依次排列),否则步长为 1

pyramid.create(1, (maxLevel + 1) * pyrstep, 0 /*type*/, -1, true);

// 构建 maxLevel + 1 层金字塔,类型为 CV_8UC1,

int derivType = CV_MAKETYPE(DataType<cv::detail::deriv_type>::depth, img.channels() * 2);

// 差分矩阵类型

//level 0

bool lvl0IsSet = false; // level 0 set 标志置 false

if(tryReuseInputImage && img.isSubmatrix() && (pyrBorder & BORDER_ISOLATED) == 0)

// 如果复用 img 且 img 为子矩阵且???

{

Size wholeSize;

Point ofs;

img.locateROI(wholeSize, ofs); // 定位 img 在父矩阵中的位置

if (ofs.x >= winSize.width && ofs.y >= winSize.height

&& ofs.x + img.cols + winSize.width <= wholeSize.width

&& ofs.y + img.rows + winSize.height <= wholeSize.height)

// 判断 img 是否距父矩阵边界距离大于等于 1 个 winSize 的尺寸

{

pyramid.getMatRef(0) = img; // 将金字塔第 0 层设置为 img 矩阵

lvl0IsSet = true; // level 0 set 标志置 true

}

}

if(!lvl0IsSet) // 如果金字塔第 0 层未设置

{

Mat& temp = pyramid.getMatRef(0); // 金字塔第 0 层

if(!temp.empty()) // 如果金字塔第 0 层非空

temp.adjustROI(winSize.height, winSize.height, winSize.width, winSize.width);

// 调整子矩阵位置以及大小,使其距父矩阵边界距离等于 1 个 winSize 的尺寸

if(temp.type() != img.type() || temp.cols != winSize.width*2 + img.cols || temp.rows != winSize.height * 2 + img.rows)

// 如果金字塔第 0 层矩阵类型与 img 矩阵类型不一致或金字塔第 0 层尺寸不等于 img 加 winSize 边界的尺寸

temp.create(img.rows + winSize.height*2, img.cols + winSize.width*2, img.type());

// 构建 (img.rows + winSize.height * 2) x (img.cols + winSize.width * 2) 大小矩阵,矩阵类型与 img 矩阵类型一致

if(pyrBorder == BORDER_TRANSPARENT) // 如果图像金字塔边界采用透明填充

img.copyTo(temp(Rect(winSize.width, winSize.height, img.cols, img.rows)));

// 将 img 拷贝至金字塔第 0 层距离左上角 (winSize.width, winSize.height) 的位置

else

copyMakeBorder(img, temp, winSize.height, winSize.height, winSize.width, winSize.width, pyrBorder);

// 将 img 拷贝至金字塔第 0 层并按 pyrBorder 要求生成 winSize 尺寸的边界

temp.adjustROI(-winSize.height, -winSize.height, -winSize.width, -winSize.width);

// ???

}

Size sz = img.size();

Mat prevLevel = pyramid.getMatRef(0);

Mat thisLevel = prevLevel;

for(int level = 0; level <= maxLevel; ++level)

{

if (level != 0)

{

Mat& temp = pyramid.getMatRef(level * pyrstep);

// 金字塔第 level 层(图像)

if(!temp.empty()) // 如果金字塔第 level 层非空

temp.adjustROI(winSize.height, winSize.height, winSize.width, winSize.width);

// 调整子矩阵位置以及大小,使其距父矩阵边界距离等于 1 个 winSize 的尺寸

if(temp.type() != img.type() || temp.cols != winSize.width*2 + sz.width || temp.rows != winSize.height * 2 + sz.height)

// 如果金字塔第 level 层矩阵类型与 img 矩阵类型不一致或金字塔第 level 层尺寸不等于 sz 加 winSize 边界的尺寸

temp.create(sz.height + winSize.height*2, sz.width + winSize.width*2, img.type());

// 构建 (sz.rows + winSize.height * 2) x (sz.cols + winSize.width * 2) 大小矩阵,矩阵类型与 img 矩阵类型一致

thisLevel = temp(Rect(winSize.width, winSize.height, sz.width, sz.height));

// 从金字塔第 level 层距离左上角 (winSize.width, winSize.height) 的位置提取 sz 尺寸的 thisLevel

pyrDown(prevLevel, thisLevel, sz); // 根据 prevLevel 内容对其进行下采样生成 sz 尺寸的 thisLevel

// 采用 5 x 5 高斯模板对图像进行滤波后再抽样降低分辨率,模板被分解为水平算子和竖直算子,采用的系数为:(1, 4, 6, 4, 1)

if(pyrBorder != BORDER_TRANSPARENT) // 如果图像金字塔边界不采用透明填充

copyMakeBorder(thisLevel, temp, winSize.height, winSize.height, winSize.width, winSize.width, pyrBorder|BORDER_ISOLATED);

// 将 thisLevel 拷贝至金字塔第 level 层并按 pyrBorder 要求生成 winSize 尺寸的边界

temp.adjustROI(-winSize.height, -winSize.height, -winSize.width, -winSize.width);

// ???

}

if(withDerivatives)

{

Mat& deriv = pyramid.getMatRef(level * pyrstep + 1);

// 金字塔第 level 层(差分)

if(!deriv.empty()) // 如果金字塔第 level 层非空

deriv.adjustROI(winSize.height, winSize.height, winSize.width, winSize.width);

// 调整子矩阵位置以及大小,使其距父矩阵边界距离等于 1 个 winSize 的尺寸

if(deriv.type() != derivType || deriv.cols != winSize.width*2 + sz.width || deriv.rows != winSize.height * 2 + sz.height)

// 如果金字塔第 level 层矩阵类型非 derivType 或金字塔第 level 层尺寸不等于 sz 加 winSize 边界的尺寸

deriv.create(sz.height + winSize.height*2, sz.width + winSize.width*2, derivType);

// 构建 (sz.rows + winSize.height * 2) x (sz.cols + winSize.width * 2) 大小矩阵,矩阵类型为 derivType

Mat derivI = deriv(Rect(winSize.width, winSize.height, sz.width, sz.height));

// 从金字塔第 level 层距离左上角 (winSize.width, winSize.height) 的位置提取 sz 尺寸的 thisLevel

calcScharrDeriv(thisLevel, derivI); // 利用 Scharr 算子计算 thisLevel 梯度 derivI

// Scharr 算子被分解为平滑算子:(3, 10, 3),以及差分算子:(-1,0,1)分别对图像进行卷积

if(derivBorder != BORDER_TRANSPARENT)

// 如果图像金字塔边界不采用透明填充

copyMakeBorder(derivI, deriv, winSize.height, winSize.height, winSize.width, winSize.width, derivBorder|BORDER_ISOLATED);

// 将 derivI 拷贝至金字塔第 level 层并按 derivBorder 要求生成 winSize 尺寸的边界

deriv.adjustROI(-winSize.height, -winSize.height, -winSize.width, -winSize.width);

// ???

}

sz = Size((sz.width+1)/2, (sz.height+1)/2);

// 将分辨率逐步降低

if( sz.width <= winSize.width || sz.height <= winSize.height )

// 如果分辨率小于 sz 尺寸

{

pyramid.create(1, (level + 1) * pyrstep, 0 /*type*/, -1, true);//check this

// 调整金字塔层数并

return level;

}

prevLevel = thisLevel; // 将 thisLevel 赋值给 prevLevel 并进行下一轮迭代

}

return maxLevel;

}

关于光流计算以及特征追踪的相关代码如下:

void cv::detail::LKTrackerInvoker::operator()(const Range& range) const

// range:(0, npoints)

{

CV_INSTRUMENT_REGION();

Point2f halfWin((winSize.width-1)*0.5f, (winSize.height-1)*0.5f);

const Mat& I = *prevImg;

const Mat& J = *nextImg;

const Mat& derivI = *prevDeriv;

int j, cn = I.channels(), cn2 = cn*2;

cv::AutoBuffer<deriv_type> _buf(winSize.area()*(cn + cn2));

int derivDepth = DataType<deriv_type>::depth;

Mat IWinBuf(winSize, CV_MAKETYPE(derivDepth, cn), _buf.data());

Mat derivIWinBuf(winSize, CV_MAKETYPE(derivDepth, cn2), _buf.data() + winSize.area()*cn);

for( int ptidx = range.start; ptidx < range.end; ptidx++ )

{

Point2f prevPt = prevPts[ptidx]*(float)(1./(1 << level));

// 对前一帧特征点 x,y 坐标进行缩放以适配当前层分辨率

Point2f nextPt;

if( level == maxLevel ) // 如果当前层是金字塔最后一层(对金字塔各层的遍历由最后一层开始)

{

if( flags & OPTFLOW_USE_INITIAL_FLOW )

// 如果对后一帧特征点使用了初始化值

nextPt = nextPts[ptidx]*(float)(1./(1 << level));

// 对后一帧特征点 x,y 坐标进行缩放以适配当前层分辨率

else

nextPt = prevPt; // 使用前一帧特征点初始化后一帧特征点

}

else

nextPt = nextPts[ptidx]*2.f; // 对后一帧特征点 x,y 坐标进行缩放以适配当前层分辨率

nextPts[ptidx] = nextPt; // 将处理后的结果放回 nextPts

Point2i iprevPt, inextPt;

prevPt -= halfWin; // 对前一帧特征点 x,y 坐标进行调整使其指向观察窗左上角顶点

iprevPt.x = cvFloor(prevPt.x);

iprevPt.y = cvFloor(prevPt.y);

if( iprevPt.x < -winSize.width || iprevPt.x >= derivI.cols ||

iprevPt.y < -winSize.height || iprevPt.y >= derivI.rows )

// 如果特征点观察窗偏离当前图像

{

if( level == 0 )

{

if( status )

status[ptidx] = false; // 特征点状态值赋 false

if( err )

err[ptidx] = 0; // 特征点误差值赋 0

}

continue;

}

float a = prevPt.x - iprevPt.x;

float b = prevPt.y - iprevPt.y;

const int W_BITS = 14, W_BITS1 = 14;

const float FLT_SCALE = 1.f/(1 << 20);

int iw00 = cvRound((1.f - a)*(1.f - b)*(1 << W_BITS));

int iw01 = cvRound(a*(1.f - b)*(1 << W_BITS));

int iw10 = cvRound((1.f - a)*b*(1 << W_BITS));

int iw11 = (1 << W_BITS) - iw00 - iw01 - iw10;

int dstep = (int)(derivI.step/derivI.elemSize1());

int stepI = (int)(I.step/I.elemSize1());

int stepJ = (int)(J.step/J.elemSize1());

acctype iA11 = 0, iA12 = 0, iA22 = 0;

float A11, A12, A22;

#if CV_SIMD128 && !CV_NEON

v_int16x8 qw0((short)(iw00), (short)(iw01), (short)(iw00), (short)(iw01), (short)(iw00), (short)(iw01), (short)(iw00), (short)(iw01));

v_int16x8 qw1((short)(iw10), (short)(iw11), (short)(iw10), (short)(iw11), (short)(iw10), (short)(iw11), (short)(iw10), (short)(iw11));

v_int32x4 qdelta_d = v_setall_s32(1 << (W_BITS1-1));

v_int32x4 qdelta = v_setall_s32(1 << (W_BITS1-5-1));

v_float32x4 qA11 = v_setzero_f32(), qA12 = v_setzero_f32(), qA22 = v_setzero_f32();

#endif

#if CV_NEON

float CV_DECL_ALIGNED(16) nA11[] = { 0, 0, 0, 0 }, nA12[] = { 0, 0, 0, 0 }, nA22[] = { 0, 0, 0, 0 };

const int shifter1 = -(W_BITS - 5); //negative so it shifts right

const int shifter2 = -(W_BITS);

const int16x4_t d26 = vdup_n_s16((int16_t)iw00);

const int16x4_t d27 = vdup_n_s16((int16_t)iw01);

const int16x4_t d28 = vdup_n_s16((int16_t)iw10);

const int16x4_t d29 = vdup_n_s16((int16_t)iw11);

const int32x4_t q11 = vdupq_n_s32((int32_t)shifter1);

const int32x4_t q12 = vdupq_n_s32((int32_t)shifter2);

#endif

// extract the patch from the first image, compute covariation matrix of derivatives

int x, y;

for( y = 0; y < winSize.height; y++ )

{

const uchar* src = I.ptr() + (y + iprevPt.y)*stepI + iprevPt.x*cn;

// 前一帧图像观察窗首地址

const deriv_type* dsrc = derivI.ptr<deriv_type>() + (y + iprevPt.y)*dstep + iprevPt.x*cn2;

// 前一帧差分图像观察窗首地址

deriv_type* Iptr = IWinBuf.ptr<deriv_type>(y);

deriv_type* dIptr = derivIWinBuf.ptr<deriv_type>(y);

x = 0;

#if CV_SIMD128 && !CV_NEON

for( ; x <= winSize.width*cn - 8; x += 8, dsrc += 8*2, dIptr += 8*2 )

{

v_int32x4 t0, t1;

v_int16x8 v00, v01, v10, v11, t00, t01, t10, t11;

v00 = v_reinterpret_as_s16(v_load_expand(src + x));

v01 = v_reinterpret_as_s16(v_load_expand(src + x + cn));

v10 = v_reinterpret_as_s16(v_load_expand(src + x + stepI));

v11 = v_reinterpret_as_s16(v_load_expand(src + x + stepI + cn));

v_zip(v00, v01, t00, t01);

v_zip(v10, v11, t10, t11);

t0 = v_dotprod(t00, qw0, qdelta) + v_dotprod(t10, qw1);

t1 = v_dotprod(t01, qw0, qdelta) + v_dotprod(t11, qw1);

t0 = t0 >> (W_BITS1-5);

t1 = t1 >> (W_BITS1-5);

v_store(Iptr + x, v_pack(t0, t1));

v00 = v_reinterpret_as_s16(v_load(dsrc));

v01 = v_reinterpret_as_s16(v_load(dsrc + cn2));

v10 = v_reinterpret_as_s16(v_load(dsrc + dstep));

v11 = v_reinterpret_as_s16(v_load(dsrc + dstep + cn2));

v_zip(v00, v01, t00, t01);

v_zip(v10, v11, t10, t11);

t0 = v_dotprod(t00, qw0, qdelta_d) + v_dotprod(t10, qw1);

t1 = v_dotprod(t01, qw0, qdelta_d) + v_dotprod(t11, qw1);

t0 = t0 >> W_BITS1;

t1 = t1 >> W_BITS1;

v00 = v_pack(t0, t1); // Ix0 Iy0 Ix1 Iy1 ...

v_store(dIptr, v00);

v00 = v_reinterpret_as_s16(v_interleave_pairs(v_reinterpret_as_s32(v_interleave_pairs(v00))));

v_expand(v00, t1, t0);

v_float32x4 fy = v_cvt_f32(t0);

v_float32x4 fx = v_cvt_f32(t1);

qA22 = v_muladd(fy, fy, qA22);

qA12 = v_muladd(fx, fy, qA12);

qA11 = v_muladd(fx, fx, qA11);

v00 = v_reinterpret_as_s16(v_load(dsrc + 4*2));

v01 = v_reinterpret_as_s16(v_load(dsrc + 4*2 + cn2));

v10 = v_reinterpret_as_s16(v_load(dsrc + 4*2 + dstep));

v11 = v_reinterpret_as_s16(v_load(dsrc + 4*2 + dstep + cn2));

v_zip(v00, v01, t00, t01);

v_zip(v10, v11, t10, t11);

t0 = v_dotprod(t00, qw0, qdelta_d) + v_dotprod(t10, qw1);

t1 = v_dotprod(t01, qw0, qdelta_d) + v_dotprod(t11, qw1);

t0 = t0 >> W_BITS1;

t1 = t1 >> W_BITS1;

v00 = v_pack(t0, t1); // Ix0 Iy0 Ix1 Iy1 ...

v_store(dIptr + 4*2, v00);

v00 = v_reinterpret_as_s16(v_interleave_pairs(v_reinterpret_as_s32(v_interleave_pairs(v00))));

v_expand(v00, t1, t0);

fy = v_cvt_f32(t0);

fx = v_cvt_f32(t1);

qA22 = v_muladd(fy, fy, qA22);

qA12 = v_muladd(fx, fy, qA12);

qA11 = v_muladd(fx, fx, qA11);

}

#endif

#if CV_NEON

for( ; x <= winSize.width*cn - 4; x += 4, dsrc += 4*2, dIptr += 4*2 )

{

uint8x8_t d0 = vld1_u8(&src[x]);

uint8x8_t d2 = vld1_u8(&src[x+cn]);

uint16x8_t q0 = vmovl_u8(d0);

uint16x8_t q1 = vmovl_u8(d2);

int32x4_t q5 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q0)), d26);

int32x4_t q6 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q1)), d27);

uint8x8_t d4 = vld1_u8(&src[x + stepI]);

uint8x8_t d6 = vld1_u8(&src[x + stepI + cn]);

uint16x8_t q2 = vmovl_u8(d4);

uint16x8_t q3 = vmovl_u8(d6);

int32x4_t q7 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q2)), d28);

int32x4_t q8 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q3)), d29);

q5 = vaddq_s32(q5, q6);

q7 = vaddq_s32(q7, q8);

q5 = vaddq_s32(q5, q7);

int16x4x2_t d0d1 = vld2_s16(dsrc);

int16x4x2_t d2d3 = vld2_s16(&dsrc[cn2]);

q5 = vqrshlq_s32(q5, q11);

int32x4_t q4 = vmull_s16(d0d1.val[0], d26);

q6 = vmull_s16(d0d1.val[1], d26);

int16x4_t nd0 = vmovn_s32(q5);

q7 = vmull_s16(d2d3.val[0], d27);

q8 = vmull_s16(d2d3.val[1], d27);

vst1_s16(&Iptr[x], nd0);

int16x4x2_t d4d5 = vld2_s16(&dsrc[dstep]);

int16x4x2_t d6d7 = vld2_s16(&dsrc[dstep+cn2]);

q4 = vaddq_s32(q4, q7);

q6 = vaddq_s32(q6, q8);

q7 = vmull_s16(d4d5.val[0], d28);

int32x4_t q14 = vmull_s16(d4d5.val[1], d28);

q8 = vmull_s16(d6d7.val[0], d29);

int32x4_t q15 = vmull_s16(d6d7.val[1], d29);

q7 = vaddq_s32(q7, q8);

q14 = vaddq_s32(q14, q15);

q4 = vaddq_s32(q4, q7);

q6 = vaddq_s32(q6, q14);

float32x4_t nq0 = vld1q_f32(nA11);

float32x4_t nq1 = vld1q_f32(nA12);

float32x4_t nq2 = vld1q_f32(nA22);

q4 = vqrshlq_s32(q4, q12);

q6 = vqrshlq_s32(q6, q12);

q7 = vmulq_s32(q4, q4);

q8 = vmulq_s32(q4, q6);

q15 = vmulq_s32(q6, q6);

nq0 = vaddq_f32(nq0, vcvtq_f32_s32(q7));

nq1 = vaddq_f32(nq1, vcvtq_f32_s32(q8));

nq2 = vaddq_f32(nq2, vcvtq_f32_s32(q15));

vst1q_f32(nA11, nq0);

vst1q_f32(nA12, nq1);

vst1q_f32(nA22, nq2);

int16x4_t d8 = vmovn_s32(q4);

int16x4_t d12 = vmovn_s32(q6);

int16x4x2_t d8d12;

d8d12.val[0] = d8; d8d12.val[1] = d12;

vst2_s16(dIptr, d8d12);

}

#endif

for( ; x < winSize.width*cn; x++, dsrc += 2, dIptr += 2 )

{

int ival = CV_DESCALE(src[x]*iw00 + src[x+cn]*iw01 +

src[x+stepI]*iw10 + src[x+stepI+cn]*iw11, W_BITS1-5);

// 观察窗中前一帧图像的像素点值(考虑到观察窗边界取整的关系,这里对该像素点周围的像素点进行了加权处理)

int ixval = CV_DESCALE(dsrc[0]*iw00 + dsrc[cn2]*iw01 +

dsrc[dstep]*iw10 + dsrc[dstep+cn2]*iw11, W_BITS1);

// 观察窗中前一帧差分图像 x 方向的像素点值(考虑到观察窗边界取整的关系,这里对该像素点周围的像素点进行了加权处理)

int iyval = CV_DESCALE(dsrc[1]*iw00 + dsrc[cn2+1]*iw01 + dsrc[dstep+1]*iw10 +

dsrc[dstep+cn2+1]*iw11, W_BITS1);

// 观察窗中前一帧差分图像 y 方向的像素点值(考虑到观察窗边界取整的关系,这里对该像素点周围的像素点进行了加权处理)

Iptr[x] = (short)ival;

dIptr[0] = (short)ixval;

dIptr[1] = (short)iyval;

iA11 += (itemtype)(ixval*ixval);// 累加 Ix * Ix

iA12 += (itemtype)(ixval*iyval);// 累加 Ix * Iy

iA22 += (itemtype)(iyval*iyval);// 累加 Iy * Iy

}

}

#if CV_SIMD128 && !CV_NEON

iA11 += v_reduce_sum(qA11);

iA12 += v_reduce_sum(qA12);

iA22 += v_reduce_sum(qA22);

#endif

#if CV_NEON

iA11 += nA11[0] + nA11[1] + nA11[2] + nA11[3];

iA12 += nA12[0] + nA12[1] + nA12[2] + nA12[3];

iA22 += nA22[0] + nA22[1] + nA22[2] + nA22[3];

#endif

A11 = iA11*FLT_SCALE;

A12 = iA12*FLT_SCALE;

A22 = iA22*FLT_SCALE;

float D = A11*A22 - A12*A12; // 行列式

float minEig = (A22 + A11 - std::sqrt((A11-A22)*(A11-A22) +

4.f*A12*A12))/(2*winSize.width*winSize.height);

// 较小特征值

if( err && (flags & OPTFLOW_LK_GET_MIN_EIGENVALS) != 0 )

err[ptidx] = (float)minEig; // 特征点误差值赋较小特征值

if( minEig < minEigThreshold || D < FLT_EPSILON )

// 如果较小特征值小于阈值(特征点特征不明显)或行列式小于阈值(矩阵不可逆)

{

if( level == 0 && status )

status[ptidx] = false; // 特征点状态值赋 false

continue;

}

D = 1.f/D;

nextPt -= halfWin; // 对后一帧特征点 x,y 坐标进行调整使其指向观察窗左上角顶点

Point2f prevDelta;

for( j = 0; j < criteria.maxCount; j++ )

{

inextPt.x = cvFloor(nextPt.x);

inextPt.y = cvFloor(nextPt.y);

if( inextPt.x < -winSize.width || inextPt.x >= J.cols ||

inextPt.y < -winSize.height || inextPt.y >= J.rows )

// 如果特征点观察窗偏离当前图像

{

if( level == 0 && status )

status[ptidx] = false; // 特征点状态值赋 false

break;

}

a = nextPt.x - inextPt.x;

b = nextPt.y - inextPt.y;

iw00 = cvRound((1.f - a)*(1.f - b)*(1 << W_BITS));

iw01 = cvRound(a*(1.f - b)*(1 << W_BITS));

iw10 = cvRound((1.f - a)*b*(1 << W_BITS));

iw11 = (1 << W_BITS) - iw00 - iw01 - iw10;

acctype ib1 = 0, ib2 = 0;

float b1, b2;

#if CV_SIMD128 && !CV_NEON

qw0 = v_int16x8((short)(iw00), (short)(iw01), (short)(iw00), (short)(iw01), (short)(iw00), (short)(iw01), (short)(iw00), (short)(iw01));

qw1 = v_int16x8((short)(iw10), (short)(iw11), (short)(iw10), (short)(iw11), (short)(iw10), (short)(iw11), (short)(iw10), (short)(iw11));

v_float32x4 qb0 = v_setzero_f32(), qb1 = v_setzero_f32();

#endif

#if CV_NEON

float CV_DECL_ALIGNED(16) nB1[] = { 0,0,0,0 }, nB2[] = { 0,0,0,0 };

const int16x4_t d26_2 = vdup_n_s16((int16_t)iw00);

const int16x4_t d27_2 = vdup_n_s16((int16_t)iw01);

const int16x4_t d28_2 = vdup_n_s16((int16_t)iw10);

const int16x4_t d29_2 = vdup_n_s16((int16_t)iw11);

#endif

for( y = 0; y < winSize.height; y++ )

{

const uchar* Jptr = J.ptr() + (y + inextPt.y)*stepJ + inextPt.x*cn;

// 后一帧图像观察窗首地址

const deriv_type* Iptr = IWinBuf.ptr<deriv_type>(y);

const deriv_type* dIptr = derivIWinBuf.ptr<deriv_type>(y);

x = 0;

#if CV_SIMD128 && !CV_NEON

for( ; x <= winSize.width*cn - 8; x += 8, dIptr += 8*2 )

{

v_int16x8 diff0 = v_reinterpret_as_s16(v_load(Iptr + x)), diff1, diff2;

v_int16x8 v00 = v_reinterpret_as_s16(v_load_expand(Jptr + x));

v_int16x8 v01 = v_reinterpret_as_s16(v_load_expand(Jptr + x + cn));

v_int16x8 v10 = v_reinterpret_as_s16(v_load_expand(Jptr + x + stepJ));

v_int16x8 v11 = v_reinterpret_as_s16(v_load_expand(Jptr + x + stepJ + cn));

v_int32x4 t0, t1;

v_int16x8 t00, t01, t10, t11;

v_zip(v00, v01, t00, t01);

v_zip(v10, v11, t10, t11);

t0 = v_dotprod(t00, qw0, qdelta) + v_dotprod(t10, qw1);

t1 = v_dotprod(t01, qw0, qdelta) + v_dotprod(t11, qw1);

t0 = t0 >> (W_BITS1-5);

t1 = t1 >> (W_BITS1-5);

diff0 = v_pack(t0, t1) - diff0;

v_zip(diff0, diff0, diff2, diff1); // It0 It0 It1 It1 ...

v00 = v_reinterpret_as_s16(v_load(dIptr)); // Ix0 Iy0 Ix1 Iy1 ...

v01 = v_reinterpret_as_s16(v_load(dIptr + 8));

v_zip(v00, v01, v10, v11);

v_zip(diff2, diff1, v00, v01);

qb0 += v_cvt_f32(v_dotprod(v00, v10));

qb1 += v_cvt_f32(v_dotprod(v01, v11));

}

#endif

#if CV_NEON

for( ; x <= winSize.width*cn - 8; x += 8, dIptr += 8*2 )

{

uint8x8_t d0 = vld1_u8(&Jptr[x]);

uint8x8_t d2 = vld1_u8(&Jptr[x+cn]);

uint8x8_t d4 = vld1_u8(&Jptr[x+stepJ]);

uint8x8_t d6 = vld1_u8(&Jptr[x+stepJ+cn]);

uint16x8_t q0 = vmovl_u8(d0);

uint16x8_t q1 = vmovl_u8(d2);

uint16x8_t q2 = vmovl_u8(d4);

uint16x8_t q3 = vmovl_u8(d6);

int32x4_t nq4 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q0)), d26_2);

int32x4_t nq5 = vmull_s16(vget_high_s16(vreinterpretq_s16_u16(q0)), d26_2);

int32x4_t nq6 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q1)), d27_2);

int32x4_t nq7 = vmull_s16(vget_high_s16(vreinterpretq_s16_u16(q1)), d27_2);

int32x4_t nq8 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q2)), d28_2);

int32x4_t nq9 = vmull_s16(vget_high_s16(vreinterpretq_s16_u16(q2)), d28_2);

int32x4_t nq10 = vmull_s16(vget_low_s16(vreinterpretq_s16_u16(q3)), d29_2);

int32x4_t nq11 = vmull_s16(vget_high_s16(vreinterpretq_s16_u16(q3)), d29_2);

nq4 = vaddq_s32(nq4, nq6);

nq5 = vaddq_s32(nq5, nq7);

nq8 = vaddq_s32(nq8, nq10);

nq9 = vaddq_s32(nq9, nq11);

int16x8_t q6 = vld1q_s16(&Iptr[x]);

nq4 = vaddq_s32(nq4, nq8);

nq5 = vaddq_s32(nq5, nq9);

nq8 = vmovl_s16(vget_high_s16(q6));

nq6 = vmovl_s16(vget_low_s16(q6));

nq4 = vqrshlq_s32(nq4, q11);

nq5 = vqrshlq_s32(nq5, q11);

int16x8x2_t q0q1 = vld2q_s16(dIptr);

float32x4_t nB1v = vld1q_f32(nB1);

float32x4_t nB2v = vld1q_f32(nB2);

nq4 = vsubq_s32(nq4, nq6);

nq5 = vsubq_s32(nq5, nq8);

int32x4_t nq2 = vmovl_s16(vget_low_s16(q0q1.val[0]));

int32x4_t nq3 = vmovl_s16(vget_high_s16(q0q1.val[0]));

nq7 = vmovl_s16(vget_low_s16(q0q1.val[1]));

nq8 = vmovl_s16(vget_high_s16(q0q1.val[1]));

nq9 = vmulq_s32(nq4, nq2);

nq10 = vmulq_s32(nq5, nq3);

nq4 = vmulq_s32(nq4, nq7);

nq5 = vmulq_s32(nq5, nq8);

nq9 = vaddq_s32(nq9, nq10);

nq4 = vaddq_s32(nq4, nq5);

nB1v = vaddq_f32(nB1v, vcvtq_f32_s32(nq9));

nB2v = vaddq_f32(nB2v, vcvtq_f32_s32(nq4));

vst1q_f32(nB1, nB1v);

vst1q_f32(nB2, nB2v);

}

#endif

for( ; x < winSize.width*cn; x++, dIptr += 2 )

{

int diff = CV_DESCALE(Jptr[x]*iw00 + Jptr[x+cn]*iw01 +

Jptr[x+stepJ]*iw10 + Jptr[x+stepJ+cn]*iw11,

W_BITS1-5) - Iptr[x];

ib1 += (itemtype)(diff*dIptr[0]);

// 累加 dI * Ix

ib2 += (itemtype)(diff*dIptr[1]);

// 累加 dI * Iy

}

}

#if CV_SIMD128 && !CV_NEON

v_float32x4 qf0, qf1;

v_recombine(v_interleave_pairs(qb0 + qb1), v_setzero_f32(), qf0, qf1);

ib1 += v_reduce_sum(qf0);

ib2 += v_reduce_sum(qf1);

#endif

#if CV_NEON

ib1 += (float)(nB1[0] + nB1[1] + nB1[2] + nB1[3]);

ib2 += (float)(nB2[0] + nB2[1] + nB2[2] + nB2[3]);

#endif

b1 = ib1*FLT_SCALE;

b2 = ib2*FLT_SCALE;

Point2f delta( (float)((A12*b2 - A22*b1) * D),

(float)((A12*b1 - A11*b2) * D));

// 光流(速度矢量)

//delta = -delta;

nextPt += delta; // 根据光流调整后一帧特征点 x,y 坐标

nextPts[ptidx] = nextPt + halfWin;

if( delta.ddot(delta) <= criteria.epsilon )

// 光流满足最小迭代阈值

break;

if( j > 0 && std::abs(delta.x + prevDelta.x) < 0.01 &&

std::abs(delta.y + prevDelta.y) < 0.01 )

// 判断光流是否在振荡?

{

nextPts[ptidx] -= delta*0.5f;

break;

}

prevDelta = delta;

}

CV_Assert(status != NULL);

if( status[ptidx] && err && level == 0 && (flags & OPTFLOW_LK_GET_MIN_EIGENVALS) == 0 )

{

Point2f nextPoint = nextPts[ptidx] - halfWin;

Point inextPoint;

inextPoint.x = cvFloor(nextPoint.x);

inextPoint.y = cvFloor(nextPoint.y);

if( inextPoint.x < -winSize.width || inextPoint.x >= J.cols ||

inextPoint.y < -winSize.height || inextPoint.y >= J.rows )

{

if( status )

status[ptidx] = false;

continue;

}

float aa = nextPoint.x - inextPoint.x;

float bb = nextPoint.y - inextPoint.y;

iw00 = cvRound((1.f - aa)*(1.f - bb)*(1 << W_BITS));

iw01 = cvRound(aa*(1.f - bb)*(1 << W_BITS));

iw10 = cvRound((1.f - aa)*bb*(1 << W_BITS));

iw11 = (1 << W_BITS) - iw00 - iw01 - iw10;

float errval = 0.f;

for( y = 0; y < winSize.height; y++ )

{

const uchar* Jptr = J.ptr() + (y + inextPoint.y)*stepJ + inextPoint.x*cn;

const deriv_type* Iptr = IWinBuf.ptr<deriv_type>(y);

for( x = 0; x < winSize.width*cn; x++ )

{

int diff = CV_DESCALE(Jptr[x]*iw00 + Jptr[x+cn]*iw01 +

Jptr[x+stepJ]*iw10 + Jptr[x+stepJ+cn]*iw11,

W_BITS1-5) - Iptr[x];

errval += std::abs((float)diff);

// 累加 abs(I - J)

}

}

err[ptidx] = errval * 1.f/(32*winSize.width*cn*winSize.height);

// 特征点误差值赋平均灰度匹配误差

}

}

}

4495

4495

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?