操作系统配置:

1、为每台机器添加Livy用户,也可通过LDAP添加

| useradd livy -g hadoop |

2、将livy文件夹所属用户改为livy

| chown -R livy:hadoop livy |

3、创建livy的log目录及run目录

| mkdir /var/log/livy mkdir /var/run/livy chown livy:hadoop /var/log/livy chown livy:hadoop /var/run/livy |

4、在KDC节点创建livy的Kerberos信息,并将生成好的keytab文件拷贝到livy的配置文件夹下

| kadmin.local -q "addprinc -randkey livy/your_ip_host0@YOUR REALM.COM" kadmin.local -q "addprinc -randkey HTTP/your_ip_host0@YOUR REALM.COM" kadmin.local -q "xst -k /root/livy.keytab livy/your_ip_host0@YOUR REALM.COM " kadmin.local -q "xst -k /root/spnego.keytab HTTP/your_ip_host0@YOUR REALM.COM |

Livy配置:

修改livy.conf,配置如下属性:

| livy.server.port = 8998 livy.spark.master = yarn livy.spark.deploy-mode = cluster livy.server.session.timeout-check = true livy.server.session.timeout-check.skip-busy = false livy.server.session.timeout = 1h livy.server.session.state-retain.sec = 600s livy.impersonation.enabled = true livy.server.recovery.mode = recovery livy.server.recovery.state-store=filesystem livy.server.recovery.state-store.url=/tmp/livy livy.server.yarn.poll-interval = 20s livy.ui.enabled = true #配置livy服务通过kerberos与Yarn交互 livy.server.launch.kerberos.keytab = /bigdata/livy-0.7.1/conf/livy.keytab livy.server.launch.kerberos.principal = livy/your_ip_host0@YOUR REALM.COM #配置客户端访问Livy需过kerberos,并且会以客户端认证的keytab用户去yarn上提交程序;该配置同样限制了访问livy的webui需要kerberos livy.server.auth.type = kerberos livy.server.auth.kerberos.keytab = /bigdata/livy-0.7.1/conf/livy_http.keytab livy.server.auth.kerberos.principal = HTTP/your_ip_host0@YOUR REALM.COM |

修改HDFS配置:

| CM -> HDFS -> Configuration -> Cluster-wide Advanced Configuration Snippet (Safety Valve) for core-site.xml: hadoop.proxyuser.livy.groups = * hadoop.proxyuser.livy.hosts = * |

启停livy:

| cd /bigdata/livy-0.7.1/bin sudo -u livy ./livy-server start sudo -u livy ./livy-server stop |

Griffin 配置:

1、修改application.properties如下:

| # |

2、修改sparkProperties.json

|

3、修改env\env_batch.json

| { |

4、修改env_streaming.json

| { |

5、修改pom.xml

| <?xml version="1.0" encoding="UTF-8"?> |

6、修改HiveMetaStoreProxy.java类的initHiveMetastoreClient()方法

|

7、修改HiveMetaStoreServiceJdbcImpl.java中的init()方法

|

8、我们的ES是7.*,因此需要修改:

MetricStoreImpl.java

|

ElasticSearchSink.scala

|

9、关于正则的校验,前后端不匹配,前端把正则表达式写死了,不知道这怎么开源出来的,这么多问题。

修改\griffin\ui\angular\src\app\measure\create-measure\pr\pr.component.spec.ts

case "Regular Expression Detection Count": |

10、修改\griffin\ui\angular\dist\main.bundle.js

case "Regular Expression Detection Count": |

11、在mysql建表:(官方给的sql里少建了一张表DATACONNECTOR,气人不?)

| DROP TABLE IF EXISTS QRTZ_FIRED_TRIGGERS; |

12、在idea里的terminal里执行如下maven命令进行编译打包:

|

如果有包下载不下来,就去浏览器去maven下载手动install一下

13、打包完之后,将measure下面的target的measure-0.6.0.jar包上传到HDFS上,并重命名为griffin-measure.jar

我是传到了/griffin/griffin-measure.jar,注意要和前面的配置文件中写的路径一致。hive-site.xml也需要上传,还需要新建checkpoint和persist文件夹。Persist是用来存放metric数据的。

| # hdfs dfs -ls /griffin Found 4 items drwxrwxrwx - livy supergroup 0 2021-06-22 16:26 /griffin/checkpoint -rw-r--r-- 3 admin supergroup 46538594 2021-06-24 15:32 /griffin/griffin-measure.jar -rwxrwxrwx 3 admin supergroup 6982 2021-06-16 17:47 /griffin/hive-site.xml drwxrwxrwx - admin supergroup 0 2021-07-01 14:54 /griffin/persist |

14、至此griffin安装完毕。

15、启动:

可以直接在IDEA中启动进行调试,在服务器的话则是去/bigdata/griffin-0.6.0/service/target

nohup java -jar service-0.6.0.jar > out.log 2>&1 &

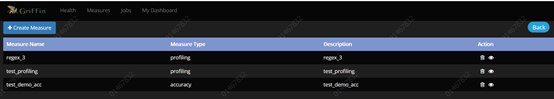

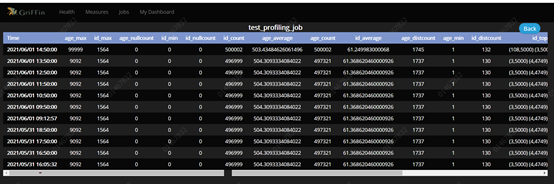

16、新建measure和job:

Accuracy是指测试 源表和目标表的准确率;

Data Profiling是指按一定的规则进行统计的,比如Null的数量、平均值、最大值、去重后的数量、符合正则的数量等。

Publish没发现有啥用;最后一个是自定义,没测试。

17、

18、简单的正则验证一下手机号吧:

19、将measure做成job定时调度(我是每个measure都做了对应的job):

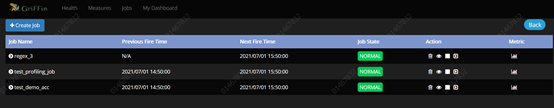

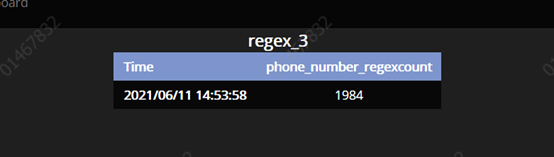

20、运行结果展示:

正则的:

准确率的:

数据统计的:

257

257

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?