Create the storage pool on the Ceph management node

root@ceph-mgr01:~# su - cephadmin

cephadmin@ceph-mgr01:~$ cd ceph-cluster/

Create the storage pool

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph osd pool create luo-rbd-pool1 32 32

pool 'luo-rbd-pool1' created

Enable the rbd application on the pool

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph osd pool application enable luo-rbd-pool1 rbd

Initialize the pool

cephadmin@ceph-mgr01:~/ceph-cluster$ rbd pool init -p luo-rbd-pool1

Create an image

cephadmin@ceph-mgr01:~/ceph-cluster$ rbd create luo-img-img1 --size 3G --pool luo-rbd-pool1 --image-format 2 --image-feature layering

Verify the image

cephadmin@ceph-mgr01:~/ceph-cluster$ rbd ls --pool luo-rbd-pool1

luo-img-img1

Install ceph-common on the master and node hosts

wget -q -O- 'https://mirrors.aliyun.com/ceph/keys/release.asc' | sudo apt-key add - # import the release signing key

Add the repository

sudo apt-add-repository 'deb https://mirrors.aliyun.com/ceph/debian-pacific/ focal main'

root@k8s-node2:~# apt update

root@k8s-master1:~# apt install ceph-common -y

Create a Ceph user and grant permissions

On the Ceph management node, create the luohw-luo user

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph auth get-or-create client.luohw-luo mon 'allow r' osd 'allow * pool=luo-rbd-pool1'

[client.luohw-luo]

key = AQAjfIBk05fFBRAA6dx0HgEw+LeYV0HSoGGnag==

Verify the user

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph auth get client.luohw-luo

Export the user to a keyring file

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph auth get client.luohw-luo -o ceph.client.luohw-luo.keyring

# Copy the keyring file to every k8s master and node

cephadmin@ceph-mgr01:~/ceph-cluster$ for i in $(seq 224 229); do scp ceph.client.luohw-luo.keyring root@192.168.1.$i:/etc/ceph; done

Configure name resolution on the k8s nodes by adding the Ceph mon hostnames to each node's hosts file

192.168.1.93 ceph-mon01.luohw.net ceph-mon01

192.168.1.94 ceph-mon02.luohw.net ceph-mon02

192.168.1.95 ceph-mon03.luohw.net ceph-mon03
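The mon entries can be added without creating duplicates when the step is repeated on each node. This is a minimal sketch, not the author's procedure: `add_host_entry` and `HOSTS_FILE` are hypothetical names introduced here, the third hostname is assumed to be ceph-mon03.luohw.net, and the default target is a scratch file so the snippet can be tried without touching /etc/hosts.

```shell
#!/bin/sh
# HOSTS_FILE is a hypothetical variable; set HOSTS_FILE=/etc/hosts for real use.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# Hypothetical helper: append "IP FQDN SHORTNAME" only when the FQDN is not
# already listed, so running it twice leaves a single entry.
add_host_entry() {
  # $1 = IP address, $2 = fully qualified name, $3 = short name
  if ! grep -Fwq -- "$2" "$HOSTS_FILE" 2>/dev/null; then
    printf '%s %s %s\n' "$1" "$2" "$3" >> "$HOSTS_FILE"
  fi
}

add_host_entry 192.168.1.93 ceph-mon01.luohw.net ceph-mon01
add_host_entry 192.168.1.94 ceph-mon02.luohw.net ceph-mon02
add_host_entry 192.168.1.95 ceph-mon03.luohw.net ceph-mon03
```

Run it as root with HOSTS_FILE=/etc/hosts on each master and node once the names look right.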

Mount RBD using the keyring file

Create the YAML file

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox
    command:
    - sleep
    - "3600"
    imagePullPolicy: Always
    name: busybox
    #restartPolicy: Always
    volumeMounts:
    - name: rbd-data1
      mountPath: /data
  volumes:
  - name: rbd-data1
    rbd:
      monitors: # Ceph mon addresses
      - '192.168.1.93:6789'
      - '192.168.1.94:6789'
      - '192.168.1.95:6789'
      pool: luo-rbd-pool1 # storage pool
      image: luo-img-img1 # image to mount
      fsType: ext4 # filesystem used to format the image
      readOnly: false # whether to mount read-only
      user: luohw-luo # Ceph user used for authentication
      keyring: /etc/ceph/ceph.client.luohw-luo.keyring # keyring file; must exist on the node

Apply the YAML file

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl apply -f case1-busybox-keyring.yaml

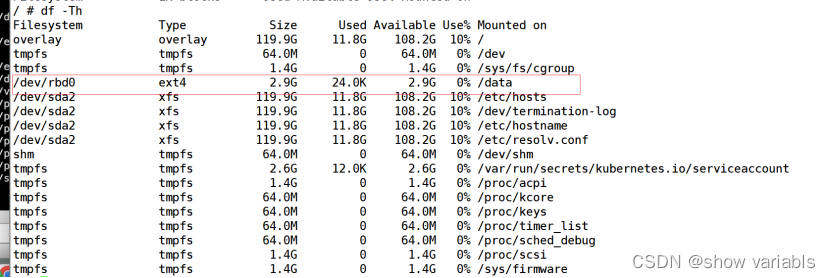

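Enter the container and confirm that the volume is mounted

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl exec -it busybox -- sh

How the mount works: the RBD image is mapped and mounted on the host first, and that host mount point is then bind-mounted into the container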

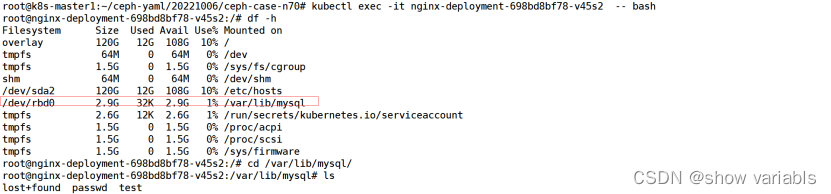
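Because the image is mounted on the host before it reaches the container, the mount can also be confirmed from the node that runs the pod. The sketch below filters a mount table for RBD block devices; `list_rbd_mounts` is a hypothetical helper introduced here, and it only assumes that mapped images appear as /dev/rbdN devices.

```shell
#!/bin/sh
# Hypothetical helper: print "device mountpoint" for every mounted RBD device.
# It reads mount-table text (same layout as /proc/mounts) from stdin.
list_rbd_mounts() {
  awk '$1 ~ /^\/dev\/rbd[0-9]+/ { print $1, $2 }'
}

# On the k8s node that runs the pod:
#   list_rbd_mounts < /proc/mounts
```

An empty result on a node means no RBD image is currently mounted there.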

Mount directly using the keyring file: nginx

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# vi case2-nginx-keyring.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        #image: mysql:5.6.46
        # env:
        # Use secret in real usage
        # - name: MYSQL_ROOT_PASSWORD
        #   value: 123456
        ports:
        - containerPort: 80
        volumeMounts:
        - name: rbd-data1
          #mountPath: /data
          mountPath: /var/lib/mysql
      volumes:
      - name: rbd-data1
        rbd:
          monitors:
          - '192.168.1.93:6789'
          - '192.168.1.94:6789'
          - '192.168.1.95:6789'
          pool: luo-rbd-pool1
          image: luo-img-img1
          fsType: ext4
          readOnly: false
          user: luohw-luo
          keyring: /etc/ceph/ceph.client.luohw-luo.keyring

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-698bd8bf78-v45s2 1/1 Running 0 94s

Mount RBD using a Secret

Create the Secret

Show the key of the luohw-luo user

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph auth print-key client.luohw-luo

AQAjfIBk05fFBRAA6dx0HgEw+LeYV0HSoGGnag==

Base64-encode the key

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph auth print-key client.luohw-luo |base64

QVFBamZJQmswNWZGQlJBQTZkeDBIZ0V3K0xlWVYwSFNvR0duYWc9PQ==
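The encoding step works here because `ceph auth print-key` prints the key without a trailing newline. When the key is pasted by hand instead, the same result needs `printf '%s'` rather than `echo`, which would add a newline to the encoded value. A minimal sketch, where `encode_ceph_key` is a hypothetical helper introduced for illustration:

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a Ceph key exactly as given.
# printf '%s' emits no trailing newline, so only the key bytes are encoded.
encode_ceph_key() {
  printf '%s' "$1" | base64
}

encode_ceph_key 'AQAjfIBk05fFBRAA6dx0HgEw+LeYV0HSoGGnag=='
# -> QVFBamZJQmswNWZGQlJBQTZkeDBIZ0V3K0xlWVYwSFNvR0duYWc9PQ==
```

The output matches the value produced by piping `ceph auth print-key` into `base64`.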

Create the Secret YAML file

#cat case3-secret-client-shijie.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-luohw-luo
type: "kubernetes.io/rbd"
data:
  key: QVFBamZJQmswNWZGQlJBQTZkeDBIZ0V3K0xlWVYwSFNvR0duYWc9PQ==

Apply the file and view the Secrets

kubectl apply -f case3-secret-client-shijie.yaml

Verify the Secret

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl get secrets

NAME TYPE DATA AGE

ceph-secret-luohw-luo kubernetes.io/rbd 1 91s

Deploy nginx

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# vi case4-nginx-secret.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: rbd-data1
          mountPath: /usr/share/nginx/html/rbd
      volumes:
      - name: rbd-data1
        rbd:
          monitors:
          - '192.168.1.93:6789'
          - '192.168.1.94:6789'
          - '192.168.1.95:6789'
          pool: luo-rbd-pool1
          image: luo-img-img1
          fsType: ext4
          readOnly: false
          user: luohw-luo
          secretRef:
            name: ceph-secret-luohw-luo

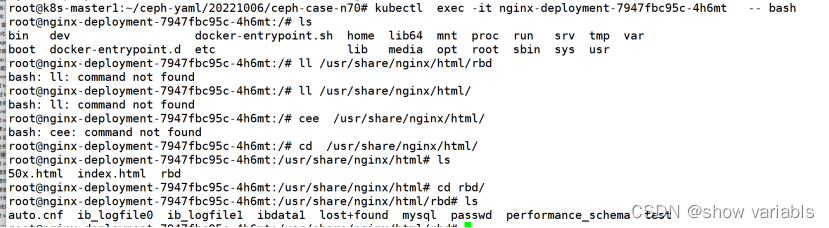

Create and apply the file; the pod runs normally

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl apply -f case4-nginx-secret.yaml

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-7947fbc95c-4h6mt 1/1 Running 0 25s

Dynamic volume provisioning

Use a Secret for the admin user

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph auth print-key client.admin |base64

QVFBSTFuRmszUWdmTGhBQVV1OFpUR3U4cnhIUkc1NXZIVlV5dXc9PQ==

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# cat case5-secret-admin.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-admin
type: "kubernetes.io/rbd"
data:
  key: QVFBSTFuRmszUWdmTGhBQVV1OFpUR3U4cnhIUkc1NXZIVlV5dXc9PQ==

Create the Ceph admin user Secret and verify it

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl apply -f case5-secret-admin.yaml

secret/ceph-secret-admin created

View it

kubectl get secret

Create the storage class:

Create a dynamic storage class that provisions PVCs for pods on demand

Storage class YAML

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-storage-class-luo
  annotations:
    storageclass.kubernetes.io/is-default-class: "false" # whether this is the default storage class
provisioner: kubernetes.io/rbd
parameters:
  monitors: 192.168.1.93:6789,192.168.1.94:6789,192.168.1.95:6789
  adminId: admin
  adminSecretName: ceph-secret-admin # Secret holding the admin key
  adminSecretNamespace: default # namespace of the admin Secret
  pool: luo-rbd-pool1 # storage pool
  userId: luohw-luo # regular user
  userSecretName: ceph-secret-luohw-luo # Secret holding the regular user's key

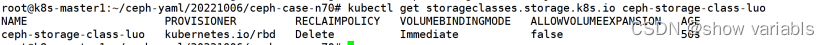
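Unlike the pod volume definitions, which list the monitors as a YAML sequence, the storage class takes them as one comma-separated string. A small sketch of building that string from the mon addresses; `join_monitors` is a hypothetical helper introduced here, not part of kubectl or Ceph.

```shell
#!/bin/sh
# Hypothetical helper: join mon IPs into the "ip:port,ip:port" form used by
# the StorageClass `monitors` parameter.
# usage: join_monitors PORT IP [IP...]
join_monitors() {
  jm_port="$1"; shift
  jm_out=""
  for jm_ip in "$@"; do
    # add a comma only when something is already in the list
    jm_out="${jm_out}${jm_out:+,}${jm_ip}:${jm_port}"
  done
  printf '%s\n' "$jm_out"
}

join_monitors 6789 192.168.1.93 192.168.1.94 192.168.1.95
# -> 192.168.1.93:6789,192.168.1.94:6789,192.168.1.95:6789
```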

Create and view it

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl apply -f case6-ceph-storage-class.yaml

.

.

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl get storageclasses.storage.k8s.io ceph-storage-class-luo

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

ceph-storage-class-luo kubernetes.io/rbd Delete Immediate false 50s

Create a PVC based on the storage class:

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# vi case7-mysql-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-data-pvc
spec:
  accessModes: # access mode
  - ReadWriteOnce # mounted read-write by a single node
  storageClassName: ceph-storage-class-luo
  resources:
    requests:
      storage: '5Gi'

Create the PVC by applying case7-mysql-pvc.yaml with kubectl apply -f

Verify the PV and PVC:

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl get pv

pvc-4f86b543-cd95-4d65-aaf0-db4e01f9cb85 5Gi RWO Delete Bound default/mysql-data-pvc ceph-storage-class-luo 21s

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-data-pvc Bound pvc-4f86b543-cd95-4d65-aaf0-db4e01f9cb85 5Gi RWO ceph-storage-class-luo 57s
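Provisioning can take a few seconds, so scripts that create the claim and then start a workload may want to wait until the claim reports Bound. The sketch below polls a command until it prints an expected value; `wait_for_output` is a hypothetical helper introduced here, and the kubectl line in the comment shows one way it could be pointed at this claim.

```shell
#!/bin/sh
# Hypothetical helper: run a command repeatedly until it prints WANTED.
# usage: wait_for_output WANTED TRIES DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 on a match, 1 when the tries are used up.
wait_for_output() {
  wfo_want="$1"; wfo_tries="$2"; wfo_delay="$3"; shift 3
  while [ "$wfo_tries" -gt 0 ]; do
    if [ "$("$@" 2>/dev/null)" = "$wfo_want" ]; then
      return 0
    fi
    wfo_tries=$((wfo_tries - 1))
    sleep "$wfo_delay"
  done
  return 1
}

# Against the claim above (up to 30 tries, 2 seconds apart):
#   wait_for_output Bound 30 2 \
#     kubectl get pvc mysql-data-pvc -o jsonpath='{.status.phase}'
```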

On the Ceph node, the image has been created automatically

cephadmin@ceph-mgr01:~/ceph-cluster$ rbd ls --pool luo-rbd-pool1

kubernetes-dynamic-pvc-71dfeae0-97c6-43f0-8dc7-6e310393aaae

luo-img-img1

Run a single-instance MySQL and verify it

Single-instance MySQL YAML file:

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# cat case8-mysql-single.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: mysql:5.6.46
        name: mysql
        env:
        # Use secret in real usage
        - name: MYSQL_ROOT_PASSWORD
          value: magedu123456
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-data-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: mysql-service-label
  name: mysql-service
spec:
  type: NodePort
  ports:
  - name: http
    port: 3306
    protocol: TCP
    targetPort: 3306
    nodePort: 33306
  selector:
    app: mysql
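The Service requests nodePort 33306. Kubernetes allocates NodePorts from 30000-32767 unless the API server is started with a different --service-node-port-range, so this manifest presumably relies on a cluster with an extended range. A quick check, where `in_nodeport_range` is a hypothetical helper introduced here:

```shell
#!/bin/sh
# Hypothetical helper: succeed when PORT lies inside the NodePort range.
# usage: in_nodeport_range PORT [MIN] [MAX]   (defaults: 30000 32767)
in_nodeport_range() {
  np_port="$1"; np_min="${2:-30000}"; np_max="${3:-32767}"
  [ "$np_port" -ge "$np_min" ] && [ "$np_port" -le "$np_max" ]
}

if in_nodeport_range 33306; then
  echo "33306 fits the default range"
else
  echo "33306 needs an extended --service-node-port-range"
fi
```

On a cluster with the default range, applying the Service with this nodePort is rejected by the API server.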

Create the MySQL pod:

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# kubectl apply -f case8-mysql-single.yaml

Verify MySQL access:

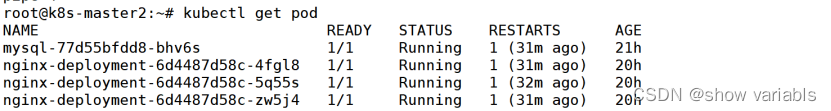

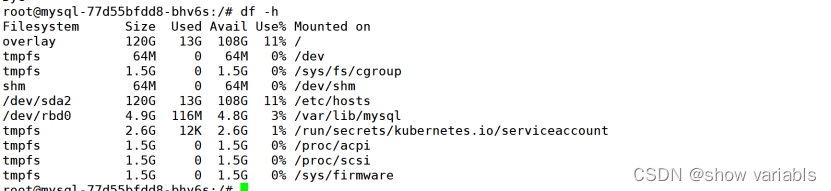

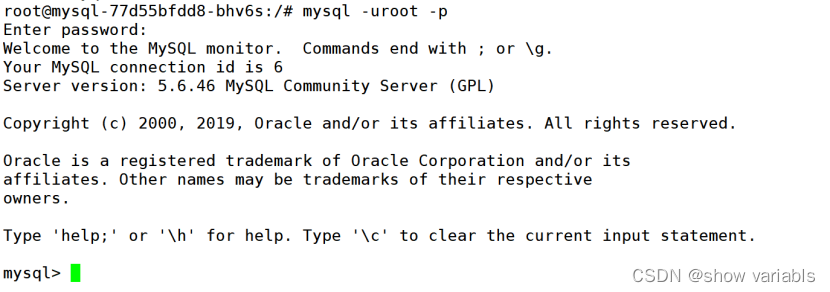

root@k8s-master2:~# kubectl exec -it mysql-77d55bfdd8-bhv6s -- bash

root@mysql-77d55bfdd8-bhv6s:/# mysql -uroot -p

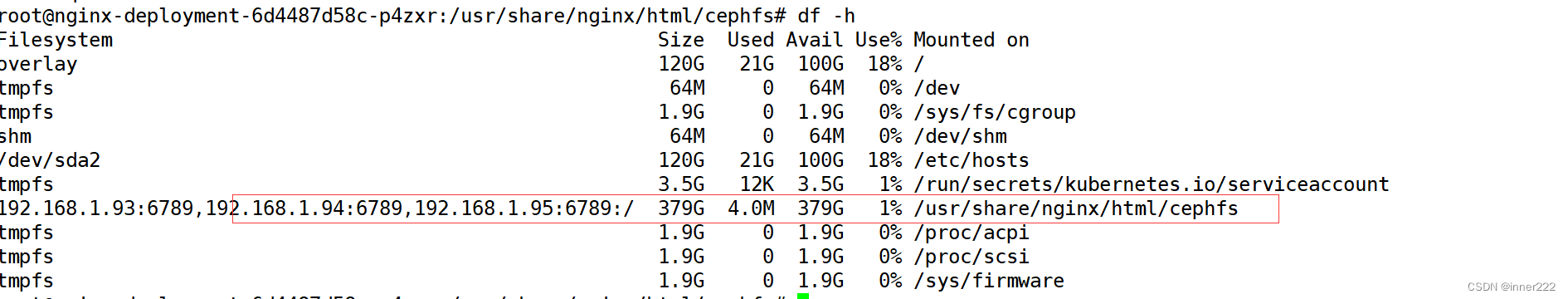

Using CephFS from k8s

Pods in k8s mount Ceph's CephFS shared storage to give workloads shared, persistent, high-performance, and highly available data

Create the pod:

root@k8s-master1:~/ceph-yaml/20221006/ceph-case-n70# cat case9-nginx-cephfs.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: magedu-staticdata-cephfs
          mountPath: /usr/share/nginx/html/cephfs
      volumes:
      - name: magedu-staticdata-cephfs
        cephfs:
          monitors:
          - '192.168.1.93:6789'
          - '192.168.1.94:6789'
          - '192.168.1.95:6789'
          path: /
          user: admin
          secretRef:
            name: ceph-secret-admin

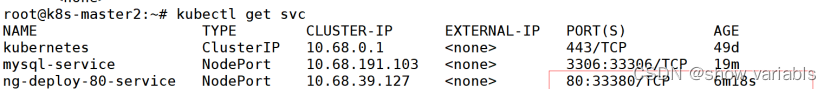

---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: ng-deploy-80-service-label
  name: ng-deploy-80-service
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 33380
  selector:
    app: ng-deploy-80

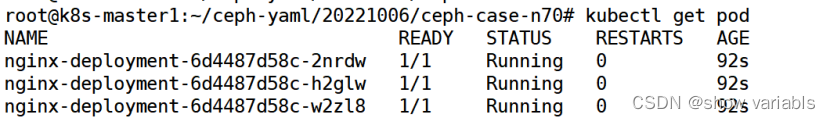

Apply the YAML and check the pod status

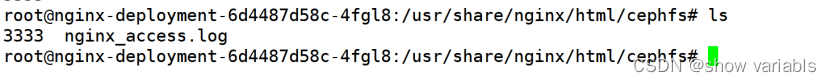

Enter the container and check the mounted CephFS

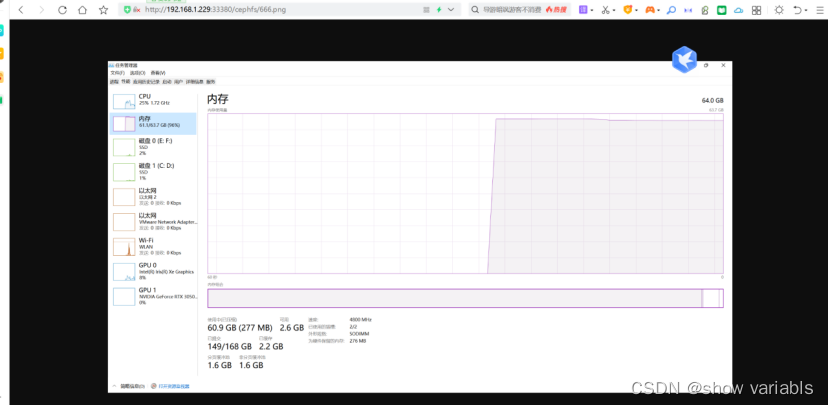

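The site is reachable through the k8s Service NodePort

A client outside the k8s cluster (192.168.11.51) mounts CephFS and uploads an image; the file is then visible inside the k8s containers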

[root@node01 ~]# mount -t ceph 192.168.1.91:6789,192.168.1.92:6789,192.168.1.93:6789:/ /cephfs/ -o name=luo,secret=AQDNbXxkNEb7GhAAfxgYvAy068t+KVcqE2wR9

[root@node01 ~]# cd /cephfs/

[root@node01 cephfs]# ls

3333 nginx_access.log

[root@node01 cephfs]# ls

3333 666.png nginx_access.log

The uploaded file can be accessed from k8s
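The mount command above passes the key on the command line, where it ends up in shell history and the process list. mount.ceph also accepts a `secretfile=` option that reads the key from a file. A minimal sketch of preparing such a file; `write_secret_file`, the path /etc/ceph/luo.secret, and the KEY variable are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: store a CephFS key in a file only its owner can read.
# usage: write_secret_file PATH KEY
write_secret_file() {
  wsf_path="$1"; wsf_key="$2"
  # umask 077 covers a newly created file; chmod covers a pre-existing one
  ( umask 077; printf '%s\n' "$wsf_key" > "$wsf_path" ) && chmod 600 "$wsf_path"
}

# Example (KEY holds the key of client.luo; the path is an assumption):
#   write_secret_file /etc/ceph/luo.secret "$KEY"
#   mount -t ceph 192.168.1.91:6789,192.168.1.92:6789,192.168.1.93:6789:/ /cephfs/ \
#     -o name=luo,secretfile=/etc/ceph/luo.secret
```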
