Spark SQL throws the following error when reading a Hive table. My statement is very simple:

```scala
val df: DataFrame = sqlContext.sql("select vipId,brandId,dt from pro60050.browse_wxapp_page_dt_partition where dt >= 20200227 and brandId = 253")

df.show()
```

```
20/03/02 17:50:12 ERROR ApplicationMaster: User class threw exception: java.lang.RuntimeException: serious problem
java.lang.RuntimeException: serious problem
	at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.generateSplitsInfo(OrcInputFormat.java:1021)
	at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.getSplits(OrcInputFormat.java:1048)
	at org.apache.spark.rdd.HadoopRDD.getPartitions(HadoopRDD.scala:199)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.rdd.UnionRDD$$anonfun$1.apply(UnionRDD.scala:84)
	at org.apache.spark.rdd.UnionRDD$$anonfun$1.apply(UnionRDD.scala:84)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.immutable.List.foreach(List.scala:381)
	at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
	at scala.collection.immutable.List.map(List.scala:285)
	at org.apache.spark.rdd.UnionRDD.getPartitions(UnionRDD.scala:84)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)
	at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)
	at org.apache.spark.sql.execution.SparkPlan.executeTake(SparkPlan.scala:314)
	at org.apache.spark.sql.execution.CollectLimitExec.executeCollect(limit.scala:38)
	at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$$collectFromPlan(Dataset.scala:2861)
	at org.apache.spark.sql.Dataset$$anonfun$head$1.apply(Dataset.scala:2150)
	at org.apache.spark.sql.Dataset$$anonfun$head$1.apply(Dataset.scala:2150)
	at org.apache.spark.sql.Dataset$$anonfun$55.apply(Dataset.scala:2842)
	at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:65)
	at org.apache.spark.sql.Dataset.withAction(Dataset.scala:2841)
	at org.apache.spark.sql.Dataset.head(Dataset.scala:2150)
	at org.apache.spark.sql.Dataset.take(Dataset.scala:2363)
	at org.apache.spark.sql.Dataset.showString(Dataset.scala:241)
	at org.apache.spark.sql.Dataset.show(Dataset.scala:637)
	at org.apache.spark.sql.Dataset.show(Dataset.scala:596)
	at org.apache.spark.sql.Dataset.show(Dataset.scala:605)
	at ezr.bigdata.spark.hive.job.VipFirstSaleTest$.main(VipFirstSaleTest.scala:51)
	at ezr.bigdata.spark.hive.job.VipFirstSaleTest.main(VipFirstSaleTest.scala)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:635)
Caused by: java.lang.NullPointerException
	at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$BISplitStrategy.getSplits(OrcInputFormat.java:560)
	at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.generateSplitsInfo(OrcInputFormat.java:1010)
	... 64 more
```

Problem analysis: I searched Baidu and Google.

1. I got the idea from this article: https://blog.csdn.net/wuzhilon88/article/details/100524131

2. Next I checked the structure of my table. It is indeed stored as ORC:

pro60050.browse_wxapp_page_dt_partition

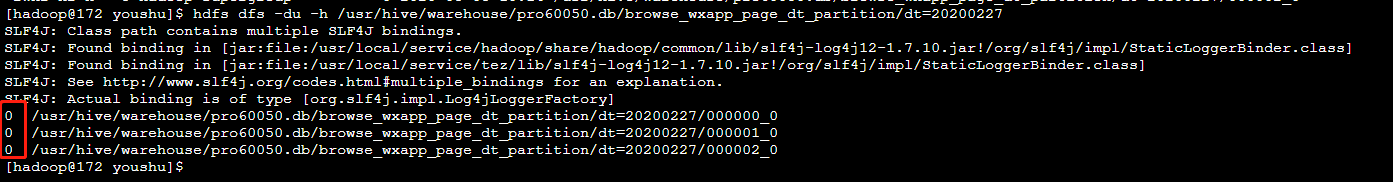

3. Look for partition directories that contain empty files.

Some partition directories do contain empty (0-byte) files:

```
0 /usr/hive/warehouse/pro60050.db/browse_wxapp_page_dt_partition/dt=20200227/000000_0
0 /usr/hive/warehouse/pro60050.db/browse_wxapp_page_dt_partition/dt=20200227/000001_0
0 /usr/hive/warehouse/pro60050.db/browse_wxapp_page_dt_partition/dt=20200227/000002_0
```
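You can also find the zero-length files from Scala instead of reading `hdfs dfs -du` output by eye. The sketch below makes a few assumptions:

- hadoop-common is on the classpath, as it is in any Spark job.
- It uses only `FileSystem.listFiles` and `FileStatus.getLen`.
- The object name `EmptyFileFinder` is hypothetical.

Inside a Spark application, pass `spark.sparkContext.hadoopConfiguration` rather than a fresh `Configuration`, so the cluster's HDFS settings are used.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, LocatedFileStatus, Path, RemoteIterator}

import scala.collection.mutable.ListBuffer

// Hypothetical helper: lists every 0-byte file under a table or partition directory.
object EmptyFileFinder {

  /** Recursively walks `root` and returns the paths of all zero-length files. */
  def findEmptyFiles(root: Path, conf: Configuration): List[Path] = {
    val fs: FileSystem = root.getFileSystem(conf)
    // recursive = true descends into every dt=... partition subdirectory
    val files: RemoteIterator[LocatedFileStatus] = fs.listFiles(root, true)
    val empty = ListBuffer.empty[Path]
    while (files.hasNext) {
      val status = files.next()
      if (status.getLen == 0L) empty += status.getPath
    }
    empty.toList
  }

  def main(args: Array[String]): Unit = {
    // Defaults to the table location from the listing in this post
    val root = new Path(args.headOption.getOrElse(
      "/usr/hive/warehouse/pro60050.db/browse_wxapp_page_dt_partition"))
    findEmptyFiles(root, new Configuration()).foreach(p => println(s"0 $p"))
  }
}
```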

4. Trace back the logic that loads data into this table:

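I found the Hive `insert overwrite` statement that loads the table. It is a static partition insert, meaning the target partition is fixed in the statement:

```sql
insert overwrite table pro60050.browse_wxapp_page_dt_partition partition(dt=20200227)
select `(dt)?+.+` from hivedb.hiveTableName where dt=20200227 and brandId in (253);
```

The source table (hivedb.hiveTableName) holds data for many other brand IDs on 20200227. The select launched 3 map tasks to filter brand 253 out of that day's data. Brand 253 happened to have no data that day, so the 3 map tasks wrote 3 empty files.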
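Here is a toy model of why three map tasks left three empty files. It assumes, as observed in this step, that each map task of the `insert overwrite` writes exactly one output file whether or not any row passed its filter. The `Row` type and the brand IDs in `main` are made up for illustration.

```scala
// Toy model, not Hive code: one output file per map task, possibly empty.
object MapOutputModel {

  /** Hypothetical row type holding only the columns the filter needs. */
  final case class Row(brandId: Int, dt: Int)

  /** Each map task filters its own input split and writes one file;
    * returns the number of rows that end up in each output file. */
  def outputFileRowCounts(splits: Seq[Seq[Row]], keep: Row => Boolean): Seq[Int] =
    splits.map(_.count(keep))

  def main(args: Array[String]): Unit = {
    // Three input splits for dt=20200227, none containing brandId 253
    val splits = Seq(
      Seq(Row(101, 20200227), Row(102, 20200227)),
      Seq(Row(103, 20200227)),
      Seq(Row(104, 20200227), Row(101, 20200227))
    )
    val counts = outputFileRowCounts(splits, r => r.dt == 20200227 && r.brandId == 253)
    println(s"files written: ${counts.size}, empty files: ${counts.count(_ == 0)}")
  }
}
```

The number of output files follows the number of map tasks, not the number of matching rows. That is why changing the filter alone cannot prevent the empty files.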

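5. I tried adding settings to the `insert overwrite` statement to stop empty files from being generated.

This failed. The empty files are small files produced one per map task. A select query always launches a MapReduce job, so the map stage, and its per-task output files, cannot be avoided.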

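6. Since I could not avoid the empty files, I decided to control how the Hive data is read from within the Spark code.

A colleague and I worked on this together.

We had already traced the problem to the empty files in the partition, so all we needed was a way to read a partition containing empty files without failing.

The article that best matched my situation is this one: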

https://www.cnblogs.com/barneywill/p/10142244.html

The HYBRID mode reads the footers for all files if there are fewer files than expected mapper count, switching over to generating 1 split per file if the average file sizes are smaller than the default HDFS blocksize. ETL strategy always reads the ORC footers before generating splits, while the BI strategy generates per-file splits fast without reading any data from HDFS.

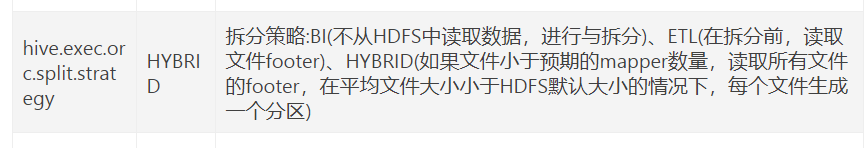

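So `hive.exec.orc.split.strategy` defaults to HYBRID. Under HYBRID, when the condition

```java
if (avgFileSize > context.maxSize) {
```

is not met, Hive falls back to the BI strategy:

```java
splitStrategy = new BISplitStrategy(context, fs, dir, children, isOriginal, deltas,
    covered);
```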

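In other words, the default HYBRID mode creates one split per file when the average file size is small. The Hive documentation compares it with the default HDFS block size, while the code compares it with `context.maxSize`.

My partition's files never reached that size, so they were read one split per file. The empty files were read too, which raised the `Caused by: java.lang.NullPointerException`.

When the average file size exceeds the threshold, Hive switches to the ETL strategy instead. ETL reads the ORC footers and splits the files by their contents.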
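Here is a simplified Scala model of the strategy choice described in this step. It makes two assumptions:

- The `avgFileSize > context.maxSize` check is the only thing HYBRID looks at.
- The threshold is the 256 MB default quoted in the addendum.

This is not Hive's code. It only shows why a partition of 0-byte files always ends up on BI under HYBRID, and why forcing ETL changes that.

```scala
// Simplified model of Hive's ORC split-strategy selection (illustration only).
object OrcSplitStrategyModel {
  sealed trait Strategy
  case object BI extends Strategy
  case object ETL extends Strategy
  case object HYBRID extends Strategy

  /** Default maximum split size quoted in the addendum: 256 MB. */
  val DefaultMaxSplitSize: Long = 256L * 1024 * 1024

  /** HYBRID compares the average file size of a directory with maxSize;
    * BI and ETL are used exactly as configured. */
  def effectiveStrategy(configured: Strategy,
                        fileSizes: Seq[Long],
                        maxSize: Long = DefaultMaxSplitSize): Strategy =
    configured match {
      case HYBRID =>
        val avgFileSize = if (fileSizes.isEmpty) 0L else fileSizes.sum / fileSizes.size
        if (avgFileSize > maxSize) ETL else BI
      case fixed => fixed
    }
}
```

For the partition in question, `effectiveStrategy(HYBRID, Seq(0L, 0L, 0L))` yields `BI`, while `effectiveStrategy(ETL, Seq(0L, 0L, 0L))` stays `ETL`.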

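Once the split strategy is understood, the fix is to stop using HYBRID mode: split by file contents (ETL) instead of creating one split per file.

In Hive this is `set hive.exec.orc.split.strategy=ETL`, and I applied the same setting in the Spark code.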
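The post does not preserve the exact Spark code used for this setting. Below is a minimal sketch, assuming Spark 2.x with Hive support. It shows two ways to set the key:

- Properties prefixed with `spark.hadoop.` are copied, without the prefix, into the SparkContext's Hadoop `Configuration`. This only happens when the builder actually creates the context.
- `sqlContext.setConf` sets the same key at session level. As far as I know, Spark 2.x copies session-level settings into the Hadoop configuration it uses for Hive table scans.

The object name is hypothetical; the query is the one from the top of the post.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical job showing two ways to force the ETL ORC split strategy.
object OrcEtlSplitExample {

  /** Adds the ETL split strategy to the Hadoop configuration via the spark.hadoop. prefix. */
  def buildSession(builder: SparkSession.Builder): SparkSession =
    builder
      .config("spark.hadoop.hive.exec.orc.split.strategy", "ETL")
      .getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = buildSession(
      SparkSession.builder().appName("orc-etl-split").enableHiveSupport())

    // Session-level alternative, equivalent to "set hive.exec.orc.split.strategy=ETL"
    spark.sqlContext.setConf("hive.exec.orc.split.strategy", "ETL")

    val df: DataFrame = spark.sql(
      "select vipId,brandId,dt from pro60050.browse_wxapp_page_dt_partition " +
        "where dt >= 20200227 and brandId = 253")
    df.show()
    spark.stop()
  }
}
```

Either setting on its own should be enough. The builder form applies to every query in the application, while `setConf` can be changed per session.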

After that, the problem was solved.

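Addendum:

`hive.exec.orc.split.strategy` controls how splits are generated when reading an ORC table. There are three strategies:

- BI creates splits at file granularity.
- ETL cuts files up, with several stripes forming one split.
- HYBRID uses ETL when the average file size is larger than Hadoop's maximum split size (256 * 1024 * 1024 bytes by default), and BI otherwise.

Some large ORC tables have large footers. For these, the ETL strategy may pull a lot of data from HDFS just to compute the splits, and can even cause an OOM on the driver, so BI is recommended. For smaller tables, especially skewed ones, ETL is recommended. Skew here means many stripes stored in a few files.

In addition, `spark.hadoop.mapreduce.input.fileinputformat.split.minsize` controls how stripes are merged when ORC files are split. When the combined size of several stripes is below this value, they are merged into a single task. Lowering it increases read parallelism on ORC tables.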
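To illustrate the last point of the addendum, here is a greedy simplification in which consecutive stripes are packed into one split until the split reaches `minSize`. It is an assumption-level model, not Hive's ETL split generator, which also accounts for HDFS block boundaries and the maximum split size. It only shows why a smaller `minsize` yields more splits, and therefore more tasks.

```scala
// Simplified illustration of stripe merging controlled by a minimum split size.
object StripeGroupingModel {

  /** Packs consecutive stripe sizes into splits; a split is closed as soon as
    * its total size reaches minSize. Leftover stripes form a final smaller split. */
  def groupStripes(stripeSizes: Seq[Long], minSize: Long): Seq[Seq[Long]] = {
    val splits = Vector.newBuilder[Seq[Long]]
    var current = Vector.empty[Long]
    var currentSize = 0L
    for (size <- stripeSizes) {
      current :+= size
      currentSize += size
      if (currentSize >= minSize) {
        splits += current
        current = Vector.empty
        currentSize = 0L
      }
    }
    if (current.nonEmpty) splits += current
    splits.result()
  }
}
```

With four 64 MB stripes, `minSize = 128 MB` gives 2 splits, while `minSize = 64 MB` gives 4.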
