
1 Download the Hive package

http://ftp.twaren.net/Unix/Web/apache/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz
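The mirror above may no longer host this release. Here is a minimal bash sketch that assumes the Apache archive keeps old releases under `https://archive.apache.org/dist/hive/hive-<version>/`. The `HIVE_MIRROR` variable and both function names are hypothetical:

```bash
# Assumption: releases live under <mirror>/hive-<version>/ on the Apache archive.
HIVE_MIRROR=${HIVE_MIRROR:-https://archive.apache.org/dist/hive}

# Print the tarball URL for a Hive binary release (hypothetical helper)
hive_tarball_url() {
    local version=$1
    echo "${HIVE_MIRROR}/hive-${version}/apache-hive-${version}-bin.tar.gz"
}

# Download the tarball into the current directory; -c resumes a partial download
download_hive() {
    wget -c "$(hive_tarball_url "$1")"
}
```

Usage: `download_hive 3.1.2`. Point `HIVE_MIRROR` at a closer mirror if one is available.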

2 Upload and extract

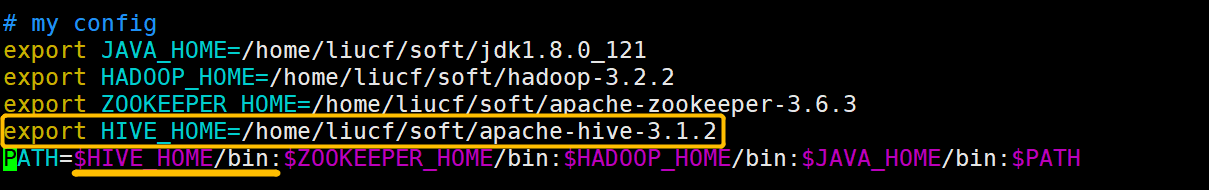

[liucf@node1 softfile]$ tar -zxf apache-hive-3.1.2-bin.tar.gz -C ../soft

3 Configure the HIVE_HOME environment variable

sudo vim /etc/profile
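The lines added to /etc/profile are not shown here. A typical setup exports `HIVE_HOME` and appends `$HIVE_HOME/bin` to `PATH`, and the sketch below assumes exactly those two lines. It appends them only once; `append_hive_env` is a hypothetical helper:

```bash
# Assumption: the profile needs exactly these two lines.
append_hive_env() {
    local profile=$1 hive_home=$2
    # Skip if a HIVE_HOME export is already there, so re-running is harmless
    if grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
        return 0
    fi
    {
        echo "export HIVE_HOME=${hive_home}"
        echo 'export PATH=$PATH:$HIVE_HOME/bin'   # single quotes: written literally
    } >> "$profile"
}
```

Writing /etc/profile requires root, so run it from a root shell (for example after `sudo -i`), e.g. `append_hive_env /etc/profile /home/liucf/soft/apache-hive-3.1.2`, and then `source /etc/profile`.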

Optionally, rename the extracted directory to something tidier:

[liucf@node1 soft]$ mv apache-hive-3.1.2-bin apache-hive-3.1.2

4 Modify the configuration

4.1 Edit hive-env.sh

In the Hive installation directory, go to the conf directory (mine is /home/liucf/soft/apache-hive-3.1.2/conf) and create hive-env.sh from its template:

[liucf@node1 conf]$ cp hive-env.sh.template hive-env.sh

Edit hive-env.sh and set HADOOP_HOME and HIVE_CONF_DIR (JAVA_HOME is optional):

# Set HADOOP_HOME to point to a specific hadoop install directory

# HADOOP_HOME=${bin}/../../hadoop

export HADOOP_HOME=/home/liucf/soft/hadoop-3.2.2

# Hive Configuration Directory can be controlled by:

# export HIVE_CONF_DIR=

export HIVE_CONF_DIR=/home/liucf/soft/apache-hive-3.1.2/conf

4.2 Create hive-site.xml

Create a new file named hive-site.xml in /home/liucf/soft/apache-hive-3.1.2/conf.

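Copy the following content into the new file. Replace the MySQL host in the javax.jdo.option.ConnectionURL value with your own, and replace the MySQL username and password. Inside XML, the & in the URL must be written as &amp;.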

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.109.151:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Lcf#123456</value>

<description>password to use against metastore database</description>

</property>

<!-- Host and port of the metastore service -->

<property>

<name>hive.metastore.uris</name>

<value>thrift://node1:9083</value>

</property>

</configuration>
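To avoid hand-editing mistakes such as an unescaped `&`, the same file can be generated from variables. This is a minimal sketch. `MYSQL_HOST`, `MYSQL_USER`, `MYSQL_PASS` and `METASTORE_HOST` are hypothetical variable names whose defaults mirror this article, except the password, which is a placeholder. `xml_escape` is likewise a hypothetical helper:

```bash
# Escape the characters that are special inside XML text
xml_escape() {
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Write a minimal hive-site.xml to the given path
write_hive_site() {
    local out=$1 mysql_user mysql_pass
    local mysql_host=${MYSQL_HOST:-192.168.109.151}
    local metastore_host=${METASTORE_HOST:-node1}
    mysql_user=$(xml_escape "${MYSQL_USER:-root}")
    mysql_pass=$(xml_escape "${MYSQL_PASS:-changeme}")   # placeholder password
    # Note the '&amp;' in the URL: a bare '&' would make the XML unparseable
    cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://${mysql_host}:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>${mysql_user}</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>${mysql_pass}</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://${metastore_host}:9083</value>
  </property>
</configuration>
EOF
}
```

Usage: `MYSQL_PASS='your-password' write_hive_site /home/liucf/soft/apache-hive-3.1.2/conf/hive-site.xml`.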

4.3 Create hive-default.xml from its template

cp hive-default.xml.template hive-default.xml

5 Copy the MySQL driver and create the metastore database in MySQL

5.1 Copy the MySQL JDBC driver

[liucf@node1 lib]$ cp mysql-connector-java-5.1.49.jar /home/liucf/soft/apache-hive-3.1.2/lib/

5.2 Create the MySQL database for the metastore

create database hive; # this database is the "hive" in 192.168.109.151:3306/hive of javax.jdo.option.ConnectionURL in hive-site.xml; it stores Hive's metadata

6 Start the Hadoop processes

Start them in whatever way suits your environment.

I wrapped my startup commands in a script called hadoop_start_button.sh, so my commands are:

sh hadoop_start_button.sh starthdfs

sh hadoop_start_button.sh startyarn

7 Resolve a jar conflict

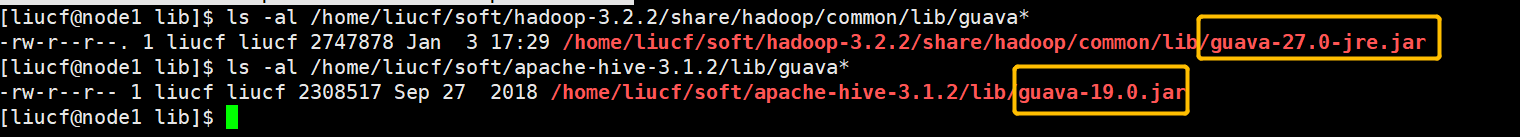

Resolving the guava conflict

The error points at com.google.common.base.Preconditions.checkArgument. It occurs because the guava.jar bundled with Hive and the one shipped with Hadoop are different versions.

① Check the version of guava.jar under share/hadoop/common/lib in the Hadoop installation directory.

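② Check the version of guava.jar under lib in the Hive installation directory. If the two differ, remove the older one and copy in the newer one, and the problem is solved. (Below, the old jar is renamed to .bak rather than deleted.)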

[liucf@node1 lib]$ mv guava-19.0.jar guava-19.0.jar.bak

[liucf@node1 lib]$ cp /home/liucf/soft/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar /home/liucf/soft/apache-hive-3.1.2/lib/
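The comparison in ① and ② can be scripted. This sketch assumes the jars are named `guava-<version>.jar` (e.g. `guava-27.0-jre.jar`) and that GNU `sort -V` is available for version ordering. Both helper names are hypothetical:

```bash
# Print the guava version found in a lib directory (first match only)
guava_version() {
    local dir=$1 jar
    for jar in "$dir"/guava-*.jar; do
        [ -e "$jar" ] || continue     # no match: the glob stays literal
        jar=${jar##*/}                # strip the directory
        jar=${jar#guava-}             # strip the "guava-" prefix
        echo "${jar%.jar}"            # strip the ".jar" suffix
        return 0
    done
    return 1
}

# Print "hive" or "hadoop": whichever lib directory holds the newer guava
newer_guava() {
    local hive_v hadoop_v
    hive_v=$(guava_version "$1") || return 1
    hadoop_v=$(guava_version "$2") || return 1
    if [ "$(printf '%s\n%s\n' "$hive_v" "$hadoop_v" | sort -V | tail -n 1)" = "$hadoop_v" ]; then
        echo hadoop
    else
        echo hive
    fi
}
```

Usage: `newer_guava /home/liucf/soft/apache-hive-3.1.2/lib /home/liucf/soft/hadoop-3.2.2/share/hadoop/common/lib`. With this article's jars (19.0 in Hive, 27.0-jre in Hadoop) it reports `hadoop`, meaning Hadoop's jar should be copied into Hive. A renamed `guava-19.0.jar.bak` no longer matches the glob.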

8 Start the Hive metastore service

8.1 Initialize the metastore schema

Run the following in $HIVE_HOME/bin:

[liucf@node1 bin]$ ./schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/liucf/soft/apache-hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/liucf/soft/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://192.168.109.151:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

....

schemaTool completed

If you get the error: Error: Table 'CTLGS' already exists (state=42S01,code=1050)

Fix: drop the MySQL database that javax.jdo.option.ConnectionURL in hive-site.xml points to, recreate it, and run the initialization again:

drop database hive;

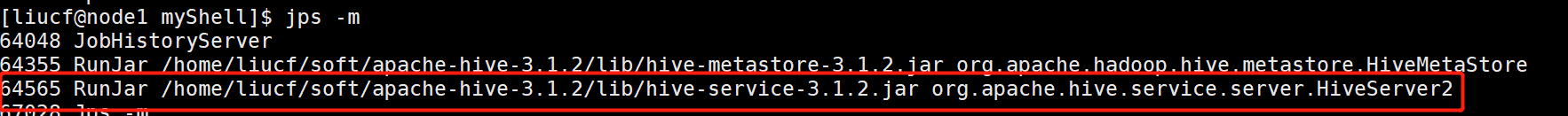

create database hive;

8.2 Start the HiveMetaStore service

nohup hive --service metastore 1>/dev/null 2>&1 &

Then check the result with jps -m.
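Because the metastore runs in the background, anything started right afterwards may try to connect before port 9083 is listening. This sketch polls a TCP port using bash's `/dev/tcp` redirection, so it is bash-specific. `wait_for_port` and its 30-second default timeout are assumptions:

```bash
# Return 0 once host:port accepts a TCP connection, 1 after <timeout> seconds
wait_for_port() {
    local host=$1 port=$2 timeout=${3:-30} i
    for ((i = 0; i < timeout; i++)); do
        # Opening /dev/tcp/<host>/<port> succeeds only if something is listening
        if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
            return 0
        fi
        sleep 1
    done
    return 1
}
```

Usage: `wait_for_port node1 9083 60 && echo "metastore is up"`.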


9 Test starting hive on node1

It starts successfully.

10 Make Hive available on the other nodes of the Hadoop cluster

Use scp to copy apache-hive-3.1.2 from node1 to the other nodes, e.g. node2 and node3:

scp -r apache-hive-3.1.2 liucf@node2:/home/liucf/soft

scp -r apache-hive-3.1.2 liucf@node3:/home/liucf/soft

Copy /etc/profile from node1 to node2 and node3 so that these nodes also get the HIVE_HOME environment variable:

sudo scp -r /etc/profile root@node3:/etc/profile

sudo scp -r /etc/profile root@node2:/etc/profile

Then run source /etc/profile on node2 and node3 to apply the change.

Test that hive now works on node2 and node3 as well.
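With more nodes, the copies above can be looped. This sketch assumes passwordless ssh and the same user and paths as this article. `distribute_hive`, `run`, `HIVE_DIR`, `REMOTE_USER` and the `DRY_RUN` switch (which only prints the commands) are hypothetical:

```bash
HIVE_DIR=${HIVE_DIR:-/home/liucf/soft/apache-hive-3.1.2}
REMOTE_USER=${REMOTE_USER:-liucf}

# Execute a command, or just print it when DRY_RUN=1
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Copy the Hive directory and /etc/profile to every host given as an argument
distribute_hive() {
    local host
    for host in "$@"; do
        run scp -r "$HIVE_DIR" "${REMOTE_USER}@${host}:$(dirname "$HIVE_DIR")"
        run sudo scp /etc/profile "root@${host}:/etc/profile"
    done
}
```

Usage: `DRY_RUN=1 distribute_hive node2 node3` to review the commands, then the same call without `DRY_RUN`. Remember to run `source /etc/profile` on each node afterwards.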

11 Quick verification

Create a table:

create table if not exists liucf_db.stu(id int,name string)

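row format delimited fields terminated by '\t';

Insert data. The statements below use the unqualified name stu, so switch to the database first with use liucf_db;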

hive> insert into stu(id,name) values(2,'zhangsan'),(3,'lisi');

Query:

hive> select * from stu;

All results are correct, and the Hive installation is complete.
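Because the table is declared with `fields terminated by '\t'`, rows can also be prepared as a tab-separated file and bulk-loaded. This sketch writes such a file. `write_stu_tsv`, the file path and the sample rows are hypothetical:

```bash
# Write id/name pairs as tab-separated lines matching the stu table's row format
write_stu_tsv() {
    local out=$1
    shift
    : > "$out"                    # truncate/create the file
    while [ $# -ge 2 ]; do        # arguments come in id/name pairs
        printf '%s\t%s\n' "$1" "$2" >> "$out"
        shift 2
    done
}
```

Usage: `write_stu_tsv /tmp/stu.tsv 4 wangwu 5 zhaoliu`, then `hive -e "load data local inpath '/tmp/stu.tsv' into table liucf_db.stu;"`.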

12 Configure and start HiveServer2

12.1 Add settings to hive-site.xml

<!-- Port and bind host of the hiveserver2 service -->

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>node1</value>

</property>
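Instead of editing by hand, properties can be inserted before `</configuration>` with awk. This sketch assumes `</configuration>` sits on its own line and that values contain no XML special characters or backslashes. `add_property` is a hypothetical helper:

```bash
# Insert <name>/<value> before </configuration>, unless the name already exists
add_property() {
    local file=$1 name=$2 value=$3 tmp
    if grep -qF "<name>${name}</name>" "$file"; then
        return 0
    fi
    tmp=$(mktemp)
    # Print the new property just before the closing </configuration> tag
    awk -v n="$name" -v v="$value" '
        /<\/configuration>/ {
            print "  <property>"
            print "    <name>" n "</name>"
            print "    <value>" v "</value>"
            print "  </property>"
        }
        { print }
    ' "$file" > "$tmp"
    # Copy back with cat so the original file keeps its permissions
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Usage: `add_property "$HIVE_HOME/conf/hive-site.xml" hive.server2.thrift.port 10000` and `add_property "$HIVE_HOME/conf/hive-site.xml" hive.server2.thrift.bind.host node1`.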

Distribute the configuration file to every node:

scp hive-site.xml liucf@node2:/home/liucf/soft/apache-hive-3.1.2/conf

scp hive-site.xml liucf@node3:/home/liucf/soft/apache-hive-3.1.2/conf

12.2 Configure ProxyUser access to the Hadoop cluster

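Here ugi=liucf, i.e. liucf is the user HiveServer2 runs as. Add the following properties for liucf to Hadoop's core-site.xml: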

<property>

<name>hadoop.proxyuser.liucf.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.liucf.groups</name>

<value>*</value>

</property>
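The two properties follow the pattern `hadoop.proxyuser.<user>.hosts` / `.groups`. This sketch prints them for any user. `proxyuser_xml` is a hypothetical helper, and hosts/groups default to `*` as in this article (you may want to narrow them on a shared cluster):

```bash
# Print the proxyuser properties for user $1; $2 = hosts, $3 = groups
proxyuser_xml() {
    local user=$1 hosts groups
    hosts=${2:-*}
    groups=${3:-*}
    printf '<property>\n  <name>hadoop.proxyuser.%s.hosts</name>\n  <value>%s</value>\n</property>\n' "$user" "$hosts"
    printf '<property>\n  <name>hadoop.proxyuser.%s.groups</name>\n  <value>%s</value>\n</property>\n' "$user" "$groups"
}
```

Paste the output of `proxyuser_xml liucf` inside `<configuration>` in core-site.xml on every node. Then either restart HDFS and YARN or run `hdfs dfsadmin -refreshSuperUserGroupsConfiguration` and `yarn rmadmin -refreshSuperUserGroupsConfiguration` so that the running daemons pick up the change.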

Without the ProxyUser settings, hiveserver2 fails to start

with the following error:

2021-05-30T20:29:01,893 INFO [main] server.HiveServer2: Shutting down HiveServer2

2021-05-30T20:29:01,893 INFO [main] server.HiveServer2: Stopping/Disconnecting tez sessions.

2021-05-30T20:29:01,893 INFO [main] metastore.HiveMetaStoreClient: Closed a connection to metastore, current connections: 44

2021-05-30T20:29:01,896 WARN [pool-37-thread-1] metastore.RetryingMetaStoreClient: MetaStoreClient lost connection. Attempting to reconnect (1 of 1) after 1s. getAllDatabases

org.apache.thrift.transport.TTransportException: Cannot write to null outputStream

at org.apache.thrift.transport.TIOStreamTransport.write(TIOStreamTransport.java:142) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.thrift.protocol.TBinaryProtocol.writeI32(TBinaryProtocol.java:178) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.thrift.protocol.TBinaryProtocol.writeMessageBegin(TBinaryProtocol.java:106) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:70) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:62) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.send_get_databases(ThriftHiveMetastore.java:1189) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_databases(ThriftHiveMetastore.java:1181) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getAllDatabases(HiveMetaStoreClient.java:1356) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getAllDatabases(HiveMetaStoreClient.java:1351) ~[hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.GeneratedMethodAccessor10.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_121]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_121]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:212) ~[hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy34.getAllDatabases(Unknown Source) ~[?:?]

at sun.reflect.GeneratedMethodAccessor10.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_121]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_121]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2773) ~[hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy34.getAllDatabases(Unknown Source) ~[?:?]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllDatabases(Hive.java:1588) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.HiveMaterializedViewsRegistry$Loader.run(HiveMaterializedViewsRegistry.java:165) ~[hive-exec-3.1.2.jar:3.1.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_121]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_121]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) ~[?:1.8.0_121]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) ~[?:1.8.0_121]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_121]

2021-05-30T20:29:01,893 ERROR [main] server.HiveServer2: Error starting HiveServer2

java.lang.Error: Max start attempts 30 exhausted

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1062) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.access$1600(HiveServer2.java:140) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:1305) [hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:1149) [hive-service-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_121]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_121]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_121]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_121]

at org.apache.hadoop.util.RunJar.run(RunJar.java:323) [hadoop-common-3.2.2.jar:?]

at org.apache.hadoop.util.RunJar.main(RunJar.java:236) [hadoop-common-3.2.2.jar:?]

Caused by: java.lang.RuntimeException: Error initializing notification event poll

at org.apache.hive.service.server.HiveServer2.init(HiveServer2.java:275) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1036) ~[hive-service-3.1.2.jar:3.1.2]

... 9 more

Caused by: java.io.IOException: org.apache.thrift.TApplicationException: Internal error processing get_current_notificationEventId

at org.apache.hadoop.hive.metastore.messaging.EventUtils$MSClientNotificationFetcher.getCurrentNotificationEventId(EventUtils.java:75) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.events.NotificationEventPoll.<init>(NotificationEventPoll.java:103) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.events.NotificationEventPoll.initialize(NotificationEventPoll.java:59) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.init(HiveServer2.java:273) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1036) ~[hive-service-3.1.2.jar:3.1.2]

... 9 more

Caused by: org.apache.thrift.TApplicationException: Internal error processing get_current_notificationEventId

at org.apache.thrift.TApplicationException.read(TApplicationException.java:111) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:79) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_current_notificationEventId(ThriftHiveMetastore.java:5575) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_current_notificationEventId(ThriftHiveMetastore.java:5563) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getCurrentNotificationEventId(HiveMetaStoreClient.java:2723) ~[hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.GeneratedMethodAccessor9.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_121]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_121]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:212) ~[hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy34.getCurrentNotificationEventId(Unknown Source) ~[?:?]

at sun.reflect.GeneratedMethodAccessor9.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_121]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_121]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2773) ~[hive-exec-3.1.2.jar:3.1.2]

at com.sun.proxy.$Proxy34.getCurrentNotificationEventId(Unknown Source) ~[?:?]

at org.apache.hadoop.hive.metastore.messaging.EventUtils$MSClientNotificationFetcher.getCurrentNotificationEventId(EventUtils.java:73) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.events.NotificationEventPoll.<init>(NotificationEventPoll.java:103) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.events.NotificationEventPoll.initialize(NotificationEventPoll.java:59) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.init(HiveServer2.java:273) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1036) ~[hive-service-3.1.2.jar:3.1.2]

... 9 more

2021-05-30T20:29:02,040 INFO [shutdown-hook-0] server.HiveServer2: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down HiveServer2 at node1/192.168.109.151

************************************************************/

12.3 Start hiveserver2

Since HIVE_HOME is configured, I can start hiveserver2 directly:

[liucf@node1 myShell]$ nohup hive --service hiveserver2 > /dev/null 2>&1 &

Check with jps.

12.4 Verify the JDBC connection

[liucf@node1 myShell]$ beeline -u jdbc:hive2://node1:10000

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/liucf/soft/apache-hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/liucf/soft/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://node1:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.2 by Apache Hive

0: jdbc:hive2://node1:10000> show databases;

INFO : Compiling command(queryId=liucf_20210530220955_8bf04d9d-e0ff-43a8-bdb0-8e0a58fef78a): show databases

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=liucf_20210530220955_8bf04d9d-e0ff-43a8-bdb0-8e0a58fef78a); Time taken: 0.764 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=liucf_20210530220955_8bf04d9d-e0ff-43a8-bdb0-8e0a58fef78a): show databases

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=liucf_20210530220955_8bf04d9d-e0ff-43a8-bdb0-8e0a58fef78a); Time taken: 0.022 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+----------------+

| database_name |

+----------------+

| default |

| liucf_db |

+----------------+

2 rows selected (1.04 seconds)

0: jdbc:hive2://node1:10000> select * from liucf_db.stu;

INFO : Compiling command(queryId=liucf_20210530221015_4eb72cde-88b1-4c24-8346-242244dd8301): select * from liucf_db.stu

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:stu.id, type:int, comment:null), FieldSchema(name:stu.name, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=liucf_20210530221015_4eb72cde-88b1-4c24-8346-242244dd8301); Time taken: 1.451 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=liucf_20210530221015_4eb72cde-88b1-4c24-8346-242244dd8301): select * from liucf_db.stu

INFO : Completed executing command(queryId=liucf_20210530221015_4eb72cde-88b1-4c24-8346-242244dd8301); Time taken: 0.0 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+---------+-----------+

| stu.id | stu.name |

+---------+-----------+

| 1 | zhangsan |

| 2 | zhangsan |

| 3 | lisi |

| 2 | zhangsan |

| 3 | lisi |

+---------+-----------+

5 rows selected (1.73 seconds)

0: jdbc:hive2://node1:10000>

Web UI connection

13 Script to start and stop the Hive services

#!/bin/bash
HIVE_LOG_DIR=$HIVE_HOME/logs
mkdir -p "$HIVE_LOG_DIR"

# Check whether a process is running properly: $1 = process name, $2 = process port
function check_process()
{
    pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i "$1" | awk '{print $2}')
    ppid=$(netstat -nltp 2>/dev/null | grep "$2" | awk '{print $7}' | cut -d '/' -f 1)
    echo $pid
    # Healthy only if the PID listening on the port is among the matched PIDs
    [[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1
}

function hive_start()
{
    metapid=$(check_process HiveMetastore 9083)
    cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"
    # After launching the metastore, wait until HDFS has left safe mode
    cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"
    if [ -z "$metapid" ]; then eval "$cmd"; else echo "Metastore is already running"; fi
    server2pid=$(check_process HiveServer2 10000)
    cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"
    if [ -z "$server2pid" ]; then eval "$cmd"; else echo "HiveServer2 is already running"; fi
}

function hive_stop()
{
    metapid=$(check_process HiveMetastore 9083)
    if [ "$metapid" ]; then kill $metapid; else echo "Metastore is not running"; fi
    server2pid=$(check_process HiveServer2 10000)
    if [ "$server2pid" ]; then kill $server2pid; else echo "HiveServer2 is not running"; fi
}

case $1 in
"start")
    hive_start
    ;;
"stop")
    hive_stop
    ;;
"restart")
    hive_stop
    sleep 2
    hive_start
    ;;
"status")
    check_process HiveMetastore 9083 >/dev/null && echo "Metastore is running normally" || echo "Metastore is not running properly"
    check_process HiveServer2 10000 >/dev/null && echo "HiveServer2 is running normally" || echo "HiveServer2 is not running properly"
    ;;
*)
    echo Invalid Args!
    echo 'Usage: '$(basename $0)' start|stop|restart|status'
    ;;
esac
