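7. Audit Logging

Task:

Enable audit logging in the cluster. To do so, enable the log backend and ensure that:

· logs are stored at /var/log/kubernetes/kubernetes-logs.txt

· log files are retained for 30 days

· at most 10 old audit log files are kept

A basic policy is provided at /etc/kubernetes/logpolicy/sample-policy.yaml. It only specifies what not to log.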

:::info

The base policy is located on the cluster's master node.

:::

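Edit and extend the base policy to log:

· cronjobs changes at the RequestResponse level

· the request body of persistentvolumes changes in the namespace front-apps

· ConfigMap and Secret changes in all namespaces at the Metadata level

· In addition, add a catch-all rule to log all other requests at the Metadata level.

Do not forget to apply the modified policy.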

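Solution:

Known preconditions:

Start from what the task gives you: both of the paths below have to be mounted into the kube-apiserver Pod. Why does the second one, the policy file, also need to be mounted?

The official documentation puts it this way: "You can pass a file with the policy to kube-apiserver using the --audit-policy-file flag. If the flag is omitted, no events are logged. Note that the rules field must be provided in the audit policy file." In this cluster kube-apiserver runs as a static Pod, so it can only read the policy if the host directory /etc/kubernetes/logpolicy/ is mounted into the container.

· Logs are stored at /var/log/kubernetes/kubernetes-logs.txt

· The base policy is provided at /etc/kubernetes/logpolicy/sample-policy.yaml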
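To make the quoted requirement about `rules` concrete, here is a minimal sketch that writes a tiny policy to a scratch file and checks for a top-level `rules:` key. The scratch path and the `has_rules` helper are illustrative assumptions, not part of the exam environment, and the text match is only a sanity check, not real policy validation.

```shell
# has_rules FILE: succeed if FILE has a top-level "rules:" key (text check only).
has_rules() {
  grep -Eq '^rules:' "$1"
}

# Scratch file standing in for a policy file (hypothetical path from mktemp).
demo_policy="$(mktemp)"
cat > "$demo_policy" <<'EOF'
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  - level: Metadata
EOF

if has_rules "$demo_policy"; then
  echo "policy has a rules section"
else
  echo "policy has no rules section; kube-apiserver is expected to reject it"
fi
```

Running the same check against /etc/kubernetes/logpolicy/sample-policy.yaml before restarting anything is a cheap way to catch an accidentally deleted `rules:` line.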

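Condition 1: edit the YAML manifest. These settings are defined on kube-apiserver.

· logs are stored at /var/log/kubernetes/kubernetes-logs.txt

· log files are retained for 30 days

· at most 10 old audit log files are kept

The base policy is provided at /etc/kubernetes/logpolicy/sample-policy.yaml.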

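Condition 2: edit and extend the base policy. The base policy is the one provided at /etc/kubernetes/logpolicy/sample-policy.yaml, so edit that file directly.

Edit and extend the base policy to log:

· cronjobs changes at the RequestResponse level

· the request body of persistentvolumes changes in the namespace front-apps

· ConfigMap and Secret changes in all namespaces at the Metadata level

· In addition, add a catch-all rule to log all other requests at the Metadata level.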
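The order of these four rules matters: audit rules are compared in order and the first match sets the level, which is why the catch-all goes last. The following is a toy model of that behavior, not apiserver code; the `audit_level` function is a hypothetical helper that only mirrors the four requirements above.

```shell
# audit_level RESOURCE NAMESPACE: print the level chosen by the first matching rule.
audit_level() {
  local resource="$1" namespace="$2"
  # Rule 1: cronjobs at RequestResponse.
  if [ "$resource" = "cronjobs" ]; then
    echo "RequestResponse"; return
  fi
  # Rule 2: persistentvolumes in front-apps at Request (request body only).
  if [ "$resource" = "persistentvolumes" ] && [ "$namespace" = "front-apps" ]; then
    echo "Request"; return
  fi
  # Rule 3: configmaps and secrets in any namespace at Metadata.
  if [ "$resource" = "configmaps" ] || [ "$resource" = "secrets" ]; then
    echo "Metadata"; return
  fi
  # Rule 4: catch-all; it must stay last or it would shadow the rules above.
  echo "Metadata"
}

audit_level cronjobs default
audit_level persistentvolumes front-apps
audit_level pods kube-system
```

The three calls print RequestResponse, Request, and Metadata. If the catch-all were moved to the top, every call would print Metadata, which is the failure mode to avoid in the real policy file.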

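Do not forget to switch context during the exam.

Create the audit logging configuration (after switching context, ssh to the corresponding master node):

    root@hk8s-master01:/var/log# cd /etc/kubernetes/manifests/

    # Back up the manifest first so it is easy to restore.
    # Copy it outside the manifests directory; copying it to ./ would only copy the file onto itself.
    root@hk8s-master01:/etc/kubernetes/manifests# cp /etc/kubernetes/manifests/kube-apiserver.yaml /tmp/
    root@hk8s-master01:/etc/kubernetes/manifests# ls
    etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml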
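The backup step can be wrapped in a small helper. This is a sketch under assumptions: `backup_file` and the `.bak` suffix are made-up names, and the demo runs on scratch files so nothing real is touched. Keeping the copy outside /etc/kubernetes/manifests is deliberate, since a second YAML file left in that directory may be picked up by the kubelet as another static Pod manifest.

```shell
# backup_file SRC [DEST_DIR]: copy SRC into DEST_DIR (default /tmp) and print the copy's path.
backup_file() {
  local src="$1" dest_dir="${2:-/tmp}"
  local dest="$dest_dir/$(basename "$src").bak"
  cp -p -- "$src" "$dest" && echo "$dest"
}

# Demo on scratch files standing in for the real manifest.
scratch_dir="$(mktemp -d)"
backup_dir="$(mktemp -d)"
echo "kind: Pod" > "$scratch_dir/kube-apiserver.yaml"
backup_file "$scratch_dir/kube-apiserver.yaml" "$backup_dir"
```

Restoring is then a single `cp` of the printed path back over the manifest.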

    # Edit the manifest
    root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

1 apiVersion: v1

2 kind: Pod

3 metadata:

... ...

12 spec:

13 containers:

14 - command:

15 - kube-apiserver

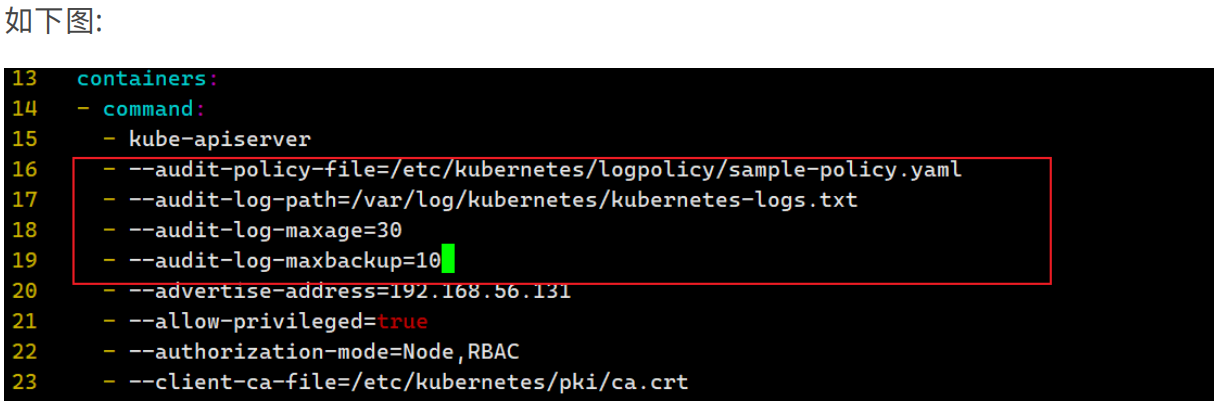

16 - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml # 添加这四⾏

17 - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt

18 - --audit-log-maxage=30

19 - --audit-log-maxbackup=10

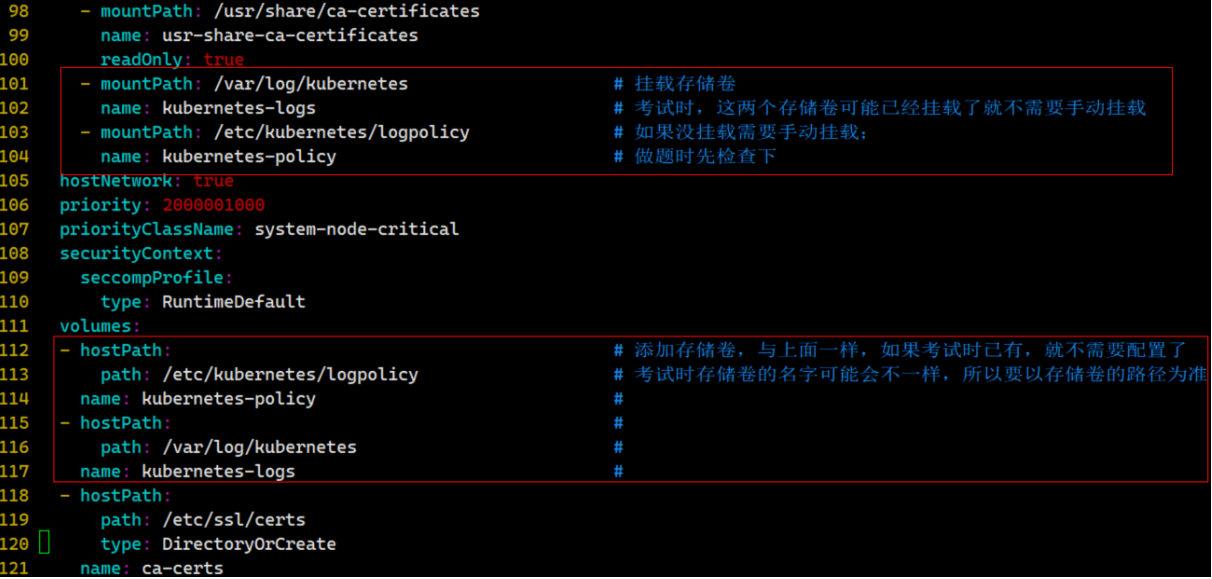
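Before restarting anything, it can help to confirm that all four flags made it into the manifest. The sketch below assumes a hypothetical helper `missing_audit_flags` and runs against a scratch manifest that deliberately sets only two flags; on the master node you would point it at /etc/kubernetes/manifests/kube-apiserver.yaml instead.

```shell
# missing_audit_flags MANIFEST: print each required audit flag that MANIFEST lacks.
missing_audit_flags() {
  local manifest="$1" flag
  for flag in --audit-policy-file= --audit-log-path= \
              --audit-log-maxage= --audit-log-maxbackup=; do
    # Match only uncommented list items such as "    - --audit-log-path=...".
    grep -Eq -- "^[[:space:]]*-[[:space:]]+${flag}" "$manifest" || printf '%s\n' "$flag"
  done
}

# Scratch manifest with only two of the four flags set.
flags_demo="$(mktemp)"
cat > "$flags_demo" <<'EOF'
spec:
  containers:
  - command:
    - kube-apiserver
    - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
    - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
EOF

missing_audit_flags "$flags_demo"
```

For the scratch manifest this prints `--audit-log-maxage=` and `--audit-log-maxbackup=`; empty output means all four flags are present.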

Then mount the policy and the log directory into the APIServer Pod (the exam environment may already have them mounted; add the mounts only if they are missing):

        volumeMounts:
        ... ...
        - mountPath: /etc/kubernetes/logpolicy   # add these four lines, do not miss any
          name: kubernetes-policy
        - mountPath: /var/log/kubernetes
          name: kubernetes-logs
      hostNetwork: true
      priority: 2000001000
      priorityClassName: system-node-critical
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      volumes:
      - hostPath:                                # add these two volumes, do not miss any
          path: /etc/kubernetes/logpolicy
          # type: DirectoryOrCreate
        name: kubernetes-policy
      - hostPath:
          path: /var/log/kubernetes
          # type: DirectoryOrCreate
        name: kubernetes-logs
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate

As shown in the figure below.

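To repeat, because it is very important:

In the practice environment we mounted two volumes by hand: the audit policy file and the audit log directory.

**In the exam these two volumes may well be mounted already. In that case do not mount them again, so check first whether they are mounted.**

For example, search for the logpolicy path (use a different keyword if the path differs):

    grep '/etc/kubernetes/logpolicy' /etc/kubernetes/manifests/kube-apiserver.yaml

Or inspect the volumes section of the manifest yourself.

A duplicate mount makes the manifest invalid, so the kubelet cannot bring kube-apiserver back up.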
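The grep check above can be turned into a count, which makes the three cases explicit: 0 means the mount still has to be added, 1 means it is already there, and anything higher means it was added twice. This is a sketch only; `path_mounts` is a hypothetical helper and the manifest fragment is a scratch file.

```shell
# path_mounts MANIFEST PATH: count "mountPath: PATH" entries in MANIFEST.
path_mounts() {
  grep -cE "mountPath:[[:space:]]*${2}[[:space:]]*\$" "$1"
}

# Scratch fragment where only the policy directory is mounted.
mounts_demo="$(mktemp)"
cat > "$mounts_demo" <<'EOF'
    volumeMounts:
    - mountPath: /etc/kubernetes/logpolicy
      name: kubernetes-policy
    - mountPath: /etc/ssl/certs
      name: ca-certs
EOF

for p in /etc/kubernetes/logpolicy /var/log/kubernetes; do
  echo "$p: $(path_mounts "$mounts_demo" "$p") mount(s)"
done
```

Here the policy directory reports 1 mount and /var/log/kubernetes reports 0, so only the log directory would still need a volumeMount and a matching volume.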

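Condition 2:

Edit and extend the base policy. The base policy is the one provided at /etc/kubernetes/logpolicy/sample-policy.yaml, so edit that file directly.

Edit and extend the base policy to log:

· cronjobs changes at the RequestResponse level

· the request body of persistentvolumes changes in the namespace front-apps

· ConfigMap and Secret changes in all namespaces at the Metadata level

· In addition, add a catch-all rule to log all other requests at the Metadata level.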

    root@hk8s-master01:~# cd /etc/kubernetes/logpolicy/
    root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml

    apiVersion: audit.k8s.io/v1
    kind: Policy
    omitStages:
      - "RequestReceived"
    rules:
      - level: RequestResponse
        resources:
        - group: "batch"                     # cronjobs live in the batch API group, not the core group ""
          resources: ["cronjobs"]            # resource named in the task
      - level: Request
        resources:
        - group: ""
          resources: ["persistentvolumes"]   # resource named in the task
        namespaces: ["front-apps"]           # namespace
      - level: Metadata
        resources:
        - group: ""                          # note: follow the documentation here, do not copy the spelling from the task text
          resources: ["configmaps", "secrets"]   # lowercase plural resource names
      - level: Metadata                      # catch-all rule; add the two lines below as well
        omitStages:
          - "RequestReceived"
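Resource names in a policy are matched as the lowercase plural names that `kubectl api-resources` lists, so a capitalized entry copied from the task text would most likely never match. A rough lint for that mistake, assuming a hypothetical helper `bad_resource_names` and a scratch policy with one bad line:

```shell
# bad_resource_names POLICY: print lines whose inline resources list contains an uppercase letter.
bad_resource_names() {
  grep -nE 'resources:[[:space:]]*\[[^]]*[[:upper:]]' "$1"
}

# Scratch policy: the first list is wrong, the second is fine.
lint_demo="$(mktemp)"
cat > "$lint_demo" <<'EOF'
rules:
  - level: Metadata
    resources:
    - group: ""
      resources: ["ConfigMaps", "Secrets"]
  - level: RequestResponse
    resources:
    - group: "batch"
      resources: ["cronjobs"]
EOF

bad_resource_names "$lint_demo" || echo "no capitalized resource names found"
```

The check only understands the inline `["a", "b"]` list form used in this document; it does not parse YAML.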

重启 kubelet:

sudo systemctl daemon-reload

sudo systemctl restart kubelet

# 如果kubelet⽆法启动,则检查上⾯修改的配置⽂件

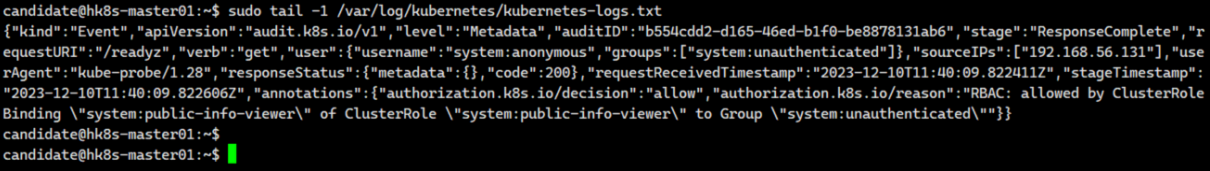

查看⽇志:

tail -1 /var/log/kubernetes/kubernetes-logs.txt

可以看到已经有审计⽇志了_,_解题结束
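Beyond `tail -1`, a quick tally of events per level shows whether the different rules are taking effect. The sketch assumes the default JSON log format, where each line is one event containing a `"level":"..."` field; the `level_counts` helper is hypothetical and the sample lines are made up and heavily trimmed.

```shell
# level_counts LOGFILE: print "Level=count" for each audit level found in LOGFILE.
level_counts() {
  sed -n 's/.*"level":"\([A-Za-z]*\)".*/\1/p' "$1" | sort | uniq -c | awk '{print $2 "=" $1}'
}

# Made-up sample events; real events carry many more fields.
sample_log="$(mktemp)"
cat > "$sample_log" <<'EOF'
{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","verb":"get"}
{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"RequestResponse","verb":"create"}
{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","verb":"list"}
EOF

level_counts "$sample_log"
```

On the master node the same function can be pointed at /var/log/kubernetes/kubernetes-logs.txt.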

    cp kube-apiserver.yaml /tmp/

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.56.131:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
        - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
        - --audit-log-maxage=30
        - --audit-log-maxbackup=10
        ... ...
        - mountPath: /etc/kubernetes/logpolicy
          name: sample
        - mountPath: /var/log/kubernetes
          name: kubelog
      ... ...
      volumes:
      - hostPath:
          path: /etc/kubernetes/logpolicy
        name: sample
      - hostPath:
          path: /var/log/kubernetes
        name: kubelog

    apiVersion: audit.k8s.io/v1
    kind: Policy
    omitStages:
      - "RequestReceived"
    rules:
      - level: RequestResponse
        resources:
        - group: "batch"
          resources: ["cronjobs"]
      - level: Request
        resources:
        - group: ""
          resources: ["persistentvolumes"]
        namespaces: ["front-apps"]
      - level: Metadata
        resources:
        - group: ""
          resources: ["configmaps", "secrets"]
      - level: Metadata

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
        - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
        - --audit-log-maxage=30
        - --audit-log-maxbackup=10
        ... ...
        - mountPath: /etc/kubernetes/logpolicy
          name: logpolicy
        - mountPath: /var/log/kubernetes
          name: kubelog
      hostNetwork: true
      priority: 2000001000
      priorityClassName: system-node-critical
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      volumes:
      - hostPath:
          path: /etc/kubernetes/logpolicy
        name: logpolicy
      - hostPath:
          path: /var/log/kubernetes
        name: kubelog

root@hk8s-master01:/etc/kubernetes/manifests# cp kube-apiserver.yaml /tmp/kube-apiserver.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# cd /etc/kubernetes/logpolicy/

root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml


root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl daemon-reload

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl restart kubelet.service

root@hk8s-master01:/etc/kubernetes/logpolicy#

root@hk8s-master01:/var/lib/kubelet# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-86d8c4fb68-s7f7d 0/1 CrashLoopBackOff 12 (82s ago) 425d

calico-node-l7f5k 1/1 Running 16 (84m ago) 425d

calico-node-lksqf 1/1 Running 16 (84m ago) 425d

calico-node-s95fd 1/1 Running 36 425d

calico-typha-768795f74d-qgxx2 1/1 Running 5 (84m ago) 425d

coredns-567c556887-5j84n 1/1 Running 5 (84m ago) 399d

coredns-567c556887-j565b 1/1 Running 1 (84m ago) 399d

etcd-hk8s-master01 1/1 Running 0 37m

kube-apiserver-hk8s-master01 1/1 Running 0 19s

kube-controller-manager-hk8s-master01 1/1 Running 21 (118s ago) 60d

kube-proxy-8b876 1/1 Running 10 (84m ago) 399d

kube-proxy-9wmbm 1/1 Running 5 (84m ago) 399d

kube-proxy-s4rvl 1/1 Running 5 (84m ago) 399d

kube-scheduler-hk8s-master01 1/1 Running 21 (118s ago) 60d

metrics-server-74db45c9df-n7dlb 1/1 Running 1 (84m ago) 42

root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml


root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

    spec:
      containers:
      - command:
        - kube-apiserver
        - --audit-log-maxage=30
        - --audit-log-maxbackup=10
        - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
        - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
        ... ...
        - mountPath: /etc/kubernetes/logpolicy
          name: logpolicy
        - mountPath: /var/log/kubernetes
          name: kubelog
      ... ...
      volumes:
      - hostPath:
          path: /etc/kubernetes/logpolicy
        name: logpolicy
      - hostPath:
          path: /var/log/kubernetes
        name: kubelog

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

    spec:
      containers:
      - command:
        - kube-apiserver
        - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
        - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
        - --audit-log-maxage=30
        - --audit-log-maxbackup=10
        ... ...
        - mountPath: /etc/kubernetes/logpolicy
          name: logpolicy
        - mountPath: /var/log/kubernetes
          name: kubelogs
      ... ...
      volumes:
      - hostPath:
          path: /etc/kubernetes/logpolicy
        name: logpolicy
      - hostPath:
          path: /var/log/kubernetes
        name: kubelogs

root@hk8s-master01://etc/kubernetes/logpolicy# vim sample-policy.yaml

    apiVersion: audit.k8s.io/v1
    kind: Policy
    omitStages:
      - "RequestReceived"
    rules:
      - level: RequestResponse
        resources:
        - group: "batch"
          resources: ["cronjobs"]
      - level: Request
        resources:
        - group: ""
          resources: ["persistentvolumes"]
        # This rule only applies to resources in the "front-apps" namespace.
        # The empty string "" can be used to select non-namespaced resources.
        namespaces: ["front-apps"]
      - level: Metadata
        resources:
        - group: ""
          resources: ["configmaps", "secrets"]
      - level: Metadata

root@hk8s-master01://etc/kubernetes/logpolicy# systemctl daemon-reload

root@hk8s-master01://etc/kubernetes/logpolicy# systemctl restart kubelet.service

root@hk8s-master01:/etc/kubernetes/manifests# cp kube-apiserver.yaml /tmp/kube-apiserver.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

root@hk8s-master01:/etc/kubernetes/manifests# cd ../logpolicy/

root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml

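root@hk8s-master01:/etc/kubernetes/logpolicy# kubectl apply -f sample-policy.yaml   # not needed: kube-apiserver reads the policy through --audit-policy-file; it is not an object kubectl can apply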

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl daemon-reload

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl restart kubelet.service

root@hk8s-master01:/etc/kubernetes/logpolicy# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-86d8c4fb68-s7f7d 1/1 Running 2 (36m ago) 440d

calico-node-l7f5k 1/1 Running 16 (143m ago) 440d

calico-node-lksqf 1/1 Running 16 (143m ago) 440d

calico-node-s95fd 1/1 Running 36 (143m ago) 440d

calico-typha-768795f74d-qgxx2 1/1 Running 5 (143m ago) 440d

coredns-567c556887-5j84n 1/1 Running 5 (143m ago) 413d

coredns-567c556887-j565b 1/1 Running 1 (143m ago) 413d

etcd-hk8s-master01 1/1 Running 0 36m

kube-apiserver-hk8s-master01 1/1 Running 0 8m35s

kube-controller-manager-hk8s-master01 1/1 Running 17 (9m19s ago) 74d

kube-proxy-8b876 1/1 Running 10 (143m ago) 413d

kube-proxy-9wmbm 1/1 Running 5 (143m ago) 413d

kube-proxy-s4rvl 1/1 Running 5 (143m ago) 413d

kube-scheduler-hk8s-master01 1/1 Running 17 (9m18s ago) 74d

metrics-server-74db45c9df-n7dlb 1/1 Running 1 (143m ago) 440d

root@hk8s-master01:/etc/kubernetes/manifests# cp kube-apiserver.yaml /tmp/kube-apiserver.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

root@hk8s-master01:/etc/kubernetes/manifests# ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

root@hk8s-master01:/etc/kubernetes/manifests# cp etcd.yaml /tmp/etcd.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# cp /var/lib/kubelet/config.yaml /tmp/config.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

root@hk8s-master01:/etc/kubernetes/manifests# cd /etc/kubernetes/logpolicy/

root@hk8s-master01:/etc/kubernetes/logpolicy# ls

sample-policy.yaml

root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl daemon-reload

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl restart kubelet.service

        # - --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
        # - --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
        # - --audit-log-maxage=30
        # - --audit-log-maxbackup=10
        ... ...
        # - mountPath: /etc/kubernetes/logpolicy
        #   name: logpolicy
        # - mountPath: /var/log/kubernetes
        #   name: kubelogs
      ... ...
      # - hostPath:
      #     path: /etc/kubernetes/logpolicy
      #   name: logpolicy
      # - hostPath:
      #     path: /var/log/kubernetes
      #   name: kubelogs

root@hk8s-master01:/etc/kubernetes/manifests# cp kube-apiserver.yaml /tmp/kube-apiserver.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

root@hk8s-master01:/etc/kubernetes/manifests# systemctl restart kubelet.service

root@hk8s-master01:/etc/kubernetes/manifests# kubectl get pods -n kube-system

root@hk8s-master01:/etc/kubernetes/manifests# cd /etc/kubernetes/logpolicy/

root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl restart kubelet.service

root@hk8s-master01:/etc/kubernetes/logpolicy# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-86d8c4fb68-s7f7d 1/1 Running 1 (4h27m ago) 442d

calico-node-l7f5k 1/1 Init:0/3 16 (4h27m ago) 442d

calico-node-lksqf 1/1 Running 16 (4h27m ago) 442d

calico-node-s95fd 1/1 Running 36 (4h27m ago) 442d

calico-typha-768795f74d-qgxx2 1/1 Running 5 (4h27m ago) 442d

coredns-567c556887-5j84n 1/1 Running 5 (4h27m ago) 415d

coredns-567c556887-j565b 1/1 Running 1 (4h27m ago) 415d

etcd-hk8s-master01 1/1 Running 0 76d

kube-apiserver-hk8s-master01 1/1 Running 0 23m

kube-controller-manager-hk8s-master01 1/1 Running 16 (24m ago) 76d

kube-proxy-8b876 1/1 Running 10 (4h27m ago) 415d

kube-proxy-9wmbm 1/1 Running 5 (4h27m ago) 415d

kube-proxy-s4rvl 1/1 Running 5 (4h27m ago) 415d

kube-scheduler-hk8s-master01 1/1 Running 16 (24m ago) 76d

metrics-server-74db45c9df-n7dlb 1/1 Running 1 (4h27m ago) 442d

root@hk8s-master01:/etc/kubernetes/manifests# ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

root@hk8s-master01:/etc/kubernetes/manifests# cp kube-apiserver.yaml /tmp/kube-apiserver.yaml.7

root@hk8s-master01:/etc/kubernetes/manifests# vim kube-apiserver.yaml

root@hk8s-master01:/etc/kubernetes/manifests# cd /etc/kubernetes/logpolicy/

root@hk8s-master01:/etc/kubernetes/logpolicy# vim sample-policy.yaml

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl daemon-reload

root@hk8s-master01:/etc/kubernetes/logpolicy# systemctl restart kubelet.service

root@hk8s-master01:/etc/kubernetes/logpolicy# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-86d8c4fb68-s7f7d 1/1 Running 4 (3m39s ago) 443d

calico-node-l7f5k 1/1 Running 16 (12h ago) 443d

calico-node-lksqf 1/1 Running 16 (12h ago) 443d

calico-node-s95fd 1/1 Running 36 (12h ago) 443d

calico-typha-768795f74d-qgxx2 1/1 Running 5 (12h ago) 443d

coredns-567c556887-5j84n 1/1 Running 5 (12h ago) 417d

coredns-567c556887-j565b 1/1 Running 1 (12h ago) 417d

etcd-hk8s-master01 1/1 Running 0 78d

kube-apiserver-hk8s-master01 1/1 Running 0 3m48s

kube-controller-manager-hk8s-master01 1/1 Running 16 (4m24s ago) 78d

kube-proxy-8b876 1/1 Running 10 (12h ago) 417d

kube-proxy-9wmbm 1/1 Running 5 (12h ago) 417d

kube-proxy-s4rvl 1/1 Running 5 (12h ago) 417d

kube-scheduler-hk8s-master01 1/1 Running 16 (4m25s ago) 78d

metrics-server-74db45c9df-n7dlb 1/1 Running 1 (12h ago) 443d
