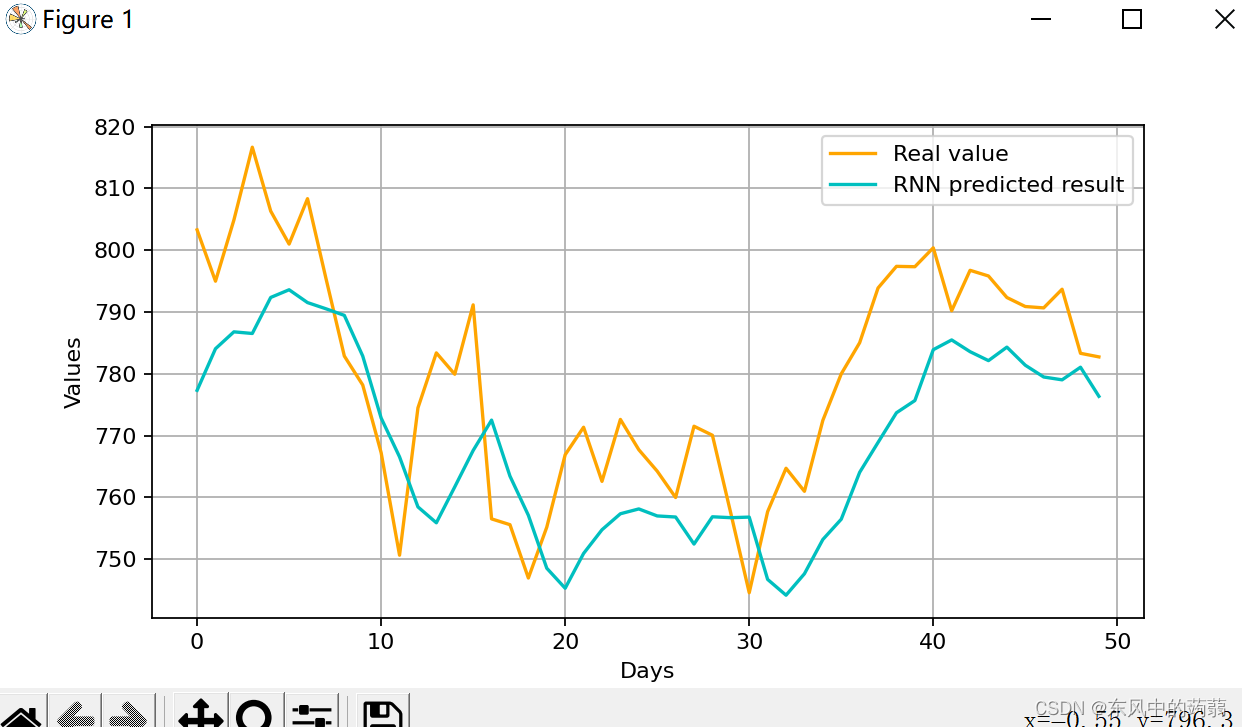

Predicting Google's stock price with a simple RNN

```python
import numpy as np
import tensorflow.keras as keras
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler


def RNN(x_train, y_train):
    regressor = keras.Sequential()
    # first SimpleRNN layer, followed by Dropout to reduce overfitting
    regressor.add(keras.layers.SimpleRNN(units=50, return_sequences=True,
                                         input_shape=(x_train.shape[1], 1)))
    # return_sequences=True is needed when another RNN layer is stacked on top:
    # the layer then returns a (batch_size, time_step, units) tensor;
    # otherwise it returns only the last step, shape (batch_size, units)
    regressor.add(keras.layers.Dropout(0.2))
    # second SimpleRNN layer
    regressor.add(keras.layers.SimpleRNN(units=50, activation='tanh', return_sequences=True))
    regressor.add(keras.layers.Dropout(0.2))
    # third (last) SimpleRNN layer: returns only the final hidden state
    regressor.add(keras.layers.SimpleRNN(units=50))
    regressor.add(keras.layers.Dropout(0.2))
    # output layer: one value, the next day's (scaled) opening price
    regressor.add(keras.layers.Dense(units=1))
    # compile; no 'accuracy' metric, since this is a regression task
    regressor.compile(optimizer='adam', loss='mean_squared_error')
    regressor.fit(x=x_train, y=y_train, epochs=30, batch_size=32)
    # print(regressor.summary())
    return regressor


def visualization(real, pred):
    plt.figure(figsize=(8, 4), dpi=80, facecolor='w', edgecolor='k')
    plt.plot(real, color="orange", label="Real value")
    plt.plot(pred, color="c", label="RNN predicted result")
    plt.legend()
    plt.xlabel("Days")
    plt.ylabel("Values")
    plt.grid(True)
    plt.show()


if __name__ == "__main__":
    data = pd.read_csv(r"dataset\geogle_stock_price\archive\Google_Stock_Price_Train.csv")
    # keep only the "Open" column as an (n_days, 1) array
    data = data.loc[:, ["Open"]].values
    # hold out the last 50 days as the test set
    train = data[:len(data) - 50]
    test = data[len(train):]
    # scale to [0, 1]; the scaler is fitted on the training part only
    scaler = MinMaxScaler(feature_range=(0, 1))
    train_scaled = scaler.fit_transform(train)
    # plt.plot(train_scaled)
    # plt.show()

    # training samples: each X is the previous time_step days, each Y the next day
    X_train = []
    Y_train = []
    time_step = 50
    for i in range(time_step, train_scaled.shape[0]):
        X_train.append(train_scaled[i - time_step:i, 0])
        Y_train.append(train_scaled[i, 0])
    X_train, Y_train = np.array(X_train), np.array(Y_train)
    # Keras recurrent layers expect (samples, time_step, features)
    X_train = X_train.reshape(X_train.shape[0], X_train.shape[1], 1)

    # test inputs: the last time_step training days plus the test days, so the
    # first test prediction has a full window; reuse the training scaler
    inputs = data[len(data) - len(test) - time_step:]
    inputs = scaler.transform(inputs)
    X_test = []
    for i in range(time_step, inputs.shape[0]):
        X_test.append(inputs[i - time_step:i, 0])
    X_test = np.array(X_test)
    X_test = X_test.reshape(X_test.shape[0], X_test.shape[1], 1)

    model = RNN(X_train, Y_train)
    pred = model.predict(X_test)
    # map predictions back to price units
    pred = scaler.inverse_transform(pred)
    visualization(real=test, pred=pred)
```
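The two windowing loops in the script can be expressed with one helper. The sketch below is a minimal illustration and assumes NumPy 1.20 or later, which provides `sliding_window_view`. `make_windows` is a hypothetical name and not part of the original script. The usage lines also show why the script prepends the last `time_step` training points to the test slice: without them, the first test day would not have a full window.

```python
import numpy as np


def make_windows(series, time_step):
    """Split a 1-D series into (window, next value) pairs.

    Row i of X holds series[i : i + time_step] and y[i] is series[i + time_step],
    the same pairs the for-loops in the script build.
    """
    series = np.asarray(series, dtype=float).ravel()
    # every length-time_step window; the last one has no "next value", so drop it
    X = np.lib.stride_tricks.sliding_window_view(series, time_step)[:-1]
    y = series[time_step:]
    # Keras recurrent layers expect (samples, time steps, features)
    return X.reshape(X.shape[0], time_step, 1), y


data = np.arange(10.0)                      # stand-in for the "Open" column
X, y = make_windows(data, time_step=3)
print(X.shape, y.shape)                     # (7, 3, 1) (7,)

# test windows: prepend the last time_step training points, as the script does
test = data[-4:]
inputs = data[len(data) - len(test) - 3:]
X_test, y_test = make_windows(inputs, time_step=3)
print(np.array_equal(y_test, test))         # True
```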

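- A simple RNN runs into several problems. Vanishing gradients limit how many layers (and how many time steps) it can usefully span. Because information flows in one direction only, the network also cannot account for the influence that later steps have on earlier ones.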
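Vanishing gradients also appear along the time axis when backpropagating through a 50-day window. The sketch below uses a stripped-down scalar RNN, h_t = tanh(w·h_{t-1} + u·x_t), and tracks |∂h_T/∂h_0| step by step. Each step multiplies the gradient by w·(1 − h_t²), whose magnitude is at most |w|. The values w = 0.9, u = 1.0 and the random inputs are arbitrary assumptions chosen for illustration. The sketch is a simplification and not the Keras `SimpleRNN` layer.

```python
import numpy as np


def grad_through_time(w, u, xs, h0=0.0):
    """Return |dh_t/dh_0| after each step of a scalar tanh RNN (illustrative)."""
    h, grad, history = h0, 1.0, []
    for x in xs:
        h = np.tanh(w * h + u * x)
        # chain rule: dh_t/dh_{t-1} = w * tanh'(.) = w * (1 - h_t**2)
        grad *= w * (1.0 - h ** 2)
        history.append(abs(grad))
    return history


rng = np.random.default_rng(0)
xs = rng.normal(size=50)          # a 50-step input window, like time_step = 50
g = grad_through_time(w=0.9, u=1.0, xs=xs)
print(f"after 1 step: {g[0]:.3g}, after 10: {g[9]:.3g}, after 50: {g[49]:.3g}")
```

Because every factor has magnitude at most 0.9, the gradient from the first day of the window is at most 0.9⁵⁰ (roughly 0.005) of its starting size. With |w| > 1 and unsaturated units, the same product can explode instead.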

Prediction with an LSTM model

```python
# -*- coding: utf-8 -*-
import numpy as np
import tensorflow.keras as keras
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler


def RNN(x_train, y_train):
    regressor = keras.Sequential()
    # first SimpleRNN layer, followed by Dropout to reduce overfitting
    regressor.add(keras.layers.SimpleRNN(units=50, return_sequences=True,
                                         input_shape=(x_train.shape[1], 1)))
    # return_sequences=True is needed when another RNN layer is stacked on top:
    # the layer then returns a (batch_size, time_step, units) tensor;
    # otherwise it returns only the last step, shape (batch_size, units)
    regressor.add(keras.layers.Dropout(0.2))
    # second SimpleRNN layer
    regressor.add(keras.layers.SimpleRNN(units=50, activation='tanh', return_sequences=True))
    regressor.add(keras.layers.Dropout(0.2))
    regressor.add(keras.layers.SimpleRNN(units=50))
    regressor.add(keras.layers.Dropout(0.2))
    # output layer
    regressor.add(keras.layers.Dense(units=1))
    # compile
    regressor.compile(optimizer='adam', loss='mean_squared_error')
    # an important point: unlike a classification CNN or other classifier, we do
    # not pass a metric such as accuracy, because this is a regression task and
    # accuracy is meaningless for a continuous output
    regressor.fit(x=x_train, y=y_train, epochs=30, batch_size=32)
    # print(regressor.summary())
    return regressor
```
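This sketch illustrates the point about accuracy. Classification accuracy checks whether a prediction matches the label, either exactly or after thresholding, and that almost never happens for a continuous price. Error metrics such as MSE, RMSE and MAE instead measure how far off the predictions are. `regression_report` is a hypothetical helper, and the four prices are made up for illustration. Inside Keras, a comparable option is passing `metrics=['mae']` to `compile`, which tracks mean absolute error during training.

```python
import numpy as np


def regression_report(y_true, y_pred):
    """Error metrics that are meaningful for a continuous target."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    err = y_pred - y_true
    return {
        "mse": float(np.mean(err ** 2)),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "mae": float(np.mean(np.abs(err))),
        # classification-style "accuracy": fraction of exact matches
        "exact_match": float(np.mean(y_pred == y_true)),
    }


real = np.array([100.0, 101.5, 103.0, 102.0])
pred = np.array([100.4, 101.0, 102.6, 102.5])
print(regression_report(real, pred))
```

Every prediction here is within half a dollar, yet the exact-match score is 0. The error metrics capture that closeness.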

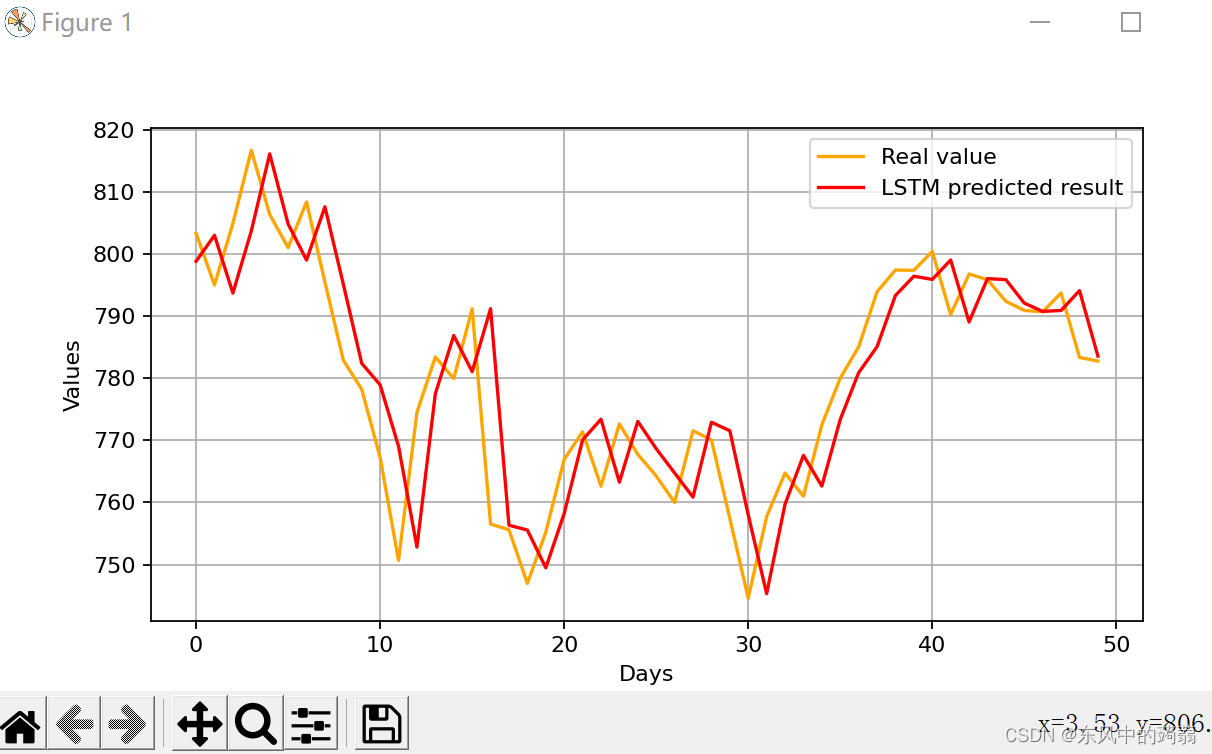

```python
def LSTM(x_train, y_train):
    model = keras.Sequential()
    # a single LSTM layer with ten units; input_shape=(None, 1) accepts
    # sequences of any length with one feature per step
    model.add(keras.layers.LSTM(units=10, activation='tanh', input_shape=(None, 1)))
    model.add(keras.layers.Dense(1))
    # regression: MSE loss, no accuracy metric
    model.compile(loss='mean_squared_error', optimizer='adam')
    model.fit(x_train, y_train, batch_size=1, epochs=15)
    return model


def visualization(real, pred):
    plt.figure(figsize=(8, 4), dpi=80, facecolor='w', edgecolor='k')
    plt.plot(real, color="orange", label="Real value")
    # plt.plot(pred, color="c", label="RNN predicted result")
    plt.plot(pred, color='r', label='LSTM predicted result')
    plt.legend()
    plt.xlabel("Days")
    plt.ylabel("Values")
    plt.grid(True)
    plt.show()


if __name__ == "__main__":
    data = pd.read_csv(r"dataset\geogle_stock_price\archive\Google_Stock_Price_Train.csv")
    # keep only the "Open" column as an (n_days, 1) array
    data = data.loc[:, ["Open"]].values
    # hold out the last 50 days as the test set
    train = data[:len(data) - 50]
    test = data[len(train):]
    # scale to [0, 1]; the scaler is fitted on the training part only
    scaler = MinMaxScaler(feature_range=(0, 1))
    train_scaled = scaler.fit_transform(train)
    # plt.plot(train_scaled)
    # plt.show()

    # training samples: each X is the previous time_step days, each Y the next day
    X_train = []
    Y_train = []
    time_step = 50
    for i in range(time_step, train_scaled.shape[0]):
        X_train.append(train_scaled[i - time_step:i, 0])
        Y_train.append(train_scaled[i, 0])
    X_train, Y_train = np.array(X_train), np.array(Y_train)
    X_train = X_train.reshape(X_train.shape[0], X_train.shape[1], 1)

    # test inputs: the last time_step training days plus the test days
    inputs = data[len(data) - len(test) - time_step:]
    inputs = scaler.transform(inputs)
    X_test = []
    for i in range(time_step, inputs.shape[0]):
        X_test.append(inputs[i - time_step:i, 0])
    X_test = np.array(X_test)
    X_test = X_test.reshape(X_test.shape[0], X_test.shape[1], 1)

    # model = RNN(X_train, Y_train)
    model = LSTM(X_train, Y_train)
    pred = model.predict(X_test)
    # map predictions back to price units
    pred = scaler.inverse_transform(pred)
    visualization(real=test, pred=pred)
```
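With the default `return_sequences=False`, `keras.layers.LSTM(units=10)` returns only the last hidden state, one vector of 10 values per window. The NumPy sketch below runs the standard LSTM equations over a batch of windows: input, forget and output gates plus a candidate cell state. It is an illustration under assumptions, not Keras's actual implementation. The gate ordering and weight layout are chosen for readability, and the small random weights stand in for trained ones.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(x, W, U, b):
    """Standard LSTM over x of shape (batch, time_steps, features).

    W: (features, 4*units), U: (units, 4*units), b: (4*units,), gate order i, f, g, o.
    Returns the final hidden state, shape (batch, units).
    """
    batch, steps, _ = x.shape
    units = U.shape[0]
    h = np.zeros((batch, units))
    c = np.zeros((batch, units))
    for t in range(steps):
        z = x[:, t, :] @ W + h @ U + b
        i = sigmoid(z[:, :units])                 # input gate
        f = sigmoid(z[:, units:2 * units])        # forget gate
        g = np.tanh(z[:, 2 * units:3 * units])    # candidate cell state
        o = sigmoid(z[:, 3 * units:])             # output gate
        # additive cell update, scaled by the forget gate: the usual explanation
        # for why LSTMs suffer less from vanishing gradients than a SimpleRNN
        c = f * c + i * g
        h = o * np.tanh(c)
    return h


rng = np.random.default_rng(0)
units, features = 10, 1
W = rng.normal(scale=0.1, size=(features, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)
x = rng.normal(size=(2, 50, features))   # two 50-day windows, one feature each
h = lstm_forward(x, W, U, b)
print(h.shape)  # (2, 10)
```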

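As the plot shows, the LSTM model predicts much better than the simple RNN. It essentially reproduces the real stock price, although its predictions lag somewhat in time.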
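One way to put a number on the lag is to find the shift k that best aligns the two curves. If pred[t] ≈ real[t − k], the prediction trails the real price by k days. `estimate_lag` and its `max_lag` parameter are hypothetical names introduced for this sketch. In the script, it could be called as `estimate_lag(test, pred)` after `inverse_transform`. The demo uses a synthetic series instead of the stock data.

```python
import numpy as np


def estimate_lag(real, pred, max_lag=10):
    """Return the shift k (in days) whose alignment pred[k:] vs real[:-k] has the lowest MSE."""
    real = np.asarray(real, dtype=float).ravel()
    pred = np.asarray(pred, dtype=float).ravel()
    max_lag = min(max_lag, len(real) - 1)
    best_k, best_err = 0, np.inf
    for k in range(max_lag + 1):
        # compare the prediction at day t with the real value at day t - k
        err = np.mean((pred[k:] - real[:len(real) - k]) ** 2)
        if err < best_err:
            best_k, best_err = k, err
    return best_k


# synthetic check: a "prediction" that simply repeats the value from 2 days earlier
real = np.sin(np.arange(60) / 5.0)
pred = np.concatenate([np.full(2, real[0]), real[:-2]])
print(estimate_lag(real, pred))  # 2
```

A best shift of about one day would suggest that the network leans heavily on the most recent price in each window.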
