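A PersistentVolume (PV) describes a piece of persistent storage. For example, to expose an NFS directory you can create a PV with the following YAML: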

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.150.29
    path: "/"

A PersistentVolumeClaim (PVC) describes the storage a workload needs, such as the requested size and the access mode. For example:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: manual
  resources:
    requests:
      storage: 1Gi

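Before a PVC can actually be used, it must be bound to a PV that satisfies it. Two conditions are checked. First, the spec fields of the PV and the PVC must be compatible; for example, the PV's storage capacity must be at least what the PVC requests. Second, the storageClassName of the PV and the PVC must be identical. Once the PVC is bound, a Pod can declare it under volumes: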

apiVersion: v1
kind: Pod
metadata:
  name: web-frontend  # a Pod needs a name; this one is chosen for illustration
  labels:
    role: web-frontend
spec:
  containers:
    - name: web
      image: nginx
      ports:
        - name: web
          containerPort: 80
      volumeMounts:
        - name: nfs
          mountPath: "/usr/share/nginx/html"
  volumes:
    - name: nfs
      persistentVolumeClaim:
        claimName: nfs

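After the Pod is created, kubelet mounts the PV bound to this PVC into the Pod. Kubernetes has a dedicated controller for persistent storage, the Volume Controller, which maintains several control loops. One of them, the PersistentVolumeController, is responsible for binding PVs and PVCs. It repeatedly checks whether each PVC is in the Bound state; if not, it walks through all available PVs and tries to bind one of them to the PVC. Binding means writing the PV's name into the PVC's spec.volumeName field.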
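The binding check described above can be sketched roughly as follows. This is a simplified model and not the controller's actual code: the PV and PVC structs, hasModes, and bind are hypothetical names, and preferring the smallest PV that fits is a choice made for this sketch.

```go
package main

import "fmt"

// PV and PVC are simplified, hypothetical stand-ins for the real API objects.
type PV struct {
	Name         string
	StorageClass string
	CapacityGi   int64
	AccessModes  []string
	ClaimRef     string // name of the bound PVC; empty while the PV is available
}

type PVC struct {
	Name         string
	StorageClass string
	RequestGi    int64
	AccessModes  []string
	VolumeName   string // mirrors spec.volumeName; empty until bound
}

// hasModes reports whether every wanted access mode is offered by the PV.
func hasModes(offered, wanted []string) bool {
	set := make(map[string]bool, len(offered))
	for _, m := range offered {
		set[m] = true
	}
	for _, m := range wanted {
		if !set[m] {
			return false
		}
	}
	return true
}

// bind picks the smallest unbound PV that satisfies the claim and records
// the binding on both objects. It returns false if no PV fits.
func bind(claim *PVC, pvs []*PV) bool {
	var best *PV
	for _, pv := range pvs {
		if pv.ClaimRef != "" || pv.StorageClass != claim.StorageClass {
			continue
		}
		if pv.CapacityGi < claim.RequestGi || !hasModes(pv.AccessModes, claim.AccessModes) {
			continue
		}
		if best == nil || pv.CapacityGi < best.CapacityGi {
			best = pv
		}
	}
	if best == nil {
		return false
	}
	best.ClaimRef = claim.Name
	claim.VolumeName = best.Name
	return true
}

func main() {
	pvs := []*PV{
		{Name: "big", StorageClass: "manual", CapacityGi: 10, AccessModes: []string{"ReadWriteMany"}},
		{Name: "nfs", StorageClass: "manual", CapacityGi: 1, AccessModes: []string{"ReadWriteMany"}},
	}
	claim := &PVC{Name: "nfs", StorageClass: "manual", RequestGi: 1, AccessModes: []string{"ReadWriteMany"}}
	fmt.Println(bind(claim, pvs), claim.VolumeName)
}
```

A claim whose storage class differs from every PV stays unbound, which is the situation in which a PVC remains Pending.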

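So how is persistent storage prepared on the node? The process has two phases. The first is Attach: the remote storage service's API is called to attach the persistent disk it provides to the host the Pod runs on. The second is Mount: to make the disk usable, the device is formatted and then mounted at the designated mount point.

If the volume type is remote file storage (NFS, for example), the process is simpler, because kubelet can skip the first phase (Attach): remote file storage generally has no "storage device" that needs to be attached to the host. After that, kubelet only has to pass the volume directory to the container runtime (Docker here) through the Mounts parameter of CRI, and the containers in the Pod can then mount this "persistent" volume.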

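This two-phase handling of a PV runs independently of kubelet's main control loop. The Attach phase is maintained by the Volume Controller, in a control loop named AttachDetachController. It continuously checks, for every Pod, whether the corresponding PV is attached to the host the Pod runs on, and decides from that whether an attach (or detach) operation is needed. The Mount phase has to happen on the Pod's host, so it is part of kubelet: a control loop named VolumeManagerReconciler, which runs in its own goroutine.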
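Both loops follow the same reconcile pattern: compare the desired state with the actual state and act on the difference. A minimal sketch of that pattern, assuming volumes are identified by plain strings (the reconcile function and the volume names are hypothetical, not kubelet code):

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile compares the desired set of mounted volumes with the actual set
// and returns the operations needed to make the two converge.
func reconcile(desired, actual map[string]bool) (toMount, toUnmount []string) {
	for v := range desired {
		if !actual[v] {
			toMount = append(toMount, v)
		}
	}
	for v := range actual {
		if !desired[v] {
			toUnmount = append(toUnmount, v)
		}
	}
	// Sort so that the plan is deterministic despite map iteration order.
	sort.Strings(toMount)
	sort.Strings(toUnmount)
	return toMount, toUnmount
}

func main() {
	desired := map[string]bool{"pvc-a": true, "pvc-b": true}
	actual := map[string]bool{"pvc-b": true, "pvc-c": true}
	toMount, toUnmount := reconcile(desired, actual)
	fmt.Println("mount:", toMount, "unmount:", toUnmount)
}
```

Running the loop periodically rather than reacting to single events means a missed event is corrected on the next pass.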

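Kubernetes also provides a mechanism for creating PVs automatically, called Dynamic Provisioning. Its core is the StorageClass object, which acts as a template for creating PVs. A StorageClass defines two things: first, the properties of the PV, such as the storage type and the volume size; second, the storage plugin needed to create this kind of PV, for example Ceph. The following YAML uses Rook Ceph: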

apiVersion: ceph.rook.io/v1beta1
kind: Pool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: block-service
provisioner: ceph.rook.io/block
parameters:
  pool: replicapool
  # The value of "clusterNamespace" MUST be the same as the one in which your rook cluster exists
  clusterNamespace: rook-ceph

After that, you only need to reference this StorageClass from the PVC through storageClassName:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: claim1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: block-service
  resources:
    requests:
      storage: 30Gi

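In fact, if the cluster has the Admission Plugin named DefaultStorageClass enabled, it automatically sets the default StorageClass on PVCs that do not specify one. Otherwise, the PVC's storageClassName is "", which also means it can only be bound to a PV whose storageClassName is "" as well.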
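The defaulting rule can be written down as a small function. This is a sketch under stated assumptions: effectiveClass is a hypothetical helper, and a nil pointer stands for a PVC that omits storageClassName entirely, which is different from setting it to "" explicitly.

```go
package main

import "fmt"

// effectiveClass returns the storage class a PVC ends up with.
// requested is nil when the PVC does not set storageClassName at all.
func effectiveClass(requested *string, defaultClass string, admissionEnabled bool) string {
	if requested != nil {
		// An explicit value is kept as-is, including the empty string.
		return *requested
	}
	if admissionEnabled && defaultClass != "" {
		return defaultClass
	}
	return ""
}

func main() {
	empty, manual := "", "manual"
	fmt.Printf("%q\n", effectiveClass(nil, "standard", true))     // field omitted, plugin enabled
	fmt.Printf("%q\n", effectiveClass(nil, "standard", false))    // field omitted, plugin disabled
	fmt.Printf("%q\n", effectiveClass(&empty, "standard", true))  // explicit ""
	fmt.Printf("%q\n", effectiveClass(&manual, "standard", true)) // explicit class
}
```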

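Next, Local Persistent Volumes. The idea is to expose a local disk as a PV, with one local disk per PV. Because the data lives on a specific node, the scheduler has to take volume placement into account, which is why the PV carries a nodeAffinity. The PV looks like this: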

apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /mnt/disks/vol1
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node-1

Next, create a StorageClass:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

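Note the volumeBindingMode: WaitForFirstConsumer setting. It enables delayed binding, a very important feature of Local Persistent Volumes: the PV/PVC binding is postponed until a Pod that uses the PVC is being scheduled. Then create a PVC that uses this StorageClass and reference it from a Pod. The Pod below expects that PVC to be named example-local-claim, and it must set storageClassName: local-storage: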

kind: Pod
apiVersion: v1
metadata:
  name: example-pv-pod
spec:
  volumes:
    - name: example-pv-storage
      persistentVolumeClaim:
        claimName: example-local-claim
  containers:
    - name: example-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: example-pv-storage

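Note that with the manual approach used above (static PV management), deleting a PV has to follow this order: delete the Pod that uses the PV; remove the local disk from the host (for example, umount it); delete the PVC; delete the PV.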

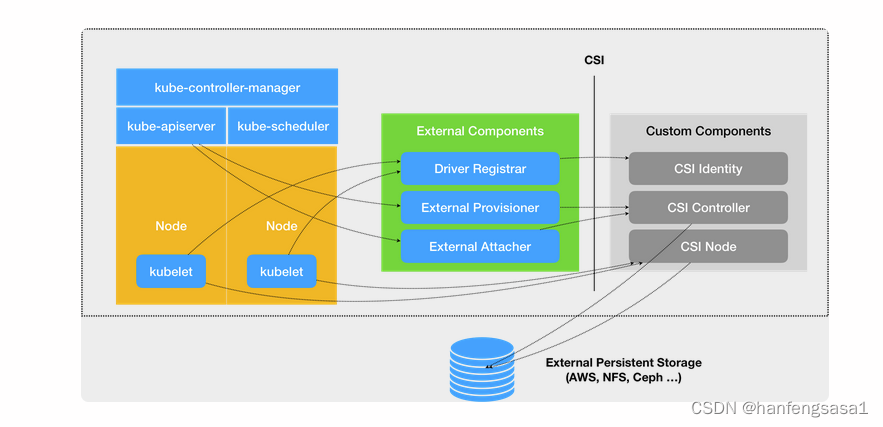

编写自己的存储插件通常需要flexVolume和csi,csi是常用的一种,CSI 插件体系的设计思想,就是把这个 Provision 阶段,以及 Kubernetes 里的一部分存储管理功能,从主干代码里剥离出来,做成了几个单独的组件。这些组件会通过 Watch API 监听 Kubernetes 里与存储相关的事件变化,比如 PVC 的创建,来执行具体的存储管理动作。而这些管理动作,比如“Attach 阶段”和“Mount 阶段”的具体操作,实际上就是通过调用 CSI 插件来完成的。这种设计思路,我可以用如下所示的一幅示意图来表示:

1. The Driver Registrar component registers the plugin with kubelet.

2. The External Provisioner component handles the Provision phase. It watches PVC objects in the API server; when a PVC is created, it calls the CSI Controller's CreateVolume method to create the corresponding PV.

3. The External Attacher component handles the Attach phase. It watches changes to VolumeAttachment objects in the API server.

4. The plugin's CSI Identity service exposes information about the plugin itself.

5. The CSI Controller service defines the management interface for CSI Volumes (PVs in Kubernetes terms), for example creating and deleting a CSI Volume.

6. The actual callers of the CSI Controller service are not Kubernetes itself (that is, CSI requests issued through pkg/volume/csi) but the External Provisioner and the External Attacher. These two external components cooperate with Kubernetes by watching PVC and VolumeAttachment objects respectively.

7. The operations a CSI Volume needs on the host are all defined in the CSI Node service.

8. The Mount phase in the CSI Node service is implemented jointly by two interfaces, NodeStageVolume and NodePublishVolume.

Next, let's write a simple custom CSI plugin.

So that Kubernetes can reach the CSI Identity service, we first define a standard gRPC server in driver.go:

package kvm

import (
	"fmt"
	"net"
	"os"
	"strings"

	"github.com/container-storage-interface/spec/lib/go/csi"
	"github.com/kubernetes-csi/csi-lib-utils/protosanitizer"
	"golang.org/x/net/context"
	"google.golang.org/grpc"
	"k8s.io/klog"
)

// Driver holds the settings shared by the three CSI services.
type Driver struct {
	nodeID   string
	endpoint string
}

const (
	version       = "1.0.0"
	driverName    = "kvm.csi.dianduidian.com"
	DevicePathKey = "devicePath"
)

func NewDriver(nodeID, endpoint string) *Driver {
	klog.V(4).Infof("Driver: %v version: %v", driverName, version)
	return &Driver{
		nodeID:   nodeID,
		endpoint: endpoint,
	}
}

// Run registers the Controller, Identity and Node services on one gRPC
// server and serves them on the configured endpoint.
func (d *Driver) Run() {
	ctl := NewControllerServer()
	identity := NewIdentityServer()
	node := NewNodeServer(d.nodeID)

	opts := []grpc.ServerOption{
		grpc.UnaryInterceptor(logGRPC),
	}
	srv := grpc.NewServer(opts...)

	csi.RegisterControllerServer(srv, ctl)
	csi.RegisterIdentityServer(srv, identity)
	csi.RegisterNodeServer(srv, node)

	// Check the error before using the parsed values.
	proto, addr, err := ParseEndpoint(d.endpoint)
	if err != nil {
		klog.Fatal(err.Error())
	}
	klog.V(4).Infof("protocol: %s,addr: %s", proto, addr)

	if proto == "unix" {
		addr = "/" + addr
		// Remove a stale socket file left behind by a previous run.
		if err := os.Remove(addr); err != nil && !os.IsNotExist(err) {
			klog.Fatalf("Failed to remove %s, error: %s", addr, err.Error())
		}
	}

	listener, err := net.Listen(proto, addr)
	if err != nil {
		klog.Fatalf("Failed to listen: %v", err)
	}

	if err := srv.Serve(listener); err != nil {
		klog.Fatalf("Failed to serve: %v", err)
	}
}

// logGRPC logs every unary call, with secrets stripped from the payloads.
func logGRPC(ctx context.Context, req interface{}, info *grpc.UnaryServerInfo, handler grpc.UnaryHandler) (interface{}, error) {
	klog.V(4).Infof("GRPC call: %s", info.FullMethod)
	klog.V(4).Infof("GRPC request: %s", protosanitizer.StripSecrets(req))
	resp, err := handler(ctx, req)
	if err != nil {
		klog.Errorf("GRPC error: %v", err)
	} else {
		klog.V(4).Infof("GRPC response: %s", protosanitizer.StripSecrets(resp))
	}
	return resp, err
}

// ParseEndpoint splits "unix://..." or "tcp://..." into protocol and address.
func ParseEndpoint(ep string) (string, string, error) {
	if strings.HasPrefix(strings.ToLower(ep), "unix://") || strings.HasPrefix(strings.ToLower(ep), "tcp://") {
		s := strings.SplitN(ep, "://", 2)
		if s[1] != "" {
			return s[0], s[1], nil
		}
	}
	return "", "", fmt.Errorf("Invalid endpoint: %v", ep)
}
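The endpoint parsing in driver.go can be tried on its own. The sketch below repeats the same logic in a standalone program (parseEndpoint is a local copy made for illustration, and the sample endpoints are assumptions) so that its behavior on valid and invalid input is easy to inspect:

```go
package main

import (
	"fmt"
	"strings"
)

// parseEndpoint splits "unix://..." or "tcp://..." into protocol and address.
func parseEndpoint(ep string) (string, string, error) {
	lower := strings.ToLower(ep)
	if strings.HasPrefix(lower, "unix://") || strings.HasPrefix(lower, "tcp://") {
		s := strings.SplitN(ep, "://", 2)
		if s[1] != "" {
			return s[0], s[1], nil
		}
	}
	return "", "", fmt.Errorf("invalid endpoint: %v", ep)
}

func main() {
	for _, ep := range []string{"unix:///csi/csi.sock", "tcp://127.0.0.1:10000", "csi.sock"} {
		proto, addr, err := parseEndpoint(ep)
		fmt.Printf("%q -> proto=%q addr=%q err=%v\n", ep, proto, addr, err)
	}
}
```

For unix:///csi/csi.sock the returned address already begins with a slash, so the extra "/" that Run prepends for unix sockets yields a doubled leading slash in the path.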

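In the CSI Identity service, the most important interface is GetPluginInfo, which returns the plugin's name and version. GetPluginCapabilities is also important: it returns the capabilities of the CSI plugin. The Identity service additionally provides a Probe interface, which Kubernetes calls to check whether the plugin is working properly.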

// Both the Node Plugin and the Controller Plugin need this service.
package kvm

import (
	"github.com/container-storage-interface/spec/lib/go/csi"
	"golang.org/x/net/context"
	"k8s.io/klog"
)

type IdentityServer struct{}

func NewIdentityServer() *IdentityServer {
	return &IdentityServer{}
}

// GetPluginInfo returns the plugin's name and version.
func (ids *IdentityServer) GetPluginInfo(ctx context.Context, req *csi.GetPluginInfoRequest) (*csi.GetPluginInfoResponse, error) {
	klog.V(4).Infof("GetPluginInfo: called with args %+v", *req)
	return &csi.GetPluginInfoResponse{
		Name:          driverName,
		VendorVersion: version,
	}, nil
}

// GetPluginCapabilities returns the features the plugin supports.
func (ids *IdentityServer) GetPluginCapabilities(ctx context.Context, req *csi.GetPluginCapabilitiesRequest) (*csi.GetPluginCapabilitiesResponse, error) {
	klog.V(4).Infof("GetPluginCapabilities: called with args %+v", *req)
	resp := &csi.GetPluginCapabilitiesResponse{
		Capabilities: []*csi.PluginCapability{
			{
				Type: &csi.PluginCapability_Service_{
					Service: &csi.PluginCapability_Service{
						Type: csi.PluginCapability_Service_CONTROLLER_SERVICE,
					},
				},
			},
			{
				Type: &csi.PluginCapability_Service_{
					Service: &csi.PluginCapability_Service{
						Type: csi.PluginCapability_Service_VOLUME_ACCESSIBILITY_CONSTRAINTS,
					},
				},
			},
		},
	}
	return resp, nil
}

// Probe is the plugin health check.
func (ids *IdentityServer) Probe(ctx context.Context, req *csi.ProbeRequest) (*csi.ProbeResponse, error) {
	klog.V(4).Infof("Probe: called with args %+v", *req)
	return &csi.ProbeResponse{}, nil
}

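controllerserver.go implements the Provision phase and the Attach phase of the volume management flow. The Provision phase corresponds to the CreateVolume and DeleteVolume interfaces, which are called by the External Provisioner. The Attach phase corresponds to ControllerPublishVolume and ControllerUnpublishVolume, which are called by the External Attacher.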

// Controller plugin
package kvm

import (
	"github.com/container-storage-interface/spec/lib/go/csi"
	"golang.org/x/net/context"
	"google.golang.org/grpc/codes"
	"google.golang.org/grpc/status"
	"k8s.io/klog"
)

var (
	// volCaps = []csi.VolumeCapability_AccessMode{
	// 	{
	// 		Mode: csi.VolumeCapability_AccessMode_SINGLE_NODE_WRITER,
	// 	},
	// }

	// controllerCaps lists the features the Controller Plugin supports. For the available types see https://github.com/container-storage-interface/spec/blob/4731db0e0bc53238b93850f43ab05d9355df0fd9/lib/go/csi/csi.pb.go#L181:6
	// Only volume create/delete and attach/detach are implemented here.
	controllerCaps = []csi.ControllerServiceCapability_RPC_Type{
		csi.ControllerServiceCapability_RPC_CREATE_DELETE_VOLUME,
		csi.ControllerServiceCapability_RPC_PUBLISH_UNPUBLISH_VOLUME,
	}
)

type ControllerServer struct{}

func NewControllerServer() *ControllerServer {
	return &ControllerServer{}
}

// ControllerGetCapabilities returns the features the Controller Plugin supports.
func (cs *ControllerServer) ControllerGetCapabilities(ctx context.Context, req *csi.ControllerGetCapabilitiesRequest) (*csi.ControllerGetCapabilitiesResponse, error) {
	klog.V(4).Infof("ControllerGetCapabilities: called with args %+v", *req)
	var caps []*csi.ControllerServiceCapability
	for _, capType := range controllerCaps {
		c := &csi.ControllerServiceCapability{
			Type: &csi.ControllerServiceCapability_Rpc{
				Rpc: &csi.ControllerServiceCapability_RPC{
					Type: capType,
				},
			},
		}
		caps = append(caps, c)
	}
	return &csi.ControllerGetCapabilitiesResponse{Capabilities: caps}, nil
}

// CreateVolume creates a volume.
func (cs *ControllerServer) CreateVolume(ctx context.Context, req *csi.CreateVolumeRequest) (*csi.CreateVolumeResponse, error) {
	klog.V(4).Infof("CreateVolume: called with args %+v", *req)
	// Return fake data for now, pretending that a 20G cloud disk with the ID "qcow-1234567" was created.
	return &csi.CreateVolumeResponse{
		Volume: &csi.Volume{
			VolumeId:      "qcow-1234567",
			CapacityBytes: 20 * (1 << 30),
			VolumeContext: req.GetParameters(),
		},
	}, nil
}

// DeleteVolume deletes a volume.
func (cs *ControllerServer) DeleteVolume(ctx context.Context, req *csi.DeleteVolumeRequest) (*csi.DeleteVolumeResponse, error) {
	klog.V(4).Infof("DeleteVolume: called with args: %+v", *req)
	return &csi.DeleteVolumeResponse{}, nil
}

// ControllerPublishVolume attaches a volume to a node.
func (cs *ControllerServer) ControllerPublishVolume(ctx context.Context, req *csi.ControllerPublishVolumeRequest) (*csi.ControllerPublishVolumeResponse, error) {
	klog.V(4).Infof("ControllerPublishVolume: called with args %+v", *req)
	pvInfo := map[string]string{DevicePathKey: "/dev/sdb"}
	return &csi.ControllerPublishVolumeResponse{PublishContext: pvInfo}, nil
}

// ControllerUnpublishVolume detaches a volume from a node.
func (cs *ControllerServer) ControllerUnpublishVolume(ctx context.Context, req *csi.ControllerUnpublishVolumeRequest) (*csi.ControllerUnpublishVolumeResponse, error) {
	klog.V(4).Infof("ControllerUnpublishVolume: called with args %+v", *req)
	return &csi.ControllerUnpublishVolumeResponse{}, nil
}

// TODO(xnile): implement this
func (cs *ControllerServer) ValidateVolumeCapabilities(ctx context.Context, req *csi.ValidateVolumeCapabilitiesRequest) (*csi.ValidateVolumeCapabilitiesResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (cs *ControllerServer) ListVolumes(ctx context.Context, req *csi.ListVolumesRequest) (*csi.ListVolumesResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (cs *ControllerServer) GetCapacity(ctx context.Context, req *csi.GetCapacityRequest) (*csi.GetCapacityResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (cs *ControllerServer) CreateSnapshot(ctx context.Context, req *csi.CreateSnapshotRequest) (*csi.CreateSnapshotResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (cs *ControllerServer) DeleteSnapshot(ctx context.Context, req *csi.DeleteSnapshotRequest) (*csi.DeleteSnapshotResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (cs *ControllerServer) ListSnapshots(ctx context.Context, req *csi.ListSnapshotsRequest) (*csi.ListSnapshotsResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (cs *ControllerServer) ControllerExpandVolume(ctx context.Context, req *csi.ControllerExpandVolumeRequest) (*csi.ControllerExpandVolumeResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}
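The CreateVolume stub above always returns the same fake volume. A real driver has to make CreateVolume idempotent, because the External Provisioner may retry a request: a repeated call with the same name should return the volume created the first time, and a call with the same name but an incompatible size should fail. A minimal in-memory sketch of that behavior, using only the standard library (the store type, the create method, and the vol-NNNN ID format are hypothetical and stand in for a real storage backend):

```go
package main

import (
	"fmt"
	"sync"
)

// volume is a hypothetical record of a provisioned volume.
type volume struct {
	ID       string
	Capacity int64
}

// store keeps volumes indexed by the name given in the create request.
type store struct {
	mu     sync.Mutex
	byName map[string]volume
	next   int
}

func newStore() *store { return &store{byName: map[string]volume{}} }

// create returns the existing volume for name, or allocates a new one.
// Reusing a name with a different capacity is reported as an error.
func (s *store) create(name string, capacity int64) (volume, error) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if v, ok := s.byName[name]; ok {
		if v.Capacity != capacity {
			return volume{}, fmt.Errorf("volume %q already exists with capacity %d", name, v.Capacity)
		}
		return v, nil
	}
	s.next++
	v := volume{ID: fmt.Sprintf("vol-%04d", s.next), Capacity: capacity}
	s.byName[name] = v
	return v, nil
}

func main() {
	s := newStore()
	a, _ := s.create("pvc-1", 20<<30)
	b, _ := s.create("pvc-1", 20<<30) // retry of the same request
	_, err := s.create("pvc-1", 30<<30)
	fmt.Println(a.ID == b.ID, err != nil)
}
```

In a gRPC handler the conflict case would map to the ALREADY_EXISTS status code rather than a plain error.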

The CSI Node service corresponds to the Mount phase of the volume management flow. Its implementation lives in nodeserver.go:

// Node plugin
package kvm

import (
	"github.com/container-storage-interface/spec/lib/go/csi"
	"golang.org/x/net/context"
	"google.golang.org/grpc/codes"
	"google.golang.org/grpc/status"
	"k8s.io/klog"
	"k8s.io/kubernetes/pkg/util/mount"
)

type nodeServer struct {
	nodeID  string
	mounter mount.SafeFormatAndMount
}

func NewNodeServer(nodeid string) *nodeServer {
	return &nodeServer{
		nodeID: nodeid,
		mounter: mount.SafeFormatAndMount{
			Interface: mount.New(""),
			Exec:      mount.NewOsExec(),
		},
	}
}

// NodeStageVolume formats the disk and mounts it to the global directory.
func (ns *nodeServer) NodeStageVolume(ctx context.Context, req *csi.NodeStageVolumeRequest) (*csi.NodeStageVolumeResponse, error) {
	klog.V(4).Infof("NodeStageVolume: called with args %+v", *req)
	return &csi.NodeStageVolumeResponse{}, nil
}

func (ns *nodeServer) NodeUnstageVolume(ctx context.Context, req *csi.NodeUnstageVolumeRequest) (*csi.NodeUnstageVolumeResponse, error) {
	klog.V(4).Infof("NodeUnstageVolume: called with args %+v", *req)
	return &csi.NodeUnstageVolumeResponse{}, nil
}

// NodePublishVolume mounts from the global directory to the target directory (which is later mapped into the Pod).
func (ns *nodeServer) NodePublishVolume(ctx context.Context, req *csi.NodePublishVolumeRequest) (*csi.NodePublishVolumeResponse, error) {
	klog.V(4).Infof("NodePublishVolume: called with args %+v", *req)
	return &csi.NodePublishVolumeResponse{}, nil
}

func (ns *nodeServer) NodeUnpublishVolume(ctx context.Context, req *csi.NodeUnpublishVolumeRequest) (*csi.NodeUnpublishVolumeResponse, error) {
	klog.V(4).Infof("NodeUnpublishVolume: called with args %+v", *req)
	return &csi.NodeUnpublishVolumeResponse{}, nil
}

// NodeGetInfo returns information about the node.
func (ns *nodeServer) NodeGetInfo(ctx context.Context, req *csi.NodeGetInfoRequest) (*csi.NodeGetInfoResponse, error) {
	klog.V(4).Infof("NodeGetInfo: called with args %+v", *req)
	return &csi.NodeGetInfoResponse{
		NodeId: ns.nodeID,
	}, nil
}

// NodeGetCapabilities returns the features the node service supports.
func (ns *nodeServer) NodeGetCapabilities(ctx context.Context, req *csi.NodeGetCapabilitiesRequest) (*csi.NodeGetCapabilitiesResponse, error) {
	klog.V(4).Infof("NodeGetCapabilities: called with args %+v", *req)
	return &csi.NodeGetCapabilitiesResponse{
		Capabilities: []*csi.NodeServiceCapability{
			{
				Type: &csi.NodeServiceCapability_Rpc{
					Rpc: &csi.NodeServiceCapability_RPC{
						Type: csi.NodeServiceCapability_RPC_STAGE_UNSTAGE_VOLUME,
					},
				},
			},
		},
	}, nil
}

func (ns *nodeServer) NodeGetVolumeStats(ctx context.Context, in *csi.NodeGetVolumeStatsRequest) (*csi.NodeGetVolumeStatsResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

func (ns *nodeServer) NodeExpandVolume(ctx context.Context, req *csi.NodeExpandVolumeRequest) (*csi.NodeExpandVolumeResponse, error) {
	return nil, status.Error(codes.Unimplemented, "")
}

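Build the image. The Dockerfile copies the compiled binary (built from main.go beforehand and named main here), not the Go source file:

FROM centos:7
COPY main /main
ENTRYPOINT ["/main"]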
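The article does not show main.go. The entry point has to accept at least the --endpoint and --nodeid flags that the Deployment passes to the container. A minimal sketch of that flag handling, using only the standard library (the config type, parseFlags, and the default endpoint are assumptions; the real main would go on to call NewDriver(nodeID, endpoint).Run() and would also register the klog flags, for example through klog.InitFlags, so that --logtostderr and --v are accepted):

```go
package main

import (
	"errors"
	"flag"
	"fmt"
	"os"
)

// config holds the two settings the driver needs at startup.
type config struct {
	endpoint string
	nodeID   string
}

// parseFlags reads --endpoint and --nodeid from args and validates them.
func parseFlags(args []string) (config, error) {
	var c config
	fs := flag.NewFlagSet("kvm-csi-driver", flag.ContinueOnError)
	fs.StringVar(&c.endpoint, "endpoint", "unix:///csi/csi.sock", "CSI endpoint")
	fs.StringVar(&c.nodeID, "nodeid", "", "ID of the node the driver runs on")
	if err := fs.Parse(args); err != nil {
		return config{}, err
	}
	if c.nodeID == "" {
		return config{}, errors.New("--nodeid is required")
	}
	return c, nil
}

func main() {
	args := os.Args[1:]
	if len(args) == 0 {
		// Demo arguments so the sketch runs without any flags.
		args = []string{"--endpoint=unix:///csi/csi.sock", "--nodeid=knode02"}
	}
	c, err := parseFlags(args)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	fmt.Printf("would start driver: endpoint=%s nodeid=%s\n", c.endpoint, c.nodeID)
}
```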

Create the Namespace and the RBAC resources:

apiVersion: v1
kind: Namespace
metadata:
  name: csi-dev
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kvm-csi-driver
  namespace: csi-dev
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kvm-csi-driver
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "update", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["csinodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "watch", "list", "delete", "update", "create"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "watch", "list", "delete", "update", "create"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["csi.storage.k8s.io"]
    resources: ["csinodeinfos"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents"]
    verbs: ["create", "get", "list", "watch", "update", "delete"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["apiextensions.k8s.io"]
    resources: ["customresourcedefinitions"]
    verbs: ["create", "list", "watch", "delete"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kvm-csi-driver
subjects:
  - kind: ServiceAccount
    name: kvm-csi-driver
    namespace: csi-dev
roleRef:
  kind: ClusterRole
  name: kvm-csi-driver
  apiGroup: rbac.authorization.k8s.io

Create the Deployment for the CSI driver:

kind: Deployment
apiVersion: apps/v1
metadata:
  name: kvm-csi-driver
  namespace: csi-dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kvm-csi-driver
  template:
    metadata:
      labels:
        app: kvm-csi-driver
    spec:
      nodeSelector:
        kubernetes.io/hostname: knode02
      serviceAccountName: kvm-csi-driver
      containers:
        # Plugin
        - name: kvm-csi-driver
          image: xnile/kvm-csi-driver:v0.1
          args:
            - --endpoint=$(CSI_ENDPOINT)
            - --nodeid=$(KUBE_NODE_NAME)
            - --logtostderr
            - --v=5
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
          securityContext:
            privileged: true
          volumeMounts:
            - name: kubelet-dir
              mountPath: /var/lib/kubelet
              mountPropagation: "Bidirectional"
            - name: plugin-dir
              mountPath: /csi
            - name: device-dir
              mountPath: /dev
        # Sidecar: node-driver-registrar
        - name: node-driver-registrar
          image: quay.io/k8scsi/csi-node-driver-registrar:v1.2.0
          args:
            - --csi-address=$(ADDRESS)
            - --kubelet-registration-path=$(DRIVER_REG_SOCK_PATH)
            - --v=5
          lifecycle:
            preStop:
              exec:
                command: ["/bin/sh", "-c", "rm -rf /registration/kvm.csi.dianduidian.com-reg.sock /csi/csi.sock"]
          env:
            - name: ADDRESS
              value: /csi/csi.sock
            - name: DRIVER_REG_SOCK_PATH
              value: /var/lib/kubelet/plugins/kvm.csi.dianduidian.com/csi.sock
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        # Sidecar: livenessprobe
        - name: liveness-probe
          image: quay.io/k8scsi/livenessprobe:v1.1.0
          args:
            - "--csi-address=/csi/csi.sock"
            - "--v=5"
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
        # Sidecar: csi-provisioner
        - name: csi-provisioner
          image: quay.io/k8scsi/csi-provisioner:v1.3.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
            - "--feature-gates=Topology=True"
          env:
            - name: ADDRESS
              value: unix:///csi/csi.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
        # Sidecar: csi-attacher
        - name: csi-attacher
          image: quay.io/k8scsi/csi-attacher:v1.2.1
          args:
            - "--v=5"
            - "--csi-address=$(ADDRESS)"
          env:
            - name: ADDRESS
              value: /csi/csi.sock
          imagePullPolicy: "Always"
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
      volumes:
        - name: kubelet-dir
          hostPath:
            path: /var/lib/kubelet
            type: Directory
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins/kvm.csi.dianduidian.com/
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: Directory
        - name: device-dir
          hostPath:
            path: /dev
            type: Directory
