循环神经网络

任务

任务:自动撰写文章

提供标题:what is flare

AI生成文章:“A flare, also sometimes called a fusee, is a type of pyrotechnic that produces a bright light or intense heat without an explosion. Flares are used for distress signaling, illumination, or defensive countermeasures in civilian and military applications … (数据来源:http://ai-writer.com/)”

任务:自动寻找语句中的人名

1、The courses are taught by Flare Zhao and David Chen.

2、How long does a flare last in the air?

基于文本内容及其前后信息进行预测

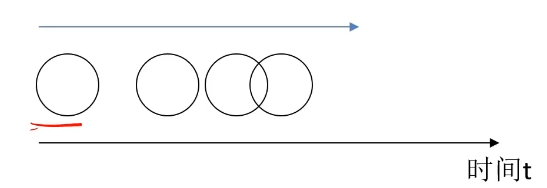

任务:物体位置预测

T=10s的时候,球在什么位置?

基于目标不同时刻状态进行预测

任务:股价预测

通过历史股价,预测次日股票价格

基于数据历史信息进行预测

序列模型

输入或者输出中包含有序列的数据的模型

突出数据的前后序列关系

两大特点:

1、输入(输出)元素之间是具有顺序关系。不同的顺序,得到的结果应该是不同的,不如”不吃饭“和”吃饭不“这两个短语的意思是不同的

2、输入输出不定长。比如文章生成、聊天机器人

循环神经网络

RNN:前部序列的信息经处理后,作为输入信息传递到后部序列.

数学公式:

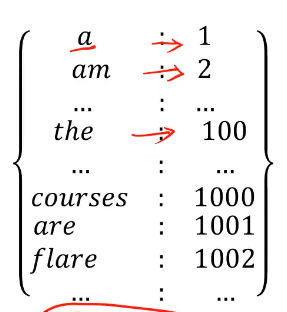

词汇数值化:

建立一个词汇-数值一一对应的字典,然后把输入词汇转化为数值矩阵

模型通过loss值进行更新

RNN常见结构

结构1:多输入对应多输出、维度相同RNN结构

输入:x1,x2,…,xi

输出:y1,y2,…,yi

应用:特定信息识别

结构2:多输入单输出RNN结构

输入:x1,x2,…,xi

输出:y

应用:情感识别

举例:输入I feel happy watching the movie,输出angry

单输入多输出RNN结构

输入:x

输出:y1,y2,…,yi

应用:序列数据生成器

举例:文章生成,音乐生成

多输入多输出RNN结构

输入:x1,x2,…,xi

输出:y1,y2,…,yi

应用:语言翻译

what is artificial intelligence? --> 什么是人工智能?

普通RNN结构缺陷

1、前部序列信息在传递到后部的同时,信息权重下降,导致重要信息丢失

2、求解过程中梯度消失

需要提高前部特定信息的决策权重

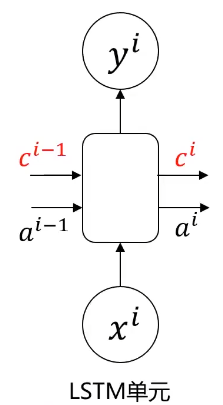

长短期记忆网络(LSTM)

通过ai传递前部序列信息,距离越远信息丢失越多

增加记忆细胞ci,可以传递前部远处部位信息

总结(简化理解):

相比ai,记忆细胞ci重点记录前部序列重要信息,且在传递过程中信息丢失少

忘记门:选择性丢弃ai-1与xi中不重要的信息

更新门:确定给记忆细胞添加哪些信息

输出门:筛选需要输出的信息

1、在网络结构很深(很多层)的情况下,也能保留重要信息

2、解决了普通RNN求解过程中的梯度消失问题

双向循环神经网络BRNN

判断flare是否为人名:

1、The courses are taught by Flare Zhao and David Chen.

2、Hong long does a flare last in the air?

做判断时,把后部序列信息也考虑

深层循环神经网络(DRNN)

解决更复杂的序列任务,可以把单层RNN叠起来或者在输出前和普通mlp结构结合使用

实战准备

实战(1)

任务:基于zgpa_train.csv数据,建立RNN模型,预测股价

1、完成数据预处理,将序列数据转化为可用于RNN输入的数据

2、对新数据zgpa_test.csv进行预测,可视化结果

3、存储预测结果,并观察局部预测结果

模型结构:单层RNN,输出有5个神经元

每次使用前八个数据预测第九个数据

#提取序列数据:

def extract_data(data,slide):

x=[]

y=[]

for i in range(len(data)-slide):

x.append([a for a in data[i:i+slide]])

#或x.append(data[i:i+slide])

y.append(data[i+slide])

x = np.array(x)

x = x.reshape(x.reshape[0],x.shape[1],1)

return x, y

其中代码:

x = np.array(x)

x = x.reshape(x.reshape[0],x.shape[1],1)

的意思如下:

#建立普通RNN模型:

from keras.models import Sequential

from keras.layers import Densen,SimpleRNN

model = Sequential()

#增加一个RNN层:

model.add(SimpleRNN(units=5,input_shape=(X.shape[1],X.shape[2]),activation='relu')) #samples默认自动计算

#增加输出层

model.add(Dense(unit=1,activation='linear'))

model.compile(optimizer='adam',loss='mean_squared_error')

input_shape=(sample,time_steps,features)

samples:样本数量(模型根据输入数据自动计算)

time_steps:序列的长度,即用多少个连续样本预测一个输出

features:样本的特征维数([0,0,1]对应3)

假设股票数据样本有100个,每次用8跳数据预测第九条,股票数据为单维度数值,要求输入数据的shape为(100,8,1)

完整代码:

import pandas as pd

import numpy as np

data = pd.read_csv('zgpa_train.csv')

data.head()

price = data.loc[:,'close'] #读入收盘价

price.head()

#归一化处理

price_norm = price/max(price)

print(price_norm)

%matplotlib inline

from matplotlib import pyplot as plt

fig1 = plt.figure(figsize=(8,5))

plt.plot(price)

plt.title('close price')

plt.xlabel('time')

plt.ylabel('price')

plt.show()

#define the X and y

#define methond to extract X and y

def extract_data(data,time_step):

x = []

y = []

#0,1,2,3,...,9:10个样本:time_step=8;0,1,...,7;1,2,...,8;2,3,...,9三组(两组样本)

for i in range(len(data)-time_step):

X.append([a for a in data[i:i+time_step]])

y.append(data[i+time_step])

X = np.array(X)

X = X.reshape(X.shape[0],X.shape[1],1)

return X, y

time_step = 8

#define X and y

X, y = extract_data(price_norm,time_step)

##print(X)

##print(X.shape)

##print(X[0,:,:])

##print(y)

#set up the moedl

from keras.models import Sequential

from keras.layers import Dense, SimplieRNN

model = Sequential()

#add RNN layer

model.add(SimpleRNN(units=5,input_shape=(time_step,1),activation='relu'))

#add output layer

model.add(Dense(units=1,activation='linear'))

#configure the model

model.compile(optimizer='adam',loss='mean_squard_error')

model.summary()

#train the model

model.fit(X,y,batch_size=30,epochs=200)

#make prediction based on training data

y_train_predict = model.predict(X)*max(price)

y_train = [y*max(price) for i in y]

##print(y_train_predict, y_train)

fig2 = plt.figure(figsize=(8,5))

plt.plot(y_train,label='real price')

plt.plot(y_train_predict,label='predict price')

plt.title('close price')

plt.xlabel('time')

plt.ylabel('price')

plt.show()

#load test data

data_test = pd.read_csv('zgpa_test.csv')

data_test.head()

price_test = data_test.loc[:,'close']

pirce_test.head()

price_test_norm = price_test/max(price)

#extract X_test and y_test 取出X和y

X_test_norm, y_test_norm = extract_data(price_test_norm,time_step)

print(X_test_norm.shape,len(y_test_norm))

#make prediction based on the test data

y_test_predict = model.predict(X_test_norm)*max(price)

y_test = [i*max(price) for i in y_test_norm]

fig3 = plt.figure(figsize=(8,5))

plt.plot(y_test,label='real price_test')

plt.plot(y_test_predict,label='predict price_test')

plt.title('close price')

plt.xlabel('time')

plt.ylabel('price')

plt.show()

#预测数据的存储

result_y_test = np.array(y_test).reshape(-1,1)

result_y_test_predict = y_test_predict

##print(result_y_test .shape,result_y_test_predict.shape)

result = np.concate(result_y_test,result_y_test_predict,axis=1)

##print(result.shape)

result = pd.DataFrame(result,columns=['real_price_test','predict_price_test'])

result.to_csv('zgpa_predict_test.csv')

实战(2):LSTM自动生成文本

任务:基于flare文本数据,建立LSTM模型,预测序列文字

1、完成数据预处理,将文字序列数据转化为可用于LSTM输入的数据

2、查看文字数据预处理后的数据结构,并进行数据分离操作

3、针对字符串输入(“flare is a teacher in ai industry.He obtained his phd in Australia.”),预测其对应的后续字符

模型结构:

单层LSTM,输出有20个神经元

每次使用前20个字符预测第21个字符

#文本加载

raw data = open('flare').read()

#字符字典建立

#字符去重

letters = list(set(data))

#建立数字到字符的索引字典

int_to_char = {a:b for a,b in enumerate(letters)}

#建立字符到数字的索引字典

char_to_int = {b:a for a,b in enumerate(letters)}

注:

完整代码

源代码V1(报错):

#load the data

data = open('flare').read()

#移除换行符

data = data.replace('\n','').replace('\r','')

print(data)

#字符去重处理

letters = list(set(data))

print(letters)

num_letters = len(letters)

print(num_letters)

#建立字典

#int to char

int_to_char = {a:b for a,b in enumerate(letters)}

##print(int_to_char)

#char to int

char_to_int = {b:a for a,b int enumerate(letters)}

##print(char_to_int)

#time_step

time_step = 20

#批量处理

import numpy as np

from keras.utils import to_categorical

#滑动窗口提取数据

def extract_data(data, slide):

x = []

y = []

for i in range(len(data) - slide):

x.append([a for a in data[i:i+slide]])

y.append(data[i+slide])

X = np.array(X)

X = X.reshape(X.shape[0],X.shape[1],1)

return X, y

#字符到数字的批量转化

def char_to_int_Data(x,y, char_to_int):

x_to_int = []

y_to_int = []

for i in range(len(x)):

x_to_int.append([char_to_int[char] for char in x[i]])

y_to_int.append([char_to_int[char] for char in y[i]])

return x_to_int, y_to_int

#实现输入字符文章的批量处理,输入整个字符、滑动窗口大小、转化字典

def data_preprocessing(data, slide, num_letters, char_to_int):

char_Data = extract_data(data, slide)

int_Data = char_to_int_Data(char_Data[0], char_Data[1], char_to_int)

Input = int_Data[0]

Output = list(np.array(int_Data[1]).flatten())

Input_RESHAPED = np.array(Input).reshape(len(Input), slide)

new = np.random.randint(0,10,size=[Input_RESHAPED.shape[0],Input_RESHAPED.shape[1],num_letters])

for i in range(Input_RESHAPED.shape[0]):

for j in range(Input_RESHAPED.shape[1]):

new[i,j,:] = to_categorical(Input_RESHAPED[i,j],num_classes=num_letters)

return new, Output

#extract X and y from text data

X, y = data_preprocessing(data,time_step,num_letters,char_to_int)

##print(X.shape)

##print(len(y))

#split the data数据分离

from sklearn.model_selection import train_test_split

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.1,random_state=10)

##print(X.shape,len(y))

y_train_category = to_categorical(y_train,num_letters)

print(y_train_category)

#set up the model

from keras.models import Sequential

from keras.layers import Dense,LSTM

model = Sequential()

model.add(LSTM(units=20,input_shape=(X_train.shape[1],X_train.shape[2]),activation='relu'))

model.add(Dense(units=num_letters,activation='softmax'))

model.compile(optimizer='adam',loss='categorical_crossentropy',metrics=['accuracy'])

model.summary()

#train the model

model.fit(X_train,y_train_category,batch_size=1000,epochs=5)

#make prediction based on the training data

y_train_predict = model.predict_classes(X_train)

print(y_train_predict)

#transform the int to letters

y_train_predict_char = [int_to_char[i] for i in y_train_predict]

print(y_train_predict_char)

from sklearn.metrics import accuracy_score

accuracy_train = accuracy_score(y_train,y_train_predict)

print(accuracy_train)

y_test_predict = model.predict_classes(X_test)

y_test_predict_char = [int_to_char[i]] for i in y_test_predict]

accuracy_test = accuracy_score(y_test,y_test_predict)

print(accuracy_test)

print(y_test_predict)

print(y_test)

new_letters = 'flare is a teacher in ai industry.He obtained his phd in Australia'

X_new, y_new = data_preprocessing(data,time_step,num_letters,char_to_int)

y_new_predict = model.predict(X_new)

print(y_new_predict)

#transform the int to letters

y_new_predict_char = [int_to_char[i] for i in y_new_predict]

print(y_new_predict_char)

for i in range(0,X_new.shape[0]-20):

print(new_letters[i:i+20],'--predict next letters is--',y_new_predict_char[i])

修改版V2:

import numpy as np

from keras.utils import to_categorical

from keras.models import Sequential

from keras.layers import Dense, LSTM

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# Load the data

data = open('flare.txt').read()

# Remove newlines

data = data.replace('\n', '').replace('\r', '')

print(data)

# Character deduplication

letters = list(set(data))

print(letters)

num_letters = len(letters)

print(num_letters)

# Create dictionaries

int_to_char = {a: b for a, b in enumerate(letters)}

char_to_int = {b: a for a, b in enumerate(letters)}

# Time step

time_step = 20

# Sliding window data extraction

def extract_data(data, slide):

x = []

y = []

for i in range(len(data) - slide):

x.append([a for a in data[i:i + slide]])

y.append(data[i + slide]) # y should be a single character

return x, y

# Character to integer batch conversion

def char_to_int_Data(x, y, char_to_int):

x_to_int = []

y_to_int = []

for i in range(len(x)):

x_to_int.append([char_to_int[char] for char in x[i]])

y_to_int.append(char_to_int[y[i]]) # y[i] is a single character

return x_to_int, y_to_int

# Implement batch processing for input text

def data_preprocessing(data, slide, num_letters, char_to_int):

char_Data = extract_data(data, slide)

int_Data = char_to_int_Data(char_Data[0], char_Data[1], char_to_int)

Input = int_Data[0]

Output = int_Data[1] # Output is already a list of integers

Input_RESHAPED = np.array(Input).reshape(len(Input), slide, 1)

new = np.zeros((Input_RESHAPED.shape[0], Input_RESHAPED.shape[1], num_letters))

for i in range(Input_RESHAPED.shape[0]):

for j in range(Input_RESHAPED.shape[1]):

new[i, j, :] = to_categorical(Input_RESHAPED[i, j], num_classes=num_letters)

return new, Output

# Extract X and y from text data

X, y = data_preprocessing(data, time_step, num_letters, char_to_int)

# Split the data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1, random_state=10)

# Convert y_train to categorical

y_train_category = to_categorical(y_train, num_letters)

print(y_train_category)

# Set up the model

model = Sequential()

model.add(LSTM(units=20, input_shape=(X_train.shape[1], X_train.shape[2]), activation='relu'))

model.add(Dense(units=num_letters, activation='softmax'))

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.summary()

# Train the model

model.fit(X_train, y_train_category, batch_size=1000, epochs=3)

# Make predictions based on the training data

y_train_predict = np.argmax(model.predict(X_train), axis=1)

print(y_train_predict)

# Transform the int to letters

y_train_predict_char = [int_to_char[i] for i in y_train_predict]

print(y_train_predict_char)

# Calculate accuracy

accuracy_train = accuracy_score(y_train, y_train_predict)

print(accuracy_train)

# Predict on test data

y_test_predict = np.argmax(model.predict(X_test), axis=1)

y_test_predict_char = [int_to_char[i] for i in y_test_predict]

accuracy_test = accuracy_score(y_test, y_test_predict)

print(accuracy_test)

print(y_test_predict)

print(y_test)

# New data for prediction

new_letters = 'flare is a teacher in ai industry. He obtained his phd in Australia'

X_new, y_new = data_preprocessing(new_letters, time_step, num_letters, char_to_int)

y_new_predict = np.argmax(model.predict(X_new), axis=1)

y_new_predict_char = [int_to_char[i] for i in y_new_predict]

print(y_new_predict_char)

# Print predictions

for i in range(0, X_new.shape[0]):

print(new_letters[i:i + time_step], '--predict next letter is--', y_new_predict_char[i])

总结

LSTM文本生成实战summary

1、通过搭建LSTM模型,实现了基于文本序列的字符生成功能;

2、学习了文本加载、字典生成方法;

3、掌握了文本的数据预处理方法,并熟悉了转化数据的结构;

4、实现了对新文本数据的字符预测

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?