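1. Background

1) log_sum_exp

This is a numerically stable way of computing log(sum(exp(x))). It is a close relative of softmax (it is the log of the softmax denominator), and subtracting the maximum before exponentiating keeps exp from overflowing or underflowing. For details, see 关于LogSumExp - 知乎 (a Zhihu article).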
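To make the overflow problem concrete, here is a minimal sketch in plain Python (no PyTorch) that compares the direct formula with the max-subtraction trick. The function names `naive_log_sum_exp` and `stable_log_sum_exp` are made up for this illustration and do not appear in the model code.

```python
import math


def naive_log_sum_exp(xs):
    # Direct formula: math.exp overflows for large inputs (around 710 and above)
    return math.log(sum(math.exp(x) for x in xs))


def stable_log_sum_exp(xs):
    # Subtract the maximum first, so every exponent is <= 0 and exp cannot overflow
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


small = [1.0, 2.0, 3.0]
large = [1000.0, 1001.0, 1002.0]

# On small inputs both versions agree
print(naive_log_sum_exp(small), stable_log_sum_exp(small))

# On large inputs the naive version raises OverflowError, the stable one does not
try:
    naive_log_sum_exp(large)
except OverflowError as err:
    print("naive version failed:", err)
print(stable_log_sum_exp(large))
```

Shifting every input by the same constant shifts the result by that constant, which is why the stable version can pull the maximum out of the sum.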

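    import torch


    def argmax(vec):
        # Helper assumed by the code in this post: index of the largest entry of a (1, N) tensor, as a Python int
        _, idx = torch.max(vec, 1)
        return idx.item()


    # Compute log sum exp in a numerically stable way for the forward algorithm
    def log_sum_exp(vec):
        max_score = vec[0, argmax(vec)]
        max_score_broadcast = max_score.view(1, -1).expand(1, vec.size()[1])
        # Subtracting the max keeps every exponent <= 0, so exp cannot overflow
        return max_score + \
            torch.log(torch.sum(torch.exp(vec - max_score_broadcast)))

2) The Viterbi algorithm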

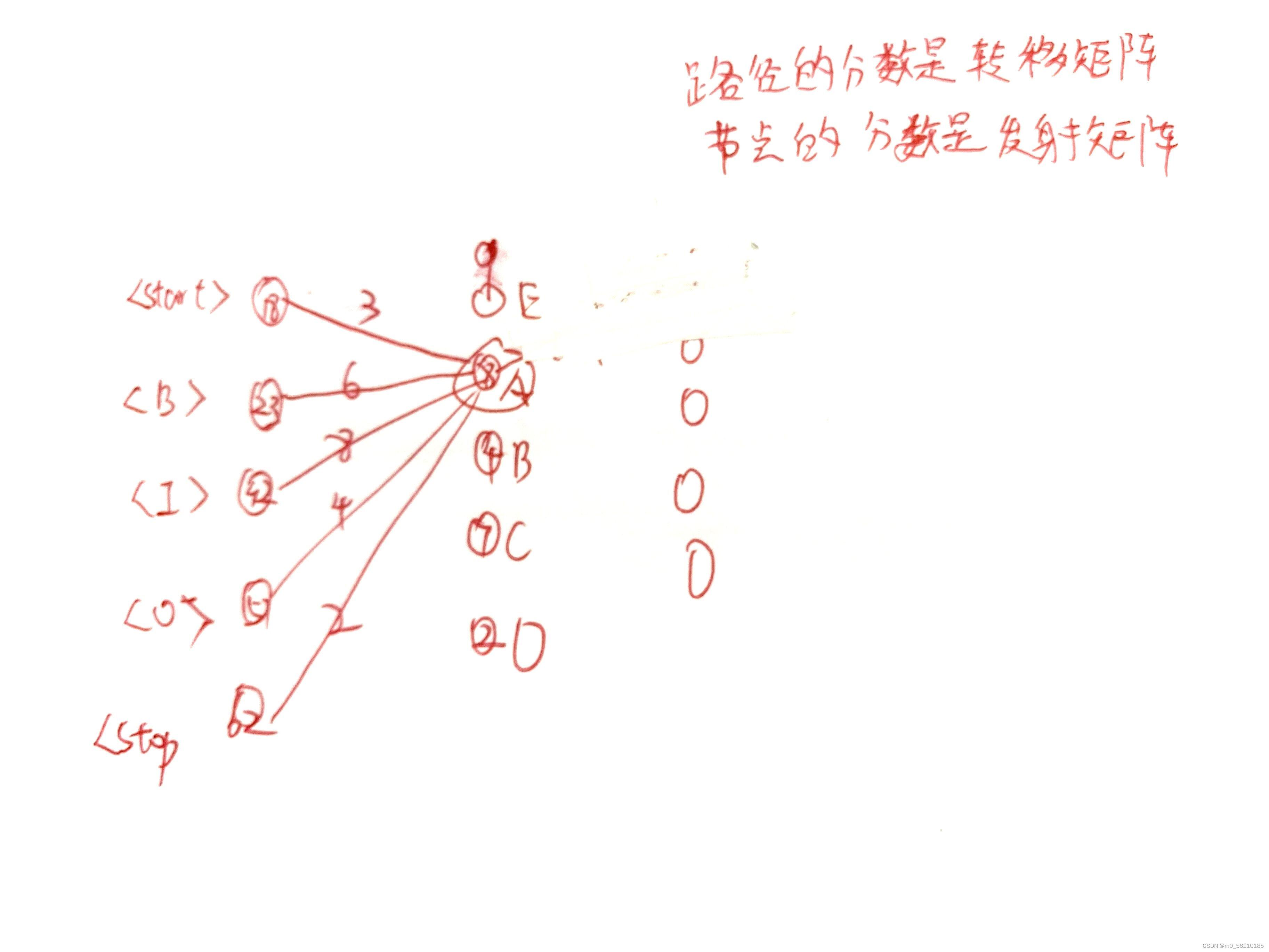

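Given the emission matrix and the transition matrix, this algorithm finds the highest-scoring path through the lattice. The key idea is that at every step we discard the paths that cannot possibly lead to the highest score, as shown in the figure.

Consider all the paths that arrive at node A in the second column (the first column holds the total scores accumulated so far). One of these five paths must have the highest score, so we keep only that one, add A's own score, and use the result as A's new total. Doing the same for B, C, D and E gives the totals for the whole second column. We then find the best way to reach each node in the third column, and so on. Computing the best score over the whole lattice therefore needs only a single loop over positions: with K tags and T words the cost is on the order of T·K² instead of enumerating all K^T paths. One small detail: because a sentence always starts from <start>, in the very first column we set the total for <start> to 0 and every other total to a very large negative number, which guarantees that the best path from the first column into the second always starts from <start>.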
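The procedure described above can be traced on a tiny example. The following is a sketch in plain Python with a made-up tag set (`B`, `O`, `<start>`, `<stop>`) and made-up scores; only the indexing convention (`transitions[to][from]`) and the -10000 initialization follow the model code in this post.

```python
NEG = -10000.0
tags = ["B", "O", "<start>", "<stop>"]  # hypothetical tag set for illustration
START, STOP = 2, 3

# emissions[t][j]: score of tag j at position t (made-up numbers, 3 tokens)
emissions = [
    [2.0, 1.0, NEG, NEG],
    [1.5, 1.0, NEG, NEG],
    [0.0, 2.0, NEG, NEG],
]

# transitions[j][i]: score of moving from tag i to tag j
transitions = [
    [-1.0, 0.5, 0.0, NEG],   # into B
    [0.5, 0.25, 0.0, NEG],   # into O
    [NEG, NEG, NEG, NEG],    # into <start>: never allowed
    [0.0, 0.0, NEG, NEG],    # into <stop>
]


def viterbi(emissions, transitions):
    n = len(tags)
    # First column: only <start> has a usable score
    prev = [NEG] * n
    prev[START] = 0.0
    backpointers = []
    for emit in emissions:
        cur, ptrs = [], []
        for j in range(n):
            # Best previous tag for reaching tag j at this position
            cands = [prev[i] + transitions[j][i] for i in range(n)]
            best_i = max(range(n), key=lambda i: cands[i])
            ptrs.append(best_i)
            cur.append(cands[best_i] + emit[j])
        backpointers.append(ptrs)
        prev = cur
    # Final move into <stop>
    final = [prev[i] + transitions[STOP][i] for i in range(n)]
    best = max(range(n), key=lambda i: final[i])
    # Follow the backpointers from the end to the beginning
    path = [best]
    for ptrs in reversed(backpointers):
        path.append(ptrs[path[-1]])
    path.pop()  # drop the <start> tag
    path.reverse()
    return final[best], [tags[i] for i in path]


score, best_path = viterbi(emissions, transitions)
print(score, best_path)  # 5.75 ['B', 'O', 'O']
```

Checking by hand: the path B, O, O collects emissions 2.0 + 1.0 + 2.0 and transitions 0.0 + 0.5 + 0.25 + 0.0, which gives 5.75.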

    def _viterbi_decode(self, feats):
        backpointers = []

        # Initialize the viterbi variables in log space
        init_vvars = torch.full((1, self.tagset_size), -10000.)
        init_vvars[0][self.tag_to_ix[START_TAG]] = 0

        # forward_var at step i holds the viterbi variables for step i-1
        forward_var = init_vvars
        for feat in feats:
            bptrs_t = []  # holds the backpointers for this step
            viterbivars_t = []  # holds the viterbi variables for this step

            for next_tag in range(self.tagset_size):
                # next_tag_var[i] holds the viterbi variable for tag i at the
                # previous step, plus the score of transitioning
                # from tag i to next_tag.
                # We don't include the emission scores here because the max
                # does not depend on them (we add them in below)
                next_tag_var = forward_var + self.transitions[next_tag]
                best_tag_id = argmax(next_tag_var)
                bptrs_t.append(best_tag_id)
                viterbivars_t.append(next_tag_var[0][best_tag_id].view(1))
            # Now add in the emission scores, and assign forward_var to the set
            # of viterbi variables we just computed
            forward_var = (torch.cat(viterbivars_t) + feat).view(1, -1)
            backpointers.append(bptrs_t)

        # Transition to STOP_TAG
        terminal_var = forward_var + self.transitions[self.tag_to_ix[STOP_TAG]]
        best_tag_id = argmax(terminal_var)
        path_score = terminal_var[0][best_tag_id]

        # Follow the back pointers to decode the best path.
        best_path = [best_tag_id]
        for bptrs_t in reversed(backpointers):
            best_tag_id = bptrs_t[best_tag_id]
            best_path.append(best_tag_id)
        # Pop off the start tag (we don't want to return that to the caller)
        start = best_path.pop()
        assert start == self.tag_to_ix[START_TAG]  # Sanity check
        best_path.reverse()
        return path_score, best_path

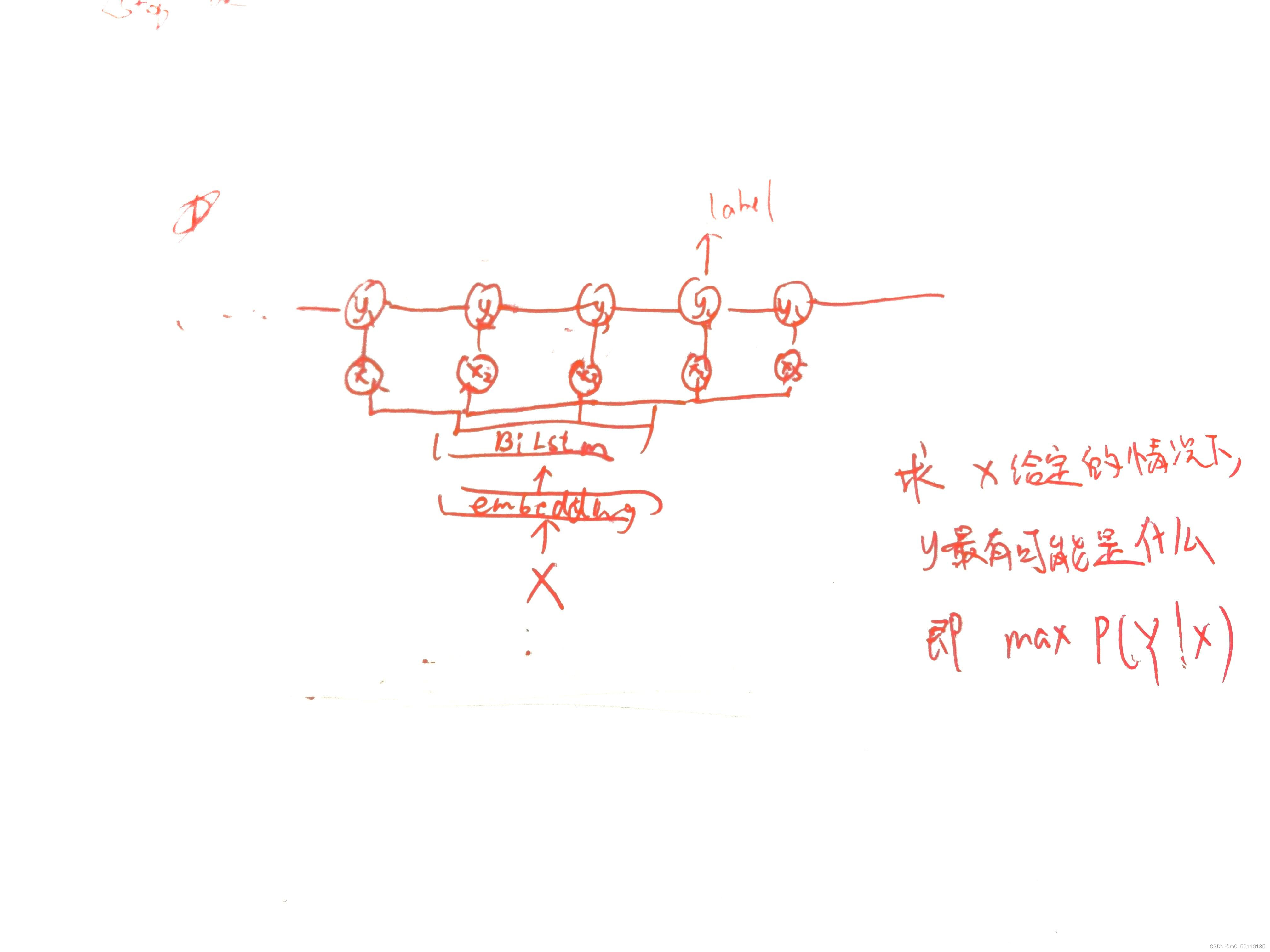

3) What we are trying to do

Given a sentence, find the most probable combination of labels, as shown in the figure.

2. Main text

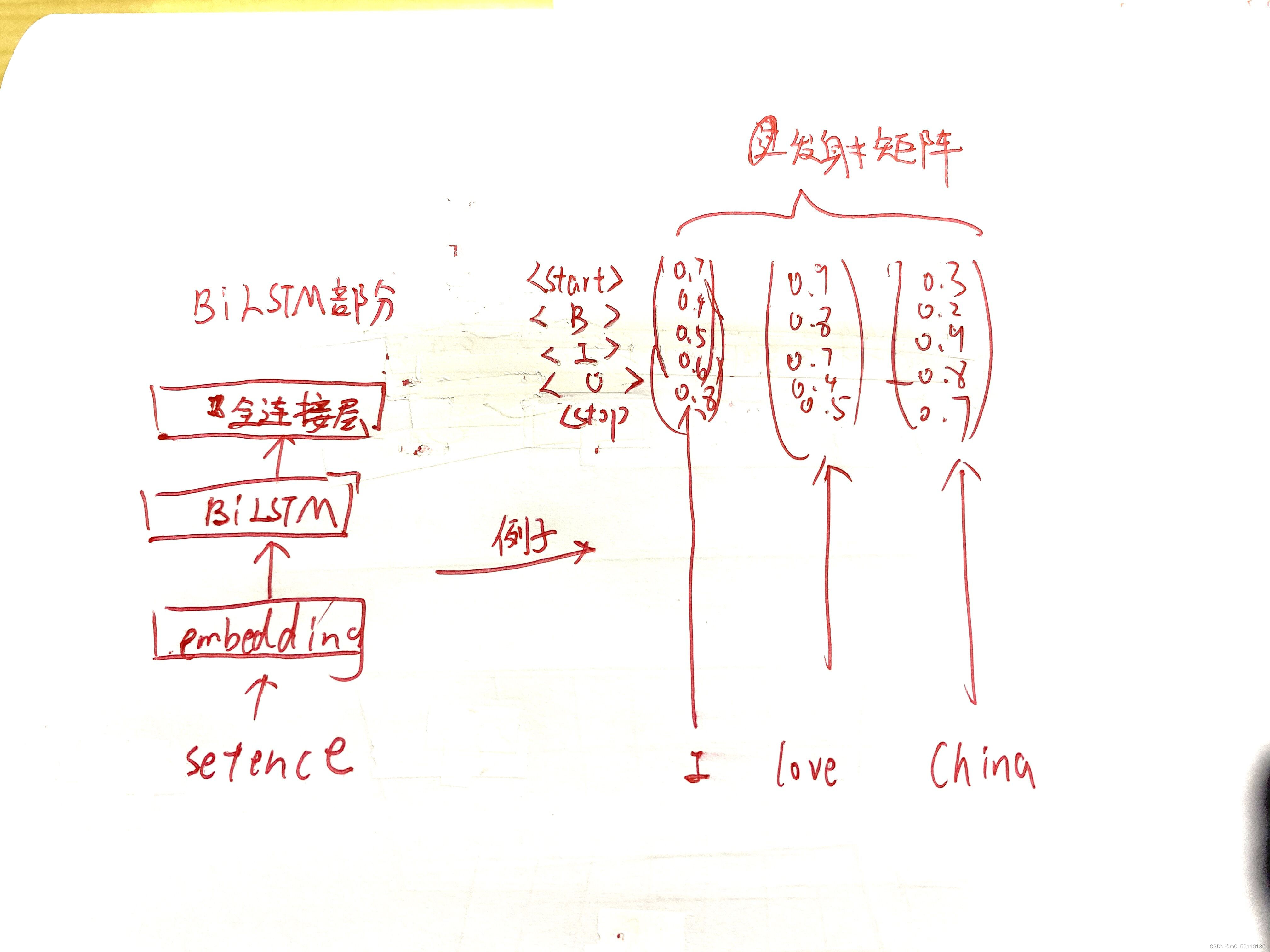

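The BiLSTM_CRF model has two parts: a BiLSTM part and a CRF part.

Let's start with the simpler BiLSTM part. The main code related to it is shown below.

In the initializer we build an ordinary BiLSTM model whose output size is the number of target (tag) classes.

Stacking the outputs for every character into one matrix gives the so-called emission matrix, as shown in the figure. The code is as follows: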

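    def __init__(self, vocab_size, tag_to_ix, embedding_dim, hidden_dim):
        super(BiLSTM_CRF, self).__init__()
        self.embedding_dim = embedding_dim
        self.hidden_dim = hidden_dim
        self.vocab_size = vocab_size
        self.tag_to_ix = tag_to_ix
        self.tagset_size = len(tag_to_ix)

        self.word_embeds = nn.Embedding(vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim // 2,
                            num_layers=1, bidirectional=True)

        # Maps the output of the LSTM into tag space.
        self.hidden2tag = nn.Linear(hidden_dim, self.tagset_size)

    def _get_lstm_features(self, sentence):
        # This function already produces the emission matrix:
        # feats has shape (len(sentence), tag_num)
        self.hidden = self.init_hidden()
        embeds = self.word_embeds(sentence).view(len(sentence), 1, -1)
        lstm_out, self.hidden = self.lstm(embeds, self.hidden)
        lstm_out = lstm_out.view(len(sentence), self.hidden_dim)
        lstm_feats = self.hidden2tag(lstm_out)
        return lstm_feats

The BiLSTM's scores then have to be turned into a predicted tag sequence Y for the input X, and that is done with the Viterbi algorithm described earlier (Viterbi only decodes; the parameters themselves are updated through the loss function discussed at the end). You may ask: doesn't an ordinary BiLSTM tagger simply put a softmax on its outputs? The reason is that the BiLSTM is no longer standalone; it sits underneath the CRF. That means it is free to give a large score to a tag that the CRF considers impossible at that position. Take 跳跳真可爱 ("Tiaotiao is so cute"): judged on its own, the character 跳 looks very likely to be O, and the LSTM is allowed to say so, because the final Viterbi path simply will not choose it. If the BiLSTM were instead trained separately, the sentence annotation would tell the model directly that 跳 is B, which could interfere with the LSTM layer. The code is as follows: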

    def forward(self, sentence):  # don't confuse this with _forward_alg
        # Get the emission scores from the BiLSTM
        lstm_feats = self._get_lstm_features(sentence)

        # Find the best path, given the features.
        score, tag_seq = self._viterbi_decode(lstm_feats)
        return score, tag_seq

Now we have the emission matrix (the x -> y_i relationship), which means the data the CRF needs about X is ready. What is still missing is the transition matrix, i.e. the (y_{i-1} -> y_i) relationship. It is created as follows:

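    # Transition matrix: transitions[i, j] is the score (not a probability)
    # of moving from tag j to tag i
    self.transitions = nn.Parameter(
        torch.randn(self.tagset_size, self.tagset_size))

    # Rows (first index) are the tag being transitioned to, columns (second
    # index) are the tag being transitioned from. Nothing may transition
    # into START, and STOP may not transition to anything.
    self.transitions.data[tag_to_ix[START_TAG], :] = -10000
    self.transitions.data[:, tag_to_ix[STOP_TAG]] = -10000

Yes, you read that right: the matrix is initialized randomly. Two constraints are fixed by hand, though: the <start> tag can never be transitioned into, and the <stop> tag can never transition to any other tag, so those entries are manually set to a very low value, as shown in the figure.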
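Because the "row is the destination, column is the source" convention is easy to get backwards, here is a small sketch in plain Python. The tag set is hypothetical, zeros stand in for the random initialization, and `transition_score` is a helper invented for this illustration.

```python
NEG = -10000.0
# Hypothetical tag set, for illustration only
tag_to_ix = {"B": 0, "I": 1, "O": 2, "<start>": 3, "<stop>": 4}
n = len(tag_to_ix)

# transitions[to][from]; zeros stand in for the random initial values
transitions = [[0.0] * n for _ in range(n)]
start, stop = tag_to_ix["<start>"], tag_to_ix["<stop>"]
for k in range(n):
    transitions[start][k] = NEG  # row <start>: nothing may move INTO <start>
    transitions[k][stop] = NEG   # column <stop>: nothing may move OUT OF <stop>


def transition_score(from_tag, to_tag):
    # The destination tag indexes the row, the source tag indexes the column
    return transitions[tag_to_ix[to_tag]][tag_to_ix[from_tag]]


print(transition_score("<start>", "B"))  # allowed: leaving <start>
print(transition_score("B", "<start>"))  # forbidden: entering <start>
print(transition_score("O", "<stop>"))   # allowed: entering <stop>
print(transition_score("<stop>", "O"))   # forbidden: leaving <stop>
```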

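Now that we have both matrices, how do we compute p(y|x)? We need its normalizer: the log of the summed exponentiated scores of all possible tag paths (the partition function). This is what the CRF forward algorithm computes. The code is as follows: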

    def _forward_alg(self, feats):
        # Do the forward algorithm to compute the partition function
        # Start with every entry at -10000, e.g. [[-10000, -10000, -10000, -10000, -10000]]
        init_alphas = torch.full((1, self.tagset_size), -10000.)
        # START_TAG has all of the score: only its entry is set to 0
        init_alphas[0][self.tag_to_ix[START_TAG]] = 0.
        # In short: any path that does not begin at START gets a very low score

        # Wrap in a variable so that we will get automatic backprop
        forward_var = init_alphas

        # Iterate through the sentence
        for feat in feats:
            alphas_t = []  # The forward tensors at this timestep
            for next_tag in range(self.tagset_size):
                # broadcast the emission score: it is the same regardless of
                # the previous tag
                emit_score = feat[next_tag].view(
                    1, -1).expand(1, self.tagset_size)
                # the ith entry of trans_score is the score of transitioning to
                # next_tag from i (row next_tag of the transition matrix)
                trans_score = self.transitions[next_tag].view(1, -1)
                # The ith entry of next_tag_var is the value for the
                # edge (i -> next_tag) before we do log-sum-exp
                next_tag_var = forward_var + trans_score + emit_score
                # The forward variable for this tag is log-sum-exp of all the
                # scores.
                alphas_t.append(log_sum_exp(next_tag_var).view(1))
            forward_var = torch.cat(alphas_t).view(1, -1)
        terminal_var = forward_var + self.transitions[self.tag_to_ix[STOP_TAG]]
        alpha = log_sum_exp(terminal_var)
        return alpha

As for the theory behind this code, others have already explained it very clearly. See the part on computing marginal probabilities for a known model in the following study log: [学习日志]白板推导-条件随机场 CRF Conditional Random Field_烫烫烫烫的若愚的博客-CSDN博客
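One way to convince yourself of what the forward algorithm computes is to compare it with brute force on a tiny example. The sketch below uses plain Python and made-up scores (the tag indices, numbers and function names are all assumptions for illustration). It checks that the forward recursion gives the same value as taking log-sum-exp over the scores of every possible tag path.

```python
import itertools
import math

NEG = -10000.0
START, STOP = 2, 3  # hypothetical indices; tags 0 and 1 are ordinary labels

# emissions[t][j]: score of tag j at position t (made-up numbers)
emissions = [
    [2.0, 1.0, NEG, NEG],
    [1.5, 1.0, NEG, NEG],
    [0.0, 2.0, NEG, NEG],
]
# transitions[j][i]: score of moving from tag i to tag j
transitions = [
    [-1.0, 0.5, 0.0, NEG],
    [0.5, 0.25, 0.0, NEG],
    [NEG, NEG, NEG, NEG],
    [0.0, 0.0, NEG, NEG],
]


def log_sum_exp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def forward_log_partition(emissions, transitions):
    n = len(transitions)
    alpha = [NEG] * n
    alpha[START] = 0.0
    for emit in emissions:
        # alpha[j] becomes the log of the summed scores of all partial paths ending in tag j
        alpha = [
            log_sum_exp([alpha[i] + transitions[j][i] + emit[j] for i in range(n)])
            for j in range(n)
        ]
    return log_sum_exp([alpha[i] + transitions[STOP][i] for i in range(n)])


def path_score(emissions, transitions, path):
    # Score of one complete tag path: transitions plus emissions, then the move to STOP
    score, prev = 0.0, START
    for emit, tag in zip(emissions, path):
        score += transitions[tag][prev] + emit[tag]
        prev = tag
    return score + transitions[STOP][prev]


def brute_force_log_partition(emissions, transitions):
    n = len(transitions)
    all_paths = itertools.product(range(n), repeat=len(emissions))
    return log_sum_exp([path_score(emissions, transitions, p) for p in all_paths])


log_z = forward_log_partition(emissions, transitions)
log_z_brute = brute_force_log_partition(emissions, transitions)
print(log_z, log_z_brute)  # the two values agree up to rounding
```

The brute-force version enumerates 4^3 = 64 paths here, while the forward recursion needs only one pass over the sentence, which is the same saving Viterbi gets by replacing the sum with a max.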

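Now only the loss function is left. The author defines it as the negative log-likelihood of the annotated tags: the log of the total score of all paths (computed by `_forward_alg`) minus the score of the annotated tag path (computed by `_score_sentence`).

The code is as follows:

    def neg_log_likelihood(self, sentence, tags):
        feats = self._get_lstm_features(sentence)
        # Log of the summed exp-scores of all possible tag paths
        forward_score = self._forward_alg(feats)
        # Score of the annotated (gold) tag path
        gold_score = self._score_sentence(feats, tags)
        return forward_score - gold_score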
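Written out as formulas, the loss looks like this. The symbols are my own notation and not from the code: E is the emission matrix, T is the transition matrix indexed as T[to, from], and s(x, y) is the score of one tag path. `_score_sentence` is not shown in this post, so the first line is an assumption about what it returns, based on its name and how it is used.

```latex
\begin{align}
s(x, y) &= \sum_{i=1}^{n} \left( T_{y_i,\, y_{i-1}} + E_{i,\, y_i} \right) + T_{\text{stop},\, y_n},
  \qquad y_0 = \text{start} \\
p(y \mid x) &= \frac{\exp s(x, y)}{\sum_{y'} \exp s(x, y')} \\
-\log p(y \mid x) &= \underbrace{\log \sum_{y'} \exp s(x, y')}_{\texttt{forward\_score}}
  \;-\; \underbrace{s(x, y)}_{\texttt{gold\_score}}
\end{align}
```

Since the gold path is one of the paths in the sum, the first term is never smaller than the second, so the loss is non-negative and shrinks as the gold path takes up more of the total score.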

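A closing note: I am a deep learning beginner. I read many introductions to CRFs and found them all heavy on formulas and hard going for a newcomer, so after putting a lot of effort into reaching what I believe is some understanding, I wanted to write an article to save time for those who come after me. Since everything here is my own understanding, there are bound to be mistakes, and corrections from passing experts are welcome!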
