Trying Out Llama 2 with MindNLP

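MindNLP is adding support for the Hugging Face ecosystem and covers a growing number of large models. Calling a large model works much as it does in transformers: you only need to swap a few components for their MindNLP and MindSpore counterparts. This post walks through trying llama2-7b-chat-hf with MindNLP.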

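llama2-7b needs roughly 13 GB of GPU memory, although it can also run with only 12 GB, so make sure your hardware is up to it. The GPU build of MindSpore does not work on Windows. If you can't meet these conditions, a later section of this post covers how to use the OpenI community instead; using OpenI's compute resources is at once simple and fiddly.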
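As a rough sanity check on the 13 GB figure, the memory needed just to hold the weights is about the parameter count times the bytes per parameter. The sketch below assumes the nominal 7 billion parameters and 2 bytes per parameter (float16); both numbers are assumptions of this example rather than measurements, and activations and the generation cache come on top.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / 1024**3


# Assumption: the nominal "7b" size, not an exact parameter count.
NOMINAL_PARAMS = 7e9

for dtype_name, nbytes in [("float32", 4), ("float16", 2)]:
    gib = weight_memory_gib(NOMINAL_PARAMS, nbytes)
    print(f"{dtype_name}: {gib:.1f} GiB")
```

In float16 this comes out at about 13 GiB, which lines up with the figure quoted above.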

1. Downloading the model weights

Because hf is often unreachable, I recommend downloading the model weights directly from a Hugging Face mirror.

Install the dependency and set the environment variable

pip install -U huggingface_hub

export HF_ENDPOINT=https://hf-mirror.com

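Downloading llama2 requires applying for Meta's license. Once your application is approved, you need to supply your Hugging Face token when downloading. (token link)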

huggingface-cli download --resume-download meta-llama/Llama-2-7b-chat-hf --local-dir llama2

This command downloads the llama2 7b chat hf weights into the llama2 folder.
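For reference, the same download can be sketched from Python with huggingface_hub, passing the token explicitly. This is only a sketch under assumptions: the function name download_llama2 is made up for this example, and nothing here was run against the mirror. One detail matters: HF_ENDPOINT has to be set before huggingface_hub is imported, which is why the import sits inside the function.

```python
import os

# Point huggingface_hub at the mirror. This must happen before the
# library is imported, because the endpoint is read at import time.
os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"


def download_llama2(token: str, local_dir: str = "llama2") -> str:
    """Download the gated repo into local_dir and return the local path.

    download_llama2 is a hypothetical helper written for this post.
    """
    # Imported here so that HF_ENDPOINT above is already in place.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id="meta-llama/Llama-2-7b-chat-hf",
        local_dir=local_dir,
        token=token,  # token of an account that has been granted access
    )


# Usage (needs network access and an approved account):
# download_llama2(token="<your Hugging Face token>")
```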

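If you're not sure what Llama-2-7b-chat-hf actually refers to: llama is the animal, 2 means second generation, 7b is the model size, chat means it was fine-tuned for dialogue, and hf means the weights were converted to a format that Hugging Face can load.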

2. Environment setup

Note that the latest version of mindnlp (0.3.1) requires mindspore>=2.1.0 and python>=3.8, <=3.9, so I recommend creating a virtual environment with python=3.9.
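Before installing anything, it can help to confirm that the interpreter in your virtual environment falls inside that Python range. The check below is a minimal sketch; python_version_supported is a name made up for this example, and it only looks at the Python version, not at mindspore.

```python
import sys


def python_version_supported(version=None) -> bool:
    """Return True if (major, minor) satisfies python>=3.8, <=3.9.

    version is an optional tuple such as (3, 9); by default the
    running interpreter's version is used.
    """
    major_minor = tuple((version or sys.version_info)[:2])
    return (3, 8) <= major_minor <= (3, 9)


if not python_version_supported():
    print("This Python version is outside the range mindnlp 0.3.1 expects.")
```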

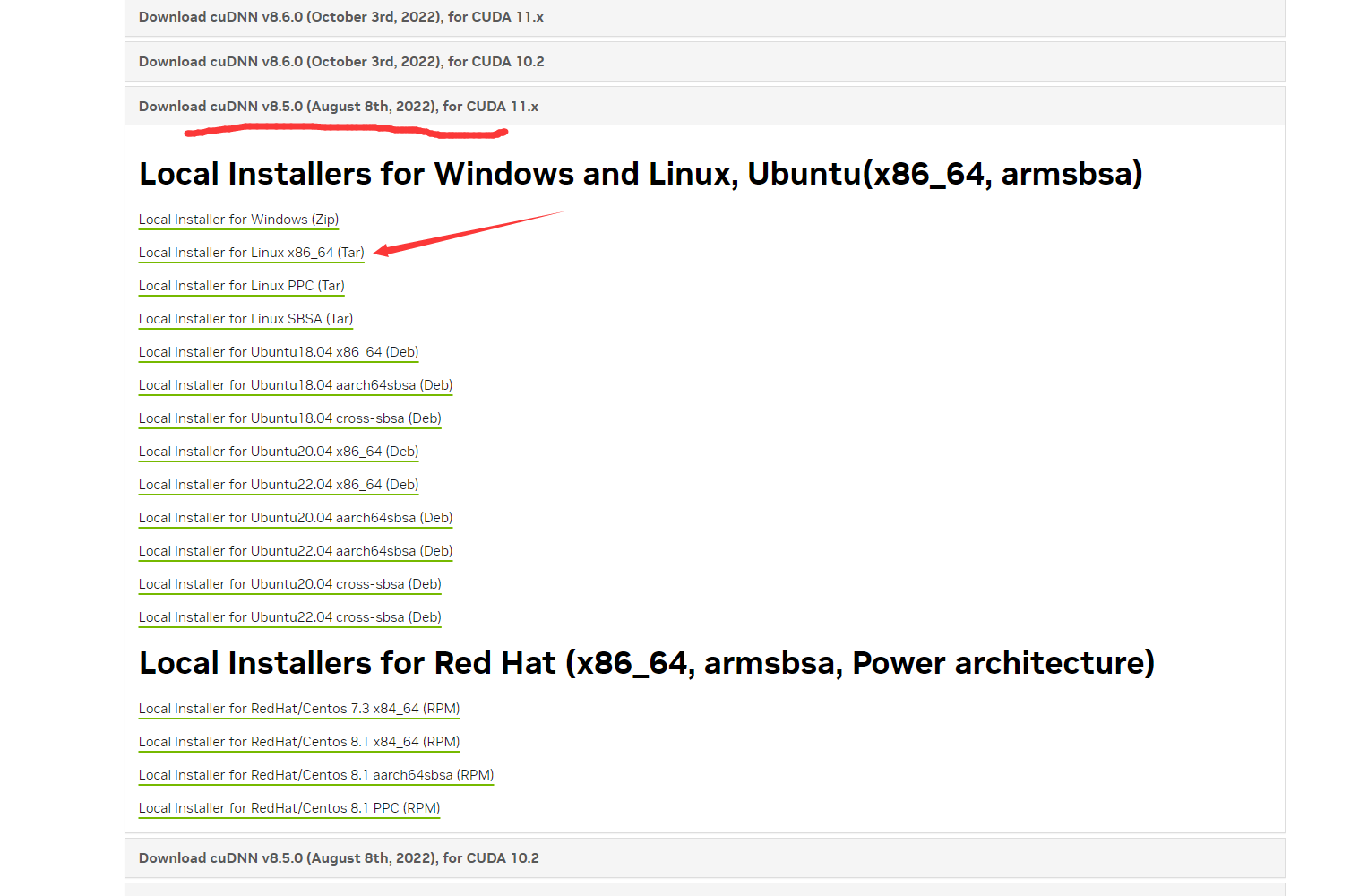

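Install mindspore by following the official MindSpore website. Assuming you want to run on an NVIDIA card, this can be quite a headache if you have never installed CUDA and cuDNN before. If you follow the official guide, I recommend picking this package here when installing cuDNN. The download is a tar.xz file; extract it as the official steps describe, then copy the libraries and header files into place and you're done.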

After installing mindspore, remember to check that the installation succeeded

python -c "import mindspore;mindspore.set_context(device_target='GPU');mindspore.run_check()"

3. Using llama2

First, load the mindnlp library

from mindnlp.transformers import AutoTokenizer, AutoModelForCausalLM

model_path = '/root/code/llama2'

tokenizer = AutoTokenizer.from_pretrained(model_path)

model = AutoModelForCausalLM.from_pretrained(model_path)

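Next, think of something to say. Note that llama2's chat format is rather arcane: to get the expected features and performance from the chat version, you need to follow a specific format, including the INST and <<SYS>> tags, the BOS and EOS tokens, and the whitespace and line breaks in between. Here is an example conversation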

input = '''<s>[INST] <<SYS>>

You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.

If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.

<</SYS>>

There's a llama in my garden 😱 What should I do? [/INST]'''
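If you want to vary the system prompt or the question, the single-turn format above can be assembled with a small helper. This is a sketch, not part of mindnlp: build_llama2_prompt and its add_bos parameter are names invented for this example. It writes the `<s>` marker into the text the same way the example above does; if your tokenizer already prepends BOS on its own, you may want to pass add_bos=False.

```python
def build_llama2_prompt(user_message: str,
                        system_prompt: str = "",
                        add_bos: bool = True) -> str:
    """Assemble a single-turn Llama 2 chat prompt.

    Layout: <s>[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]
    """
    if system_prompt:
        # The system prompt is wrapped in <<SYS>> tags inside the first turn.
        user_message = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message}"
    bos = "<s>" if add_bos else ""
    return f"{bos}[INST] {user_message.strip()} [/INST]"


prompt = build_llama2_prompt(
    "There's a llama in my garden 😱 What should I do?",
    system_prompt="You are a helpful, respectful and honest assistant.",
)
print(prompt)
```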

Then we call the generate method to chat

import mindspore as ms

gen_config = model.generation_config

gen_config.max_new_tokens = 256 #change this to make it longer or faster

toks = tokenizer(input).input_ids

input_ids = ms.tensor(toks)

input_ids = input_ids.unsqueeze(0)

attn_masks = ms.ops.ones_like(input_ids)

output_ids = model.generate(input_ids,

attention_mask=attn_masks,

generation_config=gen_config,

pad_token_id=tokenizer.pad_token_id)[0]

output = tokenizer.decode(output_ids, skip_special_tokens=True).strip()

output

If everything goes well, llama2 will tell you what to do about the llama

Oh my goodness, a llama in your garden? 😂 That's quite an unexpected surprise! 😅\n\nFirst of all, please make sure that the llama is not causing any harm or damage to your garden or property. If it is, please contact a local animal control service or a veterinarian for assistance.\n\nIf the llama is just roaming around your garden peacefully, you could try approaching it slowly and calmly, speaking in a gentle voice. Sometimes, animals can become frightened or defensive if they feel threatened or cornered, so it's important to approach them with caution.\n\nIf you are comfortable doing so, you could also try providing the llama with some food, such as grass or hay, to help calm it down and make it feel more at ease in its new surroundings. Just be sure to check with a local expert or veterinarian first to make sure that the food you provide is safe and appropriate for the llama.\n\nRemember, it's important to treat all animals with kindness and respect, and to prioritize their safety and well-being. 🐮🐴

And that's how to get llama2 running with mindnlp. mindnlp supports many other large models too, so go and explore.

Using the OpenI community

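What, you think all this environment setup is far too much trouble? The OpenI community provides compute resources (1.1 hours of A100 or 5 hours of Ascend 910A per day), images, debugging tasks, and models and datasets uploaded by other users. It feels like a souped-up GitHub! Give it a try, and remember to list me as your inviter: (registration link).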

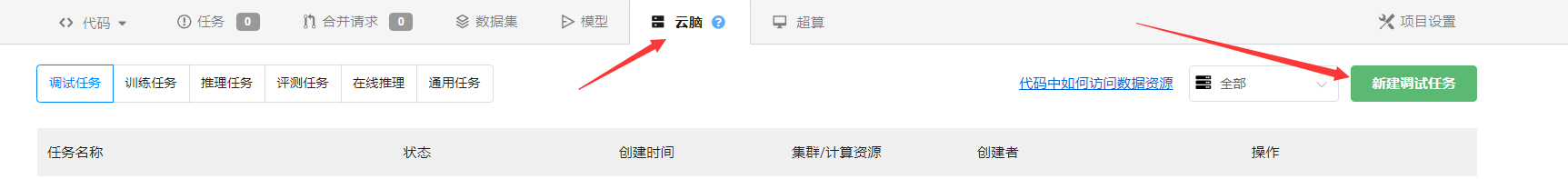

After creating an account, first create a new project. Once it exists, click Cloud Brain (云脑) and create a new debugging task.

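Configure the debugging task as follows. For one particular reason (there is no python3.9 image), I don't recommend running on GPU on OpenI, even though GPU tasks let you save images, which is a very handy feature.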

For the image, choose mindspore2.2+cann7. In the model selection, you can find a llama2chat7bhf that someone else uploaded among the public models; just use that.

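You're more than halfway there. Next, enter the debugging task, which opens a JupyterLab. Your repository's code is packaged as a zip, located in /tmp/code by default. If you can't find it, you can also locate it with OpenI's official c2net: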

from c2net.context import prepare

c2net_context = prepare()

codePath = c2net_context.code_path

git_path = codePath + '/your_repo_name' + '/file_to_read_from_the_repo'

datasetPath = c2net_context.dataset_path

modelPath = c2net_context.pretrain_model_path

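Note that NPU tasks do not support saving images, and the image does not include mindnlp either, so you still have to install a few packages each time. Install mindnlp directly with pip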

pip install mindnlp

You'll find that an audio library is missing. Just install it (note that the system here is openEuler)

yum install libsndfile

The environment is now ready. Next, paste in the code

from c2net.context import prepare

# Initialize: import the dataset and pretrained model into the container

c2net_context = prepare()

# Get the path of the pretrained model

llama2_7b_chat_hf_path = c2net_context.pretrain_model_path+"/"+"llama2-7b-chat-hf"

# Output must be saved in this directory

you_should_save_here = c2net_context.output_path

from mindnlp.transformers import AutoTokenizer, AutoModelForCausalLM

model_path = llama2_7b_chat_hf_path

tokenizer = AutoTokenizer.from_pretrained(model_path)

model = AutoModelForCausalLM.from_pretrained(model_path)

input = '''<s>[INST] <<SYS>>

You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.

If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.

<</SYS>>

There's a llama in my garden 😱 What should I do? [/INST]'''

import mindspore as ms

gen_config = model.generation_config

gen_config.max_new_tokens = 256

toks = tokenizer(input).input_ids

input_ids = ms.tensor(toks)

input_ids = input_ids.unsqueeze(0)

attn_masks = ms.ops.ones_like(input_ids)

output_ids = model.generate(input_ids,

generation_config=gen_config)[0]

output = tokenizer.decode(output_ids, skip_special_tokens=True).strip()

output

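Now you can go and look after the llama. There is one small problem: if you pass the attention mask to generate in the code above, it raises the error below. If it still can't be resolved, the next step is to file an issue in the mindnlp repository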

File /home/ma-user/anaconda3/envs/MindSpore/lib/python3.9/site-packages/mindnlp/injection.py:101, in <listcomp>(.0)

100 def wrapper(*args, **kwargs):

--> 101 args = [arg.astype(mstype.int32) if isinstance(arg, Tensor) and arg.dtype == mstype.bool_ \

102 else arg for arg in args]

103 if isinstance(args[0], (list, tuple)):

104 # for concat

105 args[0] = [arg.astype(mstype.int32) if isinstance(arg, Tensor) and arg.dtype == mstype.bool_ \

106 else arg for arg in args[0]]

File /home/ma-user/anaconda3/envs/MindSpore/lib/python3.9/site-packages/mindspore/common/_stub_tensor.py:99, in StubTensor.dtype(self)

97 if self.stub:

98 if not hasattr(self, "stub_dtype"):

---> 99 self.stub_dtype = self.stub.get_dtype()

100 return self.stub_dtype

101 return self.tensor.dtype

TypeError: For primitive[Concat], the input type must be same.

name:[element0]:Tensor[Int64].

name:[element1]:Tensor[Float32].

----------------------------------------------------

- C++ Call Stack: (For framework developers)

----------------------------------------------------

mindspore/core/utils/check_convert_utils.cc:1114 CheckTypeSame

#MindSpore May Day Essay Contest
