What's covered

- Demuxing

- Software and hardware decoding

- Pixel format conversion

- Resampling

- pts/dts

- Synchronization strategy

FFmpeg demuxing

Overview

av_register_all()

avformat_network_init()

avformat_open_input(...)

avformat_find_stream_info(...)

av_find_best_stream(...)

AVFormatContext AVStream AVPacket

av_read_frame(...)

avformat_open_input

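Make sure av_register_all() and avformat_network_init() have been called first (av_register_all() has been deprecated since FFmpeg 4.0 and was removed in 5.0, where it is no longer needed).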

AVFormatContext **ps

const char *url

AVInputFormat *fmt

AVDictionary **options

AVFormatContext

AVIOContext *pb; char filename[1024];

unsigned int nb_streams;

AVStream **streams;

int64_t duration; // in AV_TIME_BASE units (microseconds)

int64_t bit_rate;

void avformat_close_input(AVFormatContext **s);

avformat_find_stream_info

int avformat_find_stream_info(

AVFormatContext *ic,

AVDictionary **options

);

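Needed for formats whose header does not carry complete stream information, e.g. FLV (the duration cannot be obtained otherwise), raw H.264, MPEG-2.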

AVStream

AVCodecContext *codec; // deprecated, removed in FFmpeg 5.0; use codecpar

AVRational time_base;

int64_t duration;

int64_t nb_frames;

AVRational avg_frame_rate;

AVCodecParameters *codecpar; // audio/video codec parameters

AVCodecParameters

enum AVMediaType codec_type;

enum AVCodecID codec_id;

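uint32_t codec_tag;

int format; // AVPixelFormat (video) or AVSampleFormat (audio)

int width; int height;

uint64_t channel_layout; int channels; int sample_rate; int frame_size; // channel_layout/channels: deprecated in FFmpeg 5.1 in favor of AVChannelLayout ch_layout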


static double r2d(AVRational r)

{

return r.num==0||r.den==0?0:(double)r.num/(double)r.den;

}
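To turn a timestamp into seconds, multiply it by the stream's time_base, which is what r2d() is for. The sketch below uses a local Rational struct with the same two fields as AVRational (an assumption made only so it compiles without the FFmpeg headers); pts_to_seconds and pts_to_ms are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in with the same fields as FFmpeg's AVRational. */
typedef struct { int num; int den; } Rational;

/* Same as r2d() above: the rational as a double, 0 if it is invalid. */
static double r2d(Rational r)
{
    return r.num == 0 || r.den == 0 ? 0 : (double)r.num / (double)r.den;
}

/* Timestamp in time_base units -> seconds (floating point). */
static double pts_to_seconds(int64_t pts, Rational time_base)
{
    return pts * r2d(time_base);
}

/* Timestamp in time_base units -> whole milliseconds with integer math
 * (truncates toward zero; can overflow for very large pts). */
static int64_t pts_to_ms(int64_t pts, Rational time_base)
{
    if (time_base.den == 0)
        return 0;
    return pts * 1000 * time_base.num / time_base.den;
}
```

With the real FFmpeg types, av_rescale_q(pts, st->time_base, (AVRational){1, 1000}) performs the same millisecond conversion without the overflow risk.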

av_find_best_stream

int av_find_best_stream(

AVFormatContext * ic,

enum AVMediaType type,

int wanted_stream_nb,

int related_stream,

AVCodec** decoder_ret,

int flags

)


av_read_frame

- AVFormatContext *s

- AVPacket *pkt

- return 0 if OK, < 0 on error or end of file

AVPacket

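AVBufferRef *buf; // reference-counted buffer holding the data

int64_t pts; // presentation timestamp; seconds = pts * (num/den) of the stream time_base

int64_t dts; // decoding timestamp

uint8_t *data; int size;

AVPacket *av_packet_alloc(void); // allocate a packet and set default field values

AVPacket *av_packet_clone(const AVPacket *src); // allocate a new packet referencing the same data (refcount + 1)

int av_packet_ref(AVPacket *dst, const AVPacket *src); void av_packet_unref(AVPacket *pkt); // add / drop a reference

void av_packet_free(AVPacket **pkt); // drop the reference and free the packet itself

void av_init_packet(AVPacket *pkt); // set default field values (deprecated in recent FFmpeg; prefer av_packet_alloc)

int av_packet_from_data(AVPacket *pkt, uint8_t *data, int size); // wrap data allocated with av_malloc() into the packet

int av_copy_packet(AVPacket *dst, const AVPacket *src); // attribute_deprecated, use av_packet_ref()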

av_seek_frame

int av_seek_frame(AVFormatContext *s, int stream_index, // -1: default stream, timestamp then in AV_TIME_BASE units

int64_t timestamp, // in AVStream.time_base units

int flags);

av_seek_frame flags

#define AVSEEK_FLAG_BACKWARD 1 ///< seek backward

#define AVSEEK_FLAG_BYTE 2 ///< seeking based on position in bytes

#define AVSEEK_FLAG_ANY 4 ///< seek to any frame, even non-keyframes

#define AVSEEK_FLAG_FRAME 8 ///< seeking based on frame number

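Software and hardware decoding

avcodec_find_decoder

avcodec_register_all(); // only needed before FFmpeg 4.0; removed in 5.0

AVCodec *avcodec_find_decoder(enum AVCodecID id);

AVCodec *avcodec_find_decoder_by_name(const char *name);

avcodec_find_decoder_by_name("h264_mediacodec"); // Android hardware (MediaCodec) H.264 decoder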

AVCodecContext

AVCodecContext *avcodec_alloc_context3(const AVCodec *codec);

void avcodec_free_context(AVCodecContext **avctx);

int avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options);

Option names and default values: libavcodec/options_table.h

int thread_count; // number of decoding threads (0 = automatic)

time_base

avcodec_parameters_to_context

avcodec_parameters_to_context(codec, p); // copy the stream's AVCodecParameters p into the AVCodecContext codec

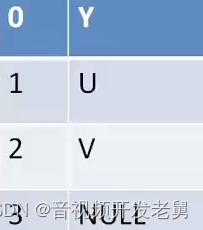

AVFrame

AVFrame *frame = av_frame_alloc();

void av_frame_free(AVFrame **frame);

int av_frame_ref(AVFrame *dst, const AVFrame *src);

AVFrame *av_frame_clone(const AVFrame *src);

void av_frame_unref(AVFrame *frame);

uint8_t *data[AV_NUM_DATA_POINTERS];

int linesize[AV_NUM_DATA_POINTERS];

int width, height; int nb_samples;

int64_t pts; int64_t pkt_dts;

int sample_rate; uint64_t channel_layout; int channels;

int format; //AVPixelFormat AVSampleFormat

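linesize: the size in bytes of one row of each plane in data[]; it can be larger than the visible width because of alignment padding.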

avcodec_send_packet

int avcodec_send_packet(AVCodecContext *avctx, const AVPacket *avpkt);

avcodec_receive_frame

int avcodec_receive_frame(AVCodecContext *avctx, AVFrame *frame);

Resampling and pixel format conversion

Video pixel format and size conversion

sws_getContext

struct SwsContext *sws_getCachedContext( struct SwsContext *context,

int srcW, int srcH, enum AVPixelFormat srcFormat,

int dstW, int dstH, enum AVPixelFormat dstFormat,

int flags, SwsFilter *srcFilter,

SwsFilter *dstFilter, const double *param);

int sws_scale(struct SwsContext *c, const uint8_t *const srcSlice[],

const int srcStride[], int srcSliceY, int srcSliceH,

uint8_t *const dst[], const int dstStride[]);

void sws_freeContext(struct SwsContext *swsContext);

#define SWS_FAST_BILINEAR 1

#define SWS_BILINEAR 2

#define SWS_BICUBIC 4

#define SWS_X 8

#define SWS_POINT 0x10

#define SWS_AREA 0x20

#define SWS_BICUBLIN 0x40

SwrContext

SwrContext *swr_alloc(void);

SwrContext *swr_alloc_set_opts(

struct SwrContext *s, int64_t out_ch_layout,

enum AVSampleFormat out_sample_fmt, int out_sample_rate,

int64_t in_ch_layout, enum AVSampleFormat in_sample_fmt, int in_sample_rate,

int log_offset, void *log_ctx);

(Deprecated in FFmpeg 5.1 in favor of swr_alloc_set_opts2(), which takes AVChannelLayout pointers.)

int swr_init(struct SwrContext *s);

void swr_free(struct SwrContext **s);

swr_convert

int swr_convert(struct SwrContext *s, uint8_t **out, int out_count, const uint8_t **in, int in_count); // returns the number of samples output per channel, < 0 on error
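When sizing the out buffer for swr_convert(), a common approach is to round up (buffered delay + in_count) times out_rate / in_rate. The sketch below is plain C arithmetic mirroring av_rescale_rnd(swr_get_delay(s, in_rate) + in_count, out_rate, in_rate, AV_ROUND_UP); max_out_samples is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/* Upper bound on samples per channel that swr_convert() may write for
 * `in_count` new input samples when `delay` samples (at in_rate) are still
 * buffered in the resampler: ceil((delay + in_count) * out_rate / in_rate). */
static int64_t max_out_samples(int64_t delay, int64_t in_count, int in_rate, int out_rate)
{
    assert(in_rate > 0 && out_rate > 0);
    int64_t num = (delay + in_count) * out_rate;
    return (num + in_rate - 1) / in_rate;   /* integer ceiling division */
}
```

For the byte size of that many samples in a given channel count and sample format, av_samples_get_buffer_size() does the calculation.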

Drawing to a SurfaceView with the NDK (1/3)

CMake: link against the android library

#include <android/native_window.h>

#include <android/native_window_jni.h>

Drawing to a SurfaceView with the NDK (2/3)

ANativeWindow *nativeWindow = ANativeWindow_fromSurface(env, surface);

// Set the size of the native window's buffers; the content is scaled to the window automatically

ANativeWindow_setBuffersGeometry(nativeWindow, width, height, WINDOW_FORMAT_RGBA_8888);

Drawing to a SurfaceView with the NDK (3/3)

ANativeWindow_Buffer windowBuffer;

ANativeWindow_lock(nativeWindow, &windowBuffer, 0);

uint8_t *dst = (uint8_t *)windowBuffer.bits;

memcpy(dst, rgb, width * height * 4); // only correct when windowBuffer.stride == width

ANativeWindow_unlockAndPost(nativeWindow);
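The single memcpy of width * height * 4 bytes is only correct when the window's row stride equals the image width. ANativeWindow_Buffer.stride is measured in pixels and can be larger, so a row-by-row copy is safer. A sketch in plain C (copy_rgba_to_window is a hypothetical name), meant to be called between ANativeWindow_lock() and ANativeWindow_unlockAndPost():

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copies a tightly packed RGBA image into a window buffer whose rows are
 * `stride_px` pixels apart (stride_px >= width). Padding pixels at the end
 * of each destination row are left untouched. */
static void copy_rgba_to_window(uint8_t *dst, int stride_px,
                                const uint8_t *rgba, int width, int height)
{
    assert(stride_px >= width);
    for (int y = 0; y < height; y++)
        memcpy(dst + (size_t)y * stride_px * 4,      /* destination row start */
               rgba + (size_t)y * width * 4,         /* source row start */
               (size_t)width * 4);                   /* visible bytes per row */
}
```

Usage: copy_rgba_to_window((uint8_t *)windowBuffer.bits, windowBuffer.stride, rgb, width, height);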

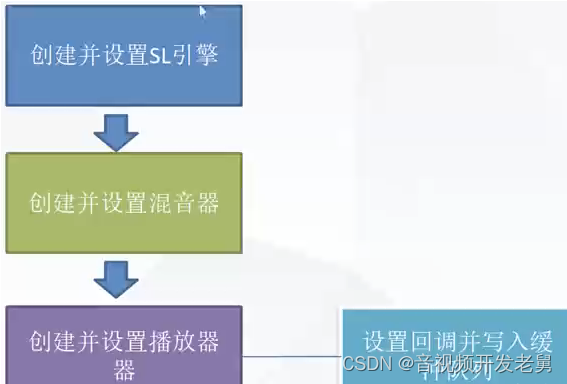

OpenSL ES

Initializing the engine

// engine interfaces

SLObjectItf engineObject = NULL;

SLEngineItf engineEngine = NULL;

slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);

(*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);

(*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);

slCreateEngine

SL_API SLresult SLAPIENTRY slCreateEngine(

SLObjectItf *pEngine,

SLuint32 numOptions,

const SLEngineOption *pEngineOptions, // optional engine options (NULL for defaults)

SLuint32 numInterfaces,

const SLInterfaceID *pInterfaceIds, // interfaces to request

const SLboolean *pInterfaceRequired // for each interface: whether it is required

)

SLObjectItf

SLresult (*Realize)(

SLObjectItf self,

SLboolean async

)

Puts the object into the realized state (SL_BOOLEAN_FALSE makes the call blocking).

If SL_BOOLEAN_FALSE, the method will block until termination. Otherwise, the method will return SL_RESULT_SUCCESS, and will be executed asynchronously.

GetInterface

SLresult(* GetInterface)(

SLObjectItf self,

const SLInterfaceID iid,

void * pInterface

)

Creating the output mix

SLEngineItf engineEngine = NULL;

SLObjectItf outputMixObject = NULL;

(*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 0, 0, 0);

(*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);

SLDataLocator_OutputMix outputMix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};

SLDataSink audioSnk = {&outputMix, NULL};

Configuring the PCM format

SLDataLocator_AndroidSimpleBufferQueue android_queue = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2}; // 2 buffers

SLDataFormat_PCM pcm = {

SL_DATAFORMAT_PCM, // play PCM data

2, // 2 channels (stereo)

SL_SAMPLINGRATE_44_1, // 44100 Hz (the field is in milliHertz)

SL_PCMSAMPLEFORMAT_FIXED_16, // 16 bits per sample

SL_PCMSAMPLEFORMAT_FIXED_16, // container size, same as the bits per sample

SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT, // stereo (front left and front right)

SL_BYTEORDER_LITTLEENDIAN // byte order: little-endian

};

SLDataSource slDataSource = {&android_queue, &pcm};
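When deciding how much PCM to Enqueue() per callback, the byte count follows from the format above: samples per channel times channels times bytes per sample. Note that samplesPerSec in SLDataFormat_PCM is given in milliHertz (SL_SAMPLINGRATE_44_1 is 44100000), so the sketch takes the plain Hz value instead. pcm_bytes_for_ms is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes of interleaved PCM for `ms` milliseconds. sample_rate is in Hz,
 * not the milliHertz used by SLDataFormat_PCM.samplesPerSec. */
static uint32_t pcm_bytes_for_ms(uint32_t sample_rate, uint32_t channels,
                                 uint32_t bits_per_sample, uint32_t ms)
{
    assert(bits_per_sample % 8 == 0);
    uint32_t frames = sample_rate * ms / 1000;   /* samples per channel */
    return frames * channels * (bits_per_sample / 8);
}
```

For the stereo 16-bit 44100 Hz format configured here, 10 ms of audio is pcm_bytes_for_ms(44100, 2, 16, 10) bytes.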


Creating the player

const SLInterfaceID ids[1] = {SL_IID_BUFFERQUEUE};

const SLboolean req[1] = {SL_BOOLEAN_TRUE};

(*engineEngine)->CreateAudioPlayer(engineEngine, &pcmPlayerObject, &slDataSource, &audioSnk, 1, ids, req);

// Realize (initialize) the player

(*pcmPlayerObject)->Realize(pcmPlayerObject, SL_BOOLEAN_FALSE);

// Get the Play interface

(*pcmPlayerObject)->GetInterface(pcmPlayerObject, SL_IID_PLAY, &pcmPlayerPlay);

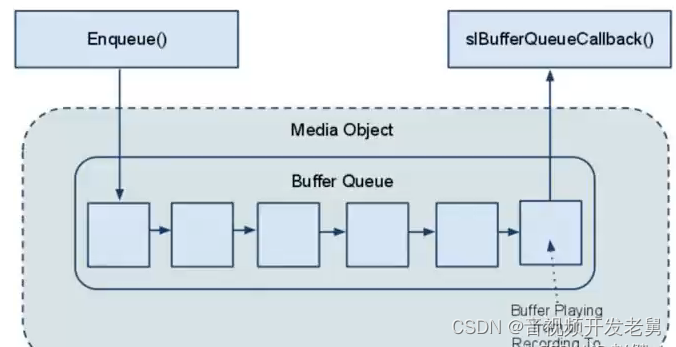

Playback and the buffer queue

// Get the buffer queue interface, then register the callback

(*pcmPlayerObject)->GetInterface(pcmPlayerObject, SL_IID_BUFFERQUEUE, &pcmBufferQueue);

(*pcmBufferQueue)->RegisterCallback(pcmBufferQueue, pcmBufferCallBack, NULL);

(*pcmPlayerPlay)->SetPlayState(pcmPlayerPlay, SL_PLAYSTATE_PLAYING);

(*pcmBufferQueue)->Enqueue(pcmBufferQueue, "", 1); // enqueue a 1-byte dummy buffer so the first callback fires

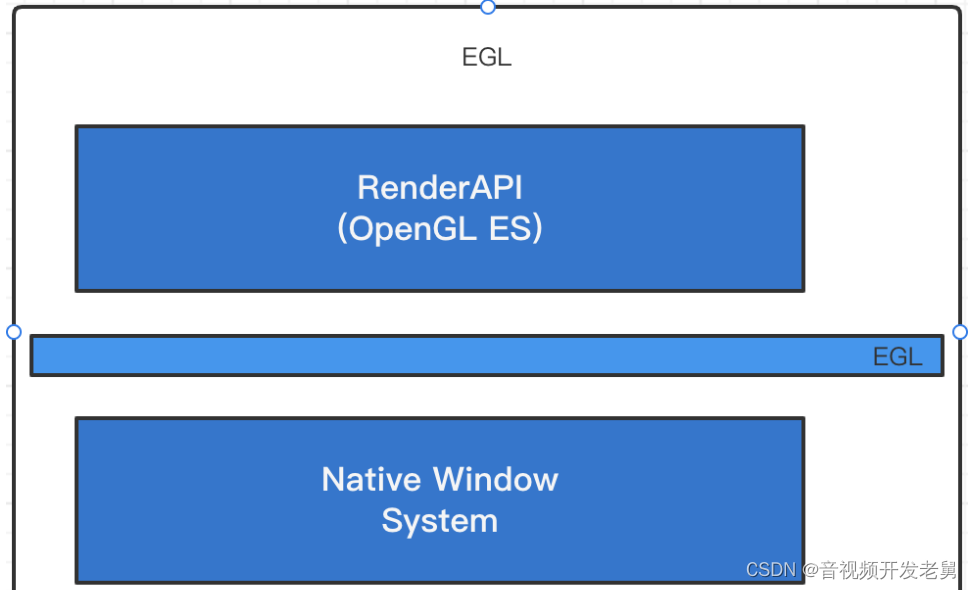

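Drawing YUV directly with OpenGL ES

EGL, OpenGL ES, shaders, GLSL

https://www.khronos.org/registry/EGL/sdk/docs/man/

EGL is the adaptation layer between OpenGL ES and the native window system.

EGL

Display: connect to the native window system

EGLDisplay eglGetDisplay

EGLBoolean eglInitialize

Surface: choose a config and create the surface (the rendering area in a window or on screen)

EGLBoolean eglChooseConfig

EGLSurface eglCreateWindowSurface

Context: create the rendering context

The rendering context holds the state OpenGL ES needs to run, such as the vertex and fragment shaders and the vertex data arrays.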

eglCreateContext

eglMakeCurrent

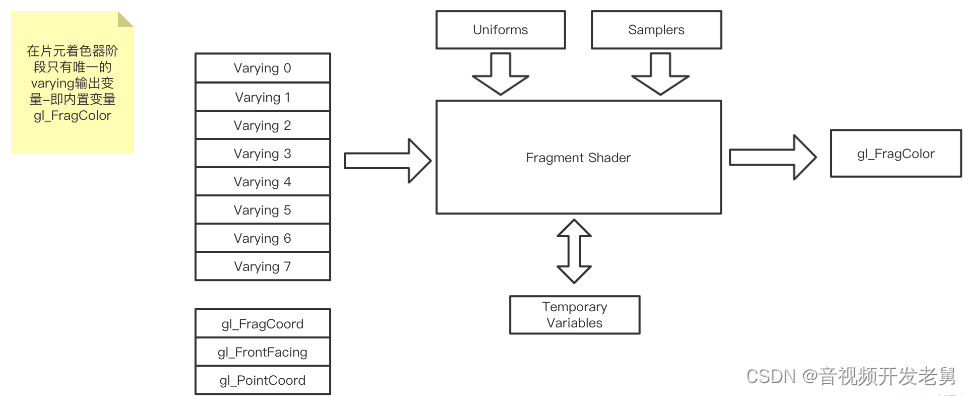

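The GLSL shading language

The vertex shader runs once per vertex and determines its position; the fragment shader runs once per fragment (roughly, once per pixel) and determines its color.

GLSL's basic syntax is largely the same as C.

It has built-in support for vector and matrix operations.

It provides a large set of built-in functions.

Input and output variables are declared with qualifiers.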

Demo: displaying YUV

ffmpeg -i 720.mp4 -pix_fmt yuv420p -s 424x240 out.yuv

Use shaders to display the prepared YUV data.

Wrap EGL and the shaders.

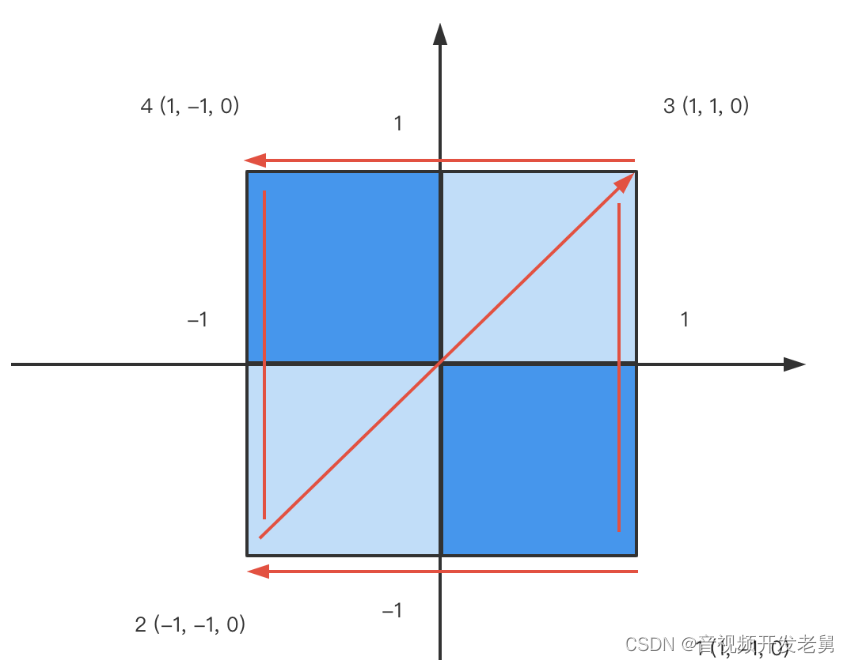

Vertex shader

Vertex data

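YUV to RGB

For 8-bit full-range BT.601 YCbCr (Cb and Cr centered on 128):

R = Y + 1.402 (Cr - 128)

G = Y - 0.34414 (Cb - 128) - 0.71414 (Cr - 128)

B = Y + 1.772 (Cb - 128)

The matrix listed here instead uses the BT.601 YUV coefficients and is applied in the shader to texture values normalized to [0, 1], with U and V shifted by 0.5. GLSL fills mat3(1.0, 1.0, 1.0, 0.0, -0.39465, 2.03211, 1.13983, -0.58060, 0.0) column by column, so it corresponds to:

$$
\begin{pmatrix} R \\ G \\ B \end{pmatrix} =
\begin{pmatrix} 1 & 0 & 1.13983 \\ 1 & -0.39465 & -0.58060 \\ 1 & 2.03211 & 0 \end{pmatrix}
\begin{pmatrix} Y \\ U \\ V \end{pmatrix}
$$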
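The 1.402 / 0.34414 / 0.71414 / 1.772 formulas can be checked for a single pixel on the CPU. A sketch in plain C with clamping to 0..255 (ycbcr_to_rgb and clamp_u8 are hypothetical names); the shader performs the equivalent work per fragment, but on normalized values.

```c
#include <assert.h>
#include <stdint.h>

/* Clamps to 0..255 and rounds to the nearest integer. */
static uint8_t clamp_u8(double x)
{
    if (x < 0.0)
        return 0;
    if (x > 255.0)
        return 255;
    return (uint8_t)(x + 0.5);
}

/* Full-range BT.601 YCbCr -> RGB for one 8-bit pixel. */
static void ycbcr_to_rgb(uint8_t y, uint8_t cb, uint8_t cr,
                         uint8_t *r, uint8_t *g, uint8_t *b)
{
    double d_cb = cb - 128.0, d_cr = cr - 128.0;   /* chroma centered on 0 */
    *r = clamp_u8(y + 1.402 * d_cr);
    *g = clamp_u8(y - 0.34414 * d_cb - 0.71414 * d_cr);
    *b = clamp_u8(y + 1.772 * d_cb);
}
```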

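glTexParameteri

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

GL_TEXTURE_MIN_FILTER: filter used when the texture is minified (drawn smaller than its size)

GL_TEXTURE_MAG_FILTER: filter used when the texture is magnified (drawn larger than its size)

GL_LINEAR: linear filtering, a weighted average of the 4 texels closest to the center of the pixel being rendered.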

pts/dts

Synchronization strategy

Hands-on project

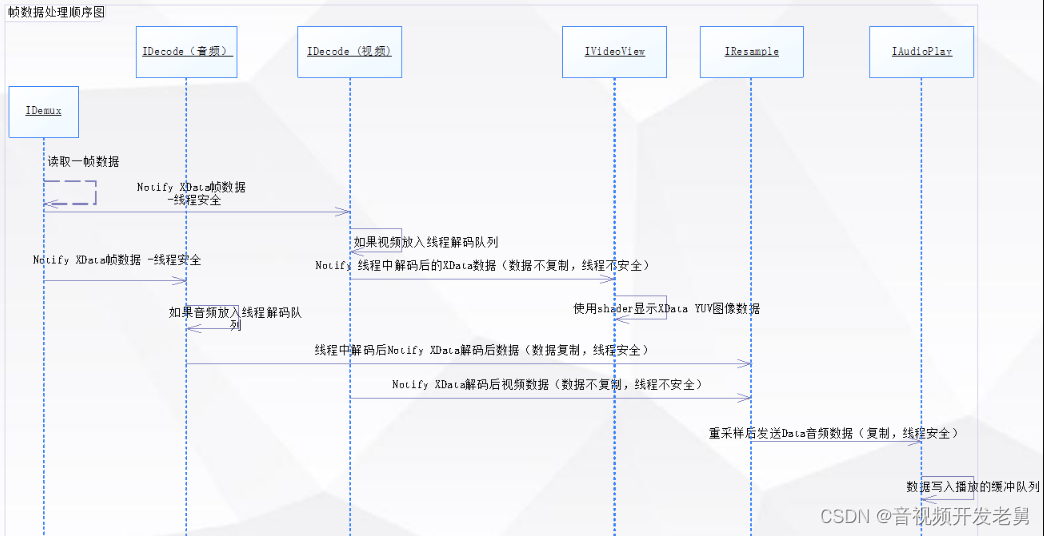

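Design patterns used in the project

Adapter: wraps FFmpeg, OpenGL and OpenSL

Builder: builds the player object

Proxy: manages player creation and thread safety

Facade: the player hides demuxing, decoding, resampling, display and audio playback behind one interface

Singleton: the single builder instance

Producer-consumer: the demux module produces packets, the decode module consumes and decodes them

Observer: communication between modules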
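The producer-consumer split between the demux and decode threads is usually built on a bounded blocking queue. A minimal pthread sketch that stores void * items so AVPacket pointers fit; PacketQueue, the pq_* functions and QUEUE_CAP are hypothetical names, and this is not necessarily how the original project implements it.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_CAP 8   /* hypothetical capacity: limits how far demuxing runs ahead */

/* Bounded blocking FIFO shared by the demux (producer) and decode (consumer)
 * threads. Items are void * so AVPacket pointers can be stored. */
typedef struct {
    void *items[QUEUE_CAP];
    int head, count;
    bool closed;
    pthread_mutex_t lock;
    pthread_cond_t not_empty, not_full;
} PacketQueue;

static void pq_init(PacketQueue *q)
{
    assert(q != NULL);
    q->head = q->count = 0;
    q->closed = false;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_empty, NULL);
    pthread_cond_init(&q->not_full, NULL);
}

/* Producer side: blocks while the queue is full. Returns false once closed. */
static bool pq_push(PacketQueue *q, void *item)
{
    pthread_mutex_lock(&q->lock);
    while (q->count == QUEUE_CAP && !q->closed)
        pthread_cond_wait(&q->not_full, &q->lock);
    bool ok = !q->closed;
    if (ok) {
        q->items[(q->head + q->count) % QUEUE_CAP] = item;
        q->count++;
        pthread_cond_signal(&q->not_empty);
    }
    pthread_mutex_unlock(&q->lock);
    return ok;
}

/* Consumer side: blocks while empty. After close, remaining items are still
 * returned; false means "closed and drained". */
static bool pq_pop(PacketQueue *q, void **item)
{
    pthread_mutex_lock(&q->lock);
    while (q->count == 0 && !q->closed)
        pthread_cond_wait(&q->not_empty, &q->lock);
    bool ok = q->count > 0;
    if (ok) {
        *item = q->items[q->head];
        q->head = (q->head + 1) % QUEUE_CAP;
        q->count--;
        pthread_cond_signal(&q->not_full);
    }
    pthread_mutex_unlock(&q->lock);
    return ok;
}

/* Wakes every waiting thread, e.g. at end of file or when playback stops. */
static void pq_close(PacketQueue *q)
{
    pthread_mutex_lock(&q->lock);
    q->closed = true;
    pthread_cond_broadcast(&q->not_empty);
    pthread_cond_broadcast(&q->not_full);
    pthread_mutex_unlock(&q->lock);
}

static void pq_destroy(PacketQueue *q)
{
    pthread_cond_destroy(&q->not_full);
    pthread_cond_destroy(&q->not_empty);
    pthread_mutex_destroy(&q->lock);
}
```

The bounded capacity is the design choice that matters here: when decoding falls behind, the demux thread blocks instead of reading the whole file into memory. Packets still queued after close must be popped and freed by the owner.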

Sequence diagram for playing a media file

Original article: FFmpeg SDK软硬解码基础 (FFmpeg SDK software and hardware decoding basics), 我爱音视频网
